OB Jun: good news! "Oceanbase version 2.2" is officially launched on the official website! (click to read the original text and download it directly) OceanBase 2.2 is a stable version that successfully supports tmall double 11 in 2019, and it is also the version used for TPC-C testing and ranked first in TPC-C performance. We will continue to launch the "OceanBase 2.2 handlebar series" for you in the next time, and take you to experience the powerful functions of OceanBase 2.2. Welcome to continue to pay attention!

Introduction

Oceanbase version 2.2 has been downloaded through the official website recently( https://oceanbase.alipay.com/download/resource ), 2.2 supports Oracle tenants. OceanBase won the top of TPC-C benchmark released by TPC audit on October 2, 2019, using Oracle tenants. More than 207 Alibaba cloud high configuration ECS servers were used in TPC-C test, because TPC-C standard is very meticulous and strict in application, database and other specifications. Generally speaking, it is difficult for ordinary enterprises or individuals to have such conditions to test.

Benchmark SQL is an open source TPC-C test program, which weakens the key standards of TPC-C (data distribution and application execution behavior), making it possible to run TPC-C with several servers. Of course, this result cannot be compared with the official TPC-C result. However, using BenchmarkSQL to compare the transaction processing capabilities of different databases has certain reference significance, especially compared with Sysbench.

OceanBase test tenant preparation

1.sys tenant parameter modification

BenchmarkSQL will load a large amount of data, and the memory consumption rate of OceanBase will be very fast in a short period of time. Therefore, some tuning needs to be done for memory freeze merge and current limiting parameters.

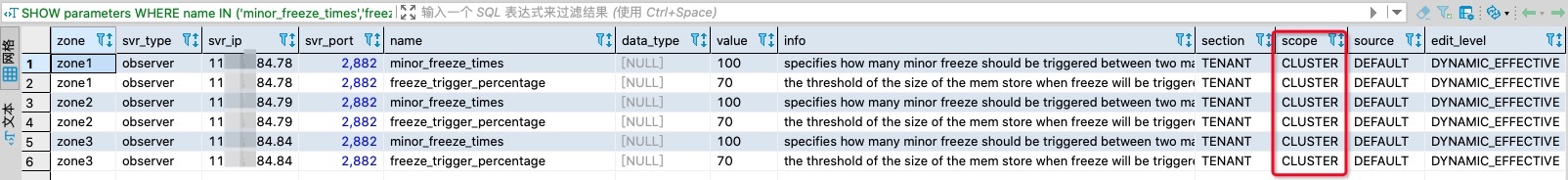

In sys tenant:

ALTER SYSTEM SET enable_merge_by_turn=FALSE; ALTER SYSTEM set minor_freeze_times=100; ALTER SYSTEM set freeze_trigger_percentage=70; ALTER SYSTEM set writing_throttling_trigger_percentage=70 tenant='obbmsql'; ALTER SYSTEM set writing_throttling_maximum_duration='10m' tenant='obbmsql'; show parameters where name in ('minor_freeze_times','freeze_trigger_percentage');

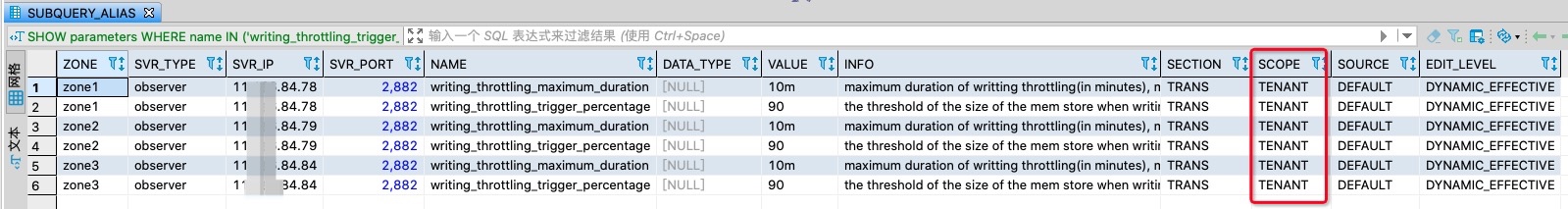

Note: the business tenant current limit parameter is modified in sys tenant, and the corresponding tenant name needs to be specified. Then check to confirm that it needs to be in the business tenant.

In business tenants:

SHOW parameters WHERE name IN ('writing_throttling_trigger_percentage','writing_throttling_maximum_duration');

2. Business tenant parameter modification

Compared with Oracle/MySQL, OceanBase has a default SQL timeout and transaction timeout mechanism. This may lead to SQL error in later view of modified data. So let's change these parameters first.

set global recyclebin=off; set global ob_query_timeout=1000000000; set global ob_trx_idle_timeout=1200000000; set global ob_trx_timeout=1000000000;

In addition, a separate schema (i.e. user) needs to be prepared for bmsql.

drop user tpcc cascade; create user tpcc identified by 123456; grant all privileges on tpcc.* to tpcc with grant option ; grant create, drop on *.* to tpcc;

The user name of the test tenant is TPCC @ obbmsql ා obdemo or obdemo:obbmsql:tpcc.

3. OBProxy configuration modification

OBProxy is the access agent of OceanBase, and some of its internal parameters may also affect performance. If the following compression parameters consume a certain amount of CPU, they can be turned off during the test.

$ obclient -h127.1 -uroot@sys#obdemo -P2883 -p123456 -c -A oceanbase alter proxyconfig set enable_compression_protocol=False; show proxyconfig like 'enable_compression_protocol';

After the parameter is modified, the obproxy process needs to be restarted

[admin@xxx /home/admin] $kill -9 `pidof obproxy` [admin@h07d17167.sqa.eu95 /home/admin] $cd /opt/taobao/install/obproxy [admin@xxx /opt/taobao/install/obproxy] $bin/obproxy bin/obproxy

BenchmarkSQL preparation

The official download address of BenchmarkSQL is: https://sourceforge.net/projects/benchmarksql/ After downloading, please refer to the instructions in HOW-TO-RUN.txt to compile and install BenchmarkSQL first. Then modify some scripts to add support for OceanBase as recommended below. You can also download my compiled and modified BenchmarkSQL directly. The address is: https://github.com/obpilot/benchmarksql-5.0 .

1. Prepare OceanBase driver file

BenchmarkSQL connects to various databases through jdbc. The test tenant of OceanBase is Oracle type, so you need to borrow the lib/oracle directory and put the relevant jar package into it. The OceanBase client - *. Jar is provided by OceanBase, and other jar packages can be obtained from the Internet.

[admin@xxx /home/admin/benchmarksql-5.0]$ll lib/oracle/ total 3728 -rwxr-xr-x 1 admin admin 52988 Jul 12 2019 commons-cli-1.3.1.jar -rwxr-xr-x 1 admin admin 245274 Jul 12 2019 commons-lang-2.3.jar -rwxr-xr-x 1 admin admin 2256213 Jul 12 2019 guava-18.0.jar -rwxr-xr-x 1 admin admin 54495 Jul 12 2019 json-20160810.jar -rwxr-xr-x 1 admin admin 1121698 Dec 3 15:04 oceanbase-client-1.0.8.jar -rwxr-xr-x 1 admin admin 174 Jul 12 2019 README.txt -rwxr-xr-x 1 admin admin 76997 Jul 12 2019 toolkit-common-logging-1.10.jar

2. Prepare OB configuration file

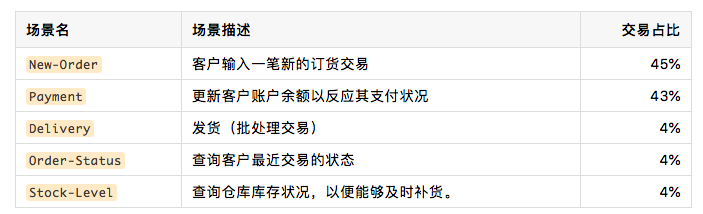

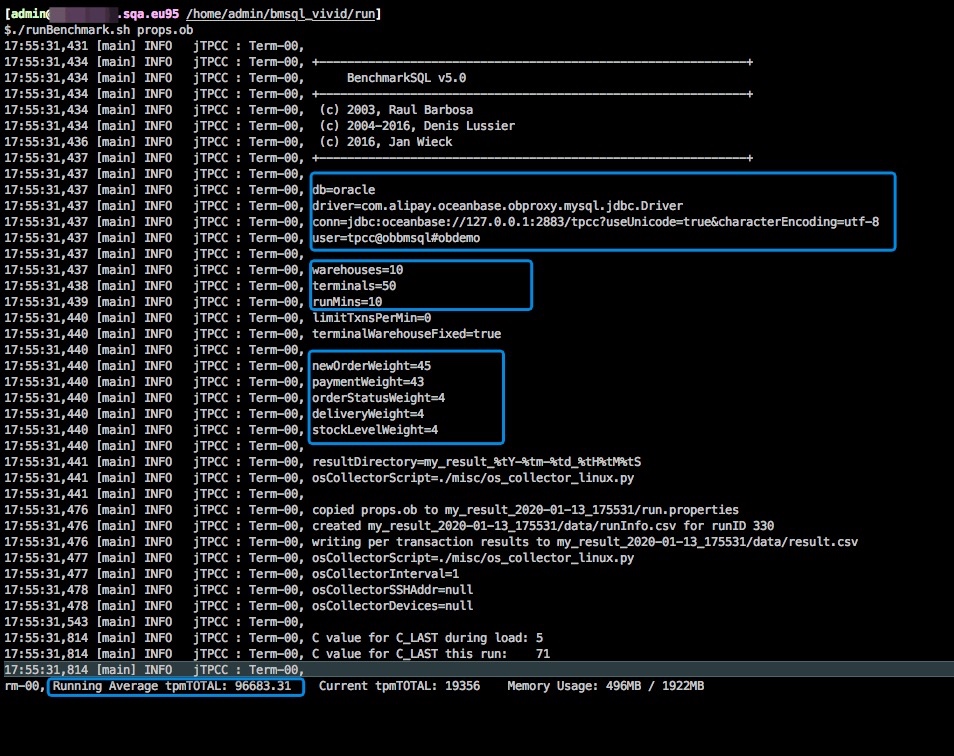

$cat props.ob db=oracle driver=com.alipay.oceanbase.obproxy.mysql.jdbc.Driver conn=jdbc:oceanbase://127.0.0.1:2883/tpcc?useUnicode=true&characterEncoding=utf-8 user=tpcc@obbmsql#obdemo password=123456 warehouses=10 loadWorkers=10 //fileLocation=/home/t4/tmp terminals=10 //To run specified transactions per terminal- runMins must equal zero runTxnsPerTerminal=0 //To run for specified minutes- runTxnsPerTerminal must equal zero runMins=10 //Number of total transactions per minute limitTxnsPerMin=0 //Set to true to run in 4.x compatible mode. Set to false to use the //entire configured database evenly. terminalWarehouseFixed=true //The following five values must add up to 100 newOrderWeight=45 paymentWeight=43 orderStatusWeight=4 deliveryWeight=4 stockLevelWeight=4 // Directory name to create for collecting detailed result data. // Comment this out to suppress. resultDirectory=my_result_%tY-%tm-%td_%tH%tM%tS osCollectorScript=./misc/os_collector_linux.py osCollectorInterval=1 //osCollectorSSHAddr=user@dbhost //osCollectorDevices=net_eth0 blk_sda

Be careful:

- The number of warehouses determines the amount of data. The number of formal pressure measuring warehouses is generally more than 10000.

- The number of loadmakers determines the performance of data loading. If OceanBase tenant resources are very small (especially memory resources), the loading speed should not be too fast; otherwise, it is easy to burst tenant memory.

- The concurrent number (terminals) is the number of concurrent clients that do TPC-C test later. This can be adjusted for each test to facilitate the observation of performance under different pressures.

- Run min is the time of each test, the longer the test result, the better and stable. Because sometimes data access has a preheating process, the effect will be reflected in the memory hit rate.

3. Create BenchmarkSQL related tables

1) Table creation script

The SQL script does not need to be executed directly.

create table bmsql_config ( cfg_name varchar2(30) primary key, cfg_value varchar2(50) ); create tablegroup tpcc_group partition by hash partitions 12; create table bmsql_warehouse ( w_id integer not null, w_ytd decimal(12,2), w_tax decimal(4,4), w_name varchar2(10), w_street_1 varchar2(20), w_street_2 varchar2(20), w_city varchar2(20), w_state char(2), w_zip char(9), primary key(w_id) )tablegroup='tpcc_group' partition by hash(w_id) partitions 12; create table bmsql_district ( d_w_id integer not null, d_id integer not null, d_ytd decimal(12,2), d_tax decimal(4,4), d_next_o_id integer, d_name varchar2(10), d_street_1 varchar2(20), d_street_2 varchar2(20), d_city varchar2(20), d_state char(2), d_zip char(9), PRIMARY KEY (d_w_id, d_id) )tablegroup='tpcc_group' partition by hash(d_w_id) partitions 12; create table bmsql_customer ( c_w_id integer not null, c_d_id integer not null, c_id integer not null, c_discount decimal(4,4), c_credit char(2), c_last varchar2(16), c_first varchar2(16), c_credit_lim decimal(12,2), c_balance decimal(12,2), c_ytd_payment decimal(12,2), c_payment_cnt integer, c_delivery_cnt integer, c_street_1 varchar2(20), c_street_2 varchar2(20), c_city varchar2(20), c_state char(2), c_zip char(9), c_phone char(16), c_since timestamp, c_middle char(2), c_data varchar2(500), PRIMARY KEY (c_w_id, c_d_id, c_id) )tablegroup='tpcc_group' use_bloom_filter=true compress partition by hash(c_w_id) partitions 12; create sequence bmsql_hist_id_seq; create table bmsql_history ( hist_id integer, h_c_id integer, h_c_d_id integer, h_c_w_id integer, h_d_id integer, h_w_id integer, h_date timestamp, h_amount decimal(6,2), h_data varchar2(24) )tablegroup='tpcc_group' use_bloom_filter=true compress partition by hash(h_w_id) partitions 12; create table bmsql_new_order ( no_w_id integer not null , no_d_id integer not null, no_o_id integer not null, PRIMARY KEY (no_w_id, no_d_id, no_o_id) )tablegroup='tpcc_group' use_bloom_filter=true compress partition by hash(no_w_id) partitions 12; create table bmsql_oorder ( o_w_id integer not null, o_d_id integer not null, o_id integer not null, o_c_id integer, o_carrier_id integer, o_ol_cnt integer, o_all_local integer, o_entry_d timestamp, PRIMARY KEY (o_w_id, o_d_id, o_id) )tablegroup='tpcc_group' use_bloom_filter=true compress partition by hash(o_w_id) partitions 12; create table bmsql_order_line ( ol_w_id integer not null, ol_d_id integer not null, ol_o_id integer not null, ol_number integer not null, ol_i_id integer not null, ol_delivery_d timestamp, ol_amount decimal(6,2), ol_supply_w_id integer, ol_quantity integer, ol_dist_info char(24), PRIMARY KEY (ol_w_id, ol_d_id, ol_o_id, ol_number) )tablegroup='tpcc_group' use_bloom_filter=true compress partition by hash(ol_w_id) partitions 12; create table bmsql_item ( i_id integer not null, i_name varchar2(24), i_price decimal(5,2), i_data varchar2(50), i_im_id integer, PRIMARY KEY (i_id) )use_bloom_filter=true compress locality='F,R{all_server}@zone1, F,R{all_server}@zone2, F,R{all_server}@zone3' primary_zone='zone1' duplicate_scope='cluster'; create table bmsql_stock ( s_w_id integer not null, s_i_id integer not null, s_quantity integer, s_ytd integer, s_order_cnt integer, s_remote_cnt integer, s_data varchar2(50), s_dist_01 char(24), s_dist_02 char(24), s_dist_03 char(24), s_dist_04 char(24), s_dist_05 char(24), s_dist_06 char(24), s_dist_07 char(24), s_dist_08 char(24), s_dist_09 char(24), s_dist_10 char(24), PRIMARY KEY (s_w_id, s_i_id) )tablegroup='tpcc_group' use_bloom_filter=true compress partition by hash(s_w_id) partitions 12;

Be careful:

- The number of partitions in the table creation statement can be adjusted according to the actual situation, which is related to the number of cluster nodes. If the cluster is 3 (1-1-1), it is recommended to be 6 or multiple of 6; if the cluster is 6 (2-2-2), it is recommended to be 12 or multiple of 12; if the cluster is 9 (3-3-3), it is recommended to be 36 or multiple of 36. In this way, the number of partitions on each node can be balanced as much as possible during the later elastic scaling test.

- The bmsql item above uses the copy table function, and there will be a copy on all nodes of the tenant. Of course, there is always only one primary replica. Please refer to OceanBase transaction engine features and application practice sharing for the function introduction of replication table.

- The table creation statement does not contain the non primary key index, which is to load the data later for faster performance.

2) build tables

./runSQL.sh props.ob ./sql.oceanbase/tableCreates.sql

After the table is created, you can view the primary replica distribution

SELECT t1.tenant_id,t1.tenant_name,t2.database_name,t3.table_id,t3.table_Name,t3.tablegroup_id,t3.part_num,t4.partition_Id,t4.zone,t4.svr_ip,t4.role, round(t4.data_size/1024/1024) data_size_mb from `gv$tenant` t1 join `gv$database` t2 on (t1.tenant_id = t2.tenant_id) join gv$table t3 on (t2.tenant_id = t3.tenant_id and t2.database_id = t3.database_id and t3.index_type = 0) left join `__all_virtual_meta_table` t4 on (t2.tenant_id = t4.tenant_id and ( t3.table_id = t4.table_id or t3.tablegroup_id = t4.table_id ) and t4.role in (1)) where t1.tenant_id = 1001 order by t3.tablegroup_id, t4.partition_Id, t3.table_name ;

4. Loading data

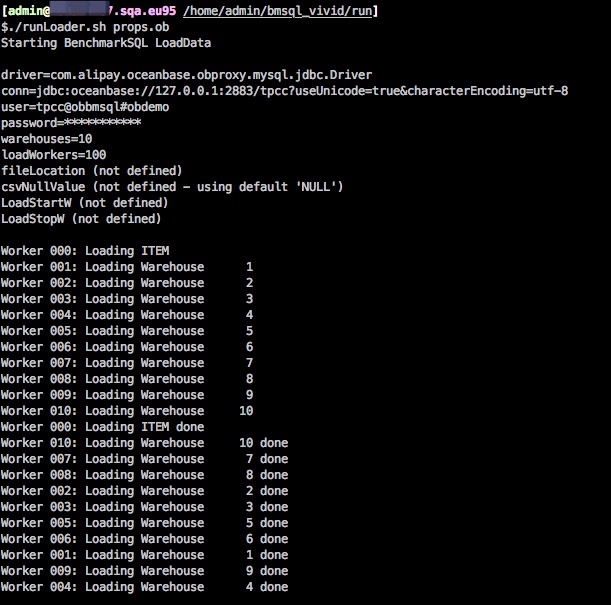

1) Start loading data

./runLoader.sh props.ob

2) Observe data loading performance

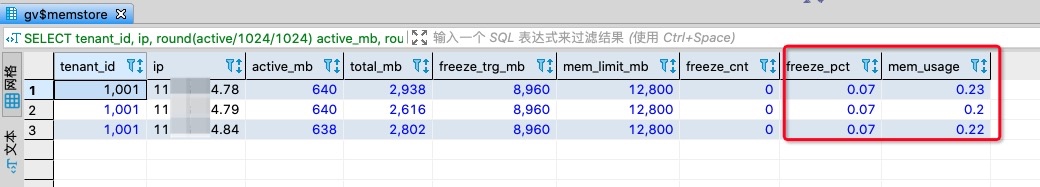

In order to observe the data write speed, you can repeatedly execute the following SQL under the sys tenant, mainly to observe the incremental memory growth rate and the total incremental memory, and whether it is close to the total incremental memory limit.

SELECT tenant_id, ip, round(active/1024/1024) active_mb, round(total/1024/1024) total_mb, round(freeze_trigger/1024/1024) freeze_trg_mb, round(mem_limit/1024/1024) mem_limit_mb , freeze_cnt , round((active/freeze_trigger),2) freeze_pct, round(total/mem_limit, 2) mem_usage FROM `gv$memstore` WHERE tenant_id IN (1001) ORDER BY tenant_id, ip;

Of course, another way to watch data load is to use monitoring. OCP monitoring or dooba script monitoring.

python dooba.py -h 127.1 -uroot@sys#obdemo -P2883 -p123456

After dooba enters, the default is sys tenant. Select business tenant by letter lowercase 'c'. Press the number '1' to view the help, the number '2' to view the tenant overview, the number '3' to view the tenant's machine performance information, press TAB to switch the current focus, press the letter lowercase 'd' to delete the current TAB, and press the letter capital R to restore all tabs. NET TAB in the overview has no meaning to be deleted to save screen space.

5. index

There are only two indexes. Since the related table is a partitioned table, you can build a global index or a local index. We build local indexes.

$cat ./sql.oceanbase/indexCreates.sql create index bmsql_customer_idx1 on bmsql_customer (c_w_id, c_d_id, c_last, c_first) local; create index bmsql_oorder_idx1 on bmsql_oorder (o_w_id, o_d_id, o_carrier_id, o_id) local;

Start indexing. OceanBase indexing will return soon, and index building is asynchronous.

./runSQL.sh props.ob ./sql.oceanbase/indexCreates.sql

6. Data verification

Check the amount of data in each table

obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_CONFIG; *+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_STOCK;+----------+ | COUNT(*) | +----------+ | 4 | +----------+ 1 row in set (0.06 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_WAREHOUSE; +----------+ | COUNT(*) | +----------+ | 10 | +----------+ 1 row in set (0.06 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_DISTRICT; +----------+ | COUNT(*) | +----------+ | 100 | +----------+ 1 row in set (0.06 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_CUSTOMER; +----------+ | COUNT(*) | +----------+ | 300000 | +----------+ 1 row in set (0.34 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_HISTORY; +----------+ | COUNT(*) | +----------+ | 300000 | +----------+ 1 row in set (0.10 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_NEW_ORDER; +----------+ | COUNT(*) | +----------+ | 90000 | +----------+ 1 row in set (0.07 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_OORDER; +----------+ | COUNT(*) | +----------+ | 300000 | +----------+ 1 row in set (0.11 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_ORDER_LINE; +----------+ | COUNT(*) | +----------+ | 3001782 | +----------+ 1 row in set (0.27 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_ITEM; +----------+ | COUNT(*) | +----------+ | 100000 | +----------+ 1 row in set (0.08 sec) obclient> select /*+ parallel(16) read_consistency(weak) */ count(*) from TPCC.BMSQL_STOCK; +----------+ | COUNT(*) | +----------+ | 1000000 | +----------+ 1 row in set (0.63 sec)

In order to avoid the generated data not conforming to the specification (such as transaction failure caused by intermediate error), run the following verification script

#!/usr/bin/sh cc1=" SELECT /*+ no_use_px parallel(8) */ * FROM( SELECT w.w_id, w.w_ytd, d.sum_d_ytd FROM bmsql_warehouse w, (SELECT /*+ no_use_px parallel(8) */ d_w_id, sum(d_ytd) sum_d_ytd FROM bmsql_district GROUP BY d_w_id) d WHERE w.w_id= d.d_w_id ) x WHERE w_ytd != sum_d_ytd; " cc2=" SELECT /*+ no_use_px parallel(8) */ * FROM( SELECT d.d_w_id, d.d_id, d.d_next_o_id, o.max_o_id, no.max_no_o_id FROM bmsql_district d, (SELECT /*+ no_use_px parallel(8) */ o_w_id, o_d_id, MAX(o_id) max_o_id FROM bmsql_oorder GROUP BY o_w_id, o_d_id) o, (SELECT /*+ no_use_px parallel(8) */ no_w_id, no_d_id, MAX(no_o_id) max_no_o_id FROM bmsql_new_order GROUP BY no_w_id, no_d_id) no WHERE d.d_w_id= o.o_w_id AND d.d_w_id= no.no_w_id AND d.d_id= o.o_d_id AND d.d_id= no.no_d_id ) x WHERE d_next_o_id - 1!= max_o_id OR d_next_o_id - 1!= max_no_o_id; " cc3=" SELECT /*+ no_use_px paratLel(8) */ * FROM( SELECT /*+ no_use_px parallel(8) */ no_w_id, no_d_id, MAX(no_o_id) max_no_o_id, MIN(no_o_id) min_no_o_id, COUNT(*) count_no FROM bmsql_new_order GROUP BY no_w_id, no_d_Id ) x WHERE max_no_o_id - min_no_o_id+ 1!= count_no; " cc4=" SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT o.o_w_id, o.o_d_id, o.sum_o_ol_cnt, ol.count_ol FROM (SELECT /*+ no_use_px parallel(8) */ o_w_id, o_d_id, SUM(o_ol_cnt) sum_o_ol_cnt FROM bmsql_oorder GROUP BY o_w_id, o_d_id) o, (SELECT /*+ no_use_px parallel(8) */ ol_w_id, ol_d_id, COUNT(*) count_ol FROM bmsql_order_line GROUP BY ol_w_id, ol_d_id) ol WHERE o.o_w_id = ol.ol_w_id AND o.o_d_id = ol.ol_d_id ) x WHERE sum_o_ol_cnt != count_ol; " cc5=" SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT o.o_w_id, o.o_d_id, o.o_id, o.o_carrier_id, no.count_no FROM bmsql_oorder o, (SELECT /*+ no_use_px parallels) */ no_w_id, no_d_id, no_o_id, COUNT(*) count_no FROM bmsql_new_order GROUP BY no_w_id, no_d_id, no_o_id) no WHERE o.o_w_id = no.no_w_id AND o.o_d_id = no.no_d_id AND o.o_id = no.no_o_id ) x WHERE (o_carrier_id IS NULL AND count_no = 0) OR (o_carrier_id IS NOT NULL AND count_no != 0); " cc6=" SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT o.o_w_id, o.o_d_id, o.o_id, o.o_ol_cnt, ol.count_ol FROM bmsql_oorder o, (SELECT /*+ no_use_px parallel(8) */ ol_w_id, ol_d_id, ol_o_id, COUNT(*) count_ol FROM bmsql_order_line GROUP BY ol_w_id, ol_d_id, ol_o_id) ol WHERE o.o_w_id = ol.ol_w_id AND o.o_d_id = ol.ol_d_id AND o.o_id = ol.ol_o_id ) x WHERE o_ol_cnt != count_ol; " cc7=" SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT o.o_w_id, o.o_d_id, o.o_id, o.o_ol_cnt, ol.count_ol FROM bmsql_oorder o, (SELECT /*+ no_use_px parallel(8) */ ol_w_id, ol_d_id, ol_o_id, COUNT(*) count_ol FROM bmsql_order_line GROUP BY ol_w_id, ol_d_id, ol_o_id) ol WHERE o.o_w_id = ol.ol_w_id AND o.o_d_id = ol.ol_d_id AND o.o_id = ol.ol_o_id ) x WHERE o_ol_cnt != count_ol; " cc7=" SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT /*+ no_use_px parallel(8) */ ol.ol_w_id, ol.ol_d_id, ol.ol_o_id, ol.ol_delivery_d, o.o_carrier_id FROM bmsql_order_line ol, bmsql_oorder o WHERE ol.ol_w_id = o.o_w_id AND ol.ol_d_id = o.o_d_id AND ol.ol_o_id = o.o_id ) x WHERE (ol_delivery_d IS NULL AND o_carrier_id IS NOT NULL) OR (ol_delivery_d IS NOT NULL AND o_carrier_id IS NULL); " cc8=" SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT w.w_id, w.w_ytd, h.sum_h_amount FROM bmsql_warehouse w, (SELECT /*+ no_use_px parallel(8) */ h_w_id, SUM(h_amount) sum_h_amount FROM bmsql_history GROUP BY h_w_id) h WHERE w.w_id = h.h_w_id) x WHERE w_ytd != sum_h_amount; " cc9=" SELECT /*+ no_use_px parallel(8) */ * FROM ( SELECT d.d_w_id, d.d_id, d.d_ytd, h.sum_h_amount FROM bmsql_district d, (SELECT /*+ no_use_px parallel(8) */ h_w_id, h_d_id, SUM(h_amount) sum_h_amount FROM bmsql_history GROUP BY h_w_id, h_d_id) h WHERE d.d_w_id = h.h_w_id AND d.d_id = h.h_d_id ) x WHERE d_ytd != sum_h_amount; " cc_list="$cc1|$cc2|$cc3|$cc4|$cc5|$cc6|$cc7|$cc8|$cc9" oldIFS=$IFS IFS="|" counter=0 for sql in $cc_list do let counter++ echo `date '+%F %X'`" cc$counter start" obclient -Dtpcc -h127.1 -P2883 -utpcc@obbmsql#obdemo -p123456 -A -c -e "$sql" #echo $? if [[ $? -ne 0 ]];then IFS=$oldIFS echo `date '+%F %X'`" cc$counter failed" exit 1 fi echo `date '+%F %X'`" cc$counter finished" done IFS=$oldIFS

BenchmarkSQL TPC-C scenario analysis

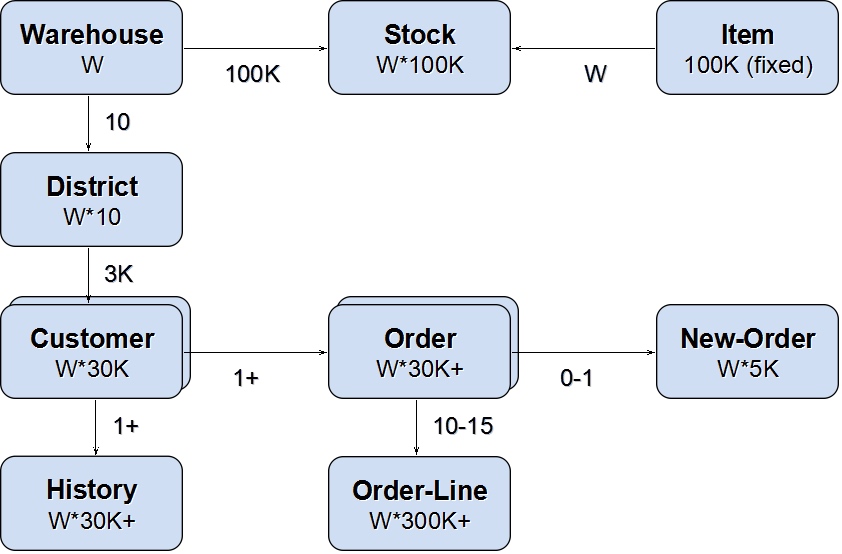

1. E-R model

2. scene SQL

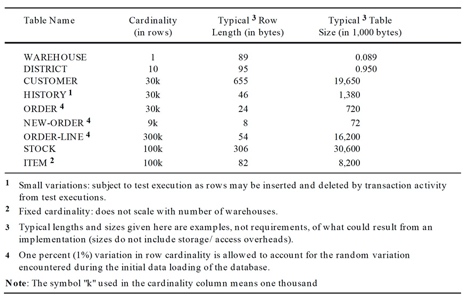

There are five types of transactions to be processed by TPC-C system:

The scale of the scenario is defined in the database configuration file. Here is the default. For the first four types of transactions, the response time is required to be within 5 seconds; for inventory status query transactions, the response time is required to be within 20 seconds.

These five types of Transactions Act on the nine tables shown in Figure 1. Transaction operation types include update, insert, delete and cancel operations.

The following is the transaction SQL of TPCC (which is heavy in the past, but may not be complete) that I got through the full SQL audit of OceanBase in advance.

1) Scenario 1: new order

SELECT d_tax, d_next_o_id FROM bmsql_district WHERE d_w_id = 778 AND d_id = 5 FOR UPDATE; SELECT c_discount, c_last, c_credit, w_tax FROM bmsql_customer JOIN bmsql_warehouse ON (w_id = c_w_id) WHERE c_w_id = 778 AND c_d_id = 5 AND c_id = 2699; UPDATE bmsql_district SET d_next_o_id = d_next_o_id + 1 WHERE d_w_id = 778 AND d_id = 5 ; INSERT INTO bmsql_oorder ( o_id, o_d_id, o_w_id, o_c_id, o_entry_d, o_ol_cnt, o_all_local) VALUES (5686, 5, 778, 2699, timestamp '2020-01-04 13:49:34.137', 8, 1); INSERT INTO bmsql_new_order ( no_o_id, no_d_id, no_w_id) VALUES (5686, 5, 778); SELECT i_price, i_name, i_data FROM bmsql_item WHERE i_id = 7752 ; -- Cycle 8 times SELECT s_quantity, s_data, s_dist_01, s_dist_02, s_dist_03, s_dist_04, s_dist_05, s_dist_06, s_dist_07, s_dist_08, s_dist_09, s_dist_10 FROM bmsql_stock WHERE s_w_id = 778 AND s_i_id = 7752 FOR UPDATE; -- Cycle 8 times SHOW VARIABLES WHERE Variable_name = 'tx_read_only'; UPDATE bmsql_stock SET s_quantity = 47, s_ytd = s_ytd + 8, s_order_cnt = s_order_cnt + 1, s_remote_cnt = s_remote_cnt + 0 WHERE s_w_id = 778 AND s_i_id = 7752; -- Cycle 8 times SHOW VARIABLES WHERE Variable_name = 'tx_read_only'; INSERT INTO bmsql_order_line ( ol_o_id, ol_d_id, ol_w_id, ol_number, ol_i_id, ol_supply_w_id, ol_quantity, ol_amount, ol_dist_info) VALUES (5686, 5, 778, 1, 7752, 778, 8, 589.36, 'lYvcNHkOvt3iNoBb5W29umGO'); -- Cycle 8 times COMMIT;

2) Scenario 2: new order

SELECT c_id FROM bmsql_customer WHERE c_w_id = 778 AND c_d_id = 2 AND c_last = 'PRICALLYPRES' ORDER BY c_first; SELECT c_first, c_middle, c_last, c_balance FROM bmsql_customer WHERE c_w_id = 778 AND c_d_id = 2 AND c_id = 2694; SELECT o_id, o_entry_d, o_carrier_id FROM bmsql_oorder WHERE o_w_id = 778 AND o_d_id = 2 AND o_c_id = 2694 AND o_id = ( SELECT max(o_id) FROM bmsql_oorder WHERE o_w_id = 778 AND o_d_id = 2 AND o_c_id = 2694 ); SELECT ol_i_id, ol_supply_w_id, ol_quantity, ol_amount, ol_delivery_d FROM bmsql_order_line WHERE ol_w_id = 778 AND ol_d_id = 2 AND ol_o_id = 4494 ORDER BY ol_w_id, ol_d_id, ol_o_id, ol_number; ROLLBACK;

3) Scenario 3: Payment

UPDATE bmsql_district SET d_ytd = d_ytd + 4806.11 WHERE d_w_id = 778 AND d_id = 10; SELECT d_name, d_street_1, d_street_2, d_city, d_state, d_zip FROM bmsql_district WHERE d_w_id = 778 AND d_id = 10; UPDATE bmsql_warehouse SET w_ytd = w_ytd + 4806.11 WHERE w_id = 778; SELECT w_name, w_street_1, w_street_2, w_city, w_state, w_zip FROM bmsql_warehouse WHERE w_id = 778 ; SELECT c_id FROM bmsql_customer WHERE c_w_id = 778 AND c_d_id = 10 AND c_last = 'ESEBAROUGHT' ORDER BY c_first; SELECT c_first, c_middle, c_last, c_street_1, c_street_2, c_city, c_state, c_zip, c_phone, c_since, c_credit, c_credit_lim, c_discount, c_balance FROM bmsql_customer WHERE c_w_id = 778 AND c_d_id = 10 AND c_id = 502 FOR UPDATE; UPDATE bmsql_customer SET c_balance = c_balance - 4806.11, c_ytd_payment = c_ytd_payment + 4806.11, c_payment_cnt = c_payment_cnt + 1 WHERE c_w_id = 778 AND c_d_id = 10 AND c_id = 502; INSERT INTO bmsql_history ( h_c_id, h_c_d_id, h_c_w_id, h_d_id, h_w_id, h_date, h_amount, h_data) VALUES (502, 10, 778, 10, 778, timestamp '2020-01-04 13:49:34.148', 4806.11, 'HfYovpM6 b6aJtf2Xk6'); COMMIT;

4) Scenario 4:

SELECT count(*) AS low_stock FROM ( SELECT s_w_id, s_i_id, s_quantity FROM bmsql_stock WHERE s_w_id = 778 AND s_quantity < 10 AND s_i_id IN ( SELECT ol_i_id FROM bmsql_district JOIN bmsql_order_line ON ol_w_id = d_w_id AND ol_d_id = d_id AND ol_o_id >= d_next_o_id - 20 AND ol_o_id < d_next_o_id WHERE d_w_id = 778 AND d_id = 1 ) ); ROLLBACK;

5) Scenario 5:

SELECT no_o_id FROM bmsql_new_order WHERE no_w_id = 778 AND no_d_id = 1 ORDER BY no_o_id ASC; DELETE FROM bmsql_new_order WHERE no_w_id = 778 AND no_d_id = 1 AND no_o_id = 4488; UPDATE bmsql_oorder SET o_carrier_id = 2 WHERE o_w_id = 778 AND o_d_id = 1 AND o_id = 4488; SELECT o_c_id FROM bmsql_oorder WHERE o_w_id = 778 AND o_d_id = 1 AND o_id = 4488; UPDATE bmsql_order_line SET ol_delivery_d = timestamp '2020-01-04 13:49:34.181' WHERE ol_w_id = 778 AND ol_d_id = 1 AND ol_o_id = 4488; SELECT sum(ol_amount) AS sum_ol_amount FROM bmsql_order_line WHERE ol_w_id = 778 AND ol_d_id = 1 AND ol_o_id = 4488; UPDATE bmsql_customer SET c_balance = c_balance + 3733.14, c_delivery_cnt = c_delivery_cnt + 1 WHERE c_w_id = 778 AND c_d_id = 1 AND c_id = 1260; <---Cycle 8 times---> commit

Note: there may be transaction SQL not found.

3. TPC-C output index

There are two main indicators of TPC-C test results:

- Traffic indicator (tpmC): describes the number of new order transactions that the system can process per minute while executing Payment, order status, Delivery and stock level transactions. The larger the flow index value is, the better. tpm is the abbreviation of transactions per minute; C refers to the C benchmark program in TPC. It is defined as the number of new orders processed by the system per minute. Note that while processing new orders, the system also processes other four types of transaction requests as shown in Figure 1. As can be seen from Figure 1, new order requests cannot exceed 45% of all transaction requests. Therefore, when the performance of a system is 1000 tpmC, it actually processes more than 2000 requests per minute.

- Price/tpmC: the ratio of test system price to traffic index. The smaller the price performance, the better.

Run BenchmarkSQL TPC-C test

1. OceanBase memory freeze and merge

A large amount of data has been loaded in front, and the increments of OceanBase are all in memory. You need to make a major freeze to release the incremental memory. There are two steps to this event. One is the freezing operation, which is very fast. The second is the merge operation, which is related to the amount of incremental data. It usually takes several minutes or dozens of minutes.

It is recommended to do a major freeze event to free memory every time the test is repeated. The disadvantage is that the memory data access in the subsequent test needs another preheating process.

1) Observe incremental memory usage

select tenant_id, ip, round(active/1024/1024) active_mb, round(total/1024/1024) total_mb, round(freeze_trigger/1024/1024) freeze_trg_mb, round(mem_limit/1024/1024) mem_limit_mb, freeze_cnt, round(total/mem_limit,2) total_pct from `gv$memstore` where tenant_id>1001 order by tenant_id;

2) Initiate memory major freeze event

ALTER SYSTEM major freeze;

3) Observe consolidation progress

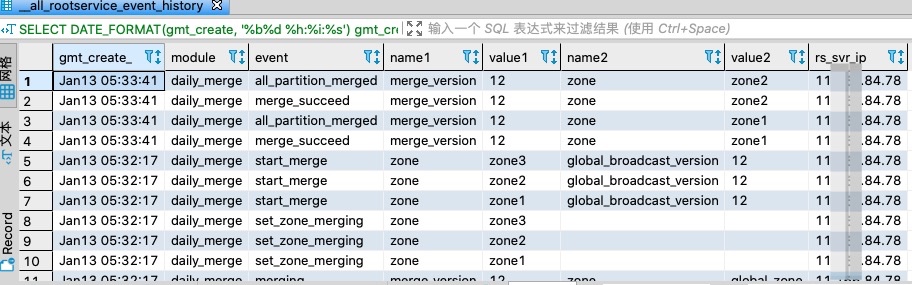

Observe merge events

SELECT DATE_FORMAT(gmt_create, '%b%d %H:%i:%s') gmt_create_ , module, event, name1, value1, name2, value2, rs_svr_ip FROM __all_rootservice_event_history WHERE 1 = 1 AND module IN ('daily_merge') ORDER BY gmt_create DESC LIMIT 100;

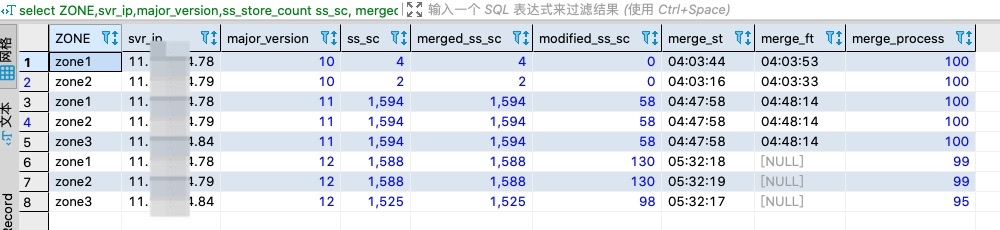

Observe consolidation progress

select ZONE,svr_ip,major_version,ss_store_count ss_sc, merged_ss_store_count merged_ss_sc, modified_ss_store_count modified_ss_sc, date_format(merge_start_time, "%h:%i:%s") merge_st, date_format(merge_finish_time,"%h:%i:%s") merge_ft, merge_process from `__all_virtual_partition_sstable_image_info` s order by major_version, zone, svr_ip ;

2. Run TPC-C test

1) Run test program

$./runBenchmark.sh props.ob

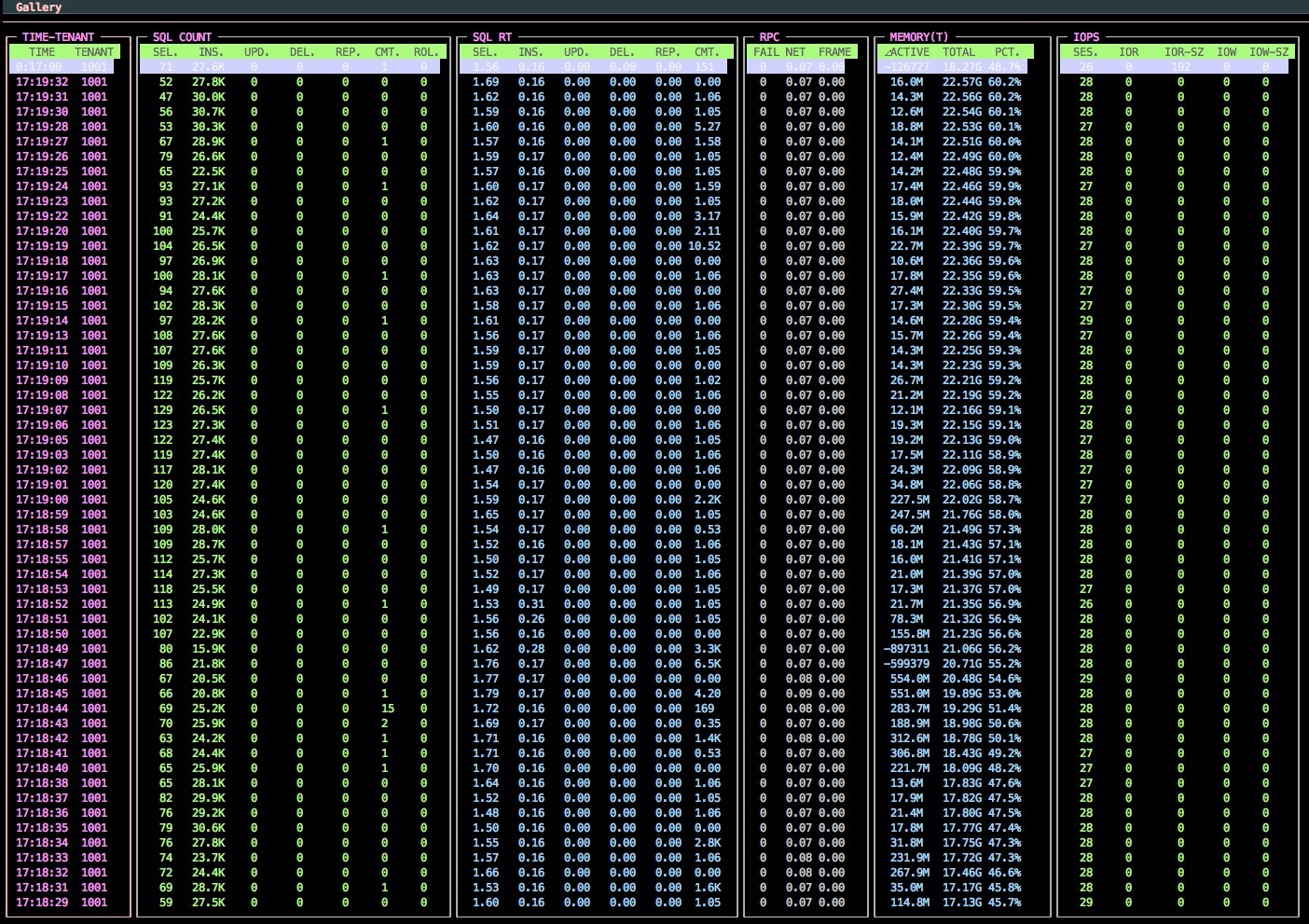

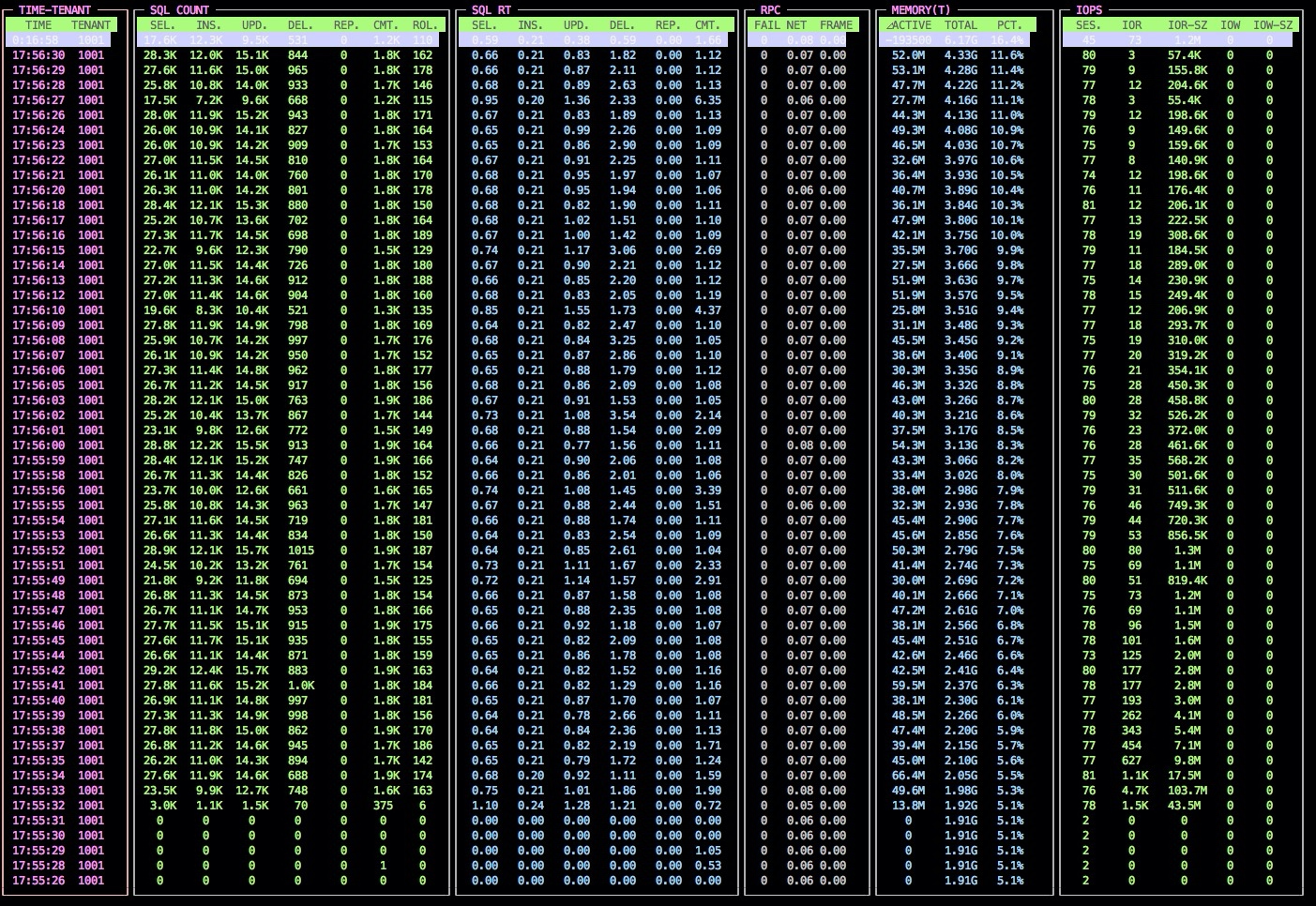

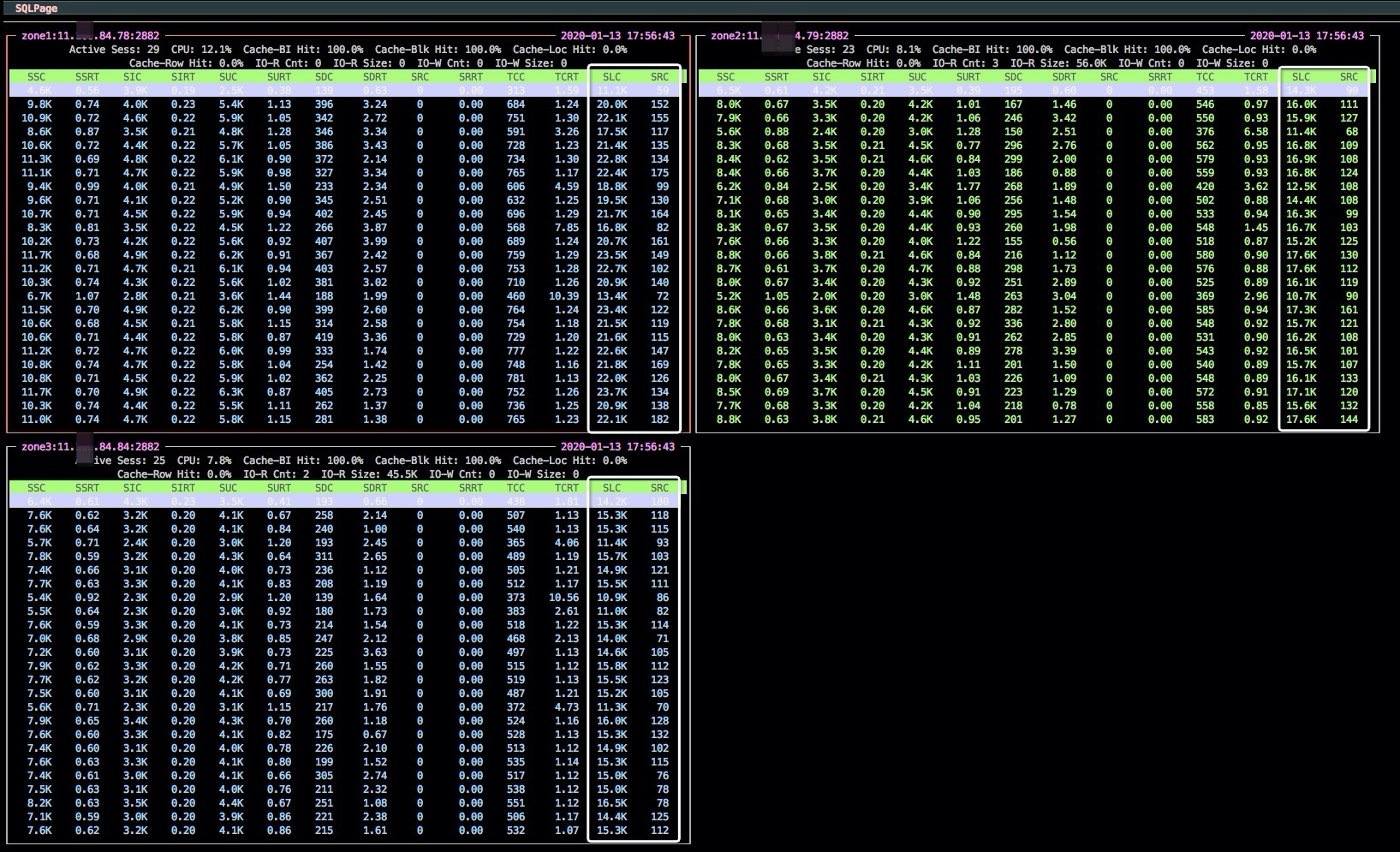

2) Performance monitoring

Be careful:

This monitoring interface focuses on QPS/TPS, RT, increment of incremental memory and the proportion of total memory. In addition, we can see that there are still many physical read IO during the test.

Be careful:

This monitoring interface focuses on the QPS and TPS distribution of each node and the proportion of remote SQL in the total QPS (SRC/SLC). TPC-C business definition will have about 1% of remote warehouse transaction transactions. In OceanBase, this transaction has a certain probability of being a distributed transaction.

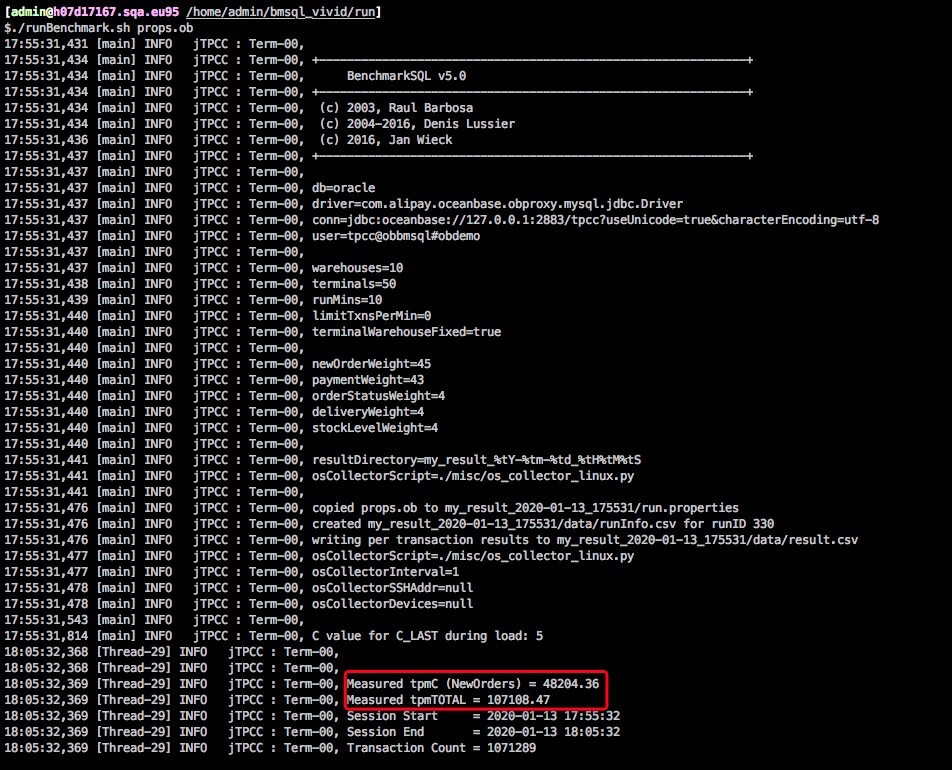

3) TPC-C Report

Results are generated after the run.

From the graph, the tpmC result is 48204. The total resource of this business tenant is 20C25G*3. The number of warehouses is only 10. If the machine is better and 10000 warehouses are built, the result should be higher.

The run also generates a folder

$ll my_result_2020-01-13_175531/ total 16 drwxrwxr-x 2 admin admin 4096 Jan 13 17:55 data -rw-rw-r-- 1 admin admin 5130 Jan 13 18:10 report.html -rwxr-xr-x 1 admin admin 1050 Jan 13 17:55 run.properties

The above is the whole process of running TPC-C test program through BenchmarkSQL. Students who are interested in it can also follow the steps above. For more feedback, please leave a message in the comment area.

Apply for free experience of oceanbase version 2.2 now

"Oceanbase version 2.2" is officially launched on the official website! Oceanbase version 2.2 is a stable version that successfully supports tmall's double 11 promotion in 2019. It is also used for TPC-C testing and ranked first in TPC-C performance. Oceanbase version 2.2 is widely used not only in ant financial services and online banking, but also in some financial institutions.

Want to experience oceanbase version 2.2 now?

Get links for free:

https://oceanbase.alipay.com/download/resource

If you encounter problems during installation and use and want to have technical exchanges with frontline experts of OceanBase, we provide two channels for developer users:

Join OceanBase technology exchange nail group, open the nail search group number: 21949783 (Note: OB 2.2) to join

We attach great importance to the experience and experience from every developer user, and hope to get your valuable feedback.