After more than a week of learning and experimenting, I believe everyone is now familiar with Python's syntax, structure, and some of its basic packages. We are officially ready to start collecting, but there is a big problem. As with the earlier collection from the US bills site, every request takes several lines of boilerplate, and nothing carries over cookies, the referer, or similar state from one request to the next, so plain urllib calls do not meet our day-to-day needs. So let's work out what a collection class for everyday use should include.

1. After the collection class is instantiated, it already carries some default header information, such as user-agent and accept, so we don't have to add it by hand each time, although we still can

2. After a request is executed, read the response headers, analyze the data in them, record the state of that request, and carry the obtained information over to the next call of the collection method

3. It can collect plain text content or binary stream, which is convenient for collecting pages and downloading relevant documents

4. Support different character encodings and content encodings, such as gbk and utf8, or gzip and deflate

5. Support different request methods, such as get, put, post, delete, head, etc

6. The status code is returned whether or not the request raises an exception

7. Header information of all kinds can be forged and added, including cookies and custom headers such as Oauth:xxxx or signature:XXX

8. Automatically follow redirects such as 301 and 302, as well as meta refresh redirects

9. Automatically complete relative URLs, so that we don't have to resolve extracted links ourselves against the page they came from

10. If possible, try to support asynchronous collection

11. If possible, try to support event delegation

12. If possible, try to support proxy

13. If possible, try to support resumable (breakpoint) downloads and uploads

These are the requirements Lao Gu has distilled from several years of writing collectors in C#. Once all of them are met, collection becomes a very simple thing
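As a small illustration of requirement 9, the standard library can already resolve an extracted link against the page it came from. This is only a sketch of the idea, not the way the class will necessarily do it later; the function name `complete_url` and the example.com addresses are made up for the example.

```python
from urllib.parse import urljoin

def complete_url(page_url, link):
    """Resolve a link found on page_url into an absolute URL."""
    return urljoin(page_url, link)

page = 'https://example.com/news/index.html'  # hypothetical page address
print(complete_url(page, 'detail.html'))      # relative to the current folder
print(complete_url(page, '/about'))           # relative to the site root
print(complete_url(page, '../img/logo.png'))  # one folder up
```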

Let's try to realize the above requirements step by step

------------------------------------------------------------------------------------------------

Let's keep putting the class under the custom folder mentioned earlier. If you don't know about it, see "Illiterate Python entry diary: on the fifth day, build a python debugging environment and preliminarily explore the use of pymssql", which describes how to create a user-defined class after installing Spyder

To avoid confusion with other packages, we name this class's folder spider, which makes it obvious that it holds the collection class

Let's start with a basic definition and use Ajax as the class name. It is perfectly normal for the package name to differ from the class name

    class Ajax:
        def __init__(self):
            self.version = '0.1'

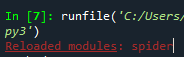

from spider import Ajax # Reloaded modules: spider

Well, the class definition is successful. Then, let's start to implement our requirements step by step

The first step is to define an enumeration of request methods. Since there are only a few of them, we restrict the allowed values first and leave the actual implementation for later

So, let's define the enumeration

    from enum import Enum

    class Ajax:
        def __init__(self):
            self.version = '0.1'
            self.agent = 'Mozilla/5.0 (Windows NT 5.1; rv:11.0) Gecko/20100101 Firefox/11.0'
            self.refer = ''
            self.current_url = ''
            self.method = self.Method.GET

        class Method(Enum):
            GET = 1
            POST = 2
            HEAD = 3
            PUT = 4
            DELETE = 5

Because the method is only used when making HTTP requests, we define Method as a nested type of Ajax, along with a few instance variables. Their names should make their purpose clear, so we won't explain them one by one
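One reason an Enum is convenient here is that each member's name is already the HTTP verb as a string. A minimal standalone sketch (the helper `verb` is hypothetical and not part of the Ajax class):

```python
from enum import Enum

class Method(Enum):
    GET = 1
    POST = 2
    HEAD = 3

def verb(method):
    """Return the HTTP verb string for a Method member."""
    return method.name

print(verb(Method.POST))
# Members can also be looked up by name, e.g. from a config value
print(Method['HEAD'] is Method.HEAD)
```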

Next, we encapsulate the request headers. They form a dictionary, but one assembled from several existing fields, so we expose it as a property

        @property
        def Header(self):
            return {'refer':self.refer
                    ,'user-agent':self.agent
                    ,'accept':self.accept
                    ,'accept-encoding':self.encoding
                    ,'accept-language':self.lang
                    ,'cache-control':self.cache}

    from spider import Ajax
    ajax = Ajax()
    print(ajax.Header)
    # {'refer': '', 'user-agent': 'Mozilla/5.0 (Windows NT 5.1; rv:11.0) Gecko/20100101 Firefox/11.0', 'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8', 'accept-encoding': 'gzip, deflate, br', 'accept-language': 'zh-CN,zh;q=0.9', 'cache-control': 'no-cache'}

Good. For now the request headers look like this. Later we will need to modify them, for example to add extra request headers and cookies, but that can wait. Let's write the request method first and make our first collection with our own class

        def Http(self,url,method=Method.GET,post=None):
            self.current_url = url
            req = request.Request(url)
            for k in self.Header:
                req.add_header(k,self.Header[k])
            req.encoding = self.charset
            res = request.urlopen(req)
            data = res.read()
            self.html = data.decode(encoding=(re.sub('[-]','',self.charset)))
            return self.html

Originally, I saw someone call request.urlopen(req, headers), but that raised an error when I tried it. After a careful Baidu search, it turned out to need the urllib2 package, and pip install urllib2 then showed that it is not an official package. That's fine: since everything is wrapped in a class anyway, all we need is for the headers to be sent, and the built-in add_header does that well without pulling in more packages
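To see what add_header actually stores, a Request object can be built and inspected without sending anything. This is just a sketch; the header values and the example.com address are placeholders.

```python
from urllib import request

headers = {'user-agent': 'demo-agent/0.1', 'accept': 'text/html'}  # example values
req = request.Request('https://example.com/')  # placeholder URL, nothing is sent
for name, value in headers.items():
    req.add_header(name, value)

# urllib stores header names capitalized, e.g. 'User-agent'
print(req.header_items())
```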

Also note that the hyphen is stripped from the charset name with a regular expression before decoding, so utf-8 is passed to decode as utf8. In current Python both spellings name the same codec, so this step is harmless rather than strictly required

from spider import Ajax

ajax = Ajax()

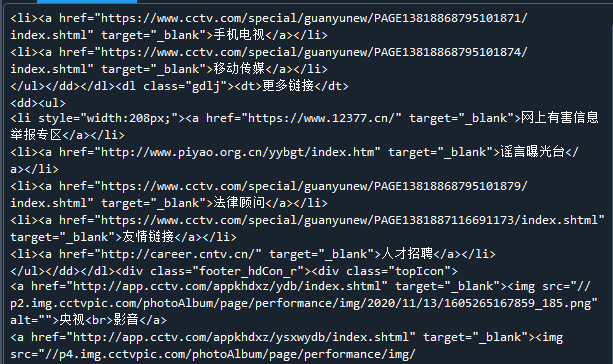

b = ajax.Http('https://www.cctv.com')

print(b)

That completes the first collection with very little effort. Our first requirement, carrying some header information, is met for now. We will certainly adjust it and add much more later, but this will do for the moment

Our first collection succeeded, but we have not yet looked at the response headers, so let's adjust the code and print them

        def Http(self,url,method=Method.GET,post=None):
            self.current_url = url
            req = request.Request(url)
            for k in self.Header:
                req.add_header(k,self.Header[k])
            req.encoding = self.charset
            res = request.urlopen(req,timeout=3)
            print(res.headers)
            data = res.read()
            self.html = data.decode(encoding=re.sub(r'[-]','',self.charset))
            return self.html

    Date: Wed, 23 Jun 2021 14:35:30 GMT
    Content-Type: text/html
    Transfer-Encoding: chunked
    Connection: close
    Expires: Wed, 23 Jun 2021 14:35:39 GMT
    Server: CCTVQCLOUD
    Cache-Control: max-age=180
    X-UA-Compatible: IE=Edge
    Age: 171
    X-Via: 1.1 PSbjwjBGP2sa180:8 (Cdn Cache Server V2.0), 1.1 PSgddgzk5hm168:0 (Cdn Cache Server V2.0), 1.1 chk67:1 (Cdn Cache Server V2.0)
    X-Ws-Request-Id: 60d346b2_PS-PEK-01fXq68_10786-48330

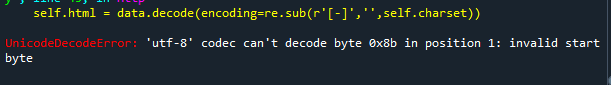

The response headers came back, but now there is a big problem: the code has started raising errors

    self.html = data.decode(encoding=re.sub(r'[-]','',self.charset))
    UnicodeDecodeError: 'utf-8' codec can't decode byte 0x8b in position 1: invalid start byte

Hmm... this error only shows up intermittently. When it does, we return data directly without decoding and look at the first three bytes: \x1f\x8b\x08. Oh ho, this is clearly not an ordinary text encoding. A Baidu search leads to https://blog.csdn.net/weixin_36842174/article/details/88924660 , and there is the answer. Before we were ready for it, we have run into the fourth requirement: the page content is gzip-compressed, so the code must be adjusted
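The bytes \x1f\x8b are the gzip signature, so the check described above can be tried on its own. The following is a minimal sketch, assuming the body is either gzip data or plain bytes; `maybe_gunzip` is a hypothetical helper, not part of the class.

```python
import gzip

GZIP_MAGIC = b'\x1f\x8b'

def maybe_gunzip(data):
    """Decompress data if it starts with the gzip signature, else return it unchanged."""
    if data[:2] == GZIP_MAGIC:
        return gzip.decompress(data)
    return data

packed = gzip.compress(b'<html>hello</html>')
print(packed[:3])            # signature plus the compression-method byte
print(maybe_gunzip(packed))  # the original bytes again
print(maybe_gunzip(b'<html>plain</html>'))
```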

Looking back at the response headers, the gzip hint is right there. Here are the response headers we just received

    Date: Thu, 24 Jun 2021 00:52:04 GMT
    Content-Type: text/html
    Transfer-Encoding: chunked
    Connection: close
    Expires: Thu, 24 Jun 2021 00:54:41 GMT
    Cache-Control: max-age=180
    X-UA-Compatible: IE=Edge
    Content-Encoding: gzip
    Age: 23
    X-Via: 1.1 PS-000-017po25:9 (Cdn Cache Server V2.0), 1.1 PS-TSN-01hDc143:15 (Cdn Cache Server V2.0)
    X-Ws-Request-Id: 60d3d734_PS-TSN-01hOH49_13803-39259

Content-Encoding: gzip. OK, let's set the encoding problem aside for a moment. We should handle the response headers first and then process the body according to them, which takes us back to the second requirement~~~~

Then, start parsing the response header

    import re
    import gzip
    from enum import Enum
    from urllib import request

    class Ajax:
        def __init__(self):
            self.version = '0.1'
            self.agent = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3870.400 QQBrowser/10.8.4405.400'
            self.refer = ''
            self.cache = 'no-cache'
            self.lang = 'zh-CN,zh;q=0.9'
            self.encoding = 'gzip, deflate, br'
            self.accept = 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8'
            self.current_url = ''
            self.method = self.Method.GET
            self.charset = 'utf-8'
            self.__content_encoding = ''
            self.html = ''

        class Method(Enum):
            GET = 1
            POST = 2
            HEAD = 3
            PUT = 4
            DELETE = 5
            DEBUG = 6

        @property
        def Header(self):
            return {'refer':self.refer
                    ,'user-agent':self.agent
                    ,'accept':self.accept
                    ,'accept-encoding':self.encoding
                    ,'accept-language':self.lang
                    ,'cache-control':self.cache}

        def Http(self,url,method=Method.GET,post=None):
            self.current_url = url
            req = request.Request(url)
            for k in self.Header:
                req.add_header(k,self.Header[k])
            req.encoding = self.charset
            res = request.urlopen(req,timeout=3)
            # keep the raw header text, one "name: value" pair per line
            self.__headers = str(res.headers)
            self.__parseHeaders()
            data = res.read()
            enc = re.sub(r'[-]','',self.charset)
            if 'gzip' in self.__content_encoding:
                self.html = gzip.decompress(data).decode(enc)
            else:
                self.html = data.decode(encoding=enc)
            return self.html

        def __parseHeaders(self):
            # lower-case the names so lookups do not depend on the server's spelling
            headers = {n.group(1).lower():n.group(2).strip() for n in re.finditer('([^\r\n:]+):([^\r\n]+)',self.__headers)}
            self.__content_encoding = headers.get('content-encoding','')
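As an alternative to the regular expression, header text in the usual "Name: value" layout can also be parsed with the standard library's email parser, which gives case-insensitive lookups. This is only a sketch under that assumption; the function name `parse_headers` and the sample text are made up for the example.

```python
from email import message_from_string

def parse_headers(raw):
    """Parse 'Name: value' lines into a message object with case-insensitive lookup."""
    return message_from_string(raw)

raw = 'Content-Type: text/html\nContent-Encoding: gzip\nAge: 23\n\n'
headers = parse_headers(raw)
print(headers.get('content-encoding', ''))  # found regardless of letter case
print(repr(headers.get('set-cookie', '')))  # missing headers fall back to ''
```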

That is all the code so far. gzip is now supported; deflate will be dealt with later. The response headers this time contain no Set-Cookie, so we won't handle cookies for now and will come back to them separately
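For deflate, servers may send either a raw deflate stream or one wrapped in a zlib header, so a common approach is to try one and fall back to the other. A minimal sketch with the standard zlib module (`inflate` is a hypothetical helper name):

```python
import zlib

def inflate(data):
    """Decompress a 'deflate' body, trying raw deflate first, then the zlib wrapper."""
    try:
        return zlib.decompress(data, -zlib.MAX_WBITS)
    except zlib.error:
        return zlib.decompress(data)

body = b'<html>deflate test</html>'
wrapped = zlib.compress(body)                 # zlib-wrapped stream
co = zlib.compressobj(wbits=-zlib.MAX_WBITS)  # raw deflate stream
raw = co.compress(body) + co.flush()
print(inflate(wrapped) == body, inflate(raw) == body)
```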

Since the response headers hold nothing else we can use this time, let's move on to the third requirement: collecting either plain text or a binary stream, so that we can both collect pages and download related documents

In fact, visiting a page and downloading a file are both ordinary HTTP requests. The difference is that a page is rendered by the browser, while other resources are saved straight to a file as a binary stream. In other words, our Http method can already reach downloadable resources; it simply has no way to save them

So we create a separate method for downloading

        def Download(self,url,filename,method=Method.GET,post=None):
            req = request.Request(url)
            for k in self.Header:
                req.add_header(k,self.Header[k])
            res = request.urlopen(req,timeout=3)
            data = res.read()
            # write the raw bytes; the file is closed even if the write fails
            with open(filename,'wb') as f:
                f.write(data)

Let's try a first download, again testing with the example file from the earlier XSLT experiment, and save the XML directly to drive D

from spider import Ajax

ajax = Ajax()

ajax.Download('https://www.govinfo.gov/content/pkg/BILLS-117hr3237ih/xml/BILLS-117hr3237ih.xml', r'd:\test.xml')

Good. There is now a test.xml file on drive D, so the download function works. We'll refine the details later, hehe
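For large files, reading the whole body into memory before writing may not be ideal. One possible refinement, shown here only as a sketch, is to copy the response to disk in chunks. The helper `save_stream` is hypothetical, and io.BytesIO stands in for the response object so that the example runs without a network connection.

```python
import io
import os
import shutil
import tempfile

def save_stream(source, filename, chunk_size=64 * 1024):
    """Copy a binary file-like object to filename in chunks."""
    with open(filename, 'wb') as target:
        shutil.copyfileobj(source, target, chunk_size)

# io.BytesIO stands in for the object returned by urlopen()
fake_response = io.BytesIO(b'<bill>demo</bill>')
path = os.path.join(tempfile.gettempdir(), 'download_demo.xml')
save_stream(fake_response, path)
print(os.path.getsize(path), 'bytes written to', path)
```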

Support different character encodings and content encodings, such as gbk and utf8, or gzip and deflate. This requirement is basically in place already: gzip content is handled, and the page encoding can be set through the instance's charset attribute. On to the next one

Support different request methods, such as get, put, post, delete and head. This matters too, because some third-party APIs, cloud storage services for example, require a variety of request methods. Besides the common get and post, put and delete are also very common. As usual, let's start by reading other people's articles: https://blog.csdn.net/qq_35959613/article/details/81068042

Oh, that introduces yet another package, requests. We could introduce it too and, with a small change, support every method. For reference, here is another article: https://www.cnblogs.com/jingdenghuakai/p/11805128.html

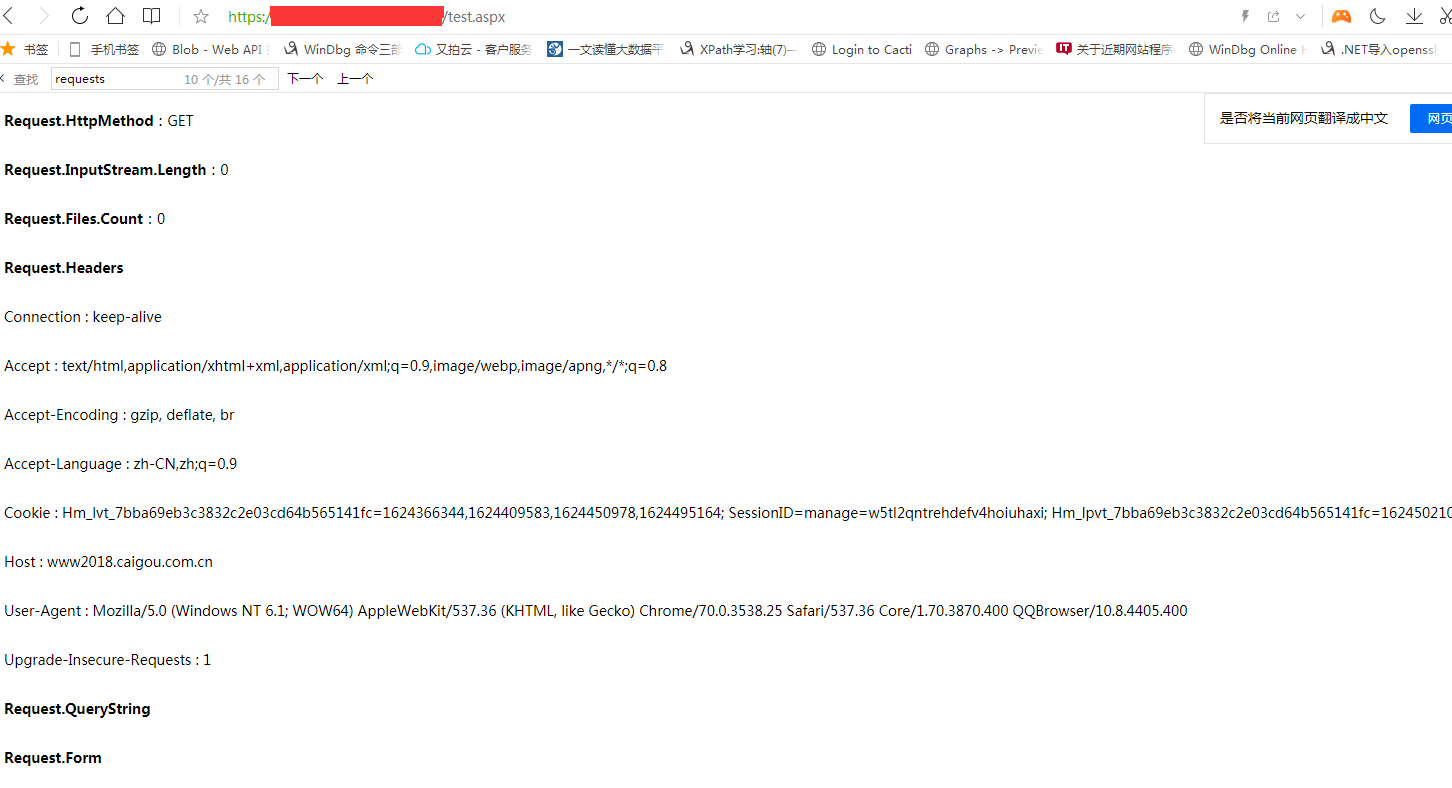

These articles are very good. Let's set up a test environment first, because a live website will not echo back the request details we need. We make a test page that reports everything submitted to it, so that we can keep developing against it

    protected void Page_Load(object sender, EventArgs e)
    {
        Response.Write("<div><b>Request.HttpMethod</b>: " + Request.HttpMethod + "</div>");
        Response.Write("<div><b>Request.InputStream.Length</b>: " + Request.InputStream.Length.ToString() + "</div>");
        Response.Write("<div><b>Request.Files.Count</b>: " + Request.Files.Count.ToString() + "</div>");
        Response.Write("<div><b>Request.Headers</b></div>");
        foreach (string key in Request.Headers.Keys)
        {
            Response.Write("<div>" + key + " : " + Request.Headers[key] + "</div>");
        }
        Response.Write("<div><b>Request.QueryString</b></div>");
        foreach (string key in Request.QueryString.Keys)
        {
            Response.Write("<div>" + key + " : " + Request.QueryString[key] + "</div>");
        }
        Response.Write("<div><b>Request.Form</b></div>");
        foreach (string key in Request.Form.Keys)
        {
            Response.Write("<div>" + key + " : " + Request.Form[key] + "</div>");
        }
    }

Lao Gu uses IIS himself and tests directly with a C# WebForms page. You can create a test page to suit your own setup. As long as it echoes roughly the content above, you can test most of the functionality

This is the result of Lao Gu's test in the browser, for reference

Although the articles above are very good, they also bring in another package. Let's hold off on that and first see whether urllib can do it by itself, simply by setting req.method

        def Http(self,url,method=Method.GET,postdata=None):
            self.current_url = url
            req = request.Request(url)
            for k in self.Header:
                req.add_header(k,self.Header[k])
            req.encoding = self.charset
            req.method = method.name
            res = request.urlopen(req,timeout=3)
            self.__headers = str(res.headers)
            self.__parseHeaders()
            data = res.read()
            enc = re.sub(r'[-]','',self.charset)
            if 'gzip' in self.__content_encoding:
                self.html = gzip.decompress(data).decode(enc)
            else:
                self.html = data.decode(encoding=enc)
            return self.html

    import re
    from spider import Ajax
    ajax = Ajax()
    b = ajax.Http('https://localhost/test.aspx')
    b = re.sub('<div[^<>]*>','\\n',b)
    b = re.sub('<[^<>]+>','',b)
    print(b)

    Request.HttpMethod: GET
    Request.InputStream.Length: 0
    Request.Files.Count: 0
    Request.Headers
    Cache-Control : no-cache
    Connection : close
    Accept : text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8
    Accept-Encoding : gzip, deflate, br
    Accept-Language : zh-CN,zh;q=0.9
    Host : www2018.caigou.com.cn
    User-Agent : Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3870.400 QQBrowser/10.8.4405.400
    Refer :
    Request.QueryString
    Request.Form

    import re
    from spider import Ajax
    ajax = Ajax()
    b = ajax.Http('https://localhost/test.aspx?x=1&y=2',Ajax.Method.POST,'a=1&b=2')
    b = re.sub('<div[^<>]*>','\\n',b)
    b = re.sub('<[^<>]+>','',b)
    print(b)

    Request.HttpMethod: POST
    Request.InputStream.Length: 0
    Request.Files.Count: 0
    Request.Headers
    Cache-Control : no-cache
    Connection : close
    Content-Length : 0
    Accept : text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8
    Accept-Encoding : gzip, deflate, br
    Accept-Language : zh-CN,zh;q=0.9
    Host : www2018.caigou.com.cn
    User-Agent : Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3870.400 QQBrowser/10.8.4405.400
    Refer :
    Request.QueryString
    x : 1
    y : 2
    Request.Form

Oh ho, the HttpMethod really did change. In other words, urllib itself can do this, with no need to introduce other packages. Let's keep improving and support POST first
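The verb urllib is going to use can also be checked without sending anything, through the Request object's get_method(). A short sketch with a placeholder URL:

```python
from urllib import request

url = 'https://example.com/test'  # placeholder URL, nothing is sent

default = request.Request(url)
with_body = request.Request(url, data=b'a=1')
explicit = request.Request(url, method='HEAD')

# Without an explicit method, the verb depends on whether a body is attached
print(default.get_method(), with_body.get_method(), explicit.get_method())
```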

With POST, data is submitted in one of two forms: as a form, or as a raw text stream. There's no way around it, so let's support both, starting with the form

        # requires: import urllib.request, urllib.parse
        def Http(self,url,method=Method.GET,postdata=None):
            self.current_url = url
            enc = re.sub(r'[-]','',self.charset)
            req = urllib.request.Request(url)
            for k in self.Header:
                req.add_header(k,self.Header[k])
            req.encoding = self.charset
            req.method = method.name
            if postdata is not None:
                # a dict becomes a query string; either way the body must be bytes
                if isinstance(postdata,dict):
                    postdata = urllib.parse.urlencode(postdata)
                postdata = postdata.encode(enc)
            res = urllib.request.urlopen(req,timeout=3,data=postdata)
            self.__headers = str(res.headers)
            self.__parseHeaders()
            data = res.read()
            if 'gzip' in self.__content_encoding:
                self.html = gzip.decompress(data).decode(enc)
            else:
                self.html = data.decode(encoding=enc)
            return self.html

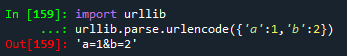

It only takes one extra check on postdata: is it a dictionary? At first, seeing others call urllib.parse.urlencode, I didn't know what it was for. Testing showed that it turns a dict into a query string...

The value I passed, a=1&b=2, is already in that format, so no conversion is needed
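The conversion step can be tried in isolation. In this sketch, `to_form_body` is a hypothetical helper that mirrors the dict check described above and returns the bytes that would be posted:

```python
from urllib.parse import urlencode

def to_form_body(postdata, charset='utf-8'):
    """Turn a dict or a ready-made query string into bytes for a form POST."""
    if isinstance(postdata, dict):
        postdata = urlencode(postdata)
    return postdata.encode(charset)

print(to_form_body({'a': 1, 'b': 2}))
print(len(to_form_body('a=1&b=2')))  # the length the server sees as Content-Length
```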

Request.HttpMethod: POST

Request.InputStream.Length: 7

Request.Files.Count: 0

Request.Headers

Cache-Control : no-cache

Connection : close

Content-Length : 7

Content-Type : application/x-www-form-urlencoded

Accept : text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8

Accept-Encoding : gzip, deflate, br

Accept-Language : zh-CN,zh;q=0.9

Host : www2018.caigou.com.cn

User-Agent : Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3870.400 QQBrowser/10.8.4405.400

Refer :

Request.QueryString

x : 1

y : 2

Request.Form

a : 1

b : 2

python Request test

div {padding:10px;font-size:14px;margin-bottom:10px;}

Good. The POST succeeded. Request.Form holds two parameters, and Request.InputStream also received the data, so this is the form submission method

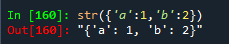

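Next, some APIs require a JSON string to be submitted, so let's try that

Very good. A type conversion with str() turns the dictionary directly into a string, which is very convenient. Note that str() produces Python's own notation with single quotes, which only looks like JSON; for strict JSON, json.dumps is the tool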
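For APIs that expect strict JSON, it may help to compare the two conversions side by side. A small sketch using the same dictionary as the test:

```python
import json

payload = {'a': 1, 'b': 2}
as_repr = str(payload)         # Python notation, single quotes
as_json = json.dumps(payload)  # JSON notation, double quotes

print(as_repr)
print(as_json)
print(json.loads(as_json) == payload)  # only the JSON form parses back
```

Both strings happen to be 16 characters long here, but a JSON parser on the receiving side would reject the single-quoted form.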

Then, submit this string and try it

Request.HttpMethod: POST

Request.InputStream.Length: 16

Request.Files.Count: 0

Request.Headers

Cache-Control : no-cache

Connection : close

Content-Length : 16

Content-Type : application/x-www-form-urlencoded

Accept : text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8

Accept-Encoding : gzip, deflate, br

Accept-Language : zh-CN,zh;q=0.9

Host : www2018.caigou.com.cn

User-Agent : Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3870.400 QQBrowser/10.8.4405.400

Refer :

Request.QueryString

x : 1

y : 2

Request.Form

: {'a': 1, 'b': 2}

python Request test

div {padding:10px;font-size:14px;margin-bottom:10px;}

Oh ho, Request.InputStream received 16 characters, and the same data shows up in Request.Form. Counting the curly braces, it is exactly 16 characters. So POST support is complete

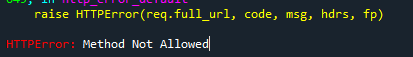

Next, let's look at PUT and DELETE by simply changing the method argument

    ajax.Http('https://www2018.caigou.com.cn/test.aspx?x=1&y=2',Ajax.Method.PUT)
    HTTPError: Method Not Allowed

An unexpected error: method not allowed? PUT and DELETE both fail. HEAD works, although the page body is gone and only the response headers come back

Now to debug PUT and DELETE. After a long search online, the suggestions were httplib2 and urllib2, and the local site-packages folder also turned out to contain a urllib3 package.... The conclusion at the time was that urllib itself cannot use DELETE and PUT, since these two methods are not safe after all. (Strictly speaking, 405 Method Not Allowed is a status sent back by the server, so the request did go out as PUT and was refused there; the server-side configuration is the more likely culprit.)

So it seemed we had to bring in the urllib2 package....... Hmm???...... Damn it, there is no urllib2 package in Python 3!!! Python 3 merged urllib and urllib2, and PUT and DELETE still would not go through!!! God... what a pit... Forget it, let me switch to a different package; requests looks good. After some reworking, the whole code becomes this
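For what it's worth, a small local experiment suggests that a 405 comes from the server rather than from urllib. The sketch below starts a throwaway HTTP server on 127.0.0.1 that accepts PUT and DELETE and then calls it with urllib. The handler class, the helper `send`, and the /test path are all made up for this example, and proxies are disabled so that the request stays local.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib import request

class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical test handler: answers PUT and DELETE with the verb it received."""
    def _reply(self):
        body = self.command.encode('ascii')
        self.send_response(200)
        self.send_header('Content-Length', str(len(body)))
        self.end_headers()
        self.wfile.write(body)
    do_PUT = _reply
    do_DELETE = _reply
    def log_message(self, *args):
        pass  # keep the console quiet

def send(url, verb):
    """Send a body-less request with the given verb and return the response text."""
    opener = request.build_opener(request.ProxyHandler({}))  # ignore proxy settings
    req = request.Request(url, method=verb)
    with opener.open(req, timeout=3) as res:
        return res.read().decode('ascii')

server = HTTPServer(('127.0.0.1', 0), EchoHandler)  # port 0: let the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = 'http://127.0.0.1:%d/test' % server.server_address[1]
results = [send(url, 'PUT'), send(url, 'DELETE')]
server.shutdown()
server.server_close()
print(results)
```

Switching to requests, as done next, is still a reasonable choice for convenience; the point is only that the verb itself is not what urllib refuses.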

    import gzip
    import re
    import requests
    import zlib
    from enum import Enum

    class Ajax:
        def __init__(self):
            self.version = '0.1'
            self.agent = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3870.400 QQBrowser/10.8.4405.400'
            self.refer = ''
            self.cache = 'no-cache'
            self.lang = 'zh-CN,zh;q=0.9'
            self.encoding = 'gzip, deflate, br'
            self.accept = 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8'
            self.current_url = ''
            self.method = self.Method.GET
            self.charset = 'utf-8'
            self.__content_encoding = ''
            self.html = ''

        class Method(Enum):
            GET = 1
            POST = 2
            HEAD = 3
            PUT = 4
            DELETE = 5
            OPTIONS = 6
            TRACE = 7
            PATCH = 8

        @property
        def Header(self):
            return {'refer':self.refer
                    ,'user-agent':self.agent
                    ,'accept':self.accept
                    ,'accept-encoding':self.encoding
                    ,'accept-language':self.lang
                    ,'cache-control':self.cache}

        def Http(self,url,method=Method.GET,postdata=None):
            self.current_url = url
            if postdata is not None:
                if isinstance(postdata,str):
                    postdata = {n.group(1):n.group(2) for n in re.finditer('([^&=]+)=([^&]*)',postdata)}
            if method == self.Method.GET:
                res = requests.get(url=url,headers=self.Header,data=postdata)
            elif method == self.Method.POST:
                res = requests.post(url=url,headers=self.Header,data=postdata)
            elif method == self.Method.HEAD:
                res = requests.head(url=url,headers=self.Header,data=postdata)
            elif method == self.Method.PUT:
                res = requests.put(url=url,headers=self.Header,data=postdata)
            elif method == self.Method.DELETE:
                res = requests.delete(url=url,headers=self.Header,data=postdata)
            else:
                # OPTIONS, TRACE and PATCH are not wired up yet
                raise ValueError('unsupported method: ' + method.name)
            # res.encoding is None when the server declares no charset
            enc = re.sub(r'[-]','',res.encoding or self.charset)
            if enc == 'ISO88591':
                enc = 'gbk'
            self.status = res.status_code
            # str(res.headers) would be a one-line dict repr that __parseHeaders
            # cannot split, so build one "name: value" line per header instead
            self.__headers = '\r\n'.join(k + ': ' + v for k, v in res.headers.items())
            self.__parseHeaders()
            if method == self.Method.HEAD:
                return self.__headers
            data = res.content
            # requests normally undoes gzip/deflate itself; if manual decompression
            # fails, the bytes are already plain and are used as they are
            try:
                if 'gzip' in self.__content_encoding:
                    data = gzip.decompress(data)
                elif 'deflate' in self.__content_encoding:
                    try:
                        data = zlib.decompress(data, -zlib.MAX_WBITS)
                    except zlib.error:
                        data = zlib.decompress(data)
            except (OSError, EOFError, zlib.error):
                pass
            self.html = data.decode(encoding=enc)
            return self.html

        def __parseHeaders(self):
            headers = {n.group(1).lower():n.group(2).strip() for n in re.finditer('([^\r\n:]+):([^\r\n]+)',self.__headers)}
            self.__content_encoding = headers.get('content-encoding','')
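The regular expression that turns a postdata string into a dictionary leaves percent-escapes untouched, which could lead to double encoding once requests encodes the dictionary again. As a possible alternative, the standard library's parse_qsl decodes them. A small comparison sketch (both helper names are hypothetical):

```python
import re
from urllib.parse import parse_qsl

def parse_with_regex(postdata):
    """The approach used in the class: split on & and = without decoding."""
    return {m.group(1): m.group(2) for m in re.finditer('([^&=]+)=([^&]*)', postdata)}

def parse_with_stdlib(postdata):
    """Standard-library parsing; keep_blank_values keeps pairs such as 'c='."""
    return dict(parse_qsl(postdata, keep_blank_values=True))

print(parse_with_regex('a=1&b=2&c='))
print(parse_with_stdlib('a=1&b=2&c='))
# The two differ once the string contains percent-escapes
print(parse_with_regex('q=a%20b'), parse_with_stdlib('q=a%20b'))
```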

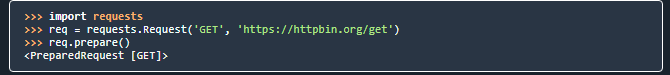

I don't want to remember that many methods. What I'd like is a single method that can issue any kind of request. Unfortunately, I didn't know how to use requests.Request and couldn't find the relevant manual

According to help(), it yields a PreparedRequest object that carries the method. The problem was that, with no further documentation at hand, it was unclear how this object makes an actual request and fetches data. After another search, I found this article: https://blog.csdn.net/weixin_44523387/article/details/90732389

It turns out that a Request needs a Session object, which maintains the session and sends the request. So.... it seems that keeping cookies across requests can be handled by it as well? Let's adjust our method once more
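The Request, PreparedRequest and Session pieces can be looked at without contacting any server, because preparing a request only assembles it. A minimal sketch, assuming the requests package is installed; the URL and header value are placeholders:

```python
import requests

session = requests.Session()
req = requests.Request(method='PUT',
                       url='https://example.com/test.aspx',  # placeholder, never contacted
                       headers={'user-agent': 'demo-agent/0.1'},
                       data={'a': '1', 'b': '2'})
prepared = session.prepare_request(req)

# A PreparedRequest holds the verb, URL, headers and body that would be sent
print(prepared.method, prepared.url)
print(prepared.headers['Content-Type'])
print(prepared.body)
# session.send(prepared) would perform the actual request
```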

    import gzip
    import re
    import requests
    import zlib
    from enum import Enum

    class Ajax:
        def __init__(self):
            self.version = '0.1'
            self.agent = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.25 Safari/537.36 Core/1.70.3870.400 QQBrowser/10.8.4405.400'
            self.refer = ''
            self.cache = 'no-cache'
            self.lang = 'zh-CN,zh;q=0.9'
            self.encoding = 'gzip, deflate, br'
            self.accept = 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8'
            self.current_url = ''
            self.method = self.Method.GET
            self.charset = 'utf-8'
            self.__content_encoding = ''
            self.__session = requests.Session()
            self.html = ''

        class Method(Enum):
            GET = 1
            POST = 2
            HEAD = 3
            PUT = 4
            DELETE = 5
            OPTIONS = 6
            TRACE = 7
            PATCH = 8

        @property
        def Header(self):
            return {'refer':self.refer
                    ,'user-agent':self.agent
                    ,'accept':self.accept
                    ,'accept-encoding':self.encoding
                    ,'accept-language':self.lang
                    ,'cache-control':self.cache}

        def Http(self,url,method=Method.GET,postdata=None):
            self.current_url = url
            if postdata is not None:
                if isinstance(postdata,str):
                    postdata = {n.group(1):n.group(2) for n in re.finditer('([^&=]+)=([^&]*)',postdata)}
            # one code path for every verb: build, prepare, send
            req = requests.Request(method=method.name,url=url,headers=self.Header,data=postdata)
            pre = self.__session.prepare_request(req)
            res = self.__session.send(pre)
            # res.encoding is None when the server declares no charset
            enc = re.sub(r'[-]','',res.encoding or self.charset)
            if enc == 'ISO88591':
                # no charset in the headers: look for one in a <meta> tag
                charset = re.findall(r'''<meta[^<>]*?charset=['"]?([\w-]+)''',res.text,re.I)
                if len(charset) == 0:
                    enc = re.sub(r'[-]','',self.charset)
                else:
                    enc = re.sub(r'[-]','',charset[0])
            self.status = res.status_code
            # str(res.headers) would be a one-line dict repr that __parseHeaders
            # cannot split, so build one "name: value" line per header instead
            self.__headers = '\r\n'.join(k + ': ' + v for k, v in res.headers.items())
            self.__parseHeaders()
            if method == self.Method.HEAD:
                return self.__headers
            data = res.content
            # requests normally undoes gzip/deflate itself; if manual decompression
            # fails, the bytes are already plain and are used as they are
            try:
                if 'gzip' in self.__content_encoding:
                    data = gzip.decompress(data)
                elif 'deflate' in self.__content_encoding:
                    try:
                        data = zlib.decompress(data, -zlib.MAX_WBITS)
                    except zlib.error:
                        data = zlib.decompress(data)
            except (OSError, EOFError, zlib.error):
                pass
            self.html = data.decode(encoding=enc)
            return self.html

        def __parseHeaders(self):
            headers = {n.group(1).lower():n.group(2).strip() for n in re.finditer('([^\r\n:]+):([^\r\n]+)',self.__headers)}
            self.__content_encoding = headers.get('content-encoding','')
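The charset fallback inside Http, which looks for a meta tag when the response headers name no charset, can be exercised on its own. The following is a simplified sketch working on raw bytes; `sniff_charset` and the sample page are made up for the example.

```python
import re

META_CHARSET = re.compile(rb'''<meta[^<>]*?charset=['"]?([\w-]+)''', re.I)

def sniff_charset(raw, default='utf-8'):
    """Return the charset named in a <meta> tag of raw HTML bytes, or default."""
    found = META_CHARSET.search(raw)
    return found.group(1).decode('ascii') if found else default

# '\u4e2d\u6587' is the word "Chinese" written in Chinese, encoded here as gbk
page = '<html><head><meta charset="gbk"></head><body>\u4e2d\u6587</body></html>'.encode('gbk')
enc = sniff_charset(page)
print(enc)
print(page.decode(enc))
```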

On initialization, the instance creates a private variable __session that is used to send every request from this instance. There is then no need to check which request method was asked for, since this one call supports them all. We throw away the pile of if/elif branches, and then adjust the download method to match

        def Download(self,url,filename,method=Method.GET,postdata=None):
            if postdata is not None:
                if isinstance(postdata,str):
                    postdata = {n.group(1):n.group(2) for n in re.finditer('([^&=]+)=([^&]*)',postdata)}
            req = requests.Request(method=method.name,url=url,headers=self.Header,data=postdata)
            pre = self.__session.prepare_request(req)
            res = self.__session.send(pre)
            data = res.content
            # write the raw bytes; the file is closed even if the write fails
            with open(filename,'wb') as f:
                f.write(data)

So far, requirements 1 through 6 from the list have been met. We will continue with the remaining requirements next time

Once again: Lao Gu has only just started learning Python, beginning on June 6, 2021. Corrections for any mistakes and omissions are welcome