Author: Yu Ziyi

Source: Ali technical official account

I. Introduction

This article introduces how V8 compiles JavaScript and aims to give you an intuitive understanding of how V8 parses and executes JavaScript code. It is organized as follows:

- Interpreter and compiler: basic background on compilation principles

- V8 compilation principle: how V8 processes JavaScript, building on that background

- Runtime performance of V8: observing how V8 actually behaves at runtime, in light of its compilation principle

II. Interpreter and compiler

You may have wondered: is JavaScript an interpreted language? To answer this question, we first need to understand what interpreters and compilers are and what characterizes each of them.

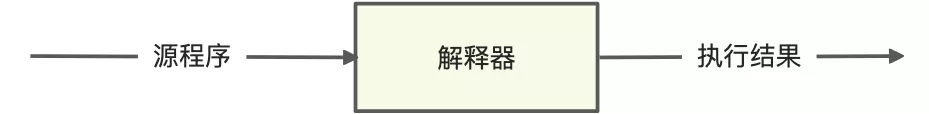

1 Interpreter

An interpreter takes a source program written in some language as input and produces the result of executing that program as output. Programs written in languages such as Perl, Scheme, and APL are typically translated and executed by an interpreter:

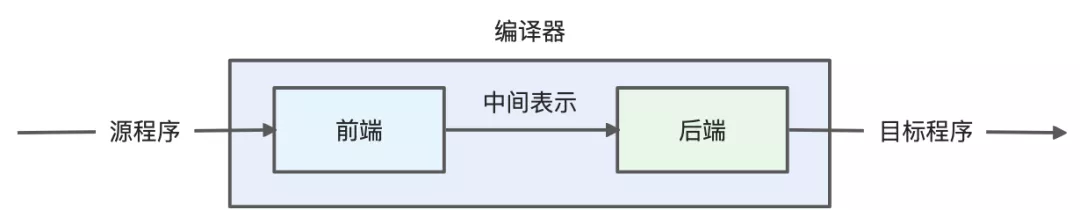

2 compiler

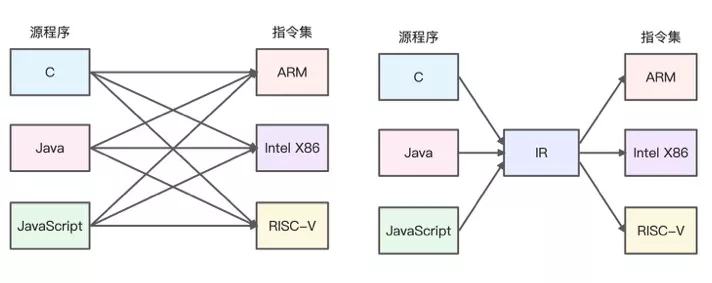

The design of compiler is a very large and complex software system design. Two relatively important problems need to be solved in the real design:

- How to analyze the source programs designed by different high-level programming languages

- How to map the function equivalence of the source program to the target machine of different instruction systems

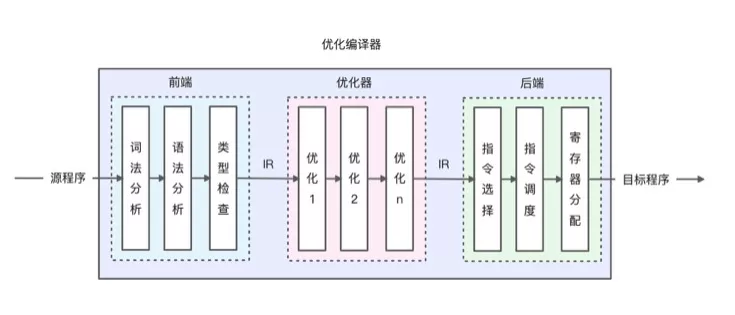

Intermediate representation (IR)

An intermediate representation (IR) is a representation of program structure. It is closer to assembly language or an instruction set than an abstract syntax tree (AST) is, while still retaining some high-level information from the source program. Its benefits include:

- It makes compiler errors easier to debug, because it is easy to tell whether a problem lies in the front end (before the IR) or in the back end (after the IR)

- It separates the compiler's responsibilities more cleanly: compiling a source program focuses on converting it to IR rather than on adapting to different instruction sets

- Because IR is closer to the instruction set, it can take up less memory than the source code

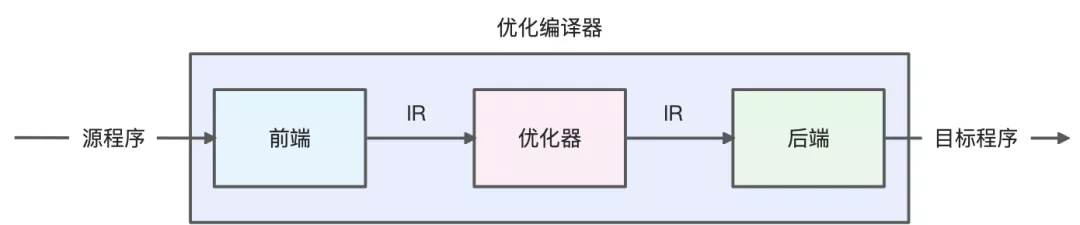
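As a rough illustration of what "closer to the instruction set than an AST" can mean, the sketch below lowers a tiny expression tree into a flat list of three-address instructions. The node shapes, the `lower` function, and the instruction names (`load`, `const`, `add`, `mul`) are all hypothetical and chosen only for illustration; they do not correspond to the IR of V8 or of any other real compiler.

```javascript
// Hypothetical AST for the expression (a + b) * 2.
const ast = {
  type: 'Binary', op: '*',
  left: {
    type: 'Binary', op: '+',
    left: { type: 'Var', name: 'a' },
    right: { type: 'Var', name: 'b' },
  },
  right: { type: 'Const', value: 2 },
};

// Lower the tree into a flat list of three-address instructions.
// Each instruction writes its result to a fresh temporary (t0, t1, ...).
function lower(node, code = []) {
  const emit = (text) => {
    const temp = `t${code.length}`;
    code.push(`${temp} = ${text}`);
    return temp;
  };
  switch (node.type) {
    case 'Const':
      return { code, result: emit(`const ${node.value}`) };
    case 'Var':
      return { code, result: emit(`load ${node.name}`) };
    case 'Binary': {
      const left = lower(node.left, code).result;
      const right = lower(node.right, code).result;
      const opName = node.op === '+' ? 'add' : 'mul';
      return { code, result: emit(`${opName} ${left}, ${right}`) };
    }
    default:
      throw new Error(`Unknown node type: ${node.type}`);
  }
}

const ir = lower(ast).code;
console.log(ir.join('\n'));
// t0 = load a
// t1 = load b
// t2 = add t0, t1
// t3 = const 2
// t4 = mul t2, t3
```

The nested tree becomes a linear sequence in which every step names its inputs and output explicitly, which is the kind of form a back end can translate to a concrete instruction set.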

Optimizing compiler

The IR can be optimized over multiple passes. Each pass can analyze the code and record what it finds, so that later passes can look up and reuse this information, and a better target program is ultimately produced efficiently.

The optimizer can process the IR one or more times to generate a target program that runs faster or is smaller (for example, by finding a computation inside a loop whose result never changes and moving it out of the loop to reduce the number of operations). It may also be used to generate a target program that raises fewer exceptions or consumes less power. In addition, the front end and back end can each be subdivided into multiple processing steps, as shown in the following figure:

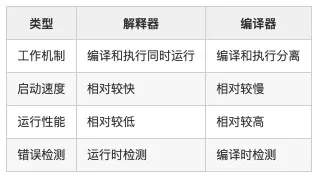
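The loop example mentioned above can be illustrated by hand. The two functions below are hypothetical and written only for this illustration: the second one shows, at the source level, roughly what an optimizer might do to the first when it detects that a computation inside the loop never changes. A real optimizer performs this kind of rewrite on the IR, not on the source text.

```javascript
// Before: the product `scale * offset` does not depend on the loop variable,
// yet it is recomputed on every iteration.
function sumScaledNaive(values, scale, offset) {
  let total = 0;
  for (let i = 0; i < values.length; i++) {
    total += values[i] + scale * offset;
  }
  return total;
}

// After: the loop-invariant product is computed once, outside the loop.
function sumScaledHoisted(values, scale, offset) {
  const invariant = scale * offset;
  let total = 0;
  for (let i = 0; i < values.length; i++) {
    total += values[i] + invariant;
  }
  return total;
}

const sample = [1, 2, 3];
console.log(sumScaledNaive(sample, 2, 5));   // 36
console.log(sumScaledHoisted(sample, 2, 5)); // 36
```

Both versions return the same result; the second simply performs fewer multiplications.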

3 Comparison of their characteristics

The specific characteristics of the interpreter and compiler are compared as follows:

Note that early web front ends needed pages to start quickly, so interpreted execution was adopted, at the cost of relatively low performance while the page was running. To solve this problem, JavaScript code needs to be optimized at runtime, which is why JIT technology was introduced into JavaScript engines.

4 JIT compilation technology

A JIT (just-in-time) compiler uses dynamic compilation. The biggest difference from a traditional compiler is that compile time and run time are not separated: code is compiled dynamically while the program is running.

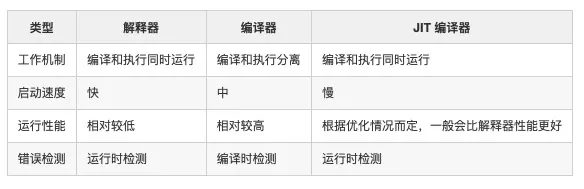

5 Hybrid dynamic compilation technology

To address JavaScript's slow runtime performance, JIT technology can be combined with interpretation in a hybrid dynamic compilation scheme. The idea is as follows:

With this compilation framework, JavaScript gains:

- Fast startup: JavaScript starts out being interpreted, taking advantage of the interpreter's fast startup

- High runtime performance: the code is monitored while it runs, so that JIT technology can compile and optimize it
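The two properties above can be pictured with a toy model. The sketch below is not how V8 is implemented: `interpret`, `compile`, `makeTieredFunction`, and `HOT_THRESHOLD` are hypothetical names, and "compiling" here only means turning a small expression tree into nested closures. It shows the control flow of a hybrid scheme, assuming a simple call counter decides when code is hot.

```javascript
// Hypothetical threshold: how many calls make an expression "hot".
const HOT_THRESHOLD = 3;

// Slow path: walk the tree on every call (stands in for the interpreter).
function interpret(node, env) {
  switch (node.type) {
    case 'Const': return node.value;
    case 'Var': return env[node.name];
    case 'Add': return interpret(node.left, env) + interpret(node.right, env);
    default: throw new Error(`Unknown node type: ${node.type}`);
  }
}

// Fast path: translate the tree once into nested closures
// (stands in for a JIT compiler producing machine code).
function compile(node) {
  switch (node.type) {
    case 'Const': return () => node.value;
    case 'Var': return (env) => env[node.name];
    case 'Add': {
      const left = compile(node.left);
      const right = compile(node.right);
      return (env) => left(env) + right(env);
    }
    default: throw new Error(`Unknown node type: ${node.type}`);
  }
}

// Starts in the interpreter and switches tiers once the code is hot.
function makeTieredFunction(node) {
  let callCount = 0;
  let compiled = null;
  const run = (env) => {
    if (compiled) return compiled(env);
    callCount += 1;
    if (callCount >= HOT_THRESHOLD) compiled = compile(node);
    return interpret(node, env);
  };
  run.tier = () => (compiled ? 'compiled' : 'interpreted');
  return run;
}

const addXY = makeTieredFunction({
  type: 'Add',
  left: { type: 'Var', name: 'x' },
  right: { type: 'Var', name: 'y' },
});

const tiers = [];
for (let i = 0; i < 4; i++) {
  tiers.push(addXY.tier()); // tier in effect before the call
  addXY({ x: i, y: 1 });
}
console.log(tiers); // [ 'interpreted', 'interpreted', 'interpreted', 'compiled' ]
```

The first calls pay no compilation cost, and only code that proves to be hot is compiled.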

III. Compilation principle of V8

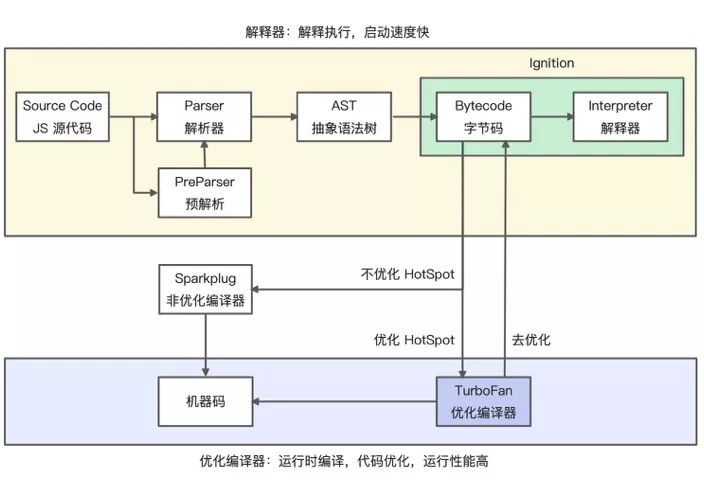

V8 is an open source JavaScript virtual machine, currently used mainly in the Chrome browser (including the open source Chromium) and Node.js. Its core function is to parse and execute JavaScript. To address the poor performance of early JavaScript, V8's compilation framework went through several evolutions (interested readers can look into the earlier V8 compilation framework designs) and now uses hybrid dynamic compilation. The specific compilation framework is as follows:

1 ignition interpreter

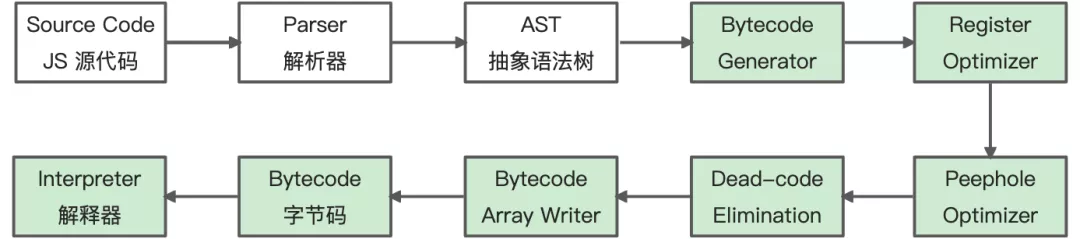

The main function of Ignition is to convert AST into Bytecode (Bytecode). In the process of running, it also uses type feedback technology and calculates HotSpot code (HotSpot, which repeats the running code, which can be a method or a loop body), is finally handed over to TurboFan for dynamic runtime compilation and optimization. The explanation and execution process of Ignition is as follows:

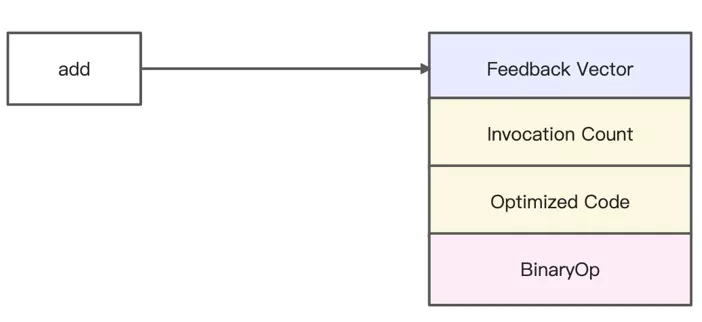

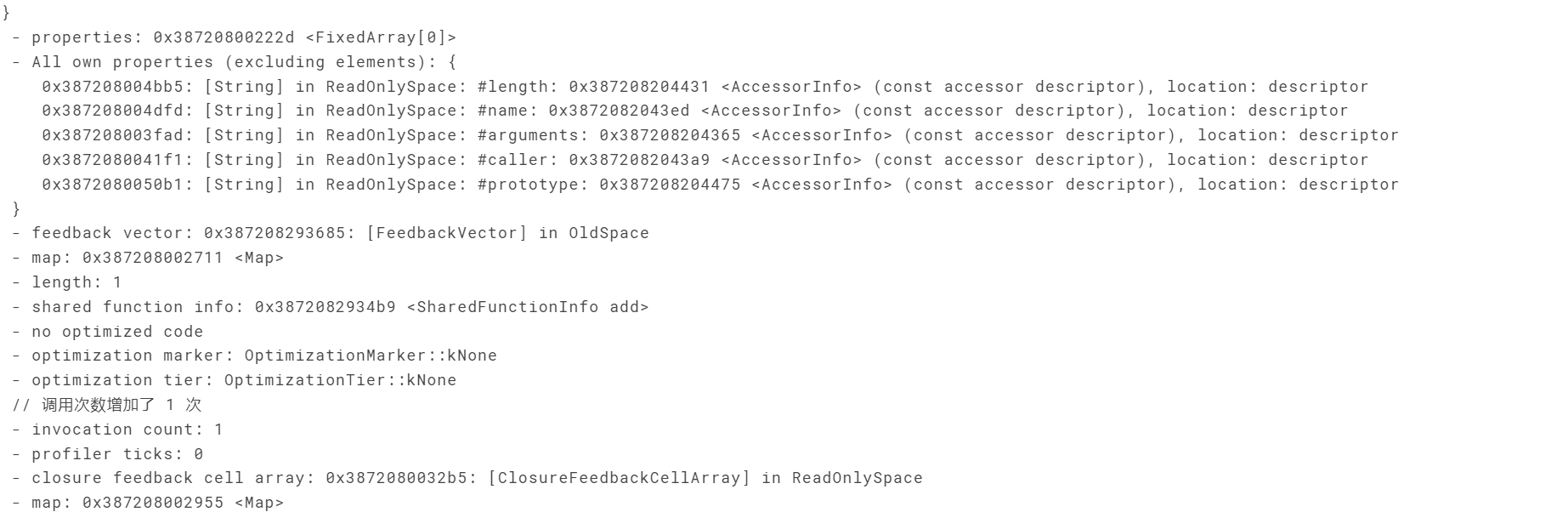

While bytecode is being interpreted, the runtime information needed for optimization is recorded in the corresponding feedback vector (Feedback Vector, previously also known as Type Feedback Vector). The Feedback Vector contains various kinds of Feedback Vector Slot information stored according to the inline cache (IC), such as the BinaryOp slot (the data type of a binary operation's result), the Invocation Count (the number of times the function has been called), and Optimized Code information.
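To make the idea of a feedback slot more concrete, here is a heavily simplified sketch. It is not V8's data structure: the state names in `FEEDBACK_ORDER`, the functions `classify`, `widen`, and `profiledAdd`, and the assumed 31-bit small-integer range are all illustrative assumptions. The sketch only shows the general pattern of counting invocations and recording progressively less specific type information for one binary operation.

```javascript
// Hypothetical feedback states for a binary operation,
// ordered from most specific to least specific.
const FEEDBACK_ORDER = ['None', 'SignedSmall', 'Number', 'Any'];

// Classify one operand roughly the way a type profiler might.
// The small-integer range used here is an assumption of this sketch.
function classify(value) {
  if (Number.isInteger(value) && Math.abs(value) < 2 ** 30) return 'SignedSmall';
  if (typeof value === 'number') return 'Number';
  return 'Any';
}

// A toy feedback vector: one slot for the `+` operation plus a call counter.
function createFeedbackVector() {
  return { invocationCount: 0, binaryOpSlot: 'None' };
}

// Feedback only ever widens; it never returns to a more specific state.
function widen(current, observed) {
  const rank = (state) => FEEDBACK_ORDER.indexOf(state);
  return rank(observed) > rank(current) ? observed : current;
}

// An add function that records feedback on every call.
function profiledAdd(vector, x, y) {
  vector.invocationCount += 1;
  vector.binaryOpSlot = widen(vector.binaryOpSlot, classify(x));
  vector.binaryOpSlot = widen(vector.binaryOpSlot, classify(y));
  return x + y;
}

const vector = createFeedbackVector();
profiledAdd(vector, 1, 2);
console.log(vector.binaryOpSlot); // SignedSmall
profiledAdd(vector, 1.5, 2);
console.log(vector.binaryOpSlot); // Number
profiledAdd(vector, '1', 2);
console.log(vector.binaryOpSlot); // Any
console.log(vector.invocationCount); // 3
```

An optimizing compiler can read such a slot later: the more specific the recorded state, the more specialized the code it can generate.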

The details of each execution step are not covered here.

2 TurboFan optimizing compiler

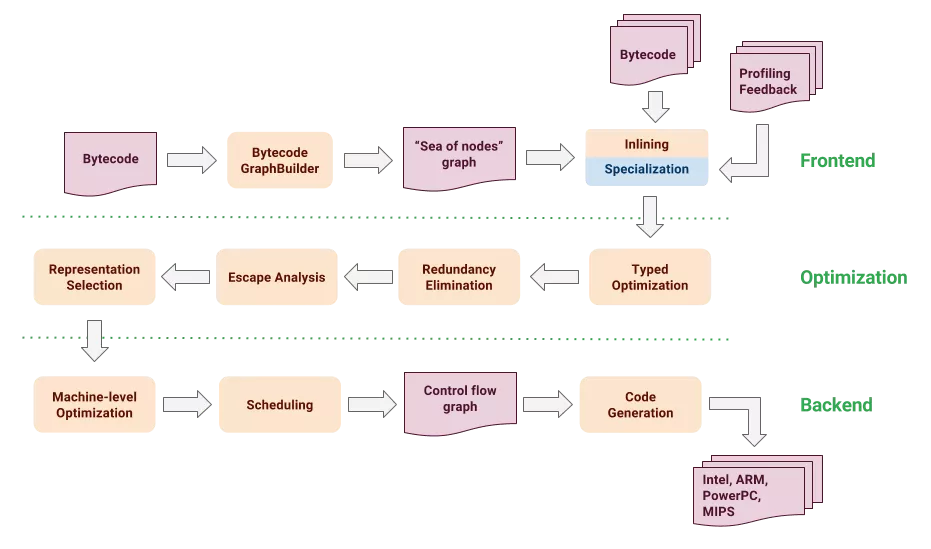

TurboFan uses JIT compilation to compile and optimize JavaScript code at runtime. The specific process is as follows:

Pay attention to the Profiling Feedback part, which supplies the runtime Feedback Vector information collected while Ignition interprets the code. TurboFan combines the bytecode with the Feedback Vector information to build a graph (in the data structure sense) and passes the graph to the front end, and then optimizes or deoptimizes the code according to the Feedback Vector information.

Deoptimization here means returning the code to Ignition for interpretation. It happens when the machine code can no longer satisfy what the running program requires. For example, if a variable changes from a string to a number while the machine code was compiled for the string type, the machine code no longer fits. V8 then deoptimizes and falls back to Ignition to interpret the code.

IV. Runtime performance of V8

After understanding the compilation principle of V8, we can use V8's debugging tools to inspect how JavaScript is compiled and run, which deepens our understanding of V8's compilation process.

1 D8 debugging tool

If you want to see compile-time and runtime information for JavaScript in V8, you can use the debugging tool D8. D8 is the command-line shell of the V8 engine. It lets you view the generated AST, the intermediate bytecode, optimized code, deoptimized code, optimizing compiler statistics, garbage collection activity, and more. There are several ways to install D8:

- Method 1: download the tool chain and compile it yourself, following the official V8 documents Using d8 and Building V8 with GN

- Method 2: use a D8 binary compiled by someone else, such as the Mac version; the version may lag behind

- Method 3: use a JavaScript engine version manager such as jsvu to download the latest prebuilt JavaScript engines

This article uses method 3 to install the v8-debug tool. After installation, run v8-debug --help to view the available options:

# Execute the help command to view the supported parameters

v8-debug --help

Synopsis:

shell [options] [--shell] [<file>...]

d8 [options] [-e <string>] [--shell] [[--module|--web-snapshot] <file>...]

-e execute a string in V8

--shell run an interactive JavaScript shell

--module execute a file as a JavaScript module

--web-snapshot execute a file as a web snapshot

SSE3=1 SSSE3=1 SSE4_1=1 SSE4_2=1 SAHF=1 AVX=1 AVX2=1 FMA3=1 BMI1=1 BMI2=1 LZCNT=1 POPCNT=1 ATOM=0

The following syntax for options is accepted (both '-' and '--' are ok):

--flag (bool flags only)

--no-flag (bool flags only)

--flag=value (non-bool flags only, no spaces around '=')

--flag value (non-bool flags only)

-- (captures all remaining args in JavaScript)

Options:

# Print generated bytecode

--print-bytecode (print bytecode generated by ignition interpreter)

type: bool default: --noprint-bytecode

# Trace optimization information

--trace-opt (trace optimized compilation)

type: bool default: --notrace-opt

--trace-opt-verbose (extra verbose optimized compilation tracing)

type: bool default: --notrace-opt-verbose

--trace-opt-stats (trace optimized compilation statistics)

type: bool default: --notrace-opt-stats

# Trace deoptimization information

--trace-deopt (trace deoptimization)

type: bool default: --notrace-deopt

--log-deopt (log deoptimization)

type: bool default: --nolog-deopt

--trace-deopt-verbose (extra verbose deoptimization tracing)

type: bool default: --notrace-deopt-verbose

--print-deopt-stress (print number of possible deopt points)

# View compiled AST

--print-ast (print source AST)

type: bool default: --noprint-ast

# View compiled code

--print-code (print generated code)

type: bool default: --noprint-code

# View optimized code

--print-opt-code (print optimized code)

type: bool default: --noprint-opt-code

# Allows you to use the native API syntax provided by V8 in your source code

--allow-natives-syntax (allow natives syntax)

type: bool default: --noallow-natives-syntax

2 Generate AST

We create an index.js file containing a simple add function and a call to it:

function add(x, y) {

return x + y

}

console.log(add(1, 2));

Use the --print-ast flag to print the AST of the add function:

v8-debug --print-ast ./index.js

[generating bytecode for function: ]

--- AST ---

FUNC at 0

. KIND 0

. LITERAL ID 0

. SUSPEND COUNT 0

. NAME ""

. INFERRED NAME ""

. DECLS

. . FUNCTION "add" = function add

. EXPRESSION STATEMENT at 41

. . ASSIGN at -1

. . . VAR PROXY local[0] (0x7fb8c080e630) (mode = TEMPORARY, assigned = true) ".result"

. . . CALL

. . . . PROPERTY at 49

. . . . . VAR PROXY unallocated (0x7fb8c080e6f0) (mode = DYNAMIC_GLOBAL, assigned = false) "console"

. . . . . NAME log

. . . . CALL

. . . . . VAR PROXY unallocated (0x7fb8c080e470) (mode = VAR, assigned = true) "add"

. . . . . LITERAL 1

. . . . . LITERAL 2

. RETURN at -1

. . VAR PROXY local[0] (0x7fb8c080e630) (mode = TEMPORARY, assigned = true) ".result"

[generating bytecode for function: add]

--- AST ---

FUNC at 12

. KIND 0

. LITERAL ID 1

. SUSPEND COUNT 0

. NAME "add"

. PARAMS

. . VAR (0x7fb8c080e4d8) (mode = VAR, assigned = false) "x"

. . VAR (0x7fb8c080e580) (mode = VAR, assigned = false) "y"

. DECLS

. . VARIABLE (0x7fb8c080e4d8) (mode = VAR, assigned = false) "x"

. . VARIABLE (0x7fb8c080e580) (mode = VAR, assigned = false) "y"

. RETURN at 25

. . ADD at 34

. . . VAR PROXY parameter[0] (0x7fb8c080e4d8) (mode = VAR, assigned = false) "x"

. . . VAR PROXY parameter[1] (0x7fb8c080e580) (mode = VAR, assigned = false) "y"

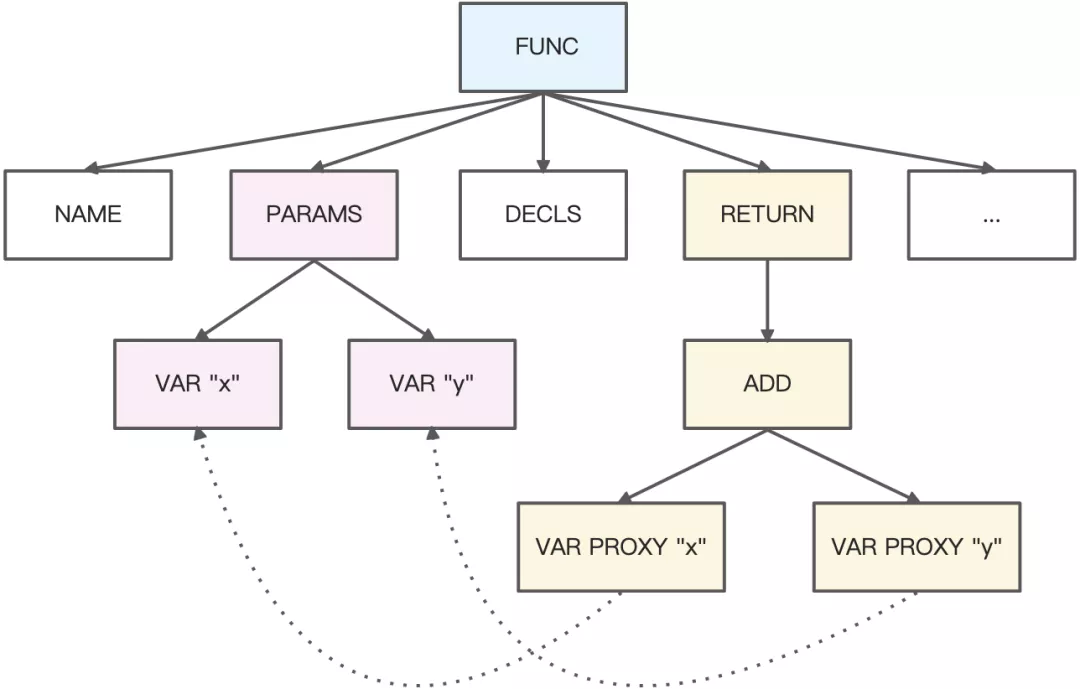

We describe the generated AST tree graphically:

The VAR PROXY node will be connected to the VAR node of the corresponding address in the real analysis phase.

3 Generate bytecode

The BytecodeGenerator of the Ignition interpreter turns the AST into bytecode (an intermediate representation). We can print the bytecode with the --print-bytecode flag:

v8-debug --print-bytecode ./index.js

[generated bytecode for function: (0x3ab2082933f5 <SharedFunctionInfo>)]

Bytecode length: 43

Parameter count 1

Register count 6

Frame size 48

OSR nesting level: 0

Bytecode Age: 0

0x3ab2082934be @ 0 : 13 00 LdaConstant [0]

0x3ab2082934c0 @ 2 : c3 Star1

0x3ab2082934c1 @ 3 : 19 fe f8 Mov <closure>, r2

0x3ab2082934c4 @ 6 : 65 52 01 f9 02 CallRuntime [DeclareGlobals], r1-r2

0x3ab2082934c9 @ 11 : 21 01 00 LdaGlobal [1], [0]

0x3ab2082934cc @ 14 : c2 Star2

0x3ab2082934cd @ 15 : 2d f8 02 02 LdaNamedProperty r2, [2], [2]

0x3ab2082934d1 @ 19 : c3 Star1

0x3ab2082934d2 @ 20 : 21 03 04 LdaGlobal [3], [4]

0x3ab2082934d5 @ 23 : c1 Star3

0x3ab2082934d6 @ 24 : 0d 01 LdaSmi [1]

0x3ab2082934d8 @ 26 : c0 Star4

0x3ab2082934d9 @ 27 : 0d 02 LdaSmi [2]

0x3ab2082934db @ 29 : bf Star5

0x3ab2082934dc @ 30 : 63 f7 f6 f5 06 CallUndefinedReceiver2 r3, r4, r5, [6]

0x3ab2082934e1 @ 35 : c1 Star3

0x3ab2082934e2 @ 36 : 5e f9 f8 f7 08 CallProperty1 r1, r2, r3, [8]

0x3ab2082934e7 @ 41 : c4 Star0

0x3ab2082934e8 @ 42 : a9 Return

Constant pool (size = 4)

0x3ab208293485: [FixedArray] in OldSpace

- map: 0x3ab208002205 <Map>

- length: 4

0: 0x3ab20829343d <FixedArray[2]>

1: 0x3ab208202741 <String[7]: #console>

2: 0x3ab20820278d <String[3]: #log>

3: 0x3ab208003f09 <String[3]: #add>

Handler Table (size = 0)

Source Position Table (size = 0)

[generated bytecode for function: add (0x3ab20829344d <SharedFunctionInfo add>)]

Bytecode length: 6

// Accept three parameters, an implicit this, and explicit x and y

Parameter count 3

Register count 0

// No local variables are required, so the frame size is 0

Frame size 0

OSR nesting level: 0

Bytecode Age: 0

0x3ab2082935f6 @ 0 : 0b 04 Ldar a1

0x3ab2082935f8 @ 2 : 39 03 00 Add a0, [0]

0x3ab2082935fb @ 5 : a9 Return

Constant pool (size = 0)

Handler Table (size = 0)

Source Position Table (size = 0)

The add function consists mainly of the following three bytecodes:

// Load Accumulator Register

// Load the value of register a1 into the accumulator

Ldar a1

// Read the value of register a0 and add it to the accumulator; the sum is left in the accumulator

// [0] points to a Feedback Vector Slot; Ignition collects profiling information about the values there to prepare for later TurboFan optimization

Add a0, [0]

// Transfer control to the caller and return the value in the accumulator

Return

Ignition interprets these bytecodes on a register machine with an accumulator: instructions such as Add name one register explicitly and use the accumulator as the implicit second operand and destination.
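The three bytecodes of add can be replayed with a toy accumulator machine. This is a minimal sketch, not Ignition itself: `runBytecode`, the array-based instruction encoding, and the string recorded in the feedback slot are assumptions made for illustration. Only the opcode names and their roles (Ldar, Add, Return) are taken from the listing above.

```javascript
// Run a list of toy bytecodes. `args` holds the argument registers (a0, a1, ...)
// and `feedback` is an array of slots that records what each Add observed.
function runBytecode(bytecodes, args, feedback) {
  let accumulator;
  for (const [opcode, ...operands] of bytecodes) {
    switch (opcode) {
      case 'Ldar': // load a register into the accumulator
        accumulator = args[operands[0]];
        break;
      case 'Add': { // accumulator = register + accumulator
        const [register, slot] = operands;
        const lhs = args[register];
        feedback[slot] = `${typeof lhs}+${typeof accumulator}`;
        accumulator = lhs + accumulator;
        break;
      }
      case 'Return': // hand the accumulator back to the caller
        return accumulator;
      default:
        throw new Error(`Unknown opcode: ${opcode}`);
    }
  }
  return undefined;
}

// The three bytecodes of add(x, y): x lives in a0 and y in a1.
const addBytecode = [
  ['Ldar', 'a1'],
  ['Add', 'a0', 0],
  ['Return'],
];

const feedbackSlots = [];
console.log(runBytecode(addBytecode, { a0: 1, a1: 2 }, feedbackSlots)); // 3
console.log(feedbackSlots); // [ 'number+number' ]
```

Because every instruction reads or writes the accumulator implicitly, the bytecode stays short: the add function needs no temporary registers at all, which matches the "Register count 0" line in the listing.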

For more information on bytecodes, see Understanding V8's Bytecode.

4 Optimization and deoptimization

JavaScript is a weakly typed language. Unlike a strongly typed language, it places no restrictions on the data types of a function's parameters, so arguments of any type can be passed in, as shown below:

function add(x, y) {

// The + operator is a very complex operation in JavaScript

return x + y

}

add(1, 2);

add('1', 2);

add(null, 2);

add(undefined, 2);

add([], 2);

add({}, 2);

add([], {});

To evaluate the + operator, many abstract operations are invoked under the hood, such as ToPrimitive (which checks whether the operand is an object), ToString, and ToNumber, to convert the arguments into values that the + operator can work with.

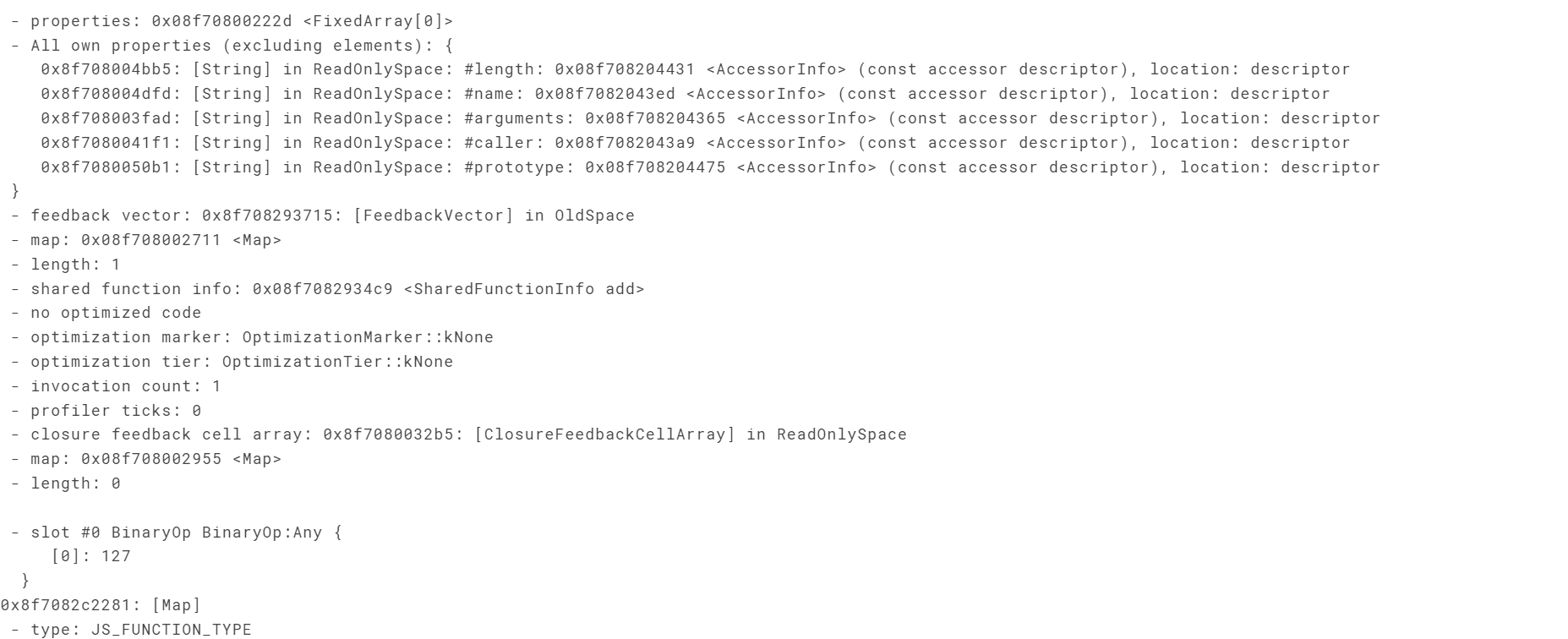

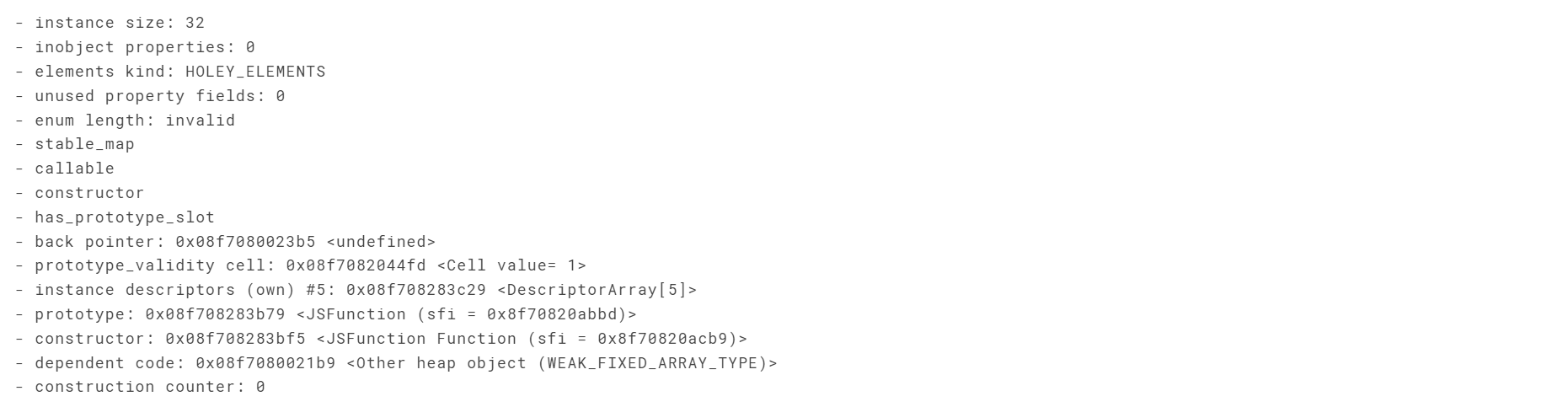
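The effect of these conversions is easy to observe directly. The snippet below simply runs a plain add function with differently typed arguments and collects the results; the `results` array is only there for illustration.

```javascript
function add(x, y) {
  return x + y;
}

const results = [
  add(1, 2),         // number + number
  add('1', 2),       // string + number: 2 is converted to '2'
  add(null, 2),      // null is converted to 0
  add(undefined, 2), // undefined is converted to NaN
  add([], 2),        // [] is converted to '' by ToPrimitive
  add({}, 2),        // {} is converted to '[object Object]'
  add([], {}),       // '' + '[object Object]'
];
console.log(results);
// [ 3, '12', 2, NaN, '2', '[object Object]2', '[object Object]' ]
```

A single `x + y` therefore covers numeric addition, string concatenation, and several conversion paths, which is why an engine cannot compile it to one machine instruction without knowing the operand types.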

Here, V8 speculates about the types of the parameters x and y, as if JavaScript were a strongly typed language. This lets it exclude branches with side effects at runtime and also assume that the code will not throw exceptions, so the code can be optimized for the best possible runtime performance. In Ignition, feedback is collected into the Feedback Vector as the bytecode executes, as shown below:

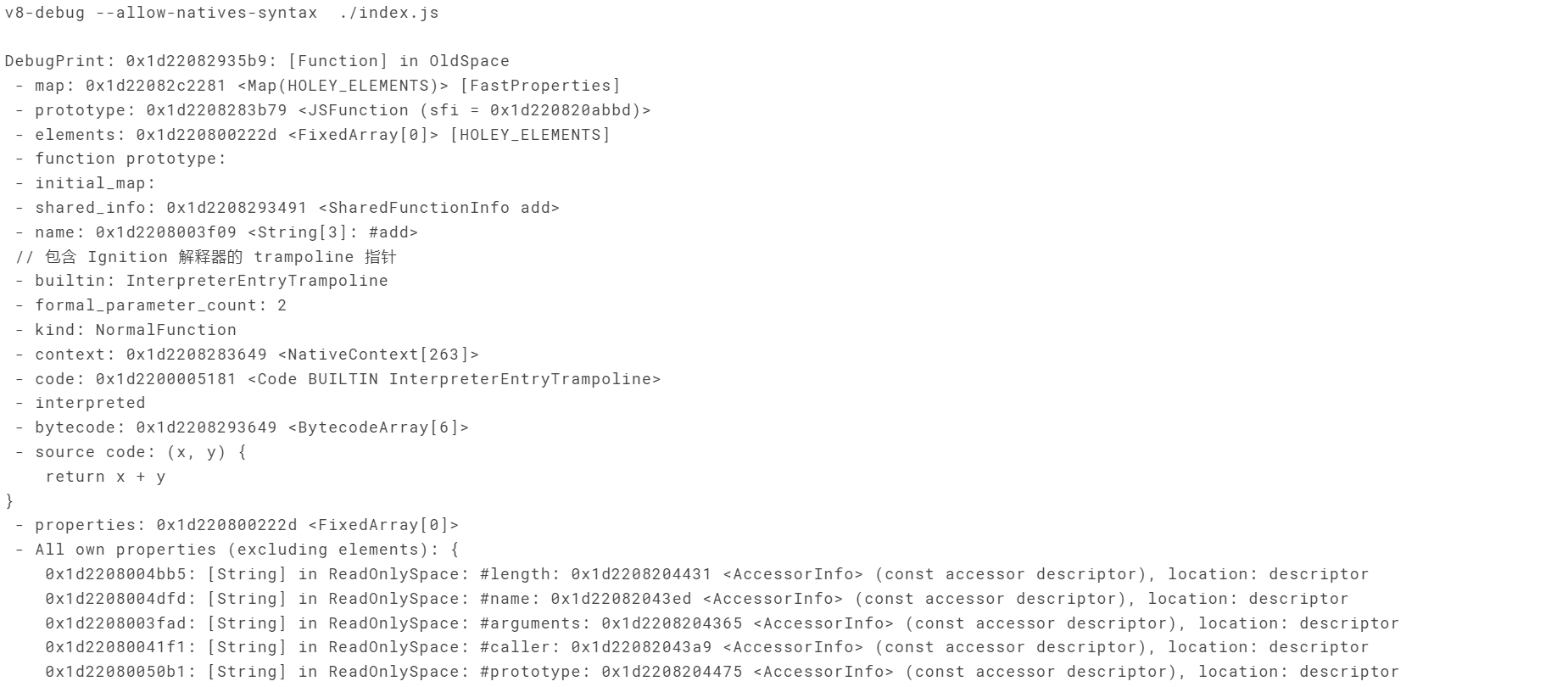

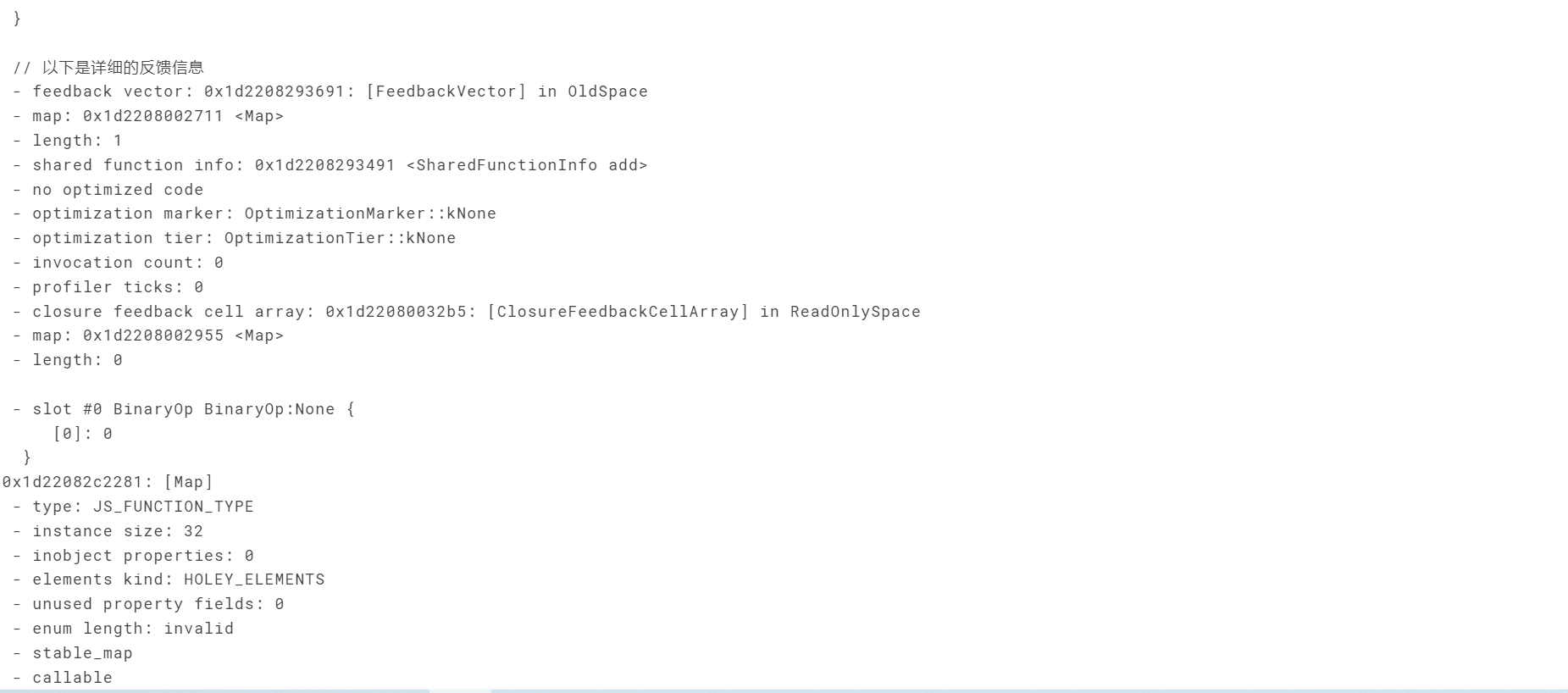

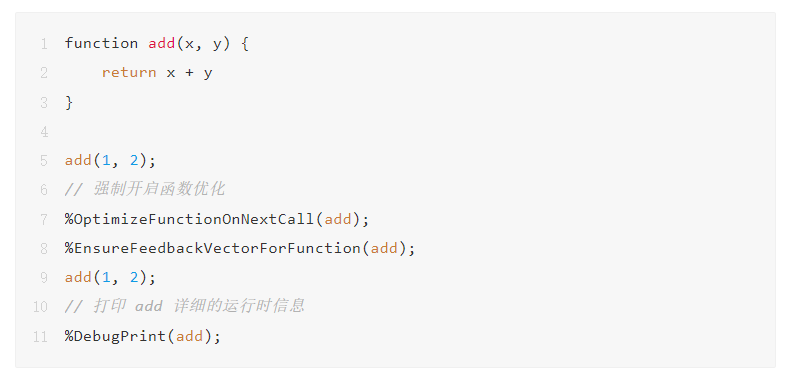

To view the runtime feedback for the add function, we can print its runtime information through the native API provided by V8, as shown below:

function add(x, y) {

return x + y

}

// Note: ClosureFeedbackCellArray is used by default here. To see the effect, we force the FeedbackVector to be allocated

// For more information, see A lighter V8: https://v8.dev/blog/v8-lite

%EnsureFeedbackVectorForFunction(add);

add(1, 2);

// Print add detailed runtime information

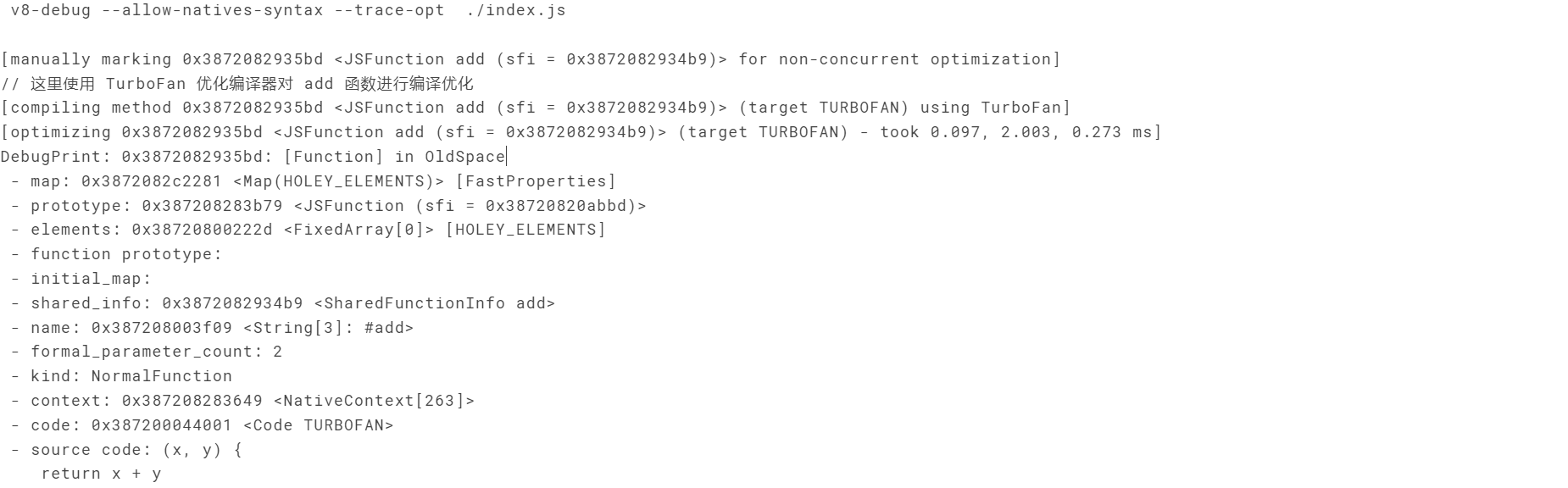

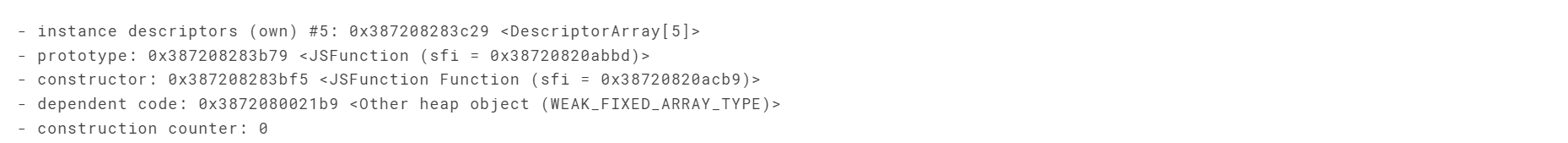

%DebugPrint(add);Through the --allow-natives-syntax parameter, you can call the%DebugPrint underlying Native API in JavaScript (more API can view the runtime.h header file of V8):

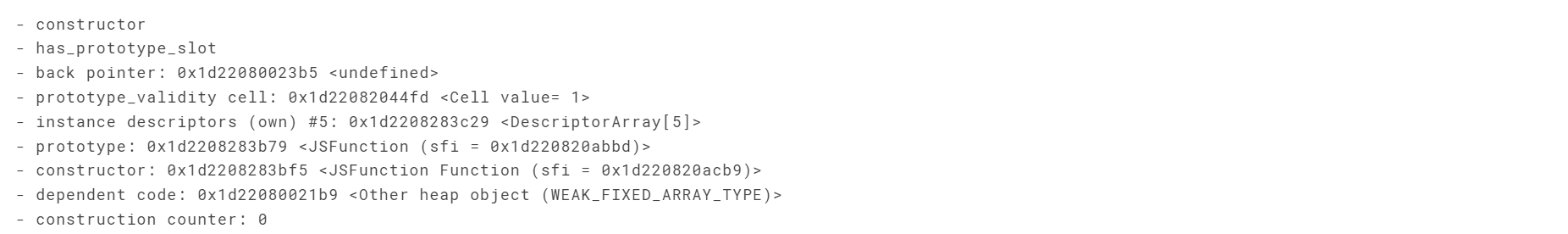

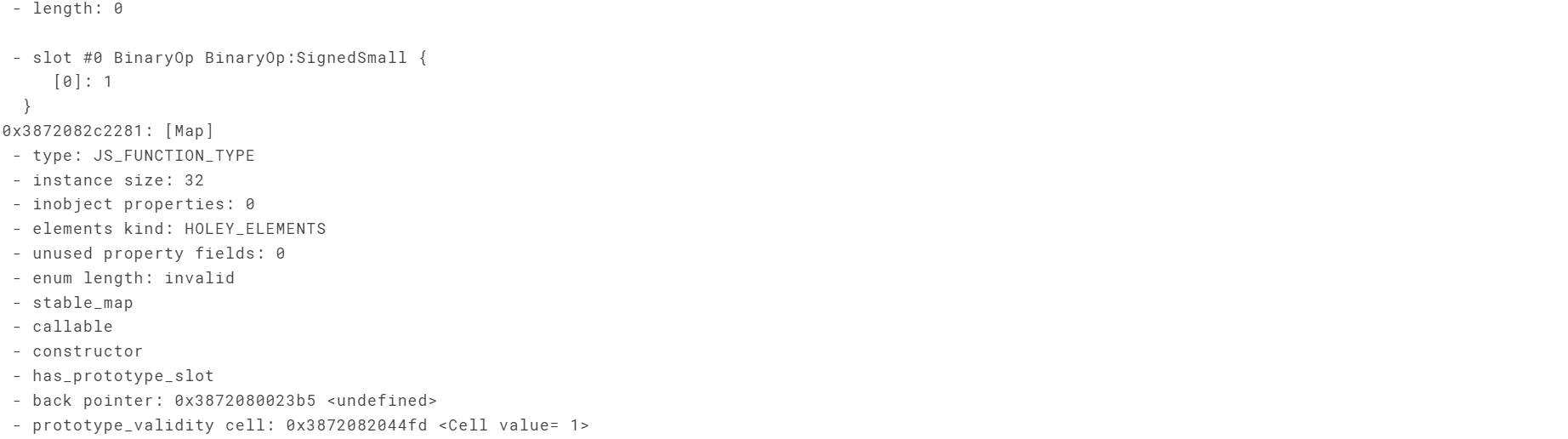

The SharedFunctionInfo (SFI) here holds a pointer to the InterpreterEntryTrampoline. Every function has this trampoline pointer into the Ignition interpreter. Whenever V8 needs to deoptimize, it uses this pointer to return the code to the corresponding function execution position in the interpreter.

To optimize the add function as if it were hot code, we force the function to be optimized here:

The --trace-opt flag traces the optimized compilation of the add function:

Note that V8 automatically monitors the code for changes, such as a change in argument types, and deoptimizes when necessary. For example:

function add(x, y) {

return x + y

}

%EnsureFeedbackVectorForFunction(add);

add(1, 2);

%OptimizeFunctionOnNextCall(add);

add(1, 2);

// Change the input parameter type of the add function. The previous parameter type is number, and the string type is passed here

add(1, '2');

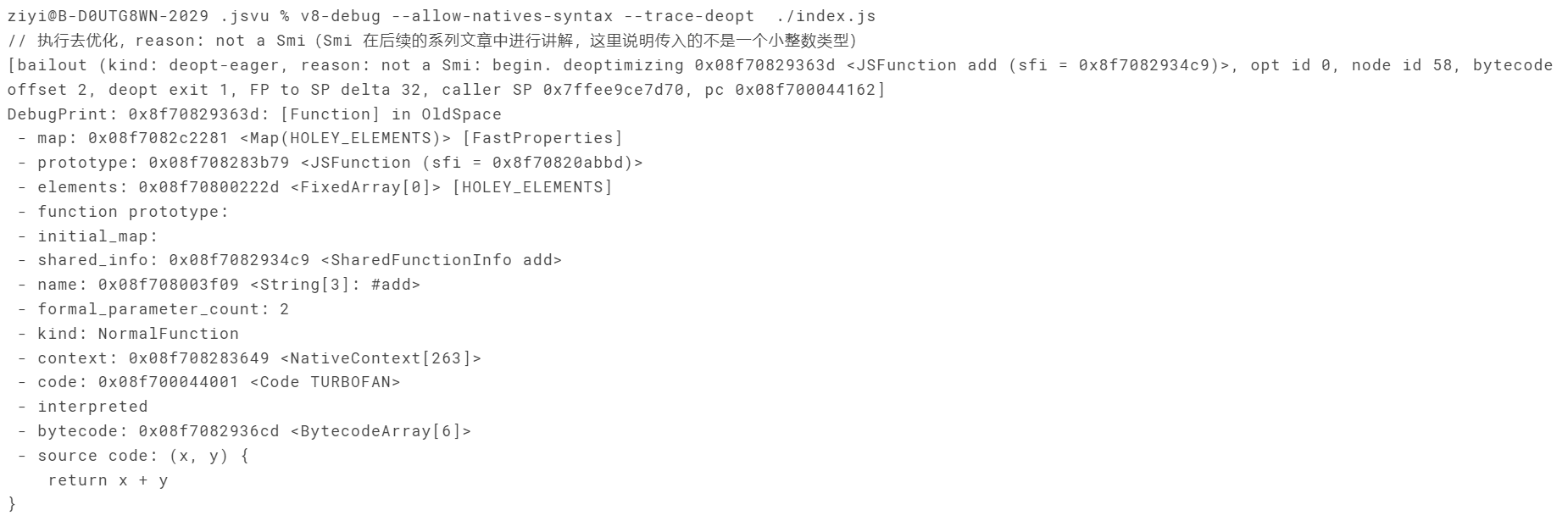

%DebugPrint(add);We can track the de optimization information of the add function through the -- trace deopt parameter:

Note that deoptimization costs performance. In day-to-day development, it is therefore recommended to declare types with TypeScript, which helps keep the types passed through the code consistent and can improve performance to some extent.
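The cost of a type change can be pictured with a hand-written guard. The sketch below is only an analogy and does not reflect V8's internals: `createSpeculativeAdd`, `genericAdd`, and the `state` object are hypothetical. The fast path assumes both arguments are numbers, and the first call that breaks this assumption permanently switches the function back to the generic path, much as optimized code is discarded on deoptimization.

```javascript
// Generic path: stands in for the interpreter, which handles every type.
function genericAdd(x, y) {
  return x + y;
}

// Build a version of add that is specialized for numbers.
// The typeof check plays the role of the guard in optimized code.
function createSpeculativeAdd() {
  const state = { optimized: true, deoptCount: 0 };
  function add(x, y) {
    if (state.optimized) {
      if (typeof x === 'number' && typeof y === 'number') {
        return x + y; // fast path: the assumption holds
      }
      // Assumption violated: discard the specialized path ("deoptimize").
      state.optimized = false;
      state.deoptCount += 1;
    }
    return genericAdd(x, y);
  }
  return { add, state };
}

const speculative = createSpeculativeAdd();
console.log(speculative.add(1, 2));      // 3
console.log(speculative.state.optimized); // true
console.log(speculative.add(1, '2'));    // 12 (the string '12')
console.log(speculative.state.optimized); // false
```

Keeping argument types stable means the guard never fails, so the specialized path stays in use.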

V. Summary

This article's look at V8 stays at an intuitive level and does not go into V8's underlying source code. It should nonetheless give you an intuitive understanding of V8's compilation principle. It is also suggested that you use TypeScript, which can provide useful guidance when writing JavaScript code.
