originate stackoverflow Last question Why it is faster to deal with an ordered array than to deal with an array without it , there are some discussions in the original text. Let's first reproduce the results and then explain why!

We have the following two sections of code. The code looks similar, but in fact, the logic is the same. They count the number less than THRESHOLD in the array. The only difference is that one is in the unordered array, and the other is in the ordered array. If the data sources of two arrays are the same (array size and data are the same), but one is out of order and the other is in order, how much do you think the performance gap between the two functions will be?

public static void countUnsortedArr() {

int cnt = 0;

for (int i = 0; i < MAX_LENGTH; i++) {

if (arr[i] < THRESHLOD) {

cnt++;

}

}

}

public static void countSortedArr() {

int cnt = 0;

for (int i = 0; i < MAX_LENGTH; i++) {

if (arrSotred[i] < THRESHLOD) {

cnt++;

}

}

}

Intuitively, the logic of the two pieces of code is exactly the same, because the statistics of data source consistency are the same, so there will be no difference in performance, but is it true? We use the benchmark tools in OpenJdk JMH Let's test it.

testing environment

MacBook Pro (13-inch, 2017)

CPU: 2.5 GHz Intel Core i7

JMH version: 1.21

VM version: JDK 11, Java HotSpot(TM) 64-Bit Server VM, 11+28

Test method: preheat one round, and then press test three rounds for each function, each round is 10s

The result is as follows: Schore represents the number of microseconds needed to execute this function once. The smaller the value, the higher the performance.

Benchmark Mode Cnt Score Error Units BranchPredictionTest.countSortedArr avgt 3 5212.052 ± 7848.382 us/op BranchPredictionTest.countUnsortedArr avgt 3 31854.238 ± 5393.947 us/op

Is it surprising that the statistics in the ordered array are much faster and the performance gap is six times. And after many tests, the performance gap is very stable. Does it feel illogical? Most program apes use high-level language to write code. In fact, the language itself encapsulates many bottom-level details. In fact, CPU optimizes branch jump instructions, which is the CPU branch prediction mentioned in our title. Before the detailed branch prediction, the goal of this paper is not to make clear the branch prediction, but to tell you the impact of branch prediction on performance. To learn more about CPU branch prediction, several references are listed at the end of this paper.

! [insert picture description here]( https://img-blog.csdnimg.cn/20190930115912874.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly94aW5kb28uYmxvZy5jc2RuLm5ldA==,size_16,color_FFFFFF,t_70 =400x)

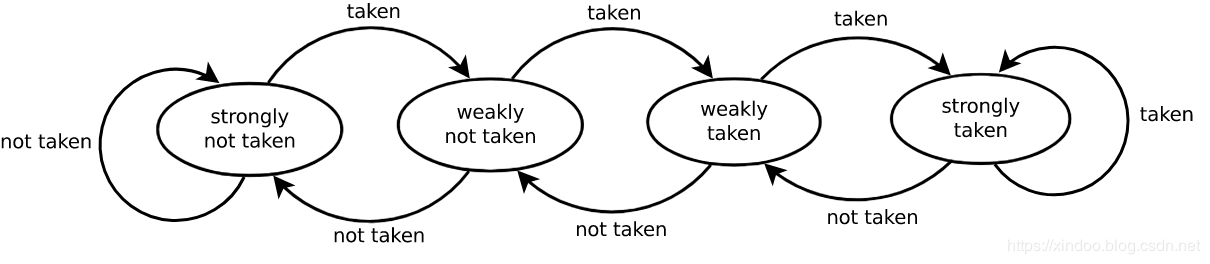

To say branch prediction, we should also mention the instruction pipeline mode of modern CPU. As shown in the figure above, in order to improve the throughput of instructions, modern CPU divides a single instruction into several stages, roughly divided into__ Fetch, decode, execute, write back__ An instruction can be executed without waiting for the last complete execution, just like the pipeline in the factory, which can greatly improve the throughput of instruction execution. In fact, there are more than four stages in modern CPUs. For example, intel and arm processors basically have more than ten stages, and the advantage of pipeline throughput is more obvious.

But the ideal is very good, showing very backbone. It is not that all instructions can be executed before the last instruction is executed. Maybe this instruction will depend on the execution result of the last instruction. In order to cope with the decrease of throughput caused by this data dependence, the CPU designer proposed many optimization methods, such as Command Adventure,Instruction out of order execution,forecast , we mentioned today__ Branch prediction__ It belongs to the category of "prediction".

The idea of branch prediction is also very simple. Since the dependent data hasn't been calculated yet, I'll guess a result, and then start executing the instruction in advance. Of course, it's not random guess. The prediction idea of modern CPU is to see the results of the previous prediction, just like the rain the day before yesterday and the rain yesterday. Then I can simply and roughly think that it's raining today. For details, see the reference materials at the end of the article . The idea is very simple, but the effect is surprisingly good. From the data of Wikipedia, we can know that the branch prediction accuracy of modern CPU can reach more than 90%.

Since the accuracy is not 100%, it means that there is a time of failure. If CPU finds that the prediction error will discard all the * * results after the prediction, then start the execution from the prediction branch, which is equivalent to many instructions running away. The cost of the prediction failure is very high. The execution of a regular instruction requires 10-20 instruction cycles. If the prediction fails, there may be an additional 30-40 instruction cycles. Back to our test code above, I prepared 100W data from 0-100w, and then counted the number less than 50w. In the case of disorder, there is a 50% probability of branch prediction failure. In the case of order, there is only one failure in 100W predictions. Branch prediction failure is the cause of performance gap.

performance optimization

Knowing why, How to optimize performance? Since the ordered array is the fastest, can we sort the array directly and then traverse it? Don't forget that the performance loss caused by extra sorting is far greater than that caused by branch prediction failure. Since the way to improve the success rate of branch prediction doesn't work, we can simply kill the logic that will lead to branch prediction?

Optimize 1

For my simple statistical logic, it can be directly completed by bit operation. Bit operations look complicated, but in fact, the idea is very simple. It is to directly convert the sign bits of integers into 0 and 1, and then add them to cnt. Without if less than judgment, there will be no jmp instruction in the generated instruction, so as to avoid the performance consumption caused by CPU branch prediction error.

The code is as follows:

@Benchmark

public static void count1() {

int cnt = 0;

for (int i = 0; i < MAX_LENGTH; i++) {

cnt += (-~(THRESHLOD-arr[i]) >> 31);

}

}

Optimization 2

Since I have optimized 1 for all bitwise operations, there must be optimized 2. In fact, I can also optimize performance with the?: binocular operator.

public static void count2() {

int cnt = 0;

for (int i = 0; i < MAX_LENGTH; i++) {

cnt += arr[i] < THRESHLOD ? 1 : 0;

}

}

Let's take a look at the performance gap again by putting the four methods together. Bit operation is undoubtedly the fastest. The way to use the?: trinary operator is also quite fast, which is similar to ordered data statistics. It can be determined that the trinary operator also successfully avoids the loss of performance from branch prediction error codes.

Benchmark Mode Cnt Score Error Units BranchPredictionTest.count1 avgt 3 3807.000 ± 16265.107 us/op BranchPredictionTest.count2 avgt 3 4706.082 ± 19757.705 us/op BranchPredictionTest.countSortedArr avgt 3 4458.783 ± 107.975 us/op BranchPredictionTest.countUnsortedArr avgt 3 30719.090 ± 4517.611 us/op

?: why is the three item operation so fast?

?: there is less than judgment in the expression. Why is there no branch jump? I also puzzled about this problem for a long time. Later, I generated assembly code of if and?: Logic in C language code, and finally found the difference between them.

C code

int fun1(int x)

{

int cnt = 0;

if (x < 0)

{

cnt += 1;

}

return cnt;

}

int fun2(int x)

{

int cnt = 0;

cnt += x > 0 ? 1 : 0;

return cnt;

}

Assembly code

.section __TEXT,__text,regular,pure_instructions

.build_version macos, 10, 14

.globl _fun1 ## -- Begin function fun1

.p2align 4, 0x90

_fun1: ## @fun1

.cfi_startproc

## %bb.0:

pushq %rbp

.cfi_def_cfa_offset 16

.cfi_offset %rbp, -16

movq %rsp, %rbp

.cfi_def_cfa_register %rbp

movl %edi, -4(%rbp)

movl $0, -8(%rbp)

cmpl $0, -4(%rbp)

jge LBB0_2 ## If the result judged by cmpl instruction is greater than or equal to, skip to LBB0_2 code block

## %bb.1:

movl -8(%rbp), %eax

addl $1, %eax

movl %eax, -8(%rbp)

LBB0_2:

movl -8(%rbp), %eax

popq %rbp

retq

.cfi_endproc

## -- End function

.globl _fun2 ## -- Begin function fun2

.p2align 4, 0x90

_fun2: ## @fun2

.cfi_startproc

## %bb.0:

pushq %rbp

.cfi_def_cfa_offset 16

.cfi_offset %rbp, -16

movq %rsp, %rbp

.cfi_def_cfa_register %rbp

xorl %eax, %eax

movl %edi, -4(%rbp)

movl $0, -8(%rbp)

movl -4(%rbp), %edi

cmpl $0, %edi

movl $1, %edi

cmovll %edi, %eax

addl -8(%rbp), %eax

movl %eax, -8(%rbp)

movl -8(%rbp), %eax

popq %rbp

retq

.cfi_endproc

## -- End function

.subsections_via_symbols

From the assembly code, we can see that there is no jge instruction (jump when greater or equal) in fun2. This is a typical jump instruction. It will jump to a code block according to the result of cmpl instruction. As mentioned above, the CPU only does branch pre-test when it jumps to the instruction. The implementation of?: is completely different. Although there are cmpl instructions, followed by cmovll instructions, this instruction will put the value into the% eax register according to the result of cmp. All subsequent instructions are executed serially, and there is no jump instruction at all, so there will be no performance loss caused by branch prediction failure.

Unlike what I thought before, jump instructions are not generated by size comparison, but by logical fork like if for. Conditional jump is only the result of size comparison.

Full benchmark Code:

@BenchmarkMode(Mode.AverageTime)

@OutputTimeUnit(TimeUnit.MICROSECONDS)

@State(Scope.Thread)

@Fork(3)

@Warmup(iterations = 1)

@Measurement(iterations = 3)

public class BranchPredictionTest {

private static Random random = new Random();

private static int MAX_LENGTH = 10_000_000;

private static int[] arr;

private static int[] arrSotred;

private static int THRESHLOD = MAX_LENGTH >> 1;

@Setup

public static void init() {

arr = new int[MAX_LENGTH];

for (int i = 0; i < MAX_LENGTH; i++) {

arr[i] = random.nextInt(MAX_LENGTH);

}

arrSotred = Arrays.copyOf(arr, arr.length);

Arrays.sort(arrSotred);

}

@Benchmark

public static void countUnsortedArr() {

int cnt = 0;

for (int i = 0; i < MAX_LENGTH; i++) {

if (arr[i] < THRESHLOD) {

cnt++;

}

}

}

@Benchmark

public static void countSortedArr() {

int cnt = 0;

for (int i = 0; i < MAX_LENGTH; i++) {

if (arrSotred[i] < THRESHLOD) {

cnt++;

}

}

}

public static void main(String[] args) throws RunnerException {

Options opt = new OptionsBuilder()

.include(BranchPredictionTest.class.getSimpleName())

.forks(1)

.build();

new Runner(opt).run();

}

}

epilogue

CPU branch prediction itself is to improve the way to avoid pipeline waiting under the pipeline. In fact, it is essentially to use the Locality principle Because of the existence of locality, in most cases, the technology itself brings positive performance (otherwise, it won't exist today), so we don't need to pay attention to its existence in most cases, or we need to write code with confidence and boldness. Don't change all if's to?: three dimensional operation, which may have a far greater impact on code readability than that of our blog Performance gains. Again, I just constructed an extreme data today to verify the performance difference, because the existence of locality is right in most cases.

Finally, I also found that if all the less than signs in the above benchmark were changed to greater than signs, all the performance differences would disappear. The actual test results are as follows. I don't know. It seems that there is no branch prediction. Can you explain it to someone who knows?

Benchmark Mode Cnt Score Error Units BranchPredictionTest.count1 avgt 3 3354.059 ± 3995.160 us/op BranchPredictionTest.count2 avgt 3 4047.069 ± 2285.700 us/op BranchPredictionTest.countSortedArr avgt 3 5732.614 ± 6491.716 us/op BranchPredictionTest.countUnsortedArr avgt 3 5251.890 ± 64.813 us/op

reference material

This article comes from https://blog.csdn.net/xindoo