In general, many different frameworks can be used to train models. For example, some people use PyTorch, some use TensorFlow, others use MXNet, or Caffe, which was popular in the early days of deep learning. As a result, each training framework produces its own model file format, and deploying these models for inference requires different dependency libraries; there can even be large differences between versions of the same framework, such as TensorFlow. To end this fragmentation, Facebook and Microsoft, later joined by other companies, created a standard format for machine learning models called ONNX (Open Neural Network Exchange), which is now hosted by the LF AI & Data Foundation. Models produced by other frameworks (such as `.pth` or `.pb` files) can be converted into this standard format, after which tools such as ONNX Runtime can be used to deploy them in a unified way.

This is analogous to the Java Virtual Machine (JVM):

A Java virtual machine (JVM) is a virtual machine that enables a computer to run Java programs as well as programs written in other languages that are also compiled to Java bytecode. The JVM is detailed by a specification that formally describes what is required in a JVM implementation. Having a specification ensures interoperability of Java programs across different implementations so that program authors using the Java Development Kit (JDK) need not worry about idiosyncrasies of the underlying hardware platform.

Java has the Java language, JAR packages and the JVM, and other languages such as Scala also run on the JVM. As long as a language compiles its programs into the bytecode format the JVM recognizes, those programs can run on the same cross-platform JVM. The JVM's input, however, is binary bytecode, which is hard for humans to read directly.

ONNX, for its part, uses Google's Protocol Buffers as its file format. Protocol Buffers fills a role similar to XML or JSON but stores data in a compact binary encoding; the structure of that data is declared in a human-readable `.proto` schema, so an ONNX model can be parsed and printed in a form people can read and understand. The official ONNX website introduces ONNX as follows:

ONNX defines a common set of operators - the building blocks of machine learning and deep learning models - and a common file format to enable AI developers to use models with a variety of frameworks, tools, runtimes, and compilers.

Models from nearly all of the frameworks we use every day can be converted to ONNX:

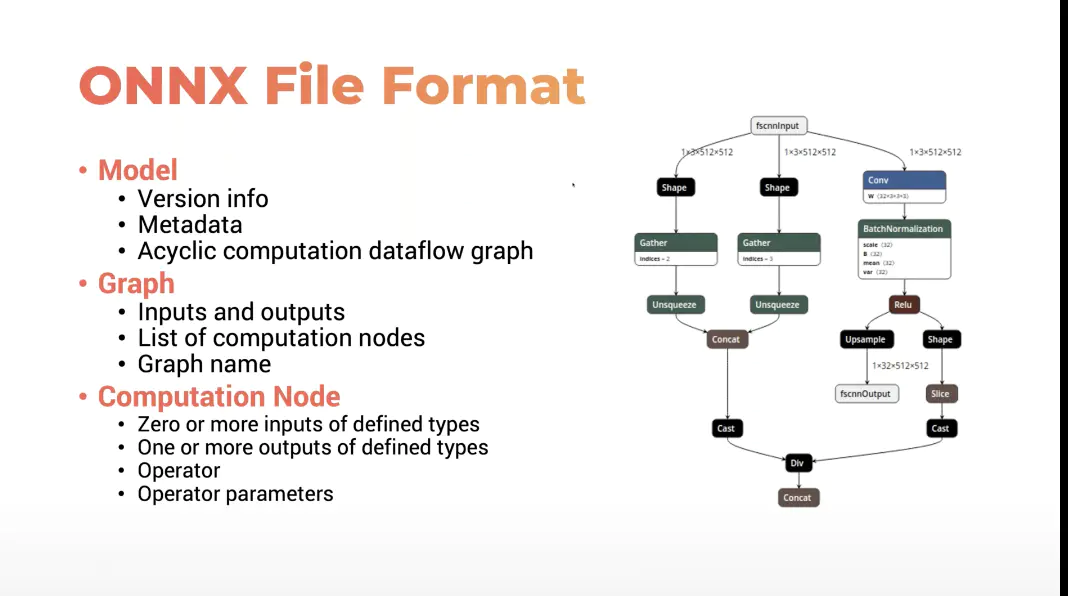

ONNX files are serialized with Google's Protocol Buffers, the same format Caffe uses for its model files.

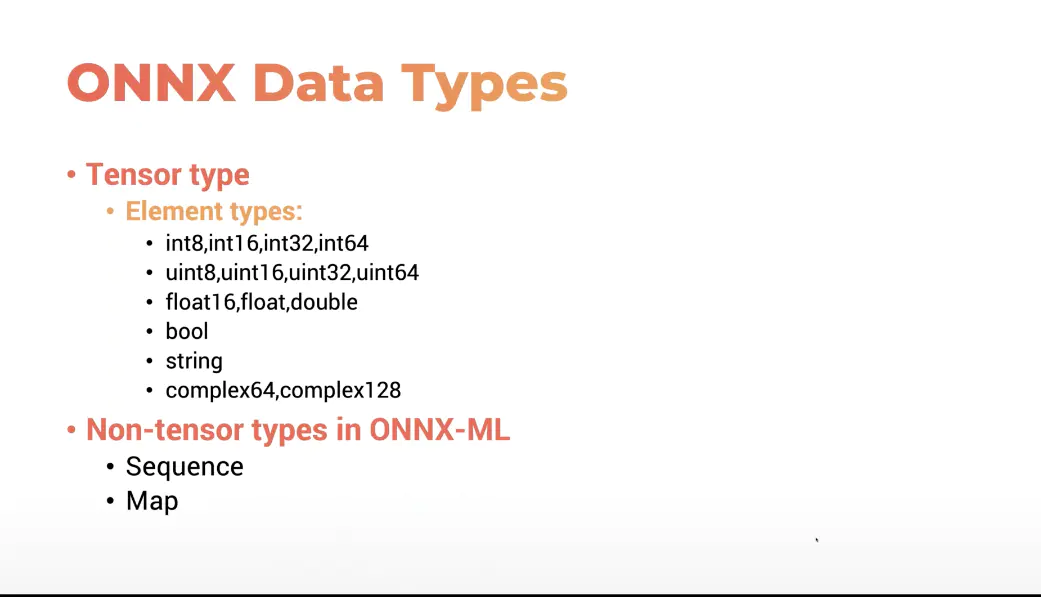

The data types defined by ONNX cover the common numeric types and are used to declare the element types of the model's input and output tensors.

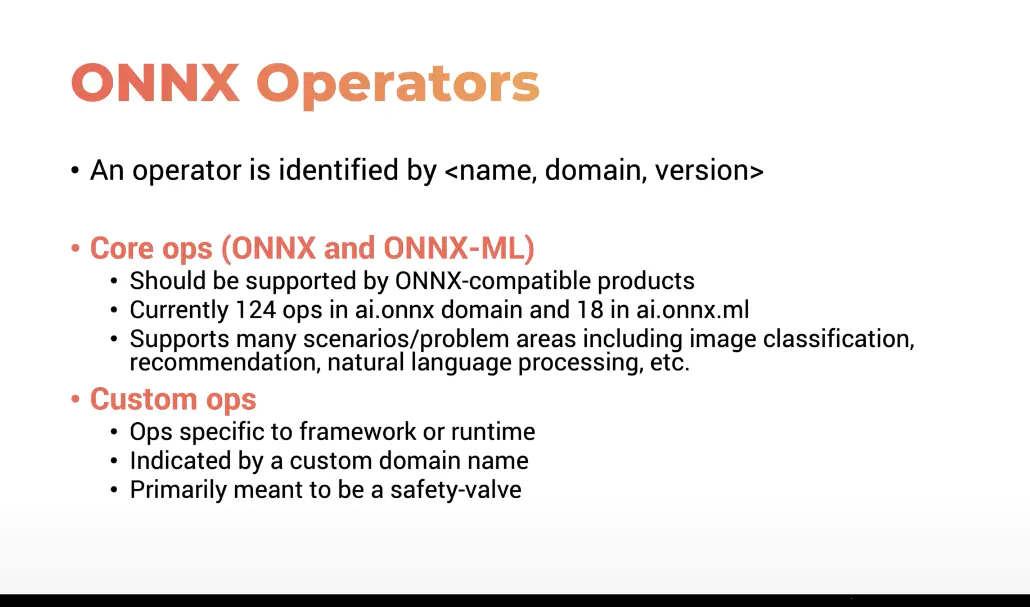

ONNX defines many common operators (nodes), such as Conv, Relu, BatchNormalization and MaxPool, and the operator set is versioned and extended over time. When a model needs an operator that is not in the built-in set, you can also define a custom operator yourself.

With inputs/outputs and computation nodes in place, the exporter can follow the model's `forward` pass in PyTorch and record the computation graph from the input image to the output. ONNX stores this computation graph in a standard format, which can be visualized with the Netron tool, as shown on the right side of the figure below:

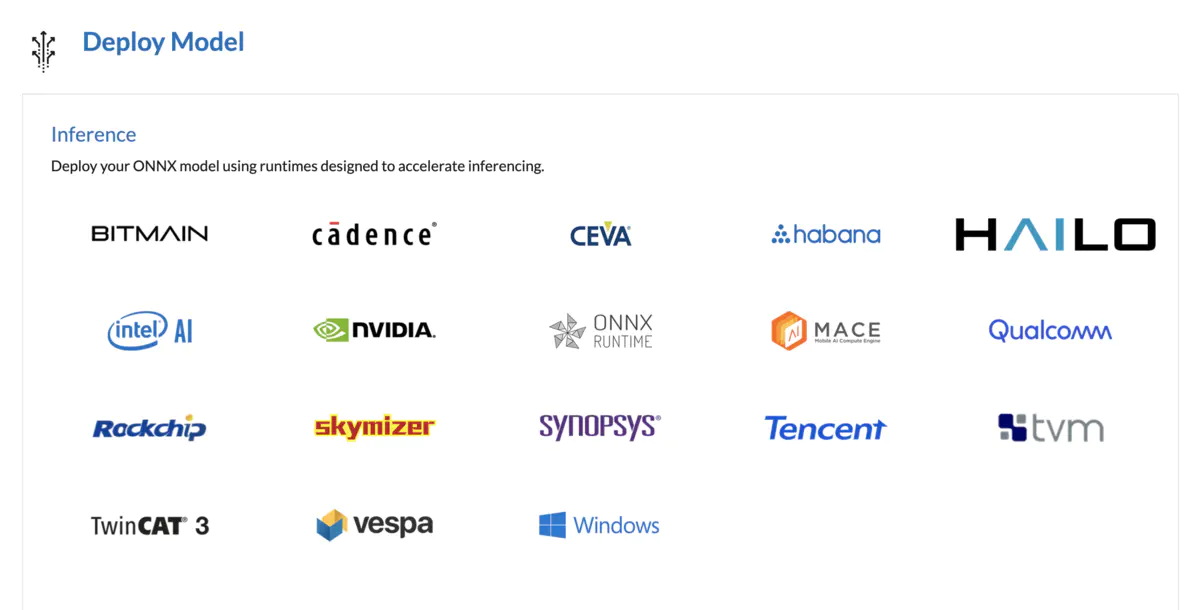

Once a model is saved in the unified ONNX format, a single, unified runtime can be used for inference.

PyTorch natively supports exporting models to ONNX. Here is an example:

1. Convert the PyTorch model to ONNX by calling `torch.onnx.export(model, args, f)` directly, where `args` is an example input and `f` is the output file name:

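```python
import torch
from torchvision import models

# Pretrained ResNet-18, switched to inference mode before export
net = models.resnet18(weights=models.ResNet18_Weights.DEFAULT).eval()
# Dummy input that fixes the (N, C, H, W) shape used to trace the graph
dummy_input = torch.randn(1, 3, 224, 224)
torch.onnx.export(net, dummy_input, "resnet18.onnx")
```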

2. Inspect the generated ONNX model:

```python
import onnx

# Load the ONNX model
model = onnx.load("resnet18.onnx")

# Check that the IR is well formed
onnx.checker.check_model(model)

# Print a human readable representation of the graph
print(onnx.helper.printable_graph(model.graph))
```

- ONNX Runtime

ONNX Runtime plays a role similar to the JVM: it takes a model in the unified ONNX format and interprets, optimizes (for example, by fusing Conv and BatchNormalization) and executes it.

Inference:

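```python
import numpy as np
import onnxruntime as rt

# Random data standing in for a preprocessed 1x3x224x224 image
data = np.random.randn(1, 3, 224, 224).astype(np.float32)

sess = rt.InferenceSession("resnet18.onnx", providers=["CPUExecutionProvider"])
input_name = sess.get_inputs()[0].name
label_name = sess.get_outputs()[0].name

# run() returns one array per requested output; take the logits
pred_onx = sess.run([label_name], {input_name: data})[0]
print(pred_onx)
print(np.argmax(pred_onx))  # index of the highest-scoring class
```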

Complete code

```python
import numpy as np
import onnx
import onnxruntime as rt
import torch
from torchvision import models

# 1. Export a pretrained ResNet-18 to ONNX (model in inference mode)
net = models.resnet18(weights=models.ResNet18_Weights.DEFAULT).eval()
dummy_input = torch.randn(1, 3, 224, 224)
torch.onnx.export(net, dummy_input, "resnet18.onnx")

# 2. Load the ONNX model, check that the IR is well formed, and print the graph
model = onnx.load("resnet18.onnx")
onnx.checker.check_model(model)
print(onnx.helper.printable_graph(model.graph))

# 3. Run inference with ONNX Runtime on random data
data = np.random.randn(1, 3, 224, 224).astype(np.float32)
sess = rt.InferenceSession("resnet18.onnx", providers=["CPUExecutionProvider"])
input_name = sess.get_inputs()[0].name
label_name = sess.get_outputs()[0].name
pred_onx = sess.run([label_name], {input_name: data})[0]
print(pred_onx)
print(np.argmax(pred_onx))
```