This article records the experiment report and implementation process for Pintos Project 1, the operating system course design at the end of this semester. The work draws on many articles and some existing code, so parts of it are the same as other articles; please forgive me.

Part I Project Overview

1, Pintos introduction

Pintos is a simple operating system framework for the 80x86 architecture. It supports kernel threads, can load and run user programs, and has a file system, but all of these features are implemented in a simple form.

2, Project requirements

1. Project 1: thread management

In this project, three parts need to be improved to provide the following functionality:

- Part I: re-implement the timer_sleep() function so that a sleeping thread no longer switches back and forth between the ready and running states.

- Part II: implement priority scheduling.

- Part III: implement multilevel feedback queue scheduling.

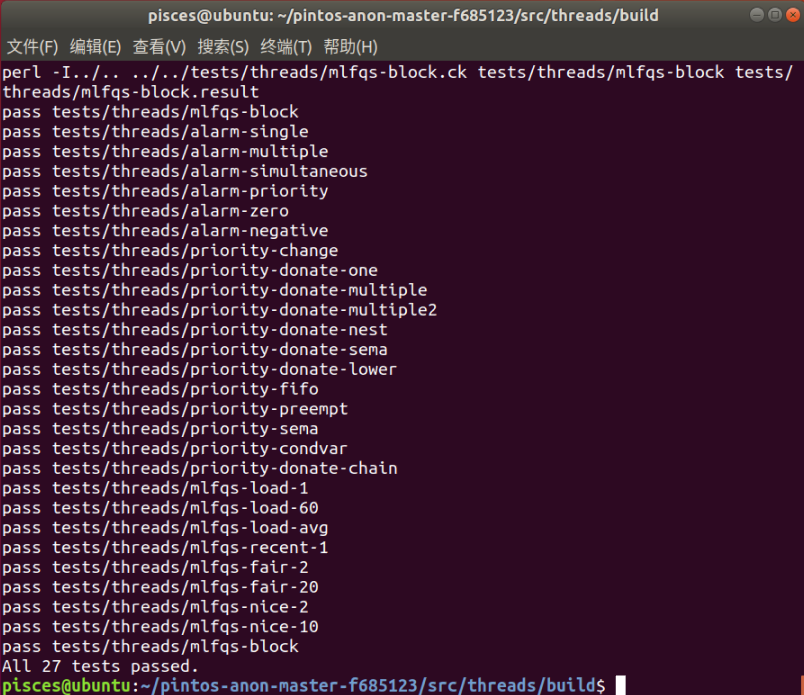

Final goal: make all 27 tests of the project pass.

3, Environment setup and configuration

1. Install an Ubuntu 18.04 LTS virtual machine in VMware Workstation and complete the basic configuration.

2. Install the Bochs simulator from the terminal:

```shell
sudo apt-get install bochs
```

3. Open src/utils/pintos-gdb in Vim and set the GDBMACROS variable to the full path of gdb-macros:

```shell
GDBMACROS=/home/pisces/pintos-anon-master-f685123/src/utils/gdb-macros
```

4. Open the Makefile in src/utils in Vim and rename the LOADLIBES variable to LDLIBS:

```make
LDLIBS = -lm
```

5. Run make in src/utils to compile the utilities.

6. Edit src/threads/Make.vars and set SIMULATOR to Bochs:

```make
SIMULATOR = --bochs
```

7. Run make in src/threads to compile the threads directory.

8. Edit src/utils/pintos:

(1) Make Bochs the default simulator:

```perl
$sim = "bochs" if !defined $sim;
```

(2) Replace kernel.bin with its full path:

```perl
my $name = find_file ('/home/pisces/pintos-anon-master-f685123/src/threads/build/kernel.bin');
```

(3) Replace loader.bin with its full path (only the file name argument changes; the rest of the line stays as it is):

```perl
$name = find_file ("/home/pisces/pintos-anon-master-f685123/src/threads/build/loader.bin")
```

9. Open ~/.bashrc and append the following line:

```shell
export PATH=/home/pisces/pintos-anon-master-f685123/src/utils:$PATH
```

10. Reopen the terminal and run source ~/.bashrc.

11. In the terminal, go to the Pintos directory and run pintos run alarm-multiple. Output like the following appears:

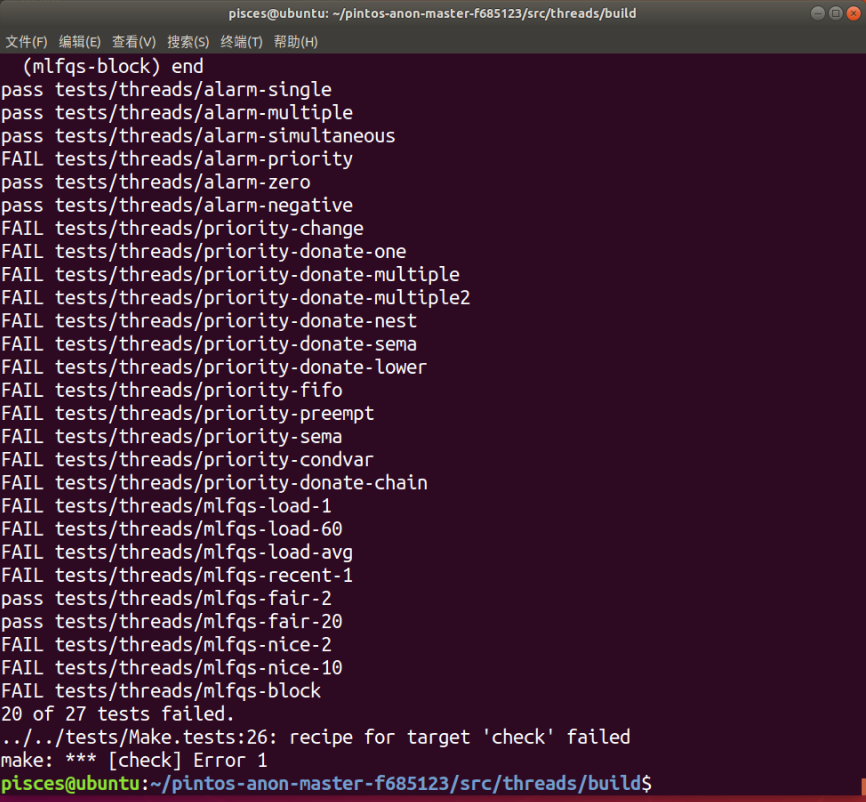

12. Run make check in src/threads/build and check the results.

At this point the environment is configured. Since Project 1 has not been modified yet, it is normal for 20 tests to fail.

Part II Implementation of Project 1 thread management

1, Thread management overview

The alarm tests require eliminating busy waiting. This calls for the thread state model (running, ready, blocked): a thread that has nothing to do is blocked, so it gives up the CPU until it needs to run again, which improves system throughput.

The priority and preempt tests require the ready threads to be managed as a priority queue. A heap would be the textbook data structure, but an ordinary O(n) approach also passes the tests, so to keep the implementation simple while preserving normal system throughput, priority scheduling is implemented with plain linear list operations. The basic idea is that the scheduler always gives the CPU to the highest-priority thread in ready_list, and that whenever a thread dies, blocks, or a new thread becomes ready, the data structure is maintained and the scheduling decision is reconsidered.

Priority donation addresses the "priority inversion" problem: a high-priority thread that waits for a lock held by a low-priority thread can be kept from running while lower-priority threads run, which behaves much like a deadlock. Solving it involves the semaphore, condition variable, and lock primitives. The basic idea is that the wait queue of a semaphore becomes a priority queue, that priority donation is considered when a lock is acquired, and that preemption is considered when a lock is released.

Once fixed-point arithmetic is implemented, the multilevel feedback queue scheduler (mlfqs) computes load_avg, recent_cpu, and the priority (which also depends on nice) using the official scheduling formulas, and the timer_interrupt() function in timer.c is modified to update these values periodically.

2, Code file description

The following folders and files are used in Project 1:

(1) Folders

| Folder | Function |
|---|---|
| threads | Base kernel code |
| devices | I/O device interfaces: timer, keyboard, disk, and other device code |
| lib | A subset of the C standard library. This code is compiled into the Pintos kernel and, from Project 2 on, into the user programs that run under it. Both kernel code and user programs can use #include to include headers from this directory |

(2) Files in the threads/ folder

| File | Function |
|---|---|
| loader.h, loader.S | Kernel loader |
| kernel.lds.S | Linker script used to link the kernel; sets the kernel's load address |
| init.h, init.c | Kernel initialization, including main() |
| thread.h, thread.c | Basic thread support |
| switch.h, switch.S | Assembly-language routine for switching threads |
| start.S | Jumps to main() |

(3) Files in the devices/ folder

| File | Function |
|---|---|
| timer.h, timer.c | System timer, which ticks 100 times per second by default |
| vga.h, vga.c | VGA display driver, responsible for printing text on the screen |
| serial.h, serial.c | Serial port driver; printf() calls it for output, and it passes serial input to the input layer |
| disk.h, disk.c | Supports disk read and write operations |
| kbd.h, kbd.c | Keyboard driver; handles keystrokes and passes them to the input layer |
| input.h, input.c | Input layer; queues the characters passed in by the keyboard and serial drivers |
| intq.h, intq.c | Interrupt queue: a circular queue shared by kernel threads and interrupt handlers |

3, Project analysis

The starting point of the thread management project is the timer_sleep() function, whose overall structure is shown below:

```c
/* Sleeps for approximately TICKS timer ticks.  Interrupts must
   be turned on. */
void
timer_sleep (int64_t ticks)
{
  int64_t start = timer_ticks ();         /* Get the start time. */

  ASSERT (intr_get_level () == INTR_ON);
  while (timer_elapsed (start) < ticks)   /* Has the requested sleep time passed yet? */
    thread_yield ();                      /* Put the current thread on the ready queue and schedule the next thread. */
}
```

The first statement records the time at which the thread starts sleeping. timer_ticks() reads the global tick counter with interrupts disabled: it disables interrupts (saving the previous interrupt level), reads the counter, and then restores the saved level, so that no interrupt can occur in the middle of the read. Later code uses the same interrupt disable/restore pattern, which is explained below.

The ASSERT then checks that interrupts are currently turned on.

The key part comes next. First, look at the timer_elapsed() function:

```c
/* Returns the number of timer ticks elapsed since THEN, which
   should be a value once returned by timer_ticks(). */
int64_t
timer_elapsed (int64_t then)
{
  return timer_ticks () - then;
}
```

This function computes how long the current thread has been sleeping and returns the result to timer_sleep(), whose while loop checks whether the sleep time has reached ticks. (Here ticks is the function parameter holding the requested sleep duration, not the global variable of the same name, which counts the ticks since the system booted.) If the time has not been reached, the loop calls thread_yield() again.

The overall structure of the thread_yield() function is as follows:

```c
/* Yields the CPU.  The current thread is not put to sleep and
   may be scheduled again immediately at the scheduler's whim. */
void
thread_yield (void)
{
  struct thread *cur = thread_current ();   /* Pointer to the start of the current thread's page. */
  enum intr_level old_level;

  ASSERT (!intr_context ());

  old_level = intr_disable ();               /* Disable interrupts. */
  if (cur != idle_thread)                    /* The idle thread never goes on the ready queue. */
    list_push_back (&ready_list, &cur->elem);   /* Append the current thread to the ready queue. */
  cur->status = THREAD_READY;                /* Mark the thread ready. */
  schedule ();                               /* Switch to the next thread. */
  intr_set_level (old_level);                /* Restore the previous interrupt level. */
}
```

(1) Getting the current thread pointer

The first statement calls thread_current() to obtain a pointer to the start of the page that holds the current thread. This function involves several nested calls, so only the idea is described here. It first reads the esp register, which points to the top of the stack. Each kernel thread's struct thread sits at the start of its own page, whose size in Pintos is 2^12 bytes, and the thread's stack grows downward from the end of that same page. Clearing the low 12 bits of esp, that is, ANDing it with ~((1 << 12) - 1) as pg_round_down() does, therefore yields the start of the page, which is the address of the current struct thread.

(2) Atomic operations

"Atomic operation" here refers to the interrupt disable/restore pair mentioned at the beginning, which is implemented by the following two statements:

```c
old_level = intr_disable ();   /* Disable interrupts, saving the previous level. */
/* ... other operations that must not be interrupted ... */
intr_set_level (old_level);    /* Restore the previous interrupt level. */
```

Internally, intr_get_level() reads the flags register (which contains the interrupt-enable flag) through a pushfl/popl pair on the stack, and intr_disable() executes cli to turn interrupts off. intr_set_level() restores the saved level: it executes sti if the saved level was "on" and keeps interrupts off otherwise.

(3) Thread switching

This step corresponds to the statements from the if statement through the call to schedule(). If the current thread is not the idle thread, it is appended to the ready queue. Appending only changes pointers: the thread's embedded list element is linked to the previous last element and to the list's tail, so the queue stays connected. The thread's status is then changed to ready.

The schedule() function obtains the current thread and the next thread to run. The statement prev = switch_threads (cur, next); is written in assembly and switches the register contents, including the stack pointer, from the current thread to the next one. thread_schedule_tail (prev) then marks the new thread as running and checks whether the previous thread is dying; if so, it frees that thread's page. Because these operations are very low level, only the general idea is given here and they are not explored further for now.

From this we can see that thread_yield() puts the current thread on the ready queue and schedules the next thread, and that timer_sleep() achieves "sleeping" by repeatedly putting the running thread back on the ready queue until the requested time has passed.

The major drawback is that the thread keeps switching between the running and ready states, which wastes a lot of CPU time. The following sections improve on this.

4, Thread management implementation Part I - Re-implementation of timer_sleep()

1. Implementation idea

The original timer_sleep() gives up the CPU by switching the thread between the running and ready states. Since Pintos provides a blocked state (see the thread state enumeration below), the plan is to add a member to struct thread that records how long the thread still has to sleep, and to use the Pintos timer interrupt (see timer_interrupt() below), which runs once per tick. On every tick, this member is decremented by 1 for each sleeping thread, and when it reaches 0 the thread can be woken up. This avoids the overhead of constant switching.

Thread states:

```c
enum thread_status
  {
    THREAD_RUNNING,     /* Running thread. */
    THREAD_READY,       /* Not running but ready to run. */
    THREAD_BLOCKED,     /* Waiting for an event to trigger (blocked). */
    THREAD_DYING        /* About to be destroyed. */
  };
```

Timer interrupt handler:

```c
/* Timer interrupt handler. */
static void
timer_interrupt (struct intr_frame *args UNUSED)
{
  ticks++;
  thread_tick ();
}
```

2. Implementation steps

(1) Add a member, ticks_blocked, to struct thread to record the thread's remaining sleep time:

```c
struct thread
  {
    /* Owned by thread.c. */
    tid_t tid;                          /* Thread identifier. */
    enum thread_status status;          /* Thread state. */
    char name[16];                      /* Name (for debugging purposes). */
    uint8_t *stack;                     /* Saved stack pointer. */
    int priority;                       /* Priority. */
    struct list_elem allelem;           /* List element for all threads list. */
    int64_t ticks_blocked;              /* Added: remaining ticks to stay blocked. */

    /* Shared between thread.c and synch.c. */
    struct list_elem elem;              /* List element. */

#ifdef USERPROG
    /* Owned by userprog/process.c. */
    uint32_t *pagedir;                  /* Page directory. */
#endif

    /* Owned by thread.c. */
    unsigned magic;                     /* Detects stack overflow. */
  };
```

(2) In thread_create(), add an initialization of the new member, t->ticks_blocked = 0;:

```c
tid_t
thread_create (const char *name, int priority,
               thread_func *function, void *aux)
{
  struct thread *t;
  struct kernel_thread_frame *kf;
  struct switch_entry_frame *ef;
  struct switch_threads_frame *sf;
  tid_t tid;

  ASSERT (function != NULL);

  /* Allocate thread. */
  t = palloc_get_page (PAL_ZERO);
  if (t == NULL)
    return TID_ERROR;

  /* Initialize thread. */
  init_thread (t, name, priority);
  tid = t->tid = allocate_tid ();
  t->ticks_blocked = 0;   /* Added: explicit initialization (init_thread() has already zeroed *t). */

  /* Stack frame for kernel_thread(). */
  kf = alloc_frame (t, sizeof *kf);
  kf->eip = NULL;
  kf->function = function;
  kf->aux = aux;

  /* Stack frame for switch_entry(). */
  ef = alloc_frame (t, sizeof *ef);
  ef->eip = (void (*) (void)) kernel_thread;

  /* Stack frame for switch_threads(). */
  sf = alloc_frame (t, sizeof *sf);
  sf->eip = switch_entry;
  sf->ebp = 0;

  /* Add to run queue. */
  thread_unblock (t);

  return tid;
}
```

(3) Rewrite timer_sleep(): take the current thread, set its blocking time, and call Pintos's thread blocking function thread_block(). This sequence must not be interrupted, so interrupts are disabled around it:

```c
void
timer_sleep (int64_t ticks)
{
  if (ticks <= 0)
    return;

  ASSERT (intr_get_level () == INTR_ON);
  enum intr_level old_level = intr_disable ();
  struct thread *current_thread = thread_current ();
  current_thread->ticks_blocked = ticks;   /* Set the blocking time. */
  thread_block ();                         /* Block until the timer interrupt wakes us. */
  intr_set_level (old_level);
}
```

(4) In timer_interrupt(), add thread_foreach (blocked_thread_check, NULL); so that the remaining blocking time of every blocked thread is updated on each tick:

```c
static void
timer_interrupt (struct intr_frame *args UNUSED)
{
  ticks++;
  thread_tick ();
  thread_foreach (blocked_thread_check, NULL);
}
```

(5) Implement the blocked_thread_check() function

Declare it in thread.h:

```c
void blocked_thread_check (struct thread *t, void *aux UNUSED);
```

Define it in thread.c.

The logic is: if the thread is blocked and its blocking time has not yet run out, decrement the remaining time; once it reaches zero, call Pintos's thread_unblock(), which puts the thread on the ready queue and changes its state to ready.

```c
void
blocked_thread_check (struct thread *t, void *aux UNUSED)
{
  if (t->status == THREAD_BLOCKED && t->ticks_blocked > 0)
    {
      t->ticks_blocked--;
      if (t->ticks_blocked == 0)
        thread_unblock (t);
    }
}
```

With this, the wake-up mechanism of timer_sleep() is complete.

3. Implementation results

Running make check again in src/threads/build now gives the following result:

5, Thread management implementation Part II - Priority scheduling

1. Keeping the ready queue ordered by priority when threads are inserted

(1) Idea:

Because Pintos already provides list_insert_ordered(), it can be used directly to insert a thread according to its priority. The only change needed is to replace the statements that append a thread to the end of the ready queue.

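(2) Implementation steps:

Implement a priority comparison function, thread_cmp_priority():

```c
/* Priority comparison function: true if A's thread has a higher priority than B's. */
bool
thread_cmp_priority (const struct list_elem *a, const struct list_elem *b, void *aux UNUSED)
{
  return list_entry (a, struct thread, elem)->priority
         > list_entry (b, struct thread, elem)->priority;
}
```

Then call list_insert_ordered() instead of list_push_back() wherever a thread is put on ready_list, namely in thread_unblock() and thread_yield(). init_thread() is shown as well: all_list is linked through allelem rather than elem, so thread_cmp_priority() must not be used to order it, and it keeps list_push_back():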

```c
void
thread_unblock (struct thread *t)
{
  enum intr_level old_level;

  ASSERT (is_thread (t));

  old_level = intr_disable ();
  ASSERT (t->status == THREAD_BLOCKED);
  list_insert_ordered (&ready_list, &t->elem, thread_cmp_priority, NULL);
  t->status = THREAD_READY;
  intr_set_level (old_level);
}
```

```c
static void
init_thread (struct thread *t, const char *name, int priority)
{
  enum intr_level old_level;

  ASSERT (t != NULL);
  ASSERT (PRI_MIN <= priority && priority <= PRI_MAX);
  ASSERT (name != NULL);

  memset (t, 0, sizeof *t);
  t->status = THREAD_BLOCKED;
  strlcpy (t->name, name, sizeof t->name);
  t->stack = (uint8_t *) t + PGSIZE;
  t->priority = priority;
  t->magic = THREAD_MAGIC;

  old_level = intr_disable ();
  /* all_list is linked through allelem, and thread_cmp_priority() expects
     elem, so it must not be used here; the order of all_list does not matter. */
  list_push_back (&all_list, &t->allelem);
  intr_set_level (old_level);
}
```

```c
void
thread_yield (void)
{
  struct thread *cur = thread_current ();
  enum intr_level old_level;

  ASSERT (!intr_context ());

  old_level = intr_disable ();
  if (cur != idle_thread)
    list_insert_ordered (&ready_list, &cur->elem, thread_cmp_priority, NULL);
  cur->status = THREAD_READY;
  schedule ();
  intr_set_level (old_level);
}
```

(3) Test results:

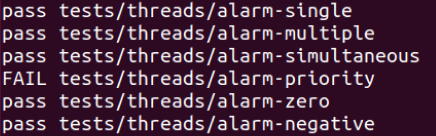

The alarm-priority test passes.

2. Further improving the priority mechanism

(1) Ideas and implementation steps

The Pintos test cases show that whenever a thread's priority changes, the priorities of all threads must be reconsidered immediately. Therefore thread_set_priority() calls thread_yield(), which re-inserts the current thread into the ready queue in priority order after every priority change, so that the highest-priority thread runs. Thread creation is a special case: if the new thread has a higher priority than the running thread, the running thread must go back on the ready queue and the new thread must run. The code is as follows:

```c
void
thread_set_priority (int new_priority)
{
  thread_current ()->priority = new_priority;
  thread_yield ();   /* Added: re-insert by priority so the highest-priority thread runs. */
}
```

```c
tid_t
thread_create (const char *name, int priority,
               thread_func *function, void *aux)
{
  struct thread *t;
  struct kernel_thread_frame *kf;
  struct switch_entry_frame *ef;
  struct switch_threads_frame *sf;
  tid_t tid;

  ASSERT (function != NULL);

  /* Allocate thread. */
  t = palloc_get_page (PAL_ZERO);
  if (t == NULL)
    return TID_ERROR;

  /* Initialize thread. */
  init_thread (t, name, priority);
  tid = t->tid = allocate_tid ();
  t->ticks_blocked = 0;

  /* Stack frame for kernel_thread(). */
  kf = alloc_frame (t, sizeof *kf);
  kf->eip = NULL;
  kf->function = function;
  kf->aux = aux;

  /* Stack frame for switch_entry(). */
  ef = alloc_frame (t, sizeof *ef);
  ef->eip = (void (*) (void)) kernel_thread;

  /* Stack frame for switch_threads(). */
  sf = alloc_frame (t, sizeof *sf);
  sf->eip = switch_entry;
  sf->ebp = 0;

  /* Add to run queue: the new thread is inserted in priority order. */
  thread_unblock (t);

  /* Added: the running thread normally has the highest priority, so a new
     thread that outranks it now has the highest priority and should run.
     thread_yield() puts the running thread back on the ready queue. */
  if (thread_current ()->priority < priority)
    thread_yield ();

  return tid;
}
```

(2) Test results:

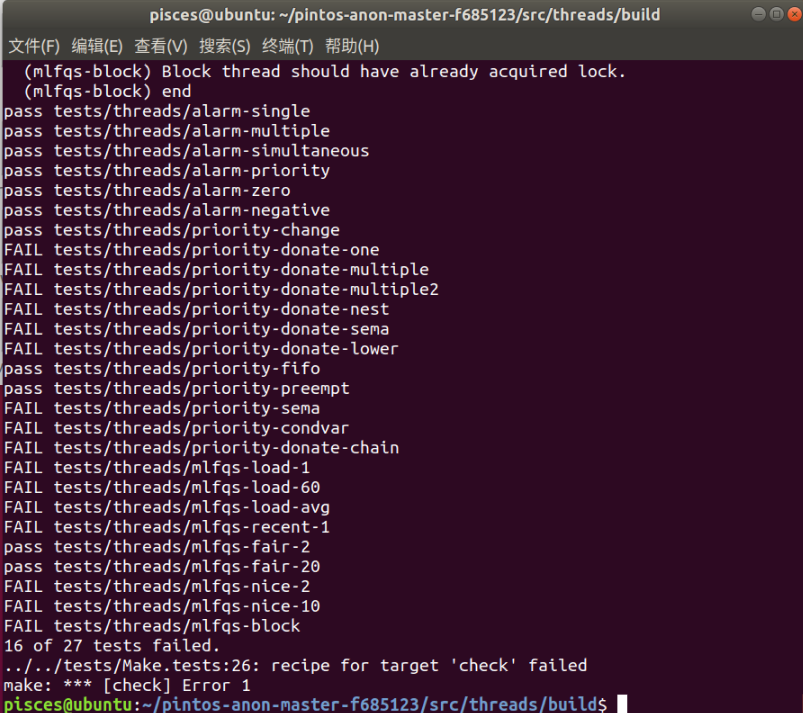

The priority-change, priority-fifo, and priority-preempt tests pass.

3. Passing the remaining priority tests

(1) Idea:

The priority-donate-one test shows that when a thread tries to acquire a lock already held by a thread with lower priority, it must donate its priority to the holder, that is, raise the holder's priority to its own.

The priority-donate-multiple and priority-donate-multiple2 tests show that when a thread's priority is restored after a donation ends, donations from other threads must still be taken into account, so a data structure is needed that records every donation made to this thread.

The priority-donate-nest test shows that donation can be recursive (nested), so a data structure is needed that records which lock each thread is waiting for, and therefore which thread it is waiting on.

The priority-donate-lower test shows that if a thread that has received a donation changes its own priority, its effective priority stays at the donated value, and the new priority takes effect only after it releases the lock.

The priority-sema and priority-condvar tests show that the waiting queue of a semaphore must be a priority queue, and so must the waiters queue of a condition variable.

The priority-donate-chain test shows that when a thread releases a lock and no donation remains, its original priority must be restored immediately.

(2) Implementation steps:

Add members to struct thread that record the base priority, the locks the thread holds, and the lock the thread is waiting for:

```c
struct thread
  {
    ...
    int base_priority;                  /* Base priority (newly added). */
    struct list locks;                  /* Locks that the thread is holding (newly added). */
    struct lock *lock_waiting;          /* The lock that the thread is waiting for (newly added). */
    ...
  };
```

Initialize these members in init_thread():

```c
static void
init_thread (struct thread *t, const char *name, int priority)
{
  ...
  t->base_priority = priority;
  list_init (&t->locks);
  t->lock_waiting = NULL;
  ...
}
```

Add to struct lock a list element for recording donations and a member recording the maximum priority among the threads acquiring the lock:

```c
struct lock
  {
    ...
    struct list_elem elem;      /* List element for priority donation (newly added). */
    int max_priority;           /* Max priority among the threads acquiring the lock (newly added). */
  };
```

Modify lock_acquire() in synch.c so that it performs recursive donation in a loop: for each lock along the chain it raises the lock's max_priority and then calls thread_donate_priority(), which updates the holder's priority through thread_update_priority():

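```c
void
lock_acquire (struct lock *lock)
{
  struct thread *current_thread = thread_current ();
  struct lock *l;
  enum intr_level old_level;

  ASSERT (lock != NULL);
  ASSERT (!intr_context ());
  ASSERT (!lock_held_by_current_thread (lock));

  if (lock->holder != NULL && !thread_mlfqs)
    {
      current_thread->lock_waiting = lock;
      l = lock;
      /* Walk the chain of holders, donating along the way (nested donation). */
      while (l && current_thread->priority > l->max_priority)
        {
          l->max_priority = current_thread->priority;
          thread_donate_priority (l->holder);
          l = l->holder->lock_waiting;
        }
    }

  /* The rest of this function was missing from the listing; it is completed
     here to match thread_hold_the_lock() below and the sema_down()-based lock. */
  sema_down (&lock->semaphore);

  old_level = intr_disable ();
  current_thread = thread_current ();
  if (!thread_mlfqs)
    {
      current_thread->lock_waiting = NULL;
      lock->max_priority = current_thread->priority;
      thread_hold_the_lock (lock);
    }
  lock->holder = current_thread;
  intr_set_level (old_level);
}
```

Implement thread_donate_priority() and lock_cmp_priority(): the former updates a thread's priority and moves it to its new position in the ready queue, and the latter compares locks by max_priority: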

```c
void
thread_donate_priority (struct thread *t)
{
  enum intr_level old_level = intr_disable ();
  thread_update_priority (t);

  /* If T is on the ready queue, re-insert it at its new position. */
  if (t->status == THREAD_READY)
    {
      list_remove (&t->elem);
      list_insert_ordered (&ready_list, &t->elem, thread_cmp_priority, NULL);
    }
  intr_set_level (old_level);
}
```

```c
/* Lock comparison function: true if lock A has a higher max_priority than lock B. */
bool
lock_cmp_priority (const struct list_elem *a, const struct list_elem *b, void *aux UNUSED)
{
  return list_entry (a, struct lock, elem)->max_priority
         > list_entry (b, struct lock, elem)->max_priority;
}
```

Implement thread_hold_the_lock() to record that the current thread now holds the lock, keeping the thread's locks list ordered with lock_cmp_priority(). If the lock's maximum priority is higher than the thread's priority, the thread's priority is raised accordingly and the thread is rescheduled:

```c
void
thread_hold_the_lock (struct lock *lock)
{
  enum intr_level old_level = intr_disable ();
  list_insert_ordered (&thread_current ()->locks, &lock->elem, lock_cmp_priority, NULL);

  if (lock->max_priority > thread_current ()->priority)
    {
      thread_current ()->priority = lock->max_priority;
      thread_yield ();
    }
  intr_set_level (old_level);
}
```

Modify lock_release() to change how a lock is released, and implement thread_remove_lock():

```c
void
lock_release (struct lock *lock)
{
  ASSERT (lock != NULL);
  ASSERT (lock_held_by_current_thread (lock));

  /* New code: drop this lock's donation from the current thread. */
  if (!thread_mlfqs)
    thread_remove_lock (lock);

  lock->holder = NULL;
  sema_up (&lock->semaphore);
}
```

```c
void
thread_remove_lock (struct lock *lock)
{
  enum intr_level old_level = intr_disable ();
  list_remove (&lock->elem);
  thread_update_priority (thread_current ());
  intr_set_level (old_level);
}
```

Implement thread_update_priority(), which recomputes a thread's priority when a lock is released: if the thread still holds locks, it takes the largest max_priority among them and, if that is greater than base_priority, uses it as the donated priority; otherwise the thread falls back to base_priority:

```c
void
thread_update_priority (struct thread *t)
{
  enum intr_level old_level = intr_disable ();
  int max_priority = t->base_priority;
  int lock_priority;

  if (!list_empty (&t->locks))
    {
      list_sort (&t->locks, lock_cmp_priority, NULL);
      lock_priority = list_entry (list_front (&t->locks), struct lock, elem)->max_priority;
      if (lock_priority > max_priority)
        max_priority = lock_priority;
    }

  t->priority = max_priority;
  intr_set_level (old_level);
}
```

Modify thread_set_priority() to apply the new priority:

```c
void
thread_set_priority (int new_priority)
{
  if (thread_mlfqs)
    return;

  enum intr_level old_level = intr_disable ();
  struct thread *current_thread = thread_current ();
  int old_priority = current_thread->priority;
  current_thread->base_priority = new_priority;

  /* Apply the new priority now unless a higher donated priority is in effect. */
  if (list_empty (&current_thread->locks) || new_priority > old_priority)
    {
      current_thread->priority = new_priority;
      thread_yield ();
    }
  intr_set_level (old_level);
}
```

Next, turn the wait queues of semaphores and condition variables into priority queues: modify cond_signal(), and declare and implement the comparison function cond_sema_cmp_priority():

```c
void
cond_signal (struct condition *cond, struct lock *lock UNUSED)
{
  ASSERT (cond != NULL);
  ASSERT (lock != NULL);
  ASSERT (!intr_context ());
  ASSERT (lock_held_by_current_thread (lock));

  if (!list_empty (&cond->waiters))
    {
      list_sort (&cond->waiters, cond_sema_cmp_priority, NULL);
      sema_up (&list_entry (list_pop_front (&cond->waiters), struct semaphore_elem, elem)->semaphore);
    }
}
```

```c
/* Orders condition waiters by the priority of the thread waiting on each semaphore. */
bool
cond_sema_cmp_priority (const struct list_elem *a, const struct list_elem *b, void *aux UNUSED)
{
  struct semaphore_elem *sa = list_entry (a, struct semaphore_elem, elem);
  struct semaphore_elem *sb = list_entry (b, struct semaphore_elem, elem);
  return list_entry (list_front (&sa->semaphore.waiters), struct thread, elem)->priority
         > list_entry (list_front (&sb->semaphore.waiters), struct thread, elem)->priority;
}
```

Finally, make the waiting queue of a semaphore a priority queue:

```c
void
sema_up (struct semaphore *sema)
{
  enum intr_level old_level;

  ASSERT (sema != NULL);

  old_level = intr_disable ();
  if (!list_empty (&sema->waiters))
    {
      /* Donations may have changed priorities since the waiters queued up. */
      list_sort (&sema->waiters, thread_cmp_priority, NULL);
      thread_unblock (list_entry (list_pop_front (&sema->waiters), struct thread, elem));
    }
  sema->value++;

  /* Let a higher-priority thread that was just woken run.  thread_yield()
     must not be called from an interrupt handler, so defer it there. */
  if (intr_context ())
    intr_yield_on_return ();
  else
    thread_yield ();
  intr_set_level (old_level);
}
```

```c
void
sema_down (struct semaphore *sema)
{
  enum intr_level old_level;

  ASSERT (sema != NULL);
  ASSERT (!intr_context ());

  old_level = intr_disable ();
  while (sema->value == 0)
    {
      list_insert_ordered (&sema->waiters, &thread_current ()->elem, thread_cmp_priority, NULL);
      thread_block ();
    }
  sema->value--;
  intr_set_level (old_level);
}
```

(3) Results:

At this point, all of the priority tests pass.

6, Thread management implementation Part III - Multilevel feedback queue scheduling

1. Realization idea

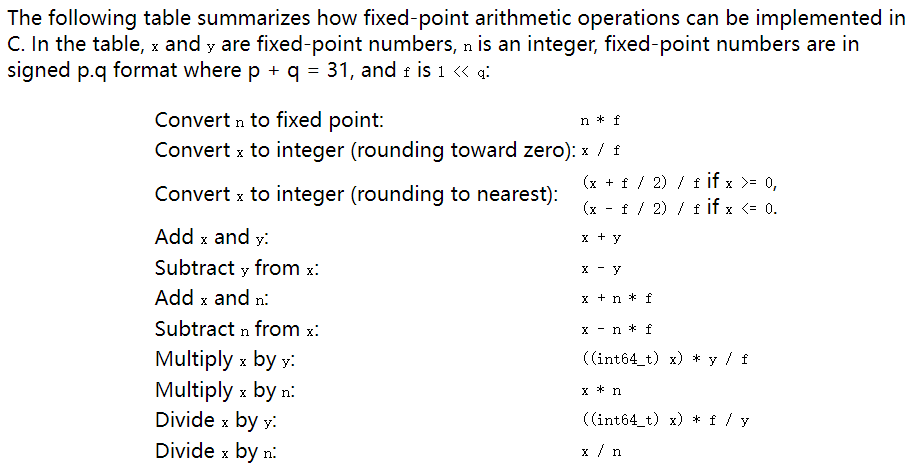

According to the official guide, Pintos Projects: 4.4BSD Scheduler (neu.edu), the scheduler maintains 64 priority queues (one per priority level), and each thread's current priority is recalculated from the formulas given in that guide.
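For reference, the three formulas from the official 4.4BSD scheduler documentation are restated below; they are the expressions that mlfqs_update_priority() and mlfqs_update_load_avg_and_recent_cpu() evaluate in step 2. Per that documentation, ready_threads counts the running and ready threads, excluding the idle thread, and the computed priority is rounded down and then clamped to the range PRI_MIN to PRI_MAX.

$$
\begin{aligned}
\text{priority} &= \text{PRI\_MAX} - \frac{\text{recent\_cpu}}{4} - 2 \cdot \text{nice} \\
\text{recent\_cpu} &= \frac{2 \cdot \text{load\_avg}}{2 \cdot \text{load\_avg} + 1} \cdot \text{recent\_cpu} + \text{nice} \\
\text{load\_avg} &= \frac{59}{60} \cdot \text{load\_avg} + \frac{1}{60} \cdot \text{ready\_threads}
\end{aligned}
$$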

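Accordingly, timer_interrupt() increases the running thread's recent_cpu by 1 on every tick, updates the priority at a fixed interval of every four ticks, and once per second (every TIMER_FREQ ticks) recomputes load_avg and the recent_cpu of every thread.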

2. Implementation process:

(1) Arithmetic: create a new header, fixed_point.h, that implements the fixed-point operations the formulas need, since the Pintos kernel does not support floating-point arithmetic. Every macro argument is parenthesized so that expressions can be passed safely, and negative values are rounded with division so that FP_ROUND rounds to nearest:

```c
#ifndef __THREAD_FIXED_POINT_H
#define __THREAD_FIXED_POINT_H

/* Basic definitions of fixed point. */
typedef int fixed_t;
/* 16 LSB used for fractional part. */
#define FP_SHIFT_AMOUNT 16
/* Convert a value to a fixed-point value. */
#define FP_CONST(A) ((fixed_t) ((A) << FP_SHIFT_AMOUNT))
/* Add two fixed-point values. */
#define FP_ADD(A, B) ((A) + (B))
/* Add a fixed-point value A and an int value B. */
#define FP_ADD_MIX(A, B) ((A) + ((B) << FP_SHIFT_AMOUNT))
/* Subtract two fixed-point values. */
#define FP_SUB(A, B) ((A) - (B))
/* Subtract an int value B from a fixed-point value A. */
#define FP_SUB_MIX(A, B) ((A) - ((B) << FP_SHIFT_AMOUNT))
/* Multiply a fixed-point value A by an int value B. */
#define FP_MULT_MIX(A, B) ((A) * (B))
/* Divide a fixed-point value A by an int value B. */
#define FP_DIV_MIX(A, B) ((A) / (B))
/* Multiply two fixed-point values (64-bit intermediate avoids overflow). */
#define FP_MULT(A, B) ((fixed_t) ((((int64_t) (A)) * (B)) >> FP_SHIFT_AMOUNT))
/* Divide two fixed-point values. */
#define FP_DIV(A, B) ((fixed_t) ((((int64_t) (A)) << FP_SHIFT_AMOUNT) / (B)))
/* Get the integer part of a fixed-point value (rounds down). */
#define FP_INT_PART(A) ((A) >> FP_SHIFT_AMOUNT)
/* Get the rounded integer of a fixed-point value (round to nearest). */
#define FP_ROUND(A) ((A) >= 0 \
  ? (((A) + (1 << (FP_SHIFT_AMOUNT - 1))) >> FP_SHIFT_AMOUNT) \
  : (((A) - (1 << (FP_SHIFT_AMOUNT - 1))) / (1 << FP_SHIFT_AMOUNT)))

#endif /* threads/fixed_point.h */
```

(2) Modify timer_interrupt() so that the priority is updated every four ticks and the running thread's recent_cpu increases by 1 on every tick:

```c
static void
timer_interrupt (struct intr_frame *args UNUSED)
{
  ticks++;
  thread_tick ();
  thread_foreach (blocked_thread_check, NULL);

  /* New code for the multilevel feedback queue scheduler. */
  if (thread_mlfqs)
    {
      mlfqs_inc_recent_cpu ();
      if (ticks % TIMER_FREQ == 0)
        mlfqs_update_load_avg_and_recent_cpu ();   /* Once per second; also updates priorities. */
      else if (ticks % 4 == 0)
        mlfqs_update_priority (thread_current ());
    }
}
```

(3) Implement the function that increments recent_cpu:

```c
void
mlfqs_inc_recent_cpu (void)
{
  ASSERT (thread_mlfqs);
  ASSERT (intr_context ());

  struct thread *cur = thread_current ();
  if (cur == idle_thread)
    return;
  cur->recent_cpu = FP_ADD_MIX (cur->recent_cpu, 1);
}
```

(4) Implement the mlfqs_update_load_avg_and_recent_cpu() function:

```c
void
mlfqs_update_load_avg_and_recent_cpu (void)
{
  ASSERT (thread_mlfqs);
  ASSERT (intr_context ());

  /* ready_threads = ready threads plus the running thread, excluding idle. */
  size_t ready_cnt = list_size (&ready_list);
  if (thread_current () != idle_thread)
    ++ready_cnt;
  load_avg = FP_ADD (FP_DIV_MIX (FP_MULT_MIX (load_avg, 59), 60),
                     FP_DIV_MIX (FP_CONST (ready_cnt), 60));

  struct thread *t;
  struct list_elem *e;
  for (e = list_begin (&all_list); e != list_end (&all_list); e = list_next (e))
    {
      t = list_entry (e, struct thread, allelem);
      if (t != idle_thread)
        {
          /* recent_cpu = (2*load_avg) / (2*load_avg + 1) * recent_cpu + nice */
          t->recent_cpu = FP_ADD_MIX (FP_MULT (FP_DIV (FP_MULT_MIX (load_avg, 2),
                                                       FP_ADD_MIX (FP_MULT_MIX (load_avg, 2), 1)),
                                               t->recent_cpu),
                                      t->nice);
          mlfqs_update_priority (t);
        }
    }
}
```

(5) Implement the mlfqs_update_priority() function:

```c
void
mlfqs_update_priority (struct thread *t)
{
  ASSERT (thread_mlfqs);

  if (t == idle_thread)
    return;

  /* priority = PRI_MAX - recent_cpu/4 - 2*nice, rounded down, then clamped. */
  t->priority = FP_INT_PART (FP_SUB_MIX (FP_SUB (FP_CONST (PRI_MAX),
                                                 FP_DIV_MIX (t->recent_cpu, 4)),
                                         2 * t->nice));
  if (t->priority < PRI_MIN)
    t->priority = PRI_MIN;
  else if (t->priority > PRI_MAX)
    t->priority = PRI_MAX;
}
```

(6) Add members to struct thread and initialize them in init_thread():

```c
struct thread
  {
    ...
    int nice;                   /* Niceness. */
    fixed_t recent_cpu;         /* Recent CPU. */
    ...
  };
```

```c
static void
init_thread (struct thread *t, const char *name, int priority)
{
  ...
  t->nice = 0;
  t->recent_cpu = FP_CONST (0);
  ...
}
```

(7) In thread.c, declare the global variable load_avg:

```c
fixed_t load_avg;
```

(8) Initialize load_avg in thread_start():

```c
void
thread_start (void)
{
  load_avg = FP_CONST (0);
  ...
}
```

(9) Include the fixed-point header in thread.h:

```c
#include "fixed_point.h"
```

(10) In thread.c, modify the thread_set_nice(), thread_get_nice(), thread_get_load_avg(), and thread_get_recent_cpu() functions:

```c
/* Sets the current thread's nice value to NICE. */
void
thread_set_nice (int nice)
{
  /* Solution Code */
  thread_current ()->nice = nice;
  mlfqs_update_priority (thread_current ());
  thread_yield ();
}

/* Returns the current thread's nice value. */
int
thread_get_nice (void)
{
  /* Solution Code */
  return thread_current ()->nice;
}

/* Returns 100 times the system load average. */
int
thread_get_load_avg (void)
{
  /* Solution Code */
  return FP_ROUND (FP_MULT_MIX (load_avg, 100));
}

/* Returns 100 times the current thread's recent_cpu value. */
int
thread_get_recent_cpu (void)
{
  /* Solution Code */
  return FP_ROUND (FP_MULT_MIX (thread_current ()->recent_cpu, 100));
}
```

3. Results achieved:

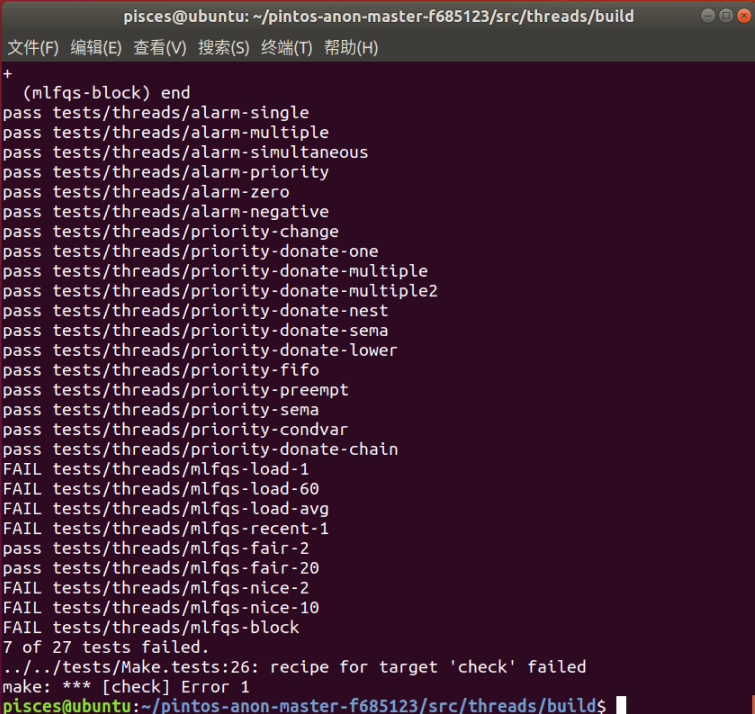

At this point all 27 tests pass, and Project 1 is complete.