After surveying various textbooks, papers, and blogs, we now have a high-level understanding of visual SLAM. We extracted and summarized a number of feature extraction and tracking algorithms, and settled on ORB-SLAM as the preliminary direction of study.

We took some time to brush up on C++, set up the OpenCV 3.4 environment in half a day, and then spent nearly a full day skimming Mao Xingyun's "Getting Started with OpenCV3 Programming" and running its examples. It turned out to be extremely approachable. Every chapter, whether on image filtering, image segmentation, image inpainting, or feature detection, follows almost the same process: read an input image > process it with the corresponding function > output the image. There is no need to understand how each function works internally. OpenCV wraps it all up, so getting a demo running is easy.

But to write our own code later, we will have to study the underlying theory in depth. For now, we record the results of our first run of ORB feature point detection and matching.

Here is the code, mainly adapted from https://www.cnblogs.com/Jessica-jie/p/8622449.html.

    #include <iostream>
    #include <vector>
    // Forward slashes work on every platform, including Windows.
    #include <opencv2/core/core.hpp>
    #include <opencv2/features2d/features2d.hpp>
    #include <opencv2/highgui/highgui.hpp>

    using namespace std;
    using namespace cv;

    int main()
    {
        Mat img1 = imread("img01.jpg");
        Mat img2 = imread("img02.jpg");
        // imread returns an empty Mat when a file cannot be read.
        if (img1.empty() || img2.empty())
        {
            cerr << "Could not read img01.jpg or img02.jpg" << endl;
            return -1;
        }

        // 1. Initialization
        vector<KeyPoint> keypoints1, keypoints2;
        Mat descriptors1, descriptors2;
        Ptr<ORB> orb = ORB::create();

        // 2. Extract feature points
        orb->detect(img1, keypoints1);
        orb->detect(img2, keypoints2);

        // 3. Compute feature descriptors
        orb->compute(img1, keypoints1, descriptors1);
        orb->compute(img2, keypoints2, descriptors2);

        // 4. Match the BRIEF descriptors of the two images with BFMatcher,
        //    using the Hamming distance as the metric
        vector<DMatch> matches;
        BFMatcher bfMatcher(NORM_HAMMING);
        bfMatcher.match(descriptors1, descriptors2, matches);

        // 5. Display
        Mat ShowMatches;
        drawMatches(img1, keypoints1, img2, keypoints2, matches, ShowMatches);
        imshow("matches", ShowMatches);
        waitKey(0);

        return 0;
    }

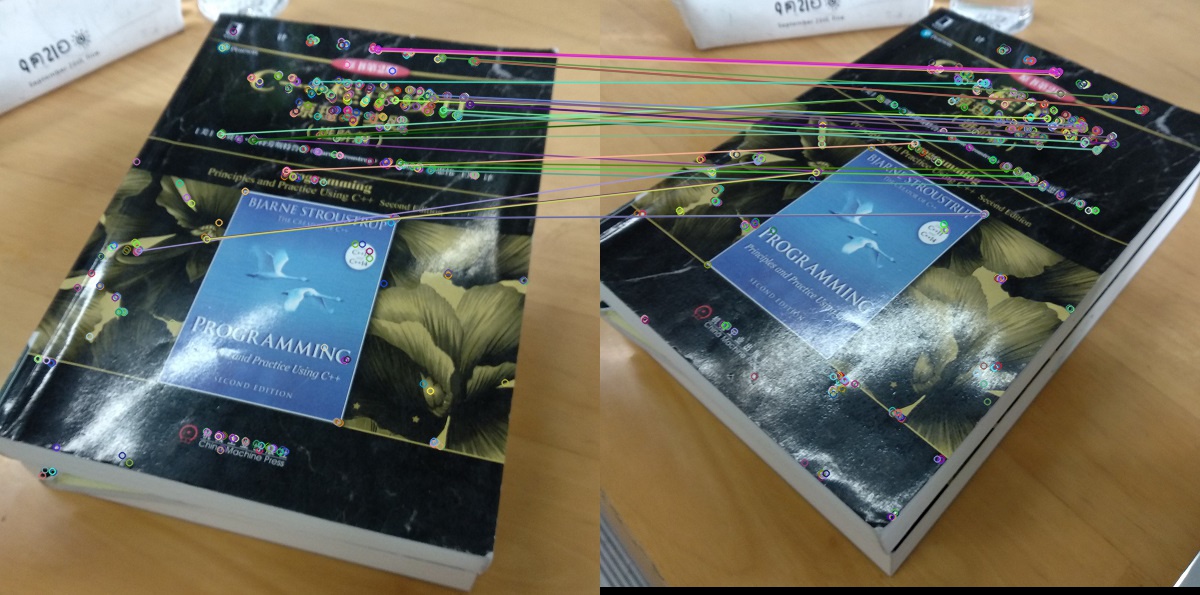

Here is the result, using brute-force matching: for each feature descriptor in the first image, compute the distance to every descriptor in the second image, sort the resulting distances, and take the closest one as the match.

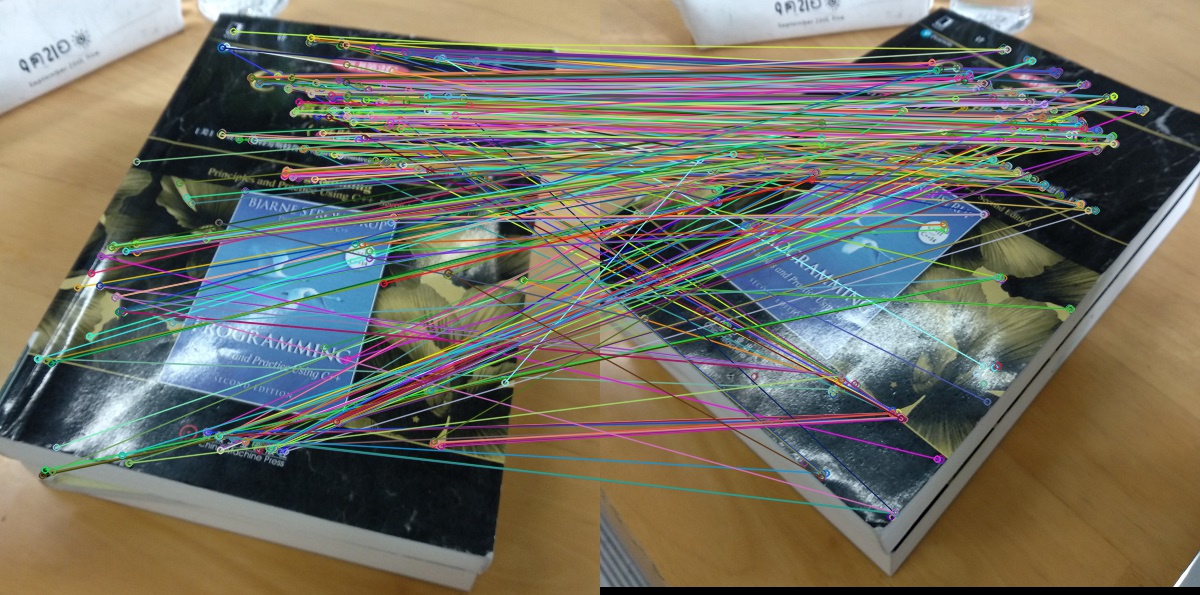
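To make the brute-force idea concrete, here is a minimal sketch in plain C++ that does not use OpenCV. It assumes each descriptor is a byte vector (ORB's default descriptor is 32 bytes), and the names `Descriptor`, `SimpleMatch`, `hammingDistance`, and `bruteForceMatch` are made up for illustration. They only roughly mirror what `BFMatcher` with `NORM_HAMMING` does and are not OpenCV's implementation.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// One binary descriptor stored as bytes (assumption: ORB default is 32 bytes).
using Descriptor = std::vector<std::uint8_t>;

// Hypothetical stand-in for cv::DMatch, for illustration only.
struct SimpleMatch {
    int queryIdx;  // index into the first descriptor set
    int trainIdx;  // index of the nearest descriptor in the second set
    int distance;  // Hamming distance between the two descriptors
};

// Hamming distance: the number of bit positions in which a and b differ.
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        // XOR leaves a 1 in every differing bit; count those bits.
        const unsigned diff = static_cast<unsigned>(a[i] ^ b[i]);
        dist += static_cast<int>(std::bitset<8>(diff).count());
    }
    return dist;
}

// For every query descriptor, scan all train descriptors and keep the nearest.
std::vector<SimpleMatch> bruteForceMatch(const std::vector<Descriptor>& query,
                                         const std::vector<Descriptor>& train) {
    std::vector<SimpleMatch> matches;
    if (train.empty()) return matches;  // nothing to match against
    for (std::size_t q = 0; q < query.size(); ++q) {
        SimpleMatch best{static_cast<int>(q), -1, std::numeric_limits<int>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            const int d = hammingDistance(query[q], train[t]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(t);
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

Note that every query descriptor always receives a match, even when its nearest neighbour is far away. The sketch tracks a running minimum instead of sorting, which gives the same nearest neighbour.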

This method is simple and crude, and can be refined with some rules of thumb, for example: keep a match only if its Hamming distance is less than twice the minimum distance.
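As a small worked illustration of this rule, the sketch below computes the acceptance threshold from a plain list of distances. The function names `acceptanceThreshold` and `keepGood` are hypothetical, and the lower limit of 30 is an empirical value assumed here, not a constant defined by OpenCV.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

// Threshold for accepting a match: twice the smallest distance, but never
// below an empirical lower limit (30 is an assumed value, tune as needed).
double acceptanceThreshold(const std::vector<double>& distances,
                           double lowerLimit = 30.0) {
    assert(lowerLimit >= 0.0);
    if (distances.empty()) return lowerLimit;
    const double minDist = *std::min_element(distances.begin(), distances.end());
    return std::max(2.0 * minDist, lowerLimit);
}

// Keep only the distances that do not exceed the threshold.
std::vector<double> keepGood(const std::vector<double>& distances,
                             double lowerLimit = 30.0) {
    const double limit = acceptanceThreshold(distances, lowerLimit);
    std::vector<double> good;
    std::copy_if(distances.begin(), distances.end(), std::back_inserter(good),
                 [limit](double d) { return d <= limit; });
    return good;
}
```

With distances {4, 12, 29, 31, 80}, twice the minimum is only 8, which would reject almost everything, so the lower limit of 30 takes over and three matches survive. With {20, 35, 41, 90}, the threshold is 2 × 20 = 40 and two matches survive.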

Add the following code after step 4:

    // Match pair filtering
    double min_dist = 1000, max_dist = 0;

    // Find the minimum and maximum distance over all matches.
    // Iterate over matches itself rather than descriptors1.rows,
    // so the loop can never index past the end of the vector.
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }

    // A match whose distance is greater than twice the minimum distance
    // is considered an incorrect match.
    // Sometimes, however, the minimum distance is very small, so an
    // empirical value (30 here) is used as the lower limit of the threshold.
    vector<DMatch> good_matches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance <= max(2 * min_dist, 30.0))
            good_matches.push_back(matches[i]);
    }

Then change the matches argument of drawMatches to the filtered matches (good_matches):

    drawMatches(img1, keypoints1, img2, keypoints2, good_matches, ShowMatches);

Compile and run again; the result is much better: