Introduction

Recently I needed to compute the similarity between Chinese texts for work, which is a common task in NLP. I found a very good package for this and want to share it: sentence-transformers.

Below is how to use the package to compute Chinese text similarity. This covers only a small part of what the package can do.

- The model used here is paraphrase-multilingual-MiniLM-L12-v2. I chose it because paraphrase-MiniLM-L6-v2 works very well, and paraphrase-multilingual-MiniLM-L12-v2 is the multilingual model in the same family: it is fast, effective, and supports Chinese!

- Similarity is measured here with cosine similarity.
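For readers who have not used it before, cosine similarity is the dot product of two vectors divided by the product of their lengths, so it depends only on direction. The sketch below is a plain-Python illustration with toy vectors; the function name `cosine_similarity` is my own and is not part of sentence-transformers, whose `cos_sim` performs the equivalent calculation on whole batches of embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy 2-D vectors for illustration only; real sentence embeddings
# have hundreds of dimensions.
print(cosine_similarity([1.0, 0.0], [2.0, 0.0]))   # same direction -> 1.0
print(cosine_similarity([1.0, 0.0], [0.0, 3.0]))   # orthogonal -> 0.0
print(cosine_similarity([1.0, 0.0], [-1.0, 0.0]))  # opposite direction -> -1.0
```

A score near 1 means the two texts point in nearly the same direction in embedding space, and a score near 0 means they are unrelated.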

Usage steps

- The first step is to install this package. You can install it directly using pip:

pip install sentence-transformers

- Import the package

from sentence_transformers import SentenceTransformer as SBert

from sentence_transformers.util import cos_sim

- Load the model

model = SBert('paraphrase-multilingual-MiniLM-L12-v2')

From within China, connections to some model-hosting sites can fail, producing an error like this:

HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /api/models/sentence-transformers/paraphrase-multilingual-MiniLM-L12-v2 (Caused by SSLError(SSLError(1, '[SSL: WRONG_VERSION_NUMBER] wrong version number (_ssl.c:1129)')))

In that case, use a different approach: download the model manually, unzip it into a folder, and pass the folder path directly.

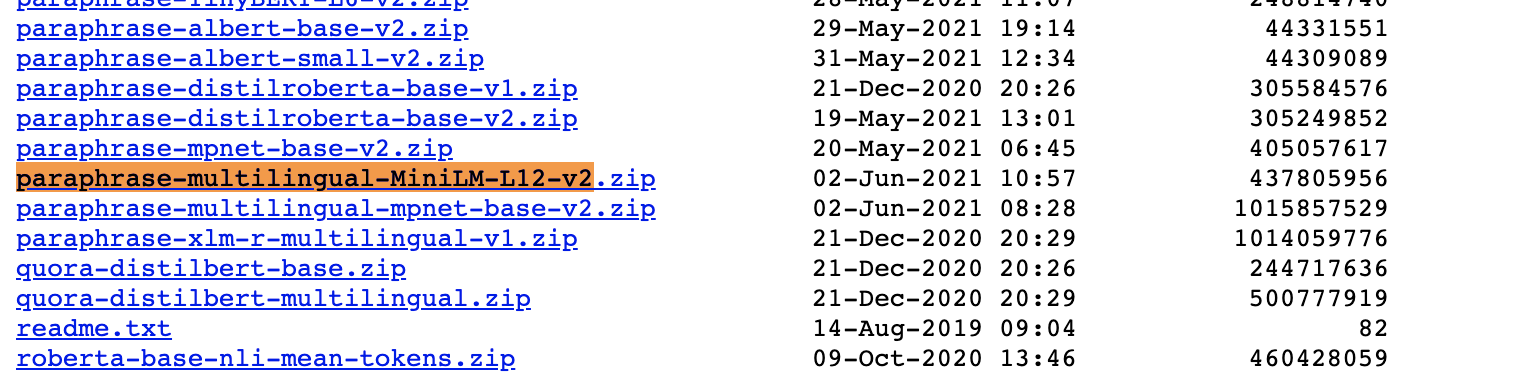

First, go to the model download site: https://public.ukp.informatik.tu-darmstadt.de/reimers/sentence-transformers/v0.2/

Find the model named paraphrase-multilingual-MiniLM-L12-v2 and download it.

Unzip it into a folder named paraphrase-multilingual-MiniLM-L12-v2, then pass that folder's path when creating the model:

# Raw string, so the backslashes in the Windows path are not treated as escape sequences

model = SBert(r"C:\Users\xxxx\Downloads\paraphrase-multilingual-MiniLM-L12-v2")
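If the same script has to run both on machines with a local copy and on machines that can reach the model hub, one option is to pick the source at run time. This is only a sketch: `resolve_model_source` is a hypothetical helper of my own, not part of sentence-transformers, and it assumes the unzipped folder is a complete model directory.

```python
import os

MODEL_NAME = "paraphrase-multilingual-MiniLM-L12-v2"

def resolve_model_source(local_dir, model_name=MODEL_NAME):
    """Return local_dir if it is an existing folder, otherwise the model name.

    Hypothetical helper: it only checks that the folder exists, not that
    it actually contains a valid model.
    """
    if local_dir and os.path.isdir(local_dir):
        return local_dir
    return model_name

# Usage (commented out so this block runs without the library installed):
# from sentence_transformers import SentenceTransformer as SBert
# model = SBert(resolve_model_source(r"C:\Users\xxxx\Downloads\paraphrase-multilingual-MiniLM-L12-v2"))
```

When the folder is missing, the helper falls back to the model name, so the library attempts the normal download.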

- Compute the results

The rest is simple: pass in two lists of sentences, encode the text in each list, compute the cosine similarity, and output the result.

# Two lists of sentences

sentences1 = ['How to change a bank card',

'The cat sits outside',

'A man is playing guitar',

'The new movie is awesome']

sentences2 = ['Change the binding bank card',

'The dog plays in the garden',

'A woman watches TV',

'The new movie is so great']

# Compute embeddings for both lists

embeddings1 = model.encode(sentences1)

embeddings2 = model.encode(sentences2)

# Compute cosine similarities

cosine_scores = cos_sim(embeddings1, embeddings2)

# Print the score matrix (a bare expression only displays in a notebook)

print(cosine_scores)
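The result of `cos_sim` is a matrix rather than a single number: entry `[i][j]` is the similarity between `sentences1[i]` and `sentences2[j]`, so the diagonal holds the scores for same-index pairs. The sketch below shows one way to read such a matrix. `summarize_scores` is a hypothetical helper of my own, and the numbers in `toy_scores` are placeholders for illustration, not model output.

```python
def summarize_scores(sentences1, sentences2, scores):
    """Report the same-index score and the best match for each sentence.

    scores[i][j] is the similarity between sentences1[i] and sentences2[j].
    Assumes both lists have the same length, as in the example above.
    """
    rows = []
    for i, row in enumerate(scores):
        best_j = max(range(len(row)), key=lambda j: row[j])
        rows.append({
            "sentence": sentences1[i],
            "paired_score": row[i],          # diagonal entry
            "best_match": sentences2[best_j],
            "best_score": row[best_j],
        })
    return rows

# Placeholder scores for illustration only; these are NOT model output.
toy_scores = [[0.9, 0.1],
              [0.2, 0.8]]
for r in summarize_scores(["a1", "a2"], ["b1", "b2"], toy_scores):
    print(r)
```

With the real output, the matrix can be converted to nested lists first, for example `summarize_scores(sentences1, sentences2, cosine_scores.tolist())`.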

Some thoughts

Actually, I originally planned to use the text2vec package, which I find very good and powerful, so I wanted to look at its source code. The moment I opened it I was stunned: I didn't expect the code to be so clean and elegant.

Later I realized I had jumped into the sentence-transformers package 😂. It turns out much of the beautiful code I had been admiring belongs to sentence-transformers 😂.

The text2vec package is very good too, and sentence-transformers is even better!!

I have only shown a simple use of sentence-transformers here. The package's source code is well worth reading carefully and learning from ~

Reference links

- https://github.com/UKPLab/sentence-transformers

- https://github.com/shibing624/text2vec
