Requirement

Stitch the following two pictures together to produce a panorama.

Code implementation process

1. Import required libraries

    import numpy as np
    import cv2

2. Create a class Stitcher

The Stitcher class contains five methods:

- stitch(self, images, ratio=0.75, reprojThresh=4.0,showMatches=False)

- cv_show(self,name,img)

- detectAndDescribe(self, image)

- matchKeypoints(self, kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh)

- drawMatches(self, imageA, imageB, kpsA, kpsB, matches, status)

Here, the complete code of the Stitcher class is given first, and then each method is explained in turn.

    class Stitcher:

        # Stitching function
        def stitch(self, images, ratio=0.75, reprojThresh=4.0, showMatches=False):
            # Unpack the input pictures
            (imageB, imageA) = images
            # Detect SIFT feature points in pictures A and B and compute their descriptors
            (kpsA, featuresA) = self.detectAndDescribe(imageA)
            (kpsB, featuresB) = self.detectAndDescribe(imageB)

            # Match the feature points of the two pictures and return the matching results
            M = self.matchKeypoints(kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh)

            # If the result is empty, there are not enough matching feature points: exit
            if M is None:
                return None

            # Otherwise, unpack the matching results
            # H is the 3 x 3 perspective transformation matrix
            (matches, H, status) = M
            # Warp picture A; result is the transformed picture
            result = cv2.warpPerspective(imageA, H, (imageA.shape[1] + imageB.shape[1], imageA.shape[0]))
            self.cv_show('result', result)
            # Paste picture B at the left end of the result picture
            result[0:imageB.shape[0], 0:imageB.shape[1]] = imageB
            self.cv_show('result', result)
            # Check whether the matches should be visualized
            if showMatches:
                # Generate the picture showing the matches
                vis = self.drawMatches(imageA, imageB, kpsA, kpsB, matches, status)
                # Return the stitched result and the visualization
                return (result, vis)

            # Return the stitched result
            return result

        def cv_show(self, name, img):
            cv2.imshow(name, img)
            cv2.waitKey(0)
            cv2.destroyAllWindows()

        def detectAndDescribe(self, image):
            # Convert the color picture to grayscale
            gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

            # Build the SIFT detector (OpenCV >= 4.4; older contrib builds
            # use cv2.xfeatures2d.SIFT_create() instead)
            descriptor = cv2.SIFT_create()
            # Detect SIFT feature points and compute descriptors on the grayscale picture
            (kps, features) = descriptor.detectAndCompute(gray, None)

            # Convert the keypoint coordinates to a NumPy array
            kps = np.float32([kp.pt for kp in kps])

            # Return the feature point coordinates and the corresponding descriptors
            return (kps, features)

        def matchKeypoints(self, kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh):
            # Build a brute-force matcher
            matcher = cv2.BFMatcher()

            # Use KNN matching between the SIFT descriptors of pictures A and B, K=2
            rawMatches = matcher.knnMatch(featuresA, featuresB, 2)

            matches = []
            for m in rawMatches:
                # Keep the pair when the nearest distance is less than
                # ratio times the second-nearest distance
                if len(m) == 2 and m[0].distance < m[1].distance * ratio:
                    # Store the index values of the two points
                    matches.append((m[0].trainIdx, m[0].queryIdx))

            # When more than 4 pairs remain, compute the perspective transformation matrix
            if len(matches) > 4:
                # Get the point coordinates of the matching pairs
                ptsA = np.float32([kpsA[i] for (_, i) in matches])
                ptsB = np.float32([kpsB[i] for (i, _) in matches])

                # Compute the perspective transformation matrix
                (H, status) = cv2.findHomography(ptsA, ptsB, cv2.RANSAC, reprojThresh)

                # Return the result
                return (matches, H, status)

            # Too few matching pairs: return None
            return None

        def drawMatches(self, imageA, imageB, kpsA, kpsB, matches, status):
            # Initialize the visualization picture and connect the A and B pictures together
            (hA, wA) = imageA.shape[:2]
            (hB, wB) = imageB.shape[:2]
            vis = np.zeros((max(hA, hB), wA + wB, 3), dtype="uint8")
            vis[0:hA, 0:wA] = imageA
            vis[0:hB, wA:] = imageB

            # Joint traversal to draw matching pairs
            for ((trainIdx, queryIdx), s) in zip(matches, status):
                # When the point pair match is successful, draw it on the visualization
                if s == 1:
                    # Draw a match
                    ptA = (int(kpsA[queryIdx][0]), int(kpsA[queryIdx][1]))
                    ptB = (int(kpsB[trainIdx][0]) + wA, int(kpsB[trainIdx][1]))
                    cv2.line(vis, ptA, ptB, (0, 255, 0), 1)

            # Return visualization results
            return vis

2.1 cv_show(self,name,img)

cv_show() is a simple display function: it shows the picture in a window and waits for a key press, so it is not explained further here.

2.2 detectAndDescribe(self, image)

This function detects SIFT feature points and computes their descriptors. If you are interested in the functions it uses, please refer to another article: Feature matching of Opencv.

In the last step of this function, the coordinates of the detected feature points (kp.pt) are converted into a NumPy array:

kps = np.float32([kp.pt for kp in kps])

This statement is equivalent to the following explicit loop:

    pts = []
    for kp in kps:
        pts.append(kp.pt)
    kps = np.float32(pts)

2.3 matchKeypoints(self, kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh)

This function can be understood in two parts: brute-force matching and calculation of the perspective transformation matrix.

Brute-force matching

    # Build a brute-force matcher
    matcher = cv2.BFMatcher()

    # Use KNN matching between the SIFT descriptors of pictures A and B, K=2
    rawMatches = matcher.knnMatch(featuresA, featuresB, 2)

    matches = []
    for m in rawMatches:
        # Keep the pair when the nearest distance is less than
        # ratio times the second-nearest distance
        if len(m) == 2 and m[0].distance < m[1].distance * ratio:
            # Store the index values of the two points
            matches.append((m[0].trainIdx, m[0].queryIdx))

A detailed explanation of this part of the code can also be found in Feature matching of Opencv.

It is worth mentioning that each retained match is stored as the pair (m[0].trainIdx, m[0].queryIdx). Because the matcher is called as knnMatch(featuresA, featuresB, 2), queryIdx is the index of the matched point among the feature points of picture A (the query set), and trainIdx is the index of the matched point among the feature points of picture B (the train set).
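The ratio test can be tried without OpenCV. The following is a minimal sketch in plain Python: the dictionary raw_matches and the helper ratio_test are hypothetical stand-ins for the output of knnMatch, with each neighbour written as (trainIdx, distance).

```python
# Hypothetical knnMatch output: for each descriptor of picture A (query index),
# its two nearest descriptors in picture B as (trainIdx, distance).
raw_matches = {
    0: [(5, 10.0), (7, 40.0)],  # clear winner: 10 < 0.75 * 40
    1: [(2, 30.0), (9, 32.0)],  # ambiguous: 30 is not below 0.75 * 32
    2: [(4, 12.0)],             # only one neighbour found: skipped
    3: [(1, 15.0), (3, 25.0)],  # kept: 15 < 0.75 * 25
}


def ratio_test(raw_matches, ratio=0.75):
    """Keep a match only if its best distance is clearly below the second best."""
    matches = []
    for query_idx, neighbours in raw_matches.items():
        if len(neighbours) != 2:
            continue
        (train_idx, best), (_, second) = neighbours
        if best < second * ratio:
            # Same ordering as in matchKeypoints: (trainIdx, queryIdx)
            matches.append((train_idx, query_idx))
    return matches


matches = ratio_test(raw_matches)
print(matches)  # [(5, 0), (1, 3)]
```

A lower ratio keeps fewer but more reliable pairs; with ratio=1.0 the ambiguous pair for query index 1 would also be kept.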

Calculation of the perspective transformation matrix

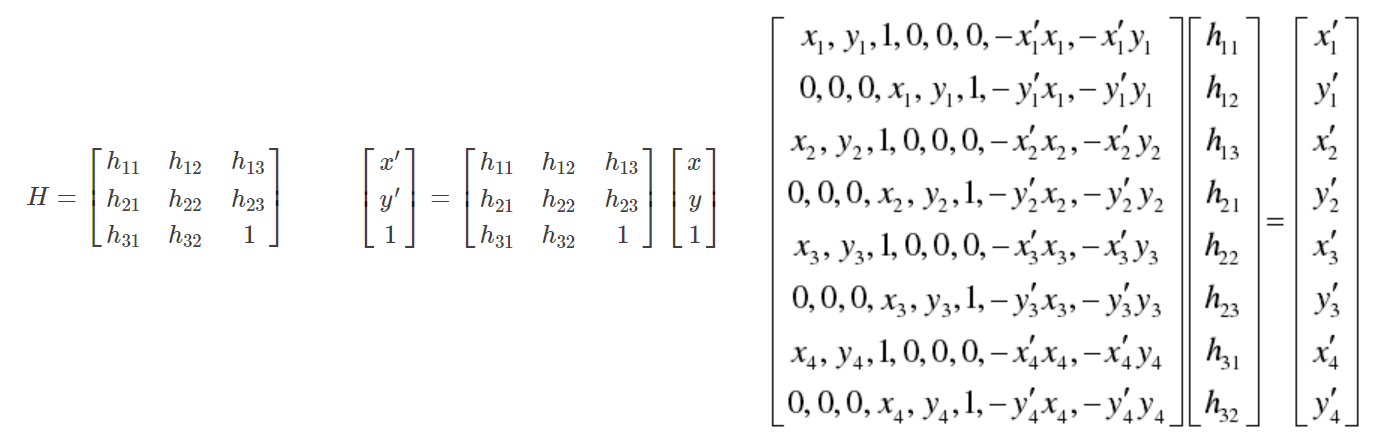

This perspective transformation matrix is also called the homography matrix (H). As shown in the figure above, H is a 3 x 3 matrix with eight unknowns (it is only defined up to scale, so one entry can be fixed to 1), which means at least eight equations are needed to solve for it. Each matched point pair provides two equations (one for x and one for y), so four point pairs give the eight equations.
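To make the "four points, eight equations" count concrete, here is a small sketch in plain Python that builds the 8 x 8 linear system from four point pairs and solves it. It assumes the bottom-right entry of H is fixed to 1; the helper names (solve_linear, homography_from_4_points, apply_homography) and the sample points are made up for illustration and are not how cv2.findHomography is implemented internally.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        # Use the row with the largest entry in this column as the pivot row
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def homography_from_4_points(src, dst):
    """Each pair (x, y) -> (u, v) contributes two rows of the 8 x 8 system."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(H, point):
    """Map a point through H, dividing by the third homogeneous coordinate."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Unit square mapped to a rectangle: scale by (2, 3), then shift by (10, 20)
src = [(0, 0), (1, 0), (1, 1), (0, 1)]
dst = [(10, 20), (12, 20), (12, 23), (10, 23)]
H = homography_from_4_points(src, dst)
# Expected, up to rounding: [[2, 0, 10], [0, 3, 20], [0, 0, 1]]
print(H)
```

In practice there are many more than four matches and some of them are wrong, which is why the code uses RANSAC rather than solving one exact system.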

    # When more than 4 pairs remain, compute the perspective transformation matrix
    if len(matches) > 4:
        # Get the point coordinates of the matching pairs
        ptsA = np.float32([kpsA[i] for (_, i) in matches])
        ptsB = np.float32([kpsB[i] for (i, _) in matches])

        # Compute the perspective transformation matrix
        (H, status) = cv2.findHomography(ptsA, ptsB, cv2.RANSAC, reprojThresh)

        # Return the result
        return (matches, H, status)

In the above code, we use the function cv2.findHomography(ptsA, ptsB, cv2.RANSAC, reprojThresh) to get the homography matrix H.

Function introduction

cv2.findHomography(ptsA, ptsB, cv2.RANSAC, reprojThresh)

Input parameters:

- ptsA represents the coordinates of the key points of image A

- ptsB represents the coordinates of the key points of image B

- cv2.RANSAC means that the random sample consensus (RANSAC) algorithm is used to estimate H robustly

- reprojThresh is the maximum reprojection error, in pixels, at which a point pair is still treated as an inlier
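The role of the reprojection threshold can be illustrated with a simplified sketch in plain Python. The helper inlier_mask and the sample points are hypothetical; it only mimics the idea behind the status mask returned by cv2.findHomography (1 for inliers, 0 for outliers), not OpenCV's internal implementation.

```python
import math


def apply_homography(H, point):
    """Map a point through H, dividing by the third homogeneous coordinate."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def inlier_mask(H, pts_a, pts_b, reproj_thresh=4.0):
    """Mark a pair as inlier (1) when H maps the A point close enough to the B point."""
    mask = []
    for a, b in zip(pts_a, pts_b):
        px, py = apply_homography(H, a)
        error = math.hypot(px - b[0], py - b[1])  # reprojection error in pixels
        mask.append(1 if error <= reproj_thresh else 0)
    return mask


# Assumed homography for the example: a pure shift of 100 pixels to the right
H = [[1.0, 0.0, 100.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
pts_a = [(10, 10), (50, 80), (200, 40)]
pts_b = [(110, 10), (152, 83), (330, 45)]  # errors: 0, about 3.6, about 30.4

mask = inlier_mask(H, pts_a, pts_b)
print(mask)  # [1, 1, 0]
```

A larger threshold tolerates less precise matches, while a smaller one rejects more pairs as outliers.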

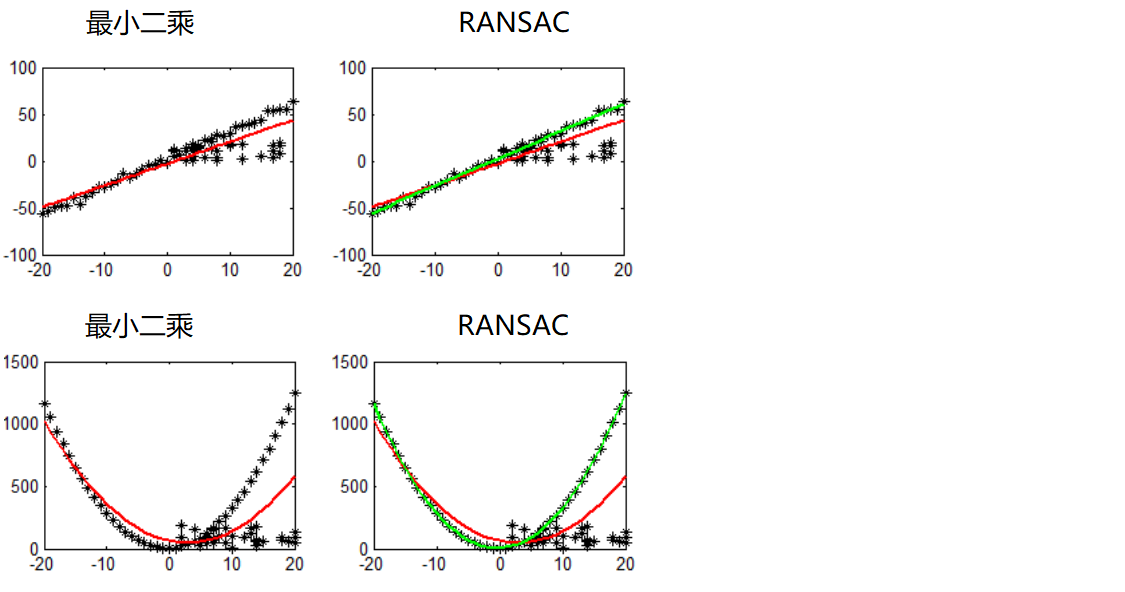

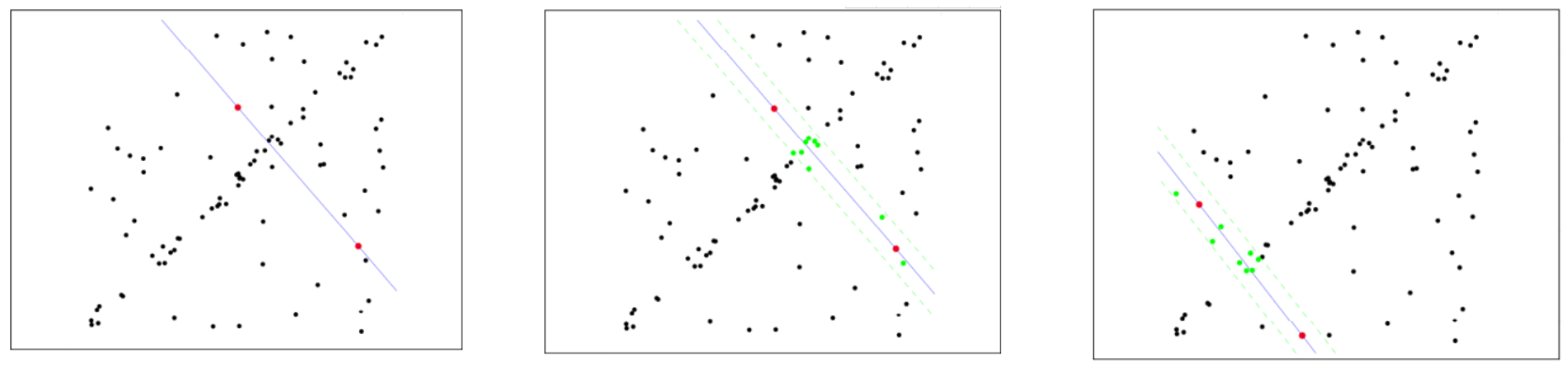

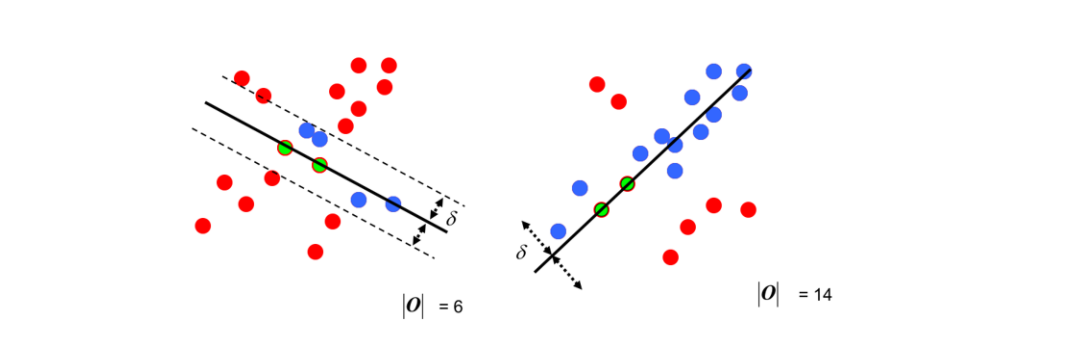

PS: the RANSAC algorithm (random sample consensus). The least-squares method fits a curve to all of the points at once by minimizing the root mean square error, so a few outliers are enough to pull the fitted curve away from the true trend, as the left figure shows. RANSAC does not have this problem.

Principle of RANSAC: because only two points are needed to fit a straight line, we randomly select two points each time, draw the line through them, set a distance threshold, and count the points that fall within that distance of the line. This is repeated for a number of iterations, and the line with the most points inside the threshold is kept as the fitted line.
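The line-fitting procedure described above can be sketched in a few lines of plain Python. The function ransac_line, its default parameters, and the sample data are illustrative assumptions; for simplicity the sketch measures the vertical distance to the line and skips vertical candidate lines.

```python
import random


def ransac_line(points, threshold=0.5, iterations=50, seed=0):
    """Fit y = m * x + b by repeatedly sampling two points and counting inliers."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # a vertical line cannot be written as y = m * x + b
        m = (y2 - y1) / (x2 - x1)
        b = y1 - m * x1
        # Points whose vertical distance to the candidate line is within the threshold
        inliers = [(x, y) for (x, y) in points if abs(y - (m * x + b)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = (m, b), inliers
    return best_model, best_inliers


# Ten points on y = 2x + 1 plus three gross outliers
points = [(x, 2 * x + 1) for x in range(10)]
points += [(2, 30), (5, -20), (8, 40)]

model, inliers = ransac_line(points)
print(model)         # (2.0, 1.0)
print(len(inliers))  # 10
```

cv2.findHomography with cv2.RANSAC follows the same idea, except that each sample consists of four point pairs and the model is a homography instead of a line.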

2.4 drawMatches(self, imageA, imageB, kpsA, kpsB, matches, status)

This function returns a visualization of the result: it places the two pictures side by side and draws a line between each pair of feature points that satisfies the matching conditions.

    # Initialize the visualization picture and connect the A and B pictures together
    (hA, wA) = imageA.shape[:2]
    (hB, wB) = imageB.shape[:2]
    vis = np.zeros((max(hA, hB), wA + wB, 3), dtype="uint8")
    vis[0:hA, 0:wA] = imageA
    vis[0:hB, wA:] = imageB

    # Joint traversal to draw matching pairs
    for ((trainIdx, queryIdx), s) in zip(matches, status):
        # When the point pair match is successful, draw it on the visualization
        if s == 1:
            # Draw a match
            ptA = (int(kpsA[queryIdx][0]), int(kpsA[queryIdx][1]))
            ptB = (int(kpsB[trainIdx][0]) + wA, int(kpsB[trainIdx][1]))
            cv2.line(vis, ptA, ptB, (0, 255, 0), 1)

    # Return visualization results
    return vis
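The key detail in drawMatches is the horizontal offset: picture B is drawn to the right of picture A, so the x coordinate of every point in B is shifted by the width of A. A minimal sketch of just this endpoint computation, in plain Python with a hypothetical helper match_endpoints and made-up coordinates:

```python
def match_endpoints(kps_a, kps_b, matches, status, width_a):
    """Return the line endpoints that drawMatches would draw for the inlier pairs."""
    segments = []
    for (train_idx, query_idx), s in zip(matches, status):
        if s == 1:
            pt_a = (int(kps_a[query_idx][0]), int(kps_a[query_idx][1]))
            # Picture B sits to the right of picture A, so shift x by A's width
            pt_b = (int(kps_b[train_idx][0]) + width_a, int(kps_b[train_idx][1]))
            segments.append((pt_a, pt_b))
    return segments


kps_a = [(12.7, 30.2), (100.0, 45.9)]  # assumed keypoint coordinates in picture A
kps_b = [(5.5, 31.0), (90.1, 44.0)]    # assumed keypoint coordinates in picture B
matches = [(0, 0), (1, 1)]             # stored as (trainIdx, queryIdx)
status = [1, 0]                        # the second pair was rejected by RANSAC

segments = match_endpoints(kps_a, kps_b, matches, status, width_a=400)
print(segments)  # [((12, 30), (405, 31))]
```

In the real method, status is the mask returned by cv2.findHomography, so only the pairs that RANSAC accepted as inliers are drawn.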

Calling drawMatches produces the following visualization:

2.5 stitch(self, images, ratio=0.75, reprojThresh=4.0,showMatches=False)

    # Stitching function
    def stitch(self, images, ratio=0.75, reprojThresh=4.0, showMatches=False):
        # Unpack the input pictures
        (imageB, imageA) = images
        # Detect SIFT feature points in pictures A and B and compute their descriptors
        (kpsA, featuresA) = self.detectAndDescribe(imageA)
        (kpsB, featuresB) = self.detectAndDescribe(imageB)

        # Match the feature points of the two pictures and return the matching results
        M = self.matchKeypoints(kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh)

        # If the result is empty, there are not enough matching feature points: exit
        if M is None:
            return None

        # Otherwise, unpack the matching results
        # H is the 3 x 3 perspective transformation matrix
        (matches, H, status) = M
        # Warp picture A; result is the transformed picture
        result = cv2.warpPerspective(imageA, H, (imageA.shape[1] + imageB.shape[1], imageA.shape[0]))
        self.cv_show('result', result)
        # Paste picture B at the left end of the result picture
        result[0:imageB.shape[0], 0:imageB.shape[1]] = imageB
        self.cv_show('result', result)
        # Check whether the matches should be visualized
        if showMatches:
            # Generate the picture showing the matches
            vis = self.drawMatches(imageA, imageB, kpsA, kpsB, matches, status)
            # Return the stitched result and the visualization
            return (result, vis)

        # Return the stitched result
        return result

Picture A after the perspective transformation:
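The output canvas passed to cv2.warpPerspective is as wide as both pictures together and as tall as picture A. One way to check whether the warped picture fits on that canvas is to project the four corners of picture A through H. The sketch below does this in plain Python; the picture sizes, the matrices, and the helper names are assumptions chosen for illustration.

```python
def apply_homography(H, point):
    """Map a point through H, dividing by the third homogeneous coordinate."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def projected_corners(H, width, height):
    """Project the four corner pixels of a width x height picture through H."""
    corners = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    return [apply_homography(H, c) for c in corners]


def fits_canvas(points, canvas_w, canvas_h):
    """True when every projected point lies inside the output canvas."""
    return all(0 <= x < canvas_w and 0 <= y < canvas_h for x, y in points)


# Assumed sizes: both pictures are 400 x 300 (width x height)
w_a, h_a, w_b = 400, 300, 400
canvas_w, canvas_h = w_a + w_b, h_a  # same canvas size as in stitch()

# Assumed H: picture A lands 250 pixels to the right of picture B's origin
H_shift = [[1.0, 0.0, 250.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(fits_canvas(projected_corners(H_shift, w_a, h_a), canvas_w, canvas_h))  # True

# Assumed H with an extra upward shift of 20 pixels: the top rows are cut off
H_up = [[1.0, 0.0, 250.0], [0.0, 1.0, -20.0], [0.0, 0.0, 1.0]]
print(fits_canvas(projected_corners(H_up, w_a, h_a), canvas_w, canvas_h))  # False
```

When the check fails, parts of the warped picture fall outside the canvas and are clipped, which is a known limitation of this simple fixed-size canvas.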

Running the main program

    if __name__ == '__main__':
        # Read the pictures to stitch
        imageA = cv2.imread("left_01.png")
        imageB = cv2.imread("right_01.png")

        # Stitch the pictures into a panorama
        stitcher = Stitcher()
        (result, vis) = stitcher.stitch([imageA, imageB], showMatches=True)

        # Show all pictures
        cv2.imshow("Image A", imageA)
        cv2.imshow("Image B", imageB)
        cv2.imshow("Keypoint Matches", vis)
        cv2.imshow("Result", result)
        cv2.waitKey(0)
        cv2.destroyAllWindows()

The final stitched picture: