
Parameterization

1. Why parameterize?

To eliminate redundant code: the same test logic does not have to be rewritten for every set of input data.

2. What is parameterization?

Definition: test parameters are supplied dynamically, as needed, instead of being hard-coded in each test.

3. When to use parameterization

Scenario: the same business logic has to be tested with different sets of test data. Implementation: install the third-party unittest extension parameterized (pip install parameterized).

Steps:

1. Import the package: from parameterized import parameterized

2. Decorate the test function with @parameterized.expand(data), where data is a list

3. Declare parameters in the test function to receive the values passed in

Syntax:

1. Single parameter: the data is a plain list, e.g. [1, 2, 3]

2. Multiple parameters: the data is a list of tuples, e.g. [(1, 2, 3), (2, 3, 4)]

3. Each value (or each tuple) is passed to the parameters declared in the test function. Note: the number of parameters must equal the number of values in each tuple
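As a standard-library comparison (this is unittest's built-in `subTest`, not the `parameterized` API), the same two data shapes can be driven through one test method each. The class and test names are illustrative; the data mirrors the syntax rules above.

```python
import unittest


def add_data(x, y):
    return x + y


# Single parameter: a plain list of values
SINGLE = [1, 2, 3]
# Multiple parameters: a list of tuples, one tuple per case
MULTI = [(1, 2, 3), (2, 3, 5)]


class TestAddSubTest(unittest.TestCase):
    def test_single_parameter(self):
        for value in SINGLE:
            # subTest reports each failing value separately
            with self.subTest(value=value):
                self.assertGreater(value, 0)

    def test_multiple_parameters(self):
        # Each tuple unpacks into exactly three names; a tuple of a different
        # length would raise ValueError, matching the count rule above
        for x, y, expected in MULTI:
            with self.subTest(x=x, y=y):
                self.assertEqual(add_data(x, y), expected)
```

Run it with `python -m unittest`. Unlike `parameterized.expand`, `subTest` keeps every case inside one method, so the runner counts one test per method rather than one per tuple.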

Code for the parameterized test:

Code in data/data.py:

```python
def get_data():
    # Each tuple is (x, y, expected sum)
    return [(1, 2, 3), (2, 3, 5), (3, 4, 7), (4, 5, 9)]
```

Code in the .py file under the TestCase package:

```python
# Import packages
import unittest

from parameterized import parameterized

from data.data import get_data


# Function under test
def add_data(x, y):
    return x + y


# Test class
class TestAdd(unittest.TestCase):

    # parameterized.expand generates one test per tuple returned by get_data()
    @parameterized.expand(get_data())
    def testAdd(self, x, y, expected):
        result = add_data(x, y)
        self.assertEqual(result, expected)
        print(result)
```

Execution result:

```
Ran 4 tests in 0.011s

OK
3
5
7
9

Process finished with exit code 0
```
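Four tests run because `parameterized.expand` turns one decorated function into one test method per tuple. The sketch below imitates that idea with the standard library only; the helper `_make_test` and the generated names `testAdd_0`, `testAdd_1`, and so on are hypothetical, and the real library's naming and internals differ.

```python
import unittest


def get_data():
    # Same data as data/data.py above
    return [(1, 2, 3), (2, 3, 5), (3, 4, 7), (4, 5, 9)]


def add_data(x, y):
    return x + y


class TestAdd(unittest.TestCase):
    pass


def _make_test(x, y, expected):
    # Hypothetical helper: the closure freezes this tuple's values
    def test(self):
        self.assertEqual(add_data(x, y), expected)
    return test


# Hypothetical stand-in for @parameterized.expand: attach one method per tuple
for index, (x, y, expected) in enumerate(get_data()):
    setattr(TestAdd, "testAdd_{}".format(index), _make_test(x, y, expected))
```

The loader now finds four separate test methods on `TestAdd`, which is why a data-driven test reports one result per data row.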

Skipping test cases

1. Skip unconditionally: mark the test function so that it is always skipped

@unittest.skip('reason')

Scenario: typically used for test cases whose feature has not been implemented yet

Execution code:

```python
# Import packages
import unittest

from parameterized import parameterized

from data.data import get_data


# Function under test
def add_data(x, y):
    return x + y


# Test class
class TestAdd(unittest.TestCase):

    # One generated test per tuple returned by get_data()
    @parameterized.expand(get_data())
    def testAdd01(self, x, y, expected):
        result = add_data(x, y)
        self.assertEqual(result, expected)
        print(result)

    @unittest.skip("This function will be launched in the next version")
    def testAdd02(self):
        """This function will be launched in the next version"""
        pass
```

Execution result:

```
3
5
7
9

Skipped: This function will be launched in the next version

Ran 5 tests in 0.012s

OK (skipped=1)
```
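To check skips programmatically instead of reading console output, a `unittest.TestResult` records each skipped test together with its reason in `result.skipped`. A minimal sketch; the class and method names are illustrative.

```python
import unittest


class TestSkip(unittest.TestCase):
    def test_add(self):
        self.assertEqual(1 + 2, 3)

    @unittest.skip("This function will be launched in the next version")
    def test_next_version(self):
        # Not executed: the runner records a skip instead
        self.fail("should not run")


result = unittest.TestResult()
unittest.defaultTestLoader.loadTestsFromTestCase(TestSkip).run(result)

# result.skipped holds (test, reason) pairs; skipped tests still count in testsRun
for test, reason in result.skipped:
    print(test.id(), "->", reason)
```

This is also why the output above says "Ran 5 tests" with "skipped=1": a skipped test is counted as run.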

2. Skip conditionally: the test function is skipped only when the condition is met

@unittest.skipIf(condition, 'reason')

Scenario: when the condition holds, the test is not executed; for example, a feature that becomes obsolete once a specified version is reached

Execution code:

```python
# Import packages
import unittest

from parameterized import parameterized

from data.data import get_data

# Current product version, used as the skip condition below
VERSION = 30


# Function under test
def add_data(x, y):
    return x + y


# Test class
class TestAdd(unittest.TestCase):

    # One generated test per tuple returned by get_data()
    @parameterized.expand(get_data())
    def testAdd01(self, x, y, expected):
        result = add_data(x, y)
        self.assertEqual(result, expected)
        print(result)

    @unittest.skip("This function will be launched in the next version")
    def testAdd02(self):
        """This function will be launched in the next version"""
        pass

    # This reuses the name testAdd02, so it replaces the method above
    # (hence only 5 tests run); give it a distinct name to keep both
    @unittest.skipIf(VERSION > 25, "This function has failed")
    def testAdd02(self):
        """This function has failed"""
        print("testAdd02")
```

Execution result:

```
3
5
7
9

Skipped: This function has failed

Ran 5 tests in 0.006s

OK (skipped=1)
```
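`skipIf` has an inverse, `unittest.skipUnless`, which skips unless the condition is true. Both conditions are evaluated once, when the class body is executed, not each time the test runs. A minimal sketch reusing the example value `VERSION = 30`; the class and method names are illustrative.

```python
import unittest

VERSION = 30  # same example value as above


class TestConditionalSkip(unittest.TestCase):
    # Skipped because VERSION > 25 is true
    @unittest.skipIf(VERSION > 25, "This function has failed")
    def test_old_feature(self):
        self.fail("should not run while VERSION > 25")

    # skipUnless is the inverse: this runs because VERSION > 25 is true
    @unittest.skipUnless(VERSION > 25, "Requires version 26 or later")
    def test_new_feature(self):
        self.assertGreater(VERSION, 25)


result = unittest.TestResult()
unittest.defaultTestLoader.loadTestsFromTestCase(TestConditionalSkip).run(result)
```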

Tip:

Both decorators can be applied to individual test methods or to whole test classes.

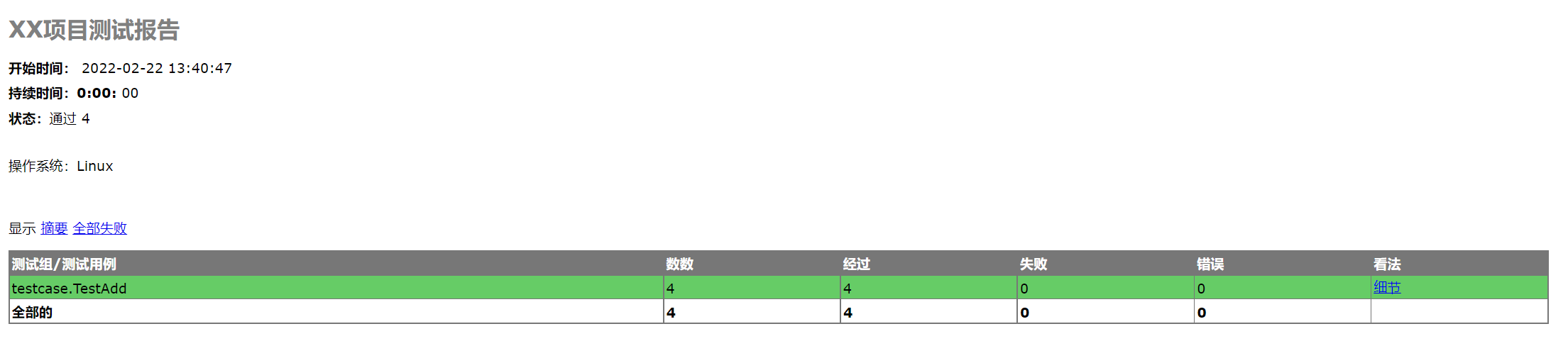
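Applied to a class, `@unittest.skip` marks every test in that class as skipped. A minimal sketch with an illustrative class:

```python
import unittest


@unittest.skip("Whole class disabled for this release")
class TestDisabled(unittest.TestCase):
    def test_one(self):
        self.fail("not executed")

    def test_two(self):
        self.fail("not executed")


result = unittest.TestResult()
unittest.defaultTestLoader.loadTestsFromTestCase(TestDisabled).run(result)
```

Both tests are reported as skipped with the class-level reason.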

Generating an HTML test report (must master)

HTML report: produced by HTMLTestRunner, a third-party module adapted from unittest's TextTestRunner

Operation:

1. Import the packages

2. Define the test suite

3. Instantiate the HTMLTestRunner class and call its run method to execute the test suite
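Because HTMLTestRunner follows the TextTestRunner pattern, the three operations can be tried with the standard library alone: build a suite, hand the runner a stream, call `run()`. This sketch writes a plain-text report to an in-memory `io.StringIO` rather than an HTML file, and uses `loadTestsFromTestCase` in place of `discover` so it runs without a project layout.

```python
# Step 1: import the packages
import io
import unittest


class TestAdd(unittest.TestCase):
    def test_add(self):
        self.assertEqual(1 + 2, 3)


# Step 2: define the test suite (discover() would collect files from a folder instead)
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestAdd)

# Step 3: give the runner a stream and execute the suite with run()
stream = io.StringIO()
result = unittest.TextTestRunner(stream=stream, verbosity=2).run(suite)
report = stream.getvalue()  # the text "report" the runner wrote
```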

Steps:

1. Copy the HTMLTestRunner.py file into the specified project directory

2. Import it: from HTMLTestRunner import HTMLTestRunner

3. Define the test suite:

```python
suite = unittest.defaultTestLoader.discover("./", pattern="test02*.py")
```

4. Open the file stream where the report will be stored, instantiate the HTMLTestRunner class with it, and execute the run method:

```python
# Note: to generate the HTML report, the file must be opened with "wb" (binary write mode)
with open("../report/report.html", "wb") as f:
    HTMLTestRunner(stream=f).run(suite)
```
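Why binary mode matters: a file opened with `"wb"` accepts `bytes`, while a text-mode file (`"w"`) raises `TypeError` when given `bytes`. The sketch assumes the report arrives as UTF-8 encoded bytes, which is an assumption about the HTMLTestRunner copy in use; the file names are illustrative.

```python
import os
import tempfile

# Assumption: the report is produced as UTF-8 encoded bytes
data = "<html><body>Test report</body></html>".encode("utf-8")
folder = tempfile.mkdtemp()

# Binary mode ("wb") accepts bytes
binary_path = os.path.join(folder, "report.html")
with open(binary_path, "wb") as f:
    f.write(data)

# Text mode ("w") only accepts str, so writing bytes fails
try:
    with open(os.path.join(folder, "text_mode.html"), "w", encoding="utf-8") as f:
        f.write(data)
    text_mode_error = None
except TypeError as exc:
    text_mode_error = exc
```

If your copy of HTMLTestRunner writes `str` instead of `bytes`, the opposite applies and the file must be opened with `"w"`.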

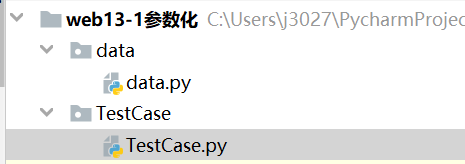

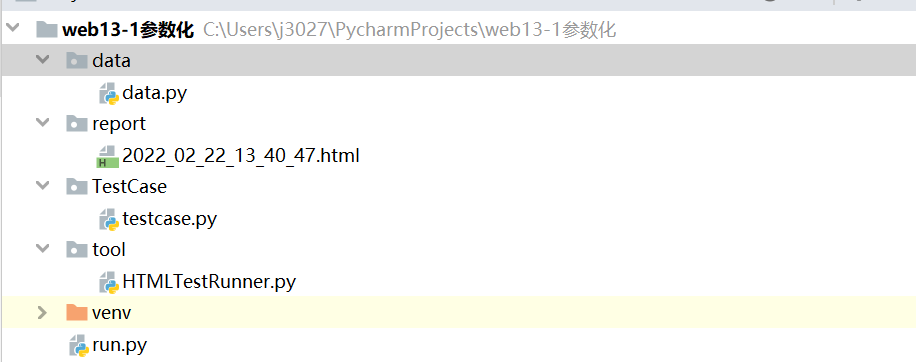

Execution code and file structure:

```python
import time
import unittest

from tool.HTMLTestRunner import HTMLTestRunner

# Collect the test suite from every test*.py file under ./TestCase
suite = unittest.defaultTestLoader.discover("./TestCase", pattern="test*.py")

# Open a report file named after the current time and write the HTML report to it
with open("./report/{}.html".format(time.strftime('%Y_%m_%d_%H_%M_%S')), "wb") as f:
    # Instantiate the HTMLTestRunner class and execute the suite
    HTMLTestRunner(stream=f, verbosity=2, title="XX Project test report",
                   description="Operating system: Linux").run(suite)
```

Execution result:

```
ok testAdd01_0 (testcase.TestAdd)
<_io.TextIOWrapper name='<stderr>' mode='w' encoding='UTF-8'>
ok testAdd01_1 (testcase.TestAdd)
TimeElapsed: 0:00:00
ok testAdd01_2 (testcase.TestAdd)
ok testAdd01_3 (testcase.TestAdd)
```
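The timestamped filename keeps each run from overwriting the previous report. Note that `open()` does not create missing folders, so `./report` has to exist beforehand. A sketch with a hypothetical helper `report_path` that creates the folder first (writing into a temporary directory here):

```python
import os
import tempfile
import time


def report_path(directory):
    """Hypothetical helper: timestamped .html path, creating the folder if needed."""
    os.makedirs(directory, exist_ok=True)  # open() will not create it for us
    stamp = time.strftime("%Y_%m_%d_%H_%M_%S")  # same format as the example
    return os.path.join(directory, "{}.html".format(stamp))


path = report_path(os.path.join(tempfile.mkdtemp(), "report"))
with open(path, "wb") as f:
    f.write(b"<html></html>")
```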

Example of a generated report:

Difference between open and with open:

1. Common ground: both open the file

2. Difference: with open = open + close; the file is closed automatically when the with block exits, even if an exception is raised
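A minimal sketch of the difference, using a temporary file: with a bare `open()` you close the file yourself (here in a `finally` block), while `with open()` closes it when the block exits.

```python
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "demo.txt")

# open(): closing the file is your job, so close it in finally
f = open(path, "w", encoding="utf-8")
try:
    f.write("hello")
finally:
    f.close()

# with open(): the file is closed automatically when the block exits
with open(path, encoding="utf-8") as g:
    content = g.read()
```

After both blocks, `f.closed` and `g.closed` are both `True`; the `with` form simply does the closing for you.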