This article is shared from the Huawei Cloud community post "WeNet Cloud Inference Deployment Code Analysis", author: xiaoye0829.

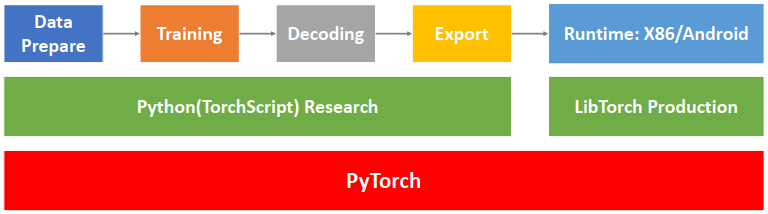

WeNet is an open-source end-to-end ASR toolkit. Compared with other open-source speech projects such as ESPnet, its biggest advantage is that it provides a complete toolchain from training to deployment, which makes it easier to put ASR services into production. As shown in Figure 1, the WeNet toolkit depends entirely on the PyTorch ecosystem: TorchScript for model development, Torchaudio for on-the-fly feature extraction, DistributedDataParallel for distributed training, torch JIT (Just In Time) for model export, and LibTorch as the production runtime. This series analyzes the cloud inference deployment code of WeNet.

Figure 1: WeNet system design [2]

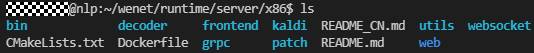

1. Code structure

The WeNet cloud inference and deployment code is located under wenet/runtime/server/x86 and is written in C++. It is organized as follows:

- The code for reading audio files and extracting features is in the frontend folder.

- The code for loading the end-to-end model, endpoint detection, and speech decoding is in the decoder folder. WeNet supports CTC prefix beam search and WFST-based CTC beam search. The latter borrows heavily from Kaldi, and the related code is in the kaldi folder.

- For serving, WeNet implements two server/client pairs, one based on WebSocket and one based on gRPC. The WebSocket implementation is in the websocket folder and the gRPC implementation is in the grpc folder. The main entry points of both implementations are in the bin folder.

- Auxiliary code for logging, timing, and string processing is in the utils folder.

WeNet provides CMakeLists.txt and a Dockerfile, so that users can easily compile the project and build images.

2. Front end: frontend folder

1) Reading audio files

WeNet only supports WAV audio with a 44-byte header. The header is defined by the WavHeader struct, which holds the audio format, number of channels, sampling rate, and other metadata. The WavReader class reads the audio file: after opening it with fopen, WavReader reads sizeof(WavHeader) bytes (that is, 44 bytes), determines the size of the audio data from the metadata in WavHeader, calls fread to read the audio data into a buffer, and finally converts the samples to float with static_cast.

    struct WavHeader {
      char riff[4];  // "riff"
      unsigned int size;
      char wav[4];  // "WAVE"
      char fmt[4];  // "fmt "
      unsigned int fmt_size;
      uint16_t format;
      uint16_t channels;
      unsigned int sample_rate;
      unsigned int bytes_per_second;
      uint16_t block_size;
      uint16_t bit;
      char data[4];  // "data"
      unsigned int data_size;
    };

There is a risk that if the meta information stored in the WavHeader is incorrect, it will affect the correct reading of voice data.
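
The reading procedure described above can be sketched roughly as follows. This is a minimal illustration, not WeNet's WavReader: the names Wav44Header and ReadWav16 are hypothetical, and the sketch assumes a canonical 44-byte header, 16-bit PCM samples, and a little-endian host. It also shows one way to reduce the risk mentioned above, by checking the magic strings and by trusting the number of samples fread actually returns rather than data_size alone.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical mirror of the 44-byte header, using fixed-width types so that
// the layout does not depend on the platform's `unsigned int` size.
struct Wav44Header {
  char riff[4];
  uint32_t size;
  char wav[4];
  char fmt[4];
  uint32_t fmt_size;
  uint16_t format;
  uint16_t channels;
  uint32_t sample_rate;
  uint32_t bytes_per_second;
  uint16_t block_size;
  uint16_t bit;
  char data[4];
  uint32_t data_size;
};
static_assert(sizeof(Wav44Header) == 44, "header must be exactly 44 bytes");

// Reads a 16-bit PCM WAV file into float samples. Returns false if the file
// cannot be opened or the header does not look like a canonical PCM header.
bool ReadWav16(const std::string& path, Wav44Header* header,
               std::vector<float>* samples) {
  assert(header != nullptr && samples != nullptr);
  std::FILE* fp = std::fopen(path.c_str(), "rb");
  if (fp == nullptr) return false;
  const bool ok = std::fread(header, sizeof(Wav44Header), 1, fp) == 1 &&
                  std::memcmp(header->riff, "RIFF", 4) == 0 &&
                  std::memcmp(header->wav, "WAVE", 4) == 0 &&
                  std::memcmp(header->data, "data", 4) == 0 &&
                  header->bit == 16;
  if (ok) {
    std::vector<int16_t> pcm(header->data_size / sizeof(int16_t));
    // fread returns fewer items than requested if data_size is too large.
    const std::size_t n =
        std::fread(pcm.data(), sizeof(int16_t), pcm.size(), fp);
    samples->clear();
    samples->reserve(n);
    for (std::size_t i = 0; i < n; ++i) {
      samples->push_back(static_cast<float>(pcm[i]));
    }
  }
  std::fclose(fp);
  return ok;
}
```

A caller would pass a file path plus pointers to a header and a sample vector, and check the return value before using either.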

2) Feature extraction

The feature used by WeNet is fbank, configured through the FeaturePipelineConfig struct. The default frame length is 25 ms and the frame shift is 10 ms; the sampling rate and the fbank dimension are supplied by the user.
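
To make the framing parameters concrete, the following sketch converts the 25 ms frame length and 10 ms frame shift into sample counts and computes how many complete frames an utterance yields. The function names are hypothetical, and the sketch assumes that incomplete frames at the end are dropped rather than padded. For example, one second of 16 kHz audio (16000 samples) gives a 400-sample window, a 160-sample shift, and 98 frames.

```cpp
#include <cassert>

// Converts a duration in milliseconds to a number of samples, e.g. 25 ms at
// 16000 Hz is 400 samples. Assumes the sample rate is a multiple of 1000.
int MsToSamples(int sample_rate, int duration_ms) {
  assert(sample_rate > 0 && duration_ms > 0);
  return sample_rate / 1000 * duration_ms;
}

// Number of complete frames that fit into num_samples. Trailing samples that
// do not fill a whole frame are ignored (an assumption of this sketch).
int NumFrames(int num_samples, int sample_rate, int frame_length_ms = 25,
              int frame_shift_ms = 10) {
  const int frame_length = MsToSamples(sample_rate, frame_length_ms);
  const int frame_shift = MsToSamples(sample_rate, frame_shift_ms);
  if (num_samples < frame_length) return 0;
  return 1 + (num_samples - frame_length) / frame_shift;
}
```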

Feature extraction is implemented by the FeaturePipeline class. To support both streaming and non-streaming speech recognition, FeaturePipeline has an input_finished_ member that marks whether the input has ended, and a set_input_finished() member function that sets it.

The extracted fbank features are placed in feature_queue_, whose type is BlockingQueue<std::vector<float>>. BlockingQueue is a blocking queue implemented by WeNet. Its capacity must be provided at initialization; features are added to the queue with Push() and read from it with Pop():

- When the number of features in feature_queue_ reaches the capacity, the thread calling Push() is suspended until feature_queue_.Pop() frees up space.

- When feature_queue_ is empty, the thread calling Pop() is suspended until feature_queue_.Push() adds a feature.

Thread suspension and wake-up are implemented with the thread synchronization primitives std::mutex and std::condition_variable from the C++ standard library.

Thread synchronization is also used in the AcceptWaveform and ReadOne member functions. AcceptWaveform puts the fbank features extracted from the audio data into feature_queue_, and ReadOne takes features out of feature_queue_. This is the classic producer-consumer pattern.
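
The blocking behaviour described above can be sketched with std::mutex and std::condition_variable as follows. This is an illustrative bounded queue, not WeNet's BlockingQueue; the class name BoundedBlockingQueue and the Size() helper are assumptions made for the example.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <utility>
#include <vector>

// Simplified bounded blocking queue (illustrative only).
template <typename T>
class BoundedBlockingQueue {
 public:
  explicit BoundedBlockingQueue(std::size_t capacity) : capacity_(capacity) {
    assert(capacity > 0);
  }

  // Blocks while the queue is full, then appends the value.
  void Push(T value) {
    std::unique_lock<std::mutex> lock(mutex_);
    not_full_.wait(lock, [this] { return queue_.size() < capacity_; });
    queue_.push(std::move(value));
    not_empty_.notify_one();  // Wake one thread waiting in Pop().
  }

  // Blocks while the queue is empty, then removes and returns the front.
  T Pop() {
    std::unique_lock<std::mutex> lock(mutex_);
    not_empty_.wait(lock, [this] { return !queue_.empty(); });
    T value = std::move(queue_.front());
    queue_.pop();
    not_full_.notify_one();  // Wake one thread waiting in Push().
    return value;
  }

  std::size_t Size() const {
    std::lock_guard<std::mutex> lock(mutex_);
    return queue_.size();
  }

 private:
  const std::size_t capacity_;
  mutable std::mutex mutex_;
  std::condition_variable not_full_;
  std::condition_variable not_empty_;
  std::queue<T> queue_;
};
```

In a producer-consumer setup, one thread would call Push() with each extracted feature frame while the decoding thread calls Pop(); whichever side runs ahead simply waits on its condition variable.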

3. Decoder: decoder folder

1) TorchAsrModel

The model on disk is deserialized with torch::jit::load, which returns a ScriptModule object.

    torch::jit::script::Module model = torch::jit::load(model_path);

2) SearchInterface

The decoding methods supported by WeNet inference all inherit from the base class SearchInterface. To add a decoding algorithm, you need to inherit from SearchInterface and implement all of its pure virtual functions, which are:

    // Implementation of the decoding algorithm
    virtual void Search(const torch::Tensor& logp) = 0;
    // Reset the decoding process
    virtual void Reset() = 0;
    // End the decoding process
    virtual void FinalizeSearch() = 0;
    // Decoding algorithm type, returns a SearchType enumeration constant
    virtual SearchType Type() const = 0;
    // Returns the decoded inputs
    virtual const std::vector<std::vector<int>>& Inputs() const = 0;
    // Returns the decoded outputs
    virtual const std::vector<std::vector<int>>& Outputs() const = 0;
    // Returns the likelihood of each decoded output
    virtual const std::vector<float>& Likelihood() const = 0;
    // Returns the timestamps of each decoded output
    virtual const std::vector<std::vector<int>>& Times() const = 0;

At present, WeNet provides only two subclasses of SearchInterface, that is, two decoding algorithms, defined in the classes CtcPrefixBeamSearch and CtcWfstBeamSearch.
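
To illustrate the pattern of plugging a new algorithm into such a base class, here is a reduced sketch. It is not WeNet code: SimpleSearchInterface is a hypothetical stand-in with only three methods, log-probabilities are passed as nested vectors instead of a torch::Tensor, and the algorithm shown is plain greedy CTC decoding (not the prefix beam search WeNet implements), with the blank token assumed to have id 0.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, reduced stand-in for SearchInterface. Log-probabilities are
// given as [frames][vocab] vectors instead of a torch::Tensor.
class SimpleSearchInterface {
 public:
  virtual ~SimpleSearchInterface() = default;
  virtual void Search(const std::vector<std::vector<float>>& logp) = 0;
  virtual void Reset() = 0;
  virtual const std::vector<int>& Output() const = 0;
};

// Greedy CTC decoding: take the best token of each frame, merge consecutive
// repeats, and drop blanks.
class GreedyCtcSearch : public SimpleSearchInterface {
 public:
  void Search(const std::vector<std::vector<float>>& logp) override {
    for (const std::vector<float>& frame : logp) {
      assert(!frame.empty());
      int best = 0;
      for (std::size_t i = 1; i < frame.size(); ++i) {
        if (frame[i] > frame[best]) best = static_cast<int>(i);
      }
      if (best != kBlank && best != prev_) output_.push_back(best);
      prev_ = best;  // Kept across calls so chunked input decodes the same.
    }
  }

  void Reset() override {
    output_.clear();
    prev_ = kBlank;
  }

  const std::vector<int>& Output() const override { return output_; }

 private:
  static constexpr int kBlank = 0;  // Assumed blank id.
  int prev_ = kBlank;
  std::vector<int> output_;
};
```

Because the previous token is stored in the object, Search() can be called once per chunk in a streaming setting, and Reset() starts a new utterance.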

3) CtcEndpoint

WeNet supports voice endpoint detection and provides a rule-based implementation. Users can configure the rules through the CtcEndpointConfig and CtcEndpointRule structs. WeNet has three default rules:

- If 5 s of silence is detected, an endpoint is considered detected;

- If 1 s of silence is detected after some speech has been decoded, an endpoint is considered detected;

- If 20 s of speech has been decoded, an endpoint is considered detected.

Once an endpoint is detected, decoding ends. In addition, WeNet treats a decoded blank as silence.
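
The three default rules can be expressed as data plus one small predicate, as in the sketch below. The struct and function names (EndpointRule, RuleActivated, IsEndpoint) and the field names are hypothetical and chosen for this example; only the thresholds come from the list above. All durations are in milliseconds.

```cpp
#include <cassert>

// Hypothetical rule description; all durations are in milliseconds.
struct EndpointRule {
  bool must_have_decoded;    // Rule only fires after some non-blank output.
  int min_trailing_silence;  // Required silence at the end of the audio.
  int min_utterance_length;  // Required total decoded length.
};

// A rule fires only when all of its conditions hold at the same time.
bool RuleActivated(const EndpointRule& rule, bool decoded_something,
                   int trailing_silence_ms, int utterance_length_ms) {
  assert(trailing_silence_ms >= 0 && utterance_length_ms >= 0);
  return (decoded_something || !rule.must_have_decoded) &&
         trailing_silence_ms >= rule.min_trailing_silence &&
         utterance_length_ms >= rule.min_utterance_length;
}

// An endpoint is detected as soon as any of the three default rules fires.
bool IsEndpoint(bool decoded_something, int trailing_silence_ms,
                int utterance_length_ms) {
  const EndpointRule rules[] = {
      {false, 5000, 0},   // 5 s of silence, even if nothing was decoded.
      {true, 1000, 0},    // 1 s of silence after some speech was decoded.
      {false, 0, 20000},  // 20 s decoded, regardless of silence.
  };
  for (const EndpointRule& rule : rules) {
    if (RuleActivated(rule, decoded_something, trailing_silence_ms,
                      utterance_length_ms)) {
      return true;
    }
  }
  return false;
}
```

Since blanks are treated as silence, the trailing silence passed in would be the duration of the trailing run of blank frames.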

4)TorchAsrDecoder

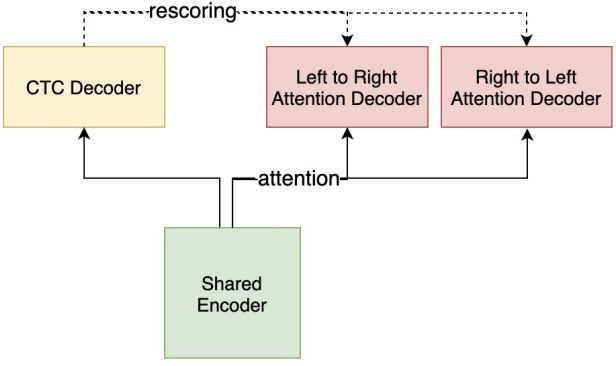

The decoder provided by WeNet is defined in the TorchAsrDecoder class. As shown in Figure 3, WeNet supports bidirectional decoding, that is, superimposing the results of decoding from left to right and decoding from right to left. After CTC beam search, the user can also choose to re score the attention.

Figure 2: WeNet decoding calculation flow [2]

Decoding parameters can be configured through the DecodeOptions structure, including the following parameters:

    struct DecodeOptions {
      int chunk_size = 16;
      int num_left_chunks = -1;
      float ctc_weight = 0.0;
      float rescoring_weight = 1.0;
      float reverse_weight = 0.0;
      CtcEndpointConfig ctc_endpoint_config;
      CtcPrefixBeamSearchOptions ctc_prefix_search_opts;
      CtcWfstBeamSearchOptions ctc_wfst_search_opts;
    };

Here, ctc_weight is the weight of the CTC decoding score, rescoring_weight is the weight of the rescoring score, and reverse_weight is the weight of right-to-left decoding. The final decoding score is computed as follows:

    final_score = rescoring_weight * rescoring_score + ctc_weight * ctc_score
    rescoring_score = left_to_right_score * (1 - reverse_weight) + right_to_left_score * reverse_weight
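
As a worked example of the formula, the sketch below combines the three scores with the weights from DecodeOptions. ScoreWeights and FinalScore are hypothetical names introduced for illustration. With the default weights (ctc_weight = 0, rescoring_weight = 1, reverse_weight = 0) the final score is simply the left-to-right rescoring score.

```cpp
#include <cassert>

// Hypothetical container for the three weights, with the same defaults as in
// DecodeOptions.
struct ScoreWeights {
  float ctc_weight = 0.0f;
  float rescoring_weight = 1.0f;
  float reverse_weight = 0.0f;
};

// Combines the CTC score with the left-to-right and right-to-left rescoring
// scores, term by term as in the formula above.
float FinalScore(const ScoreWeights& w, float ctc_score,
                 float left_to_right_score, float right_to_left_score) {
  assert(w.reverse_weight >= 0.0f && w.reverse_weight <= 1.0f);
  const float rescoring_score =
      left_to_right_score * (1.0f - w.reverse_weight) +
      right_to_left_score * w.reverse_weight;
  return w.rescoring_weight * rescoring_score + w.ctc_weight * ctc_score;
}
```

For instance, with ctc_weight = 0.5, reverse_weight = 0.5, and log-scores of -5 (CTC), -2 (left to right), and -4 (right to left), the rescoring score is -3 and the final score is -3 + 0.5 * (-5) = -5.5.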

The decoding interface provided by TorchAsrDecoder is Decode(), and the rescoring interface is Rescoring(). Decode() returns the enumeration type DecodeState, which has three constants: kEndBatch, kEndpoint, and kEndFeats. They indicate, respectively, that the current batch of data has been decoded, that an endpoint has been detected, and that all features have been decoded.

To support long-form speech recognition, WeNet also provides a continuous decoding interface, ResetContinuousDecoding(). It differs from the decoder reset interface Reset() in that it records the global number of decoded speech frames and retains the state of the current feature_pipeline_.
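
One way to picture the difference between the two reset interfaces is the bookkeeping sketch below. It is not the TorchAsrDecoder implementation: FrameCounterSketch and its members are hypothetical, and it assumes a 10 ms frame shift when converting frames to time. The point is only that a continuous reset carries the global frame position forward, so timestamps in later segments stay relative to the start of the whole audio stream.

```cpp
#include <cassert>

// Hypothetical bookkeeping for decoded frames across segments.
class FrameCounterSketch {
 public:
  // Called after each decoded chunk of the current segment.
  void AddDecodedFrames(int num_frames) {
    assert(num_frames >= 0);
    segment_frames_ += num_frames;
  }

  // Full reset: forget everything, as for a brand-new audio stream.
  void Reset() {
    segment_frames_ = 0;
    global_frame_offset_ = 0;
  }

  // Continuous decoding: start a new segment but keep the global position.
  void ResetContinuousDecoding() {
    global_frame_offset_ += segment_frames_;
    segment_frames_ = 0;
  }

  // Time in ms, measured from the start of the stream, of a frame index that
  // is relative to the current segment.
  int GlobalTimeMs(int frame_in_segment) const {
    return (global_frame_offset_ + frame_in_segment) * kFrameShiftMs;
  }

 private:
  static constexpr int kFrameShiftMs = 10;  // Assumed frame shift.
  int segment_frames_ = 0;
  int global_frame_offset_ = 0;
};
```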

Because a streaming ASR service needs bidirectional streaming between client and server, WeNet implements two service interfaces that support it, based on WebSocket and gRPC respectively.

4. Based on WebSocket

1) Introduction to WebSocket

WebSocket is a network protocol built on TCP. Unlike plain HTTP, WebSocket allows the server to actively push information to the client. Once the connection is established, the client and the server can keep sending data to each other without initiating a new connection request for every message. This avoids repeated connection setup and request headers, which reduces bandwidth consumption and improves performance.

WebSocket supports data transmission in both text and binary formats.

2) WebSocket interface of WeNet

WeNet uses the WebSocket implementation from the Boost library and defines two classes: WebSocketClient (client) and WebSocketServer (server).

In streaming ASR, WebSocketClient sends data to WebSocketServer in three steps: 1) send the start signal and the decoding configuration; 2) send the binary audio data as a PCM byte stream; 3) send the stop signal. As WebSocketClient::SendStartSignal() and WebSocketClient::SendEndSignal() show, the start signal, decoding configuration, and stop signal are wrapped in JSON strings and sent as WebSocket text messages, while the PCM byte stream is sent as WebSocket binary messages.

    void WebSocketClient::SendStartSignal() {
      // TODO(Binbin Zhang): Add sample rate and other setting support
      json::value start_tag = {{"signal", "start"},
                               {"nbest", nbest_},
                               {"continuous_decoding", continuous_decoding_}};
      std::string start_message = json::serialize(start_tag);
      this->SendTextData(start_message);
    }

    void WebSocketClient::SendEndSignal() {
      json::value end_tag = {{"signal", "end"}};
      std::string end_message = json::serialize(end_tag);
      this->SendTextData(end_message);
    }

After receiving data, WebSocketServer first checks whether it is in text or binary format. Text data is parsed as JSON, and the decoding configuration, start, or stop is handled according to the parsed result; this logic is defined in ConnectionHandler::OnText(). Binary data is passed to speech recognition; this logic is defined in ConnectionHandler::OnSpeechData().
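
The three-step protocol can be pictured as a small state machine on the server side, sketched below. This is not the ConnectionHandler implementation: SessionSketch is a hypothetical class, JSON parsing is omitted (OnText receives the already extracted value of the "signal" field), and rejecting out-of-order messages is a design choice of the sketch rather than documented WeNet behaviour.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical server-side session following the start / audio / end steps.
class SessionSketch {
 public:
  // Text message: returns false for an unknown or out-of-order signal.
  bool OnText(const std::string& signal) {
    if (signal == "start" && state_ == State::kIdle) {
      state_ = State::kStreaming;
      return true;
    }
    if (signal == "end" && state_ == State::kStreaming) {
      state_ = State::kFinished;
      return true;
    }
    return false;
  }

  // Binary message: raw 16-bit PCM bytes, accepted only while streaming.
  bool OnBinary(const void* data, std::size_t num_bytes) {
    assert(data != nullptr || num_bytes == 0);
    if (state_ != State::kStreaming || num_bytes % sizeof(int16_t) != 0) {
      return false;
    }
    const std::size_t old_size = samples_.size();
    samples_.resize(old_size + num_bytes / sizeof(int16_t));
    if (num_bytes > 0) {
      std::memcpy(samples_.data() + old_size, data, num_bytes);
    }
    return true;
  }

  bool finished() const { return state_ == State::kFinished; }
  const std::vector<int16_t>& samples() const { return samples_; }

 private:
  enum class State { kIdle, kStreaming, kFinished };
  State state_ = State::kIdle;
  std::vector<int16_t> samples_;
};
```

A real handler would feed the received samples to the feature pipeline instead of only storing them, and would send partial and final results back over the same connection.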

3) Shortcomings

With WebSocket, developers must write the message construction and parsing code by hand in both WebSocketClient and WebSocketServer, which is error-prone. In addition, as the code above shows, the service serializes and deserializes data as JSON, which is less efficient than protobuf.

The gRPC framework provides a better solution to these shortcomings.

5. gRPC based

1) Introduction to gRPC

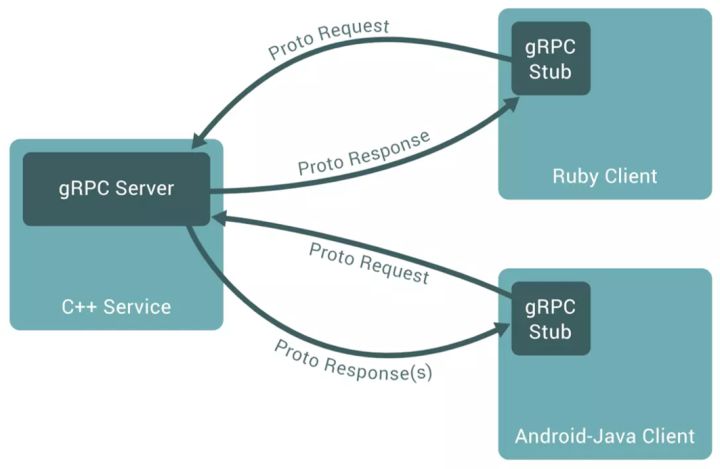

gRPC is an open-source RPC framework launched by Google. It uses HTTP/2 as the network transport protocol and protobuf as the data exchange format, which gives it higher data transmission efficiency. With gRPC, developers only need to define the RPC service and its messages in a .proto file; the message construction and parsing code is then generated automatically by the code generation tool (the protocol compiler) provided by gRPC, so that developers can focus on the interface design itself.

When making an RPC call, the gRPC Stub (client) sends the Request message defined in the .proto file to the gRPC Server (server). After the gRPC Server processes the Request, it returns the result to the gRPC Stub through the Response message defined in the .proto file.

gRPC is cross-language and supports interaction between microservices written in different languages. For example, the server can be implemented in C++ and the client in Ruby. The protocol compiler supports code generation in 12 languages.

Figure 3: gRPC server and gRPC stub interaction [1]

2) proto file of WeNet

The service defined by WeNet is ASR, and it contains a single Recognize method. Both the input (Request) and the output (Response) of this method are streams. Compiling the proto file with the protocol compiler produces four files: wenet.grpc.pb.h, wenet.grpc.pb.cc, wenet.pb.h, and wenet.pb.cc. Of these, wenet.pb.h/cc contain the definitions of the protobuf data format, and wenet.grpc.pb.h contains the definitions of the gRPC server and client. By including the two header files wenet.pb.h and wenet.grpc.pb.h, developers can directly use the Request and Response message classes and access their fields.

    service ASR {
      rpc Recognize (stream Request) returns (stream Response) {}
    }

    message Request {
      message DecodeConfig {
        int32 nbest_config = 1;
        bool continuous_decoding_config = 2;
      }
      oneof RequestPayload {
        DecodeConfig decode_config = 1;
        bytes audio_data = 2;
      }
    }

    message Response {
      message OneBest {
        string sentence = 1;
        repeated OnePiece wordpieces = 2;
      }
      message OnePiece {
        string word = 1;
        int32 start = 2;
        int32 end = 3;
      }
      enum Status {
        ok = 0;
        failed = 1;
      }
      enum Type {
        server_ready = 0;
        partial_result = 1;
        final_result = 2;
        speech_end = 3;
      }
      Status status = 1;
      Type type = 2;
      repeated OneBest nbest = 3;
    }

3) gRPC implementation of WeNet

The WeNet gRPC server defines the GrpcServer class, which inherits from the pure virtual base class ASR::Service declared in wenet.grpc.pb.h.

The entry function for speech recognition is GrpcServer::Recognize. It creates a GrpcConnectionHandler instance to perform speech recognition, and passes input and output through a stream object of the ServerReaderWriter class.

    Status GrpcServer::Recognize(ServerContext* context,
                                 ServerReaderWriter<Response, Request>* stream) {
      LOG(INFO) << "Get Recognize request" << std::endl;
      auto request = std::make_shared<Request>();
      auto response = std::make_shared<Response>();
      GrpcConnectionHandler handler(stream, request, response, feature_config_,
                                    decode_config_, symbol_table_, model_, fst_);
      std::thread t(std::move(handler));
      t.join();
      return Status::OK;
    }

The WeNet gRPC client defines the GrpcClient class. When establishing a connection with the server, the client needs to instantiate ASR::Stub, and realize two-way streaming communication through the stream object of ClientReaderWriter class.

    void GrpcClient::Connect() {
      channel_ = grpc::CreateChannel(host_ + ":" + std::to_string(port_),
                                     grpc::InsecureChannelCredentials());
      stub_ = ASR::NewStub(channel_);
      context_ = std::make_shared<ClientContext>();
      stream_ = stub_->Recognize(context_.get());
      request_ = std::make_shared<Request>();
      response_ = std::make_shared<Response>();
      request_->mutable_decode_config()->set_nbest_config(nbest_);
      request_->mutable_decode_config()->set_continuous_decoding_config(
          continuous_decoding_);
      stream_->Write(*request_);
    }

In grpc_client_main.cc, the client sends the audio in segments of 0.5 s. For an audio file with a sampling rate of 8 kHz, this means 4000 samples are sent each time. To reduce the amount of data transmitted and speed up transmission, the client first converts the samples from float to int16_t. After receiving the data, the server converts int16_t back to float. A float in C++ is typically 32 bits wide, so sending int16_t halves the payload.
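
The chunk arithmetic and the int16_t round trip described above can be checked with a few small helpers. The function names are hypothetical, and the sketch assumes that the float samples already hold integer values within the int16_t range, as is the case for samples read from 16-bit PCM.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Number of samples sent per chunk, e.g. 0.5 s * 8000 Hz = 4000 samples.
int SamplesPerChunk(float interval_seconds, int sample_rate) {
  assert(interval_seconds > 0.0f && sample_rate > 0);
  return static_cast<int>(interval_seconds * sample_rate);
}

// Client side: narrow float samples to int16_t before sending.
std::vector<int16_t> ToInt16(const std::vector<float>& pcm) {
  std::vector<int16_t> out;
  out.reserve(pcm.size());
  for (float sample : pcm) out.push_back(static_cast<int16_t>(sample));
  return out;
}

// Server side: widen the received int16_t samples back to float.
std::vector<float> ToFloat(const std::vector<int16_t>& pcm) {
  std::vector<float> out;
  out.reserve(pcm.size());
  for (int16_t sample : pcm) out.push_back(static_cast<float>(sample));
  return out;
}

// Bytes on the wire for one chunk of int16_t samples.
std::size_t ChunkBytes(int num_samples) {
  assert(num_samples >= 0);
  return static_cast<std::size_t>(num_samples) * sizeof(int16_t);
}
```

At 8 kHz, each 0.5 s chunk therefore carries 4000 samples in 8000 bytes, compared with 16000 bytes if the samples were sent as 32-bit floats.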

    int main(int argc, char *argv[]) {
      ...
      // Send data every 0.5 second
      const float interval = 0.5;
      const int sample_interval = interval * sample_rate;
      for (int start = 0; start < num_sample; start += sample_interval) {
        if (client.done()) {
          break;
        }
        int end = std::min(start + sample_interval, num_sample);
        // Convert to short
        std::vector<int16_t> data;
        data.reserve(end - start);
        for (int j = start; j < end; j++) {
          data.push_back(static_cast<int16_t>(pcm_data[j]));
        }
        // Send PCM data
        client.SendBinaryData(data.data(), data.size() * sizeof(int16_t));
        ...
    }

Summary

This article analyzes the cloud deployment code of WeNet and introduces its two service interfaces, based on WebSocket and gRPC.

The WeNet code is clearly structured, concise, and easy to use. It provides an end-to-end solution for speech recognition from training to deployment, which makes it much easier to bring speech recognition into production. It is an open-source speech project well worth studying.

References

[1] https://grpc.io/docs/what-is-grpc/introduction/

[2] WeNet: Production First and Production Ready End-to-End Speech Recognition Toolkit

[3] U2++: Unified Two-pass Bidirectional End-to-end Model for Speech Recognition
