1, Overview

As we all know, Redis is a high-performance in-memory data store. In high-concurrency system design it is a key component and a powerful tool for improving system performance, so a deep understanding of why Redis performs so well is increasingly important. Redis's high performance comes from many design decisions working together; this article focuses on Redis's IO model and the thread model built on top of it.

Starting from the basics of IO, we cover blocking IO, non-blocking IO and IO multiplexing. Building on IO multiplexing, we walk through several Reactor models and compare their advantages and disadvantages. With the Reactor model as a foundation, we then analyze Redis's IO model and thread model, summarize the strengths and weaknesses of the Redis thread model, and look at the multithreading scheme adopted by later versions of Redis. The focus of this article is the design thinking behind the Redis thread model: once that is clear, the rest follows naturally.

Note: the code in this article is for illustration only and is not suitable for a production environment.

2, Development history of network IO model

The network IO models commonly discussed are blocking IO, non-blocking IO, IO multiplexing, signal-driven IO and asynchronous IO. Since this article is about Redis, we focus on blocking IO, non-blocking IO and IO multiplexing, which will make the Redis network model easier to understand later.

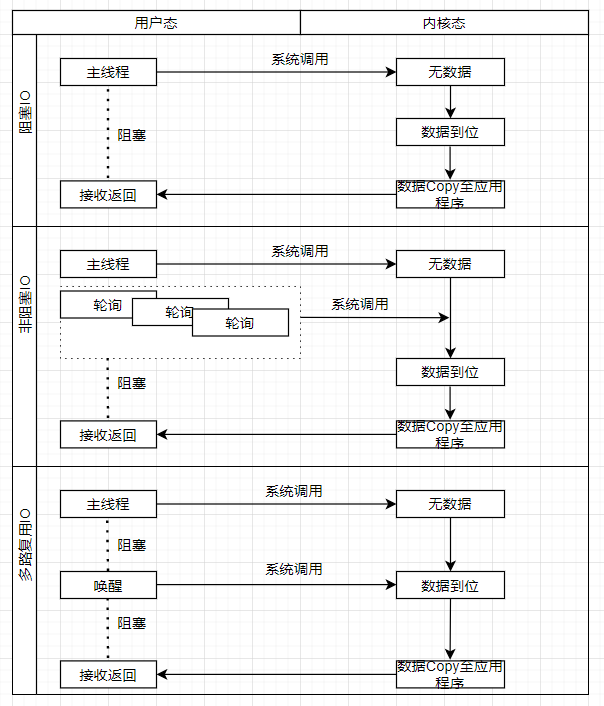

Let's look at the following picture first;

2.1 blocking IO

Blocking IO, as it is usually discussed, comes in two forms: single-thread blocking and multi-thread blocking. Two separate concepts are involved here: blocking and threading.

Blocking: the calling thread is suspended until the result of the call is available, and returns only after the result has been obtained;

Threading: the number of threads that issue the system calls.

For example, accepting a connection, reading and writing are all system calls, and in this mode each of them blocks.

2.1.1 single-thread blocking

The server handles everything on a single thread: when a client request arrives, the main thread performs the accept, read, write and other operations itself.

The following code simulates single-thread blocking mode:

import java.net.Socket;

public class BioTest {

public static void main(String[] args) throws IOException {

ServerSocket server=new ServerSocket(8081);

while(true) {

Socket socket=server.accept();

System.out.println("accept port:"+socket.getPort());

BufferedReader in=new BufferedReader(new InputStreamReader(socket.getInputStream()));

String inData=null;

try {

while ((inData = in.readLine()) != null) {

System.out.println("client port:"+socket.getPort());

System.out.println("input data:"+inData);

if("close".equals(inData)) {

socket.close();

}

}

} catch (IOException e) {

e.printStackTrace();

} finally {

try {

socket.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

}

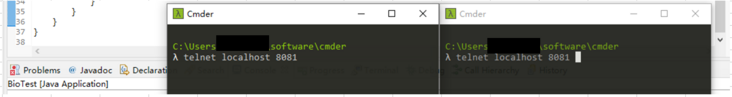

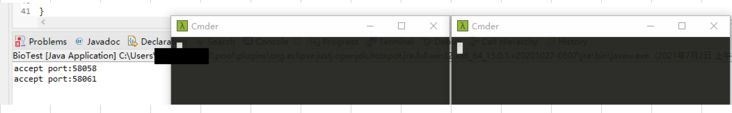

}We are going to use two clients to initiate connection requests at the same time to simulate the phenomenon of single thread blocking mode. At the same time, the connection is initiated. Through the server log, we find that the server only accepts one of the connections, and the main thread is blocked on the read method of the previous connection.

Next, we close the first connection and watch the second one. We expect the main thread to return and accept the new client connection.

The log confirms that after the first connection is closed, the request on the second connection is processed: the second connection was queued until the main thread was freed and could accept the next request, which is what we expected.

At this point we have to ask: why?

The main reason is that accept, read and write are blocking calls. While the main thread is inside one of these system calls it is blocked, so connections from other clients cannot be served.

This makes the weakness of the model obvious: the server can handle only one connection at a time, the CPU is underused, and performance is poor. How can we take advantage of multiple CPU cores? The natural answer is multithreading.

2.1.2 multi-thread blocking

For engineers, code explains everything, so let's go straight to it.

BIO multithreading

package net.io.bio;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.ServerSocket;

import java.net.Socket;

public class BioTest {

public static void main(String[] args) throws IOException {

final ServerSocket server=new ServerSocket(8081);

while(true) {

new Thread(new Runnable() {

public void run() {

Socket socket=null;

try {

socket = server.accept();

System.out.println("accept port:"+socket.getPort());

BufferedReader in=new BufferedReader(new InputStreamReader(socket.getInputStream()));

String inData=null;

while ((inData = in.readLine()) != null) {

System.out.println("client port:"+socket.getPort());

System.out.println("input data:"+inData);

if("close".equals(inData)) {

socket.close();

}

}

} catch (IOException e) {

e.printStackTrace();

} finally {

}

}

}).start();

}

}

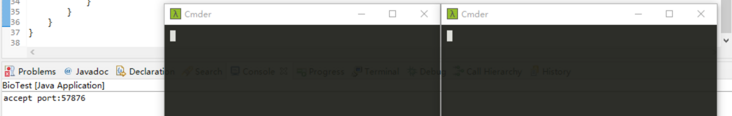

}Similarly, we initiate two requests in parallel;

Both requests are accepted, and the server creates two threads to handle the two client connections and their subsequent requests.

Multithreading removes the limit of serving only one request at a time, but it introduces a new problem: with many client connections, the server creates a large number of threads, and every thread consumes resources (memory for its stack, plus scheduling overhead). How can we solve this?
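A common mitigation, not covered in the original text, is to cap the number of threads with a pool. The sketch below is illustrative only (the class `BioPoolServer`, the `echo:` reply format and the pool size are assumptions). It shows why a pool merely moves the problem: each pool thread is still parked in `readLine()` for the whole life of its connection, so once `poolSize` clients are connected, the next one waits in the pool's queue, the same queuing seen in the single-thread case, just at a higher limit.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical sketch: blocking IO with a bounded pool, so at most poolSize clients are served at once. */
public class BioPoolServer {

    private final ServerSocket server;
    private final ExecutorService pool;

    public BioPoolServer(int poolSize) throws IOException {
        server = new ServerSocket(0); // port 0: let the OS pick a free port
        pool = Executors.newFixedThreadPool(poolSize, r -> {
            Thread t = new Thread(r);
            t.setDaemon(true);
            return t;
        });
    }

    public int port() {
        return server.getLocalPort();
    }

    /** The calling thread only accepts; each connection becomes a task for the pool. */
    public void serve() {
        try {
            while (true) {
                Socket socket = server.accept();
                // If every pool thread is busy, this connection waits in the pool's queue
                pool.submit(() -> handle(socket));
            }
        } catch (IOException e) {
            pool.shutdownNow(); // server socket failed or was closed: stop serving
        }
    }

    /** Echoes each line; the pool thread stays blocked in readLine() until the client leaves. */
    private static void handle(Socket socket) {
        try (Socket s = socket;
             BufferedReader in = new BufferedReader(new InputStreamReader(s.getInputStream()));
             PrintWriter out = new PrintWriter(s.getOutputStream(), true)) {
            String line;
            while ((line = in.readLine()) != null && !"close".equals(line)) {
                out.println("echo:" + line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

With `new BioPoolServer(2)`, a third concurrent client would get no reply until one of the first two disconnects.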

2.2 non-blocking IO

What if we put all sockets (strictly speaking, file handles; from here on we say "socket" instead of fd to keep the concepts simple and the reading easy) into a queue, and use a single thread to poll the status of every socket in turn, taking a socket out for processing only when it is ready? Would that reduce the number of threads on the server?

Let's look at the code. Plain non-blocking mode is rarely used on its own in practice, so to demonstrate the logic we simulate it with socket timeouts:

package net.io.bio;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.ServerSocket;

import java.net.Socket;

import java.net.SocketTimeoutException;

import java.util.ArrayList;

import java.util.List;

import org.apache.commons.collections4.CollectionUtils;

public class NioTest {

public static void main(String[] args) throws IOException {

final ServerSocket server=new ServerSocket(8082);

server.setSoTimeout(1000);

List<Socket> sockets=new ArrayList<Socket>();

while (true) {

Socket socket = null;

try {

socket = server.accept();

socket.setSoTimeout(500);

sockets.add(socket);

System.out.println("accept client port:"+socket.getPort());

} catch (SocketTimeoutException e) {

System.out.println("accept timeout");

}

//Analog non blocking: poll the connected sockets, wait for 10MS for each socket, process when there is data, return when there is no data, and continue polling

if(CollectionUtils.isNotEmpty(sockets)) {

for(Socket socketTemp:sockets ) {

try {

BufferedReader in=new BufferedReader(new InputStreamReader(socketTemp.getInputStream()));

String inData=null;

while ((inData = in.readLine()) != null) {

System.out.println("input data client port:"+socketTemp.getPort());

System.out.println("input data client port:"+socketTemp.getPort() +"data:"+inData);

if("close".equals(inData)) {

socketTemp.close();

}

}

} catch (SocketTimeoutException e) {

System.out.println("input client loop"+socketTemp.getPort());

}

}

}

}

}

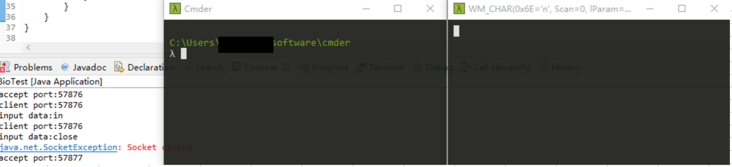

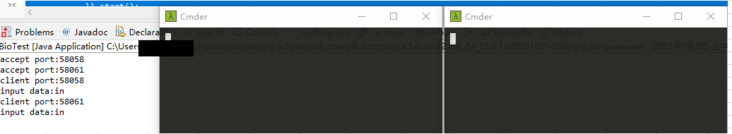

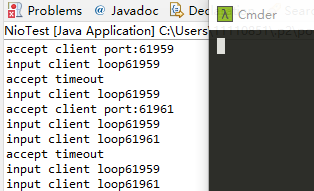

}System initialization, waiting for connection;

Initiate two client connections, and the thread starts polling whether there is data in the two connections.

After the two connections input data respectively, the polling thread finds that the data is ready and starts relevant logical processing (single thread or multi thread).

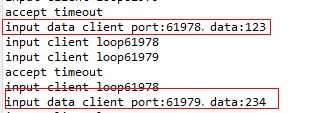

Then use a flow chart to help explain (the system actually uses a file handle. At this time, Socket is used instead to facilitate everyone's understanding).

The server has one thread dedicated to polling all sockets, checking whether the operating system has completed the relevant events: if so, it processes them; if not, it moves on. Let's think about what problems this brings.

The CPU spins idly, and every poll is a system call that asks the kernel whether data is ready, even when nothing is, so resources are wasted. Is there a mechanism that avoids this?
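The simulation above fakes non-blocking behaviour with socket timeouts. Java NIO also offers genuinely non-blocking sockets: after `configureBlocking(false)`, `SocketChannel.read` returns 0 immediately when no data is ready instead of suspending the thread. The sketch below (the names `NonBlockingPoll`, `PollResult` and `pollUntilData` are hypothetical) counts how many empty polls a busy-polling loop burns before data arrives, which is exactly the wasted work described above.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;

/** Hypothetical sketch: busy-polling a truly non-blocking socket. */
public class NonBlockingPoll {

    /** Result of one polling session: the data read and how many polls came back empty. */
    public static final class PollResult {
        public final String data;
        public final long emptyPolls;

        PollResult(String data, long emptyPolls) {
            this.data = data;
            this.emptyPolls = emptyPolls;
        }
    }

    /** Every loop iteration is a read() system call that returns 0 at once when nothing is ready. */
    public static PollResult pollUntilData(SocketChannel channel) throws IOException {
        channel.configureBlocking(false);
        ByteBuffer buffer = ByteBuffer.allocate(1024);
        long emptyPolls = 0;
        int n;
        while ((n = channel.read(buffer)) == 0) {
            emptyPolls++; // the thread spins here, burning CPU, until the kernel has data
        }
        if (n < 0) {
            return new PollResult(null, emptyPolls); // the peer closed the connection
        }
        buffer.flip();
        return new PollResult(StandardCharsets.UTF_8.decode(buffer).toString(), emptyPolls);
    }

    public static void main(String[] args) throws Exception {
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress("127.0.0.1", 0)); // port 0: any free port
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress());
                 SocketChannel accepted = server.accept()) {
                accepted.configureBlocking(false);
                // Nothing has been sent yet, so a non-blocking read returns 0 immediately
                System.out.println("first read: " + accepted.read(ByteBuffer.allocate(16)));
                client.write(ByteBuffer.wrap("hello".getBytes(StandardCharsets.UTF_8)));
                PollResult r = pollUntilData(accepted);
                System.out.println("data=" + r.data + ", empty polls=" + r.emptyPolls);
            }
        }
    }
}
```

The number of empty polls varies from run to run; what matters is that every one of them is a system call that did no useful work.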

2.3 IO multiplexing

Instead of having a dedicated thread on the server (on the application side, not in the kernel) do the polling, can processing be triggered by events? When a read, write or connection event occurs, the server thread is actively woken up to run the corresponding logic. The code looks like this:

IO multiplexing

import java.net.InetSocketAddress;

import java.nio.ByteBuffer;

import java.nio.channels.SelectionKey;

import java.nio.channels.Selector;

import java.nio.channels.ServerSocketChannel;

import java.nio.channels.SocketChannel;

import java.nio.charset.Charset;

import java.util.Iterator;

import java.util.Set;

public class NioServer {

private static Charset charset = Charset.forName("UTF-8");

public static void main(String[] args) {

try {

Selector selector = Selector.open();

ServerSocketChannel chanel = ServerSocketChannel.open();

chanel.bind(new InetSocketAddress(8083));

chanel.configureBlocking(false);

chanel.register(selector, SelectionKey.OP_ACCEPT);

while (true){

int select = selector.select();

if(select == 0){

System.out.println("select loop");

continue;

}

System.out.println("os data ok");

Set<SelectionKey> selectionKeys = selector.selectedKeys();

Iterator<SelectionKey> iterator = selectionKeys.iterator();

while (iterator.hasNext()){

SelectionKey selectionKey = iterator.next();

if(selectionKey.isAcceptable()){

ServerSocketChannel server = (ServerSocketChannel)selectionKey.channel();

SocketChannel client = server.accept();

client.configureBlocking(false);

client.register(selector, SelectionKey.OP_READ);

//Continue to receive connection events

selectionKey.interestOps(SelectionKey.OP_ACCEPT);

}else if(selectionKey.isReadable()){

//Get SocketChannel

SocketChannel client = (SocketChannel)selectionKey.channel();

//Define buffer

ByteBuffer buffer = ByteBuffer.allocate(1024);

StringBuilder content = new StringBuilder();

while (client.read(buffer) > 0){

buffer.flip();

content.append(charset.decode(buffer));

}

System.out.println("client port:"+client.getRemoteAddress().toString()+",input data: "+content.toString());

//Empty buffer

buffer.clear();

}

iterator.remove();

}

}

} catch (Exception e) {

e.printStackTrace();

}

}

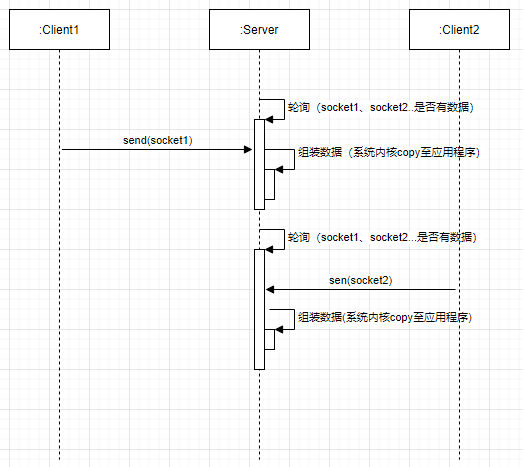

}Create two connections at the same time;

Two connections are created without blocking;

Receive, read and write without blocking;

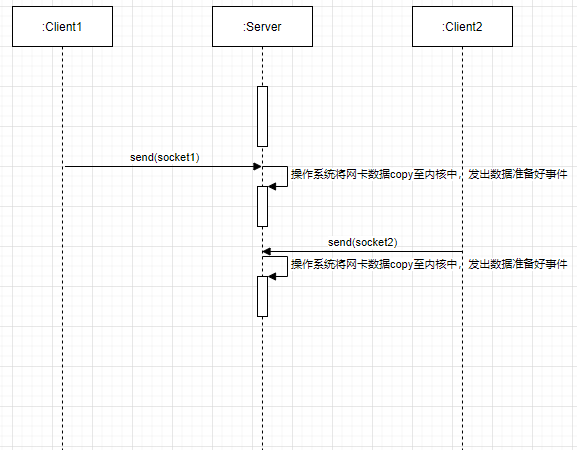

Then use a flow chart to help explain (the system actually uses a file handle. At this time, Socket is used instead to facilitate everyone's understanding).

Operating systems implement multiplexing in several ways, such as the select and epoll mechanisms we often use; we won't go into them here, and interested readers can consult the relevant documentation. IO models have also evolved further, into signal-driven and asynchronous IO, which we won't cover either, since our focus is on how the Redis thread model developed.

3, NIO thread model interpretation

We have covered blocking, non-blocking and IO multiplexing modes. Which one does Redis use?

Redis uses IO multiplexing, so let's take a closer look at multiplexing and how to implement it well in our own systems. This inevitably brings us to the Reactor pattern.

First, some terminology:

Reactor: similar to the Selector in NIO programming; it is responsible for dispatching I/O events;

Acceptor: the branch of logic in NIO that handles a new connection once its event is received;

Handler: the logic that handles operations such as reading and writing messages.

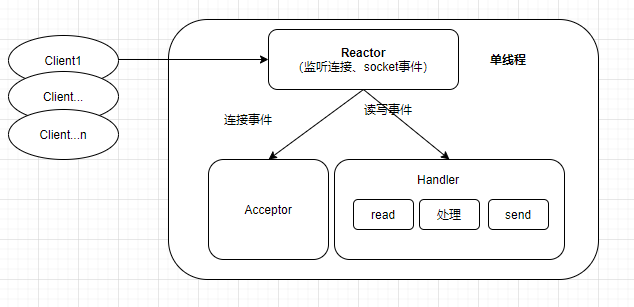

3.1 single Reactor single thread model

Processing flow

- Reactor listens for connection events and Socket events. When a connection event comes, it is handed over to the Acceptor for processing. When a Socket event comes, it is handed over to a corresponding Handler for processing.

Advantages

- The model is simple: all processing happens on one thread;

- It is easy to implement, and the modules are decoupled: the Reactor handles multiplexing and event dispatch, the Acceptor handles connection events, and the Handler handles socket read and write events.

Disadvantages

- There is only one thread, so connection handling and business processing share it, and the model cannot take advantage of multiple CPU cores.

- When traffic is moderate and business processing is fast, the system performs well. When traffic is heavy or read/write handling is time-consuming, this single thread easily becomes a performance bottleneck.
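The single Reactor, single-thread model can be sketched with Java NIO as follows. This is a minimal illustration under simplifying assumptions, not production code: the class names are hypothetical, each read is treated as one complete request, and upper-casing the text stands in for business logic. The Reactor attaches an Acceptor to the listening socket and a Handler to each connection, then simply runs whatever object is attached to each ready key.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;

/** Hypothetical sketch: one thread selects, accepts, reads, runs the business logic and writes. */
public class SingleThreadReactor implements Runnable {

    private final Selector selector;
    private final ServerSocketChannel serverChannel;

    public SingleThreadReactor(int port) throws IOException {
        selector = Selector.open();
        serverChannel = ServerSocketChannel.open();
        serverChannel.bind(new InetSocketAddress("127.0.0.1", port));
        serverChannel.configureBlocking(false);
        // Connection events on the listening socket are routed to the Acceptor
        serverChannel.register(selector, SelectionKey.OP_ACCEPT, new Acceptor());
    }

    public int port() {
        return serverChannel.socket().getLocalPort();
    }

    /** Reactor: wait for events and dispatch each one to the object attached to its key. */
    @Override
    public void run() {
        try {
            while (!Thread.currentThread().isInterrupted()) {
                selector.select();
                for (SelectionKey key : selector.selectedKeys()) {
                    ((Runnable) key.attachment()).run();
                }
                selector.selectedKeys().clear();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /** Acceptor: accept the connection and attach a Handler for its read events. */
    private final class Acceptor implements Runnable {
        @Override
        public void run() {
            try {
                SocketChannel client = serverChannel.accept();
                if (client != null) {
                    client.configureBlocking(false);
                    client.register(selector, SelectionKey.OP_READ, new Handler(client));
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    /** Handler: read, run the business logic and write the reply, all on the reactor thread. */
    private static final class Handler implements Runnable {
        private final SocketChannel channel;
        private final ByteBuffer buffer = ByteBuffer.allocate(1024);

        Handler(SocketChannel channel) {
            this.channel = channel;
        }

        @Override
        public void run() {
            try {
                buffer.clear();
                if (channel.read(buffer) < 0) {
                    channel.close(); // client went away; closing also cancels the key
                    return;
                }
                buffer.flip();
                String request = StandardCharsets.UTF_8.decode(buffer).toString();
                String reply = request.toUpperCase(); // stand-in for business logic
                ByteBuffer out = ByteBuffer.wrap(reply.getBytes(StandardCharsets.UTF_8));
                while (out.hasRemaining()) {
                    channel.write(out); // simplification: may spin if the send buffer is full
                }
            } catch (IOException e) {
                try { channel.close(); } catch (IOException ignored) { }
            }
        }
    }
}
```

Start it with `new Thread(new SingleThreadReactor(0)).start()`; port 0 picks a free port, available through `port()`. Replacing `toUpperCase` with anything slow would stall every other connection, which is exactly the disadvantage listed above.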

How can we solve these problems? Since business logic can become the system bottleneck, we can move it out and hand it to a thread pool: this reduces the load on the main thread on the one hand, and takes advantage of multi-core CPUs on the other. It is worth understanding this step thoroughly, because it is the key to following Redis's later move from a single-threaded model to a multithreaded one.

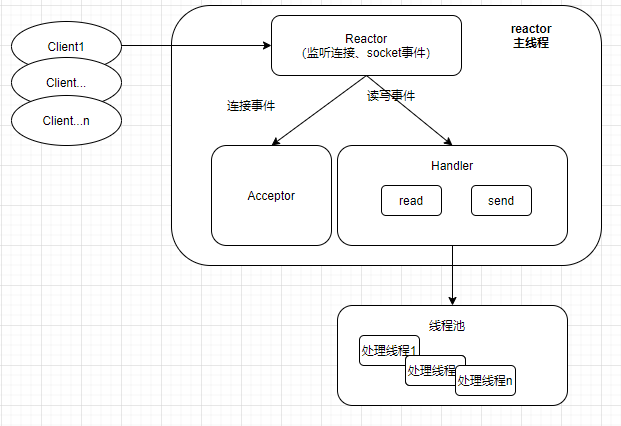

3.2 single Reactor multithreading model

Compared with the single Reactor single-thread model, the only change in this model is that business logic is handed to a thread pool.

Processing flow

- Reactor listens for connection events and Socket events. When a connection event comes, it is handed over to the Acceptor for processing. When a Socket event comes, it is handed over to a corresponding Handler for processing.

- After the Handler finishes reading, it packages the request into a task object and submits it to a thread pool, so the business logic runs on other threads.

Advantages

- The main thread focuses on general events (connection, read and write), which further decouples the design;

- It takes advantage of multi-core CPUs.

Disadvantages

- This model looks perfect, but think again: with many clients and heavy traffic, handling the general events (reads and writes) can itself become the main thread's bottleneck, because every read and write involves a system call.
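Here is a hedged sketch of the single Reactor multithreading model, using the same toy protocol as before (one read is one request, upper-casing stands in for business logic). The names `PooledReactor` and `pendingWrites` are hypothetical. Reads stay on the reactor thread; the decoded request is submitted to a pool as a task; the worker hands the reply back through a queue and calls `selector.wakeup()`, so that the reactor thread, not the worker, performs the write.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical sketch: one Reactor thread does all IO, a worker pool runs the business logic. */
public class PooledReactor implements Runnable {

    private final Selector selector;
    private final ServerSocketChannel server;
    private final ExecutorService workers;
    // Replies produced by workers; only the reactor thread drains this queue and writes
    private final Queue<Runnable> pendingWrites = new ConcurrentLinkedQueue<>();

    public PooledReactor(int workerThreads) throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", 0)); // any free port
        server.configureBlocking(false);
        server.register(selector, SelectionKey.OP_ACCEPT);
        workers = Executors.newFixedThreadPool(workerThreads, r -> {
            Thread t = new Thread(r);
            t.setDaemon(true);
            return t;
        });
    }

    public int port() {
        return server.socket().getLocalPort();
    }

    @Override
    public void run() {
        try {
            while (!Thread.currentThread().isInterrupted()) {
                selector.select();
                Runnable task;
                while ((task = pendingWrites.poll()) != null) {
                    task.run(); // flush replies handed back by workers
                }
                for (SelectionKey key : selector.selectedKeys()) {
                    if (key.isAcceptable()) {
                        SocketChannel client = server.accept(); // Acceptor
                        if (client != null) {
                            client.configureBlocking(false);
                            client.register(selector, SelectionKey.OP_READ);
                        }
                    } else if (key.isReadable()) {
                        read((SocketChannel) key.channel());
                    }
                }
                selector.selectedKeys().clear();
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            workers.shutdownNow();
        }
    }

    /** Handler: read on the reactor thread, then submit the request to the pool as a task. */
    private void read(SocketChannel channel) {
        ByteBuffer buffer = ByteBuffer.allocate(1024);
        int n;
        try { n = channel.read(buffer); } catch (IOException e) { n = -1; }
        if (n < 0) { close(channel); return; }
        buffer.flip();
        String request = StandardCharsets.UTF_8.decode(buffer).toString();
        workers.submit(() -> {
            String reply = request.toUpperCase(); // business logic, now on a worker thread
            pendingWrites.add(() -> write(channel, reply));
            selector.wakeup(); // make the reactor leave select() and do the write
        });
    }

    private void write(SocketChannel channel, String reply) {
        ByteBuffer out = ByteBuffer.wrap(reply.getBytes(StandardCharsets.UTF_8));
        try {
            while (out.hasRemaining()) {
                channel.write(out); // simplification: may spin if the send buffer is full
            }
        } catch (IOException e) {
            close(channel);
        }
    }

    private static void close(SocketChannel channel) {
        try { channel.close(); } catch (IOException ignored) { }
    }
}
```

Keeping writes on the reactor thread means only one thread ever performs IO on the channels, at the cost of one extra hop through the queue. Note that the reactor thread still performs every read and write, which is the bottleneck described above.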

Is there a good way to solve this? Notice the pattern in the analysis so far: whenever one part becomes the system bottleneck, we find a way to move it out and hand it to other threads. Does the same idea apply here?

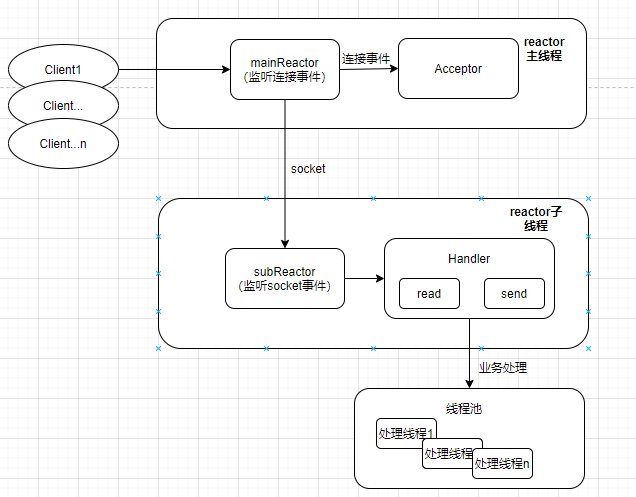

3.3 multi Reactor multithreading model

Compared with the single Reactor multithreading model, the only change in this model is that socket reads and writes move out of the main Reactor and are handled by subReactor threads.

Processing flow

- The mainReactor main thread listens for and handles connection events. After the Acceptor finishes setting up a connection, the main thread assigns it to a subReactor;

- The subReactor listens for and handles events on the Sockets assigned by the mainReactor. When a Socket event arrives, it hands it to the corresponding Handler;

- After the Handler finishes reading, it packages the request into a task object and submits it to a thread pool, so the business logic runs on other threads.

Advantages

- The main thread focuses on connection events and the sub threads on read and write events, which decouples the design further;

- It takes advantage of multi-core CPUs.

Disadvantages

- The implementation is more complex; it is worth considering when you need to squeeze out maximum single-machine performance.
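The multi Reactor model can be sketched as follows, again as an illustration with hypothetical names. To keep it short, the mainReactor uses a blocking `accept()` loop instead of its own Selector, and each subReactor simply echoes what it reads (the thread-pool step from the previous model is omitted). New connections reach a subReactor through a queue plus `selector.wakeup()`, because registering a channel with a Selector that another thread is blocked on can block.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical sketch: mainReactor accepts, subReactors (round-robin) do the socket IO. */
public class MultiReactor {

    /** subReactor: owns a Selector and serves the sockets assigned to it (echo, for illustration). */
    public static final class SubReactor implements Runnable {
        private final Selector selector;
        private final Queue<SocketChannel> newConnections = new ConcurrentLinkedQueue<>();
        public final AtomicInteger assigned = new AtomicInteger();

        SubReactor() throws IOException {
            selector = Selector.open();
        }

        /** Called on the mainReactor thread: queue the channel and wake this subReactor up. */
        void assign(SocketChannel channel) {
            newConnections.add(channel);
            selector.wakeup();
        }

        @Override
        public void run() {
            ByteBuffer buffer = ByteBuffer.allocate(1024);
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    selector.select();
                    SocketChannel fresh;
                    while ((fresh = newConnections.poll()) != null) {
                        fresh.configureBlocking(false);
                        fresh.register(selector, SelectionKey.OP_READ); // done on this thread
                        assigned.incrementAndGet();
                    }
                    for (SelectionKey key : selector.selectedKeys()) {
                        SocketChannel client = (SocketChannel) key.channel();
                        try {
                            buffer.clear();
                            if (client.read(buffer) < 0) {
                                client.close();
                                continue;
                            }
                            buffer.flip();
                            while (buffer.hasRemaining()) {
                                client.write(buffer); // Handler: echo the bytes back
                            }
                        } catch (IOException e) {
                            try { client.close(); } catch (IOException ignored) { }
                        }
                    }
                    selector.selectedKeys().clear();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    private final ServerSocketChannel server;
    public final SubReactor[] subReactors;

    public MultiReactor(int subReactorCount) throws IOException {
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", 0)); // any free port
        subReactors = new SubReactor[subReactorCount];
        for (int i = 0; i < subReactorCount; i++) {
            subReactors[i] = new SubReactor();
            Thread t = new Thread(subReactors[i], "subReactor-" + i);
            t.setDaemon(true);
            t.start();
        }
    }

    public int port() {
        return server.socket().getLocalPort();
    }

    /** mainReactor loop: only connection events are handled here. */
    public void acceptLoop() {
        int next = 0;
        try {
            while (true) {
                SocketChannel client = server.accept(); // blocking accept keeps the sketch short
                subReactors[next].assign(client);
                next = (next + 1) % subReactors.length;
            }
        } catch (IOException e) {
            // the listening socket was closed: stop accepting
        }
    }
}
```

Because connections are spread round-robin, adding subReactors spreads read/write system calls across more threads without touching the accept path.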

4, Redis thread model

4.1 General

So far we have covered the evolution of network IO models and the Reactor patterns built on IO multiplexing. Which Reactor pattern does Redis use? Before answering, let's clarify a few concepts.

A Redis server handles two types of events: file events and time events.

File events: "file" here can be understood as a Socket, so these are Socket-related events such as connect, read and write;

Time events: scheduled tasks, such as periodic RDB persistence.

This article focuses on Socket-related events.
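Both kinds of event can share one thread because the loop limits how long it waits for file events by the time of the nearest time event; Redis's `ae` event loop works along these lines. The sketch below models only the timer side, with hypothetical names and millisecond timestamps passed in by the caller: `pollTimeout` says how long the multiplexer may block, and `processTimeEvents` fires everything that is due, rescheduling periodic tasks.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

/** Hypothetical sketch: the timer side of an event loop that also waits on file events. */
public class EventLoopTimers {

    private static final class TimeEvent {
        final String name;
        final long periodMillis; // > 0: periodic, otherwise one-shot
        long whenMillis;

        TimeEvent(String name, long whenMillis, long periodMillis) {
            this.name = name;
            this.whenMillis = whenMillis;
            this.periodMillis = periodMillis;
        }
    }

    // The nearest timer is always at the head of the queue
    private final PriorityQueue<TimeEvent> timers =
            new PriorityQueue<>((a, b) -> Long.compare(a.whenMillis, b.whenMillis));

    public void addTimer(String name, long whenMillis, long periodMillis) {
        timers.add(new TimeEvent(name, whenMillis, periodMillis));
    }

    /** How long the multiplexer may block waiting for file events; -1 means no timer is pending. */
    public long pollTimeout(long nowMillis) {
        TimeEvent nearest = timers.peek();
        if (nearest == null) {
            return -1;
        }
        return Math.max(0, nearest.whenMillis - nowMillis);
    }

    /** Fires every time event due at nowMillis, in time order, and reschedules periodic ones. */
    public List<String> processTimeEvents(long nowMillis) {
        List<String> fired = new ArrayList<>();
        while (!timers.isEmpty() && timers.peek().whenMillis <= nowMillis) {
            TimeEvent event = timers.poll();
            fired.add(event.name);
            if (event.periodMillis > 0) {
                event.whenMillis = nowMillis + event.periodMillis;
                timers.add(event);
            }
        }
        return fired;
    }
}
```

One loop iteration would call the multiplexer with `pollTimeout(now)` as its timeout, handle whatever file events it returns, and then call `processTimeEvents(now)`.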

4.2 model diagram

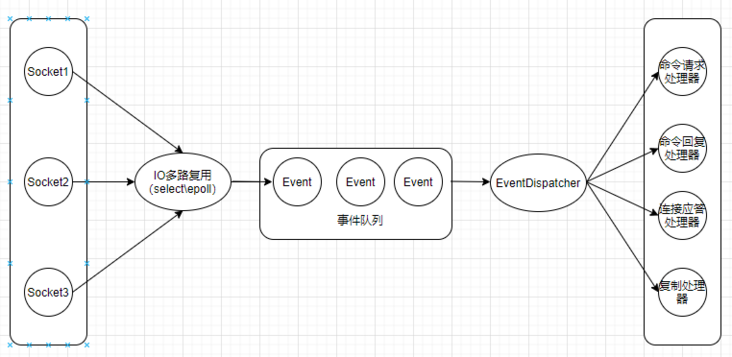

First, let's take a look at the thread model diagram of Redis service;

IO multiplexing monitors all events (connect, read, write, etc.). When an event occurs, it is put into a queue and dispatched by the event dispatcher according to its type;

Connection events go to the connection response processor; Redis commands such as GET and SET go to the command request processor.

When a command has been executed, a command reply event is generated; it passes through the event queue and the event dispatcher to the command reply processor, which sends the response back to the client.

4.3 one round of interaction between client and server

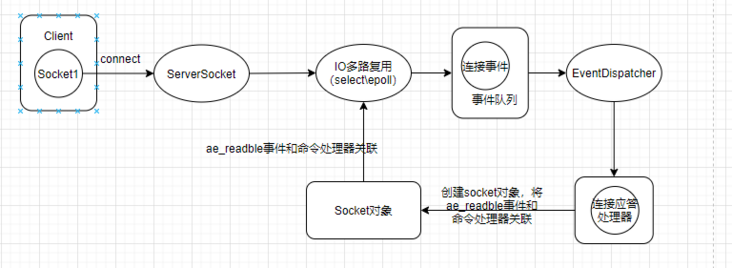

4.3.1 connection process

Connection process

- The main thread of the Redis server listens on a fixed port and binds the connection event to the connection response processor.

- When a client initiates a connection, the connection event fires. The IO multiplexer wraps it and puts it into the event queue, and the event dispatcher then hands it to the connection response processor.

- The connection response processor creates the client object and the Socket object. Focusing on the Socket, it registers an AE_READABLE event bound to the command request processor, marking the Socket as interested in readable events, i.e. ready to receive commands from the client.

- This whole process runs on the main thread.

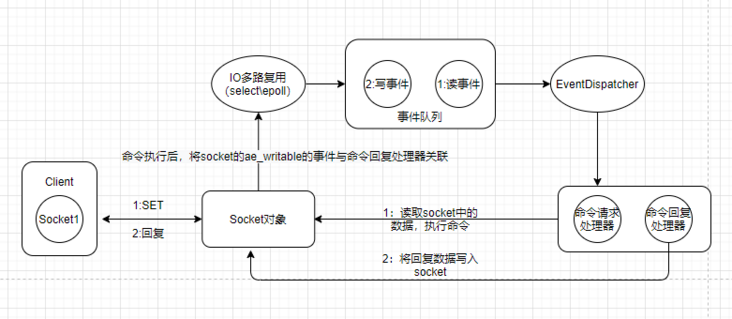

4.3.2 command execution process

SET command execution process

- The client sends a SET command. The IO multiplexer detects the read event, wraps it, and puts it into the event queue (the event is bound to the command request processor registered in the previous step);

- The event dispatcher hands the event to the matching command request processor based on its type;

- The command request processor reads the data from the Socket, executes the command, and then registers an AE_WRITABLE event bound to the command reply processor;

- When the IO multiplexer detects the write event, it wraps it and puts it into the event queue, and the event dispatcher hands it to the command reply processor based on its type;

- The command reply processor writes the reply into the Socket, returning it to the client.
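The connection and SET flows above can be traced in a small in-memory simulation. It is a toy, not Redis's code: there are no real sockets, "fds" are plain integers, replies are simplified strings rather than the real protocol, and a socket is assumed to be always writable. The method names borrow those of Redis's C handlers (`acceptTcpHandler`, `readQueryFromClient`, `sendReplyToClient`) only to make the mapping visible; the class `MiniRedisLoop` and its helpers are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.EnumMap;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;

/** Hypothetical in-memory simulation of the single-threaded connect / SET / reply flow. */
public class MiniRedisLoop {

    public enum Mask { AE_READABLE, AE_WRITABLE }

    /** Per-client state; the queues stand in for the socket's input and output. */
    private static final class Client {
        final Queue<String> socketIn = new ArrayDeque<>();
        final List<String> socketOut = new ArrayList<>();
        final List<String> replyBuffer = new ArrayList<>();
    }

    private final Map<Integer, Client> clients = new HashMap<>();
    private final Map<String, String> db = new HashMap<>();
    // File event table: fd -> (mask -> handler)
    private final Map<Integer, Map<Mask, Runnable>> fileEvents = new HashMap<>();

    private void createFileEvent(int fd, Mask mask, Runnable handler) {
        fileEvents.computeIfAbsent(fd, k -> new EnumMap<>(Mask.class)).put(mask, handler);
    }

    private void deleteFileEvent(int fd, Mask mask) {
        fileEvents.get(fd).remove(mask);
    }

    /** Connection response processor: create the client, then watch it for AE_READABLE. */
    public void acceptTcpHandler(int fd) {
        clients.put(fd, new Client());
        createFileEvent(fd, Mask.AE_READABLE, () -> readQueryFromClient(fd));
    }

    /** Command request processor: read and execute one command, then install AE_WRITABLE. */
    private void readQueryFromClient(int fd) {
        Client c = clients.get(fd);
        String[] argv = c.socketIn.poll().split(" ");
        String reply;
        if (argv.length == 3 && argv[0].equalsIgnoreCase("SET")) {
            db.put(argv[1], argv[2]);
            reply = "+OK";
        } else if (argv.length == 2 && argv[0].equalsIgnoreCase("GET")) {
            reply = db.getOrDefault(argv[1], "(nil)");
        } else {
            reply = "-ERR unknown command";
        }
        c.replyBuffer.add(reply);
        createFileEvent(fd, Mask.AE_WRITABLE, () -> sendReplyToClient(fd));
    }

    /** Command reply processor: flush the replies, then stop watching AE_WRITABLE. */
    private void sendReplyToClient(int fd) {
        Client c = clients.get(fd);
        c.socketOut.addAll(c.replyBuffer);
        c.replyBuffer.clear();
        deleteFileEvent(fd, Mask.AE_WRITABLE);
    }

    /** Test helpers: a client writing into its socket, and what it has received so far. */
    public void clientSends(int fd, String command) { clients.get(fd).socketIn.add(command); }

    public List<String> received(int fd) { return clients.get(fd).socketOut; }

    /** One loop iteration: collect ready events into a queue first, then dispatch them in order. */
    public void processEvents() {
        Queue<Runnable> fired = new ArrayDeque<>();
        for (Map.Entry<Integer, Map<Mask, Runnable>> e : fileEvents.entrySet()) {
            Map<Mask, Runnable> handlers = e.getValue();
            if (handlers.containsKey(Mask.AE_READABLE) && !clients.get(e.getKey()).socketIn.isEmpty()) {
                fired.add(handlers.get(Mask.AE_READABLE)); // readable: input is waiting
            }
            if (handlers.containsKey(Mask.AE_WRITABLE)) {
                fired.add(handlers.get(Mask.AE_WRITABLE)); // writable: assumed always ready
            }
        }
        Runnable handler;
        while ((handler = fired.poll()) != null) {
            handler.run(); // every handler runs on this one thread
        }
    }
}
```

After `clientSends(7, "SET k v")`, the first `processEvents()` executes the command and installs `AE_WRITABLE`; the reply reaches `received(7)` only on the second call, mirroring the two event-loop rounds described above.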

4.4 advantages and disadvantages of the model

The analysis above shows that Redis uses the single Reactor single-thread model. We have already discussed the advantages and disadvantages of this model, so why does Redis choose it?

Redis characteristics

Commands operate on in-memory data, so business processing is fast, and a single command-processing thread can still deliver high performance.

Advantages

- The advantages are those of the single Reactor single-thread model described above.

Disadvantages

- The disadvantages of the single Reactor single-thread model also apply to Redis, with one difference: business logic (command execution) is not the system bottleneck.

- As traffic grows, the cost of IO becomes more and more visible (a read copies data from the kernel into the application; a write copies data from the application into the kernel). Beyond a certain threshold, IO becomes the system bottleneck.

How does Redis solve this?

The same trick again: move the time-consuming parts off the main path. Is that what newer versions of Redis do? Let's take a look.

4.5 Redis multithreading mode

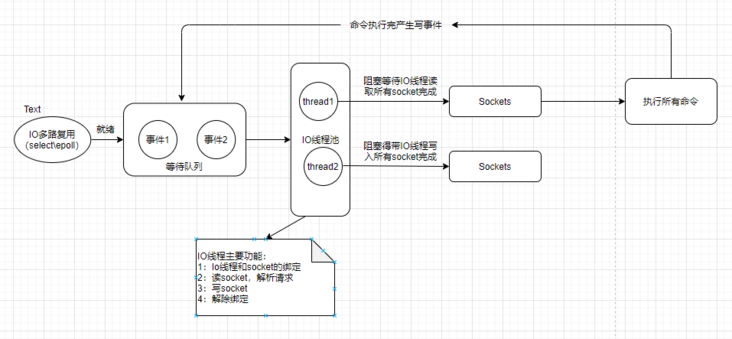

Redis's multithreading model differs somewhat from both the "multi Reactor multithreading model" and the "single Reactor multithreading model", but it borrows ideas from both, as follows:

Redis multithreads its IO operations, while its own logic (command execution) still runs on a single thread. In that respect it follows the single Reactor idea, although the implementation differs.

Moving IO onto multiple threads matches the idea behind going from a single Reactor to multiple Reactors: taking IO operations off the main thread.

General flow of command execution

- The client sends a request command, triggering a read-ready event. The server's main thread puts the Socket (used here to stand for the connection, to keep things simple) into a queue instead of reading it itself;

- IO threads read the client's request command from the Socket while the main thread busy-polls, waiting for all IO threads to finish reading. IO threads only read; they do not execute commands;

- The main thread then executes all the commands in one pass, exactly as in the single-threaded model, and puts the connections that need replies into another queue, from which IO threads write the replies out (the main thread also takes a share of the writes);

- The main thread busy-polls, waiting for all IO threads to finish writing.
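Redis 6.0 added this threaded IO; it is off by default and enabled with the `io-threads` setting in redis.conf (threaded reads additionally require `io-threads-do-reads yes`). The toy model below mimics only the round structure described above, with in-memory clients and hypothetical names (`ThreadedIoSketch`, `handleRound`): requests are parsed in parallel, executed serially by the main thread, and written back in parallel, while the main thread handles its own slice and then busy-waits on a counter. Unlike Redis, which keeps its IO threads alive, it starts fresh threads for each phase to stay short.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

/** Hypothetical toy model: parallel read/parse, serial execution, parallel write. */
public class ThreadedIoSketch {

    /** One connection: raw request in, parsed argv, reply, and what was "written" back. */
    public static final class Client {
        final String rawInput;
        String[] argv;
        String reply;
        public String written;

        public Client(String rawInput) {
            this.rawInput = rawInput;
        }
    }

    private final Map<String, String> db = new HashMap<>(); // only the main thread touches this

    /** One round for the given clients; ioThreads must be at least 1. */
    public void handleRound(List<Client> clients, int ioThreads) {
        // Read phase: IO threads (and the main thread) parse requests; nothing is executed yet
        runOnIoThreads(clients, ioThreads, c -> c.argv = c.rawInput.trim().split("\\s+"));
        // Execute phase: the main thread runs every command in order, as in single-threaded Redis
        for (Client c : clients) {
            c.reply = execute(c.argv);
        }
        // Write phase: IO threads (and the main thread) write the replies out
        runOnIoThreads(clients, ioThreads, c -> c.written = c.reply);
    }

    private String execute(String[] argv) {
        switch (argv[0].toUpperCase()) {
            case "SET":
                db.put(argv[1], argv[2]);
                return "OK";
            case "GET":
                return db.getOrDefault(argv[1], "(nil)");
            default:
                return "ERR unknown command";
        }
    }

    /** Splits clients round-robin; slice 0 stays on the main thread, which then busy-waits. */
    private static void runOnIoThreads(List<Client> clients, int ioThreads, Consumer<Client> work) {
        List<List<Client>> slices = new ArrayList<>();
        for (int i = 0; i < ioThreads; i++) {
            slices.add(new ArrayList<>());
        }
        for (int i = 0; i < clients.size(); i++) {
            slices.get(i % ioThreads).add(clients.get(i));
        }
        AtomicInteger pending = new AtomicInteger(ioThreads - 1);
        for (int i = 1; i < ioThreads; i++) {
            List<Client> slice = slices.get(i);
            new Thread(() -> {
                slice.forEach(work);
                pending.decrementAndGet(); // signal "my slice is done"
            }).start();
        }
        slices.get(0).forEach(work); // the main thread does its share too
        while (pending.get() > 0) {
            Thread.onSpinWait(); // busy polling until every IO thread has finished
        }
    }
}
```

Because execution stays on one thread, `GET a` in the same round as an earlier `SET a 1` still sees the new value, exactly as it would without IO threads.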

5, Summary

To understand a component, the most important thing is to understand its design ideas: why it is designed this way, what background drove the technology choices, and what lessons it offers for later system architecture design. Everything else follows from that, and I hope this article offers a useful reference for everyone.

Author: vivo Internet server team - Wang Shaodong