1. Using zipper table to implement DIM layer business dimension table in core transaction analysis and rollback of zipper table

ODS Layer Data Preparation

Because we need to construct our own data, we use the existing ods_trade_shops creates a new table:

create database hw; drop table if exists hw.ods_trade_shops; create table hw.ods_trade_shops( `shopid` int COMMENT 'shops ID', `userid` int COMMENT 'Shop Leader', `areaid` int COMMENT 'region ID', `shopname` string COMMENT 'Shop Name', `shoplevel` int COMMENT 'Shop Level', `status` int COMMENT 'Shop Status', `createtime` string COMMENT 'Creation date', `modifytime` string COMMENT 'modification date' ) COMMENT 'Business Information Table' PARTITIONED BY (`dt` string) row format delimited fields terminated by ',';

The Title asks you to construct your own data and prepare it for three days as needed:

/data/lagoudw/data/hw/shop-2020-06-29.dat 100050,1,100225,WSxxx Ying Supermarket,1,1,2020-06-28,2020-06-29 13:22:22 100052,2,100236,fresh xxx Flagship store,1,1,2020-06-28,2020-06-29 13:22:22 100053,3,100011,Huawei xxx Flagship store,1,1,2020-06-28,2020-06-29 13:22:22 100054,4,100159,millet xxx Flagship store,1,1,2020-06-28,2020-06-29 13:22:22 100055,5,100211,Apple xxx Flagship store,1,1,2020-06-28,2020-06-29 13:22:22 /data/lagoudw/data/hw/shop-2020-06-30.dat 100056,6,100050,OPxxx Self-run shop,1,1,2020-06-28,2020-06-30 13:22:22 100057,7,100311,Three xxx Mouse snack,1,1,2020-06-28,2020-06-30 13:22:22 100058,8,100329,Liangzi xxx Laying Food,1,1,2020-06-28,2020-06-30 13:22:22 100053,3,100011,Huawei xxx Flagship store,2,1,2020-06-28,2020-06-30 13:22:22 100055,5,100211,Apple xxx Flagship store,3,1,2020-06-28,2020-06-30 13:22:22 /data/lagoudw/data/hw/shop-2020-07-01.dat 100059,9,100225,Leju xxx Daily Necessities,1,1,2020-06-28,2020-07-01 13:22:22 100060,10,100211,Colleagues xxx Great health,1,1,2020-06-28,2020-07-01 13:22:22 100061,11,100159,Jiamei xxx Flagship store,1,1,2020-06-28,2020-07-01 13:22:22 100053,3,100011,Huawei xxx Flagship store,2,1,2020-06-28,2020-07-01 13:22:22 100058,8,100329,Liangzi xxx Laying Food,3,1,2020-06-28,2020-07-01 13:22:22 #Import into tables: load data local inpath '/data/lagoudw/data/hw/shop-2020-06-29.dat' into table hw.ods_trade_shops partition(dt='2020-06-29'); load data local inpath '/data/lagoudw/data/hw/shop-2020-06-30.dat' into table hw.ods_trade_shops partition(dt='2020-06-30'); load data local inpath '/data/lagoudw/data/hw/shop-2020-07-01.dat' into table hw.ods_trade_shops partition(dt='2020-07-01');

ODS Layer Data Loading Completed

DIM Layer Data Preparation

-- Also in hw Create Business Information Table in Database, That is zipper list.

drop table if exists hw.dim_trade_shops;

create table hw.dim_trade_shops(

`shopid` int COMMENT 'shops ID',

`userid` int COMMENT 'Shop Leader',

`areaid` int COMMENT 'region ID',

`shopname` string COMMENT 'Shop Name',

`shoplevel` int COMMENT 'Shop Level',

`status` int COMMENT 'Shop Status',

`createtime` string COMMENT 'Creation date',

`modifytime` string COMMENT 'modification date',

`startdate` string COMMENT 'Effective Start Date',

`enddate` string COMMENT 'End date of expiration'

) COMMENT 'Business Information Table';

-- Initialize zipper list(Load 6.29 Data of number):

insert overwrite table hw.dim_trade_shops

select shopid, userid, areaid, shopname, shoplevel, status, createtime, modifytime,

case when modifytime is not null

then from_unixtime(unix_timestamp(modifytime,'yyyy-MM-dd HH:mm:ss'),'yyyy-MM-dd')

else createtime

end as startdate,

'9999-12-31' as enddate

from hw.ods_trade_shops

where dt='2020-06-29';

Write the load statement as a script:

/data/lagoudw/script/hw/dim_load_trade_shops.sh

#!/bin/bash

source /etc/profile

if [ -n "$1" ]

then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

insert overwrite table hw.dim_trade_shops

select shopid, userid, areaid, shopname, shoplevel, status, createtime, modifytime,

case when modifytime is not null

then from_unixtime(unix_timestamp(modifytime,'yyyy-MM-dd HH:mm:ss'),'yyyy-MM-dd')

else createtime

end as startdate,

'9999-12-31' as enddate

from hw.ods_trade_shops

where dt='$do_date'

union all

select A.shopid,

A.userid,

A.areaid,

A.shopname,

A.shoplevel,

A.status,

A.createtime,

A.modifytime,

A.startdate,

case when B.shopid is not null and A.enddate = '9999-12-31'

then date_add('$do_date', -1)

else A.enddate end as enddate

from (select * from hw.ods_trade_shops where dt='$do_date') B

right join hw.dim_trade_shops A on B.shopid = A.shopid;

"

hive -e "$sql"

Loading data for 6.30 and 7.1:

sh /data/lagoudw/script/hw/dim_load_trade_shops.sh 2020-06-30 sh /data/lagoudw/script/hw/dim_load_trade_shops.sh 2020-07-01

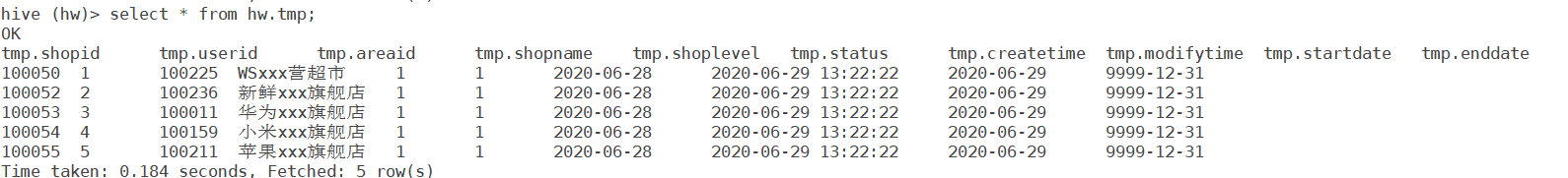

Check data:

Zipper list rollback

/data/lagoudw/script/hw/dim_rollback_trade_shops.sh

#!/bin/bash source /etc/profile if [ -n "$1" ] then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" drop table hw.tmp; create table hw.tmp as select shopid, userid, areaid, shopname, shoplevel, status, createtime, modifytime, startdate, enddate from hw.dim_trade_shops where enddate < '$do_date' union all select shopid, userid, areaid, shopname, shoplevel, status, createtime, modifytime, startdate, '9999-12-31' as enddate from hw.dim_trade_shops where startdate <= '$do_date' and enddate >= '$do_date'; " hive -e "$sql"

Test rollback 6.29:

[External chain picture transfer failed, source station may have anti-theft chain mechanism, it is recommended to save the picture and upload it directly (img-qZpxyLXO-16399359016) (C:\UsersjaneAppDataRoamingTyporatypora-user-imagesimage-202428.png)]

2. Calculate the number of silent and lost members in the membership analysis

Definition of silent member: App was started only on the day of installation and was installed 7 days ago

Definition of lost members: members who have not logged in in in the last 30 days

I'll start with table dws.dws_member_start_day intercepts a small portion of the data, recreates it in my hw database, and modifies some standard-compliant data

use hw; drop table if exists hw.dws_member_start_day; create table hw.dws_member_start_day ( `device_id` string, `uid` string, `app_v` string, `os_type` string, `language` string, `channel` string, `area` string, `brand` string ) COMMENT 'Member Day Start Summary' PARTITIONED BY (`dt` string) row format delimited fields terminated by ',';

Prepare data:

/data/lagoudw/data/hw/member-start-day-2020-06-29.dat 1FB872-9A1001,2F10092A1,1.1.0,0.97,chinese WM,Sanmenxia,xiaomi-3,2020-06-29 1FB872-9A10010,2F10092A10,1.1.5,0.60,chinese ZD,Jiangmen,Huawei-9,2020-06-29 1FB872-9A100100,2F10092A100,1.1.10,7.2,chinese WK,Golden altar,xiaomi-6,2020-06-29 /data/lagoudw/data/hw/member-start-day-2020-07-02.dat 1FB872-9A1001,2F10092A1,1.1.0,0.97,chinese WM,Sanmenxia,xiaomi-3,2020-07-02 1FB872-9A100100,2F10092A100,1.1.10,7.2,chinese WK,Golden altar,xiaomi-6,2020-07-02 1FB872-9A1001000,2F10092A1000,1.1.3,8.1,chinese UJ,Tangshan,xiaomi-0,2020-07-02 1FB872-9A1001001,2F10092A1001,1.1.10,8.3.5,chinese LB,Rushan,xiaomi-7,2020-07-02 1FB872-9A1001002,2F10092A1002,1.1.9,0.0.0,chinese MZ,Wenzhou,xiaomi-0,2020-07-02 1FB872-9A1001003,2F10092A1003,1.1.14,3.20,chinese SL,Anyang,Huawei-1,2020-07-02 /data/lagoudw/data/hw/member-start-day-2020-07-08.dat 1FB872-9A1001002,2F10092A1002,1.1.9,0.0.0,chinese MZ,Wenzhou,xiaomi-0,2020-07-08 1FB872-9A1001003,2F10092A1003,1.1.14,3.20,chinese SL,Anyang,Huawei-1,2020-07-08 /data/lagoudw/data/hw/member-start-day-2020-08-05.dat 1FB872-9A1001,2F10092A1,1.1.0,0.97,chinese WM,Sanmenxia,xiaomi-3,2020-08-05 1FB872-9A1001002,2F10092A1002,1.1.9,0.0.0,chinese MZ,Wenzhou,xiaomi-0,2020-08-05 1FB872-9A1001003,2F10092A1003,1.1.14,3.20,chinese SL,Anyang,Huawei-1,2020-08-05 1FB872-9A1001004,2F10092A1004,1.1.9,9.9, chinese YX,Weihai,Huawei-2,2020-08-05 #Import data: load data local inpath '/data/lagoudw/data/hw/member-start-day-2020-06-29.dat' into table hw.dws_member_start_day partition(dt='2020-06-29'); load data local inpath '/data/lagoudw/data/hw/member-start-day-2020-07-02.dat' into table hw.dws_member_start_day partition(dt='2020-07-02'); load data local inpath '/data/lagoudw/data/hw/member-start-day-2020-07-08.dat' into table hw.dws_member_start_day partition(dt='2020-07-08'); load data local inpath '/data/lagoudw/data/hw/member-start-day-2020-08-05.dat' into table hw.dws_member_start_day partition(dt='2020-08-05');

Calculate Silent Members:

Assuming today is 2020-07-08, the selected members will sign in only once at 2020-06-29

#!/bin/bash

source /etc/profile

if [ -n "$1" ]

then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

drop table hw.silent_cnt;

create table hw.silent_cnt as

-- Select users who logged on seven days ago

select count(*) as silent_member, '$do_date' as dt

from

(select device_id from hw.dws_member_start_day

where dt<date_add('$do_date', -7)) A join

-- Users logged on only once

(select device_id, count(device_id) as cnt from hw.dws_member_start_day group by device_id having cnt=1) B on A.device_id = B.device_id

"

hive -e "$sql"

Calculate lost members:

Assuming today is 2020-08-05, the selected members logged on 30 days ago but have not logged on in the past 30 days:

#!/bin/bash

source /etc/profile

if [ -n "$1" ]

then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

sql="

drop table hw.lost_cnt;

create table hw.lost_cnt as

select count(distinct device_id) as lost_member, '$do_date'

from hw.dws_member_start_day

where dt<=date_add('$do_date', -30)

and device_id not in (

select device_id

from hw.dws_member_start_day

where dt>date_add('$do_date', -30) and dt<='$do_date'

)

"

hive --hiveconf "hive.strict.checks.cartesian.product=false" -e "$sql"

3. Complete the calculation of the following indicators in the core transaction analysis

-- 1.1 First count daily orders and total sales,Only valid orders are counted:

drop table if exists dws.dws_trade_orders_day;

create table if not exists dws.dws_trade_orders_day(

day_dt string comment 'Date: yyyy-MM-dd',

day_cnt decimal comment 'Number of daily orders',

day_sum decimal comment 'Total daily orders'

) comment 'Daily Order Statistics Table';

insert overwrite table dws.dws_trade_orders_day

select dt, count(*) cnt, sum(totalmoney) sum_money

from (

select distinct orderid, dt, totalmoney from dwd.dwd_trade_orders where dataFlag='1'

) tmp

group by dt;

-- 1.2 Try Testing:

select * from dws.dws_trade_orders_day where dt between '2020-01-01' and '2020-12-31';

-- 2 Statistics of sales orders and total orders for each quarter in 2020

drop table if exists dws.dws_trade_orders_season;

create table if not exists dws.dws_trade_orders_season(

year string comment 'Particular year',

season_dt string comment 'Quarter: S1, S2, S3, S4',

season_cnt decimal comment 'Quarterly Order Number',

season_sum decimal comment 'Quarterly total orders'

) comment 'Quarterly Order Statistics Table';

with tmp as(

select substr(day_dt, 0, 4) as year,

case when substr(day_dt, 6, 2)='01' or substr(day_dt, 6, 2)='02' or substr(day_dt, 6, 2)='03' then "1"

when substr(day_dt, 6, 2)='04' or substr(day_dt, 6, 2)='05' or substr(day_dt, 6, 2)='06' then "2"

when substr(day_dt, 6, 2)='07' or substr(day_dt, 6, 2)='08' or substr(day_dt, 6, 2)='09' then "3"

when substr(day_dt, 6, 2)='10' or substr(day_dt, 6, 2)='11' or substr(day_dt, 6, 2)='12' then "4" end as season_dt,

day_cnt,

day_sum

from dws.dws_trade_orders_day

)

insert overwrite table dws.dws_trade_orders_season

select year,

season_dt,

sum(day_cnt),

sum(day_sum)

from tmp

group by year, season_dt;

-- 3. Statistics of sales orders and total orders per month in 2020

drop table if exists dws.dws_trade_orders_month;

create table if not exists dws.dws_trade_orders_month(

year string comment 'Particular year',

month_dt string comment 'Month',

month_cnt decimal comment 'Number of monthly orders',

month_sum decimal comment 'Total monthly orders'

) comment 'Monthly Order Statistics Table';

with tmp as(

select substr(day_dt, 0, 4) as year,

substr(day_dt, 6, 2) as month_dt,

day_cnt,

day_sum

from dws.dws_trade_orders_day

)

insert overwrite table dws.dws_trade_orders_month

select year,

month_dt,

sum(day_cnt),

sum(day_sum)

from tmp

group by year, month_dt;

- Statistics of sales orders and total orders per week (Monday to Sunday) in 2020

Use the udf function to get the number of weeks for the corresponding date:

package cn.lagou.dw.hive.udf;

import org.apache.hadoop.hive.ql.exec.UDF;

import org.apache.hadoop.io.Text;

import java.text.ParseException;

import java.util.Calendar;

import java.util.Date;

import java.text.SimpleDateFormat;

public class WeekOfYear extends UDF {

public int evaluate(final Text val) throws ParseException {

String datestr = val.toString();

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

Date dt = sdf.parse(datestr);

Calendar c1 = Calendar.getInstance();

c1.setFirstDayOfWeek(Calendar.MONDAY);

c1.setTime(dt);

int dayOfWeek = c1.get(Calendar.WEEK_OF_YEAR);

return dayOfWeek;

}

}

Next, the function is used to calculate the weekly table:

drop table if exists dws.dws_trade_orders_week;

create table if not exists dws.dws_trade_orders_week(

year string comment 'Particular year',

month_dt string comment 'Weeks',

month_cnt decimal comment 'Number of weekly orders',

month_sum decimal comment 'Total Weekly Orders'

) comment 'Weekly Order Statistics Table';

with tmp as(

select substr(day_dt, 0, 4) as year,

weekOfYear(day_dt) as week,

day_cnt,

day_sum

from dws.dws_trade_orders_day

)

insert overwrite table dws.dws_trade_orders_week

select year,

week,

sum(day_cnt),

sum(day_sum)

from tmp group by year, week;

- Statistics of the number of orders and total orders for national statutory holidays, rest days and working days in 2020

Create a date dimension table to count whether the day is a holiday or a rest day or a work day

drop table if exists dim.day_info; create table if not exists dim.day_info( year string comment 'Particular year', dt string comment 'date', is_holiday int comment '1: yes; 0: no', is_workDay int comment '1: yes; 0: no' ) comment 'Weekly Order Statistics Table'; -- Statutory Holiday Statistics select A.year, nvl(sum(A.day_cnt),0), nvl(sum(A.day_sum),0) from dws.dws_trade_orders_day group by year A join select dt from dim.day_info where is_holiday=1 B on A.dt = B.dt; -- Rest day statistics select A.year, nvl(sum(A.day_cnt),0), nvl(sum(A.day_sum),0) from dws.dws_trade_orders_day join select dt from dim.day_info where is_workDay=0 B on A.dt = B.dt group by year; -- Workday statistics select A.year, nvl(sum(A.day_cnt),0), nvl(sum(A.day_sum),0) from dws.dws_trade_orders_day join select dt from dim.day_info where is_workDay=1 B on A.dt = B.dt group by year;