1 Storage

1.1 Volume

Official website: https://kubernetes.io/docs/concepts/storage/volumes/

Based on the official documentation, a volume in Kubernetes can be roughly understood like a volume in Docker: you give the volume a name, and that name ties a path on the physical host to a path inside the container. In short, it is a persistence technique, and in Kubernetes the volume is declared on, and bound to, the Pod. It was already used in the YAML files of the previous article, if you are interested. But this approach still has a problem. Suppose my application runs on node A, so its data is persisted on node A as well. If the service is later migrated to node B, the data is still on node A, and the Pod on node B cannot see it.

1.2 Hands-on: hostPath volume

Define a Pod that contains two containers, both of which use the Pod's volume. The file is named volume-pod.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-pod
spec:
  containers:
    - name: nginx-container
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: volume-pod
          mountPath: /nginx-volume
    - name: busybox-container
      image: busybox
      command: ['sh', '-c', 'echo The app is running! && sleep 3600']
      volumeMounts:
        - name: volume-pod
          mountPath: /busybox-volume
  volumes:
    - name: volume-pod
      hostPath:
        path: /tmp/volume-pod
```

(1) Create resource

kubectl apply -f volume-pod.yaml

(2) Check the status of the Pod

kubectl get pods -o wide

(3) Go to the worker node where the Pod is running

Check that the containers exist:

docker ps | grep volume

Enter each container to check that its mount directory was created: you will find /nginx-volume in the nginx container and /busybox-volume in the busybox container

docker exec -it <container-id> sh

(4) Check whether the /etc/hosts file is the same in both containers of the Pod, then compare the contents of /nginx-volume and /busybox-volume with /tmp/volume-pod on the host

(5) Therefore, storage and network settings should not be modified at the container level; they belong to the Pod
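As a small variant of volume-pod.yaml, the hostPath source also accepts a `type` field. The sketch below assumes you want the kubelet to create /tmp/volume-pod on the node if it does not exist yet (`DirectoryOrCreate`); the default empty type performs no check before mounting. Only the `volumes` section under `spec` changes:

```yaml
# Fragment: replaces the volumes section under spec in volume-pod.yaml
volumes:
  - name: volume-pod
    hostPath:
      path: /tmp/volume-pod
      # Create the directory (mode 0755) on the node if it is missing
      type: DirectoryOrCreate
```

This does not remove the node-migration problem described in 1.1: the directory is still created on whichever node happens to run the Pod.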

1.3 PersistentVolume

Official website: https://kubernetes.io/docs/concepts/storage/persistent-volumes/

This is the approach recommended for production environments.

The volume approach above maps a directory in the Pod to the physical machine it runs on, and as I said, this has a problem: once the workload migrates to another server, the Pod and its persisted data are no longer on the same physical host. How can this be solved? The idea is simple: instead of persisting to whichever host the Pod runs on, persist to a fixed storage host, and make that host's disks highly available. Then, no matter which node the Pod drifts to, it can always find the same persistent storage. The YAML file is as follows:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 5Gi              # Storage space size
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce           # Can be mounted read-write by a single node
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: slow
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    path: /tmp                # Directory on the remote server
    server: 172.17.0.2        # Remote NFS server
```

Put simply, a PV is a resource in the cluster. Like a Volume, it is implemented as a volume plugin, but its lifecycle is independent of any Pod that uses it, and it encapsulates the implementation details of the underlying storage (NFS, cloud disks, and so on).

1.4 PersistentVolumeClaim

Official website: https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistentvolumeclaims

Once a PV exists, how does a Pod use it? The convenient way is to define a PVC that binds to the PV, and then give the PVC to the Pod:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 8Gi
  storageClassName: slow
  selector:
    matchLabels:
      release: "stable"
    matchExpressions:
      - {key: environment, operator: In, values: [dev]}
```

Put simply, a PVC looks for a PV that meets its requirements (**matched mainly by size and access mode**, plus storageClassName, volumeMode and any label selector). The two are bound one-to-one, and both then show the status Bound. In other words, the PVC states the size and access mode it needs from a PV, and the Pod then uses the PVC directly.

1.5 How to use a PVC in a Pod
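To make the matching rules concrete: myclaim above asks for 8Gi, ReadWriteOnce, Filesystem mode, class slow, and the labels release=stable and environment=dev, so the 5Gi my-pv from 1.3 would not satisfy it (too small and unlabeled). Below is a sketch of a PV that would be eligible; the name my-pv-10g, the 10Gi size, and reusing the NFS server from 1.3 are illustrative assumptions:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv-10g             # hypothetical name
  labels:
    release: "stable"         # satisfies selector.matchLabels
    environment: dev          # satisfies matchExpressions (environment In [dev])
spec:
  capacity:
    storage: 10Gi             # at least the 8Gi requested; a larger PV is acceptable
  volumeMode: Filesystem      # must match the claim's volumeMode
  accessModes:
    - ReadWriteOnce           # must include the access mode the claim requests
  storageClassName: slow      # must equal the claim's storageClassName
  nfs:
    path: /tmp
    server: 172.17.0.2
```

If myclaim binds to this PV, it gets the whole 10Gi volume; because binding is one-to-one, the extra 2Gi cannot be claimed by another PVC.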

Official website: https://kubernetes.io/docs/concepts/storage/persistent-volumes/#claims-as-volumes

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mypod
spec:
  containers:
    - name: myfrontend
      image: nginx
      volumeMounts:
        - mountPath: "/var/www/html"
          name: mypd
  volumes:
    - name: mypd
      persistentVolumeClaim:
        claimName: myclaim
```

1.6 Hands-on: using a PVC in a Pod

Scenario: persistent storage for nginx

- Shared storage uses NFS, hosted on the master node in this example

- Create the PV and PVC

- Use the PVC in the nginx Pod

1.6.1 Set up NFS on the master node

Set up an NFS server on the master node; the shared directory is /nfs/data

1) Use the master node as the NFS server, so install NFS on it

yum install -y nfs-utils

Create the NFS directories

mkdir -p /nfs/data/

mkdir -p /nfs/data/mysql

Grant permissions

chmod -R 777 /nfs/data

Edit the exports file

vi /etc/exports

/nfs/data *(rw,no_root_squash,sync)

Make the configuration effective

exportfs -r

Check that the export is in effect

exportfs

Start the rpcbind and nfs services

systemctl restart rpcbind && systemctl enable rpcbind

systemctl restart nfs && systemctl enable nfs

Check the RPC service registration

rpcinfo -p localhost

Test with showmount

showmount -e master-ip

2) Install the NFS client on all nodes

yum -y install nfs-utils

systemctl start nfs && systemctl enable nfs

1.6.2 Create the PV, PVC and nginx

(1) Create the required directory on the NFS server

mkdir -p /nfs/data/nginx

(2) Define the PV, PVC and nginx in one YAML file; I named it nginx-pv-demo.yaml:

```yaml
# Define PV
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nginx-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 2Gi
  nfs:
    path: /nfs/data/nginx
    server: 121.41.10.13
---
# Define PVC for consumption of PV
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
---
# Define a Deployment whose Pod uses the PVC
apiVersion: apps/v1            # apps/v1beta1 was removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - image: nginx
          name: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: nginx-persistent-storage
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nginx-persistent-storage
          persistentVolumeClaim:
            claimName: nginx-pvc
```

(3) Create the resources from the YAML file and check them

kubectl apply -f nginx-pv-demo.yaml

kubectl get pv,pvc

kubectl get pods -o wide
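If `kubectl get pv,pvc` shows nginx-pvc stuck in Pending, one possible cause (an assumption about your cluster) is a default StorageClass: a PVC that omits storageClassName is assigned the default class, and then it no longer matches nginx-pv, which has no class. `kubectl get storageclass` marks the default class with "(default)". Setting storageClassName to an empty string asks explicitly for a PV without a class; here is a sketch of the PVC from nginx-pv-demo.yaml with only that change:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  # Empty string: bind only to PVs without a class (such as nginx-pv)
  # and skip default StorageClass assignment
  storageClassName: ""
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
```

Since a PVC's spec cannot simply be edited in place, re-create the claim rather than patching it.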

(4) Test persistent storage

- Create a file 1.html in /nfs/data/nginx and write some content into it

- Run kubectl get pods -o wide to get the IP address of the nginx Pod

- curl nginx-pod-ip/1.html

- Run kubectl exec -it nginx-pod -- bash and look in the /usr/share/nginx/html directory

- kubectl delete pod nginx-pod

- Look up the IP of the new nginx Pod created by the Deployment and visit nginx-pod-ip/1.html again; the file is still there because it lives on NFS

1.7 StorageClass

Managing PVs by hand is still rather clumsy, so let's look at a more convenient approach.

Official website: https://kubernetes.io/docs/concepts/storage/storage-classes/

GitHub: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs

In short, the official documentation describes a StorageClass as a declaration of a storage plugin that is used to create PVs automatically. Put simply, it is a template for creating PVs, with two important parts: the PV attributes, and the plugin (provisioner) needed to create the PV. A PVC can then be matched to a PV by "class": the storageClassName attribute of a PV identifies which class the PV belongs to.

The following example is taken from the official documentation:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
reclaimPolicy: Retain
allowVolumeExpansion: true
mountOptions:
  - debug
volumeBindingMode: Immediate
```

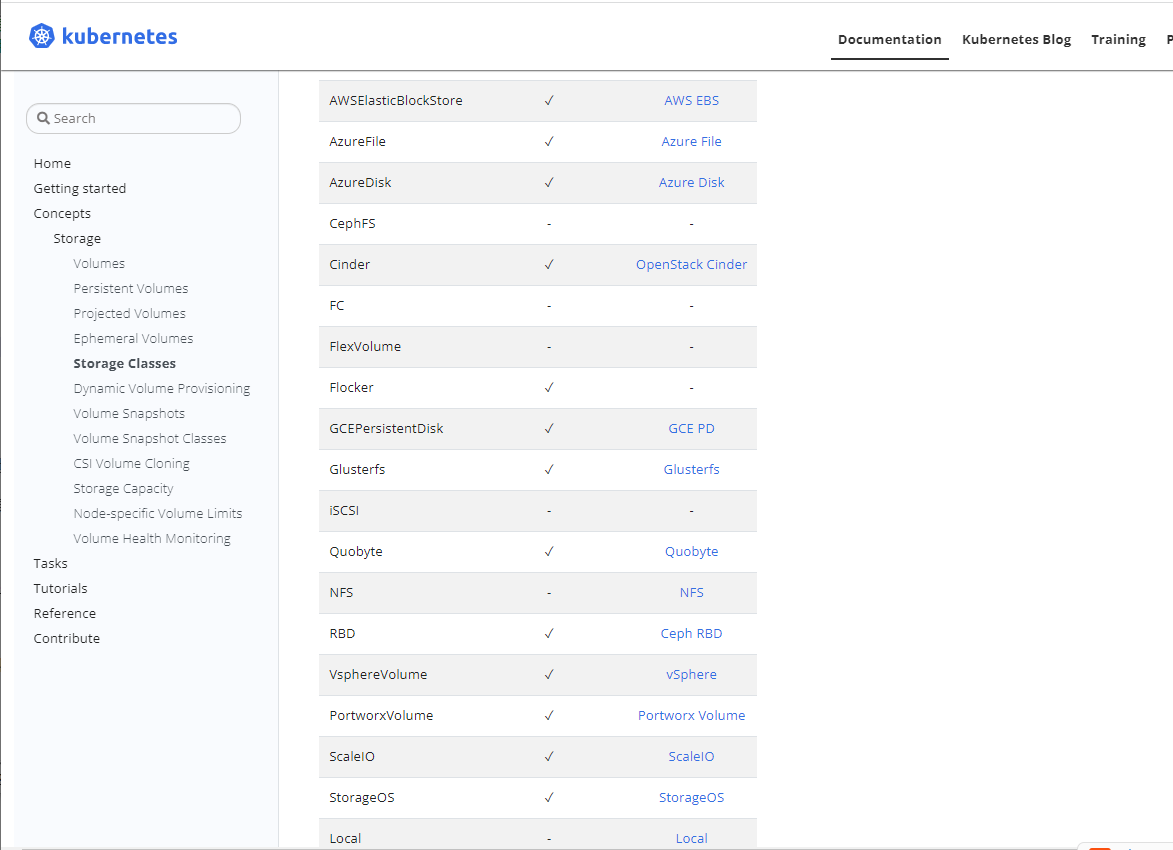

The official documentation is too long for me to interpret item by item, so please read it yourself. It does make one very important point: a StorageClass can supply PVs dynamically because of its provisioner; this is what Dynamic Provisioning means. However, Kubernetes does not ship a built-in provisioner for NFS, so you have to deploy one yourself. Since we are using NFS here, that might look like a dead end, but there is no need to worry: the community has already filled this gap. In the hands-on section below, I use an NFS provisioner published on GitHub to put the theory above into practice.

1.8 Hands-on: StorageClass

GitHub: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs

(1) Prepare the NFS server (and make sure NFS works properly), then create the directory needed for persistence

mkdir -p /nfs/data/ghy

(2) Creating resources requires authenticating to the API Server, so the provisioner needs an account with the right permissions to talk to it. Create the RBAC resources from rbac.yaml:

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-provisioner
  apiGroup: rbac.authorization.k8s.io
```

kubectl apply -f rbac.yaml

(3) Create the provisioner from deployment.yaml. It contains the address and export path of the NFS server we want to access; change the IP and the directory to your own. Looking at this configuration, it is easy to see that this Deployment is the component that interacts with the NFS server:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
---
kind: Deployment
apiVersion: apps/v1                # extensions/v1beta1 was removed in Kubernetes 1.16
metadata:
  name: nfs-provisioner
spec:
  replicas: 1
  selector:                        # required by apps/v1
    matchLabels:
      app: nfs-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-provisioner
    spec:
      serviceAccountName: nfs-provisioner
      containers:
        - name: nfs-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/open-ali/nfs-client-provisioner
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: example.com/nfs
            - name: NFS_SERVER
              value: 192.168.0.21
            - name: NFS_PATH
              value: /nfs/data/ghy
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.0.21
            path: /nfs/data/ghy
```

kubectl apply -f deployment.yaml

(4) Create the StorageClass from class.yaml

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: example-nfs
provisioner: example.com/nfs   # must match PROVISIONER_NAME in deployment.yaml
```

kubectl apply -f class.yaml
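Optionally, class.yaml can mark example-nfs as the cluster's default StorageClass, so that PVCs which omit storageClassName are provisioned by it too. A sketch, assuming no other class is already the default (`kubectl get storageclass` shows the current default with "(default)"):

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: example-nfs
  annotations:
    # PVCs created without storageClassName will use this class
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: example.com/nfs
```

Keep in mind that this changes the static demo in 1.6: a claim like nginx-pvc created without storageClassName would be handed to example-nfs instead of binding to nginx-pv.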

(5) After the previous steps, we no longer have to create PVs by hand: the StorageClass generates them for us. Next we create a PVC. Note that a plain PVC would not bind automatically here, because no PV exists in advance; the PV is only created by the provisioner when the PVC names the StorageClass. Create the PVC from my-pvc.yaml:

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
  # This name must match the StorageClass created above: we want the
  # StorageClass to create the PV, so the PVC has to reference it and
  # let it create the PV dynamically
  storageClassName: example-nfs
```

kubectl apply -f my-pvc.yaml

kubectl get pvc

(6) With the PVC and its dynamically created PV available, the Pod is defined the same way as before. Create it from nginx-pod.yaml:

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: nginx
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: my-pvc
          mountPath: "/usr/ghy"
  restartPolicy: "Never"
  volumes:
    - name: my-pvc
      persistentVolumeClaim:
        claimName: my-pvc
```

kubectl apply -f nginx-pod.yaml

kubectl exec -it nginx -- bash

Then create or modify a file in the mount directory and check that the change shows up under /nfs/data/ghy on the NFS server (in the subdirectory the provisioner created for this PV):

cd /usr/ghy

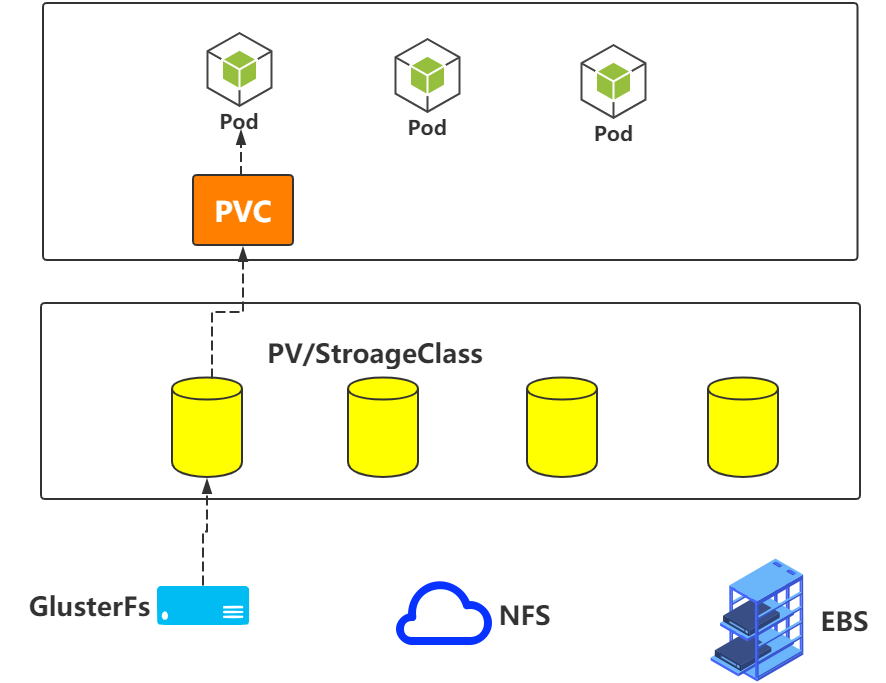

In fact, the above process can be summarized with the following picture

1.9 PV status and reclaim policy

PV status

- Available: the PV is not yet bound to any PVC

- Bound: the PV is bound to a PVC

- Released: the bound PVC has been deleted, but the PV has not yet been reclaimed; the administrator has to clean it up manually

- Failed: automatic reclamation of the PV failed

PV reclaim policy

- Retain: when the PVC is deleted, the PV is not deleted with it; it changes to the Released status and waits for the administrator to clean it up manually

- Recycle: deprecated in newer versions of Kubernetes; dynamic provisioning is recommended instead

- Delete: when the PVC is deleted, the PV is deleted with it, and so is the actual storage the PV points to

Note: Currently, only NFS and HostPath support the Recycle policy. AWS EBS, GCE PD, Azure Disk and Cinder support Delete policy
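For dynamically provisioned volumes, the policy comes from the StorageClass: PVs created through a class inherit its reclaimPolicy, which defaults to Delete, whereas manually created PVs default to Retain. A sketch of class.yaml from 1.8 adjusted so that PVs created by example-nfs are kept (and go to Released) after their PVC is deleted, assuming the same provisioner:

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: example-nfs
provisioner: example.com/nfs
# PVs provisioned by this class get persistentVolumeReclaimPolicy: Retain
# (when omitted, a StorageClass uses Delete)
reclaimPolicy: Retain
```

reclaimPolicy cannot be changed on an existing StorageClass, so the class has to be deleted and re-created; PVs that were already provisioned keep the policy they were created with.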