01 Preface

Hello everyone. This is the seventh article in the iOS/Android audio and video development series. The AVPlayer project code is hosted on GitHub, and you can get the project address by sending a message to the WeChat official account (GeekDev).

In the previous article, "Playing Video Frames with OpenGL ES", we saw how to use GLSurfaceView to render decoded video to the screen, but our player cannot play audio yet. In this article, we will use AudioTrack to play the decoded audio data (PCM).

Contents of this issue:

- PCM introduction

- AudioTrack API introduction

- Decoding and playing audio tracks using MediaCodec

- Concluding remarks

02 PCM introduction

PCM (pulse code modulation) is a method of converting an analog signal into a digital signal. A computer can only process digital signals, that is, sequences of binary values, so the analog signal picked up by a microphone has to pass through an analog-to-digital (A/D) converter first. PCM is the most common form of A/D conversion.

A/D conversion involves sampling, quantization and coding.

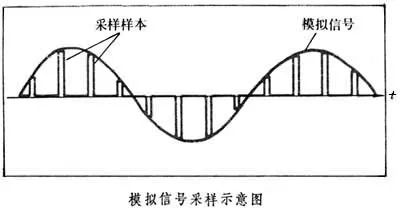

Sampling: storage space is limited, so we cannot store the continuous analog signal itself. Instead, we measure it at regular intervals and store those measurements. The sampling frequency (sampling rate) is the number of samples taken per second. The higher the sampling rate, the more faithfully the original sound can be reconstructed. This is why an analog signal is called a continuous signal and a digital signal is called a discrete signal.

According to the Nyquist sampling theorem, if the sampling frequency is more than twice the highest frequency contained in the audio signal, the original signal can be reconstructed from the samples without loss.
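To make the Nyquist condition concrete, the sketch below checks whether a sampling rate is high enough and computes the frequency at which a pure tone shows up after sampling. It assumes an ideal sampler with no anti-aliasing filter; `NyquistDemo` and its method names are illustrative only.

```java
final class NyquistDemo {
    /** True when the sample rate is more than twice the highest signal frequency. */
    static boolean satisfiesNyquist(double maxSignalHz, double sampleRateHz) {
        return sampleRateHz > 2.0 * maxSignalHz;
    }

    /** Frequency at which a pure tone appears after ideal sampling (no anti-aliasing filter). */
    static double apparentFrequency(double toneHz, double sampleRateHz) {
        double folded = toneHz % sampleRateHz;           // sampling cannot tell f from f + k * fs
        return Math.min(folded, sampleRateHz - folded);  // fold the result into [0, fs / 2]
    }

    public static void main(String[] args) {
        System.out.println(satisfiesNyquist(20_000, 44_100));
        System.out.println(apparentFrequency(1_000, 44_100));
        System.out.println(apparentFrequency(30_000, 44_100));
    }
}
```

A 1 kHz tone sampled at 44.1 kHz keeps its frequency, while a 30 kHz tone lies above half the sampling rate and folds down to 14.1 kHz, which is the distortion the theorem warns about.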

Since human hearing covers roughly 20 Hz to 20 kHz, the sampling frequency is generally set above 40 kHz so that the audible range is not distorted. Commonly used sampling frequencies include 16 kHz, 22.05 kHz, 37.8 kHz, 44.1 kHz and 48 kHz. On Android, 44.1 kHz is currently the only sampling frequency guaranteed to work on all devices.

Quantization: sampling turns the analog signal into a discrete signal, and quantization turns that discrete signal into a digital one by converting each sampled value into a binary number. The quantization depth (bit depth) is the number of bits used to represent each sample. Common audio bit depths are 4, 8, 16 and 32 bits.
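As a rough illustration of what the bit depth means, the sketch below counts the distinct values available at a given depth and rounds a value in [-1, 1] to the nearest representable step. The `Quantizer` class is hypothetical, and it assumes a simple symmetric signed integer grid.

```java
final class Quantizer {
    /** Number of distinct values a sample can take at the given bit depth. */
    static long levels(int bits) {
        return 1L << bits;
    }

    /** Rounds a value in [-1, 1] to the nearest step of a signed integer grid, then maps it back. */
    static double quantize(double value, int bits) {
        long maxCode = (1L << (bits - 1)) - 1;   // 127 for 8 bits, 32767 for 16 bits
        return (double) Math.round(value * maxCode) / maxCode;
    }

    public static void main(String[] args) {
        double original = 0.3;
        for (int bits : new int[] {8, 16}) {
            double error = Math.abs(original - quantize(original, bits));
            System.out.println(bits + " bits: " + levels(bits) + " levels, error " + error);
        }
    }
}
```

Going from 8 to 16 bits raises the number of levels from 256 to 65536, so the rounding error for the same input value becomes much smaller.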

The quantization depth affects sound quality. The more bits per sample, the closer the quantized waveform is to the original, the higher the sound quality, and the more storage is required; the fewer bits, the lower the sound quality and the less storage is required. CD audio uses 16 bits, and mobile telephony uses 8 bits.
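Sampling and quantization can be sketched together by generating PCM for a sine tone. This is only an illustration: `SinePcm` is a hypothetical helper, the output is assumed to be mono, signed 16-bit, little-endian, and a real recording would come from an A/D converter rather than from `Math.sin`.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

final class SinePcm {
    /** Samples a sine tone and quantizes each sample to signed 16-bit little-endian PCM (mono). */
    static byte[] sine16(double toneHz, int sampleRateHz, double seconds) {
        int sampleCount = (int) Math.round(sampleRateHz * seconds);
        ByteBuffer pcm = ByteBuffer.allocate(sampleCount * 2).order(ByteOrder.LITTLE_ENDIAN);
        for (int n = 0; n < sampleCount; n++) {
            double t = (double) n / sampleRateHz;                   // sampling: instants are 1 / fs apart
            double amplitude = Math.sin(2 * Math.PI * toneHz * t);  // stand-in for the analog value in [-1, 1]
            pcm.putShort((short) Math.round(amplitude * Short.MAX_VALUE)); // quantization to 16 bits
        }
        return pcm.array();
    }

    public static void main(String[] args) {
        byte[] oneSecond = sine16(440, 44_100, 1.0);
        System.out.println(oneSecond.length + " bytes for one second of 16-bit mono audio");
    }
}
```

One second at 44.1 kHz yields 44100 samples of 2 bytes each, which shows directly how the sampling rate and bit depth drive the storage cost.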

In addition, a WAV file usually contains plain PCM data. To play a raw PCM stream, we must already know its sampling rate, channel count, bit depth and so on. WAV simply stores this description in a header at the start of the file, which is why a WAV file can be played directly.
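A minimal sketch of that idea, assuming the canonical 44-byte header for uncompressed integer PCM (real WAV files may carry additional chunks). `WavHeader` and `wrap` are illustrative names, not part of any library.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

final class WavHeader {
    /** Prepends the canonical 44-byte RIFF/WAVE header to raw integer PCM data. */
    static byte[] wrap(byte[] pcm, int sampleRateHz, int channels, int bitsPerSample) {
        int blockAlign = channels * bitsPerSample / 8;  // bytes per frame (one sample per channel)
        int byteRate = sampleRateHz * blockAlign;       // bytes per second
        ByteBuffer wav = ByteBuffer.allocate(44 + pcm.length).order(ByteOrder.LITTLE_ENDIAN);
        wav.put("RIFF".getBytes(StandardCharsets.US_ASCII));
        wav.putInt(36 + pcm.length);                    // number of bytes that follow this field
        wav.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        wav.put("fmt ".getBytes(StandardCharsets.US_ASCII));
        wav.putInt(16);                                 // size of the fmt chunk for plain PCM
        wav.putShort((short) 1);                        // format tag 1 = uncompressed integer PCM
        wav.putShort((short) channels);
        wav.putInt(sampleRateHz);
        wav.putInt(byteRate);
        wav.putShort((short) blockAlign);
        wav.putShort((short) bitsPerSample);
        wav.put("data".getBytes(StandardCharsets.US_ASCII));
        wav.putInt(pcm.length);                         // size of the PCM payload
        wav.put(pcm);
        return wav.array();
    }
}
```

The header carries exactly the parameters a player needs (sampling rate, channel count, bit depth), and the PCM bytes follow it unchanged.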

PCM is the format we meet most often in audio processing. Audio processing generally operates on the PCM stream, for example volume adjustment, voice changing, pitch shifting and similar effects.
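Volume adjustment is the simplest of these operations. The sketch below scales every sample by a gain factor; it assumes signed 16-bit little-endian PCM, and `PcmGain` is a hypothetical helper rather than an Android API.

```java
final class PcmGain {
    /** Scales signed 16-bit little-endian PCM in place, clamping to avoid wrap-around. */
    static void applyGain(byte[] pcm, double gain) {
        for (int i = 0; i + 1 < pcm.length; i += 2) {
            int sample = (short) ((pcm[i] & 0xFF) | (pcm[i + 1] << 8));  // reassemble one sample
            long scaled = Math.round(sample * gain);
            int clamped = (int) Math.max(Short.MIN_VALUE, Math.min(Short.MAX_VALUE, scaled));
            pcm[i] = (byte) clamped;             // low byte
            pcm[i + 1] = (byte) (clamped >> 8);  // high byte
        }
    }
}
```

Clamping matters when the gain is above 1: without it, a sample that exceeds the 16-bit range would wrap around and produce a loud click instead of merely clipping.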

03 AudioTrack API introduction

On Android, the first choice for playing an audio file is usually MediaPlayer. With MediaPlayer you do not need to care about the file's format or decode it yourself; the API it provides is enough to build a simple audio player.

AudioTrack is another way to play audio (SoundPool is a third, if you are interested). Unlike MediaPlayer, AudioTrack can only play PCM data.

AudioTrack API overview:

1. AudioTrack initialization

    /**
     * Class constructor.
     *
     * @param streamType the stream type:
     *   {@link AudioManager#STREAM_VOICE_CALL} voice call,
     *   {@link AudioManager#STREAM_SYSTEM} system sounds such as the low-battery warning,
     *   {@link AudioManager#STREAM_RING} incoming-call ring,
     *   {@link AudioManager#STREAM_MUSIC} music playback,
     *   {@link AudioManager#STREAM_ALARM} alarm,
     *   {@link AudioManager#STREAM_NOTIFICATION} notification
     * @param sampleRateInHz the sampling rate in Hz
     * @param channelConfig the channel configuration:
     *   {@link AudioFormat#CHANNEL_OUT_MONO} mono,
     *   {@link AudioFormat#CHANNEL_OUT_STEREO} stereo
     * @param audioFormat the sample format:
     *   {@link AudioFormat#ENCODING_PCM_16BIT},
     *   {@link AudioFormat#ENCODING_PCM_8BIT},
     *   {@link AudioFormat#ENCODING_PCM_FLOAT}
     * @param bufferSizeInBytes the buffer size in bytes
     * @param mode the playback mode:
     *   {@link #MODE_STATIC} static mode, all data is written with a single write call; suitable for small files,
     *   {@link #MODE_STREAM} streaming mode, data is written in batches with repeated write calls; suitable for large files
     */
    public AudioTrack(int streamType, int sampleRateInHz, int channelConfig, int audioFormat, int bufferSizeInBytes, int mode)

For the bufferSizeInBytes parameter, call AudioTrack.getMinBufferSize to get the minimum buffer size for the chosen sampling rate, channel configuration and sample format. The value passed to the constructor is generally an integer multiple of that minimum.
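To get a feel for what a given buffer size means, it helps to convert bytes into playback time. The sketch below does that in plain Java; `BufferMath` is a hypothetical helper, and the minimum size used in `main` is a made-up value standing in for whatever `AudioTrack.getMinBufferSize` returns on a real device.

```java
final class BufferMath {
    /** Bytes per frame: one sample for every channel. */
    static int frameSizeBytes(int channels, int bitsPerSample) {
        return channels * bitsPerSample / 8;
    }

    /** How many milliseconds of audio a buffer of the given size holds. */
    static double bufferMillis(int bufferSizeInBytes, int sampleRateHz, int channels, int bitsPerSample) {
        int frames = bufferSizeInBytes / frameSizeBytes(channels, bitsPerSample);
        return frames * 1000.0 / sampleRateHz;
    }

    public static void main(String[] args) {
        int minBufferSize = 7_056;           // hypothetical; on a device this comes from AudioTrack.getMinBufferSize
        int bufferSize = minBufferSize * 2;  // an integer multiple of the minimum
        System.out.println(bufferMillis(bufferSize, 44_100, 2, 16) + " ms");
    }
}
```

A larger multiple gives more protection against underruns when the decoder stalls, at the cost of more latency between writing data and hearing it.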

2. Write data

    /**
     * @param audioData the array that holds the data to play
     * @param offsetInBytes the offset, in bytes, in audioData where the data to write starts
     * @param sizeInBytes the number of bytes to write from audioData, starting at the offset
     */
    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes)

3. Start playing

public void play()

If the AudioTrack was created in MODE_STATIC, you must make sure the write method has been called before calling play.

4. Pause playback

public void pause()

Pauses playback. Data that has not been played yet is not discarded; when you call play again, playback continues from where it stopped.

5. Stop playing

public void stop()

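Stops playback. In MODE_STREAM, the data already written to the buffer is played to the end before playback stops. For an immediate stop, call pause followed by flush, which discards the data that has not been played.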

6. Flush buffered data

public void flush()

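Flushes the data currently queued for playback. Data that has been written but not yet played is discarded and the buffer is emptied. The call has no effect unless the track is paused or stopped and was created in MODE_STREAM.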

04 Decoding and playing audio tracks using MediaCodec

To play an audio track, we first have to decode it. Earlier articles covered video decoding, so if you have read the AVPlayer demo you already know how to create a video track decoder. To decode an audio track instead, we only need to change the MIME type. The audio track decoder is created as follows:

    private void doDecoder() {
        // step 1: create a media extractor
        MediaExtractor extractor = new MediaExtractor();

        // step 2: point the extractor at the media file
        Uri videoPathUri = Uri.parse("android.resource://" + getPackageName() + "/" + R.raw.demo_video);
        try {
            extractor.setDataSource(this, videoPathUri, null);
        } catch (IOException e) {
            e.printStackTrace();
        }

        // step 3: find the first track of the wanted type
        // number of tracks in the media file (typically video, audio, subtitles, etc.)
        int trackCount = extractor.getTrackCount();
        // MIME prefix of the track type to extract; here, the audio track
        String extractMimeType = "audio/";
        MediaFormat trackFormat = null;
        // index of the matching track; it must be selected before MediaExtractor can read samples
        int trackID = -1;
        for (int i = 0; i < trackCount; i++) {
            MediaFormat format = extractor.getTrackFormat(i);
            String mime = format.getString(MediaFormat.KEY_MIME);
            if (mime != null && mime.startsWith(extractMimeType)) {
                trackFormat = format;
                trackID = i;
                break;
            }
        }

        MediaCodec mediaCodec = null;
        // only continue if the media file contains an audio track
        if (trackID != -1) {
            // step 4: select the audio track
            extractor.selectTrack(trackID);

            // step 5: create the decoder from the track's MediaFormat
            try {
                mediaCodec = MediaCodec.createDecoderByType(trackFormat.getString(MediaFormat.KEY_MIME));
                mediaCodec.configure(trackFormat, null, null, 0);
                mediaCodec.start();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // skip the loop entirely if no decoder could be created
        while (mRuning && mediaCodec != null) {
            // step 6: feed compressed data to the decoder
            boolean ret = feedInputBuffer(extractor, mediaCodec);
            // step 7: drain decoded data from the decoder
            boolean decRet = drainOutputBuffer(mediaCodec);
            if (!ret && !decRet) break;
        }

        // step 8: release resources
        // release the extractor; it cannot be used afterwards
        extractor.release();
        // release the decoder
        if (mediaCodec != null) mediaCodec.release();

        new Handler(Looper.getMainLooper()).post(new Runnable() {
            @Override
            public void run() {
                mPlayButton.setEnabled(true);
                mInfoTextView.setText("Decoding complete");
            }
        });
    }

When decoding audio, we set extractMimeType to "audio/"; the rest of the code is the same as for decoding video.
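The track selection boils down to a prefix match on each track's MIME type. The sketch below isolates that step in plain Java, using a list of strings in place of the MediaFormat objects returned by MediaExtractor; `TrackPicker` and the sample MIME values are illustrative assumptions.

```java
import java.util.Arrays;
import java.util.List;

final class TrackPicker {
    /** Returns the index of the first MIME type with the given prefix, or -1 if none matches. */
    static int firstTrackWithPrefix(List<String> trackMimeTypes, String mimePrefix) {
        for (int i = 0; i < trackMimeTypes.size(); i++) {
            String mime = trackMimeTypes.get(i);
            if (mime != null && mime.startsWith(mimePrefix)) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> tracks = Arrays.asList("video/avc", "audio/mp4a-latm");
        System.out.println(firstTrackWithPrefix(tracks, "audio/"));
        System.out.println(firstTrackWithPrefix(tracks, "video/"));
    }
}
```

Returning -1 when nothing matches lets the caller detect a file without an audio track before it tries to create a decoder.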

Then, when dequeueOutputBuffer returns INFO_OUTPUT_FORMAT_CHANGED, we read the format of the decoded audio. That MediaFormat provides enough information to initialize AudioTrack.

    // Drain decoded audio data from MediaCodec
    private boolean drainOutputBuffer(MediaCodec mediaCodec) {
        if (mediaCodec == null) return false;

        final MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
        int outIndex = mediaCodec.dequeueOutputBuffer(info, 0);

        switch (outIndex) {
            case MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED:
                return true;
            case MediaCodec.INFO_TRY_AGAIN_LATER:
                return true;
            case MediaCodec.INFO_OUTPUT_FORMAT_CHANGED: {
                MediaFormat outputFormat = mediaCodec.getOutputFormat();
                int sampleRate = 44100;
                if (outputFormat.containsKey(MediaFormat.KEY_SAMPLE_RATE))
                    sampleRate = outputFormat.getInteger(MediaFormat.KEY_SAMPLE_RATE);
                int channelConfig = AudioFormat.CHANNEL_OUT_MONO;
                if (outputFormat.containsKey(MediaFormat.KEY_CHANNEL_COUNT))
                    channelConfig = outputFormat.getInteger(MediaFormat.KEY_CHANNEL_COUNT) == 1 ? AudioFormat.CHANNEL_OUT_MONO : AudioFormat.CHANNEL_OUT_STEREO;
                // default to 16-bit PCM unless the decoder reports a bit width
                int audioFormat = AudioFormat.ENCODING_PCM_16BIT;
                if (outputFormat.containsKey("bit-width"))
                    audioFormat = outputFormat.getInteger("bit-width") == 8 ? AudioFormat.ENCODING_PCM_8BIT : AudioFormat.ENCODING_PCM_16BIT;
                mBufferSize = AudioTrack.getMinBufferSize(sampleRate, channelConfig, audioFormat) * 2;
                mAudioTrack = new AudioTrack(AudioManager.STREAM_MUSIC, sampleRate, channelConfig, audioFormat, mBufferSize, AudioTrack.MODE_STREAM);
                mAudioTrack.play();
                return true;
            }
            default: {
                // any other negative value is not a buffer index
                if (outIndex < 0) return true;
                if (info.size > 0 && mAudioTrack != null) {
                    ByteBuffer outputBuffer = mediaCodec.getOutputBuffers()[outIndex];
                    outputBuffer.position(info.offset);
                    outputBuffer.limit(info.offset + info.size);
                    byte[] audioData = new byte[info.size];
                    outputBuffer.get(audioData);
                    // Write the decoded audio data. audioData starts at index 0, so the array offset is 0;
                    // in MODE_STREAM, write blocks until all bytes have been queued.
                    mAudioTrack.write(audioData, 0, audioData.length);
                }
                // release the buffer back to the decoder, whether or not it carried data
                mediaCodec.releaseOutputBuffer(outIndex, false);
                // the buffer flagged END_OF_STREAM is the last one
                return (info.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) == 0;
            }
        }
    }

Once we have obtained the MediaFormat through INFO_OUTPUT_FORMAT_CHANGED and initialized AudioTrack, we can write the decoded audio data with the write method.
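One detail that is easy to get wrong when writing decoded data is the difference between the offset inside the codec's buffer and the offset inside the copied array. The plain-Java sketch below copies the valid region of a ByteBuffer into a fresh array; `BufferCopy` is a hypothetical helper, and the sample bytes are made up.

```java
import java.nio.ByteBuffer;

final class BufferCopy {
    /** Copies the valid region [offset, offset + size) of a buffer into a new array. */
    static byte[] copyRegion(ByteBuffer buffer, int offset, int size) {
        ByteBuffer view = buffer.duplicate();  // shares content but has its own position and limit
        view.clear();                          // position = 0, limit = capacity (content is untouched)
        view.position(offset);
        view.limit(offset + size);
        byte[] out = new byte[size];
        view.get(out);                         // out[0] now holds the byte that was at `offset`
        return out;
    }

    public static void main(String[] args) {
        ByteBuffer codecBuffer = ByteBuffer.wrap(new byte[] {9, 9, 1, 2, 3, 9});
        byte[] audioData = copyRegion(codecBuffer, 2, 3);
        System.out.println(java.util.Arrays.toString(audioData));
    }
}
```

Because the copy always starts at index 0 of the new array, a call such as write(audioData, 0, audioData.length) passes 0 as the array offset, not the offset reported for the codec buffer.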

See DemoAudioTrackPlayerActivity for details

Article reprint:

Play audio tracks using AudioTrack