What is the Pod lifecycle

Like a stand-alone application container, a Pod is considered a relatively temporary (rather than long-lived) entity. A Pod is created, assigned a unique ID (UID), and scheduled to a node. It stays on that node until it terminates (subject to its restartPolicy) or is deleted.

If a node dies, the Pods scheduled to that node are marked for deletion after a timeout period.

A Pod has no self-healing ability of its own. If a Pod is scheduled to a node that later fails, or if the scheduling operation itself fails, the Pod is deleted. Similarly, a Pod does not survive eviction caused by node resource exhaustion or node maintenance. Kubernetes uses a higher-level abstraction called a controller to manage these relatively disposable Pod instances.

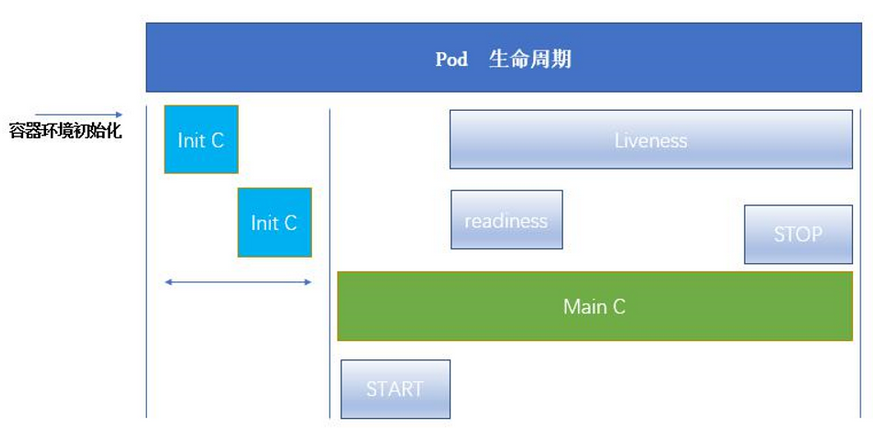

The period from the creation of a Pod object to its end is called the Pod's lifecycle. It mainly consists of the following stages:

1. Pod creation

2. Running the init containers (zero or more; optional)

3. Running the main containers

During this stage, hook functions run after a container starts (postStart) and before a container terminates (preStop).

During this stage, the liveness probe and readiness probe of each container are also performed.

4. Pod termination
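As a minimal sketch of the hook functions in step 3, the manifest below declares both hooks on a single container. The Pod name, the `nginx` image, and the commands are assumptions chosen for illustration; they are not part of the original example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-demo              # hypothetical name
spec:
  containers:
  - name: main
    image: nginx                    # assumed image; any image with a shell would do
    lifecycle:
      postStart:                    # runs right after the container is created
        exec:
          command: ["sh", "-c", "echo started > /tmp/poststart"]
      preStop:                      # runs before the container is terminated
        exec:
          command: ["sh", "-c", "nginx -s quit; sleep 5"]
```

The postStart hook runs asynchronously with respect to the container's entrypoint, so it should not be relied on to finish before the main process starts.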

A diagram to illustrate:

Init containers

What Init container can do

A Pod can contain multiple containers in which applications run.

A Pod can also have one or more Init containers, which run before the application containers start.

1. An Init container can contain utilities or custom setup code that are not present in the application image.

2. An Init container can run these tools safely, so they do not have to be added to the application image, where they would reduce its security. Because an Init container exits after it completes, nobody can later connect to it and use or scan these tools.

3. The builder and the deployer of the application image can work independently, with no need to jointly build a single combined image.

4. An Init container can run with a different view of the file system than the application containers in the same Pod. Therefore, an Init container can be given access to Secrets that the application containers cannot access.

5. Because Init containers must complete before the application containers start, they provide a mechanism to block or delay the start of the application containers until a set of preconditions is met. Once the preconditions are met, all application containers in the Pod start in parallel.

Init container features

The Init container is very similar to an ordinary container, except for the following two points:

They always run to completion.

Each Init container must complete successfully before the next one starts. Init containers also do not support the lifecycle, livenessProbe, readinessProbe, or startupProbe fields, because they must run to completion before the Pod can be ready and before the main containers run.

If an Init container of a Pod fails, the kubelet restarts that Init container repeatedly until it succeeds. However, if the Pod's restartPolicy is Never, the Init container is not restarted and Kubernetes treats the whole Pod as failed.

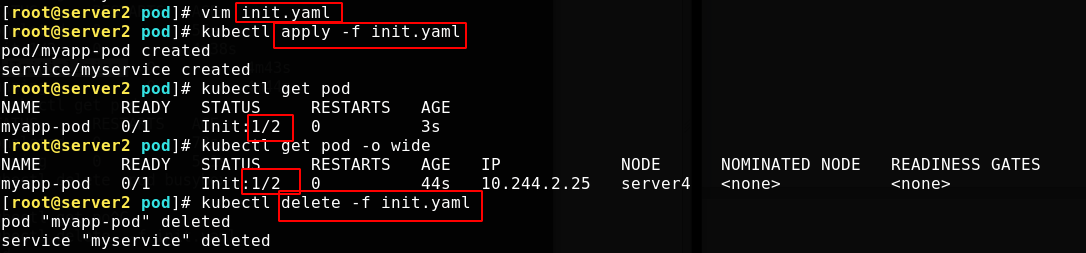

Init container example

Write a YAML file containing the Init containers. Refer to the previous article on resource manifests for the meaning of each parameter.

Comment out one of the following Services to see whether the Pod can still start successfully.

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container   # The main container runs only after all Init containers have completed
    image: busyboxplus
    # sh -c makes the shell execute the string as one complete command
    command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
  - name: init-myservice    # First Init container
    image: busyboxplus
    # Query DNS until the myservice record resolves, which shows that the Service it depends on exists
    command: ['sh', '-c', "until nslookup myservice.default.svc.cluster.local; do echo waiting for myservice; sleep 2; done"]
  - name: init-mydb         # Second Init container
    image: busyboxplus
    # Keep querying DNS until mydb resolves. The name has the fixed form <service>.<namespace>.svc.cluster.local
    command: ['sh', '-c', "until nslookup mydb.default.svc.cluster.local; do echo waiting for mydb; sleep 2; done"]
---
# Documents separated by --- do not affect each other.
# The two Services below are the ones the Init containers wait for.
# Until both exist, the Init containers cannot complete.
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 9376
---
# The Service that the second Init container waits for is commented out,
# so the Pod should not finish starting.
#apiVersion: v1
#kind: Service
#metadata:
#  name: mydb
#spec:
#  ports:
#  - protocol: TCP
#    port: 80
#    targetPort: 9377

Apply the file and check the status. Only one Init container completes, which matches expectations. Finally, delete the Pod.

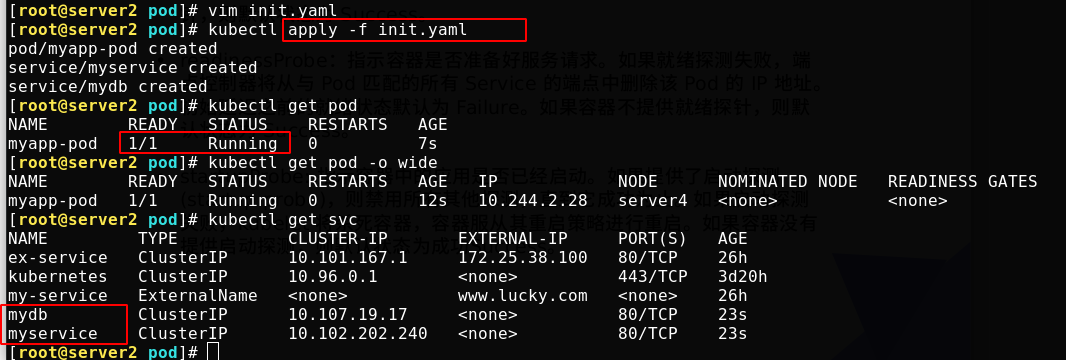

Uncomment the mydb Service and apply the file again. You can see that both Init containers now complete successfully.

Probes

A probe is a periodic diagnostic of a container performed by the kubelet. To run the diagnostic, the kubelet calls one of three types of handler:

ExecAction: executes the specified command within the container. If the return code is 0 when the command exits, the diagnosis is considered successful.

TCPSocketAction: performs a TCP check against the container's IP address on the specified port. If the port is open, the diagnostic is considered successful.

HTTPGetAction: performs an HTTP GET request against the container's IP address on the specified port and path. If the status code of the response is greater than or equal to 200 and less than 400, the diagnostic is considered successful.
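Of the three handler types above, the exec variant can be sketched as follows. This is a minimal illustration: the Pod name, the `busybox` image, and the `/tmp/healthy` marker file are assumptions. The probe succeeds as long as `cat` exits with code 0, that is, as long as the file exists.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: exec-probe-demo             # hypothetical name
spec:
  containers:
  - name: main
    image: busybox                  # assumed image
    # Create the marker file, then keep the container running
    command: ["sh", "-c", "touch /tmp/healthy; sleep 3600"]
    livenessProbe:
      exec:                         # ExecAction: run a command inside the container
        command: ["cat", "/tmp/healthy"]
      initialDelaySeconds: 5
      periodSeconds: 5
```

Deleting `/tmp/healthy` inside the container would make the command exit non-zero, so the probe would start failing.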

Each probe has one of the following three results:

Success: the container passed the diagnostic.

Failure: the container failed the diagnostic.

Unknown: the diagnostic itself failed, so no action is taken.

The kubelet can optionally perform and react to three kinds of probes on running containers:

1. livenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is then subject to its restart policy. If the container does not provide a liveness probe, the default state is Success.

2. readinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. The readiness state before the initial delay defaults to Failure. If the container does not provide a readiness probe, the default state is Success.

3. startupProbe: indicates whether the application in the container has started. If a startup probe is provided, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, and the container is restarted according to its restart policy. If the container does not provide a startup probe, the default state is Success.
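A startup probe is usually paired with a liveness probe for applications that start slowly. The sketch below reuses the `myapp:v1` image from this article. The Pod name, the `/test.html` path on the startup probe, and the threshold values are assumptions for illustration. With these values the application gets up to 30 × 10 = 300 seconds to start before the kubelet kills the container.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: startup-probe-demo          # hypothetical name
spec:
  containers:
  - name: myapp
    image: myapp:v1
    startupProbe:
      httpGet:
        path: /test.html            # assumed path
        port: 80
      failureThreshold: 30          # allow up to 30 failed attempts
      periodSeconds: 10             # one attempt every 10 seconds
    livenessProbe:                  # only starts running once the startup probe has succeeded
      tcpSocket:
        port: 80
      periodSeconds: 3
```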

Restart policy:

There is a restartPolicy field in PodSpec. The possible values are Always, OnFailure and Never. The default is Always.
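As a small sketch of where the field sits, the manifest below sets restartPolicy at the Pod level, so it applies to every container in the Pod. The Pod name, the `busybox` image, and the deliberately failing command are assumptions for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: restart-demo                # hypothetical name
spec:
  restartPolicy: OnFailure          # Always (default), OnFailure, or Never
  containers:
  - name: main
    image: busybox                  # assumed image
    # Exits with a non-zero code, so OnFailure causes the kubelet to restart it
    command: ["sh", "-c", "echo failing; exit 1"]
```

With Never in place of OnFailure, the container would not be restarted after it exits.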

Pod lifetime:

In general, Pods do not disappear until something destroys them, which may be a person or a controller.

It is recommended to create Pods through an appropriate controller rather than directly, because a bare Pod cannot recover automatically from a machine failure, whereas a controller can replace it.

Three kinds of controllers are available:

Use a Job for Pods that are expected to terminate, such as batch computations. A Job is appropriate only for Pods with a restartPolicy of OnFailure or Never.

Use a ReplicationController, ReplicaSet, or Deployment for Pods that are not expected to terminate, such as web servers. A ReplicationController is appropriate only for Pods with a restartPolicy of Always.

Use a DaemonSet for Pods that provide a machine-specific system service and need to run once per machine.
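The first two cases above might look like the following sketch: a Deployment for a long-running web server and a Job for a task that terminates. The resource names, the replica count, and the `busybox` task are assumptions for illustration; `myapp:v1` is the image used elsewhere in this article.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment            # hypothetical name
spec:
  replicas: 2                       # assumed replica count
  selector:
    matchLabels:
      app: myapp
  template:                         # Pod template; restartPolicy defaults to Always
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v1
---
apiVersion: batch/v1
kind: Job
metadata:
  name: batch-demo                  # hypothetical name
spec:
  template:
    spec:
      restartPolicy: OnFailure      # a Job accepts only OnFailure or Never
      containers:
      - name: task
        image: busybox              # assumed image
        command: ["sh", "-c", "echo computing; sleep 5"]
```

If a node running one of the Deployment's Pods fails, the controller creates a replacement Pod on another node, which a bare Pod cannot do for itself.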

livenessProbe (liveness probe)

For details, see the official documentation on probes.

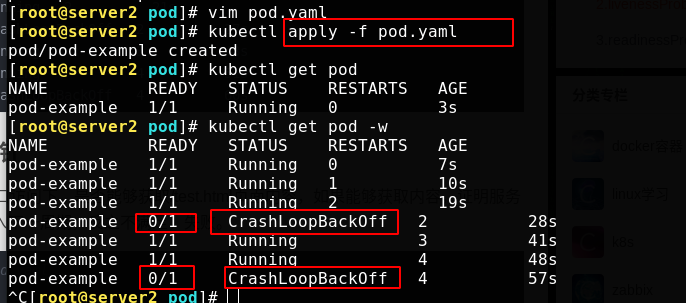

[root@server2 pod]# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: pod-example
  labels:
    app: myapp
spec:
  containers:
  - name: myapp
    image: myapp:v1
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 1   # Seconds to wait after the container starts before the first probe
      periodSeconds: 3         # Interval between probes, in seconds
      timeoutSeconds: 1        # Seconds after which a probe times out

After the file is applied, the kubelet checks whether port 80 is open. If the check fails, the kubelet keeps probing, and the container is restarted according to its restart policy.

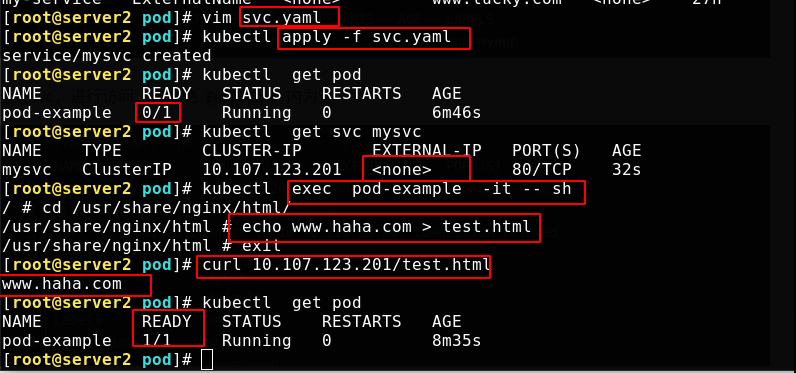

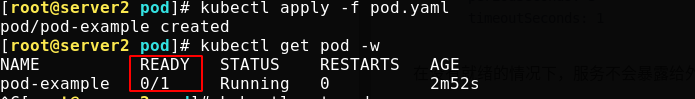

readinessProbe (readiness probe)

In this manifest, the readiness probe checks whether /test.html can be fetched on port 80. If the HTML page is returned, the service is working and the container is marked ready. Otherwise the probe fails and the container stays not ready.

[root@server2 pod]# vim pod.yaml

The contents of the file are as follows:

apiVersion: v1
kind: Pod
metadata:
  name: pod-example
  labels:
    app: myapp
spec:
  containers:
  - name: myapp
    image: myapp:v1
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 1
    readinessProbe:
      httpGet:
        path: /test.html
        port: 80
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 1

While the Pod is not ready, it is not exposed through the Service, so it cannot be reached from outside. It can only be accessed from inside.

[root@server2 pod]# vim svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysvc
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: myapp

At first the Service's list of backends (endpoints) is empty. After you enter the Pod and publish the test page, the readiness probe succeeds, the Pod enters the ready state, and it can be accessed externally.