Tags

PostgreSQL, citus, pgbouncer, network optimization, worker node

Background

Network requirements between citus nodes:

1. The CN (coordinator) node accesses all worker nodes. For OLTP workloads this access is frequent.

2. During data redistribution, worker nodes access each other. This happens less often and is typical of OLAP workloads, but when it happens the data exchange throughput is large.

The Citus CN node connects to worker nodes in one of two modes.

One is transaction-level connection holding: a connection is established when each SQL statement starts. Outside a transaction it is released as soon as the statement finishes; inside a transaction it is kept until the transaction ends.

The other is session-level connection holding: the connection is established at the start of the session and released when the session ends.
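To make the trade-off between the two modes concrete, here is a back-of-envelope Python sketch (hypothetical, not taken from Citus) that counts how much time a worker spends on connection setup under each mode. The per-connection cost `connect_ms` and the workload sizes are assumed inputs; substitute your own measurements.

```python
# Back-of-envelope model of CN -> worker connection-setup cost.
# Assumption: connect_ms and the workload sizes are hypothetical inputs,
# not values measured from Citus.


def setup_cost_ms(statements: int, statements_per_session: int,
                  connect_ms: float, mode: str) -> float:
    """Total time spent opening connections to one worker.

    mode="statement": a new connection for every statement outside a
    transaction (the short-lived mode).
    mode="session": one connection per client session, reused by all of
    that session's statements (the session-level mode).
    """
    if statements < 0 or statements_per_session <= 0 or connect_ms < 0:
        raise ValueError("invalid input")
    if mode == "statement":
        connections = statements
    elif mode == "session":
        # Ceiling division: number of sessions needed to run all statements.
        connections = -(-statements // statements_per_session)
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return connections * connect_ms


if __name__ == "__main__":
    for mode in ("statement", "session"):
        print(mode, setup_cost_ms(10_000, 1_000, 10.0, mode))
```

With 10,000 statements, 1,000 statements per session and an assumed 10 ms per connection, the statement mode spends 100,000 ms on connection setup and the session mode 100 ms. This is why per-statement connections are tolerable for low-concurrency OLAP but not for high-QPS OLTP.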

1. OLAP-style SQL uses the first mode, short-lived connections. OLAP concurrency is usually low, so the extra cost of establishing connections is not noticeable.

To track connections, you can enable the following parameters on the worker nodes:

    postgres=# show log_connections ;
     log_connections
    -----------------
     on
    (1 row)

    postgres=# show log_disconnections ;
     log_disconnections
    --------------------
     on
    (1 row)
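With these parameters on, every connection and disconnection appears in the worker's server log. The following is a minimal Python sketch for summarizing such a log. It assumes the English message texts shown in the comments and simply ignores whatever `log_line_prefix` precedes them; `summarize` is a hypothetical helper. A burst of sub-second sessions coming from the CN is the signature of the short-lived mode described above.

```python
import re
from collections import Counter

# Assumed message formats (English server messages; log_line_prefix ignored):
#   LOG:  connection authorized: user=digoal database=newdb
#   LOG:  disconnection: session time: 0:00:00.012 user=digoal database=newdb host=...
AUTH_RE = re.compile(r"connection authorized: user=(\S+) database=(\S+)")
DISC_RE = re.compile(
    r"disconnection: session time: (\d+):(\d{2}):(\d{2})\.(\d{3}) "
    r"user=(\S+) database=(\S+)"
)


def summarize(lines, short_ms=1000):
    """Return (connections per (user, db), number of sessions shorter than short_ms)."""
    connects = Counter()
    short_sessions = 0
    for line in lines:
        m = AUTH_RE.search(line)
        if m:
            connects[(m.group(1), m.group(2))] += 1
            continue
        m = DISC_RE.search(line)
        if m:
            h, mi, s, ms = (int(x) for x in m.group(1, 2, 3, 4))
            duration_ms = ((h * 60 + mi) * 60 + s) * 1000 + ms
            if duration_ms < short_ms:
                short_sessions += 1
    return connects, short_sessions


if __name__ == "__main__":
    sample = [  # made-up lines in the assumed format
        "LOG:  connection authorized: user=postgres database=postgres",
        "LOG:  disconnection: session time: 0:00:00.015 user=postgres "
        "database=postgres host=[local]",
    ]
    print(summarize(sample))
```

To use it on a real worker, pass the lines of that worker's log file (any iterable of strings works).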

Example:

Both of the following queries use the short-lived connection mode (Custom Scan (Citus Task-Tracker) and Custom Scan (Citus Real-Time)):

    postgres=# set citus.task_executor_type =task;
    ERROR:  invalid value for parameter "citus.task_executor_type": "task"
    HINT:  Available values: real-time, task-tracker.
    postgres=# set citus.task_executor_type ='task-tracker';
    SET
    postgres=# explain select count(*) from pgbench_accounts ;
                                                         QUERY PLAN
    ---------------------------------------------------------------------------------------------------------------------
     Aggregate  (cost=0.00..0.00 rows=0 width=0)
       ->  Custom Scan (Citus Task-Tracker)  (cost=0.00..0.00 rows=0 width=0)
             Task Count: 128
             Tasks Shown: One of 128
             ->  Task
                   Node: host=172.24.211.224 port=1921 dbname=postgres
                   ->  Aggregate  (cost=231.85..231.86 rows=1 width=8)
                         ->  Seq Scan on pgbench_accounts_106812 pgbench_accounts  (cost=0.00..212.48 rows=7748 width=0)
    (8 rows)

    postgres=# set citus.task_executor_type ='real-time';
    postgres=# explain select count(*) from pgbench_accounts ;
                                                         QUERY PLAN
    ---------------------------------------------------------------------------------------------------------------------
     Aggregate  (cost=0.00..0.00 rows=0 width=0)
       ->  Custom Scan (Citus Real-Time)  (cost=0.00..0.00 rows=0 width=0)
             Task Count: 128
             Tasks Shown: One of 128
             ->  Task
                   Node: host=172.24.211.224 port=1921 dbname=postgres
                   ->  Aggregate  (cost=231.85..231.86 rows=1 width=8)
                         ->  Seq Scan on pgbench_accounts_106812 pgbench_accounts  (cost=0.00..212.48 rows=7748 width=0)
    (8 rows)

2. OLTP queries (usually high concurrency, with a connection pool in front of the CN that holds sessions) use the session-level connection mode (Custom Scan (Citus Router)).

The following query uses the long-lived connection mode: the connection is not released immediately but is kept for later reuse, which reduces connection overhead under high concurrency.

    postgres=# explain select * from pgbench_accounts where aid=5;
                                                                    QUERY PLAN
    ------------------------------------------------------------------------------------------------------------------------------------------
     Custom Scan (Citus Router)  (cost=0.00..0.00 rows=0 width=0)
       Task Count: 1
       Tasks Shown: All
       ->  Task
             Node: host=172.24.211.224 port=1921 dbname=postgres
             ->  Index Scan using pgbench_accounts_pkey_106836 on pgbench_accounts_106836 pgbench_accounts  (cost=0.28..2.50 rows=1 width=97)
                   Index Cond: (aid = 5)
    (7 rows)

Judging from these two scenarios, the Citus design is very good: it serves both TP and AP workloads.

However, as mentioned earlier, connections are held only at the transaction or session level. If the query volume is large, or the application uses short connections, the overhead of establishing connections becomes very prominent:

    newdb=> \c postgres postgres
    You are now connected to database "postgres" as user "postgres".
    postgres=# select * from pgbench_accounts where aid=1;
     aid | bid | abalance | filler
    -----+-----+----------+--------------------------------------------------------------------------------------
       1 |   1 |     7214 |
    (1 row)

    Time: 11.264 ms  -- includes the overhead of establishing new connections
    postgres=# select * from pgbench_accounts where aid=1;
     aid | bid | abalance | filler
    -----+-----+----------+--------------------------------------------------------------------------------------
       1 |   1 |     7214 |
    (1 row)

    Time: 0.905 ms   -- connection already established

Using pgbouncer to eliminate connection-establishment overhead

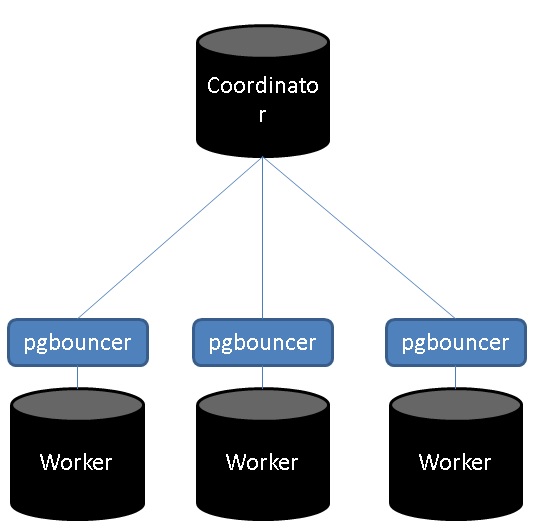

Deploy pgbouncer on every worker node. All connections to a worker then go through the pgbouncer connection pool, which reduces the overhead of frequently opening new connections on the worker.

Deployment method

1. On all worker nodes

Install pgbouncer:

yum install -y pgbouncer

Configure pgbouncer:

    vi /etc/pgbouncer/pgb.ini

    [databases]
    newdb = host=/tmp dbname=newdb port=1921 user=digoal pool_size=128 reserve_pool=10

    [pgbouncer]
    logfile = /var/log/pgbouncer/pgbouncer.log
    pidfile = /var/run/pgbouncer/pgbouncer.pid
    listen_addr = 0.0.0.0
    listen_port = 8001
    auth_type = any
    auth_file = /etc/pgbouncer/userlist.txt
    pool_mode = session
    server_reset_query = DISCARD ALL
    max_client_conn = 5000
    ; default_pool_size should not exceed 4 times the number of CPU cores
    default_pool_size = 128
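A quick sanity check for these pool settings, written as a hypothetical Python helper. It assumes that one database/user pool can open at most `pool_size + reserve_pool` server connections to the worker, and applies the 4 x CPU-cores guideline from the config comment to `pool_size`.

```python
import os

# Guideline from the config comment: default_pool_size should not
# exceed 4 x the number of CPU cores on the worker.
GUIDELINE_FACTOR = 4


def check_pool(pool_size, reserve_pool, cpu_count=None):
    """Return (max server connections for this pool, pool_size within guideline).

    Assumption: one db/user pool can open at most pool_size + reserve_pool
    server connections to the worker.
    """
    cpus = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    max_server_conns = pool_size + reserve_pool
    return max_server_conns, pool_size <= GUIDELINE_FACTOR * cpus


if __name__ == "__main__":
    # Values from the newdb entry above: pool_size=128 reserve_pool=10.
    print(check_pool(128, 10))
```

For example, `check_pool(128, 10, cpu_count=32)` returns `(138, True)`, while a 16-core worker would fail the guideline. Either way, the worker's max_connections must leave room for those 138 connections plus any clients that still connect directly on port 1921.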

Start pgbouncer:

pgbouncer -d -u pgbouncer /etc/pgbouncer/pgb.ini

A Citus cluster can contain both workers connected directly and workers connected through pgbouncer.

Because the Citus cluster metadata is kept per database, different databases can be configured differently.

In the following example, we create a new database whose workers are connected through pgbouncer.

2. On all nodes (CN and workers)

Create the role, the database, and the citus extension:

    su - postgres
    psql -c "create role digoal login;"
    psql -c "create database newdb;"
    psql -c "grant all on database newdb to digoal;"
    psql -U postgres newdb -c "create extension citus;"

On the CN node

Add the workers to the cluster configuration, using the pgbouncer port:

    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.224', 8001);\""
    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.230', 8001);\""
    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.231', 8001);\""
    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.225', 8001);\""
    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.227', 8001);\""
    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.232', 8001);\""
    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.226', 8001);\""
    su - postgres -c "psql -U postgres newdb -c \"SELECT * from master_add_node('xxx.xxx.xxx.229', 8001);\""

For an MX configuration, likewise add the worker nodes and include them in metadata synchronization:

psql newdb postgres

    select * from master_add_node('xxx.xxx.xxx.224',8001);
    select * from master_add_node('xxx.xxx.xxx.230',8001);

Enable metadata synchronization:

    select start_metadata_sync_to_node('xxx.xxx.xxx.224',8001);
    select start_metadata_sync_to_node('xxx.xxx.xxx.230',8001);
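Rather than typing one `su - postgres -c` command per worker, the statements can be generated. This hypothetical Python helper emits the same `master_add_node` calls (and, with `sync_metadata=True`, the `start_metadata_sync_to_node` calls used for MX) for a list of hosts. The masked addresses are kept exactly as in the steps above.

```python
def add_node_sql(hosts, port, sync_metadata=False):
    """Build master_add_node (and optionally start_metadata_sync_to_node) calls."""
    def lit(s):
        # Quote a string as an SQL literal by doubling embedded single quotes.
        return "'" + s.replace("'", "''") + "'"

    stmts = [f"SELECT * FROM master_add_node({lit(h)}, {int(port)});" for h in hosts]
    if sync_metadata:
        stmts += [f"SELECT start_metadata_sync_to_node({lit(h)}, {int(port)});"
                  for h in hosts]
    return "\n".join(stmts)


if __name__ == "__main__":
    # Masked worker addresses from the steps above; 8001 is the pgbouncer port.
    workers = ["xxx.xxx.xxx.224", "xxx.xxx.xxx.230", "xxx.xxx.xxx.231",
               "xxx.xxx.xxx.225", "xxx.xxx.xxx.227", "xxx.xxx.xxx.232",
               "xxx.xxx.xxx.226", "xxx.xxx.xxx.229"]
    print(add_node_sql(workers, 8001))
```

Redirect the output to a file (for example `add_nodes.sql`, a hypothetical name) and run it once on the CN with `psql -U postgres newdb -f add_nodes.sql`.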

Testing

1. pgbench long-connection test (TPC-B tables, select-only workload)

pgbench -i -s -U digoal newdb

    psql -U digoal newdb

    select create_distributed_table('pgbench_accounts','aid');
    select create_distributed_table('pgbench_branches','bid');
    select create_distributed_table('pgbench_tellers','tid');
    select create_distributed_table('pgbench_history','aid');

pgbench -M prepared -v -r -P 1 -c 64 -j 64 -T 120 -U digoal newdb -S

Performance is similar to that without pgbouncer, because the test runs simple SQL over long-lived connections (Citus itself holds worker connections at the session level, so there is no short-connection problem).

The hidden cost of the first query in Citus

In a new session, the first query always takes longer (including connection time, if the connection has not been established yet). Even when the connection already exists, the first query still takes some extra time; the exact cause can be found by tracing the code.

In the following example, the workers are reached through pgbouncer, so the first query does not include the time to establish a connection.

    newdb=> \q
    postgres@digoal-citus-gpdb-test001-> psql newdb digoal
    psql (10.5)
    Type "help" for help.

    \timing
    newdb=> select * from pgbench_accounts where aid=5;
     aid | bid | abalance | filler
    -----+-----+----------+--------------------------------------------------------------------------------------
       5 |   1 |        0 |
    (1 row)

    Time: 6.016 ms
    -- Excludes new-connection time (pgbouncer already holds the connections), but is still a few ms slower.
    -- Compared with not using pgbouncer, latency is about 5 ms lower.
    newdb=> select * from pgbench_accounts where aid=5;
     aid | bid | abalance | filler
    -----+-----+----------+--------------------------------------------------------------------------------------
       5 |   1 |        0 |
    (1 row)

    Time: 0.989 ms
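The "about 5 ms" figure can be checked against the timings above with simple arithmetic, shown here in Python. Note that the two pairs of measurements come from different sessions and databases (`postgres` connected directly, `newdb` through pgbouncer), so this is a rough comparison of single samples, not a benchmark.

```python
# Timings (ms) copied from the psql sessions above.
NO_BOUNCER_FIRST, NO_BOUNCER_NEXT = 11.264, 0.905  # database postgres, direct
BOUNCER_FIRST, BOUNCER_NEXT = 6.016, 0.989         # database newdb, via pgbouncer


def first_query_overhead(first_ms, next_ms):
    """Extra latency paid by the first query of a session."""
    return round(first_ms - next_ms, 3)


if __name__ == "__main__":
    print("overhead without pgbouncer:", first_query_overhead(NO_BOUNCER_FIRST, NO_BOUNCER_NEXT))
    print("overhead with pgbouncer:   ", first_query_overhead(BOUNCER_FIRST, BOUNCER_NEXT))
    print("first-query saving:        ", round(NO_BOUNCER_FIRST - BOUNCER_FIRST, 3))
```

This gives a first-query overhead of about 10.359 ms without pgbouncer and 5.027 ms with it, so the first query is about 5.248 ms faster through pgbouncer. The remaining ~5 ms is the extra first-query cost discussed next.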

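So where do the extra few milliseconds come from? Community contributors Deng Biao and Wang Jian found the following cause: in many places, Citus checks whether the loaded Citus library version is compatible with the version in the citus.control file (for example, when the relcache entry of a distributed table is loaded on first access). If they are incompatible, an error is reported.

See the code below for details. AvailableExtensionVersion obtains the version by calling pg_available_extensions, which reads the extension control files on disk; according to its header comment, the result is saved so that later calls do not read citus.control again. That one-time lookup is part of what the first query pays for.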

    /*
     * CheckAvailableVersion compares CITUS_EXTENSIONVERSION and the currently
     * available version from the citus.control file. If they are not compatible,
     * this function logs an error with the specified elevel and returns false,
     * otherwise it returns true.
     */
    bool
    CheckAvailableVersion(int elevel)
    {
        char *availableVersion = NULL;

        if (!EnableVersionChecks)
        {
            return true;
        }

        availableVersion = AvailableExtensionVersion();

        if (!MajorVersionsCompatible(availableVersion, CITUS_EXTENSIONVERSION))
        {
            ereport(elevel, (errmsg("loaded Citus library version differs from latest "
                                    "available extension version"),
                             errdetail("Loaded library requires %s, but the latest control "
                                       "file specifies %s.", CITUS_MAJORVERSION,
                                       availableVersion),
                             errhint("Restart the database to load the latest Citus "
                                     "library.")));
            return false;
        }

        return true;
    }


    /*
     * AvailableExtensionVersion returns the Citus version from citus.control file. It also
     * saves the result, thus consecutive calls to CitusExtensionAvailableVersion will
     * not read the citus.control file again.
     */
    static char *
    AvailableExtensionVersion(void)
    {
        ReturnSetInfo *extensionsResultSet = NULL;
        TupleTableSlot *tupleTableSlot = NULL;
        FunctionCallInfoData *fcinfo = NULL;
        FmgrInfo *flinfo = NULL;
        int argumentCount = 0;
        EState *estate = NULL;

        bool hasTuple = false;
        bool goForward = true;
        bool doCopy = false;
        char *availableExtensionVersion;

        InitializeCaches();

        estate = CreateExecutorState();
        extensionsResultSet = makeNode(ReturnSetInfo);
        extensionsResultSet->econtext = GetPerTupleExprContext(estate);
        extensionsResultSet->allowedModes = SFRM_Materialize;

        fcinfo = palloc0(sizeof(FunctionCallInfoData));
        flinfo = palloc0(sizeof(FmgrInfo));

        fmgr_info(F_PG_AVAILABLE_EXTENSIONS, flinfo);
        InitFunctionCallInfoData(*fcinfo, flinfo, argumentCount, InvalidOid, NULL,
                                 (Node *) extensionsResultSet);

        /* pg_available_extensions returns result set containing all available extensions */
        (*pg_available_extensions)(fcinfo);

        tupleTableSlot = MakeSingleTupleTableSlot(extensionsResultSet->setDesc);
        hasTuple = tuplestore_gettupleslot(extensionsResultSet->setResult, goForward, doCopy,
                                           tupleTableSlot);
        while (hasTuple)
        {
            Datum extensionNameDatum = 0;
            char *extensionName = NULL;
            bool isNull = false;

            extensionNameDatum = slot_getattr(tupleTableSlot, 1, &isNull);
            extensionName = NameStr(*DatumGetName(extensionNameDatum));
            if (strcmp(extensionName, "citus") == 0)
            {
                MemoryContext oldMemoryContext = NULL;
                Datum availableVersion = slot_getattr(tupleTableSlot, 2, &isNull);

                /* we will cache the result of citus version to prevent catalog access */
                oldMemoryContext = MemoryContextSwitchTo(CacheMemoryContext);

                availableExtensionVersion = text_to_cstring(DatumGetTextPP(availableVersion));

                MemoryContextSwitchTo(oldMemoryContext);

                ExecClearTuple(tupleTableSlot);
                ExecDropSingleTupleTableSlot(tupleTableSlot);

                return availableExtensionVersion;
            }

            ExecClearTuple(tupleTableSlot);
            hasTuple = tuplestore_gettupleslot(extensionsResultSet->setResult, goForward,
                                               doCopy, tupleTableSlot);
        }

        ExecDropSingleTupleTableSlot(tupleTableSlot);

        ereport(ERROR, (errcode(ERRCODE_OBJECT_NOT_IN_PREREQUISITE_STATE),
                        errmsg("citus extension is not found")));

        return NULL;
    }
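The two ideas in this code, a major-version comparison and a cached control-file lookup, can be illustrated with a small Python re-creation. This is not the Citus implementation: the rule that versions such as `8.0-1` are compared only up to the `-` is an assumption about `MajorVersionsCompatible` (its source is not shown above), and the control-file text comes from a hypothetical reader callable. The sketch shows why the lookup cost is paid on the first access in a backend and not on later ones.

```python
import re

# Simplified, hypothetical re-creation of the logic in the Citus code above.


def major_versions_compatible(left: str, right: str) -> bool:
    # Assumption: compare only the part before "-" ("8.0-1" -> "8.0").
    return left.split("-", 1)[0] == right.split("-", 1)[0]


class BackendVersionCache:
    """Per-backend cache of the available (control-file) version."""

    def __init__(self, read_control_file):
        self._read = read_control_file  # callable returning control-file text
        self._version = None

    def available_version(self) -> str:
        if self._version is None:
            # Expensive path (reading the control file): first call only.
            m = re.search(r"default_version\s*=\s*'([^']*)'", self._read())
            if m is None:
                raise RuntimeError("citus extension is not found")
            self._version = m.group(1)
        return self._version

    def check_available_version(self, loaded_version: str) -> bool:
        return major_versions_compatible(self.available_version(), loaded_version)


if __name__ == "__main__":
    reads = []

    def fake_read():
        reads.append(1)  # count control-file reads
        return "default_version = '8.0-1'\n"

    cache = BackendVersionCache(fake_read)
    print(cache.check_available_version("8.0-1"))  # compatible
    print(cache.check_available_version("7.5-7"))  # different major version
    print("control file reads:", len(reads))
```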

Benefits of connecting to workers through a pgbouncer connection pool

1. Applications that use short connections benefit the most: in the test above, first-query latency dropped by about 5 ms.

2. For workloads with many complex queries (queries that require data motion between nodes), the number of connections between nodes can be reduced.

Also keep in mind that pgbouncer itself can become the bottleneck, in terms of network bandwidth and QPS: pgbouncer runs on a single CPU core, and one pgbouncer process peaks at roughly 50,000 QPS.
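To see when a single pgbouncer process becomes the limit, here is a rough capacity estimate in Python. It uses the ~50,000 QPS single-process figure quoted above; the `utilization` headroom and the average bytes per query are assumptions you would replace with your own numbers.

```python
import math

PEAK_QPS_PER_PROCESS = 50_000  # rough single-process peak quoted above


def pgbouncer_processes(target_qps, utilization=0.7):
    """Single-process pgbouncers' worth of capacity needed for target_qps.

    utilization: fraction of the peak you are willing to run at (assumption).
    """
    if target_qps < 0 or not 0 < utilization <= 1:
        raise ValueError("invalid input")
    return math.ceil(target_qps / (PEAK_QPS_PER_PROCESS * utilization))


def bandwidth_mbit_s(qps, avg_bytes_per_query):
    """Network bandwidth in Mbit/s for a given QPS and average bytes per query."""
    return qps * avg_bytes_per_query * 8 / 1_000_000


if __name__ == "__main__":
    print(pgbouncer_processes(120_000))
    print(bandwidth_mbit_s(50_000, 2_000))
```

For example, 120,000 QPS at 70% utilization needs 4 processes' worth of capacity, and 50,000 QPS with 2,000-byte responses is about 800 Mbit/s of traffic. A result above 1 means a single pgbouncer process on that worker will saturate before the target is reached.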

References

man 1 pgbouncer

man 5 pgbouncer