Practical application of canal

Understand the principle of canal

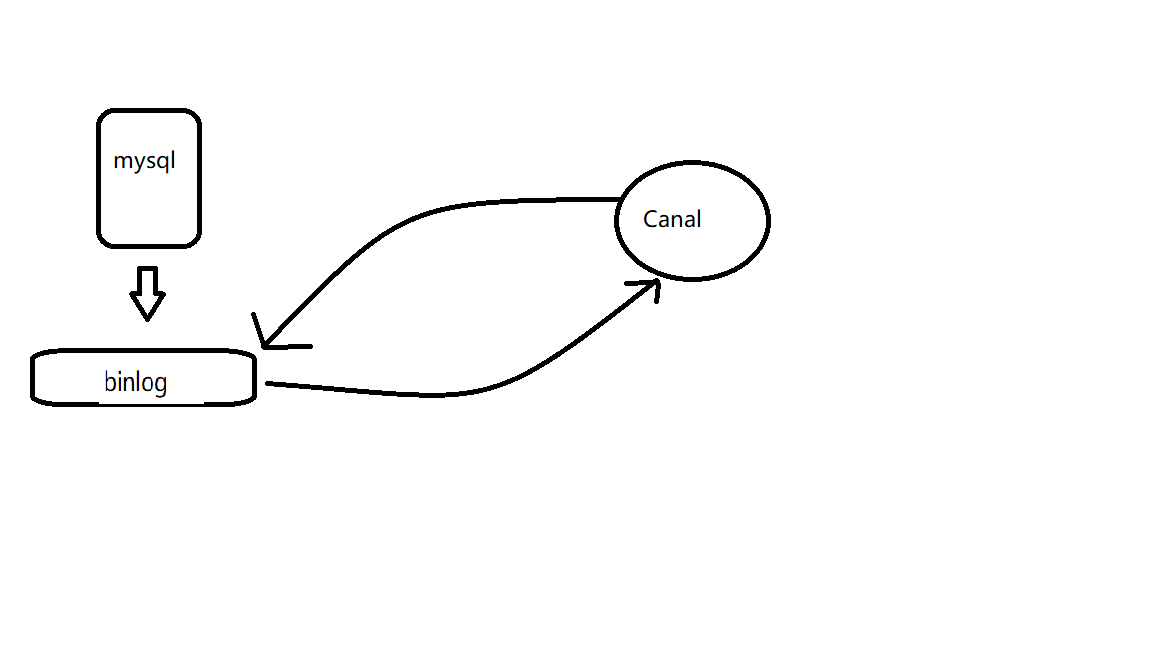

Canal is a middleware based on database incremental log parsing developed in java, which provides incremental data subscription and consumption. At present, canal mainly supports MySQL binlog parsing. After parsing, canal client is used to process the obtained relevant data. (database synchronization requires Alibaba's otter middleware, which is based on canal)

How canal works: disguise yourself as a slave and copy data from the master. Master authorization is required to read binlog. Because binlog is encrypted, the authorized user name and password can be read. After authorization, the master does not know whether the slave or canal reads his binlog. All his transmission protocols comply with the slave standard, so the master always thinks it is read from the slave.

1.mysql configuration

1.1 open the binlog module of mysql

Switch to the mysql installation path, find my.cnf(Linux)/my.ini (windows), and add the following:

[mysqld] log-bin=mysql-bin #Add this line, ok binlog-format=ROW #Select row mode server_id=1 #The MySQL replacement configuration needs to be defined and cannot be the same as the slaveId of canal

After configuration, you need to restart the database

1.2 create a canal user to manage the access rights of canal. We can control the content that can be obtained by canal by controlling the access rights of canal users

CREATE USER canal IDENTIFIED BY 'canal'; GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON Database name.Table name TO 'canal'@'%'; -- GRANT ALL PRIVILEGES ON Database name.Table name TO 'canal'@'%' ; FLUSH PRIVILEGES; such as(Give the read, copy and copy permissions of the whole database and table): GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%' IDENTIFIED BY 'canal' ;

Possible problems

report errors: ERROR 1819 (HY000): Your password does not satisfy the current policy requirements

Because the password setting is too simple, MySQL has a specification for password setting, which is specifically related to validate_ password_ The value of policy

View the complete initial password rules of MySQL, and execute the following commands after logging in:

mysql> SHOW VARIABLES LIKE 'validate_password%';

The length of the password is determined by validate_password_length, but can be modified by the following command

set global validate_password_length=4;

validate_password_policy determines the password authentication policy. The default level is medium, which can be modified to low through the following command

set global validate_password_policy=0;

2.canal configuration

Download address https://github.com/alibaba/canal/releases/

git download may be slow or can't get down. Here's my online disk link. If it expires, you can contact me

Link: https://pan.baidu.com/s/1zL_Mx_EqskjHALPvvq_GeQ

Extraction code: wu6s

2.1 unzip tar zxvf canal.deployer-1.0.0.tar.g

2.2. Document description: there are several folders under canal

bin conf to configure lib jar package logs journal Usually these four

2.3 configuration description

The two main configuration files are canal.properties and instance.properties. The latter is in the example folder in conf. normally, the configuration file canal.properties does not need to be changed. This will be mentioned later. Here is my configuration (instance. Properties),

## mysql serverId canal.instance.mysql.slaveId = 2 #Note that this id cannot be the same as the server in mysql configuration_ Same id # position info canal.instance.master.address = localhost:3306 #Own database connection address canal.instance.master.journal.name = canal.instance.master.position = canal.instance.master.timestamp = #canal.instance.standby.address = #canal.instance.standby.journal.name = #canal.instance.standby.position = #canal.instance.standby.timestamp = # username/password canal.instance.dbUsername = canal canal.instance.dbPassword = canal canal.instance.defaultDatabaseName = pifi #Database name canal.instance.connectionCharset = UTF-8 #code # table regex canal.instance.filter.regex = .*\\..* # table black regex canal.instance.filter.black.regex =

2.4 startup

./bin/startup.sh

This way, pay attention to this Linux

windows yes

./bin/startup.bat

2.5 viewing startup status

adopt logs/canal/canal.log and logs/example/example.log Log to judge canal Whether it started successfully.

logs/canal/canal.log

2021-04-08 09:43:55.450 [main] INFO c.a.o.c.d.monitor.remote.RemoteConfigLoaderFactory - ## load local canal configurations 2021-04-08 09:43:55.455 [main] INFO com.alibaba.otter.canal.deployer.CanalStater - ## start the canal server. 2021-04-08 09:43:55.668 [main] INFO com.alibaba.otter.canal.deployer.CanalController - ## start the canal server[10.0.75.1:11111] 2021-04-08 09:43:56.600 [main] INFO com.alibaba.otter.canal.deployer.CanalStater - ## the canal server is running now ...... 2021-04-08 10:36:26.771 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## set default uncaught exception handler 2021-04-08 10:36:26.799 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## load canal configurations

logs/example/example.log

2021-04-08 09:38:49.733 [main] INFO c.a.o.c.i.spring.support.PropertyPlaceholderConfigurer - Loading properties file from class path resource [example/instance.properties] 2021-04-08 09:38:49.885 [main] ERROR com.alibaba.druid.pool.DruidDataSource - testWhileIdle is true, validationQuery not set 2021-04-08 09:38:50.086 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example 2021-04-08 09:38:50.090 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table filter : ^.*\..*$ 2021-04-08 09:38:50.091 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table black filter : 2021-04-08 09:38:50.096 [main] INFO c.a.otter.canal.instance.core.AbstractCanalInstance - start successful....

Basically, you can write code tests directly or look at the log files above

package com.example.demo.canal;

import java.net.InetSocketAddress;

import java.util.List;

import com.alibaba.fastjson.JSONObject;

import com.alibaba.otter.canal.client.CanalConnector;

import com.alibaba.otter.canal.common.utils.AddressUtils;

import com.alibaba.otter.canal.protocol.Message;

import com.alibaba.otter.canal.protocol.CanalEntry.Column;

import com.alibaba.otter.canal.protocol.CanalEntry.Entry;

import com.alibaba.otter.canal.protocol.CanalEntry.EntryType;

import com.alibaba.otter.canal.protocol.CanalEntry.EventType;

import com.alibaba.otter.canal.protocol.CanalEntry.RowChange;

import com.alibaba.otter.canal.protocol.CanalEntry.RowData;

import com.alibaba.otter.canal.client.*;

/**

* @author zhang ho jian

* @date 2021/4/8

* @time 10:16

* @Description :Test Canal

*/

public class CanalTest {

public static void main(String[] args) {

//Change the ip and port to their own canal. The default is 11111

CanalConnector connector = CanalConnectors.newSingleConnector(new InetSocketAddress(AddressUtils.getHostIp(),

11111), "example", "", "");

int batchSize = 1000;

try {

connector.connect();

connector.subscribe(".*\\..*");

connector.rollback();

while (true) {

Message message = connector.getWithoutAck(batchSize); // Gets the specified amount of data

long batchId = message.getId();

int size = message.getEntries().size();

if (batchId == -1 || size == 0) {

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

} else {

printEntry(message.getEntries());

}

connector.ack(batchId); // Submit for confirmation

// connector.rollback(batchId); // Processing failed, rollback data

}

} finally {

connector.disconnect();

}

}

private static void printEntry( List<Entry> entrys) {

for (Entry entry : entrys) {

if (entry.getEntryType() == EntryType.TRANSACTIONBEGIN || entry.getEntryType() == EntryType.TRANSACTIONEND) {

continue;

}

RowChange rowChage = null;

try {

rowChage = RowChange.parseFrom(entry.getStoreValue());

} catch (Exception e) {

throw new RuntimeException("ERROR ## parser of eromanga-event has an error , data:" + entry.toString(),

e);

}

EventType eventType = rowChage.getEventType();

System.out.println(String.format("================> binlog[%s:%s] , name[%s,%s] , eventType : %s",

entry.getHeader().getLogfileName(), entry.getHeader().getLogfileOffset(),

entry.getHeader().getSchemaName(), entry.getHeader().getTableName(),

eventType));

for (RowData rowData : rowChage.getRowDatasList()) {

//If you need business processing, you can do the corresponding processing here. In my case, you need to synchronize the data of multiple databases to one database, which will cause the problem of primary key conflict, so you can process the primary key here. If you are a master and slave, you don't need to use it here

if (eventType == EventType.DELETE) {

System.out.println("Delete operation"+rowData.getBeforeColumnsList());

} else if (eventType == EventType.INSERT) {

System.out.println("Add operation"+rowData.getAfterColumnsList());

} else {

System.out.println("-------> before");

printColumn(rowData.getBeforeColumnsList());

System.out.println("to update"+rowData.getAfterColumnsList());

}

}

}

}

private static void printColumn( List<Column> columns) {

for (Column column : columns) {

System.out.println(column.getName() + " : " + column.getValue() + " update=" + column.getUpdated());

}

}

}

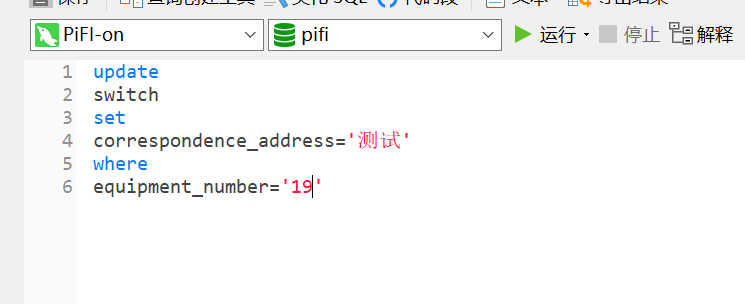

Here, let me operate the database

You can see the console output

-------> before preset : update=false besponsible_person : update=false breaking_current : update=false breaking_capacity : update=false switch_property : Incoming switch update=false switch_state : update=false operating_mechanism : update=false coal_mine_name : Smart grid simulation laboratory update=false equipment_manufacturer : update=false device_name : Switch 19 update=false device_model : update=false correspondence_address : Test 3 update=false equipment_number : 19 update=false preset_status : update=false rated_capacity : update=false rated_voltage : update=false rated_current : update=false protector_category : ZBT-11C(DSP)Protector update=false protector_number : 19 update=false Substation_no : TEST-BDS-001 update=false short_value : 2.000000000 update=false overcurrent_value : 1.000000000 update=false overload_setting_value : 3.000000000 update=false leakage_current1 : 2.000000000 update=false leakage_current2 : 44.000000000 update=false negative_sequence1 : 24.000000000 update=false negative_sequence2 : 3.000000000 update=false load_power : 2.000000000 update=false three_phase : 12.000000000 update=false two_phase : 12.000000000 update=false time_setting : 321.000000000 update=false negative_sequence_thime : 1.200000000 update=false protector_manufacturer : 2 update=false to update[index: 0 sqlType: 12 name: "preset" isKey: false updated: false isNull: true mysqlType: "varchar(32)" , index: 1 sqlType: 12 name: "besponsible_person" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 2 sqlType: 12 name: "breaking_current" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 3 sqlType: 12 name: "breaking_capacity" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 4 sqlType: 12 name: "switch_property" isKey: false updated: false isNull: false value: "\350\277\233\347\272\277\345\274\200\345\205\263" mysqlType: "varchar(100)" , index: 5 sqlType: 12 name: "switch_state" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 6 sqlType: 12 name: "operating_mechanism" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 7 sqlType: 12 name: "coal_mine_name" isKey: false updated: false isNull: false value: "\346\231\272\350\203\275\347\224\265\347\275\221\344\273\277\347\234\237\345\256\236\351\252\214\345\256\244" mysqlType: "varchar(100)" , index: 8 sqlType: 12 name: "equipment_manufacturer" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 9 sqlType: 12 name: "device_name" isKey: true updated: false isNull: false value: "\345\274\200\345\205\26319" mysqlType: "varchar(100)" , index: 10 sqlType: 12 name: "device_model" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 11 sqlType: 12 name: "correspondence_address" isKey: false updated: true isNull: false value: "\346\265\213\350\257\225" mysqlType: "varchar(100)" , index: 12 sqlType: 12 name: "equipment_number" isKey: true updated: false isNull: false value: "19" mysqlType: "varchar(100)" , index: 13 sqlType: 12 name: "preset_status" isKey: false updated: false isNull: true mysqlType: "varchar(100)" , index: 14 sqlType: 3 name: "rated_capacity" isKey: false updated: false isNull: true mysqlType: "decimal(20,10)" , index: 15 sqlType: 3 name: "rated_voltage" isKey: false updated: false isNull: true mysqlType: "decimal(20,10)" , index: 16 sqlType: 3 name: "rated_current" isKey: false updated: false isNull: true mysqlType: "decimal(20,10)" , index: 17 sqlType: 12 name: "protector_category" isKey: false updated: false isNull: false value: "ZBT-11C(DSP)\344\277\235\346\212\244\345\231\250" mysqlType: "varchar(100)" , index: 18 sqlType: 12 name: "protector_number" isKey: false updated: false isNull: false value: "19" mysqlType: "varchar(100)" , index: 19 sqlType: 12 name: "Substation_no" isKey: false updated: false isNull: false value: "TEST-BDS-001" mysqlType: "varchar(100)" , index: 20 sqlType: 3 name: "short_value" isKey: false updated: false isNull: false value: "2.000000000" mysqlType: "decimal(20,10)" , index: 21 sqlType: 3 name: "overcurrent_value" isKey: false updated: false isNull: false value: "1.000000000" mysqlType: "decimal(20,10)" , index: 22 sqlType: 3 name: "overload_setting_value" isKey: false updated: false isNull: false value: "3.000000000" mysqlType: "decimal(20,10)" , index: 23 sqlType: 3 name: "leakage_current1" isKey: false updated: false isNull: false value: "2.000000000" mysqlType: "decimal(20,10)" , index: 24 sqlType: 3 name: "leakage_current2" isKey: false updated: false isNull: false value: "44.000000000" mysqlType: "decimal(20,10)" , index: 25 sqlType: 3 name: "negative_sequence1" isKey: false updated: false isNull: false value: "24.000000000" mysqlType: "decimal(20,10)" , index: 26 sqlType: 3 name: "negative_sequence2" isKey: false updated: false isNull: false value: "3.000000000" mysqlType: "decimal(20,10)" , index: 27 sqlType: 3 name: "load_power" isKey: false updated: false isNull: false value: "2.000000000" mysqlType: "decimal(20,10)" , index: 28 sqlType: 3 name: "three_phase" isKey: false updated: false isNull: false value: "12.000000000" mysqlType: "decimal(20,10)" , index: 29 sqlType: 3 name: "two_phase" isKey: false updated: false isNull: false value: "12.000000000" mysqlType: "decimal(20,10)" , index: 30 sqlType: 3 name: "time_setting" isKey: false updated: false isNull: false value: "321.000000000" mysqlType: "decimal(20,10)" , index: 31 sqlType: 3 name: "negative_sequence_thime" isKey: false updated: false isNull: false value: "1.200000000" mysqlType: "decimal(20,10)" , index: 32 sqlType: 12 name: "protector_manufacturer" isKey: false updated: false isNull: false value: "2" mysqlType: "varchar(255)" ]

Obviously, such data is not easy to operate. See below

for (RowData rowData : rowChage.getRowDatasList()) {

if (eventType == EventType.DELETE) {

System.out.println("Delete operation"+rowData.getBeforeColumnsList());

} else if (eventType == EventType.INSERT) {

System.out.println("Add operation"+rowData.getAfterColumnsList());

} else {

System.out.println("-------> before");

jsonUpdate(rowData.getAfterColumnsList());

/* printColumn(rowData.getBeforeColumnsList());*/

}

}

private static void jsonUpdate( List<Column> columns){

JSONObject json=new JSONObject();

for (Column column : columns) {

json.put(column.getName(), column.getValue());

}

if(columns.size()>0){

System.out.println(("user:"+ columns.get(0).getValue()));

System.out.println("+++++++++++++++++++++++++++");

System.out.println(json);

}

}

Console

user:

+++++++++++++++++++++++++++

{"Substation_no":"TEST-BDS-001","besponsible_person":"","breaking_capacity":"","breaking_current":"","coal_mine_name":"Smart grid simulation laboratory","correspondence_address":"Test 3","device_model":"","device_name":"Switch 19","equipment_manufacturer":"","equipment_number":"19","leakage_current1":"2.000000000","leakage_current2":"44.000000000","load_power":"2.000000000","negative_sequence1":"24.000000000","negative_sequence2":"3.000000000","negative_sequence_thime":"1.200000000","operating_mechanism":"","overcurrent_value":"1.000000000","overload_setting_value":"3.000000000","preset":"","preset_status":"","protector_category":"ZBT-11C(DSP)Protector","protector_manufacturer":"2","protector_number":"19","rated_capacity":"","rated_current":"","rated_voltage":"","short_value":"2.000000000","switch_property":"Incoming switch","switch_state":"","three_phase":"12.000000000","time_setting":"321.000000000","two_phase":"12.000000000"}

extend

If you need to monitor the data of multiple databases, you need to configure the configuration file canal.properties

#################################################

######### common argument #############

#################################################

#canal.manager.jdbc.url=jdbc:mysql://127.0.0.1:3306/canal_manager?useUnicode=true&characterEncoding=UTF-8

#canal.manager.jdbc.username=root

#canal.manager.jdbc.password=121212

canal.id = 1

canal.ip =

canal.port = 11111 #Port default 11111

canal.metrics.pull.port = 11112

canal.zkServers =

# flush data to zk

canal.zookeeper.flush.period = 1000

canal.withoutNetty = false

# tcp, kafka, RocketMQ

canal.serverMode = tcp

# flush meta cursor/parse position to file

canal.file.data.dir = ${canal.conf.dir}

canal.file.flush.period = 1000

## memory store RingBuffer size, should be Math.pow(2,n)

canal.instance.memory.buffer.size = 16384

## memory store RingBuffer used memory unit size , default 1kb

canal.instance.memory.buffer.memunit = 1024

## meory store gets mode used MEMSIZE or ITEMSIZE

canal.instance.memory.batch.mode = MEMSIZE

canal.instance.memory.rawEntry = true

## detecing config

canal.instance.detecting.enable = false

#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()

canal.instance.detecting.sql = select 1

canal.instance.detecting.interval.time = 3

canal.instance.detecting.retry.threshold = 3

canal.instance.detecting.heartbeatHaEnable = false

# support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery

canal.instance.transaction.size = 1024

# mysql fallback connected to new master should fallback times

canal.instance.fallbackIntervalInSeconds = 60

# network config

canal.instance.network.receiveBufferSize = 16384

canal.instance.network.sendBufferSize = 16384

canal.instance.network.soTimeout = 30

# binlog filter config

canal.instance.filter.druid.ddl = true

canal.instance.filter.query.dcl = false

canal.instance.filter.query.dml = false

canal.instance.filter.query.ddl = false

canal.instance.filter.table.error = false

canal.instance.filter.rows = false

canal.instance.filter.transaction.entry = false

# binlog format/image check

canal.instance.binlog.format = ROW,STATEMENT,MIXED

canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

# binlog ddl isolation

canal.instance.get.ddl.isolation = false

# parallel parser config

canal.instance.parser.parallel = true

## concurrent thread number, default 60% available processors, suggest not to exceed Runtime.getRuntime().availableProcessors()

#canal.instance.parser.parallelThreadSize = 16

## disruptor ringbuffer size, must be power of 2

canal.instance.parser.parallelBufferSize = 256

# table meta tsdb info

canal.instance.tsdb.enable = true

canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}

canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;

canal.instance.tsdb.dbUsername = canal

canal.instance.tsdb.dbPassword = canal

# dump snapshot interval, default 24 hour

canal.instance.tsdb.snapshot.interval = 24

# purge snapshot expire , default 360 hour(15 days)

canal.instance.tsdb.snapshot.expire = 360

# aliyun ak/sk , support rds/mq

canal.aliyun.accessKey =

canal.aliyun.secretKey =

#################################################

######### destinations #############

#################################################

canal.destinations = example,example2

# conf root dir

canal.conf.dir = ../conf

# auto scan instance dir add/remove and start/stop instance

canal.auto.scan = true

canal.auto.scan.interval = 5

canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml

#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

canal.instance.global.mode = spring

canal.instance.global.lazy = false

#canal.instance.global.manager.address = 127.0.0.1:1099

#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml

canal.instance.global.spring.xml = classpath:spring/file-instance.xml

#canal.instance.global.spring.xml = classpath:spring/default-instance.xml

canal.destinations is a place where multiple data sources are configured. The names can be customized. Folders with corresponding names will be generated in your conf. you need to copy the previous configuration files