In the OSSIM sensor, the GET framework handles the conversion of communication protocols and data formats between the OSSIM agent and the OSSIM server. Let's take a brief look at the ossim-agent script:

    #!/usr/bin/python -OOt

    import sys

    sys.path.append('/usr/share/ossim-agent/')
    sys.path.append('/usr/local/share/ossim-agent/')

    from ossim_agent.Agent import Agent

    agent = Agent()
    agent.main()

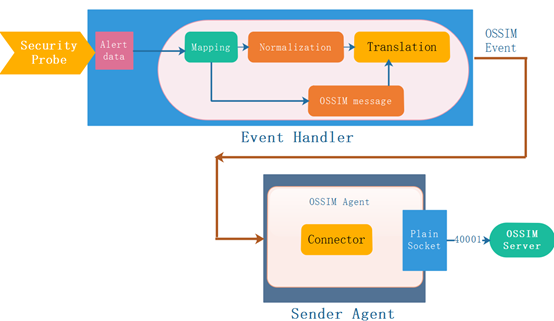

Here GET acts as the OSSIM agent and has to transport data to the OSSIM server. Two main operations are needed to achieve close integration: "mapping", which generates OSSIM-compatible events, and "transferring", which sends that data to the OSSIM server. The two components of the GET framework responsible for these operations are the Event Handler and the Sender Agent, as shown in Figure 1.

Figure 1 Integrating the GET framework into OSSIM

The Event Handler's main task is to map events captured by data source plug-ins to the standardized OSSIM event format used for SIEM instance alerts. To do this, the original message is transformed from a raw log into the normalized data field format; in the figure above, these mechanisms are labeled "Normalization" and "OSSIM message". Part of the log normalization code:

    from Logger import Logger
    from time import mktime, strptime

    logger = Logger.logger


    class Event:

        EVENT_TYPE = 'event'
        EVENT_ATTRS = [
            "type",
            "date",
            "sensor",
            "interface",
            "plugin_id",
            "plugin_sid",
            "priority",
            "protocol",
            "src_ip",
            "src_port",
            "dst_ip",
            "dst_port",
            "username",
            "password",
            "filename",
            "userdata1",
            "userdata2",
            "userdata3",
            "userdata4",
            "userdata5",
            "userdata6",
            "userdata7",
            "userdata8",
            "userdata9",
            "occurrences",
            "log",
            "data",
            "snort_sid",    # snort specific
            "snort_cid",    # snort specific
            "fdate",
            "tzone"
        ]

        def __init__(self):
            self.event = {}
            self.event["event_type"] = self.EVENT_TYPE

        def __setitem__(self, key, value):
            if key in self.EVENT_ATTRS:
                self.event[key] = self.sanitize_value(value)
                if key == "date":
                    # The date in seconds and fdate as string
                    self.event["fdate"] = self.event[key]
                    try:
                        self.event["date"] = int(mktime(strptime(self.event[key], "%Y-%m-%d %H:%M:%S")))
                    except:
                        logger.warning("There was an error parsing date (%s)" % \
                            (self.event[key]))
            elif key != 'event_type':
                logger.warning("Bad event attribute: %s" % (key))

        def __getitem__(self, key):
            return self.event.get(key, None)

        # Event representation
        def __repr__(self):
            event = self.EVENT_TYPE
            for attr in self.EVENT_ATTRS:
                if self[attr]:
                    event += ' %s="%s"' % (attr, self[attr])
            return event + "\n"

        # Returns the internal hash value
        def dict(self):
            return self.event

        def sanitize_value(self, string):
            return str(string).strip().replace("\"", "\\\"").replace("'", "")


    class EventOS(Event):

        EVENT_TYPE = 'host-os-event'
        EVENT_ATTRS = [
            "host",
            "os",
            "sensor",
            "interface",
            "date",
            "plugin_id",
            "plugin_sid",
            "occurrences",
            "log",
            "fdate",
        ]


    class EventMac(Event):

        EVENT_TYPE = 'host-mac-event'
        EVENT_ATTRS = [
            "host",
            "mac",
            "vendor",
            "sensor",
            "interface",
            "date",
            "plugin_id",
            "plugin_sid",
            "occurrences",
            "log",
            "fdate",
        ]


    class EventService(Event):

        EVENT_TYPE = 'host-service-event'
        EVENT_ATTRS = [
            "host",
            "sensor",
            "interface",
            "port",
            "protocol",
            "service",
            "application",
            "date",
            "plugin_id",
            "plugin_sid",
            "occurrences",
            "log",
            "fdate",
        ]


    class EventHids(Event):

        EVENT_TYPE = 'host-ids-event'
        EVENT_ATTRS = [
            "host",
            "hostname",
            "hids_event_type",
            "target",
            "what",
            "extra_data",
            "sensor",
            "date",
            "plugin_id",
            "plugin_sid",
            "username",
            "password",
            "filename",
            "userdata1",
            "userdata2",
            "userdata3",
            "userdata4",
            "userdata5",
            "userdata6",
            "userdata7",
            "userdata8",
            "userdata9",
            "occurrences",
            "log",
            "fdate",
        ]


    class WatchRule(Event):

        EVENT_TYPE = 'event'
        EVENT_ATTRS = [
            "type",
            "date",
            "fdate",
            "sensor",
            "interface",
            "src_ip",
            "dst_ip",
            "protocol",
            "plugin_id",
            "plugin_sid",
            "condition",
            "value",
            "port_from",
            "src_port",
            "port_to",
            "dst_port",
            "interval",
            "from",
            "to",
            "absolute",
            "log",
            "userdata1",
            "userdata2",
            "userdata3",
            "userdata4",
            "userdata5",
            "userdata6",
            "userdata7",
            "userdata8",
            "userdata9",
            "filename",
            "username",
        ]


    class Snort(Event):

        EVENT_TYPE = 'snort-event'
        EVENT_ATTRS = [
            "sensor",
            "interface",
            "gzipdata",
            "unziplen",
            "event_type",
            "plugin_id",
            "type",
            "occurrences"
        ]
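To make the mapping step concrete, here is a minimal Python 3 sketch of how an event object of this kind turns attribute assignments into a one-line message. `MiniEvent` is a hypothetical, trimmed-down stand-in written for this illustration (it is not the agent's own class), its attribute list is shortened, and the sample values such as the plugin_id are illustrative only.

```python
from time import mktime, strptime


class MiniEvent:
    """Hypothetical, reduced stand-in for the Event class (Python 3)."""

    EVENT_TYPE = "event"
    EVENT_ATTRS = ["type", "date", "plugin_id", "plugin_sid",
                   "src_ip", "dst_ip", "log", "fdate"]

    def __init__(self):
        self.event = {"event_type": self.EVENT_TYPE}

    def __setitem__(self, key, value):
        if key not in self.EVENT_ATTRS:
            # The listing above logs a warning here; this sketch raises instead.
            raise KeyError("Bad event attribute: %s" % key)
        # Same sanitising idea: trim, escape double quotes, drop single quotes.
        clean = str(value).strip().replace('"', '\\"').replace("'", "")
        self.event[key] = clean
        if key == "date":
            # Keep the readable string in fdate, store epoch seconds in date.
            self.event["fdate"] = clean
            self.event["date"] = int(mktime(strptime(clean, "%Y-%m-%d %H:%M:%S")))

    def __getitem__(self, key):
        return self.event.get(key)

    def __repr__(self):
        parts = [self.EVENT_TYPE]
        for attr in self.EVENT_ATTRS:
            if self[attr]:
                parts.append('%s="%s"' % (attr, self[attr]))
        return " ".join(parts) + "\n"


ev = MiniEvent()
ev["type"] = "detector"
ev["plugin_id"] = 1501            # illustrative value
ev["plugin_sid"] = 200            # illustrative value
ev["src_ip"] = " 192.168.11.1 "   # surrounding blanks are stripped
ev["log"] = 'GET "/index.html"'   # double quotes are escaped
print(repr(ev), end="")
```

Only attributes that were actually set appear in the output line, in the order given by `EVENT_ATTRS`, which is why the attribute list doubles as the wire format definition.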

Log processing code (the Detector class):

    import threading, time

    from Logger import Logger
    logger = Logger.logger
    from Output import Output
    import Config
    import Event
    from Threshold import EventConsolidation
    from Stats import Stats
    from ConnPro import ServerConnPro


    class Detector(threading.Thread):

        def __init__(self, conf, plugin, conn):
            self._conf = conf
            self._plugin = plugin
            self.os_hash = {}
            self.conn = conn
            self.consolidation = EventConsolidation(self._conf)
            logger.info("Starting detector %s (%s).." % \
                (self._plugin.get("config", "name"),
                 self._plugin.get("config", "plugin_id")))
            threading.Thread.__init__(self)

        def _event_os_cached(self, event):
            # Suppress host-os-events whose OS string has not changed
            if isinstance(event, Event.EventOS):
                import string
                current_os = string.join(string.split(event["os"]), ' ')
                previous_os = self.os_hash.get(event["host"], '')
                if current_os == previous_os:
                    return True
                else:
                    # Fallthrough and add to cache
                    self.os_hash[event["host"]] = \
                        string.join(string.split(event["os"]), ' ')
            return False

        def _exclude_event(self, event):
            if self._plugin.has_option("config", "exclude_sids"):
                exclude_sids = self._plugin.get("config", "exclude_sids")
                if event["plugin_sid"] in Config.split_sids(exclude_sids):
                    logger.debug("Excluding event with " +\
                        "plugin_id=%s and plugin_sid=%s" %\
                        (event["plugin_id"], event["plugin_sid"]))
                    return True
            return False

        def _thresholding(self):
            self.consolidation.process()

        def _plugin_defaults(self, event):
            # Get default parameters from configuration files
            if self._conf.has_section("plugin-defaults"):

                # 1) date
                default_date_format = self._conf.get("plugin-defaults",
                                                     "date_format")
                if event["date"] is None and default_date_format and \
                   'date' in event.EVENT_ATTRS:
                    event["date"] = time.strftime(default_date_format,
                                                  time.localtime(time.time()))

                # 2) sensor
                default_sensor = self._conf.get("plugin-defaults", "sensor")
                if event["sensor"] is None and default_sensor and \
                   'sensor' in event.EVENT_ATTRS:
                    event["sensor"] = default_sensor

                # 3) network interface
                default_iface = self._conf.get("plugin-defaults", "interface")
                if event["interface"] is None and default_iface and \
                   'interface' in event.EVENT_ATTRS:
                    event["interface"] = default_iface

                # 4) source IP
                if event["src_ip"] is None and 'src_ip' in event.EVENT_ATTRS:
                    event["src_ip"] = event["sensor"]

                # 5) time zone
                default_tzone = self._conf.get("plugin-defaults", "tzone")
                if event["tzone"] is None and 'tzone' in event.EVENT_ATTRS:
                    event["tzone"] = default_tzone

                # 6) sensor, source IP and destination IP != localhost
                if event["sensor"] in ('127.0.0.1', '127.0.1.1'):
                    event["sensor"] = default_sensor
                if event["dst_ip"] in ('127.0.0.1', '127.0.1.1'):
                    event["dst_ip"] = default_sensor
                if event["src_ip"] in ('127.0.0.1', '127.0.1.1'):
                    event["src_ip"] = default_sensor

            # The event type defaults to 'detector'
            if event["type"] is None and 'type' in event.EVENT_ATTRS:
                event["type"] = 'detector'

            return event

        def send_message(self, event):
            if self._event_os_cached(event):
                return
            if self._exclude_event(event):
                return

            # Use default values for some empty attributes
            event = self._plugin_defaults(event)

            # Check for consolidation
            if self.conn is not None:
                try:
                    self.conn.send(str(event))
                except:
                    # Sending failed: reconnect and retry once
                    id = self._plugin.get("config", "plugin_id")
                    c = ServerConnPro(self._conf, id)
                    self.conn = c.connect(0, 10)
                    try:
                        self.conn.send(str(event))
                    except:
                        return
                logger.info(str(event).rstrip())
            elif not self.consolidation.insert(event):
                Output.event(event)

            Stats.new_event(event)

        def stop(self):
            #self.consolidation.clear()
            pass

        # Override in subclasses
        def process(self):
            pass

        def run(self):
            self.process()


    class ParserSocket(Detector):

        # As listed, process() simply calls itself; a concrete parser
        # has to override it.
        def process(self):
            self.process()


    class ParserDatabase(Detector):

        def process(self):
            self.process()

... ...
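The filtering and default-filling steps that `send_message` applies before an event leaves the sensor can be summarized in a short Python 3 sketch. It works on plain dictionaries rather than `Event` objects, and the helper names `apply_defaults` and `should_skip`, the `defaults` dictionary, and all sample addresses are hypothetical simplifications, not part of the agent.

```python
import time

LOOPBACK = ("127.0.0.1", "127.0.1.1")


def apply_defaults(event, defaults):
    """Fill empty fields, loosely following _plugin_defaults (simplified)."""
    if event.get("date") is None and defaults.get("date_format"):
        event["date"] = time.strftime(defaults["date_format"], time.localtime())
    if event.get("sensor") is None and defaults.get("sensor"):
        event["sensor"] = defaults["sensor"]
    if event.get("src_ip") is None:
        event["src_ip"] = event.get("sensor")
    # A loopback address is meaningless to the server; use the sensor address.
    for field in ("sensor", "src_ip", "dst_ip"):
        if event.get(field) in LOOPBACK:
            event[field] = defaults.get("sensor")
    if event.get("type") is None:
        event["type"] = "detector"
    return event


def should_skip(event, os_cache, exclude_sids):
    """Return True for unchanged OS reports and for excluded plugin_sids."""
    if "os" in event:
        current = " ".join(event["os"].split())   # collapse whitespace
        if os_cache.get(event["host"]) == current:
            return True
        os_cache[event["host"]] = current
    return str(event.get("plugin_sid")) in exclude_sids


defaults = {"sensor": "192.168.11.10", "date_format": "%Y-%m-%d %H:%M:%S"}
event = apply_defaults({"plugin_sid": "1", "dst_ip": "127.0.0.1"}, defaults)

cache = {}
first = should_skip({"host": "10.0.0.5", "os": "Linux  2.6"}, cache, set())
second = should_skip({"host": "10.0.0.5", "os": "Linux 2.6"}, cache, set())
```

Here `first` is `False` because the host is not cached yet, while `second` is `True` because the whitespace-normalized OS string is unchanged, so the repeated report would be dropped.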

As the code above shows, normalization on the sensor mainly re-encodes the data fields of each log to generate a completely new event that can be sent to the OSSIM server. To achieve this, the GET framework contains specific functions that convert the fields requiring it into BASE64. The "OSSIM message" step is responsible for filling in fields that do not exist in the original event generated by GET. The plugin_id and plugin_sid fields mentioned above represent the source type and subtype of the log message, and both are required to generate SIEM events. To keep the event format complete, when the source or destination IP cannot be determined, the system fills the field with the default value 0.0.0.0.

Note: These mandatory fields can be inspected in OSSIM's MySQL database using the phpMyAdmin tool.
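The Base64 conversion and the filling of unknown addresses described above can be illustrated with a small Python 3 sketch. The function names `encode_field`, `decode_field`, and `fill_missing_ips` are hypothetical, the choice of which field gets encoded is an assumption, and the all-zero placeholder is written as 0.0.0.0 here; the GET framework's own functions are not shown in the source.

```python
import base64


def encode_field(value):
    """Base64-encode a text field so quotes and newlines survive transport."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_field(value):
    """Reverse of encode_field."""
    return base64.b64decode(value).decode("utf-8")


def fill_missing_ips(event, placeholder="0.0.0.0"):
    """Give unknown source/destination addresses a placeholder value."""
    for field in ("src_ip", "dst_ip"):
        if not event.get(field):
            event[field] = placeholder
    return event


raw = 'sshd[1024]: Failed password for "root" from 10.0.0.7'
event = fill_missing_ips({
    "plugin_id": "4003",      # illustrative value
    "plugin_sid": "1",        # illustrative value
    "src_ip": "10.0.0.7",
    "log": encode_field(raw),
})
```

After this runs, `event["dst_ip"]` holds the placeholder because no destination was known, and `decode_field(event["log"])` returns the raw line unchanged.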

The Sender Agent is responsible for the following two tasks:

1) Send the events collected by GET and formatted by the Event Handler to the OSSIM server. To do this, the Event Handler creates message queues and sends them to the message middleware. The sequence diagram is shown in Figure 2.

Figure 2 Sequence diagram: Conversion from security detector logs to OSSIM server events

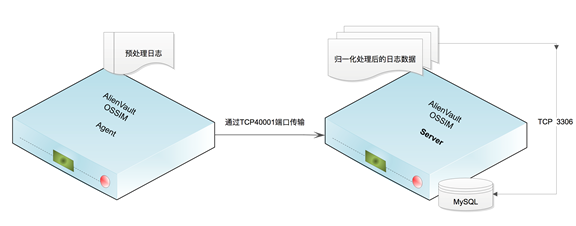

2) Manage the communication between the GET framework and the OSSIM server. The communication uses TCP port 40001 with a two-way handshake.

Preserving the original log is an important part of the normalization process. OSSIM keeps the original log while normalizing it; the original can be used for log archiving, and it provides a way to extract the original log from a normalized event.
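The transport side of the Sender Agent can be pictured with the following Python 3 sketch, which keeps one TCP connection open and reconnects once when a send fails, similar in spirit to the retry in `send_message`. The class name `EventSender` and its parameters are hypothetical, and the handshake is left out because the source does not describe its messages.

```python
import socket


class EventSender:
    """Hypothetical sender: one TCP connection, one reconnect on failure."""

    def __init__(self, host, port=40001, timeout=10.0):
        self.addr = (host, port)
        self.timeout = timeout
        self.sock = None

    def connect(self):
        self.sock = socket.create_connection(self.addr, timeout=self.timeout)

    def send(self, message):
        """Send one event line; return True on success, False otherwise."""
        data = message.encode("utf-8")
        for _ in range(2):            # first try plus one retry
            try:
                if self.sock is None:
                    self.connect()
                self.sock.sendall(data)
                return True
            except OSError:
                # Drop the broken socket so the retry reconnects.
                self.close()
        return False

    def close(self):
        if self.sock is not None:
            try:
                self.sock.close()
            finally:
                self.sock = None


# Usage (needs a reachable server, so it is left commented out):
# sender = EventSender("192.168.11.160")
# sender.send('event type="detector" plugin_id="1501"\n')
# sender.close()
```

Returning `False` instead of raising keeps a detector thread alive when the server is unreachable; whether dropped events should be queued for later delivery is a design decision this sketch does not address.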

The normalized events are stored in the MySQL database, as shown in Figure 3. The correlation engine then performs cross-correlation analysis according to the rules, priority, reliability, and other parameters, computes a risk value, and issues the corresponding alarms.

Figure 3 Log storage mechanism

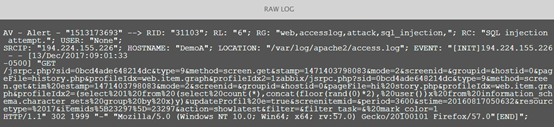
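As a rough illustration of how priority and reliability feed into the risk value, the following Python 3 sketch uses the formula commonly given in OSSIM documentation, risk = asset value × priority × reliability / 25. The value ranges (asset value and priority 0 to 5, reliability 0 to 10) and the function name `risk` are assumptions for this example, not taken from the text above.

```python
def risk(asset_value, priority, reliability):
    """Risk on a 0..10 scale: asset * priority * reliability / 25."""
    if not 0 <= asset_value <= 5:
        raise ValueError("asset_value must be between 0 and 5")
    if not 0 <= priority <= 5:
        raise ValueError("priority must be between 0 and 5")
    if not 0 <= reliability <= 10:
        raise ValueError("reliability must be between 0 and 10")
    return asset_value * priority * reliability / 25


low = risk(2, 1, 2)      # minor event on an ordinary host
high = risk(5, 5, 10)    # worst case on the most valuable asset
```

Dividing by 25 scales the largest possible product (5 × 5 × 10 = 250) down to 10, so the result always stays within the 0 to 10 range.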

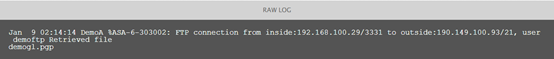

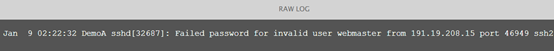

Next, let's look at an example. Figures 4, 5, and 6 show original logs from Apache, Cisco ASA, and SSH.

Figure 4 Apache original log

Figure 5 Cisco ASA original log

Figure 6 SSH original log

After normalization by OSSIM, the events are displayed in the Web front end in a format that is easy to read. The comparison between normalized events and original logs is explained in Open Source Security Operations and Maintenance Platform OSSIM Difficulty Resolution: Introduction Chapter.

Figure 7 Apache access log after normalization

In the example shown in Figure 7, only Userdata1 and Userdata2 are used. Userdata3 through Userdata9 are unused extension fields, reserved mainly for other devices or services. The destination address is shown as an IP address, such as Host 192.168.11.160. Normalization takes place after events are collected and stored, and before correlation and data analysis. In a SIEM tool, data is converted into a readable format during collection, and the formatted data is easier to understand.
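To show what such a normalization might look like for an Apache access log, here is a minimal Python 3 sketch that parses one line in the common log format into normalized fields. The regular expression, the function name `normalize`, the use of the HTTP status as plugin_sid, and the choice of what goes into userdata1 and userdata2 are all assumptions made for illustration; the actual OSSIM Apache plugin may map the fields differently.

```python
import re
from datetime import datetime

# Common log format: host ident user [date] "request" status size
LINE = re.compile(
    r'(?P<src_ip>\S+) \S+ \S+ \[(?P<date>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)


def normalize(line, sensor_ip):
    """Map one access-log line to a dict of normalized event fields."""
    match = LINE.match(line)
    if match is None:
        return None                       # not an access-log line
    stamp = datetime.strptime(match.group("date"), "%d/%b/%Y:%H:%M:%S %z")
    return {
        "type": "detector",
        "date": stamp.strftime("%Y-%m-%d %H:%M:%S"),
        "src_ip": match.group("src_ip"),
        "dst_ip": sensor_ip,              # the web server itself
        "plugin_sid": match.group("status"),
        "userdata1": match.group("request"),   # assumed mapping
        "userdata2": match.group("size"),      # assumed mapping
        "log": line,                      # original log is preserved
    }


sample = ('192.168.11.1 - - [10/Oct/2019:13:55:36 +0800] '
          '"GET /index.html HTTP/1.1" 200 2326')
event = normalize(sample, "192.168.11.160")
```

The remaining userdata fields stay unset in this sketch, mirroring the observation that only Userdata1 and Userdata2 are populated for this log type.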