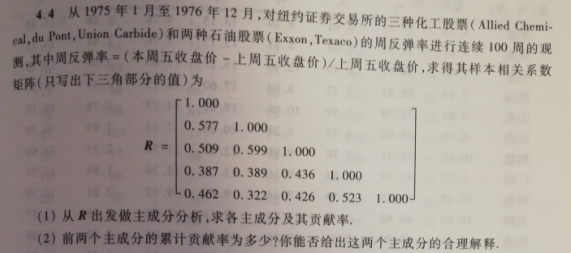

Question 1

# Guide Pack import numpy as np import pandas as pd

# Incoming Matrix Parameter R1 = np.array([[1, 0.577, 0.509, 0.387, 0.462], [0.577, 1, 0.599, 0.389, 0.322], [0.509, 0.599, 1, 0.436, 0.426], [0.387, 0.389, 0.436, 1, 0.523], [0.462, 0.322, 0.426, 0.523, 1]])

# Finding the parameters and eigenvectors of a matrix eigValues1, eigVects1 = np.linalg.eig(R1) eigValues1, eigVects1

(array([2.85671099, 0.80916372, 0.34294995, 0.53967524, 0.4515001 ]),

array([[ 0.46360519, 0.24033901, 0.45126217, 0.61170545, -0.38663456],

[ 0.45710783, 0.50930473, -0.67622331, -0.17818949, -0.20647436],

[ 0.47017564, 0.26044828, 0.40000721, -0.33505645, 0.66244469],

[ 0.42145876, -0.5256649 , 0.17559859, -0.54076277, -0.47200602],

[ 0.42122445, -0.58196989, -0.38502448, 0.43517554, 0.38243876]]))

Set No. i i i Principal Component Y i = ∑ k = 1 n e k X k Y_i=\sum_{k=1}^{n} e_kX_k Yi =k=1n ek Xk. Based on mathematical derivation, the orthogonal unit feature vector corresponding to the eigenvalues from large to small is the combination coefficient of the corresponding principal components, and the resulting principal components satisfy Y 1 Y_1 Y1 Contains as much information as possible, Y i ( 2 ≤ i ≤ n ) Y_i(2 \leq i \leq n) Yi (2 < i < n) contains as much as possible except Y k ( k ≤ i − 1 ) Y_k(k \leq i-1) Information other than Yk (k < i_1) is equal to the variance of each principal component.

Making principal component analysis from correlation matrix is equivalent to making principal component analysis from the covariance matrix of standardized data, and the eigenvalues and eigenvectors are:

| characteristic value | feature vector |

|---|---|

| λ 1 \lambda_1 λ1=2.857 | e 1 e_1 e1=(0.467,0.457,0.470,0.421,0.421) |

| λ 2 \lambda_2 λ2=0.809 | e 2 e_2 e2=(0.240,0.509,0.260,-0.526,-0.581) |

| λ 3 \lambda_3 λ3=0.540 | e 3 e_3 e3=(0.612,-0.178,-0.335,-0.541,0.435) |

| λ 4 \lambda_4 λ4=0.452 | e 4 e_4 e4=(-0.387,-0.206,0.662,-0.472,0.382) |

| λ 5 \lambda_5 λ5=0.343 | e 5 e_5 e5=(0.451,-0.676,0.400,0.176,-0.385) |

therefore

X

∗

X^{*}

The five principal components of X_are:

Y

k

=

e

k

X

∗

Y_k=e_kX^{*}

Yk=ekX∗

# Calculate contribution ratio

def contributionRate(values):

v = list(values)

l = len(v)

result = np.array([v[i]*100 / sum(v) for i in range(l)])

add = np.empty([l, ])

add[0] = result[0]

for i in range(1, l):

add[i] = add[i-1] + result[i]

return [result, add]

contribution = pd.DataFrame() # Create a data frame with variable names and eigenvalues contribution['eigValues'] = eigValues1 # characteristic value contribution['Contribution ratio'] = contributionRate(eigValues1)[0] contribution['Cumulative contribution ratio'] = contributionRate(eigValues1)[1] contribution

| eigValues | Contribution ratio | Cumulative contribution ratio | |

|---|---|---|---|

| 0 | 2.856711 | 57.134220 | 57.134220 |

| 1 | 0.809164 | 16.183274 | 73.317494 |

| 2 | 0.342950 | 6.858999 | 80.176493 |

| 3 | 0.539675 | 10.793505 | 90.969998 |

| 4 | 0.451500 | 9.030002 | 100.000000 |

Because of the k k Contribution ratio of k principal components= λ k ∑ i = 1 5 λ i \frac{\lambda_k}{\sum_{i=1}^{5} \lambda_i} _i=15 λ I λ k, the contribution rates of each principal component are 57.1%, 16.2%, 6.86%, 10.80%, 9.03%. The cumulative contribution of the first two principal components was 73.3%.

The meaning of the first principal component is the weighted sum of all the indicators. When the first principal component is large, it can be inferred that each rebound rate is large, so it can be called the "total rebound rate" factor. When the second principal component is larger, the rebound rate is smaller and larger, so the second principal component can be called the "oil stock rebound rate" factor.

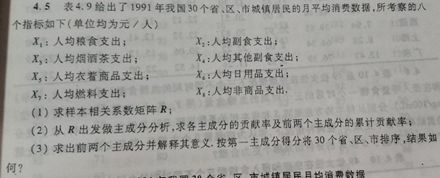

Question 2

Field information:

| index | Meaning |

|---|---|

| X 1 X_1 X1 | Food expenditure per capita |

| X 2 X_2 X2 | Food expenditure per capita |

| X 3 X_3 X3 | Per capita expenditure on tobacco, alcohol and tea |

| X 4 X_4 X4 | Other side food expenditure per capita |

| X 5 X_5 X5 | Cost per capita on clothing goods |

| X 6 X_6 X6 | Per capita commodity expenditure |

| X 7 X_7 X7 | Per capita fuel expenditure |

| X 8 X_8 X8 | Per capita non-commodity expenditure |

Question 1: Find the correlation coefficient matrix R

# Data Preprocessing

data = pd.read_excel('4.2.xlsx',index_col=0)

# 0 Mean Normalization data = (data - data.mean()) / data.std()

#Correlation matrix R2 = data.corr() R2

| X1 | X2 | X3 | X4 | X5 | X6 | X7 | X8 | |

|---|---|---|---|---|---|---|---|---|

| X1 | 1.000000 | 0.333647 | -0.054539 | -0.061254 | -0.289361 | 0.198796 | 0.348698 | 0.318677 |

| X2 | 0.333647 | 1.000000 | -0.022902 | 0.398931 | -0.156304 | 0.711134 | 0.413595 | 0.834952 |

| X3 | -0.054539 | -0.022902 | 1.000000 | 0.533329 | 0.496763 | 0.032830 | -0.139086 | -0.258358 |

| X4 | -0.061254 | 0.398931 | 0.533329 | 1.000000 | 0.698424 | 0.467917 | -0.171274 | 0.312757 |

| X5 | -0.289361 | -0.156304 | 0.496763 | 0.698424 | 1.000000 | 0.280129 | -0.208277 | -0.081234 |

| X6 | 0.198796 | 0.711134 | 0.032830 | 0.467917 | 0.280129 | 1.000000 | 0.416821 | 0.701586 |

| X7 | 0.348698 | 0.413595 | -0.139086 | -0.171274 | -0.208277 | 0.416821 | 1.000000 | 0.398868 |

| X8 | 0.318677 | 0.834952 | -0.258358 | 0.312757 | -0.081234 | 0.701586 | 0.398868 | 1.000000 |

From the table above, we can see that there is a strong relationship between variables, so it is suitable to do principal component analysis and factor analysis to achieve the purpose of dimension reduction.

Question 2: Principal component analysis

# Computing eigenvalues and eigenvectors eigValues2, eigVects2 = np.linalg.eig(R2) eig = pd.DataFrame() # Create a data frame with variable names and eigenvalues eig['names'] = data.columns # Column Name eig['eigValues'] = eigValues2 # characteristic value eig

| names | eigValues | |

|---|---|---|

| 0 | X1 | 3.096288 |

| 1 | X2 | 2.367223 |

| 2 | X3 | 0.919987 |

| 3 | X4 | 0.705925 |

| 4 | X5 | 0.498442 |

| 5 | X6 | 0.051470 |

| 6 | X7 | 0.130776 |

| 7 | X8 | 0.229888 |

# Determine the number of common factors

from math import sqrt

for k in range(1, 9):

if eig['eigValues'][:k].sum() / eig['eigValues'].sum() >= 0.8: # If the explanatory power reaches 80%, end the cycle

print(k)

break

4

Because of the k k Contribution ratio of k principal components= λ k ∑ i = 1 8 λ i \frac{\lambda_k}{\sum_{i=1}^{8} \lambda_i} ∑i=18λiλk

# Calculate contribution ratio contribution = pd.DataFrame() # Create a data frame with variable names and eigenvalues contribution['names'] = data.columns # Column Name contribution['eigValues'] = eigValues2 # characteristic value contribution['Contribution ratio'] = contributionRate(eigValues2)[0] contribution['Cumulative contribution ratio'] = contributionRate(eigValues2)[1] contribution

| names | eigValues | Contribution ratio | Cumulative contribution ratio | |

|---|---|---|---|---|

| 0 | X1 | 3.096288 | 38.703604 | 38.703604 |

| 1 | X2 | 2.367223 | 29.590288 | 68.293892 |

| 2 | X3 | 0.919987 | 11.499842 | 79.793734 |

| 3 | X4 | 0.705925 | 8.824067 | 88.617801 |

| 4 | X5 | 0.498442 | 6.230529 | 94.848330 |

| 5 | X6 | 0.051470 | 0.643369 | 95.491699 |

| 6 | X7 | 0.130776 | 1.634697 | 97.126396 |

| 7 | X8 | 0.229888 | 2.873604 | 100.000000 |

Question 3: Factor analysis

# Constructing the initial factor load matrix col0 = list(sqrt(eigValues2[0]) * eigVects2[:,0]) #Factor Load Matrix Column 1 col1 = list(sqrt(eigValues2[1]) * eigVects2[:,1]) #Factor Load Matrix Column 2 col2 = list(sqrt(eigValues2[2]) * eigVects2[:,2]) #Factor Load Matrix Column 3 col3 = list(sqrt(eigValues2[3]) * eigVects2[:,3]) #Factor Load Matrix Column 4 A = pd.DataFrame([col0, col1, col2, col3]).T #Construction factor load matrix A A.columns=['X1','X2','X3','X4'] #Common factor of factor load matrix A A

| X1 | X2 | X3 | X4 | |

|---|---|---|---|---|

| 0 | 0.439215 | 0.371164 | -0.665578 | 0.316560 |

| 1 | 0.913659 | 0.057862 | 0.068351 | 0.188935 |

| 2 | -0.032518 | -0.731499 | -0.554221 | -0.027204 |

| 3 | 0.447107 | -0.827879 | 0.020887 | 0.194140 |

| 4 | 0.038175 | -0.885373 | 0.046123 | -0.239764 |

| 5 | 0.866903 | -0.207209 | 0.139412 | -0.188390 |

| 6 | 0.558060 | 0.401080 | -0.274694 | -0.645366 |

| 7 | 0.896234 | 0.133982 | 0.260200 | 0.148706 |

# Establish Factor Model h = np.zeros(8) #Variable commonality, reflecting the dependence of variables on common factors, approaches 1, indicating that the higher the degree of explanations of common factors, the better the effect of factor analysis D = np.mat(np.eye(8))#Special factor variance, the degree of variance contribution of a factor, reflecting the contribution of a common factor to a variable, and measuring the relative importance of a common factor A = np.mat(A) #Matrix Factor Load Matrix A

for i in range(8):

a = A[i,:] * A[i,:].T #Sum of row squares of elements of A

h[i] = a[0,0] #Calculates the commonality of the variable X, describing all the common factors F to the variable X_ The contribution of the total variance of i, and the variable X_i The part of the variance that can be explained by all factors

D[i,i] = 1 - a[0,0] #Because the normalized variance of the independent variable matrix is 1, that is, Var(X_i)=the first common degree h_i +Variance of Special Factor I I

Linear combination of factors as variables

def varimax(Phi, gamma = 1.0, q =10, tol = 1e-6): #Define the maximum variance rotation function

p, k = Phi.shape #Gives the total number of rows and columns of the matrix Phi

R = np.eye(k) #Given a unit matrix of k*k

d = 0

for i in range(q):

d_old = d

Lambda = np.dot(Phi, R)#Matrix Multiplication

u, s, vh = np.linalg.svd(np.dot(Phi.T, np.asarray(Lambda) ** 3 - (gamma / p) * np.dot(Lambda, np.diag(np.diag(np.dot(Lambda.T,Lambda)))))) #Singular Value Decomposition svd

R = np.dot(u,vh)#Constructing Orthogonal Matrix R

d = np.sum(s)#Sum of singular values

if d_old != 0 and d / d_old:

return np.dot(Phi, R)#Returns the rotation matrix Phi*R

rotation_mat = varimax(A) # Calling the maximum variance rotation function

rotation_mat = pd.DataFrame(rotation_mat) # Data Frame

rotation_mat

| 0 | 1 | 2 | 3 | |

|---|---|---|---|---|

| 0 | 0.203150 | 0.062124 | -0.892652 | -0.178661 |

| 1 | 0.889045 | -0.029496 | -0.262154 | -0.135988 |

| 2 | -0.214454 | -0.871857 | -0.194565 | 0.008240 |

| 3 | 0.481798 | -0.792082 | 0.077324 | 0.240652 |

| 4 | 0.033408 | -0.819782 | 0.413444 | 0.029366 |

| 5 | 0.804396 | -0.274344 | 0.064507 | -0.350578 |

| 6 | 0.255416 | 0.127864 | -0.165170 | -0.924869 |

| 7 | 0.933336 | 0.109739 | -0.110305 | -0.125212 |

# Calculating factor score data_ = np.mat(data) #Matrix processing factor_score = (data_).dot(A) #Calculating factor score factor_score = pd.DataFrame(factor_score)#Data Frame factor_score.columns = ['X1','X2','X3','X4'] #Naming factor variables factor_score.index = data.index factor_score['sum'] = factor_score.sum(axis=1)

Sorting results

factor_score = factor_score.sort_values(by = ['sum'], ascending=False) factor_score

| X1 | X2 | X3 | X4 | sum | |

|---|---|---|---|---|---|

| region | |||||

| Guangdong | 12.134223 | 3.505384 | -0.780327 | -1.320523 | 13.538757 |

| Hainan | 2.465522 | 4.890210 | -1.567251 | 1.625074 | 7.413554 |

| Guangxi | 1.859072 | 1.900515 | 1.539035 | -0.063227 | 5.235395 |

| Shanghai | 5.716003 | -3.940215 | 1.603553 | 1.666736 | 5.046078 |

| Fujian | 2.030434 | 2.114319 | -0.799141 | 0.606531 | 3.952143 |

| Hunan | -0.911514 | 0.849711 | 1.107383 | -0.187768 | 0.857812 |

| Jiangxi | -3.450478 | 3.243735 | 0.989421 | -0.075377 | 0.707301 |

| Sichuan | -0.237351 | -0.528700 | 1.121904 | 0.133922 | 0.489775 |

| Tianjin | 0.766193 | -0.729420 | -0.647183 | 1.044525 | 0.434115 |

| Beijing | 3.153503 | -4.443551 | 1.185859 | 0.457701 | 0.353514 |

| Yunnan | -1.173142 | 0.454616 | -0.215400 | 0.962859 | 0.028933 |

| Xinjiang | -1.440261 | 0.648165 | 1.237420 | -0.432477 | 0.012847 |

| Jiangsu | 0.269736 | -0.174160 | 0.491318 | -0.609942 | -0.023048 |

| Hubei | -0.299919 | 0.907169 | -0.123593 | -0.584738 | -0.101081 |

| Shaanxi | -1.078188 | 0.434750 | 0.037484 | 0.254255 | -0.351699 |

| Zhejiang | 2.665959 | -2.113285 | -0.145554 | -0.905556 | -0.498436 |

| Guizhou | -2.215974 | 0.658934 | 0.294098 | 0.350311 | -0.912631 |

| Anhui | -1.961910 | 0.677642 | -0.210394 | 0.521765 | -0.972897 |

| HEBEI | -0.690123 | 0.454840 | -0.414944 | -0.635875 | -1.286102 |

| Jilin | -2.276431 | 1.323683 | 0.105062 | -0.566823 | -1.414510 |

| Liaoning | 0.079538 | -1.508676 | 0.178047 | -0.325763 | -1.576853 |

| Shandong | -0.248310 | -1.049270 | -0.064522 | -0.451012 | -1.813114 |

| Ningxia | -0.757336 | -0.993462 | 0.136768 | -0.373849 | -1.987879 |

| Gansu | -2.080281 | 0.296880 | -0.726723 | 0.027185 | -2.482939 |

| Inner Mongolia | -2.213952 | 0.203457 | -0.289416 | -0.190353 | -2.490265 |

| Henan | -2.614718 | 0.540896 | -0.702427 | 0.131479 | -2.644770 |

| Shanxi | -2.964064 | -0.257612 | 0.155919 | 0.194874 | -2.870882 |

| Heilongjiang | -2.332689 | 0.157537 | 0.222618 | -1.009044 | -2.961577 |

| Qinghai | -1.959088 | 0.027927 | -1.477425 | 0.137006 | -3.271581 |

| Tibet | -0.234453 | -7.552021 | -2.241588 | -0.381896 | -10.409958 |

The first principal component is all indicators except the weighting of per capita non-staple food expenditure. When the first principal component is large, it can be inferred that all kinds of daily life expenditure are large, so it can be called "daily life expenditure" factor. When the second principal component is larger, the per capita expenditure on food, by-food, fuel and non-commodities is smaller, while the per capita expenditure on tobacco, alcohol, tea, other by-food, clothing and commodities is larger, so the second principal component can be called the "unnecessary expenditure" factor.

Factor analysis is a multivariate statistical analysis method to study how to concentrate many original variables into a few factors with minimal loss of information, and how to make factors have some explanatory nomenclature.

Its matrix form is

X

=

A

F

+

ε

X=AF+\varepsilon

X=AF+ε

KMO spherical test should be carried out before factor analysis of data. The P value = 0.000 is very significant, so it meets the precondition of factor analysis.

Question 3

Table 3 below shows the head length (X1), head width (X2) and head length (Y1) and head width (Y2) of adult offspring from 25 families. Try from the sample covariance matrix, respectively. Σ Starting from the sample correlation coefficient matrix R, do the canonical correlation analysis to find out the typical variable pairs and the canonical correlation coefficients, and test whether the typical variable pairs are significantly related (=0.05). What are the differences between the results in the two cases?

# Guide Pack

df = pd.read_excel('4.3.xlsx')

# input X = df[['X1', 'X2']] y = df[['Y1', 'Y2']]

# Modeling

import scipy

from sklearn.cross_decomposition import CCA

def cca(X, Y, k):

l = len(k)

reu = []

for i in range(l):

cca = CCA(n_components=k[i])

# Training data

cca.fit(X, y)

# Dimension Reduction Operation

X_train_r, y_train_r = cca.transform(X, y)

reu.append(scipy.stats.pearsonr(X_train_r[:, k[i]-1], y_train_r[:, k[i]-1])) # Output correlation coefficient

return reu

analysis = pd.DataFrame() r = cca(X, y, [1, 2]) analysis['corr'] = r[0] analysis['p-value'] = r[1] analysis

| corr | p-value | |

|---|---|---|

| 0 | 0.788508 | 0.053740 |

| 1 | 0.000003 | 0.798626 |

The canonical correlation coefficient of the first pair of canonical correlation variables is 0.789 and the P value is 0.056 for both standardized and non-standardized data. The second pair of canonical correlation variables has a canonical correlation coefficient of 0.0003 and a P value of 0.797, which makes it obvious that the second pair of canonical variables cannot provide information about X and Y.