Preface

Processes and threads are fundamental abstractions of the operating system. Understanding them helps you write more reliable and efficient code.

Process

When the operating system executes a program, the running unit is a process. A program can be executed many times, producing multiple processes. For example, every time the Google Chrome browser is launched, it starts a process that provides web browsing to the user.

Thread

A thread is the smallest unit of execution in the operating system, and also the smallest unit of task scheduling.

Thread creation

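Threads can be created with `new Thread` (subclassing `Thread`), by passing a `Runnable` to a `Thread`, or by submitting tasks to an `ExecutorService`.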
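A minimal sketch of the three approaches using only the JDK. The class and method names (`ThreadCreationDemo`, `HelloThread`, `runAllThree`) are illustrative, not from the original text.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ThreadCreationDemo {
    // 1. Subclass Thread and override run()
    static class HelloThread extends Thread {
        volatile String result;

        @Override
        public void run() {
            result = "subclass";
        }
    }

    static String[] runAllThree() {
        try {
            HelloThread t1 = new HelloThread();
            t1.start();
            t1.join();

            // 2. Pass a Runnable to the Thread constructor
            String[] holder = new String[1];
            Thread t2 = new Thread(() -> holder[0] = "runnable", "runnable-thread");
            t2.start();
            t2.join(); // join() makes the write to holder[0] visible here

            // 3. Submit work to an ExecutorService (a thread pool)
            ExecutorService pool = Executors.newSingleThreadExecutor();
            try {
                Future<String> f = pool.submit(() -> "executor");
                return new String[] {t1.result, holder[0], f.get()};
            } finally {
                pool.shutdown();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

In application code the `ExecutorService` route is usually preferred, because it reuses threads instead of creating one per task.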

Thread information

Thread information can be accessed through the `java.lang.Thread` class.
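For example, a few of the properties exposed by `java.lang.Thread` can be read like this (a sketch; `ThreadInfoDemo` and the thread name `info-demo` are illustrative):

```java
public class ThreadInfoDemo {
    // Reads a few commonly inspected properties through java.lang.Thread.
    static String describe(Thread t) {
        return "name=" + t.getName()
                + ", priority=" + t.getPriority()
                + ", daemon=" + t.isDaemon()
                + ", state=" + t.getState()
                + ", alive=" + t.isAlive();
    }

    static String describeUnstarted() {
        Thread t = new Thread(() -> { }, "info-demo");
        t.setDaemon(true);                   // must be set before start()
        t.setPriority(Thread.NORM_PRIORITY); // otherwise inherited from the creating thread
        return describe(t);
    }
}
```

Calling `describe(Thread.currentThread())` gives the same information for the running thread.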

Thread priority

Threads are scheduled by the operating system, which generally uses preemptive scheduling: a thread's priority determines how likely it is to obtain a CPU time slice, with higher-priority threads more likely to be scheduled.
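In Java, a priority is an `int` between `Thread.MIN_PRIORITY` (1) and `Thread.MAX_PRIORITY` (10), with `Thread.NORM_PRIORITY` (5) as the usual default. It is only a hint: how, and whether, it maps to OS priorities depends on the JVM and platform. A small sketch with illustrative names:

```java
public class PriorityDemo {
    // Priorities are hints to the scheduler, not guarantees of execution order.
    static int[] priorities() {
        Thread low = new Thread(() -> { }, "low");
        Thread high = new Thread(() -> { }, "high");
        low.setPriority(Thread.MIN_PRIORITY);
        high.setPriority(Thread.MAX_PRIORITY); // capped by the thread group's maximum
        return new int[] {low.getPriority(), high.getPriority()};
    }

    // Values outside 1..10 are rejected with IllegalArgumentException.
    static boolean rejectsOutOfRange() {
        try {
            new Thread(() -> { }).setPriority(11);
            return false;
        } catch (IllegalArgumentException e) {
            return true;
        }
    }
}
```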

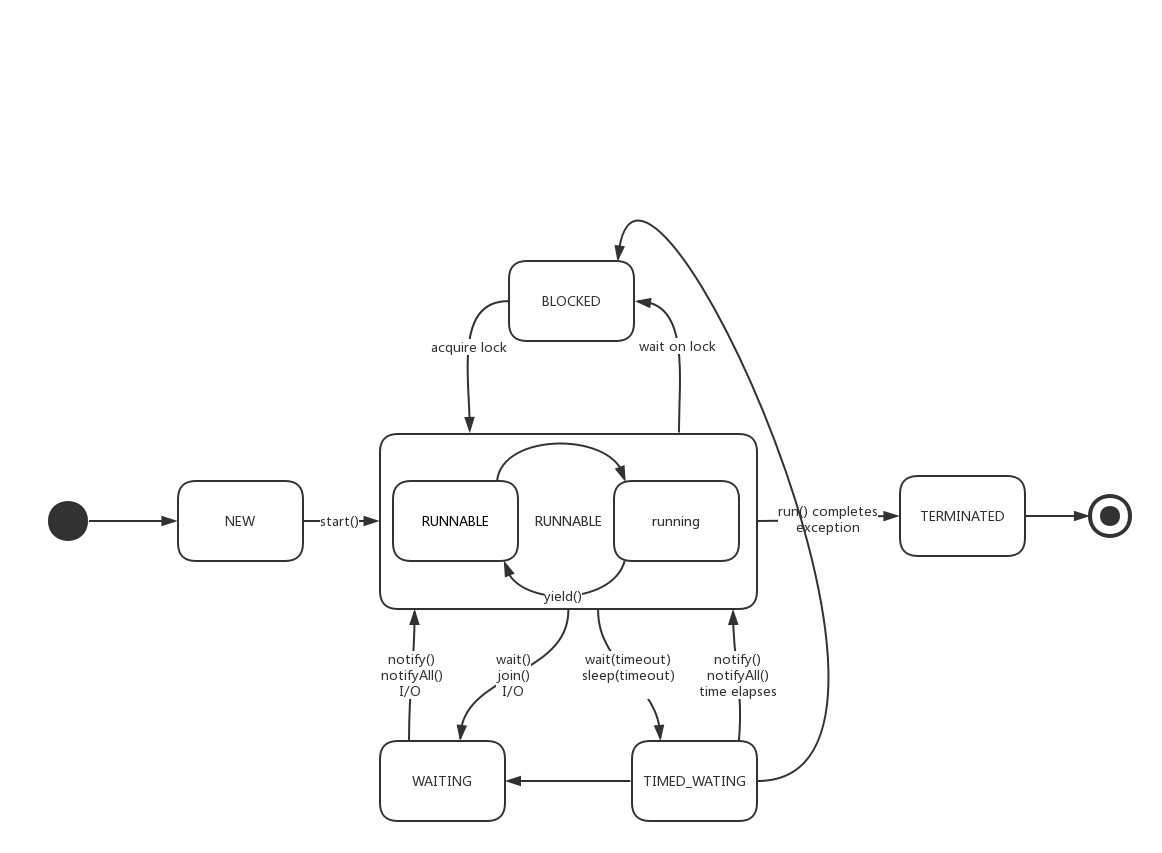

Thread states

Thread states can be observed with VisualVM; see the referenced blog post for details.
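Besides VisualVM, a thread's state can be read in code with `Thread.getState()`, which returns a `Thread.State` (`NEW`, `RUNNABLE`, `BLOCKED`, `WAITING`, `TIMED_WAITING`, `TERMINATED`). The sketch below (illustrative names) walks a thread through `NEW`, `WAITING` and `TERMINATED`:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

public class ThreadStateDemo {
    static List<Thread.State> lifecycle() {
        CountDownLatch release = new CountDownLatch(1);
        Thread t = new Thread(() -> {
            try {
                release.await(); // parks without a timeout: WAITING
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        List<Thread.State> states = new ArrayList<>();
        states.add(t.getState()); // NEW: created but not started
        t.start();
        Thread.State observed;
        while ((observed = t.getState()) != Thread.State.WAITING) {
            Thread.onSpinWait(); // wait until the thread has parked in await()
        }
        states.add(observed);
        release.countDown();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        states.add(t.getState()); // TERMINATED: run() has returned
        return states;
    }
}
```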

InterruptedException

After a thread catches `InterruptedException`, it should handle the interruption correctly: either propagate the exception, or call `Thread.currentThread().interrupt()` to restore the interrupt status so that code relying on that status still behaves correctly.
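The pattern can be sketched as follows. When `InterruptedException` is thrown, the thread's interrupt status has already been cleared, which is why it has to be restored. `InterruptDemo` is an illustrative name.

```java
import java.util.concurrent.atomic.AtomicBoolean;

public class InterruptDemo {
    // Returns true if the worker still sees its interrupt status after catching InterruptedException.
    static boolean runAndInterrupt() {
        AtomicBoolean restored = new AtomicBoolean(false);
        Thread worker = new Thread(() -> {
            try {
                Thread.sleep(10_000);
            } catch (InterruptedException e) {
                // The status was cleared when the exception was thrown; restore it
                Thread.currentThread().interrupt();
            }
            restored.set(Thread.currentThread().isInterrupted());
        });
        worker.start();
        worker.interrupt(); // also works if the worker has not reached sleep() yet
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return restored.get();
    }
}
```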

Thread pool

How thread pool works

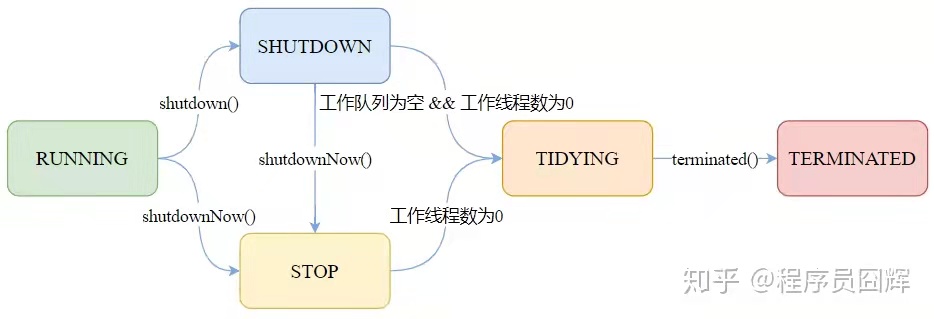
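For reference, `java.util.concurrent.ThreadPoolExecutor.execute` decides in this order: start a core thread if fewer than `corePoolSize` threads are running; otherwise try to queue the task; if the queue is full, start a non-core thread up to `maximumPoolSize`; otherwise reject the task. The sketch below (illustrative names and sizes) makes that order visible with tasks that block until released:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.*;

public class PoolOrderDemo {
    // 1 core thread, 2 max threads, queue of 1: records where each of four tasks ends up.
    static List<String> observe() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 60, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1),
                new ThreadPoolExecutor.AbortPolicy());
        Runnable blocker = () -> {
            try {
                release.await(); // keep every thread busy so tasks cannot drain
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        List<String> log = new ArrayList<>();
        for (int i = 1; i <= 4; i++) {
            try {
                pool.execute(blocker);
                log.add("task" + i + ": threads=" + pool.getPoolSize() + ", queued=" + pool.getQueue().size());
            } catch (RejectedExecutionException e) {
                log.add("task" + i + ": rejected");
            }
        }
        release.countDown();
        pool.shutdown();
        return log;
    }
}
```

Task 1 gets the core thread, task 2 is queued, task 3 gets a non-core thread, and task 4 is rejected.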

Thread pool state transitions
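As a sketch of the state transitions: internally a `ThreadPoolExecutor` moves through RUNNING, SHUTDOWN (after `shutdown()`), STOP (after `shutdownNow()`), TIDYING and TERMINATED. These states are not exposed directly, so the example below (illustrative names) approximates them with the public `isTerminating()` and `isTerminated()` methods:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.*;

public class PoolStateDemo {
    static String stateOf(ThreadPoolExecutor pool) {
        if (pool.isTerminated()) {
            return "TERMINATED";
        }
        if (pool.isTerminating()) {
            return "TERMINATING"; // SHUTDOWN, STOP or TIDYING
        }
        return "RUNNING";
    }

    static List<String> transitions() {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        pool.execute(() -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        List<String> states = new ArrayList<>();
        states.add(stateOf(pool)); // accepting tasks
        pool.shutdown();           // no new tasks; the running task may finish
        states.add(stateOf(pool));
        release.countDown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS); // last worker exits: TIDYING, then TERMINATED
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        states.add(stateOf(pool));
        return states;
    }
}
```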

Do not create thread pools with the static factory methods of `Executors`: they either use an unbounded queue (which can easily cause an OOM) or allow an unbounded number of threads (which can easily saturate the CPU). Instead, create pools with the `ThreadPoolExecutor` constructor, sized for the actual workload.
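A minimal sketch of creating a pool through the constructor, with a bounded queue, a bounded number of threads, named threads and an explicit rejection policy. The sizes, the `order-pool` prefix and the choice of `CallerRunsPolicy` are assumptions for illustration only; real values should come from the workload.

```java
import java.util.concurrent.*;
import java.util.concurrent.atomic.AtomicInteger;

public class BoundedPoolFactory {
    // Names threads "<prefix>-1", "<prefix>-2", ... so they are easy to spot in VisualVM or jstack.
    static ThreadFactory named(String prefix) {
        AtomicInteger seq = new AtomicInteger();
        return r -> new Thread(r, prefix + "-" + seq.incrementAndGet());
    }

    static ThreadPoolExecutor create() {
        return new ThreadPoolExecutor(
                4,                              // core threads
                8,                              // bounded maximum, unlike newCachedThreadPool
                60, TimeUnit.SECONDS,           // idle time before extra threads are reclaimed
                new ArrayBlockingQueue<>(100),  // bounded queue, unlike newFixedThreadPool
                named("order-pool"),
                new ThreadPoolExecutor.CallerRunsPolicy()); // back-pressure when saturated
    }

    // Runs one task and returns the name of the thread that executed it.
    static String workerName(ThreadPoolExecutor pool) {
        try {
            return pool.submit(() -> Thread.currentThread().getName()).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }
}
```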

Tomcat thread pool

Acceptor thread

Its name contains `-Acceptor-`, and there is only one by default. It blocks in an endless loop accepting connections and registers each new connection with a Poller (`AbstractEndpoint.setSocketOptions`).

Poller thread

Its name contains `-ClientPoller-`, and the default number is `Math.min(2, Runtime.getRuntime().availableProcessors())`. It continuously polls the Selector and dispatches IO events to the thread pool for execution (`AbstractEndpoint.processSocket`).

Configuration class

The default thread pool parameters can be set through the `org.springframework.boot.autoconfigure.web.ServerProperties` configuration class.

Built in thread pool

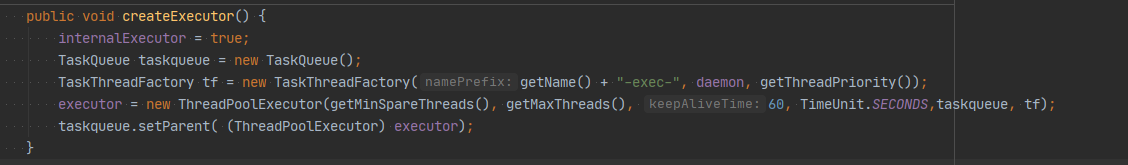

tomcat uses the built-in thread pool by default. The name contains - exec -.

org.apache.tomcat.util.net.AbstractEndpoint.java

- Number of core threads: Min spare

- Maximum number of threads: max

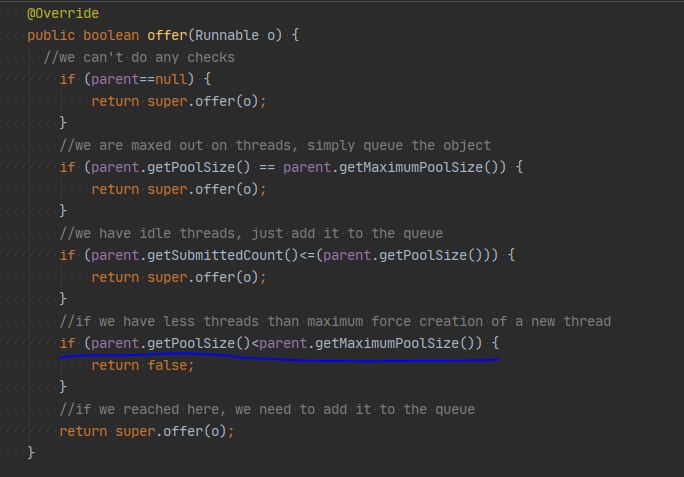

The built-in pool's `TaskQueue` overrides the `offer` method with an interesting twist: when the number of threads in the pool is below the maximum, it simply returns `false`, as shown in the figure.

This directly changes the behavior of the pool's `execute` method. Once the core threads are all busy, a new task is not queued; instead `execute` falls through to its third check and creates a non-core thread to run it. A general-purpose thread pool behaves differently: it only creates non-core threads once the queue is full.
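To make the difference concrete, here is a simplified, hypothetical queue in the same spirit as Tomcat's `TaskQueue`. `EagerTaskQueue` is not Tomcat's class, and the real `TaskQueue` has additional checks (for example, it still queues a task when idle threads are available). It runs on a plain JDK `ThreadPoolExecutor` with 1 core thread and 3 maximum threads:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.*;

// Hypothetical, simplified analogue of Tomcat's TaskQueue; NOT the real class.
class EagerTaskQueue extends LinkedBlockingQueue<Runnable> {
    private volatile ThreadPoolExecutor parent;

    void setParent(ThreadPoolExecutor parent) {
        this.parent = parent;
    }

    @Override
    public boolean offer(Runnable task) {
        // While the pool can still grow, refuse the task so that execute()
        // falls through to creating a non-core thread.
        if (parent != null && parent.getPoolSize() < parent.getMaximumPoolSize()) {
            return false;
        }
        return super.offer(task);
    }
}

public class EagerPoolDemo {
    static List<String> observe() {
        CountDownLatch release = new CountDownLatch(1);
        EagerTaskQueue queue = new EagerTaskQueue();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 3, 60, TimeUnit.SECONDS, queue);
        queue.setParent(pool);
        List<String> log = new ArrayList<>();
        for (int i = 0; i < 4; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // keep threads busy
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            log.add("threads=" + pool.getPoolSize() + ", queued=" + queue.size());
        }
        release.countDown();
        pool.shutdown();
        return log;
    }
}
```

With a standard `LinkedBlockingQueue`, the same four submissions would leave one thread running and three tasks queued.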

Note, however, that Tomcat's task queue is also unbounded (its capacity is `Integer.MAX_VALUE`), so if enough tasks pile up, memory runs out long before that limit is reached and the server hits an OOM.

In addition, in a pool with an ordinary unbounded queue, if the number of core threads is 0, the pool still creates a thread to run queued tasks (`execute` calls `addWorker(null, false)`). However, only one thread is created, and all tasks run on that single thread.
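The single-thread case can be reproduced with a plain JDK pool (a sketch; names are illustrative): with `corePoolSize = 0` and an unbounded queue, every task is queued, and a worker is only added when the pool has no threads at all.

```java
import java.util.Set;
import java.util.concurrent.*;

public class ZeroCoreDemo {
    // core = 0, max = 4, unbounded queue: counts how many distinct threads ran the tasks.
    static int distinctThreads(int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                0, 4, 60, TimeUnit.SECONDS, new LinkedBlockingQueue<>());
        Set<String> names = ConcurrentHashMap.newKeySet();
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> names.add(Thread.currentThread().getName()));
        }
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return names.size();
    }
}
```

The maximum of 4 is never reached, because the unbounded queue never reports itself full.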

Customizing the Tomcat thread pool in Spring

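```java
import io.netty.util.concurrent.DefaultThreadFactory; // Netty's factory; any ThreadFactory works
import java.util.concurrent.Executor;
import java.util.concurrent.TimeUnit;
import javax.annotation.Resource;
import org.apache.coyote.AbstractProtocol;
import org.apache.coyote.ProtocolHandler;
import org.apache.tomcat.util.threads.TaskQueue;
import org.apache.tomcat.util.threads.ThreadPoolExecutor;
import org.springframework.boot.autoconfigure.web.ServerProperties;
import org.springframework.boot.web.embedded.tomcat.ConfigurableTomcatWebServerFactory;
import org.springframework.boot.web.embedded.tomcat.TomcatServletWebServerFactory;
import org.springframework.boot.web.server.WebServerFactoryCustomizer;
import org.springframework.core.Ordered;
import org.springframework.stereotype.Component;

@Component
public class TomcatConfig implements WebServerFactoryCustomizer<ConfigurableTomcatWebServerFactory>, Ordered {

    @Resource
    private ServerProperties serverProperties;

    @Override
    public int getOrder() {
        return 0;
    }

    @Override
    public void customize(ConfigurableTomcatWebServerFactory factory) {
        if (factory instanceof TomcatServletWebServerFactory) {
            // Use the NIO.2 (asynchronous channel) connector instead of the default NIO one.
            ((TomcatServletWebServerFactory) factory).setProtocol("org.apache.coyote.http11.Http11Nio2Protocol");
        }
        factory.addConnectorCustomizers(connector -> {
            ProtocolHandler handler = connector.getProtocolHandler();
            if (handler instanceof AbstractProtocol) {
                AbstractProtocol<?> protocol = (AbstractProtocol<?>) handler;
                protocol.setExecutor(getExecutor());
            }
        });
    }

    private Executor getExecutor() {
        TaskQueue taskQueue = new TaskQueue(serverProperties.getTomcat().getThreads().getMax());
        // Tomcat's own ThreadPoolExecutor, which TaskQueue.setParent expects
        ThreadPoolExecutor executor = new ThreadPoolExecutor(
                serverProperties.getTomcat().getThreads().getMinSpare(),
                serverProperties.getTomcat().getThreads().getMax(),
                60, TimeUnit.SECONDS,
                taskQueue,
                new DefaultThreadFactory("civic-tomcat"));
        // Without a parent, TaskQueue behaves like an ordinary bounded queue.
        taskQueue.setParent(executor);
        // Core threads are also reclaimed after keepAliveTime.
        executor.allowCoreThreadTimeOut(true);
        return executor;
    }
}
```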

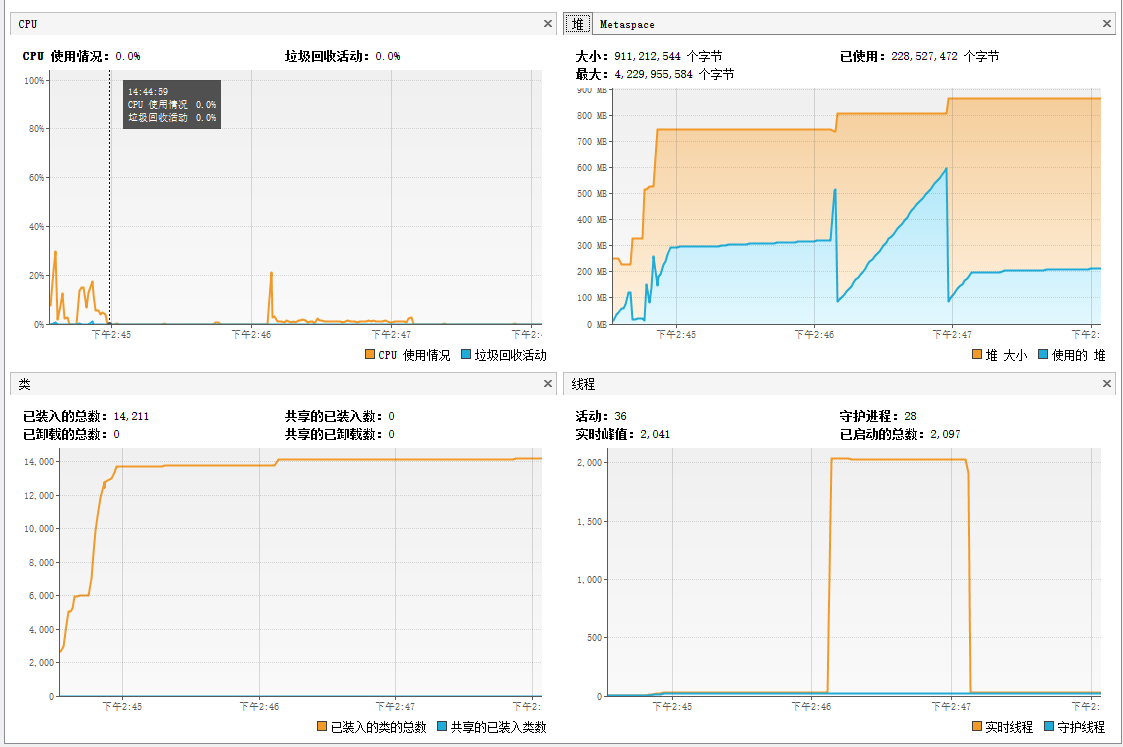

`allowCoreThreadTimeOut(true)` makes core threads reclaimable as well. When a request peak arrives, many threads are created; after the peak passes, they can be reclaimed (the sharp drop after `keepAliveTime` in the figure). This reduces both CPU and heap (memory) pressure.
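A small JDK-only sketch of the effect (illustrative names; the 200 ms keep-alive is chosen only to keep the example fast): after a burst of two tasks both core threads exist, and once the pool has been idle for longer than `keepAliveTime` they are reclaimed.

```java
import java.util.concurrent.*;

public class CoreTimeoutDemo {
    // Returns {pool size right after a burst, pool size after the pool has been idle}.
    static int[] sizesBeforeAndAfterIdle() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 4, 200, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        pool.allowCoreThreadTimeOut(true); // core threads also expire after keepAliveTime
        CountDownLatch done = new CountDownLatch(2);
        pool.execute(done::countDown);
        pool.execute(done::countDown);
        int before = -1;
        int after = -1;
        try {
            done.await();
            before = pool.getPoolSize();
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            while (pool.getPoolSize() > 0 && System.nanoTime() < deadline) {
                Thread.sleep(50); // poll until the idle core threads have timed out
            }
            after = pool.getPoolSize();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdown();
        }
        return new int[] {before, after};
    }
}
```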

Alternatively, you can keep core threads alive to avoid the overhead of repeatedly creating and destroying threads.

@Async thread pool

Configuration class

`org.springframework.boot.autoconfigure.task.TaskExecutionProperties` defines the thread pool parameters as well as the parameters for shutting the pool down.

Default thread pool

The default `ThreadPoolExecutor` is created by `org.springframework.boot.autoconfigure.task.TaskExecutionAutoConfiguration` and held by a `ThreadPoolTaskExecutor` object. It can be configured through a `TaskExecutorCustomizer`.

See the `initializeExecutor` method of `org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor`.

Custom thread pool

Implement the `org.springframework.scheduling.annotation.AsyncConfigurer` interface.

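```java
import java.util.concurrent.Executor;
import org.springframework.aop.interceptor.AsyncUncaughtExceptionHandler;
import org.springframework.context.annotation.Configuration;
import org.springframework.scheduling.annotation.AsyncConfigurer;
import org.springframework.scheduling.annotation.EnableAsync;
import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

@Configuration
@EnableAsync
public class AsyncConfig implements AsyncConfigurer {

    @Override
    public Executor getAsyncExecutor() {
        ThreadPoolTaskExecutor executor = new ThreadPoolTaskExecutor();
        executor.setCorePoolSize(7);
        executor.setMaxPoolSize(42);
        executor.setQueueCapacity(11);
        executor.setThreadNamePrefix("MyExecutor-");
        // initialize() creates the underlying ThreadPoolExecutor
        executor.initialize();
        return executor;
    }

    @Override
    public AsyncUncaughtExceptionHandler getAsyncUncaughtExceptionHandler() {
        // MyAsyncUncaughtExceptionHandler is your own AsyncUncaughtExceptionHandler implementation
        return new MyAsyncUncaughtExceptionHandler();
    }
}
```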
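One way to read these settings: `ThreadPoolTaskExecutor` backs the pool with a `LinkedBlockingQueue` of size `queueCapacity` when it is positive (a `SynchronousQueue` otherwise) and rejects with `AbortPolicy` by default. The JDK-only sketch below (hypothetical helper `fill`) reproduces that configuration with a plain `ThreadPoolExecutor` and blocking tasks: tasks 1 to 7 get core threads, tasks 8 to 18 are queued, tasks 19 to 53 get extra threads (up to 42), and task 54 is rejected.

```java
import java.util.concurrent.*;

public class AsyncPoolCapacityDemo {
    // Submits `tasks` blocking tasks and returns {threads, queued, rejected} at the peak.
    static int[] fill(int core, int max, int queueCapacity, int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(core, max, 60, TimeUnit.SECONDS,
                new LinkedBlockingQueue<>(queueCapacity), new ThreadPoolExecutor.AbortPolicy());
        int rejected = 0;
        for (int i = 0; i < tasks; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await(); // hold every thread so nothing drains
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++;
            }
        }
        int[] peak = {pool.getPoolSize(), pool.getQueue().size(), rejected};
        release.countDown();
        pool.shutdown();
        return peak;
    }
}
```

In other words, this configuration can hold at most 42 + 11 = 53 in-flight tasks before `@Async` calls start failing.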

@Scheduled thread pool

Configuration class

`org.springframework.boot.autoconfigure.task.TaskSchedulingProperties` defines the thread pool parameters.

Default thread pool

The default `ScheduledExecutorService` is created by `org.springframework.boot.autoconfigure.task.TaskSchedulingAutoConfiguration` and held by a `ThreadPoolTaskScheduler` object. It can be configured through a `TaskSchedulerCustomizer`.

See the `initializeExecutor` method of `org.springframework.scheduling.concurrent.ThreadPoolTaskScheduler`.

Custom thread pool

Implement the `org.springframework.scheduling.annotation.SchedulingConfigurer` interface.

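```java
import java.util.concurrent.Executor;
import java.util.concurrent.Executors;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.scheduling.annotation.EnableScheduling;
import org.springframework.scheduling.annotation.SchedulingConfigurer;
import org.springframework.scheduling.config.ScheduledTaskRegistrar;

@Configuration
@EnableScheduling
public class SchedulingConfig implements SchedulingConfigurer {

    @Override
    public void configureTasks(ScheduledTaskRegistrar taskRegistrar) {
        // Accepts a TaskScheduler or a ScheduledExecutorService
        taskRegistrar.setScheduler(taskExecutor());
    }

    @Bean(destroyMethod = "shutdown")
    public Executor taskExecutor() {
        return Executors.newScheduledThreadPool(100);
    }
}
```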
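Under the hood, a fixed-rate task registered with such a scheduler roughly ends up as a `scheduleAtFixedRate` call on the `ScheduledExecutorService`. A JDK-only sketch (illustrative names and a 20 ms period):

```java
import java.util.concurrent.*;

public class FixedRateDemo {
    // Runs a periodic task until it has fired `runs` times; returns false on timeout.
    static boolean firesRepeatedly(int runs) {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(2);
        CountDownLatch fired = new CountDownLatch(runs);
        ScheduledFuture<?> handle =
                scheduler.scheduleAtFixedRate(fired::countDown, 0, 20, TimeUnit.MILLISECONDS);
        try {
            return fired.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            handle.cancel(false); // stop the periodic task
            scheduler.shutdown();
        }
    }
}
```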

Netty thread pool

Netty's notion of a thread pool differs from the JDK's. A Netty "thread pool" is an event loop group (`MultithreadEventLoopGroup`), and each of its threads is an event loop (`SingleThreadEventLoop`). Once an event loop's thread starts, it runs an endless loop that keeps consuming events from its event queue.

A JDK thread pool, by contrast, has a single task queue shared by all of its threads, so which thread will process a given task is not determined in advance (it may be an existing pool thread or a newly created one). In Netty, each event loop thread owns its own event queue and runs its own endless loop, so an event that enters a particular event loop is always handled by that loop's thread.
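To illustrate the difference (a hypothetical sketch, not how Netty is implemented): an "event loop group" can be approximated with several single-threaded executors, each owning its own queue, plus a round-robin chooser. Every task submitted to a given loop runs on that loop's one thread. `MiniEventLoopGroup` and its methods are illustrative names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.*;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration only: NOT Netty's implementation.
public class MiniEventLoopGroup {
    private final ExecutorService[] loops;
    private final AtomicInteger counter = new AtomicInteger();

    public MiniEventLoopGroup(int size, String prefix) {
        loops = new ExecutorService[size];
        for (int i = 0; i < size; i++) {
            String name = prefix + "-" + i;
            // One thread draining one private queue, like a single event loop
            loops[i] = Executors.newSingleThreadExecutor(r -> {
                Thread t = new Thread(r, name);
                t.setDaemon(true);
                return t;
            });
        }
    }

    // Round-robin choice of the loop that will own the next channel or task
    public ExecutorService next() {
        return loops[Math.floorMod(counter.getAndIncrement(), loops.length)];
    }

    public void shutdown() {
        for (ExecutorService loop : loops) {
            loop.shutdown();
        }
    }

    // Submits `tasks` tasks to one loop and returns the names of the threads that ran them.
    static Set<String> threadsUsedBy(ExecutorService loop, int tasks) {
        Set<String> names = ConcurrentHashMap.newKeySet();
        List<Future<?>> futures = new ArrayList<>();
        for (int i = 0; i < tasks; i++) {
            futures.add(loop.submit(() -> names.add(Thread.currentThread().getName())));
        }
        try {
            for (Future<?> f : futures) {
                f.get();
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
        return names;
    }
}
```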

Configure Netty event loop groups

By function, Netty's event loop groups fall into three categories: MainReactor (listens for new-connection events), SubReactor (listens for read/write events), and handler (codec and business logic).

For details, see the Reactor pattern.

A complete, simple HTTP server looks like this:

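```java
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.DefaultEventLoopGroup;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.codec.http.HttpObjectAggregator;
import io.netty.handler.codec.http.HttpServerCodec;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;
import io.netty.util.concurrent.DefaultThreadFactory;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class NettyHttpServer {
    private static final Logger log = LoggerFactory.getLogger(NettyHttpServer.class);

    public static void main(String[] args) {
        // MainReactor: accepts new connections; one event loop is enough
        EventLoopGroup boss = new NioEventLoopGroup(1, new DefaultThreadFactory("boss"));
        // SubReactor: read/write IO events; default size
        EventLoopGroup worker = new NioEventLoopGroup(new DefaultThreadFactory("worker"));
        // Codec and business logic, kept off the IO threads; default size
        EventLoopGroup handler = new DefaultEventLoopGroup(new DefaultThreadFactory("handler"));
        ServerBootstrap serverBootstrap = new ServerBootstrap();
        try {
            serverBootstrap.group(boss, worker);
            serverBootstrap.channel(NioServerSocketChannel.class);
            serverBootstrap.localAddress(5555);
            serverBootstrap.childOption(ChannelOption.SO_KEEPALIVE, true);
            serverBootstrap.handler(new LoggingHandler(LogLevel.DEBUG));
            serverBootstrap.childHandler(new ChannelInitializer<SocketChannel>() {
                @Override
                protected void initChannel(SocketChannel ch) throws Exception {
                    ChannelPipeline pipeline = ch.pipeline();
                    // Handlers added with an executor group run on that group, not on the worker loop
                    pipeline.addLast(handler,
                            new HttpServerCodec(),
                            new HttpObjectAggregator(512 * 1024),
                            new HttpRequestHandler()); // the author's own request handler
                }
            });
            ChannelFuture bindFuture = serverBootstrap.bind().sync();
            log.info("Service bound");
            ChannelFuture closeFuture = bindFuture.channel().closeFuture();
            closeFuture.sync();
        } catch (Exception e) {
            log.error("Service startup exception", e);
        } finally {
            handler.shutdownGracefully();
            worker.shutdownGracefully();
            boss.shutdownGracefully();
        }
    }
}
```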

Here, `boss` is the event loop group that handles new connections; it only handles `SelectionKey.OP_ACCEPT` events. `worker` is the event loop group that handles read/write IO events, mainly `SelectionKey.OP_READ`. `handler` is the event loop group for codec and business logic.

An event loop's thread is started when the loop receives its first task, usually the channel registration. Once started, an event loop does not shut down on its own (unlike a thread pool's non-core threads, which are reclaimed after `keepAliveTime` expires). Here the boss is configured with a single event loop, while the worker uses the default size of twice the number of CPU cores, as does the handler.
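The default size can be written as a formula. The sketch below approximates Netty's `MultithreadEventLoopGroup` default, `max(1, io.netty.eventLoopThreads)`, where the system property falls back to twice the number of available processors. Netty actually reads the processor count through its own `NettyRuntime`; using plain `Runtime` here is an assumption. With the configuration above, the total thread count is therefore 1 + 2 * cores + 2 * cores.

```java
public class DefaultLoopSize {
    // Approximation of Netty's default event loop count for a group created without an explicit size.
    static int defaultEventLoopThreads() {
        int cores = Runtime.getRuntime().availableProcessors();
        return Math.max(1, Integer.getInteger("io.netty.eventLoopThreads", cores * 2));
    }
}
```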

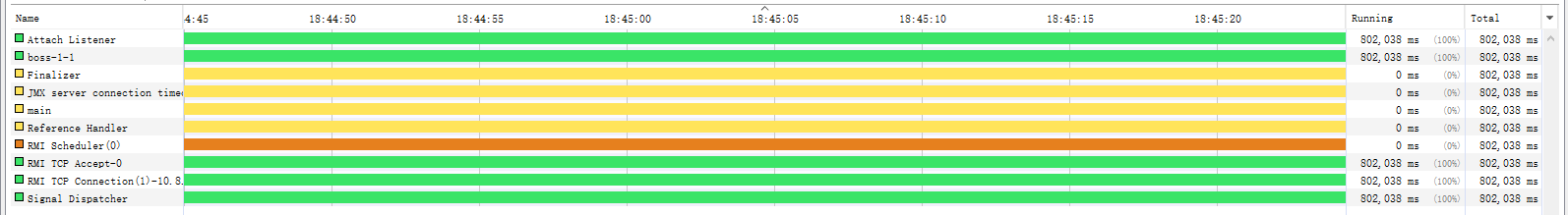

When the program starts, the `NioServerSocketChannel` is registered, which starts the thread of the boss's only event loop.

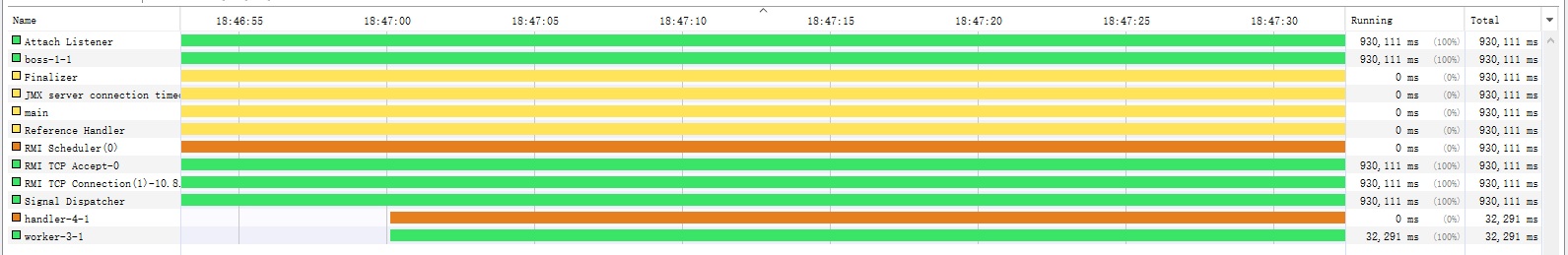

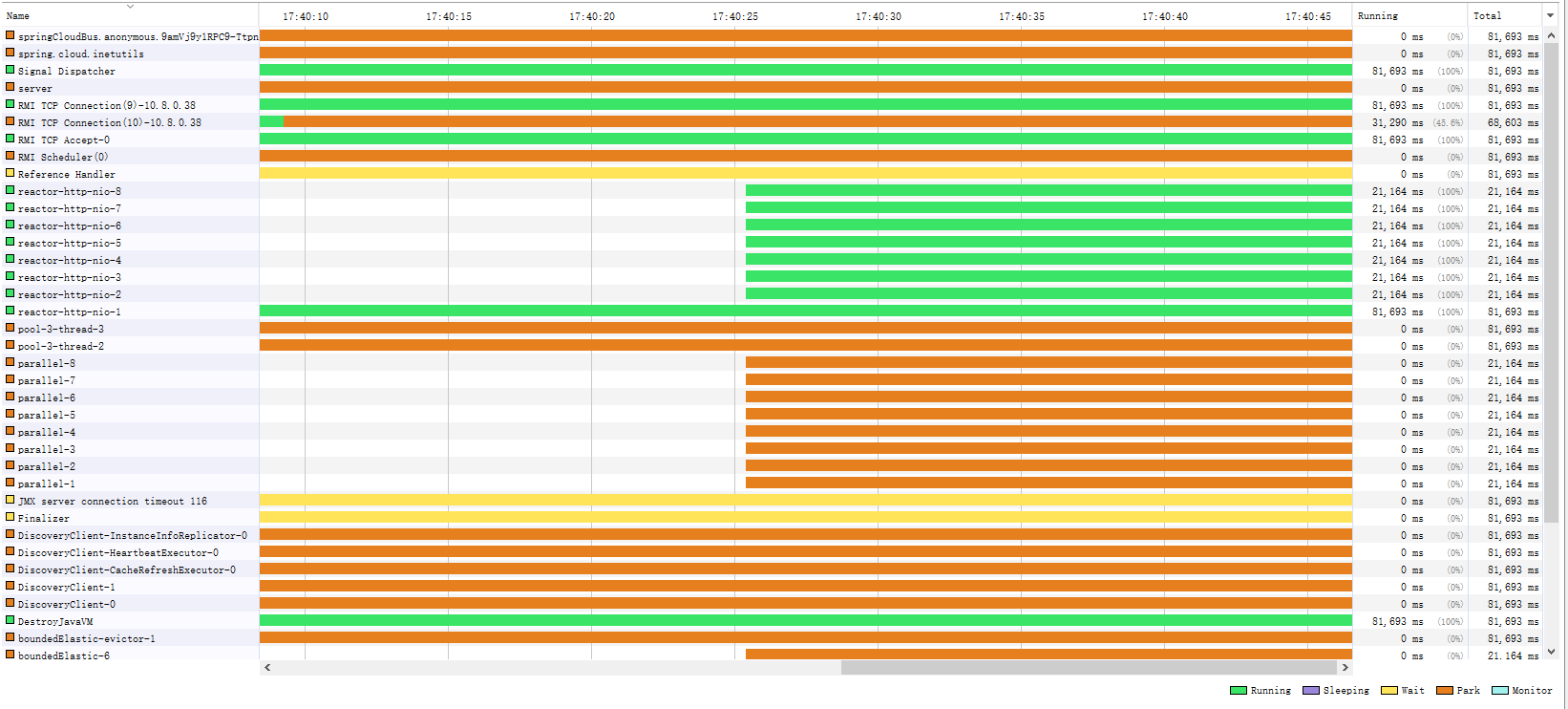

Send an HTTP request with JMeter: the server starts one worker event loop and one handler event loop.

Why are the boss and worker threads running while the handler thread is parked? Think about it yourself.

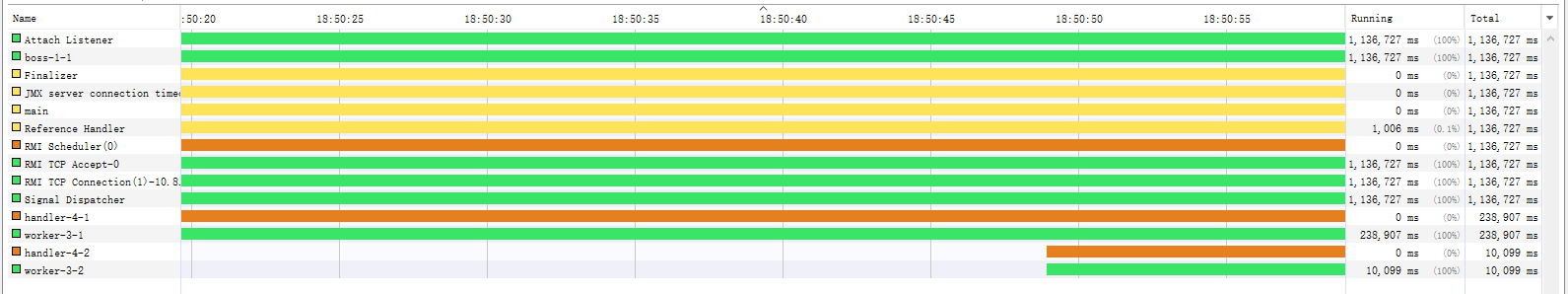

Send another HTTP request: a new worker event loop and a new handler event loop are started.

Why start a new event loop instead of reusing the old one? The answer lies in `io.netty.util.concurrent.EventExecutorChooserFactory$EventExecutorChooser`, which hands out the group's event loops in round-robin order.
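A sketch of round-robin selection in the spirit of Netty's choosers (hypothetical class; Netty uses a bit mask when the number of executors is a power of two and a modulo otherwise): each call returns the next event loop index, so consecutive new connections land on different event loops until every loop has been used once.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntSupplier;

public class RoundRobinChooser {
    // Returns a supplier of event loop indices in round-robin order; assumes loops > 0.
    static IntSupplier chooser(int loops) {
        AtomicInteger idx = new AtomicInteger();
        if ((loops & (loops - 1)) == 0) {
            int mask = loops - 1; // power of two: a cheap bit mask replaces the modulo
            return () -> idx.getAndIncrement() & mask;
        }
        return () -> Math.floorMod(idx.getAndIncrement(), loops);
    }
}
```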

Next, send 100 HTTP requests at once: all event loops are now started, and the server is fully warmed up.

Once every event loop in a group has started, no new ones are created. The final number of started threads is therefore the sum of the sizes of all event loop groups.

Configure the event queue size of Netty event loops

To prevent an OOM caused by too many tasks piling up in the event queue, configure the event queue size of each event loop. The right value depends on the server's hardware and requirements.

First, let's look at the default size.

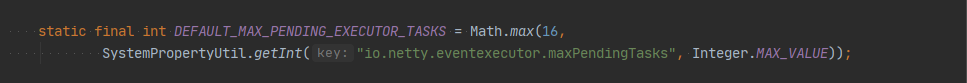

As you can see, the queue size is determined by the `io.netty.eventLoop.maxPendingTasks` and `io.netty.eventexecutor.maxPendingTasks` parameters, and the default is `Integer.MAX_VALUE`.

Both parameters can be set as JVM system properties at startup:

```
-Dio.netty.eventLoop.maxPendingTasks=1024
-Dio.netty.eventexecutor.maxPendingTasks=1024
```
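To see what a bounded event queue does, here is a JDK-only sketch (illustrative names). Netty takes the larger of 16 and the configured value, and when a bounded queue is full its default rejection handler throws `RejectedExecutionException`; the sketch mimics both with a single-threaded `ThreadPoolExecutor` over an `ArrayBlockingQueue`.

```java
import java.util.concurrent.*;

public class BoundedLoopDemo {
    // Approximates how the limit is read: at least 16, Integer.MAX_VALUE if unset.
    static int effectiveMaxPendingTasks(String property) {
        return Math.max(16, Integer.getInteger(property, Integer.MAX_VALUE));
    }

    // A single-threaded "loop" whose queue holds at most `capacity` tasks; extra tasks are rejected.
    static int countRejected(int capacity, int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor loop = new ThreadPoolExecutor(1, 1, 0, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(capacity), new ThreadPoolExecutor.AbortPolicy());
        int rejected = 0;
        for (int i = 0; i < tasks; i++) {
            try {
                loop.execute(() -> {
                    try {
                        release.await(); // keep the loop thread busy
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++;
            }
        }
        release.countDown();
        loop.shutdown();
        return rejected;
    }
}
```

With a capacity of 16 and 20 submissions, one task runs, 16 wait in the queue and 3 are rejected instead of growing memory without bound.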

Reactor Netty thread pool

Reactor Netty is built on Netty, so its thread pool model is similar. However, Reactor Netty adds the `reactor.netty.resources.LoopResources` interface to represent thread pool resources; the default implementation is `reactor.netty.resources.DefaultLoopResources`.

Configure Reactor Netty event loop groups

A simple Reactor Netty HTTP server is configured as follows:

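```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import reactor.core.publisher.Flux;
import reactor.netty.DisposableServer;
import reactor.netty.http.server.HttpServer;
import reactor.netty.resources.LoopResources;

public class ReactorNettyServer {
    private static final Logger log = LoggerFactory.getLogger(ReactorNettyServer.class);

    public static void main(String[] args) {
        DisposableServer disposableServer = HttpServer.create()
                .port(5556)
                // prefix "civic", 1 boss (selector) thread, 2 worker threads, non-daemon
                .runOn(LoopResources.create("civic", 1, 2, false))
                .accessLog(true)
                .handle((req, res) -> {
                    try {
                        // Deliberately blocks the worker event loop to simulate a slow interface
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                    return res.sendString(Flux.just("hello"));
                })
                .bind()
                .block();
        log.info("Service binding succeeded");
        assert disposableServer != null;
        disposableServer.onDispose().block();
    }
}
```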

The `runOn` operator configures the event loop groups. As in Netty, you can set the sizes of two groups, boss and worker. In `LoopResources.create("civic", 1, 2, false)`, the first argument is the thread name prefix, the second the boss size, the third the worker size, and the fourth whether the threads are daemon threads.

Unfortunately, Reactor Netty cannot configure a handler event loop group: `reactor.netty.transport.TransportConfig$TransportChannelInitializer` provides no such parameter.

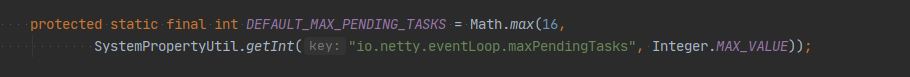

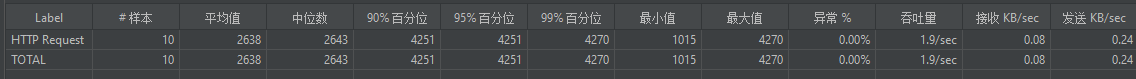

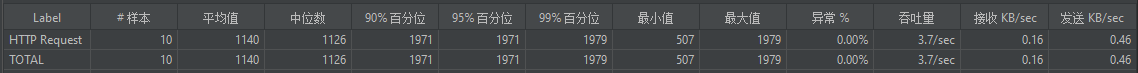

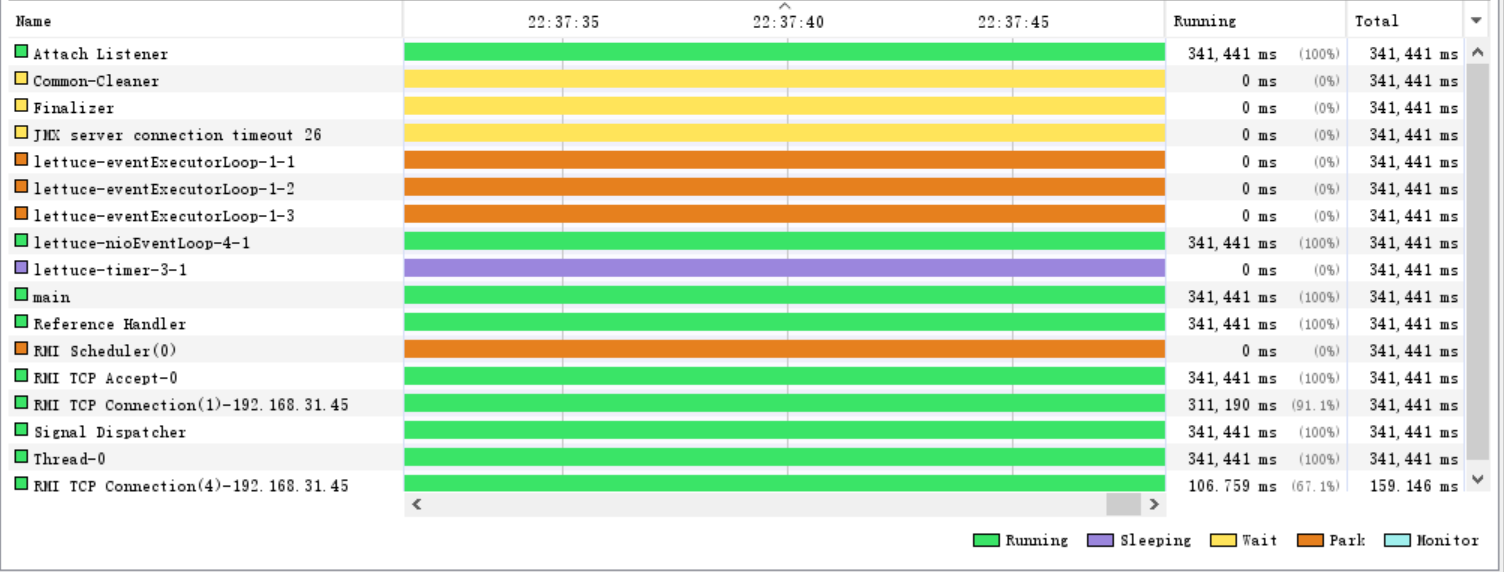

Start the program and send 10 requests with JMeter: the program starts two worker event loops.

As the figures show, the worker threads spend their time sleeping, so the QPS is only about 2 (two workers, each blocked for 1 second per request).

If the sleep time is shortened to 500 ms and 10 requests are sent again, the QPS approaches 4.

Shortening interface response time is one of the most important ways to improve QPS.
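The same arithmetic as a tiny sketch based on Little's law (throughput = concurrency / latency), treating each blocking worker event loop as one unit of concurrency; the names are illustrative:

```java
public class QpsEstimate {
    // Upper bound on throughput when every request blocks one worker loop for its whole duration.
    static double maxQps(int workerLoops, double latencySeconds) {
        return workerLoops / latencySeconds;
    }
}
```

`maxQps(2, 1.0)` gives 2.0 and `maxQps(2, 0.5)` gives 4.0, matching the measurements above; adding workers or cutting latency raises the bound by the same factor.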

Configure the event queue size of Reactor Netty event loops

Configure it the same way as for Netty.

Spring WebFlux thread pool

Spring WebFlux is a web framework that runs on Reactor Netty by default. Its thread pool is determined by the `ReactorResourceFactory` configured in `org.springframework.boot.autoconfigure.web.reactive.ReactiveWebServerFactoryConfiguration`.

The default boss size is -1 (meaning the boss shares the worker's event loop group), and the worker size is the number of CPU cores (at least 4). When 16 requests are sent with JMeter, the program creates 8 reactor event loops.

Configure a custom Spring WebFlux thread pool

To customize the WebFlux thread pool, define your own `ReactorResourceFactory` bean to replace the default one.

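```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.http.client.reactive.ReactorResourceFactory;
import reactor.netty.resources.LoopResources;

@Configuration
@Slf4j
public class LoopConfig {

    @Bean
    ReactorResourceFactory reactorResourceFactory() {
        ReactorResourceFactory factory = new ReactorResourceFactory();
        // Use dedicated resources instead of Reactor Netty's global ones
        factory.setUseGlobalResources(false);
        // 1 boss thread and 2 worker threads, named with the "civic" prefix
        factory.setLoopResources(LoopResources.create("civic", 1, 2, false));
        return factory;
    }
}
```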

Sending 16 requests with JMeter shows one boss thread and two worker threads.

Configure the event queue size of WebFlux event loops

Configure it the same way as for Netty.

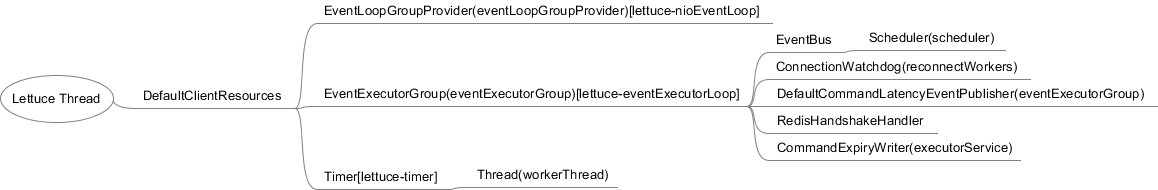

Lettuce thread pool

After starting the program, the thread usage is as shown in the figure.

Lettuce uses Netty's client API to connect to Redis, so configuring the Lettuce thread pool really means configuring the Netty client thread pool.

Configure thread pool

The default client thread pool size is determined as follows:

If the number of available cores is greater than 2, the size is the number of available cores; otherwise it is 2.
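Expressed as code, the rule above is a one-liner (a sketch of the stated rule with an illustrative class name, not Lettuce's source):

```java
public class LettuceDefaultSize {
    // Never fewer than 2 threads; otherwise one per available core.
    static int defaultPoolSize(int availableCores) {
        return Math.max(2, availableCores);
    }
}
```

At runtime the argument would be `Runtime.getRuntime().availableProcessors()`.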

To change the default thread pool sizes:

```java
// io.lettuce.core.resources.DefaultClientResources
DefaultClientResources clientResources = DefaultClientResources.builder()
        .ioThreadPoolSize(8)
        .computationThreadPoolSize(8)
        .build();
```

`ioThreadPoolSize` determines the size of Lettuce's IO event loop group (threads named `lettuce-nioEventLoop`), and `computationThreadPoolSize` determines the size of its computation group (threads named `lettuce-eventExecutorLoop`).

Configure the size of the event queue for the event loop

Configure it the same way as for Netty.