Content summary

- Zombie processes and orphan processes

- Daemon processes

- Mutex (important)

- Message queue

- Inter-process data exchange (IPC mechanism)

- Producer-consumer model

- Thread theory

Detailed content

1, Process supplement

Zombie process and orphan process

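Zombie process

A zombie process is a child process that has finished running but has not yet been reaped by its parent: its entry in the process table (PID and exit status) stays around until the parent collects it. This is why, with multiprocessing, the main process does not exit as soon as its own code has run. It waits for its non-daemon child processes to finish and reclaims their resources.

Orphan process

An orphan process is a child process that is still running after its parent has died (for example, after the parent was killed abnormally). The operating system hands orphans over to a system process such as init, which reaps them when they finish.

Daemon

A daemon process guards another process: once the guarded process (here, the main process) ends, the daemon process ends as well.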

    from multiprocessing import Process
    import time


    def test():
        print('Daemon is running')
        time.sleep(3)
        print('Daemon is over')


    if __name__ == '__main__':
        p = Process(target=test)
        # Mark p as a daemon process; this must be done before start()
        p.daemon = True
        p.start()
        time.sleep(0.2)
        print('Guarded main process')

    # Output

    Daemon is running
    Guarded main process

    # 'Daemon is over' is never printed, because p is terminated as soon as the main process ends

Mutex

Take ticket grabbing as an example. One ticket is left in the database, and a purchase function reads the ticket count from a file, subtracts one, and writes the result back. If this function runs concurrently, several processes can read the same count before any of them writes, so several people "successfully" buy the same ticket and the data ends up wrong.

Problem: operating on the same data concurrently easily corrupts it

Solution: turn the concurrent part into serial execution, which costs efficiency but keeps the data safe

- A lock turns concurrent execution into serial execution

Precautions for using locks

The lock is created in the main process and passed to the child processes that use it

1. Lock only the code that needs it (the part that touches shared data); do not add locks at will

2. Use locks with care, because they can easily lead to deadlock

# In day-to-day programming you will rarely need to operate locks by hand yourself

    import json
    from multiprocessing import Process, Lock
    import time
    import random


    # Check the remaining tickets
    def search(name):
        with open(r'data.txt', 'r', encoding='utf8') as f:
            data_dict = json.load(f)
        ticket_num = data_dict.get('ticket_num')
        print('%s Query remaining tickets:%s' % (name, ticket_num))


    # Buy a ticket
    def buy(name):
        # Check the tickets first
        with open(r'data.txt', 'r', encoding='utf8') as f:
            data_dict = json.load(f)
        ticket_num = data_dict.get('ticket_num')
        # Simulate a delay
        time.sleep(random.random())
        # Judge whether there are tickets
        if ticket_num > 0:
            # Reduce the remaining tickets by one
            data_dict['ticket_num'] -= 1
            # Write back to the database
            with open(r'data.txt', 'w', encoding='utf8') as f:
                json.dump(data_dict, f)
            print('%s: Purchase successful' % name)
        else:
            print('Sorry, there are no tickets!!!')


    def run(name, mutex):
        search(name)
        mutex.acquire()  # Grab the lock
        try:
            buy(name)
        finally:
            mutex.release()  # Release the lock, even if buy() raises


    if __name__ == '__main__':
        # data.txt is expected to hold JSON such as {"ticket_num": 1}
        mutex = Lock()
        for i in range(1, 11):
            p = Process(target=run, args=('user%s' % i, mutex))
            p.start()
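The acquire() and release() calls in run() can also be written with the lock as a context manager, which releases the lock automatically when the block is left. The following is only a minimal sketch of that idea: buy_one() and demo() are hypothetical names, and a temporary file stands in for data.txt so the example can run on its own in a single process.

```python
import json
import os
import tempfile
from multiprocessing import Lock


def buy_one(path, lock):
    """Try to buy one ticket; return True on success (hypothetical helper)."""
    # Reading, checking and writing the count form one critical section.
    with lock:
        with open(path, 'r', encoding='utf8') as f:
            data = json.load(f)
        if data['ticket_num'] <= 0:
            return False
        data['ticket_num'] -= 1
        with open(path, 'w', encoding='utf8') as f:
            json.dump(data, f)
        return True


def demo():
    # Temporary stand-in for data.txt, holding a single ticket.
    fd, path = tempfile.mkstemp(suffix='.json')
    with os.fdopen(fd, 'w', encoding='utf8') as f:
        json.dump({'ticket_num': 1}, f)
    lock = Lock()
    try:
        return [buy_one(path, lock), buy_one(path, lock)]
    finally:
        os.remove(path)


print(demo())  # [True, False]
```

Because the check and the write now sit inside the same locked block, only the first buyer can ever see a count above zero.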

Message queue

A queue is first in, first out (FIFO).

    import queue
    from multiprocessing import Queue

    # Queue() creates a queue object q.
    # q.put() adds data to the queue and q.get() takes data out of it.
    q = Queue(5)  # The argument is the maximum number of items the queue can hold

    # Store data
    q.put(111)
    q.put(222)
    # print(q.full())  # False; full() reports whether the queue is full
    q.put(333)
    q.put(444)
    q.put(555)
    # print(q.full())
    # q.put(666)  # The queue is full, so this would block until a slot is free

    # Extract data
    print(q.get())
    print(q.get())
    print(q.get())
    # print(q.empty())  # empty() reports whether the queue has no data left
    print(q.get())
    print(q.get())
    # print(q.get())  # The queue is empty, so this would block until data arrives
    try:
        print(q.get_nowait())  # With no data available, raises queue.Empty immediately
    except queue.Empty:
        print('The queue is empty')
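Rather than asking the queue about its state first, a common pattern is to attempt the operation and handle the exception. The sketch below assumes two hypothetical helpers, try_put() and try_get(). It relies only on put_nowait(), get(timeout=...) and the queue.Full and queue.Empty exceptions from the standard library.

```python
import queue
from multiprocessing import Queue


def try_put(q, item):
    """Put item without blocking; return False if the queue is full."""
    try:
        q.put_nowait(item)
        return True
    except queue.Full:
        return False


def try_get(q, timeout=1.0):
    """Wait up to timeout seconds for an item; return None if none arrives."""
    try:
        return q.get(timeout=timeout)
    except queue.Empty:
        return None
```

Returning None for "nothing arrived" is only suitable when None is never a real item; otherwise let queue.Empty propagate to the caller.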

"""

Can full() and get_nowait() be relied on when multiple processes are involved?

No!!!

These two methods only describe the queue at one instant, and other processes may change the data in the queue at any time

Queues get around the default rule that processes cannot communicate with each other

"""

IPC mechanism

IPC (inter-process communication) lets processes exchange data.

Principle: create a queue that every process can access. When one process puts data into the queue, other processes can take it out, which gives them a way to exchange data.

Question: why not create a list and let all processes operate on it? Would that not achieve the same effect? No. A list lives in the memory of a single process, and by default processes do not share data, so a child process only ever changes its own copy of the list and the other processes never see the change.

    from multiprocessing import Queue, Process


    def producer(q):
        q.put('Data put by child process p')


    def consumer(q):
        print('Child process c fetched:', q.get())


    if __name__ == '__main__':
        q = Queue()
        p = Process(target=producer, args=(q,))
        c = Process(target=consumer, args=(q,))
        p.start()
        c.start()

        # q.put('data put by main process')
        # p = Process(target=consumer, args=(q,))
        # p.start()
        # p.join()
        # print(q.get())
        # print('master ')

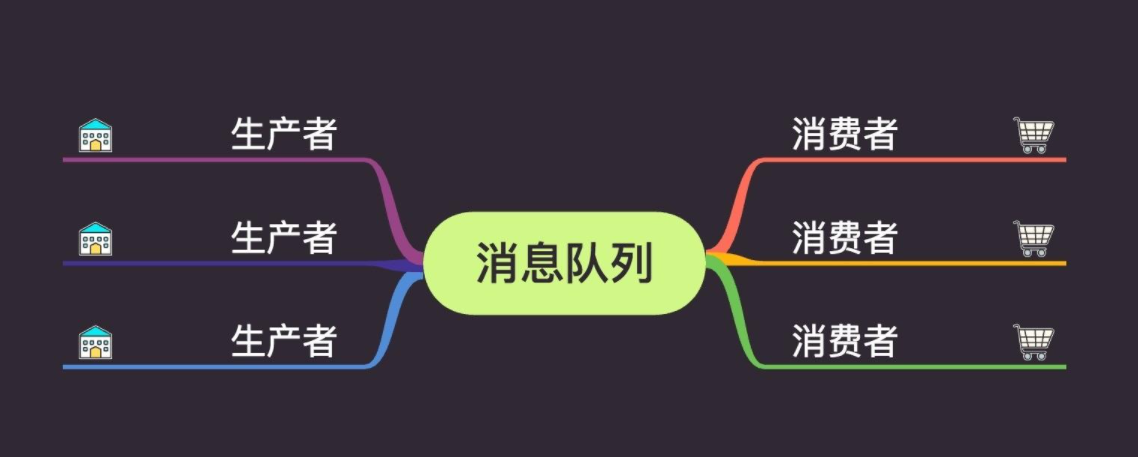
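The difference between a plain list and a queue can be checked directly. This is a small sketch under the assumption that items, add_to_list() and add_to_queue() are illustrative names only: one child appends to a module-level list, another puts into a Queue, and the parent then looks at both.

```python
from multiprocessing import Process, Queue

items = []  # an ordinary module-level list


def add_to_list():
    # Runs in the child process, so it only changes the child's copy of items.
    items.append('from child')


def add_to_queue(q):
    # The queue is shared, so the parent can read what the child puts in.
    q.put('from child')


if __name__ == '__main__':
    q = Queue()
    p1 = Process(target=add_to_list)
    p2 = Process(target=add_to_queue, args=(q,))
    p1.start()
    p2.start()
    received = q.get()  # blocks until the child has put its data
    p1.join()
    p2.join()
    print(items)     # []  (the parent's list is untouched)
    print(received)  # from child
```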

Producer consumer model

The producer-consumer model uses the IPC mechanism to pass data between processes.

"""

Producer

Responsible for producing data (the steamed bun maker)

Consumer

Responsible for processing data (the person eating the steamed buns)

The model has to solve the imbalance between supply and demand

"""

# A queue created with JoinableQueue offers two extra methods. The consumer calls task_done() after it has finished processing an item it got from the queue, and q.join() blocks until task_done() has been called for every item that was put into the queue.

    from multiprocessing import Queue, Process, JoinableQueue
    import time
    import random


    def producer(name, food, q):
        for i in range(10):
            print('%s produced %s' % (name, food))
            q.put(food)
            time.sleep(random.random())


    def consumer(name, q):
        while True:
            data = q.get()
            print('%s ate %s' % (name, data))
            # Tell the queue that the item just fetched has been fully processed
            q.task_done()


    if __name__ == '__main__':
        # q = Queue()
        q = JoinableQueue()
        p1 = Process(target=producer, args=('chef jason', 'Masala', q))
        p2 = Process(target=producer, args=('Indian a San', 'Flying cake', q))
        p3 = Process(target=producer, args=('Thai Arab', 'Durian', q))
        c1 = Process(target=consumer, args=('Monitor a Fei', q))
        p1.start()
        p2.start()
        p3.start()
        # Make the consumer a daemon so that it is terminated when the main process ends
        c1.daemon = True
        c1.start()
        # The main process waits for all producers to finish producing
        p1.join()
        p2.join()
        p3.join()
        # The main process waits until every item in the queue has been fetched and processed
        q.join()
        print('main')
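The task_done() and join() bookkeeping can be seen in isolation with queue.Queue from the standard library, which offers the same two methods as JoinableQueue. The sketch below deliberately swaps processes for threads to stay short; demo() and the 'bun' items are made up for illustration.

```python
import queue
from threading import Thread


def consumer(q, eaten):
    while True:
        item = q.get()
        eaten.append(item)
        q.task_done()  # exactly one call per item fetched


def demo():
    q = queue.Queue()
    eaten = []
    # Daemon thread: it is still blocked in q.get() when the program exits.
    Thread(target=consumer, args=(q, eaten), daemon=True).start()
    for i in range(5):
        q.put('bun %d' % i)
    q.join()  # returns once task_done() has been called five times
    return eaten


print(demo())  # ['bun 0', 'bun 1', 'bun 2', 'bun 3', 'bun 4']
```

If the consumer forgot to call task_done(), q.join() would never return, even though the queue itself would be empty.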

2, Thread

Thread theory

What is a thread?

A process is really a unit of resources. What the CPU actually executes is the threads inside the process.

""" A process is like a factory, and threads are like the assembly lines inside it. Every process contains at least one thread. """

Data is isolated between processes by default, but the threads inside one process share its data.

Two ways to start threads

"""

What does starting a process involve?

1. Requesting a new block of memory space

2. Loading all the resources the process needs

What does starting a thread involve?

Neither of the two steps above, because a thread runs inside the memory space of its process. Starting a thread therefore costs far fewer resources than starting a process!!!

"""

    from threading import Thread
    import time


    # Way 1: pass a target function to Thread
    def test(name):
        print('%s is running' % name)
        time.sleep(3)
        print('%s is over' % name)


    t = Thread(target=test, args=('jason',))
    t.start()
    print('main')


    # Way 2: subclass Thread and override run()
    class MyClass(Thread):
        def __init__(self, name):
            super().__init__()
            self.name = name

        def run(self):
            print('%s is running' % self.name)
            time.sleep(3)
            print('%s is over' % self.name)


    obj = MyClass('jason')
    obj.start()
    print('Main thread')

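Other Thread methods and related functions

1. join(): block until the thread has finished

2. os.getpid(): get the process ID (calling it in several threads verifies that they all run in the same process)

3. active_count(): count the threads that are currently alive

4. current_thread(): get the Thread object of the calling thread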
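The four items above can be tried together in one short sketch. The names worker() and demo() and the thread names are assumptions made for this example; the calls themselves (Thread.join, os.getpid, threading.active_count, threading.current_thread) are standard library.

```python
import os
import time
from threading import Thread, active_count, current_thread


def worker(pids):
    # Record the process ID as seen from inside this thread.
    pids.append((current_thread().name, os.getpid()))
    time.sleep(0.2)


def demo():
    pids = []
    before = active_count()
    threads = [Thread(target=worker, args=(pids,), name='worker-%d' % i)
               for i in range(3)]
    for t in threads:
        t.start()
    during = active_count()  # the three workers are still sleeping
    for t in threads:
        t.join()             # wait for every worker to finish
    after = active_count()
    return {
        'same_pid': all(pid == os.getpid() for _name, pid in pids),
        'started': during - before,
        'left': after - before,
    }


print(demo())  # {'same_pid': True, 'started': 3, 'left': 0}
```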

Daemon thread

"""

When the main thread ends, the whole process ends

So after its own code has finished, the main thread waits for all non-daemon threads to end; daemon threads are not waited for and stop when the process exits

"""

    from threading import Thread
    import time


    def foo():
        print(123)
        time.sleep(3)
        print("end123")


    def bar():
        print(456)
        time.sleep(1)
        print("end456")


    if __name__ == '__main__':
        t1 = Thread(target=foo)
        t2 = Thread(target=bar)
        t1.daemon = True
        t1.start()
        t2.start()
        print("main-------")
        # "end456" is printed because the main thread waits for the non-daemon t2;
        # "end123" is not, because the daemon t1 is dropped once t2 has ended
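One detail worth checking is the order of the daemon assignment and start(): the flag has to be set before the thread is started. A minimal sketch, with nap() and demo() as hypothetical names, shows what happens when the flag is changed too late.

```python
import time
from threading import Thread


def nap():
    time.sleep(0.2)


def demo():
    t = Thread(target=nap)
    t.daemon = True  # fine: the thread has not been started yet
    t.start()
    try:
        t.daemon = False  # too late: the thread has already been started
    except RuntimeError as exc:
        error = str(exc)
    else:
        error = None
    t.join()
    return t.daemon, error


is_daemon, error = demo()
print(is_daemon)          # True
print(error is not None)  # True
```

The flag can also be passed at construction time, as in Thread(target=nap, daemon=True).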

Thread data sharing

    from threading import Thread

    money = 100


    def test():
        global money
        money = 999


    t = Thread(target=test)
    t.start()
    t.join()
    print(money)

    # Output

    999

This shows that the main thread and the child threads of one process share the same data

Thread mutex

    from threading import Thread, Lock
    import time

    num = 100


    def test(mutex):
        global num
        mutex.acquire()
        # Get the value of num first
        tmp = num
        # Simulate a delay
        time.sleep(0.1)
        # Modify the value
        tmp -= 1
        num = tmp
        mutex.release()


    t_list = []
    mutex = Lock()
    for i in range(100):
        t = Thread(target=test, args=(mutex,))
        t.start()
        t_list.append(t)

    # Make sure that all child threads have ended
    for t in t_list:
        t.join()

    print(num)  # 0
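To see what the lock buys, the same read, delay, write sequence can be run with and without it. This is only a sketch: run() and its use_lock parameter are hypothetical, the counter lives in a dict instead of a global, and the delay is shortened so the example finishes quickly.

```python
import time
from threading import Thread, Lock


def run(n_threads, use_lock):
    state = {'num': n_threads}
    lock = Lock()

    def step():
        tmp = state['num']      # read
        time.sleep(0.01)        # simulated delay
        state['num'] = tmp - 1  # write

    def worker():
        if use_lock:
            with lock:  # only one thread at a time runs step()
                step()
        else:
            step()

    threads = [Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state['num']


print(run(10, use_lock=True))   # 0
print(run(10, use_lock=False))  # usually well above 0: updates are lost
```

Without the lock, most threads read the counter before any of them has written it back, so their decrements overwrite each other.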

Concurrent TCP server

Using multithreading

    import socket
    from threading import Thread
    from multiprocessing import Process

    server = socket.socket()
    server.bind(('127.0.0.1', 8080))
    server.listen(5)


    def talk(sock):
        while True:
            try:
                data = sock.recv(1024)
                if len(data) == 0:
                    break
                print(data.decode('utf8'))
                sock.send(data + b'gun dan!')
            except ConnectionResetError as e:
                print(e)
                break
        sock.close()


    while True:
        sock, addr = server.accept()
        print(addr)
        # Start a new thread (or a new process) for each connection
        t = Thread(target=talk, args=(sock,))
        t.start()
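The effect of one thread per connection can be checked with a self-contained round trip. The sketch below is an assumption-laden variant of the server above: it binds to port 0 so the OS picks a free port, replies with the upper-cased message instead of the original reply text, and accept_clients() and demo() are hypothetical helpers. Two clients are connected at the same time, and the second one is served while the first is still connected and idle.

```python
import socket
from threading import Thread


def talk(sock):
    # Same shape as talk() above: reply until the client disconnects.
    with sock:
        while True:
            data = sock.recv(1024)
            if not data:
                break
            sock.sendall(data.upper())


def accept_clients(server, count):
    for _ in range(count):
        sock, _addr = server.accept()
        Thread(target=talk, args=(sock,), daemon=True).start()


def demo():
    server = socket.socket()
    server.bind(('127.0.0.1', 0))  # port 0: let the OS pick a free port
    server.listen(5)
    port = server.getsockname()[1]
    acceptor = Thread(target=accept_clients, args=(server, 2), daemon=True)
    acceptor.start()

    first = socket.create_connection(('127.0.0.1', port), timeout=5)
    second = socket.create_connection(('127.0.0.1', port), timeout=5)
    with first, second:
        # The second client is answered while the first is connected but idle.
        second.sendall(b'second')
        reply_second = second.recv(1024)
        first.sendall(b'first')
        reply_first = first.recv(1024)

    acceptor.join(timeout=5)
    server.close()
    return reply_first, reply_second


print(demo())  # (b'FIRST', b'SECOND')
```

A server that handled connections one after another would leave the second client waiting until the first disconnected, and the recv() call would hit its timeout.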

Exercises

1. Search Baidu on your own for "optimistic lock and pessimistic lock"

2. Search Baidu on your own for "message queue"

3. Search Baidu on your own for "the 23 design patterns"

# Practice the common algorithms regularly (bubble sort, binary search, insertion sort, quicksort, heap sort and bucket sort)

Be able to write with pen and paper: bubble sort, binary search, insertion sort, quicksort

Be able to describe the internal principles: heap sort, bucket sort