Processes and threads

A program has at least one process, and a process has at least one thread. A thread cannot run on its own; it must run inside a process.

- Process [a running application]: the basic unit for allocating and managing resources while concurrent programs execute. It is a dynamic concept.

  Processes are the basic units that compete for computer system resources: a multitasking operating system runs multiple processes "at the same time" by allocating CPU time to them in time slices.

  Traditionally the process was also the basic unit of processor scheduling; on systems with threads, that role belongs to the thread.

- Thread: an execution unit within a process and the entity that is scheduled inside it. It is a basic unit of independent execution that is smaller than a process. Threads are also called lightweight processes.

  A process can have multiple threads, and the threads within the same process share the resources of that process.

For example, starting a Java program:

- Starting a Java program actually starts the JVM, which translates the bytecode file into the underlying machine language [interpreting it line by line].

- A JVM process is started: java.exe [an executable file on Windows; programs written in C are compiled directly into .exe executables].

- After the JVM process starts, at least two threads are running: the main thread and a GC thread [a background daemon thread].

- Daemon thread: if only daemon threads are left running, the process ends. The process does not wait for daemon threads to finish.

- The main thread executes the code in the main method. When all of that code has run, the main thread ends.

- The GC thread is responsible for collecting garbage objects.

The traditional ways to create threads

- The first way: extend Thread. The threads share code but not resources: each thread is a separate object with its own instance fields, so a resource is shared only if it is declared static.

- The second way: implement the Runnable interface. The threads share both code and resources, because one Runnable instance can be passed to several Thread objects.
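The difference in sharing between the two ways can be sketched as follows. This is a minimal illustration, not code from the original notes: the names `SharingDemo`, `CounterThread`, and `CounterTask` are hypothetical, and the shared `run()` is marked synchronized only so that the shared total is predictable.

```java
public class SharingDemo {

    // Way 1: every Thread subclass instance has its own copy of the field.
    static class CounterThread extends Thread {
        int count = 0; // per instance, so NOT shared between threads

        @Override
        public void run() {
            for (int i = 0; i < 1000; i++) {
                count++;
            }
        }
    }

    // Way 2: one Runnable instance can be handed to several threads.
    static class CounterTask implements Runnable {
        private int count = 0; // shared by every thread that runs this task

        @Override
        public synchronized void run() { // synchronized keeps the shared update safe
            for (int i = 0; i < 1000; i++) {
                count++;
            }
        }

        synchronized int getCount() {
            return count;
        }
    }

    // Runs two CounterThread objects and returns their two separate totals.
    public static int[] runWithThreadSubclass() {
        CounterThread a = new CounterThread();
        CounterThread b = new CounterThread();
        a.start();
        b.start();
        joinQuietly(a);
        joinQuietly(b);
        return new int[] {a.count, b.count};
    }

    // Runs ONE CounterTask on two threads and returns the shared total.
    public static int runWithSharedRunnable() {
        CounterTask task = new CounterTask();
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        joinQuietly(a);
        joinQuietly(b);
        return task.getCount();
    }

    private static void joinQuietly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        int[] separate = runWithThreadSubclass();
        System.out.println("extends Thread: " + separate[0] + " and " + separate[1]);
        System.out.println("shared Runnable: " + runWithSharedRunnable());
    }
}
```

With the Thread subclass, each object counts to 1000 on its own; with the single shared Runnable, both threads add to the same field and the total is 2000.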

The difference between Thread and Runnable

- Extending Thread: the threads share code but not resources, unless the resource is declared static.

- Implementing Runnable: the threads share code and resources.

- The third way is the Callable interface. It is recommended to use it together with Future and a thread pool.

Differences between Callable and Runnable interfaces

- Callable returns a result: the result of the asynchronous computation is obtained through a Future once the thread has finished.

- A Callable task runs its call() method; a Runnable task runs its run() method.

- call() can declare checked exceptions in its throws clause; run() cannot.

Callable example

    import java.util.concurrent.Callable;
    import java.util.concurrent.ExecutionException;
    import java.util.concurrent.FutureTask;

    // Callable + FutureTask: obtain the result of a thread's execution
    class C1 implements Callable<Integer> {

        public static void main(String[] args) {
            System.out.println("main-begin...");
            Callable<Integer> c = new C1();
            // FutureTask wraps the asynchronous task
            FutureTask<Integer> task = new FutureTask<>(c);
            // Build a Thread object from the asynchronous task
            Thread t = new Thread(task);
            t.start(); // start the thread
            System.out.println("The thread has started...");
            try {
                // get() blocks until the thread has finished, then returns its result
                System.out.println("result:" + task.get());
            } catch (InterruptedException e) {
                e.printStackTrace();
            } catch (ExecutionException e) {
                e.printStackTrace();
            }
            System.out.println("main-end...");
        }

        @Override
        public Integer call() throws Exception {
            int total = 0;
            for (int i = 1; i <= 100; i++) {
                total += i;
            }
            // Deliberately simulate a time-consuming task
            Thread.sleep(2000);
            return total; // 5050
        }
    }

    import java.util.concurrent.Callable;
    import java.util.concurrent.ExecutionException;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.Future;

    public class CallablePoolDemo {

        public static void main(String[] args) {
            // Create a cached thread pool
            ExecutorService executorService = Executors.newCachedThreadPool();
            // Submit the first and the second task to the pool
            Future<Integer> future1 = executorService.submit(new C1());
            Future<Integer> future2 = executorService.submit(new C2());
            // Must the number of tasks be the same as the number of threads?
            // Here two pool threads execute the two asynchronous tasks at the same time,
            // and the main thread adds the two results together.
            try {
                // get() blocks until the corresponding task has finished
                Integer res1 = future1.get();
                Integer res2 = future2.get();
                int result = res1 + res2;
                // The main thread only gets here after both get() calls have returned
                System.out.println("Start integration....");
                System.out.println(Thread.currentThread().getName() + " --- " + result);
            } catch (InterruptedException e) {
                e.printStackTrace();
            } catch (ExecutionException e) {
                e.printStackTrace();
            } finally {
                // Shut the pool down so that idle pool threads do not keep the JVM alive
                executorService.shutdown();
            }
        }
    }

    class C1 implements Callable<Integer> {
        @Override
        public Integer call() throws Exception {
            System.out.println(Thread.currentThread().getName() + " calculating 1~10:");
            int total = 0;
            for (int i = 1; i <= 10; i++) {
                total += i;
            }
            Thread.sleep(1000);
            System.out.println("The calculation result is:" + total);
            return total;
        }
    }

    class C2 implements Callable<Integer> {
        @Override
        public Integer call() throws Exception {
            System.out.println(Thread.currentThread().getName() + " calculating 1~100:");
            int total = 0;
            for (int i = 1; i <= 100; i++) {
                total += i;
            }
            Thread.sleep(1000);
            System.out.println("The calculation result is:" + total);
            return total;
        }
    }

Thread-safe and thread-unsafe classes

- StringBuilder: thread-unsafe string class; StringBuffer: thread-safe string class

- ArrayList: thread-unsafe collection; Vector: thread-safe collection

- HashMap: thread-unsafe collection; Hashtable: thread-safe collection

The API methods of the thread-safe classes above are declared synchronized: at any moment only one thread can execute them on a given object, and the other threads wait.

Common methods provided by Thread

- static Thread currentThread(); // Returns a reference to the currently executing thread

- String getName(); // Returns the name of the thread

- void start(); // Starts the thread. Calling t1.start() asks the JVM to start the thread; our program cannot start a thread by itself. The CPU schedules the JVM process, which then runs thread t1

- void setName(String name); // Sets the name of the thread

- void setPriority(int n); // Sets the priority of the thread, from 1 to 10. The higher the number, the higher the priority. A higher priority does not guarantee that the thread runs first; it only expresses a preference, and the final decision is made by the CPU scheduler

- void setDaemon(boolean on); // When set to true, the thread becomes a background daemon thread. If only daemon threads are left, the process can end; it does not wait for the daemon threads to finish
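The methods listed above can be tried out with a short sketch like the one below. The class name `ThreadMethodsDemo`, the helper `runNamedWorker`, and the thread name `worker-1` are hypothetical choices for illustration.

```java
public class ThreadMethodsDemo {

    // Starts a named daemon thread and returns the name that the thread reports about itself.
    public static String runNamedWorker() {
        final String[] seen = new String[1];
        // Inside the thread, currentThread() refers to the worker, not to the caller.
        Thread worker = new Thread(() -> seen[0] = Thread.currentThread().getName());
        worker.setName("worker-1");
        worker.setPriority(Thread.MAX_PRIORITY); // only a hint to the scheduler
        worker.setDaemon(true);                  // must be called before start()
        worker.start();
        try {
            worker.join(); // wait so that the result is available
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("current thread: " + Thread.currentThread().getName());
        System.out.println("worker reported: " + runNamedWorker());
    }
}
```

Note that setDaemon(true) after start() throws IllegalThreadStateException, which is why the sketch configures the thread first and starts it last.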

synchronized keyword

- A keyword of the Java language

- Can be used to lock methods or code blocks; the lock itself belongs to an object

- When it locks a method [synchronized method] or a code block [synchronized block], at most one thread executes that code at a time

- When two concurrent threads access a synchronized block on the same object, only one thread can execute it at a time. The other thread must wait until the current thread has finished the block before it can execute it.

- It is an unfair lock

- If an exception occurs in the synchronized block, the lock is still released automatically.

Basic usage

In Java, every object has exactly one synchronization lock, and the lock exists only as long as the object does. Calling a synchronized method of an object acquires that object's synchronization lock.

- On an ordinary (instance) method: object lock. Different objects each have their own independent lock, and the locks of different objects do not conflict: a "buffet"

- On a static method: "class lock". It applies to all objects of the class, so all objects instantiated from the class compete for the same lock: "everyone eats at one table"

- On a code block, synchronized(this): object lock

- On a code block, synchronized(XXX.class): "class lock"
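The four forms above can be checked with `Thread.holdsLock`, which reports whether the current thread holds a given object's monitor. The class `LockKindsDemo` and its method names are hypothetical; this is a sketch of which monitor each form acquires, not a performance or contention test.

```java
public class LockKindsDemo {

    // synchronized instance method: locks on "this" (object lock).
    public synchronized boolean instanceMethodHoldsThis() {
        return Thread.holdsLock(this);
    }

    // synchronized static method: locks on LockKindsDemo.class ("class lock").
    public static synchronized boolean staticMethodHoldsClass() {
        return Thread.holdsLock(LockKindsDemo.class);
    }

    // synchronized(this) block: the same monitor as a synchronized instance method.
    public boolean blockOnThisHoldsThis() {
        synchronized (this) {
            return Thread.holdsLock(this);
        }
    }

    // synchronized(LockKindsDemo.class) block: the same monitor as a static synchronized method.
    // The object's own lock is not taken here (assuming the caller does not already hold it).
    public boolean blockOnClassHoldsOnlyClass() {
        synchronized (LockKindsDemo.class) {
            return Thread.holdsLock(LockKindsDemo.class) && !Thread.holdsLock(this);
        }
    }

    public static void main(String[] args) {
        LockKindsDemo demo = new LockKindsDemo();
        System.out.println("instance method holds this:  " + demo.instanceMethodHoldsThis());
        System.out.println("static method holds class:   " + staticMethodHoldsClass());
        System.out.println("block on this holds this:    " + demo.blockOnThisHoldsThis());
        System.out.println("block on class, not on this: " + demo.blockOnClassHoldsOnlyClass());
    }
}
```

Because the object lock and the class lock are different monitors, a thread inside a static synchronized method does not block another thread that enters a synchronized instance method of the same class.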

synchronized properties

- Atomicity

  Atomicity means that one or more operations either all execute, without being interrupted by anything, or do not execute at all. Operations such as i++, i+=2, and i=i+1 are not atomic: each consists of three steps [read, compute, assign], and another thread can cut in between any of them. int x = 10; is an atomic operation. Special cases worth looking up: double x = 3.0d or long x1 = 20L are not guaranteed to be atomic, because a non-volatile 64-bit value may be written as two 32-bit halves.

- Visibility

  When multiple threads access the same resource, the state of the resource is visible to the other threads.

  Reason: on meeting synchronized, a thread empties its local working memory and copies the latest values from main memory again.

- Ordering

  At any moment, only one thread can enter the synchronized code.

- Reentrancy

  A thread that has already acquired a lock can acquire the same lock again without blocking itself, for example when one synchronized method calls another synchronized method of the same object.
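Reentrancy can be seen in a few lines. In this sketch (the class `ReentrantDemo` and its methods are hypothetical), `outer()` already holds the object's lock when it calls `inner()`, which needs the same lock.

```java
public class ReentrantDemo {
    private int depth = 0;

    // Acquires the object lock, then calls another method that needs the same lock.
    public synchronized int outer() {
        depth++;
        return inner(); // re-enters the lock this thread already holds; no self-deadlock
    }

    public synchronized int inner() {
        depth++;
        return depth;
    }

    public static void main(String[] args) {
        // If synchronized were not reentrant, outer() would block forever inside inner().
        System.out.println("depth after outer(): " + new ReentrantDemo().outer());
    }
}
```

The call returns 2: both increments happen under the same lock, held twice by the same thread.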

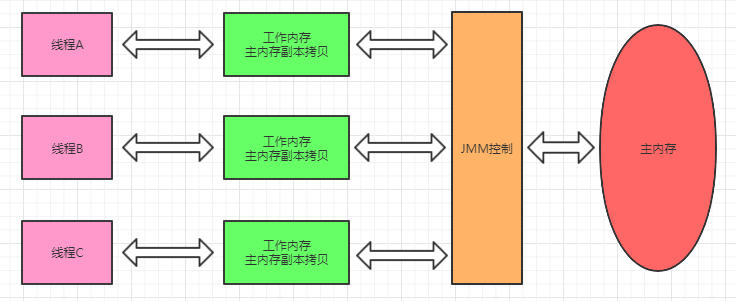

JMM

JMM is the Java memory model, not the JVM memory model.

The Java memory model specifies that all variables are stored in main memory. This includes instance variables [non-static fields of a class] and static variables, but excludes local variables and method parameters. Each thread has its own working memory, which holds copies of the main-memory variables that the thread uses. A thread operates on the variables in its working memory; it cannot read or write the variables in main memory directly.

Different threads cannot access variables in each other's working memory. Passing a variable's value between threads has to go through main memory.

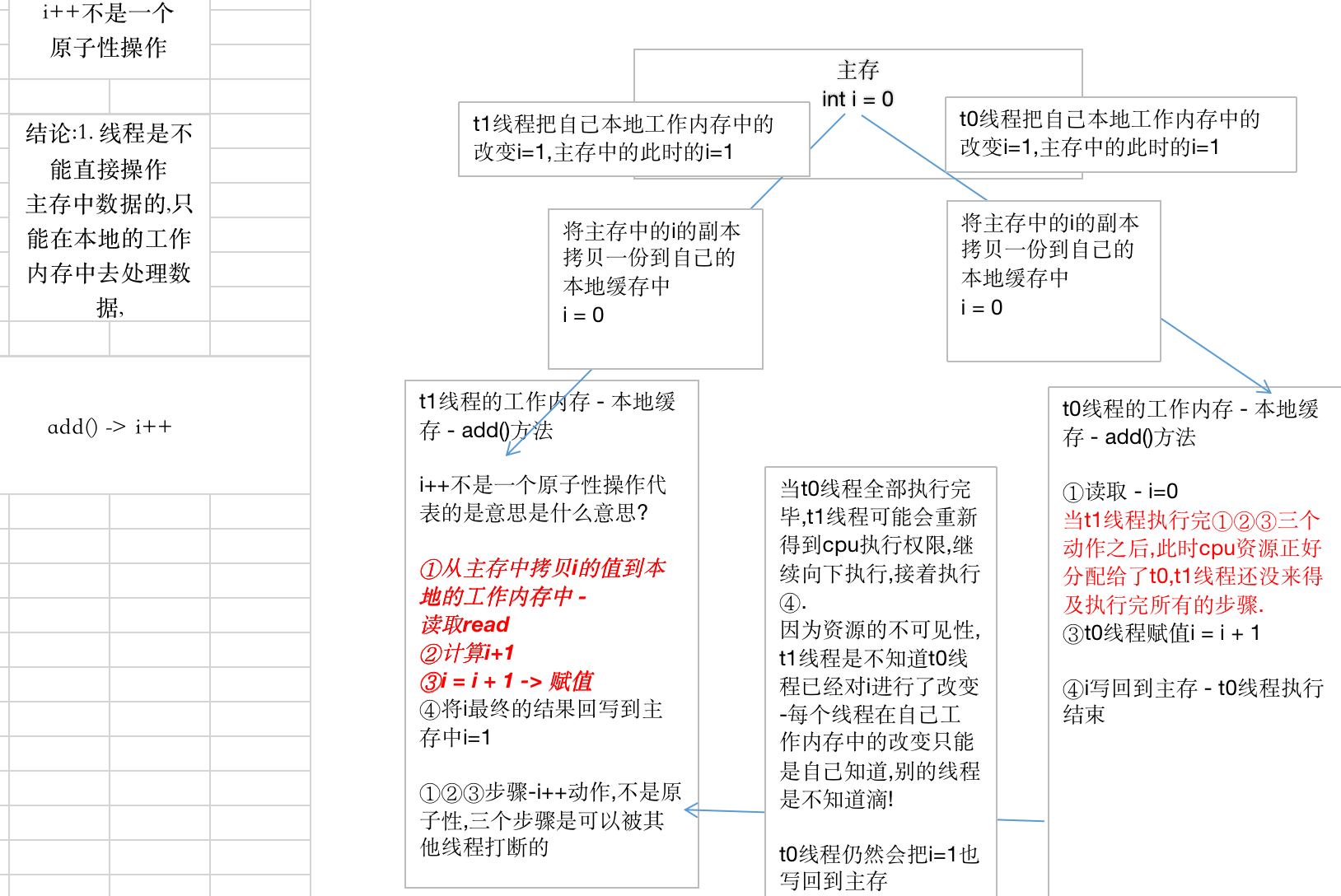

The i++ procedure and multithreading safety

Premise:

- Threads cannot operate directly on data in main memory. They must work in their local working memory, and the result is flushed back to main memory afterwards.

- Threads are isolated. When a thread executes a method, it sets up its own local area [stack frame on the thread stack], and each thread works in its own local working memory.

  If a thread modifies a resource, the new state of the resource is not immediately visible to other threads.

What happens when the add method is not marked synchronized

Dirty data: the value of a variable in local working memory differs from its value in main memory, which violates the "cache consistency" principle of the JMM.

Calling add() without a lock

i++ is not an atomic operation, which means another thread can cut in partway through it.

① T1 copies the variable from main memory into its local working memory: read (i=0) => T1

- T1 gives up the CPU. T0 gets to run and copies the variable from main memory (i=0) => T0

- T0 computes, assigns, and flushes the result back to main memory [i=1] => T0

- After T0's flush, main memory holds i=1, and T0 gives up the CPU

② The thread computes on i in its local working memory and assigns the incremented value back to i => T1

- T1 resumes at ②. Because T1 had not finished executing, it does not copy i from the updated main memory again

- T1 therefore keeps using the i in its own local working memory [read earlier, still the initial value 0]

- T1 computes i = i + 1 and flushes it back to main memory [i=1]

- Because threads are isolated, T1 does not know that T0 has modified i

③ The final result for i in local working memory is flushed back to main memory [the moment is not fixed, but it certainly happens once the current thread has finished executing] => T1

[In a single-threaded environment, after T1 has flushed the final result back to main memory, a second call to add() makes T1 fetch a fresh copy of the variable from main memory and repeat ①②③.]

Key point: if thread T1 has not finished executing, it does not pull the value from main memory again.

The effect: both threads execute i++, but i in main memory is incremented only once.

How to solve the problem

Mark the method that contains i++ as synchronized.

When a thread calls the add method and acquires the lock, it first empties its local working memory, so that subsequent operations read the latest value from main memory again.

    public synchronized void add() {
        i++;
    }

- Because the add method is synchronized, only one thread [the one holding the lock] can execute it at any moment.

- The three steps [read, compute, assign] cannot be interleaved with other threads' calls, because threads that have not acquired the lock are blocked outside the method.

- When the executing thread releases the lock, it flushes the changes in its local working memory back to main memory.
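The lost update and its fix can be reproduced side by side. This is a minimal sketch with hypothetical names (`CounterDemo`, `addUnsafe`, `addSafe`, `run`); the thread count and loop count are arbitrary. The unsynchronized total depends on timing and may differ from run to run, so no particular value is claimed for it.

```java
public class CounterDemo {
    private int unsafeCount = 0;
    private int safeCount = 0;

    // read, compute, assign: another thread can cut in between the steps
    void addUnsafe() {
        unsafeCount++;
    }

    // only the thread holding the object lock can execute the three steps
    synchronized void addSafe() {
        safeCount++;
    }

    // Starts `threads` threads that each call both methods `perThread` times.
    // Returns {unsynchronized total, synchronized total}.
    public static int[] run(int threads, int perThread) {
        CounterDemo counter = new CounterDemo();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.addUnsafe();
                    counter.addSafe();
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join(); // after join, the worker's writes are visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new int[] {counter.unsafeCount, counter.safeCount};
    }

    public static void main(String[] args) {
        int[] totals = run(2, 100_000);
        System.out.println("without synchronized: " + totals[0] + " (can be less than 200000)");
        System.out.println("with synchronized:    " + totals[1]);
    }
}
```

The synchronized counter always reaches threads × perThread, while the unsynchronized one can only lose increments, never gain them.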

When are changes in working memory synchronized to main memory?

Single thread

- When the method executed by the single thread ends

Multithreading

- When a thread releases a lock

- When a thread switch occurs

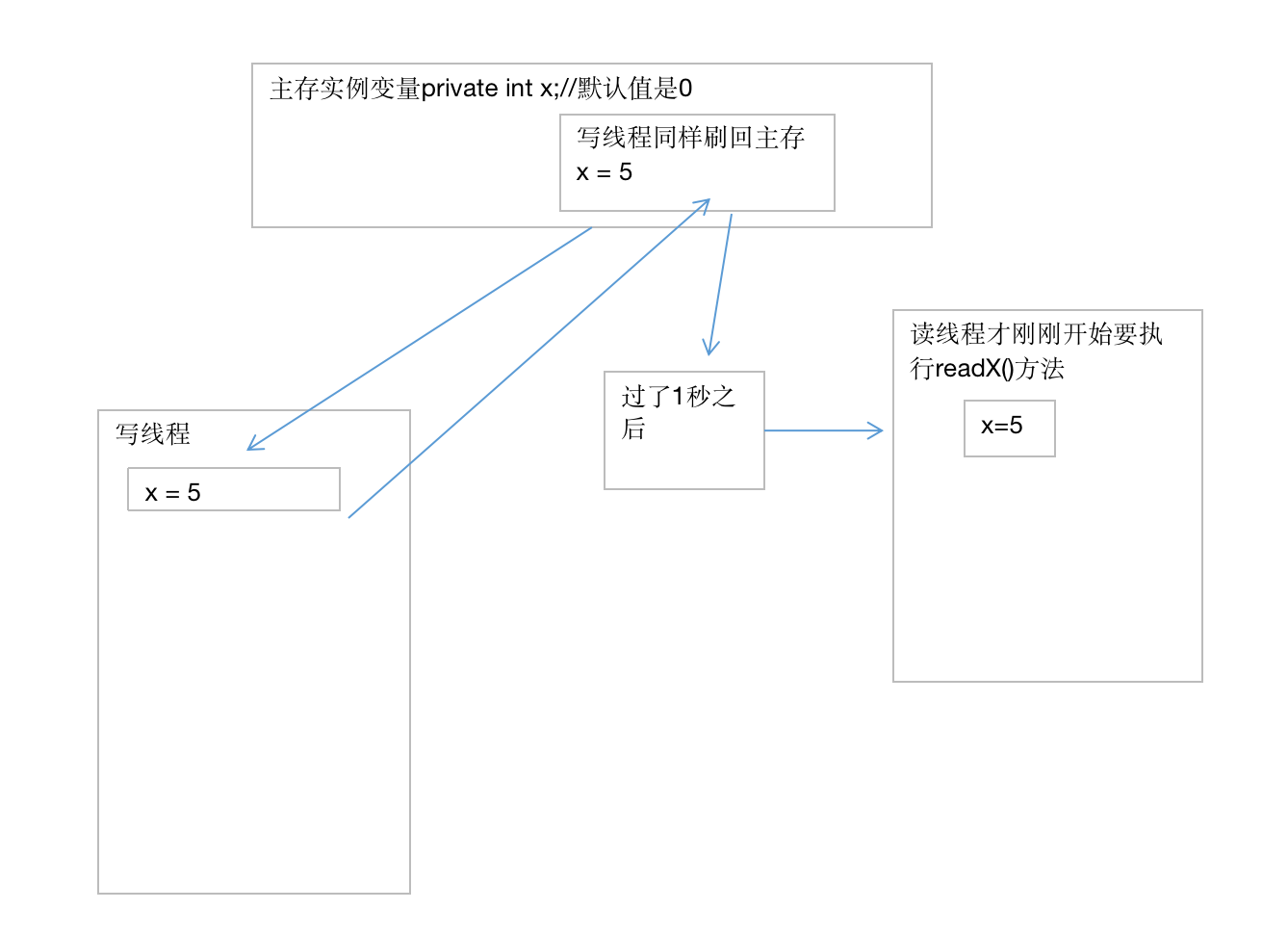

Analyzing the visibility code

Write before you read

Why can the read thread terminate its loop?

    public class VisibilityDemo {
        // Instance variable, stored in main memory.
        // Deliberately not volatile here, so that the read-before-write case can show the visibility problem.
        private int x;

        public void writeX() {
            x = 5;
        }

        public void readX() {
            while (x != 5) {
            }
            if (x == 5) {
                System.out.println("==stop==");
            }
        }

        public static void main(String[] args) {
            VisibilityDemo vd = new VisibilityDemo();
            // Create the write thread using an anonymous inner class
            Thread t1 = new Thread(new Runnable() {
                @Override
                public void run() {
                    vd.writeX();
                }
            });
            // Create the read thread using a lambda expression
            Thread t2 = new Thread(() -> vd.readX());
            // The execution order of threads does not depend on which one is started first,
            // although in most cases the thread started first is more likely to run first.
            // Write before read:
            t1.start();
            try {
                // One second of sleep is enough for the write thread to flush x=5 back to main memory
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            t2.start(); // finds that x is 5, so "==stop==" is printed
        }
    }

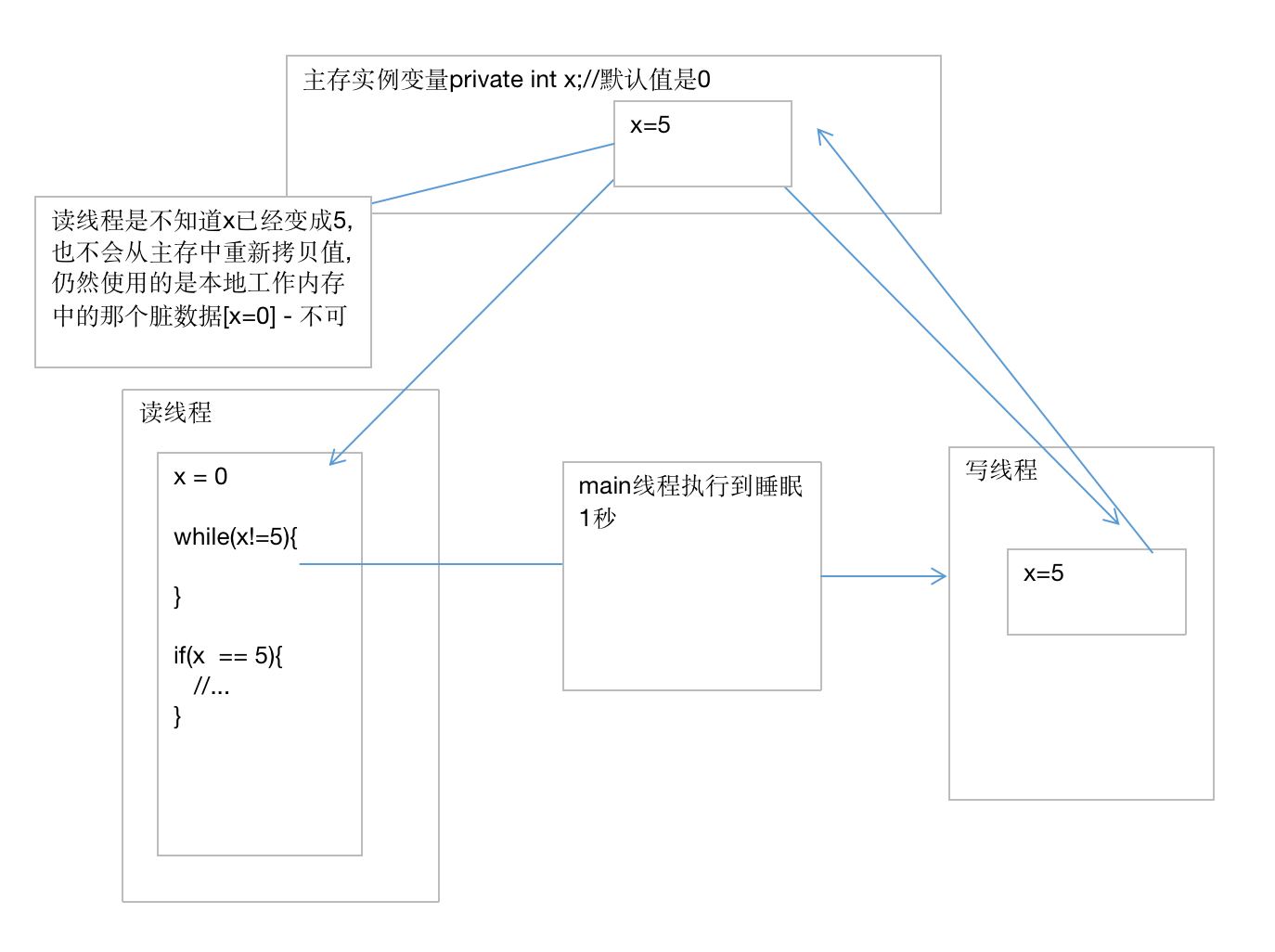

Read before write

When multiple threads access the same resource, a change to the resource can be invisible to other threads: here the read thread may keep looping even after x has been written.

    // The omitted code is the same as above
    // Read before write:
    t2.start(); // read
    try {
        Thread.sleep(1000);
    } catch (InterruptedException e) {
        e.printStackTrace();
    }
    t1.start(); // write

synchronized in depth

Feature: when a thread acquires a lock, it first empties its local working memory and is forced to copy the updated variables from main memory again.

    // Read before write: just add one line of code to the loop body
    public void readX() {
        // After a while, the write thread's change x=5 has been flushed back to main memory.
        // The read thread meets a synchronized block inside the loop body.
        // On meeting synchronized it tries to acquire the lock, which empties its local working memory [including x].
        // The next loop iteration uses x again. Because the working memory was emptied,
        // the read thread is forced to copy x from main memory into its working memory again,
        // so it gets the value x=5 written by the write thread.
        while (x != 5) {
            System.out.println(); // added code: the read thread sees the latest x and leaves the loop
        }
        if (x == 5) {
            System.out.println("-----stopped---");
        }
    }

Reason: System.out.println() uses a synchronized block internally, in the newLine method:

    private void newLine() {
        try {
            synchronized (this) {
                // ...
            }
        } catch (InterruptedIOException x) {
            Thread.currentThread().interrupt();
        } catch (IOException x) {
            trouble = true;
        }
    }

How to ensure visibility: solutions

- Use synchronized to ensure visibility

- Use volatile on instance variables

  Function 1: force the program to comply with the "cache consistency" protocol. If a variable in main memory changes, each thread is forced to copy the updated value from main memory into its local working memory again.

  Function 2: prohibit instruction reordering (this matters for the singleton pattern).

Without volatile, Student s = new Student(); may be reordered by the JVM's instruction optimization:

- ① Allocate space for the object

- ② Assign the address of that space to s immediately (s is saved on the stack)

- ③ Initialize the object

With volatile Student s = new Student(); instruction reordering is prohibited:

- ① Allocate space for the object

- ③ Initialize the object

- ② Assign the address of that space to s

Role of volatile keyword

- Ensures visibility

- Does not cause blocking

- Prohibits instruction reordering

- Cannot guarantee atomicity

Example of the missing atomicity:

    volatile int i = 0;
    // i is visible to both threads. Once main memory changes, the other thread is guaranteed to "see" it
    // and is forced to copy the value into its local cache again.

    @Override
    public void run() {
        add();
    }

    public void add() {
        i++;
    }

    t1.start();
    t2.start();

    // Summary:
    // volatile forces the program to comply with the "cache consistency" protocol: if a variable in main memory changes,
    // each thread is forced to copy the latest value from main memory into its local working memory again.
    // Even so, the final value of i can be < 200000, so atomicity is not guaranteed.
    // t1 reaches the last step: it has finished computing in its own working memory and i has been incremented to 1,
    // but other threads cut in before t1 has had time to flush the value back to main memory.
    /*
     * t0 computes directly, gets i=1, flushes it back to main memory, and finishes.
     * t1 has already finished its computation on i and will not compute it again.
     * Only its last action remains: flushing i=1 back to main memory.
     */
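The "prohibit instruction reordering" role is usually illustrated with the double-checked-locking singleton that the notes allude to. The sketch below is one common form of that pattern, not code from the original notes; the class name `LazySingleton` is hypothetical.

```java
public class LazySingleton {
    // volatile prevents the reference from being published before the constructor has finished,
    // so no thread can observe a half-initialized instance.
    private static volatile LazySingleton instance;

    private LazySingleton() {
    }

    public static LazySingleton getInstance() {
        LazySingleton local = instance;          // first check, without locking
        if (local == null) {
            synchronized (LazySingleton.class) { // class lock: one creator at a time
                local = instance;                // second check, under the lock
                if (local == null) {
                    local = new LazySingleton();
                    instance = local;
                }
            }
        }
        return local;
    }

    public static void main(String[] args) {
        System.out.println("same instance: " + (getInstance() == getInstance()));
    }
}
```

Without volatile, the steps "allocate, assign the address, initialize" could be observed out of order by a second thread that skips the lock on the first check.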

The difference between volatile and synchronized

- volatile can only be applied to variables, while synchronized applies to methods and code blocks

- Access to a volatile variable from multiple threads does not block, while synchronized can block

- volatile ensures that data is visible among multiple threads but cannot guarantee atomicity. synchronized ensures both

- volatile mainly solves visibility between multiple threads, while synchronized ensures synchronized access to resources by multiple threads

- volatile can prohibit JVM instruction reordering, but synchronized cannot

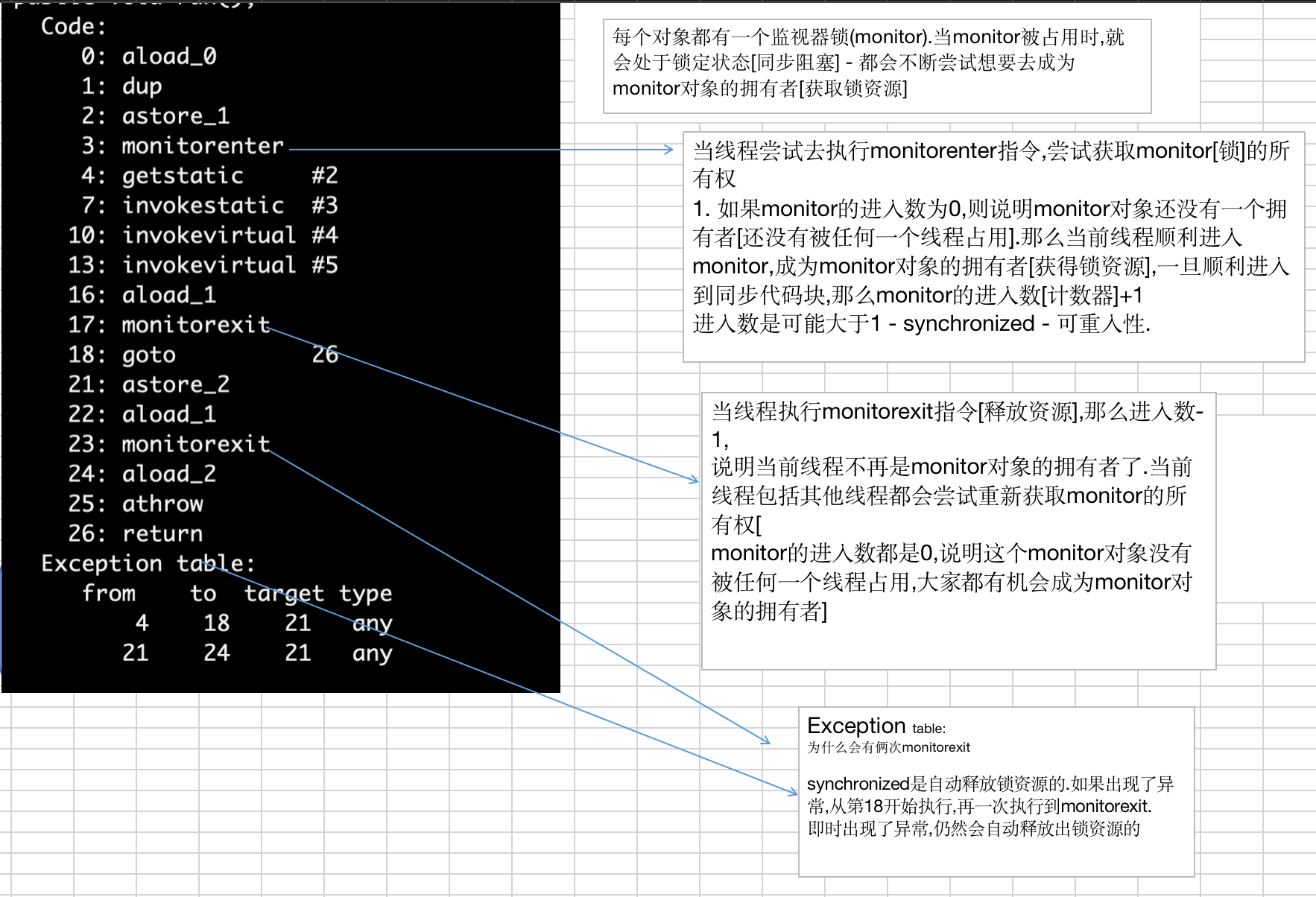

Underlying principle of synchronized

- Each lock corresponds to a monitor object. In the HotSpot virtual machine, the monitor is implemented by ObjectMonitor (C++)

The difference between thread and process

- Address space: threads of the same process share the address space of the process, while each process has its own independent address space.

  Processes are independent of one another. Multiple threads within the same process can share the process's resources.

  For example: without a country [process] there is no home [thread]; or, a home [process] has multiple family members [threads].

- Resource ownership: threads in the same process share the resources of the process, but resources are independent between processes.

- Robustness: in protected mode, a process that crashes does not affect other processes, but a thread that crashes takes down its whole process. So multiprocessing is more robust than multithreading.

- Switching cost: process switching consumes a lot of resources and is inefficient. Therefore, when frequent switching is involved, threads are a better choice than processes. Likewise, concurrent operations that must run simultaneously and share variables can only use threads, not processes.

  - Process: heavyweight unit (creating, switching, and destroying it is time consuming)

  - Thread: lightweight unit (creating, switching, and destroying it is relatively cheap)

- Execution: each independent process has a program entry point and a sequential execution sequence. Threads cannot execute independently; they must live inside an application, and the application controls the execution of its multiple threads.

- Threads are the basic unit of processor scheduling; processes are not.

- Both can be executed concurrently.

A thread belongs to exactly one process, while a process can have multiple threads and always has at least one. Resources are allocated to the process, and all threads in the same process share all resources of the process.

Thread state - thread lifecycle

Introduction: thread life cycle

- New: the new state. After the thread object is created, it enters the new state, for example Thread t = new MyThread()

- Runnable: the ready state. When the start() method of the thread object is called (t.start()), the thread enters the ready state. A thread in the ready state is only ready to be scheduled by the CPU at any time; it does not necessarily execute immediately after t.start()

- Running: the running state. When the CPU schedules a thread that is in the ready state, the thread actually executes, that is, it enters the running state.

- Blocked: a thread in the running state gives up the CPU for some reason and temporarily stops executing. It then enters the blocked state. Only after it returns to the ready state does it have the chance to be scheduled by the CPU again and enter the running state

- Dead: the dead state (end state). When the thread finishes executing or leaves the run() method because of an exception, the thread ends its life cycle

Notes:

- (1) The ready state is the only entry to the running state.

- (2) If a thread wants to enter the running state, it must first be in the ready state.

- (3) According to the cause of blocking, the blocked state can be divided into three types:

  - [1] Waiting blocking: a running thread executes the wait() method and enters the waiting-blocked state

  - [2] Synchronization blocking: when a thread fails to acquire a synchronized lock (because the lock is held by another thread), it enters the synchronization-blocked state

  - [3] Other blocking: when a thread calls sleep() or join(), or issues an I/O request (for example, waiting for keyboard input), it enters the blocked state. When the sleep() time expires, the joined thread terminates or join() times out, or the I/O processing completes, the thread returns to the ready state.
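In Java code the current state can be inspected with `Thread.getState()`. Note that the `Thread.State` enum uses slightly different names from the five-state model above: NEW, RUNNABLE (covering both ready and running), BLOCKED, WAITING, TIMED_WAITING, and TERMINATED. The sketch below, with the hypothetical class `ThreadStateDemo`, observes only the two states that are independent of timing.

```java
public class ThreadStateDemo {

    // Returns the state of a thread before start() and after it has finished.
    public static Thread.State[] observeStates() {
        Thread t = new Thread(() -> { /* nothing to do */ });
        Thread.State beforeStart = t.getState(); // created but not started: NEW
        t.start();
        try {
            t.join(); // wait until run() has returned
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        Thread.State afterEnd = t.getState();    // run() has finished: TERMINATED
        return new Thread.State[] {beforeStart, afterEnd};
    }

    public static void main(String[] args) {
        Thread.State[] states = observeStates();
        System.out.println("before start(): " + states[0]);
        System.out.println("after join():   " + states[1]);
    }
}
```

The intermediate states (RUNNABLE, BLOCKED, WAITING, TIMED_WAITING) can be observed the same way, but which one is reported depends on the moment getState() is called.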

Daemon thread

- GC: runs in the background and is responsible for reclaiming garbage objects

- Core: when the process ends, it does not care whether the background daemon threads have finished. The process does not wait for all daemon threads to end

- When only daemon threads are still executing in the background, the process can end

Daemon thread example:

    public class DaemonDemo {
        public static void main(String[] args) {
            Thread t1 = new T1();
            Thread t2 = new T2();
            // Make the number-printing thread a background daemon thread.
            // If only daemon threads are left, the process can end;
            // it does not wait for the daemon threads to finish.
            t2.setDaemon(true);
            // If neither t1 nor t2 were a daemon thread, the process would wait for both threads to finish before ending
            t1.start();
            t2.start();
        }
    }

    class T1 extends Thread {
        @Override
        public void run() {
            for (int i = 65; i < 100; i++) {
                System.out.println((char) i);
                try {
                    Thread.sleep(100);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    class T2 extends Thread {
        @Override
        public void run() {
            for (int i = 0; i < 100; i++) {
                System.out.println(i);
                try {
                    Thread.sleep(500);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        }
    }

Lock - synchronized code

Introduction: Lock is an interface with many implementation classes. A common written-test question is the difference between Lock and synchronized:

- Lock is an interface; synchronized is a keyword

- Lock is an explicit lock (acquired and released manually); synchronized is an implicit lock (acquired and released automatically)

- Lock acquires its lock manually **(object lock)**

- Lock locks a block of code

- If an exception occurs while a Lock is held, the lock is not released automatically

Lock example:

    import java.util.concurrent.locks.Lock;
    import java.util.concurrent.locks.ReentrantLock;

    /**
     * Demonstrates Lock-based synchronization of a code block.
     * The lock belongs to the object: threads using the same object compete for one lock.
     * If an exception occurs, the lock is not released automatically.
     */
    public class LockHelloDemo {
        // Build the lock object (Lock is an interface)
        Lock lock = new ReentrantLock();

        public void add() {
            // Multiple threads compete for the lock.
            // Acquire it manually (explicit lock) before the try block,
            // so that finally only unlocks a lock that is actually held.
            lock.lock();
            try {
                // Start of synchronized code: only one thread can execute it at a time
                System.out.println(Thread.currentThread().getName() + ":0");
                try {
                    // sleep inside synchronized code does not release the lock; it only gives up the CPU time slice
                    Thread.sleep(1000);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                System.out.println(Thread.currentThread().getName() + ":1");
                // End of synchronized code
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                lock.unlock(); // release manually, even if an exception occurred
            }
        }

        public static void main(String[] args) {
            LockHelloDemo lockHelloDemo = new LockHelloDemo();
            Thread t1 = new Thread(() -> lockHelloDemo.add());
            Thread t2 = new Thread(() -> lockHelloDemo.add());
            t1.start();
            t2.start();
        }
    }

Deadlock

Conditions for deadlock

- **Mutual exclusion condition:** a process uses the resources allocated to it exclusively, that is, a resource is held by only one process during a period of time. If another process requests the resource, the requester has to wait until the process holding it releases it.

- **Request and hold condition:** a process already holds at least one resource and requests a new one that is held by another process. The requesting process is blocked, but it keeps the resources it has already obtained.

- **No-preemption condition:** resources obtained by a process cannot be taken away before the process has finished with them; they can only be released by the process itself.

- **Circular wait condition:** when a deadlock occurs, there must be a circular chain of processes and resources, that is, in the process set {P0, P1, P2, ···, Pn}, P0 is waiting for a resource held by P1, P1 is waiting for a resource held by P2, ..., and Pn is waiting for a resource held by P0.

Deadlock can be prevented as long as one of the four conditions is broken.

Deadlock does not go away on its own: the program has to break one of the four conditions.

Static fields are prone to deadlock.
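One common way to break the circular wait condition is to make every thread acquire locks in the same fixed order. The sketch below uses hypothetical names (`LockOrderingDemo`, `LOCK_A`, `LOCK_B`, `work`); it shows the technique under that assumption and is not the only way to prevent deadlock.

```java
public class LockOrderingDemo {
    private static final Object LOCK_A = new Object();
    private static final Object LOCK_B = new Object();
    private static int completed = 0;

    // Every caller takes LOCK_A first and LOCK_B second.
    // Because no thread ever holds B while waiting for A, a circular wait cannot form.
    static void work() {
        synchronized (LOCK_A) {
            synchronized (LOCK_B) {
                completed++;
            }
        }
    }

    // Runs work() on two threads and returns how many calls completed.
    public static int runTwoThreads() {
        completed = 0;
        Thread t1 = new Thread(LockOrderingDemo::work);
        Thread t2 = new Thread(LockOrderingDemo::work);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed;
    }

    public static void main(String[] args) {
        System.out.println("completed calls: " + runTwoThreads());
    }
}
```

If one thread instead took LOCK_B first and LOCK_A second, each thread could end up holding one lock while waiting for the other, which is exactly the circular wait described above.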

Four common thread pools (required knowledge)

ExecutorService: the type returned by the thread pool factory methods

ExecutorService is an interface provided by Java for managing thread pools. It serves two purposes: controlling the number of threads and reusing threads.

- Executors.newCachedThreadPool(): cacheable thread pool. It first checks whether the pool contains previously created idle threads. If so, it uses them directly; if not, it creates a new thread and adds it to the pool. The cached pool is usually used for asynchronous tasks with a short lifetime

- Executors.newFixedThreadPool(int n): creates a thread pool that reuses a fixed number of threads, which take their tasks from a shared unbounded queue.

- Executors.newScheduledThreadPool(int n): creates a fixed-size thread pool that supports delayed and periodic task execution

- Executors.newSingleThreadExecutor(): creates a single-threaded thread pool. It uses only one worker thread to execute tasks, which guarantees that the tasks run sequentially in submission order (FIFO).
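The four factory methods can be exercised with a short sketch like the following. The class `PoolsDemo` and the helper `squares` are hypothetical; the helper submits a few Callable tasks, collects their results through Future, and shuts the pool down.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class PoolsDemo {

    // Submits n tasks (computing 1*1, 2*2, ...) and returns the results in submission order.
    public static List<Integer> squares(ExecutorService pool, int n) {
        List<Future<Integer>> futures = new ArrayList<>();
        for (int i = 1; i <= n; i++) {
            final int value = i;
            futures.add(pool.submit(() -> value * value)); // the lambda is a Callable<Integer>
        }
        List<Integer> results = new ArrayList<>();
        try {
            for (Future<Integer> future : futures) {
                results.add(future.get()); // blocks until that task has finished
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // accept no new tasks and let the worker threads exit
        }
        return results;
    }

    public static void main(String[] args) {
        System.out.println("cached: " + squares(Executors.newCachedThreadPool(), 3));
        System.out.println("fixed:  " + squares(Executors.newFixedThreadPool(2), 3));
        System.out.println("single: " + squares(Executors.newSingleThreadExecutor(), 3));

        // The scheduled pool additionally supports delayed and periodic execution.
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        scheduler.schedule(() -> System.out.println("delayed task ran"), 100, TimeUnit.MILLISECONDS);
        // With the default policy, a delayed task that is already scheduled still runs after shutdown().
        scheduler.shutdown();
    }
}
```

The results come back in submission order for every pool because the futures are read in that order, even though the tasks themselves may run on different threads.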