Article structure:

- Gunicorn
  - preliminary
  - install and usage
  - pros & cons
- supervisor
  - install and usage

Gunicorn

Gunicorn ('Green Unicorn', pronounced jee-unicorn, green unicorn, or gun-i-corn) is a widely used Python WSGI HTTP server for UNIX. It was ported from Ruby's Unicorn project and uses a pre-fork worker model; it is simple to use, light on resources, and fast. Gunicorn relies on the operating system to balance load across workers, and its source code uses modules and interfaces that exist only on Unix, such as fcntl and os.fork. As a result, the current version (v20.1.0) cannot run on Windows without a patch. In a Windows environment, you can use waitress to run the web service instead.

Usage

    from flask import Flask

    app = Flask(__name__)


    @app.route("/")
    def index():
        return "SUCCESS"


    if __name__ == "__main__":
        # Only runs for `python wsgi.py`; Gunicorn imports `app` and skips this block.
        app.debug = True
        app.run()

Save the code above as wsgi.py, then use gunicorn to listen for requests:

    gunicorn -w 2 -b 0.0.0.0:8000 wsgi:app

Parameter description:

- -w, specify the number of worker processes

- -b, specify listening address/port
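The last argument, wsgi:app, has the form module:callable, and the callable does not have to be a Flask object. As a minimal sketch, here is a plain WSGI callable that returns the same response as the Flask example. The helper call_app() is hypothetical and exists only to invoke the callable without a server; it is not part of Gunicorn.

```python
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A bare WSGI application: the kind of object `wsgi:app` points at."""
    body = b"SUCCESS"
    headers = [
        ("Content-Type", "text/plain"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]


def call_app(wsgi_app, path="/"):
    """Hypothetical helper: call a WSGI app once, the way a server would."""
    environ = {}
    setup_testing_defaults(environ)  # fill in the required WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers

    body = b"".join(wsgi_app(environ, start_response))
    return captured["status"], body


if __name__ == "__main__":
    print(call_app(app))
```

Saved as wsgi.py, this file could be served with the same gunicorn command as above.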

Run gunicorn -h to look up the common parameters:

    usage: gunicorn [OPTIONS] [APP_MODULE]

    optional arguments:
      -h, --help            Show help information
      -v, --version         Show the version
      -c CONFIG, --config CONFIG
                            Load configuration from the file CONFIG
      -b ADDRESS, --bind ADDRESS
                            Listening address and port [['127.0.0.1:8000']]
      --backlog INT         Maximum number of pending connections
      -w INT, --workers INT
                            Number of worker processes
      -k STRING, --worker-class STRING
                            Type of worker to use, default sync
      --threads INT         Number of worker threads per worker process
      --worker-connections INT
                            The maximum number of simultaneous clients. [1000]
      --max-requests INT    The maximum number of requests a worker will process before restarting. [0]
      --max-requests-jitter INT
                            The maximum jitter to add to the *max_requests* setting. [0]
      -t INT, --timeout INT
                            Workers silent for more than this many seconds are killed and restarted. [30]
      ...
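The same options can be kept in a Python configuration file and loaded with -c. The following is a sketch of such a file, assuming it is saved as gunicorn.conf.py next to wsgi.py. The max_requests and max_requests_jitter values are illustrative assumptions, and the worker count uses the commonly recommended (2 x cores) + 1 rule of thumb rather than anything this article measured.

```python
# gunicorn.conf.py: each variable mirrors a command-line option shown above.
import multiprocessing

bind = "0.0.0.0:8000"                          # -b / --bind
workers = multiprocessing.cpu_count() * 2 + 1  # -w / --workers (rule of thumb)
worker_class = "sync"                          # -k / --worker-class
timeout = 30                                   # -t / --timeout, in seconds
max_requests = 1000                            # --max-requests (assumed value)
max_requests_jitter = 50                       # --max-requests-jitter (assumed value)
```

It would then be used as: gunicorn -c gunicorn.conf.py wsgi:app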

Pre-fork working mode

The model consists of one managing master process and a number of worker processes.

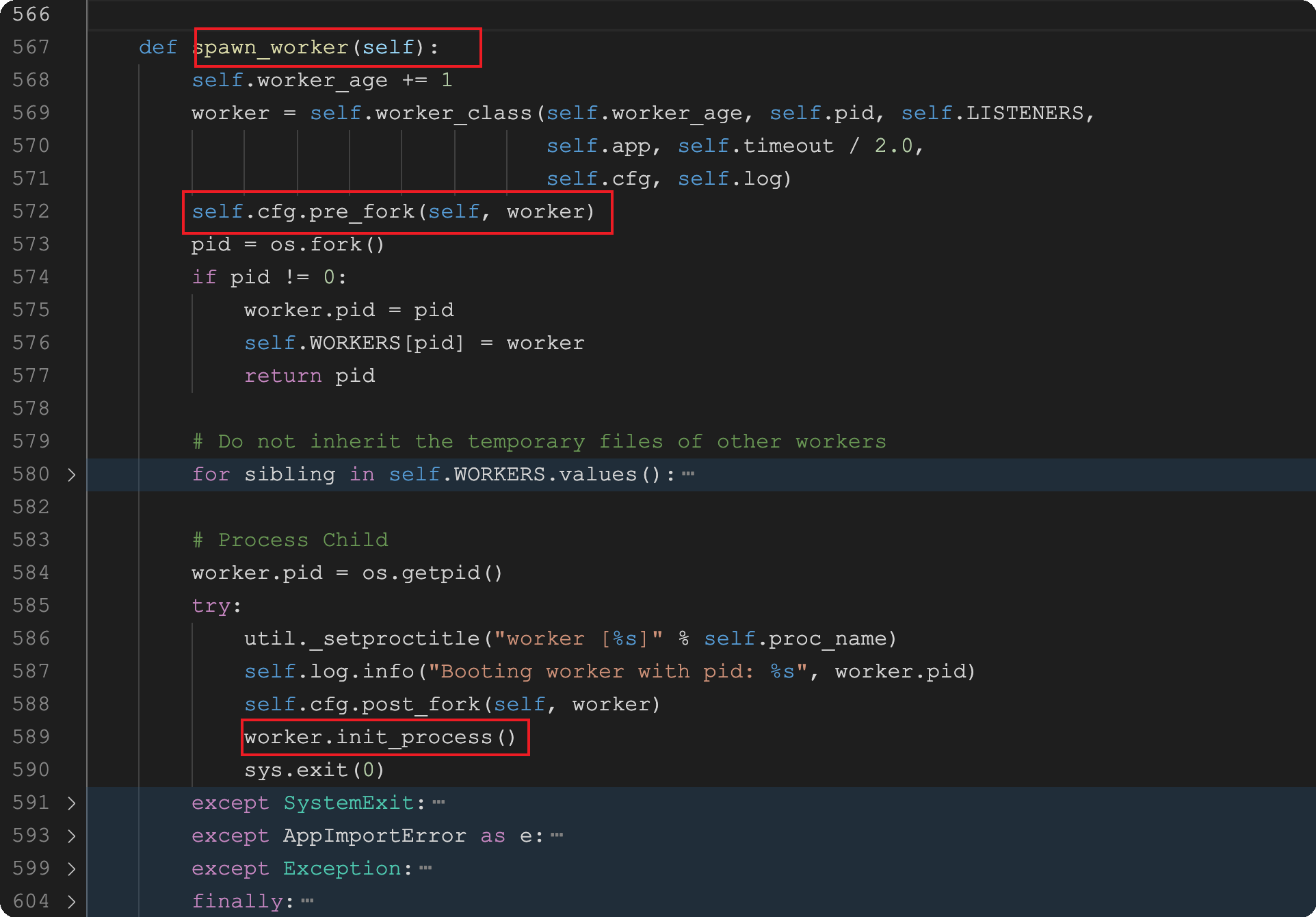

'Pre' means that the worker processes are created ahead of time: a fixed number of child processes are forked before any connection request arrives (spawn_worker() in the Arbiter code is the concrete implementation), and they then wait for connections. This avoids paying the cost of creating a child process at the moment a request comes in. The number of workers created in advance, K, can be set with the parameter -w INT (--workers INT).

Worker.init_process() has each child process instantiate our wsgi:app object. The objects in each worker are independent and do not interfere with each other. After the master has created the workers, the two kinds of process each enter their own loop:

- The master loop handles signal reception, worker process management, and other tasks.

- The worker loop listens for and handles network events such as new connections, disconnections, processing requests, sending responses, and so on.
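The pre-fork idea can be sketched in a few lines of plain Python. This is not Gunicorn's code: prefork_demo() is a hypothetical function in which the master opens one listening socket, forks the workers before any connection exists, and each worker answers exactly one connection with its own PID and exits. The master doubles as the client here only to keep the example self-contained. It relies on os.fork, so it runs on Unix only.

```python
import os
import socket


def prefork_demo(num_workers: int = 2):
    """Fork workers up front; return (worker_pids, pids_that_served)."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(16)
    address = listener.getsockname()

    worker_pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            # Worker: inherits the listening socket and blocks in accept().
            # The kernel decides which waiting worker gets each connection.
            try:
                conn, _addr = listener.accept()
                with conn:
                    conn.recv(1024)
                    conn.sendall(str(os.getpid()).encode())
            finally:
                os._exit(0)  # never fall back into the master's code
        worker_pids.append(pid)

    # Master acting as a client: one request per pre-forked worker.
    served_by = []
    for _ in range(num_workers):
        with socket.create_connection(address) as client:
            client.sendall(b"ping")
            reply = client.makefile("rb").read()  # read until the worker closes
            served_by.append(int(reply.decode()))

    for pid in worker_pids:
        os.waitpid(pid, 0)  # reap the finished workers
    listener.close()
    return worker_pids, served_by


if __name__ == "__main__":
    pids, served = prefork_demo(3)
    print("workers:", pids, "served by:", served)
```

Because every worker handles exactly one connection, each worker PID shows up once in the second list, although the order depends on the kernel's scheduling.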

Operating mechanism

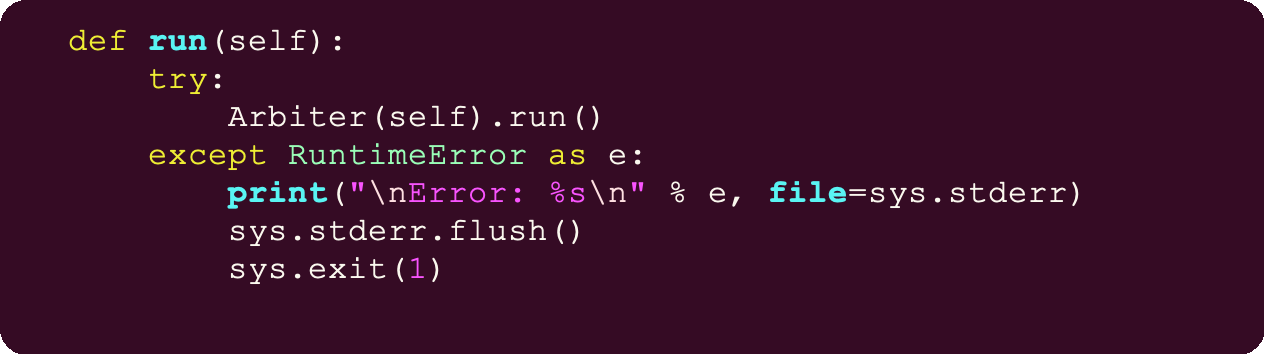

When Gunicorn starts, it first initializes gunicorn.app.wsgiapp.WSGIApplication (which inherits from the base class gunicorn.app.base.BaseApplication). This step reads and sets the configuration, that is, the parameters passed in on the command line or from a file. Arbiter.run() is then called; the method code is as follows:

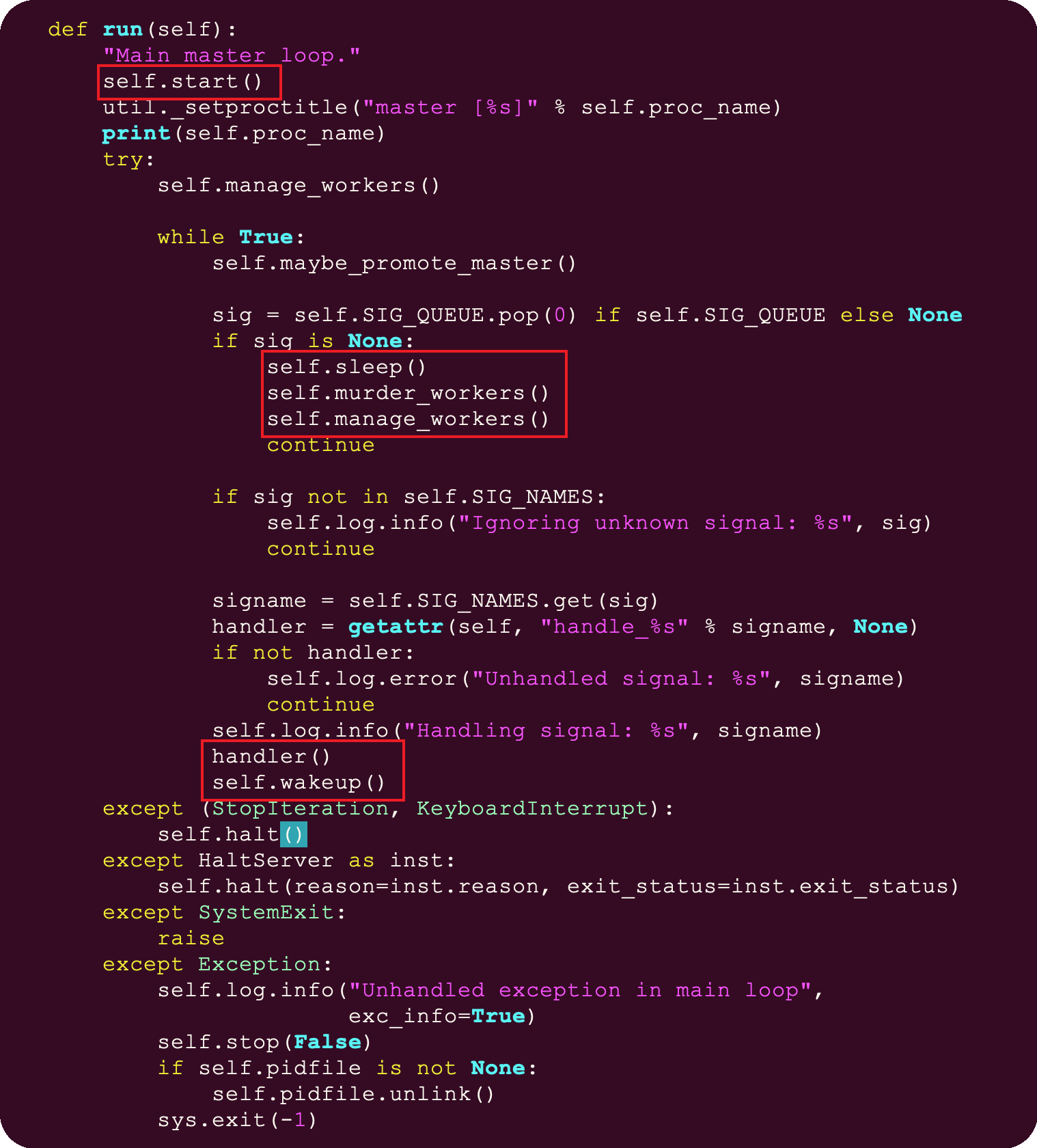

Once Arbiter(self).run() is called, the Arbiter first reads configuration items such as the number of workers, the worker class, and the address to listen on. Next it initializes the signal handlers and creates the listening socket, then forks the desired number of child processes. Finally it enters a loop: it processes the signals in the signal queue, kills and restarts unresponsive child processes, and sleeps when there is nothing to do.

The worker process, as described in the previous section, reads the configuration and initializes its signal handlers, then enters a loop in which it sends a heartbeat to the master and listens for and processes network events.
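The "kill unresponsive workers" step of the master loop comes down to comparing each worker's last heartbeat against the timeout (the -t option, 30 seconds by default). Below is a simplified sketch of that check. find_stale_workers() and the dictionary of timestamps are assumptions made for illustration; they are not how Gunicorn stores heartbeats.

```python
import time
from typing import Dict, List, Optional


def find_stale_workers(
    last_heartbeat: Dict[int, float],
    timeout: float = 30.0,
    now: Optional[float] = None,
) -> List[int]:
    """Return the PIDs whose last heartbeat is older than `timeout` seconds."""
    if now is None:
        now = time.monotonic()
    return sorted(
        pid for pid, seen in last_heartbeat.items() if now - seen > timeout
    )


if __name__ == "__main__":
    # Worker 101 reported just now; worker 102 has been silent for 40 seconds.
    heartbeats = {101: 100.0, 102: 60.0}
    print(find_stale_workers(heartbeats, timeout=30.0, now=100.0))
```

A master loop would kill the returned PIDs and fork replacements to get back to the configured worker count.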

Pros & Cons

- Advantages:
  - Simple to use; runs directly from the terminal
  - Low resource consumption
  - High performance
  - Hot reloading is supported (since 19.0): with the --reload parameter, the application is reloaded automatically when its code changes
- Disadvantages:
  - No automatic restart after the service is interrupted unexpectedly
  - No built-in way to view the application's status, or to stop or restart it

Because Gunicorn offers no way to view application status or restart the application, we can pair it with a process management tool such as supervisor.

supervisor

supervisor is a process monitoring and management tool written in Python for UNIX-like systems (sorry again, Windows users). It turns an ordinary command-line process into a background daemon, monitors its status, and restarts it automatically when it exits abnormally. Supervisor starts the managed processes as its own child processes via fork/exec, so when a child process dies, the main process learns about it accurately and can decide whether to restart it and raise an alert. Supervisor can also run supervisord, or each child process, as a non-root user, and that ordinary user can then manage the corresponding processes.
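The core behaviour (start a command as a child process, watch its exit status, restart it after an abnormal exit) can be illustrated with a toy loop. This is only a sketch of the idea and not supervisor's implementation; supervise() and max_restarts are hypothetical names, with max_restarts loosely playing the role of a retry limit.

```python
import subprocess
import sys
from typing import List, Sequence


def supervise(command: Sequence[str], max_restarts: int = 3) -> List[int]:
    """Run `command`, restarting it after each non-zero exit.

    Returns the exit code of every run. Stops on a clean exit (code 0)
    or once `max_restarts` restarts have been used up.
    """
    exit_codes: List[int] = []
    while True:
        exit_codes.append(subprocess.call(list(command)))
        clean_exit = exit_codes[-1] == 0
        restarts_used = len(exit_codes) - 1
        if clean_exit or restarts_used >= max_restarts:
            return exit_codes


if __name__ == "__main__":
    # A child that always fails with exit code 3: one run plus two restarts.
    failing = [sys.executable, "-c", "import sys; sys.exit(3)"]
    print(supervise(failing, max_restarts=2))
```

A real supervisor also detaches into the background, captures logs, and exposes a control interface, which is what the configuration in the following sections sets up.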

Installation, Configuration and Use

Install

    brew install supervisor   # macOS
    apt install supervisor    # Debian/Ubuntu
    yum install supervisor    # CentOS
    pip install supervisor    # Python

Configure

The supervisor configuration file is typically located at /etc/supervisord.conf (the exact location depends on the environment), and the subprocess configuration files live under /etc/supervisor.d/.

Configuration file description:

    [unix_http_server]
    file=/tmp/supervisor.sock     ; UNIX socket file, used by supervisorctl
    ;chmod=0700                   ; socket file mode, default 0700
    ;chown=nobody:nogroup         ; socket file owner, format uid:gid

    ;[inet_http_server]           ; HTTP server, provides the web management interface
    ;port=127.0.0.1:9001          ; IP and port the web management interface listens on
    ;username=user                ; user name for the web management interface
    ;password=123                 ; password for the web management interface

    [supervisord]
    logfile=/tmp/supervisord.log  ; log file, default $CWD/supervisord.log
    logfile_maxbytes=50MB         ; rotate the log once it exceeds this size, default 50MB; 0 means no size limit
    logfile_backups=10            ; number of log backups to keep, default 10; 0 means no backups
    loglevel=info                 ; log level, default info; others: debug, warn, trace
    pidfile=/tmp/supervisord.pid  ; pid file
    nodaemon=false                ; whether to run in the foreground, default false (start as a daemon)
    minfds=1024                   ; minimum number of file descriptors that can be opened, default 1024
    minprocs=200                  ; minimum number of processes that can be opened, default 200

    [supervisorctl]
    serverurl=unix:///tmp/supervisor.sock ; connect to supervisord through the UNIX socket; the path must match file in the [unix_http_server] section
    ;serverurl=http://127.0.0.1:9001      ; connect to supervisord over HTTP

    ; [program:process_name] holds the configuration of a managed process; process_name is the name of the process
    [program:process_name]
    command=/usr/bin/python app.py ; command that starts the program
    autostart=true                 ; start automatically when supervisord starts
    startsecs=10                   ; the start counts as successful if the process has not exited abnormally after 10 seconds, default 1 second
    autorestart=true               ; restart automatically after the program exits; one of [unexpected, true, false], default unexpected (restart only after an unexpected exit)
    startretries=3                 ; number of automatic retries when startup fails, default 3
    user=tomcat                    ; user to run the process as; defaults to the user running supervisord (typically root)
    priority=999                   ; startup priority, default 999; lower values start first
    redirect_stderr=true           ; redirect stderr to stdout, default false
    stdout_logfile_maxbytes=20MB   ; stdout log file size, default 50MB
    stdout_logfile_backups = 20    ; number of stdout log file backups, default 10
    ; stdout log file; the program fails to start if the directory does not exist, so create it manually (supervisord creates the log file itself)
    stdout_logfile=/var/log/my.log
    stopasgroup=false              ; default false; whether to send the stop signal to the whole process group, including child processes
    killasgroup=false              ; default false; whether to send the kill signal to the whole process group, including child processes

    ; include other configuration files
    [include]
    files = relative/directory/*.ini ; one or more configuration files ending in .ini

Subprocess configuration file description

    ; "blog" is the project name
    [program:blog]
    ; project directory
    directory=/home/abc/pro/
    command=/usr/bin/python /home/abc/pro/app.py
    ; whether to start together with supervisor, default true
    autostart=true
    autorestart=false
    ; if the child process is still running this many seconds after it was started, the start is considered successful; default 1
    startsecs=1
    ; user to run the program as
    user = test
    ; log output
    stderr_logfile=/tmp/app_err.log
    stdout_logfile=/tmp/app_stdout.log
    ; redirect stderr to stdout, default false
    redirect_stderr = true
    ; stdout log file size, default 50MB
    stdout_logfile_maxbytes = 20MB
    ; number of stdout log file backups
    stdout_logfile_backups = 20
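To tie the two tools together, the same kind of section can describe the gunicorn command from the first half of the article instead of python app.py. The sketch below renders such a section with Python's standard configparser. render_program_section() is a hypothetical helper, and the gunicorn path /home/abc/pro/venv/bin/gunicorn is an assumed location that depends on where Gunicorn is actually installed. Setting autorestart=true is what covers Gunicorn's missing automatic restart.

```python
import configparser
import io


def render_program_section(name: str, command: str, directory: str, user: str) -> str:
    """Render a [program:<name>] section as supervisor-style INI text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser[f"program:{name}"] = {
        "directory": directory,
        "command": command,
        "autostart": "true",
        "autorestart": "true",  # restart gunicorn whenever it exits
        "startsecs": "1",
        "user": user,
        "redirect_stderr": "true",
        "stdout_logfile": f"/tmp/{name}_stdout.log",
    }
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(
        render_program_section(
            name="blog",
            # Assumed install path; adjust to the real gunicorn executable.
            command="/home/abc/pro/venv/bin/gunicorn -w 2 -b 0.0.0.0:8000 wsgi:app",
            directory="/home/abc/pro/",
            user="test",
        )
    )
```

The printed text could be saved as an .ini file in the subprocess configuration directory so that the [include] section picks it up.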