Motto for this post: You can do more than you think.

0. Preface

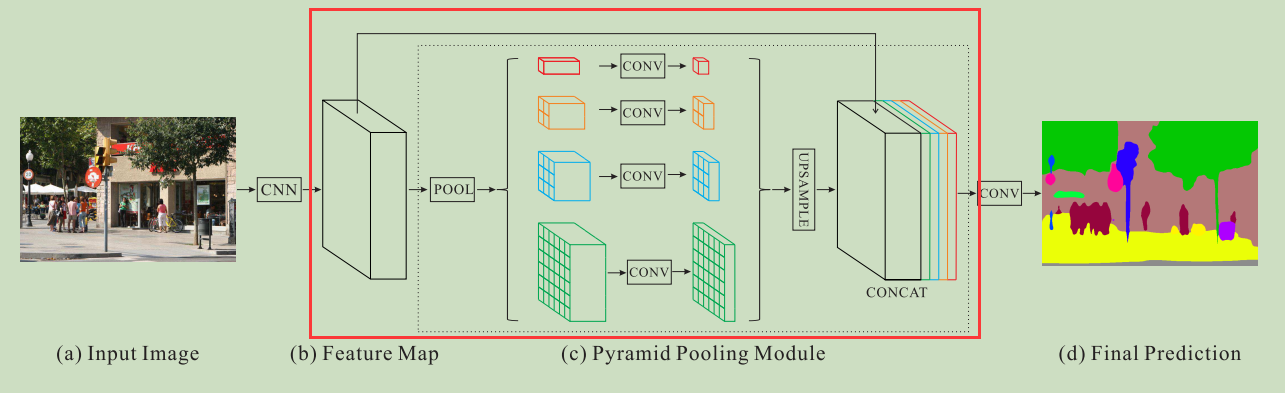

A brief summary of the pyramid pooling module.

Digression: I'm still a picky eater. Sigh.

1. Main text

1.1 Basic concepts

The pyramid pooling module applies pooling to the same feature map at several different scales (output sizes).

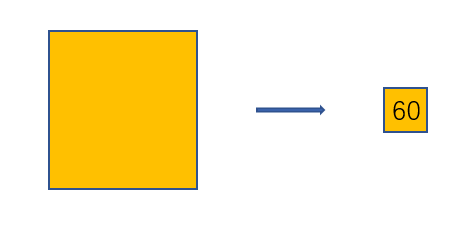

For example, suppose the pooled feature map is 1 × 1 (we ignore the channel dimension, since pooling does not change it) and the input feature map is 40 × 40. Then all 1600 values are averaged into a single number (in the figure below, the average is 60).
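A minimal NumPy sketch of the 1 × 1 case, assuming a single-channel 40 × 40 map filled with random values (the array and its values are illustrative, not taken from the figure): pooling to 1 × 1 is simply the mean over all 1600 positions.

```python
import numpy as np

# Illustrative single-channel 40 x 40 feature map (random values, not from the figure)
rng = np.random.default_rng(0)
feature_map = rng.random((40, 40))

# Pooling to 1 x 1 means averaging all 40 * 40 = 1600 values
pooled_1x1 = feature_map.mean(axis=(0, 1), keepdims=True)

print(pooled_1x1.shape)  # (1, 1)
print(np.isclose(pooled_1x1[0, 0], feature_map.sum() / 1600))  # True
```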

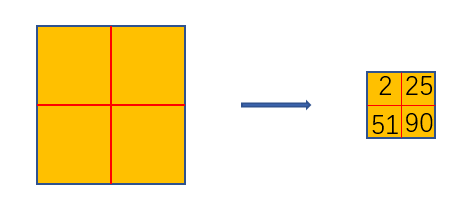

Similarly, if the pooled feature map is 2 × 2, the feature map is divided into four (2 × 2) regions and average pooling is applied within each region, as shown in the following figure:

Notes:

1. In TensorFlow, pooling is done with AveragePooling2D, so the pooling kernel size and stride have to be calculated by hand.

In PyTorch, AdaptiveAvgPool2d is used, and you only specify the size of the output feature map.

See the code in Section 1.2 for details.

2. The values in the figures are arbitrary and are for illustration only.
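The same idea for an arbitrary output size can be sketched in NumPy. The helper `adaptive_avg_pool2d` below is hypothetical; it uses the bin boundaries of PyTorch's AdaptiveAvgPool2d implementation (bin i spans floor(i·H/out) to ceil((i+1)·H/out)) and is applied here to a small 4 × 4 example rather than the figure's data.

```python
import numpy as np


def ceil_div(a, b):
    """Integer ceiling of a / b."""
    return -(-a // b)


def adaptive_avg_pool2d(x, out_size):
    """Hypothetical helper: average-pool a 2D array (H, W) to (out_size, out_size).

    Bin i covers rows [floor(i * H / out), ceil((i + 1) * H / out)), the boundaries
    used by PyTorch's AdaptiveAvgPool2d; neighboring bins can overlap when H is
    not divisible by out_size.
    """
    h, w = x.shape
    out = np.empty((out_size, out_size))
    for i in range(out_size):
        r0, r1 = i * h // out_size, ceil_div((i + 1) * h, out_size)
        for j in range(out_size):
            c0, c1 = j * w // out_size, ceil_div((j + 1) * w, out_size)
            out[i, j] = x[r0:r1, c0:c1].mean()
    return out


# 4 x 4 map holding 0..15, pooled to 2 x 2: each output is the mean of one 2 x 2 quadrant
x = np.arange(16, dtype=np.float64).reshape(4, 4)
print(adaptive_avg_pool2d(x, 2))  # quadrant means 2.5, 4.5, 10.5, 12.5
```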

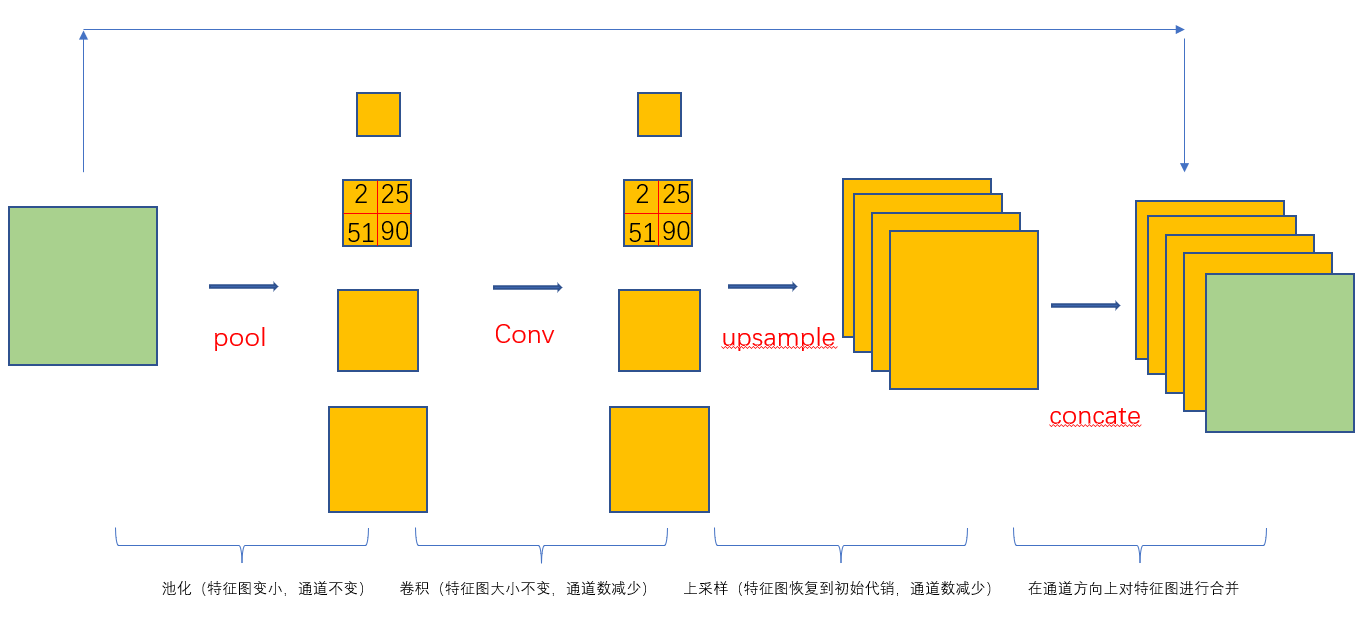

The pyramid pooling module produces pooled results at several scales, here four: 1 × 1, 2 × 2, 3 × 3 and 6 × 6. Each pooled feature map is then upsampled back to the size of the original feature map, and finally all of them (including the original feature map) are concatenated along the channel dimension, as shown below:

In plain terms:

Note: the figure only shows the spatial size of the feature maps, not the channels.
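To make the shape bookkeeping concrete, here is a minimal NumPy sketch of the pool, upsample and concatenate steps for a (C, H, W) map. Assumptions: there is no 1 × 1 convolution (so the channel count is not reduced), and nearest-neighbor upsampling stands in for the bilinear interpolation used in the code of Section 1.2. All function names are hypothetical.

```python
import numpy as np


def ceil_div(a, b):
    """Integer ceiling of a / b."""
    return -(-a // b)


def adaptive_avg_pool2d(x, out_size):
    """Average-pool every channel of x (C, H, W) to (C, out_size, out_size),
    using the same bin boundaries as PyTorch's AdaptiveAvgPool2d."""
    c, h, w = x.shape
    out = np.empty((c, out_size, out_size))
    for i in range(out_size):
        r0, r1 = i * h // out_size, ceil_div((i + 1) * h, out_size)
        for j in range(out_size):
            c0, c1 = j * w // out_size, ceil_div((j + 1) * w, out_size)
            out[:, i, j] = x[:, r0:r1, c0:c1].mean(axis=(1, 2))
    return out


def upsample_nearest(x, size):
    """Nearest-neighbor upsampling of x (C, h, w) to (C, size[0], size[1])."""
    _, h, w = x.shape
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return x[:, rows][:, :, cols]


def pyramid_pool(x, bins=(1, 2, 3, 6)):
    """Pool at each scale, upsample back to (H, W), and concatenate along channels."""
    _, h, w = x.shape
    branches = [x]  # the original feature map is part of the concatenation
    for b in bins:
        branches.append(upsample_nearest(adaptive_avg_pool2d(x, b), (h, w)))
    return np.concatenate(branches, axis=0)


x = np.random.default_rng(0).random((4, 30, 30))  # C=4, H=W=30, random values
y = pyramid_pool(x)
print(y.shape)  # (20, 30, 30): 4 original channels + 4 scales x 4 channels
```

In the real module a 1 × 1 convolution usually shrinks each branch's channels first, which keeps the concatenated tensor from growing as quickly as it does here.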

1.2 Code

1.2.1 PyTorch, compact version

```python
import torch
from torch import nn
from torch.nn import functional as F


class PPM(nn.Module):
    def __init__(self, in_dim, reduction_dim, bins):
        """Pyramid Pooling Module"""
        super(PPM, self).__init__()
        self.features = []
        for bin_size in bins:
            self.features.append(nn.Sequential(
                # Pooling to a bin_size x bin_size map
                nn.AdaptiveAvgPool2d(bin_size),
                # 1x1 convolution to reduce the number of channels
                nn.Conv2d(in_dim, reduction_dim, kernel_size=1, bias=False),
                nn.BatchNorm2d(reduction_dim),
                nn.ReLU(inplace=True)
            ))
        self.features = nn.ModuleList(self.features)

    def forward(self, x):
        x_size = x.size()
        out = [x]
        for f in self.features:
            temp = f(x)  # Pooling + convolution
            # Upsampling, restoring the original spatial size
            temp = F.interpolate(temp, x_size[2:], mode='bilinear', align_corners=True)
            out.append(temp)
        return torch.cat(out, 1)  # Concatenate along the channel dimension


ten = torch.rand((7, 4, 30, 30))
ppm = PPM(4, 2, [1, 2, 3, 6])
print(ppm(ten).shape)  # torch.Size([7, 12, 30, 30]): 4 + 4 * 2 channels
```

1.2.2 PyTorch, older version

```python
import numpy as np
import torch
import torch.nn as nn
from torch.nn import functional as F


class PSPModule(nn.Module):
    def __init__(self, in_channel, out_channel=1024, sizes=(1, 2, 3, 6)):
        super().__init__()
        self.stages = nn.ModuleList([self._make_stage(in_channel, size) for size in sizes])
        # 1x1 convolution over the original map concatenated with len(sizes) pooled maps
        self.bottleneck = nn.Conv2d(in_channel * (len(sizes) + 1), out_channel, kernel_size=(1, 1))
        self.relu = nn.ReLU()

    def _make_stage(self, in_channel, out_size):
        # Adaptive average pooling to out_size x out_size, then a 1x1 convolution
        prior = nn.AdaptiveAvgPool2d(output_size=(out_size, out_size))
        conv = nn.Conv2d(in_channel, in_channel, kernel_size=(1, 1), bias=False)
        return nn.Sequential(prior, conv)

    def forward(self, x):
        h, w = x.size(2), x.size(3)
        box = [x]  # Collect the original and the pooled feature maps
        for stage in self.stages:
            pooled = stage(x)  # Adaptive average pooling + 1x1 convolution
            print('Adaptive average pooling shape:', pooled.shape)
            # Upsample back to (h, w); F.upsample is deprecated in favor of F.interpolate
            box.append(F.interpolate(pooled, size=(h, w), mode='bilinear', align_corners=False))
        out = torch.cat(box, 1)  # Concatenate along the channel dimension
        print('Channel direction merged shape:', out.shape)
        bottle = self.bottleneck(out)
        return self.relu(bottle)


arr = np.zeros((1, 3, 30, 30), dtype=np.float32)
ten = torch.from_numpy(arr)
a = PSPModule(3)
print(a(ten).shape)  # torch.Size([1, 1024, 30, 30])
```

Note: this version also includes the steps after channel concatenation (1 × 1 convolution + ReLU).

1.2.3 TensorFlow 2.x version

Since TensorFlow has no counterpart to PyTorch's AdaptiveAvgPool2d, AveragePooling2D is used instead, and the desired output size is obtained by choosing the pooling kernel size and stride.
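When the input side is divisible by the target size, a fixed window with kernel = stride = H / size covers exactly the same bins as adaptive pooling; when it is not, the two diverge. A small pure-Python check, assuming PyTorch's adaptive bin formula and the round-based kernel size used in the TensorFlow code of this section, with no padding (both function names are hypothetical):

```python
def adaptive_bins(h, out):
    """Row ranges [start, end) used by adaptive average pooling (PyTorch's formula)."""
    return [(i * h // out, -(-(i + 1) * h // out)) for i in range(out)]


def fixed_bins(h, out):
    """Row ranges of a fixed window with kernel = stride = round(h / out), no padding."""
    k = round(h / out)
    return [(start, start + k) for start in range(0, h - k + 1, k)]


# A 30-pixel side is divisible by 1, 2, 3 and 6, so both schemes agree
print([adaptive_bins(30, out) == fixed_bins(30, out) for out in (1, 2, 3, 6)])
# [True, True, True, True]

# A 20-pixel side is not divisible by 6, so the bins differ
print(adaptive_bins(20, 6))  # [(0, 4), (3, 7), (6, 10), (10, 14), (13, 17), (16, 20)]
print(fixed_bins(20, 6))     # [(0, 3), (3, 6), (6, 9), (9, 12), (12, 15), (15, 18)]
```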

Example 1: the input feature map is 30 × 30 and we want a 2 × 2 feature map (again ignoring the channels, which pooling does not change).

This is the process shown in 1.1, with pool_factor = 2:

Pooling kernel size: pool_size = 30 / pool_factor = 30 / 2 = 15

Stride: strides = pool_size = 15

Example 2: to get a 3 × 3 feature map, pool_factor = 3:

Pooling kernel size: pool_size = 30 / pool_factor = 30 / 3 = 10

Stride: strides = pool_size = 10

Example 3: to get a 6 × 6 feature map, pool_factor = 6:

Pooling kernel size: pool_size = 30 / pool_factor = 30 / 6 = 5

Stride: strides = pool_size = 5

Note: in TensorFlow, the code has to compute these two parameters itself!
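The calculation above can be written as a small helper. `pool_params` is hypothetical; it follows the `round(h / pool_factor)` rule used in the TensorFlow code of this section and applies the output-size rule for `padding='same'` (output = ceil(input / stride)) to check which map size actually comes out.

```python
import math


def pool_params(h, pool_factor):
    """Kernel size and stride (kernel == stride) for pooling an h-pixel side,
    plus the resulting output size under 'same' padding: ceil(h / stride)."""
    pool_size = int(round(h / pool_factor))
    strides = pool_size
    out = math.ceil(h / strides)
    return pool_size, strides, out


for pf in (1, 2, 3, 6):
    print(pf, pool_params(30, pf))
# 1 (30, 30, 1)
# 2 (15, 15, 2)
# 3 (10, 10, 3)
# 6 (5, 5, 6)

# When the side is not divisible, the output size can miss the target
print(pool_params(20, 6))  # (3, 3, 7): 7 x 7 instead of 6 x 6
```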

```python
import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # Silence TensorFlow info/warning logs

import numpy as np
import tensorflow as tf
import tensorflow.keras.backend as K
from tensorflow.keras.layers import AveragePooling2D, Conv2D, BatchNormalization, Activation, Lambda


def pool_block(feats, pool_factor, out_channel):
    """
    :param feats: input feature map, shape (batch, h, w, C)
    :param pool_factor: side length of the pooled map (pool_factor x pool_factor)
    :param out_channel: number of output channels
    :return: pooled, convolved and upsampled map, shape (batch, h, w, out_channel)
    """
    print('pool factor is:', pool_factor)
    h = K.int_shape(feats)[1]
    w = K.int_shape(feats)[2]
    # Kernel size and stride chosen so that the output is pool_factor x pool_factor
    pool_size = [int(np.round(float(h) / pool_factor)), int(np.round(float(w) / pool_factor))]
    strides = pool_size
    # Average pooling at different scales
    x = AveragePooling2D(pool_size, strides=strides, padding='same')(feats)
    print('After average pooling shape:', x.shape)
    x = Conv2D(out_channel, (1, 1), padding='same')(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    # Upsample back to the input size (h, w)
    x = Lambda(lambda x: tf.compat.v1.image.resize_images(x, (h, w), align_corners=True))(x)
    print('final x shape:', x.shape)
    return x


arr = np.zeros((1, 30, 30, 3), dtype=np.float32)
ten = tf.convert_to_tensor(arr)
pool_factors = [1, 2, 3, 6]
for p in pool_factors:
    pool_block(ten, p, 60)
```

1.2.4 TensorFlow 1.x version

```python
import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # Silence TensorFlow info/warning logs

import numpy as np
import tensorflow as tf  # TensorFlow 1.x: tf.contrib no longer exists in 2.x
from tensorflow.contrib import slim


def resize_image(input_images, s):
    """
    :param input_images: input tensor, shape (batch, h, w, C)
    :param s: scale factors (h_ratio, w_ratio), e.g. (2, 2)
    :return: resized tensor
    """
    h, w = input_images.get_shape().as_list()[1], input_images.get_shape().as_list()[2]
    h_ratio = s[0]
    w_ratio = s[1]
    h = int(h * h_ratio)
    w = int(w * w_ratio)
    images = tf.image.resize_images(input_images, size=(h, w))
    return images


def pool_block(x, pool_factor, IMAGE_ORDER='NHWC'):
    if IMAGE_ORDER == 'NHWC':
        h, w = x.get_shape().as_list()[1], x.get_shape().as_list()[2]
    else:
        h, w = x.get_shape().as_list()[2], x.get_shape().as_list()[3]
    # e.g. for an 18 x 18 input: strides = [18,18], [9,9], [6,6], [3,3]
    pool_size = [int(np.round(float(h) / pool_factor)), int(np.round(float(w) / pool_factor))]
    strides = pool_size
    # Average pooling at different scales
    x = slim.avg_pool2d(x, kernel_size=pool_size, stride=strides, padding='SAME')
    print('Size of pooled feature map:', x.shape)
    # 1x1 convolution
    x = slim.conv2d(x, 512, kernel_size=(1, 1), stride=1, padding='SAME')
    x = tf.cast(x, tf.float32)
    x = slim.batch_norm(x)
    x = tf.nn.relu(x)
    print('After relu:', x.shape)
    print('-' * 100)
    # Upsample by the stride; this restores (h, w) only when h and w are divisible by pool_factor
    x = resize_image(x, strides)
    return x


arr = np.zeros((1, 30, 30, 3), dtype=np.float32)
xx = tf.convert_to_tensor(arr)
pool_factors = [1, 2, 3, 6]
for pf in pool_factors:
    p = pool_block(xx, pf)
    # print(p.shape)
```
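In the TensorFlow 1.x code, `resize_image` scales the pooled map by `strides` instead of resizing to a fixed target, so it only returns to the input size when h is divisible by `pool_factor`. A pure-Python check of that arithmetic, assuming `padding='SAME'` (output = ceil(h / stride)); `restored_size` is a hypothetical helper:

```python
import math


def restored_size(h, pool_factor):
    """Side length after SAME average pooling followed by resize_image(x, strides)."""
    stride = int(round(h / pool_factor))  # same rule as pool_block
    pooled = math.ceil(h / stride)        # SAME padding output size
    return pooled * stride                # resize_image multiplies by the stride


print([restored_size(30, pf) for pf in (1, 2, 3, 6)])  # [30, 30, 30, 30]
print(restored_size(20, 3))  # 21, not 20
```

Resizing directly to (h, w), as the TensorFlow 2.x version does, avoids this mismatch when the branches are later concatenated.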
