If you are reading this article, I believe you already know your needs.

Before version 5.5 of mysql, modifying the table structure, such as adding indexes and modifying columns, required locking tables, which could not be written during the period, which was a disaster for large tables. From 5.5, especially 5.6, the situation has improved. Online DDL is supported. See this article for an introduction. However, in the actual alter table process, I still cause data meta lock problems. pt-online-schema-change is a member of Percona-toolkit. By improving the native ddl, it can modify the table structure online without locking the table.

1. pt-osc working process

- Create a new empty table structure (the structure before alter) that is the same as the table to perform the alter operation.

- Execute alter table statements in new tables (should be fast)

- Create three triggers in the original table corresponding to insert,update,delete operations

- Copy data from the original table to the temporary table with a certain block size. Writing operations on the original table through triggers on the original table during the copy process will be updated to the new temporary table.

- Rename original table to old table, in the temporary table Rename as the original table

- If there is a foreign key referring to the table, according to the value of alter-foreign-keys-method parameter, the table related to the foreign key is detected, and the corresponding settings are processed.

- Delete the old table by default

2. Description of Common Options

Only a few of the commonly used options are introduced.

–host=xxx –user=xxx –password=xxx

Connect instance information, abbreviate - h xxx -u xxx -p xxx, password can be entered manually with parameter - ask-pass.-

–alter

Structural change statements do not require ALTER TABLE keywords. Like the original ddl, you can specify multiple changes, separated by commas.- In most cases, a primary key or a unique index is required on the table, because in order to ensure that the new table is up to date, the tool needs to create DELETE and UPDATE triggers on the old table. It will be faster to have a primary key when synchronizing to the new table. In some cases, when the alter operation is to create a primary key on the c1 column, the DELETE trigger will be based on the c1 column.

- The clause does not support rename to rename tables.

- The original table of the alter command does not support renaming the index. It needs to drop before add ing, and so does pt-osc. (mysql 5.7 supports RENAME INDEX old_index_name TO new_index_name)

But rename the field, never drop-add, the whole column data will be lost, use change col1 col1_new type constraint (keep the type and constraint consistent, otherwise equivalent to modifying column type, can not online) - If the clause is add column and defines not null, it must specify default value, otherwise it will fail.

- If you want to delete a foreign key (the name fk_foo), underline the foreign key name when using the tool, such as --- alter "DROP FOREIGN KEY_fk_foo"

D=db_name,t=table_name

Specify the database name and table name to ddl–max-load

The default is Threads_running=25. After each chunk is copied, the contents of SHOW GLOBAL STATUS are checked to see if the index exceeds the specified threshold. If it exceeds, it will be suspended first. Here you can use commas to separate and specify multiple conditions, each condition format: status index = MAX_VALUE or status index: MAX_VALUE. If MAX_VALUE is not specified, then the tool will only specify it as 120% of the current value.

Threads_running is an excellent indicator of the current database load, since copy rows may lock some rows.–max-lag

Default 1s. After each chunk copy is completed, all replication Slave delays (Seconds_Behind_Master) are checked. If the delay is greater than this value, the replication of data is suspended until all slave latencies are less than this value. check-interval is used in conjunction with max-lag to specify how long the tool will sleep when the slave lag exceeds max-lag, default 1 s, and check again. For example - max-lag=5 - check-interval=2.

Anyone familiar with percona-toolkit knows that - recursion-method can be used to specify records from libraries dsn. In addition, if the slave library is stopped, it will wait forever until synchronization starts, and the delay is less than that value.–chunk-time

By default 0.5s, when copying data rows, in order to ensure that a chunk is copied within 0.5s, the size of chunk-size is dynamically adjusted to adapt to changes in server performance.

Another option, chunk-size, can also be used to prohibit dynamic adjustment, i.e. 1 K rows per fixed copy and 1000 rows by default if specified, with priority over chunk-time.–set-vars

Set-vars can set these variables before alter is executed. For example, by default --- set-vars "wait_timeout=10000,innodb_lock_wait_timeout=1,lock_wait_timeout=60" will be set.

Because the speed of ddl will slow down after using pt-osc, it is expected that it can only be improved in 2.5 hours. Remember to increase wait_timeout.–dry-run

Create and modify new tables, but do not create triggers, copy data, and replace the original tables. Not really executed, you can see the generated execution statement, understand its execution steps and details, and --- print with the best.–execute

When a modification table is determined, this parameter is specified. Actually execute alter. dry-run and execute must specify one, and they are mutually exclusive

3. Use doubts (restrictions)

3.1 No triggers can exist on the original table

It's easy to understand that pt-osc will create three triggers on the original table, while a table can't have two triggers of the same type at the same time. For the sake of simplicity and generality, it can only kill with one stick.

So if we want it to support tables with triggers, the idea is: first find the original table trigger definition; rewrite the original table trigger; and finally apply the original table trigger definition to the new table.

3.2 Will data inconsistency or abnormality occur when data is written to temporary new tables via triggers?

This is actually a concern for me, because if update t1 triggers update t2, but this data has not been copied to t2, is there an exception? The background opens general_log and sees the trigger it creates:

6165 Query CREATE TRIGGER `pt_osc_confluence_sbtest3_del` AFTER DELETE ON `confluence`.`sbtest3`

FOR EACH ROW DELETE IGNORE FROM `confluence`.`_sbtest3_new` WHERE `confluence`.`_sbtest3_new`.`id` <=> OLD.`id`

6165 Query CREATE TRIGGER `pt_osc_confluence_sbtest3_upd` AFTER UPDATE ON `confluence`.`sbtest3`

FOR EACH ROW REPLACE INTO `confluence`.`_sbtest3_new` (`id`, `k`, `c`, `pad`) VALUES (NEW.`id`, NEW.`k`, NEW.`c`, NEW.`pad`)

6165 Query CREATE TRIGGER `pt_osc_confluence_sbtest3_ins` AFTER INSERT ON `confluence`.`sbtest3`

FOR EACH ROW REPLACE INTO `confluence`.`_sbtest3_new` (`id`, `k`, `c`, `pad`) VALUES (NEW.`id`, NEW.`k`, NEW.`c`, NEW.`pad`)

//And the copy operation is:

6165 Query INSERT LOW_PRIORITY IGNORE INTO `confluence`.`_sbtest3_new` (`id`, `k`, `c`, `pad`)

SELECT `id`, `k`, `c`, `pad` FROM `confluence`.`sbtest3` FORCE INDEX(`PRIMARY`) WHERE ((`id` >= '4692805')) AND ((`id` <= '4718680'))

LOCK IN SHARE MODE /*pt-online-schema-change 46459 copy nibble*/update on the original table and replace the entire row of data on the new temporary table, so when there are updates or inserts. At the same time, with the following insert ignore, it ensures that this data will not fail because of duplication.

3.3 Why are foreign keys so special?

Suppose T1 is a table to be modified, t2 has a foreign key dependent on t1, and _t1_new is a new temporary table generated by alter t1.

The foreign key here is not to see whether there is a foreign key on t1, but t2 as a subtable. The main problem is that when rename t1 "does not exist", the foreign key of t2 thinks that the reference fails and Rename is not allowed.

pt-osc provides the -- alter-foreign-keys-method option to decide how to deal with this situation:

- rebuild_constraints, preferring this approach

- It first rebuilds the foreign key reference by alter table t2 drop fk1, add_fk1, and points to the new table.

- rename t1 t1_old, _t1_new t1, exchange table name, do not affect the client

- Delete old table t1_old

However, if the table t2 is too large, the alter operation may take too long, and - drop_swap may be forced.

The main methods involved are determined_alter_fk_method, rebuild_constraints and swap_tables of pt-online-schema-change file.

- drop_swap,

- Disable t2 table foreign key constraint check FOREIGN_KEY_CHECKS=0

- Then drop t1 original table

- Rename_t1_new T1

This approach is faster and does not block requests. However, there are risks. First, the original table t1 does not exist in the instantaneous rename process of drop table, and it will make an error when it meets a request. Second, if the old table has been deleted and the new table rename fails because of a bug or for some reason, it will be too late, but this situation is rare.

Our development specification determines that even if there is a foreign key reference relationship between tables, no mandatory constraints are defined through tables.

3.4 Disk capacity needs to be evaluated before use

Using OSC doubles space, including indexing

And under Row Based Replication, a binlog will be written. Don't take it for granted to use - set-vars to set sql_log_bin=0, because at this session level, alter statements are also executed from libraries unless you have other plans for slave libraries.

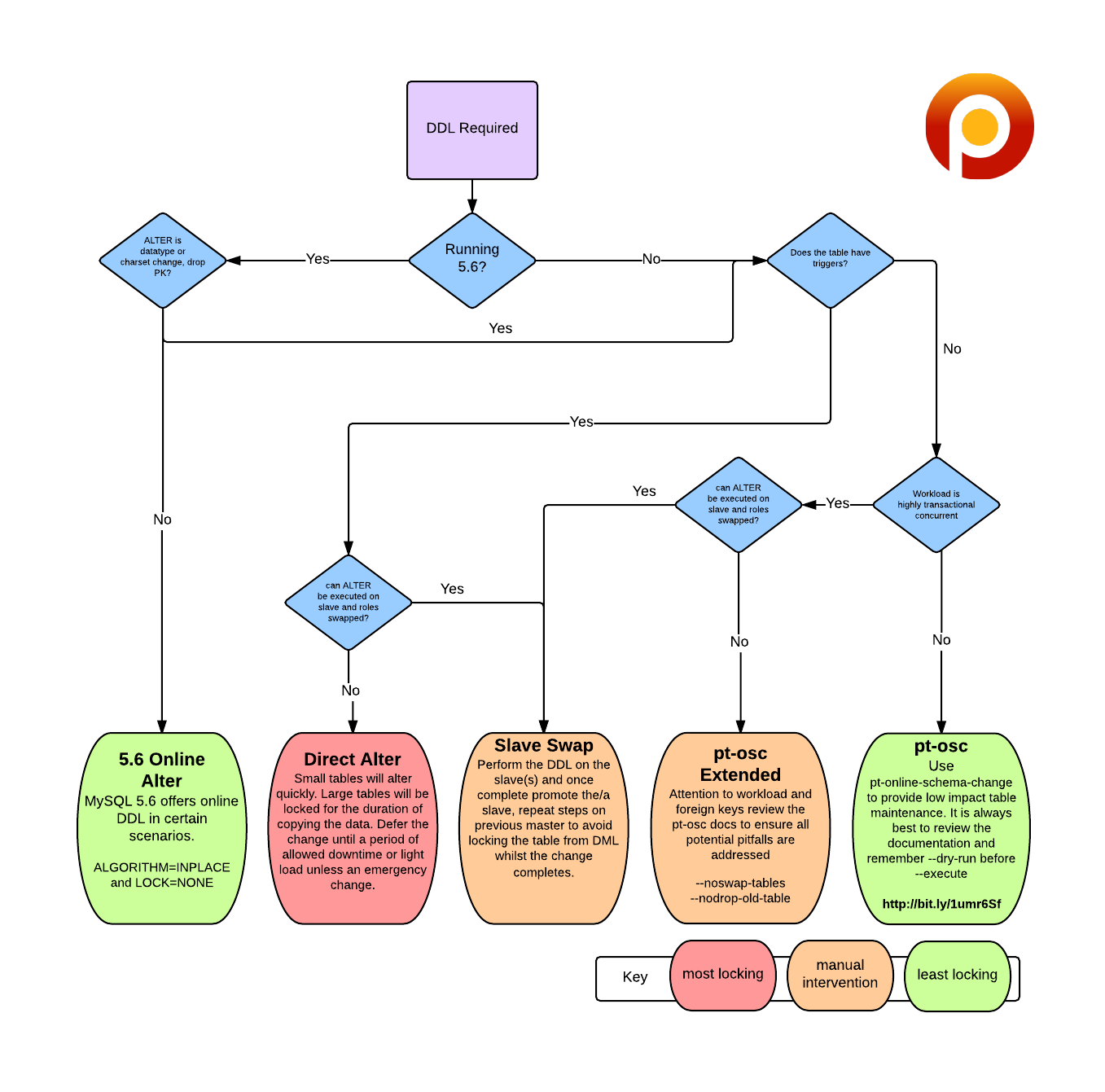

4. How to choose between native 5.6 online ddl and pt-osc

- online ddl has a high cost when copy table is required, so it is not suitable to use it.

- The pt-osc tool is not applicable when triggers exist

- When modifying indexes, foreign keys and column names, online ddl is preferred, and ALGORITHM=INPLACE is specified.

- In other cases, pt-osc is used, although copy data exists.

- pt-osc is about twice as slow as online ddl because it is load-adjusted

- Either way, the business peak period is chosen for execution.

- In special cases, we need to make use of the master-slave characteristic, alter-slave library, master-standby switch, and then change the original master library.

Use a picture of percona blog to illustrate:

5. Examples

- Add new columns

Complete output process

[root@ssd-34 sysbench]# pt-online-schema-change --user=user --password=password --host=10.0.201.34 --alter "ADD COLUMN f_id int default 0" D=confluence,t=sbtest3 --print --execute

No slaves found. See --recursion-method if host ssd-34 has slaves.

Not checking slave lag because no slaves were found and --check-slave-lag was not specified.

Operation, tries, wait:

analyze_table, 10, 1

copy_rows, 10, 0.25

create_triggers, 10, 1

drop_triggers, 10, 1

swap_tables, 10, 1

update_foreign_keys, 10, 1

Altering `confluence`.`sbtest3`...

Creating new table... ==> Create a new table

CREATE TABLE `confluence`.`_sbtest3_new` (

`id` int(10) unsigned NOT NULL AUTO_INCREMENT,

`k` int(10) unsigned NOT NULL DEFAULT '0',

`c` char(120) COLLATE utf8_bin NOT NULL DEFAULT '',

`pad` char(60) COLLATE utf8_bin NOT NULL DEFAULT '',

PRIMARY KEY (`id`),

KEY `k_3` (`k`)

) ENGINE=InnoDB AUTO_INCREMENT=5000001 DEFAULT CHARSET=utf8 COLLATE=utf8_bin MAX_ROWS=1000000

Created new table confluence._sbtest3_new OK.

Altering new table... ==> Use ddl Modify the new table structure

ALTER TABLE `confluence`.`_sbtest3_new` ADD COLUMN f_id int default 0

Altered `confluence`.`_sbtest3_new` OK.

2016-05-24T20:54:23 Creating triggers... ==> Create on an old table3Triggers

CREATE TRIGGER `pt_osc_confluence_sbtest3_del` AFTER DELETE ON `confluence`.`sbtest3` FOR EACH ROW

DELETE IGNORE FROM `confluence`.`_sbtest3_new` WHERE `confluence`.`_sbtest3_new`.`id` <=> OLD.`id`

CREATE TRIGGER `pt_osc_confluence_sbtest3_upd` AFTER UPDATE ON `confluence`.`sbtest3` FOR EACH ROW

REPLACE INTO `confluence`.`_sbtest3_new` (`id`, `k`, `c`, `pad`) VALUES (NEW.`id`, NEW.`k`, NEW.`c`, NEW.`pad`)

CREATE TRIGGER `pt_osc_confluence_sbtest3_ins` AFTER INSERT ON `confluence`.`sbtest3` FOR EACH ROW

REPLACE INTO `confluence`.`_sbtest3_new` (`id`, `k`, `c`, `pad`) VALUES (NEW.`id`, NEW.`k`, NEW.`c`, NEW.`pad`)

2016-05-24T20:54:23 Created triggers OK.

2016-05-24T20:54:23 Copying approximately 4485573 rows... ==> Copy data into new tables in blocks

INSERT LOW_PRIORITY IGNORE INTO `confluence`.`_sbtest3_new` (`id`, `k`, `c`, `pad`)

SELECT `id`, `k`, `c`, `pad` FROM `confluence`.`sbtest3` FORCE INDEX(`PRIMARY`) WHERE ((`id` >= ?)) AND ((`id` <= ?))

LOCK IN SHARE MODE /*pt-online-schema-change 44155 copy nibble*/

SELECT /*!40001 SQL_NO_CACHE */ `id` FROM `confluence`.`sbtest3` FORCE INDEX(`PRIMARY`) WHERE ((`id` >= ?)) ORDER BY `id` LIMIT ?, 2 /*next chunk boundary*/

Copying `confluence`.`sbtest3`: 36% 00:52 remain

Copying `confluence`.`sbtest3`: 69% 00:26 remain

2016-05-24T20:56:01 Copied rows OK.

2016-05-24T20:56:01 Analyzing new table...

2016-05-24T20:56:01 Swapping tables... ==> Exchange old and new tables

RENAME TABLE `confluence`.`sbtest3` TO `confluence`.`_sbtest3_old`, `confluence`.`_sbtest3_new` TO `confluence`.`sbtest3`

2016-05-24T20:56:01 Swapped original and new tables OK.

2016-05-24T20:56:01 Dropping old table... ==> Delete old tables

DROP TABLE IF EXISTS `confluence`.`_sbtest3_old`

2016-05-24T20:56:02 Dropped old table `confluence`.`_sbtest3_old` OK.

2016-05-24T20:56:02 Dropping triggers...

DROP TRIGGER IF EXISTS `confluence`.`pt_osc_confluence_sbtest3_del`;

DROP TRIGGER IF EXISTS `confluence`.`pt_osc_confluence_sbtest3_upd`;

DROP TRIGGER IF EXISTS `confluence`.`pt_osc_confluence_sbtest3_ins`;

2016-05-24T20:56:02 Dropped triggers OK.

Successfully altered `confluence`.`sbtest3`.- Modify column type

pt-online-schema-change h=10.0.201.34,P=3306,u=jacky,p=xxx,D=confluence,t=sbtest3 \

--alter "CHANGE pad f_pad varchar(60) NOT NULL DEFAULT '' " \

--print --dry-run

pt-online-schema-change -ujacky -p xxx -h "10.0.201.34" D=confluence,t=sbtest3 \

--alter "CHANGE pad f_pad varchar(60) NOT NULL DEFAULT '' " \

--execute- Add and delete index

Backstage execution

pt-online-schema-change --user=user --ask-pass --host=10.0.201.34 \

--alter "DROP KEY cid, ADD KEY idx_corpid_userid(f_corp_id,f_user_id) " \

D=confluence,t=sbtest3 --print --execute- Modify the primary key

In my environment, there are many tables that did not add id at the beginning, but used composite primary keys. The pt-osc will deal with deleting and adding primary keys in a special way. See here for details.

6. Error handling

1. Existence of trigger

[zx@mysql-5 ~]$ pt-online-schema-change -u user -p password -h 10.0.200.195 \

--alter="MODIFY COLUMN f_receiver varchar(128) NOT NULL DEFAULT '' AFTER f_user_id" --dry-run D=db_name,t=table_name

The table `db_name`.`table_name` has triggers. This tool needs to create its own triggers, so the table cannot already have triggers.There are triggers on the table, which are not applicable.

2.no-version-check

$ pt-online-schema-change -uuser -ppassword --alter "add key id_provice(f_provice)" \

D=db_name,t=tb_name -h rdsxxxxxx.mysql.rds.aliyuncs.com

Can't use an undefined value as an ARRAY reference at /usr/bin/pt-online-schema-change line 7335.This error occurred when executing on Aliyun RDS. I thought it was my grammar that was wrong, but it's OK to get the original version 5.6, plus the no-version-check option. See you. https://help.aliyun.com/knowledge_detail/13098164.html No further study, it should be pt to verify the version of mysql server when the information from rds is not correct, resulting in format errors.

Original address: http://seanlook.com/2016/05/27/mysql-pt-online-schema-change/