pthread Queue for thread synchronization

Original link: https://www.foxzzz.com/queue-with-thread-synchronization/

Recently I needed to use pthread to implement a Queue with built-in thread synchronization. Along the way I ran into two pitfalls:

- pthread_cond_wait() must be called between pthread_mutex_lock() and pthread_mutex_unlock(), that is, with the mutex held, not outside the locked region. Otherwise a deadlock can occur; for a default mutex, POSIX leaves the behavior undefined. The concept is a bit convoluted. pthread_cond_wait() atomically releases the mutex while the thread sleeps, so other threads can enter the critical section. When another thread calls pthread_cond_signal(), the waiting thread wakes up and reacquires the mutex before pthread_cond_wait() returns.

- After pthread_cond_wait() returns, the condition must be checked again, and the check must be written as while, not if. pthread_cond_signal() may wake more than one waiting thread (on a multiprocessor), and POSIX also allows spurious wake-ups. An awakened thread therefore has to recheck whether there is actually data to process. If there is none, it should go back to waiting for the next wake-up.
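Both rules can be seen together in a minimal sketch. It assumes a hypothetical one-slot `Mailbox` class and helper names (`send_values`, `transfer_sum`) that are not part of the article's code. It uses the same two-condition-variable layout as the Queue below and needs `-pthread` to compile.

```cpp
#include <cassert>
#include <pthread.h>

// Hypothetical one-slot mailbox (not from the article) illustrating both rules.
class Mailbox {
public:
    Mailbox() {
        pthread_mutex_init(&mutex_, nullptr);
        pthread_cond_init(&not_empty_, nullptr);
        pthread_cond_init(&not_full_, nullptr);
    }
    ~Mailbox() {
        pthread_cond_destroy(&not_full_);
        pthread_cond_destroy(&not_empty_);
        pthread_mutex_destroy(&mutex_);
    }
    Mailbox(const Mailbox&) = delete;
    Mailbox& operator=(const Mailbox&) = delete;

    void put(int v) {
        pthread_mutex_lock(&mutex_);  // rule 1: hold the mutex before waiting
        while (full_) {               // rule 2: re-check in a while loop, not an if
            // atomically releases mutex_ while asleep and re-locks it before returning
            pthread_cond_wait(&not_full_, &mutex_);
        }
        value_ = v;
        full_ = true;
        pthread_cond_signal(&not_empty_);  // wake one thread blocked in take()
        pthread_mutex_unlock(&mutex_);
    }

    int take() {
        pthread_mutex_lock(&mutex_);
        while (!full_) {
            pthread_cond_wait(&not_empty_, &mutex_);
        }
        int v = value_;
        full_ = false;
        pthread_cond_signal(&not_full_);  // wake one thread blocked in put()
        pthread_mutex_unlock(&mutex_);
        return v;
    }

private:
    pthread_mutex_t mutex_;
    pthread_cond_t not_empty_;
    pthread_cond_t not_full_;
    bool full_ = false;
    int value_ = 0;
};

struct SenderArgs {
    Mailbox* box;
    int count;
};

// Producer thread: puts 1..count into the mailbox.
void* send_values(void* p) {
    auto* a = static_cast<SenderArgs*>(p);
    for (int i = 1; i <= a->count; ++i) a->box->put(i);
    return nullptr;
}

// Sends 1..count from a second thread and sums what the calling thread receives.
long transfer_sum(int count) {
    Mailbox box;
    SenderArgs args{&box, count};
    pthread_t tid;
    pthread_create(&tid, nullptr, send_values, &args);
    long sum = 0;
    for (int i = 0; i < count; ++i) sum += box.take();
    pthread_join(tid, nullptr);
    return sum;
}
```

For example, `transfer_sum(100)` should return 5050, since every value from 1 to 100 is handed over exactly once. Unlike the article's Queue, this sketch calls pthread_cond_signal() while still holding the mutex. POSIX permits signalling with or without the mutex held.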

My Queue supports one-to-one, one-to-many, many-to-one, and many-to-many producer-consumer patterns, and I wrote a simple producer-consumer program to test it. The complete program is below; the test environment is Ubuntu 20.04:

```cpp
/********************************************
 * Queue with thread synchronization
 * Copyright (C) i@foxzzz.com
 *
 * Using pthread implementation.
 * Can be used in the producer-consumer model
 * of one-to-one, one-to-many, many-to-one,
 * many-to-many patterns.
 *********************************************/
#include <iostream>
#include <sstream>
#include <vector>
#include <cstdio>
#include <sys/time.h>
#include <pthread.h>
#include <unistd.h>

/*!
 * @brief queue with thread synchronization
 */
template<typename T>
class Queue {
public:
    explicit Queue(int capacity) :
        front_(0),
        back_(0),
        size_(0),
        capacity_(capacity) {
        arr_ = new T[capacity_];
        // POSIX specifies the *_INITIALIZER macros for statically allocated objects,
        // so initialize these members at run time instead
        pthread_cond_init(&cond_send_, nullptr);
        pthread_cond_init(&cond_receive_, nullptr);
        pthread_mutex_init(&mutex_, nullptr);
    }

    ~Queue() {
        pthread_cond_destroy(&cond_send_);
        pthread_cond_destroy(&cond_receive_);
        pthread_mutex_destroy(&mutex_);
        delete[] arr_;
    }

    // the queue owns a raw buffer and pthread objects, so it must not be copied
    Queue(const Queue&) = delete;
    Queue& operator=(const Queue&) = delete;

public:
    /*!
     * @brief put data into the queue, blocking while it is full
     * @param[in] data the data to put into the queue
     */
    void send(const T& data) {
        pthread_mutex_lock(&mutex_);
        // while, not if: re-check the condition after every wake-up
        while (full()) {
            pthread_cond_wait(&cond_receive_, &mutex_);
        }
        enqueue(data);
        pthread_mutex_unlock(&mutex_);
        // tell one waiting consumer that data is available
        pthread_cond_signal(&cond_send_);
    }

    /*!
     * @brief retrieve data from the queue, blocking while it is empty
     * @param[out] data the data retrieved from the queue
     */
    void receive(T& data) {
        pthread_mutex_lock(&mutex_);
        while (empty()) {
            pthread_cond_wait(&cond_send_, &mutex_);
        }
        dequeue(data);
        pthread_mutex_unlock(&mutex_);
        // tell one waiting producer that a slot is free
        pthread_cond_signal(&cond_receive_);
    }

private:
    // circular-buffer helpers; callers must hold mutex_
    void enqueue(const T& data) {
        arr_[back_] = data;
        back_ = (back_ + 1) % capacity_;
        ++size_;
    }

    void dequeue(T& data) {
        data = arr_[front_];
        front_ = (front_ + 1) % capacity_;
        --size_;
    }

    bool full() const {
        return (size_ == capacity_);
    }

    bool empty() const {
        return (size_ == 0);
    }

private:
    T* arr_;
    int front_;
    int back_;
    int size_;
    int capacity_;
    pthread_cond_t cond_send_;     // signalled after send(): data available
    pthread_cond_t cond_receive_;  // signalled after receive(): slot available
    pthread_mutex_t mutex_;
};

/*!
 * @brief a demonstration of queue operations
 */
template<typename T, typename Make>
class Demo {
public:
    Demo(int capacity) :
        queue_(capacity) {
        start();
    }

public:
    /*!
     * @brief generate data and put it into the queue (for producer threads)
     * @param[in] origin the starting value of the data
     * @param[in] count the amount of data to generate
     * @param[in] interval the time interval (ms) between sends
     */
    void send(int origin, int count, int interval) {
        Make make;
        while (count--) {
            T data = make(origin);
            queue_.send(data);
            print("send", data);
            usleep(interval * 1000);
        }
    }

    /*!
     * @brief retrieve data from the queue (for consumer threads)
     * @param[in] interval the time interval (ms) between receives
     */
    void receive(int interval) {
        // consumers never exit; the demo runs until it is interrupted
        while (true) {
            T data;
            queue_.receive(data);
            print("receive", data);
            usleep(interval * 1000);
        }
    }

private:
    void print(const char* name, const T& data) {
        char buffer[256] = { 0 };
        // elapsedMS() returns long, hence %ld; the "pid" column shows the
        // thread ID from pthread_self(), which is an unsigned long on Linux
        snprintf(buffer, sizeof(buffer), "[%-4ld ms][pid %lu][%-10s] ",
                 elapsedMS(), (unsigned long)pthread_self(), name);
        // build the whole line first, then write it with a single insertion
        std::stringstream ss;
        ss << buffer << data << "\n";
        std::cout << ss.str();
    }

private:
    void start() {
        gettimeofday(&start_time_, nullptr);
    }

    long elapsedMS() const {
        struct timeval current;
        gettimeofday(&current, nullptr);
        return diffMS(start_time_, current);
    }

    static long diffMS(const timeval& start, const timeval& end) {
        long seconds = end.tv_sec - start.tv_sec;
        long useconds = end.tv_usec - start.tv_usec;
        // round to the nearest millisecond
        return (long)(((double)(seconds) * 1000 + (double)(useconds) / 1000.0) + 0.5);
    }

private:
    timeval start_time_;
    Queue<T> queue_;
};

/*!
 * @brief generates integer data
 */
class IntMake {
public:
    IntMake() : count_(0) {
    }

public:
    int operator() (int origin) {
        return (origin + count_++);
    }

private:
    int count_;
};

/*!
 * @brief thread type
 */
enum {
    TYPE_THREAD_SEND,
    TYPE_THREAD_RECEIVE
};

/*!
 * @brief thread arguments
 */
struct Args {
    // consumer thread: origin and count are unused
    Args(Demo<int, IntMake>& demo, int type, int interval) :
        demo(demo),
        type(type),
        interval(interval),
        origin(0),
        count(0) {
    }

    // producer thread: sends count values starting at origin
    Args(Demo<int, IntMake>& demo, int type, int interval, int origin, int count) :
        demo(demo),
        type(type),
        interval(interval),
        origin(origin),
        count(count) {
    }

    Demo<int, IntMake>& demo;
    int type;
    int interval;
    int origin;
    int count;
};

/*!
 * @brief thread info
 */
struct ThreadInfo {
    ThreadInfo(const Args& args) :
        tid(0),
        args(args) {
    }

    pthread_t tid;
    Args args;
};

/*!
 * @brief producer thread function
 */
void* thread_func_send(void* arg) {
    Args* args = static_cast<Args*>(arg);
    args->demo.send(args->origin, args->count, args->interval);
    return nullptr;
}

/*!
 * @brief consumer thread function
 */
void* thread_func_receive(void* arg) {
    Args* args = static_cast<Args*>(arg);
    args->demo.receive(args->interval);
    return nullptr;
}

/*!
 * @brief start to work
 */
void work(std::vector<ThreadInfo>& list) {
    // &it.args stays valid because the vector is not resized while threads run
    for (auto& it : list) {
        switch (it.args.type) {
        case TYPE_THREAD_SEND:
            pthread_create(&it.tid, nullptr, thread_func_send, &it.args);
            break;
        case TYPE_THREAD_RECEIVE:
            pthread_create(&it.tid, nullptr, thread_func_receive, &it.args);
            break;
        }
    }
    // consumer threads loop forever, so this never returns; stop the demo with Ctrl+C
    for (auto& it : list) {
        pthread_join(it.tid, nullptr);
    }
}

int main() {
    Demo<int, IntMake> demo(10);
    // configuration of the threads
    std::vector<ThreadInfo> list = {
        ThreadInfo(Args(demo, TYPE_THREAD_SEND, 2, 1, 50)),
        ThreadInfo(Args(demo, TYPE_THREAD_RECEIVE, 2)),
    };
    work(list);
    return 0;
}
```

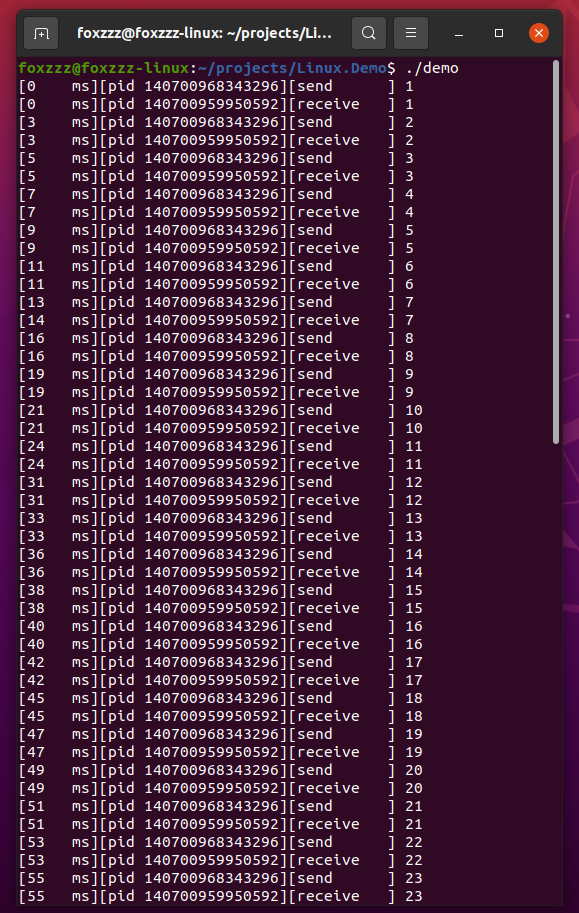

The program is configured with one producer thread and one consumer thread, and both use an interval of 2 ms:

```cpp
Demo<int, IntMake> demo(10);
// configuration of the threads
std::vector<ThreadInfo> list = {
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 2, 1, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_RECEIVE, 2)),
};
```

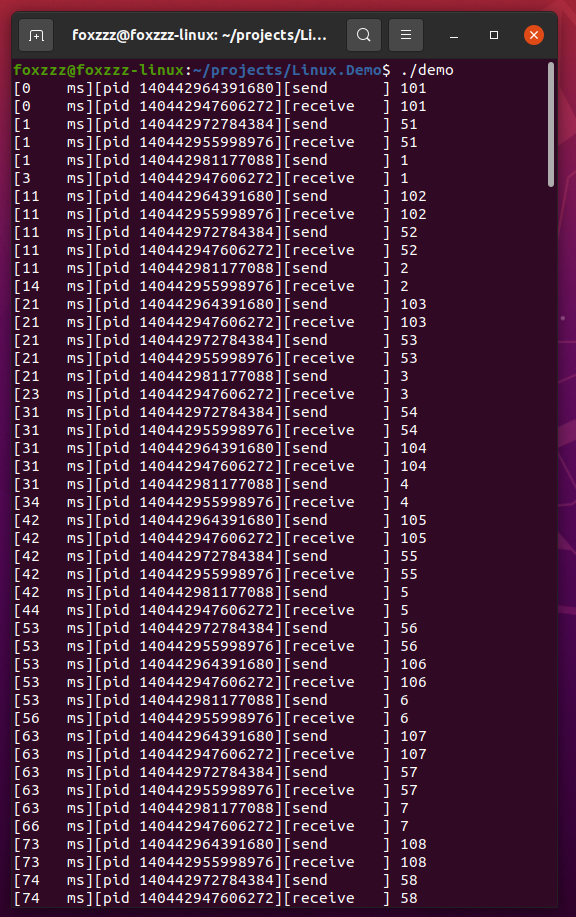

The printout shows the producer and the consumer taking turns, much like a round-robin schedule:

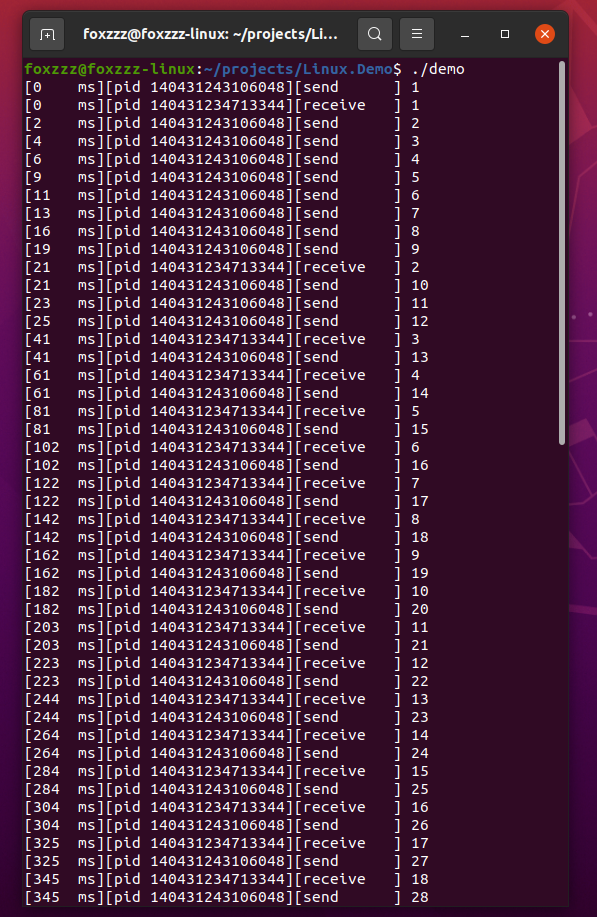

Next, modify the configuration to slow the consumer down, raising its interval from 2 ms to 20 ms:

```cpp
Demo<int, IntMake> demo(10);
// configuration of the threads
std::vector<ThreadInfo> list = {
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 2, 1, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_RECEIVE, 20)),
};
```

The printout shows that once the queue is full (its capacity is set to 10), the producer has to wait for the consumer to take an item out of the queue before it can produce again:

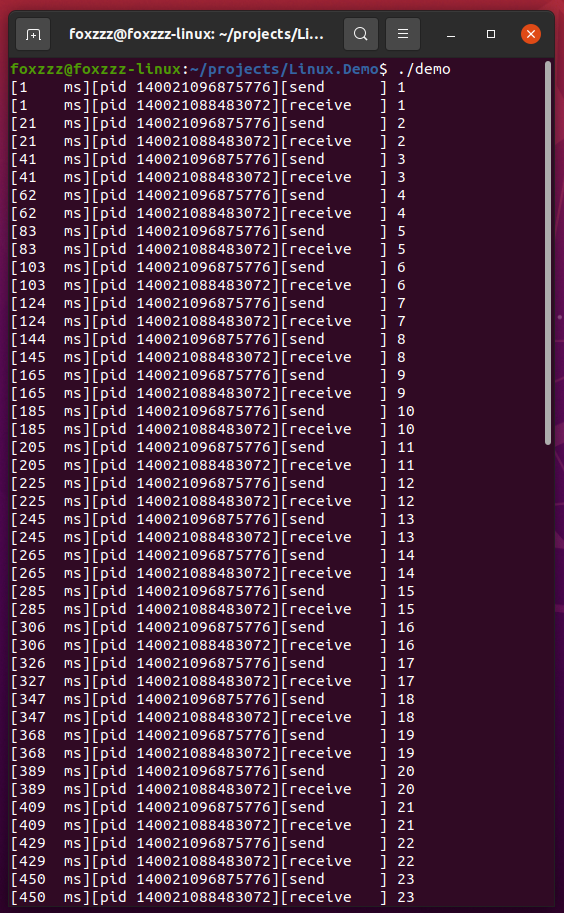
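A rough rate estimate shows why the queue fills almost immediately. It assumes each loop iteration takes about its sleep interval and ignores print and scheduling overhead:

$$
r_{\text{send}} \approx \frac{1000\ \text{ms/s}}{2\ \text{ms}} = 500\ \text{items/s}, \qquad
r_{\text{receive}} \approx \frac{1000\ \text{ms/s}}{20\ \text{ms}} = 50\ \text{items/s}
$$

$$
t_{\text{full}} \approx \frac{\text{capacity}}{r_{\text{send}} - r_{\text{receive}}} = \frac{10}{450\ \text{items/s}} \approx 22\ \text{ms}
$$

After that point, the producer can only send as fast as the consumer frees slots, roughly one item every 20 ms.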

Now slow the producer down instead, raising its interval from 2 ms to 20 ms:

```cpp
Demo<int, IntMake> demo(10);
// configuration of the threads
std::vector<ThreadInfo> list = {
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 20, 1, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_RECEIVE, 2)),
};
```

The printout again looks like a round-robin schedule. The consumer is much faster, so it takes each item right away and then has to wait until the producer produces the next one:

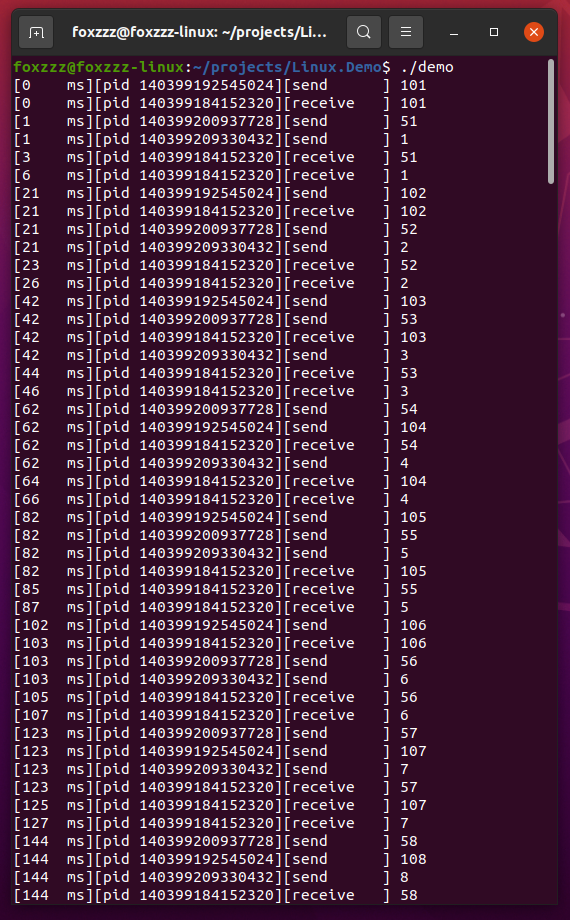

Next, increase the number of producers to 3 and keep a single consumer, with a producer interval of 20 ms and a consumer interval of 2 ms:

```cpp
Demo<int, IntMake> demo(10);
// configuration of the threads
std::vector<ThreadInfo> list = {
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 20, 1, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 20, 51, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 20, 101, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_RECEIVE, 2)),
};
```

The printout shows that the consumer is still much faster than the producers: even with three producers, the queue never fills up.
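A rough rate estimate explains this. It assumes each loop iteration takes about its sleep interval and ignores print and scheduling overhead. On that basis, the three producers together supply far fewer items than one consumer can drain:

$$
r_{\text{send}} \approx 3 \times \frac{1000}{20} = 150\ \text{items/s} \;<\; r_{\text{receive}} \approx \frac{1000}{2} = 500\ \text{items/s}
$$

So the queue stays close to empty, and the consumer spends much of its time waiting in pthread_cond_wait() for the next item.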

Finally, keep 3 producers and add a second consumer, with a producer interval of 10 ms and a consumer interval of 2 ms:

```cpp
Demo<int, IntMake> demo(10);
// configuration of the threads
std::vector<ThreadInfo> list = {
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 10, 1, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 10, 51, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_SEND, 10, 101, 50)),
    ThreadInfo(Args(demo, TYPE_THREAD_RECEIVE, 2)),
    ThreadInfo(Args(demo, TYPE_THREAD_RECEIVE, 2)),
};
```

The printout shows the queue working in many-to-many mode.

These are just a few example configurations. The configuration is simple and can be changed as you like: each producer takes two extra parameters, the starting value and the number of items to produce.
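The demo's consumers never exit, so the printout is the only evidence that the many-to-many case works. A terminating check is a possible complement, sketched here under assumptions. It uses a condensed, int-only copy of the same bounded-queue pattern. Each consumer takes a fixed share of the items so that every thread finishes, and the sleeps and printing are dropped. `IntQueue`, `WorkerArgs`, `produce`, `consume`, and `every_item_delivered_once` are hypothetical names, not part of the original program.

```cpp
#include <cassert>
#include <cstddef>
#include <pthread.h>
#include <vector>

// Hypothetical int-only condensation of the article's Queue<T>: same
// lock / while-wait / unlock / signal pattern with two condition variables.
class IntQueue {
public:
    explicit IntQueue(int capacity) : buf_(capacity), capacity_(capacity) {
        pthread_mutex_init(&mutex_, nullptr);
        pthread_cond_init(&not_empty_, nullptr);
        pthread_cond_init(&not_full_, nullptr);
    }
    ~IntQueue() {
        pthread_cond_destroy(&not_full_);
        pthread_cond_destroy(&not_empty_);
        pthread_mutex_destroy(&mutex_);
    }
    IntQueue(const IntQueue&) = delete;
    IntQueue& operator=(const IntQueue&) = delete;

    void send(int v) {
        pthread_mutex_lock(&mutex_);
        while (size_ == capacity_) pthread_cond_wait(&not_full_, &mutex_);
        buf_[back_] = v;
        back_ = (back_ + 1) % capacity_;
        ++size_;
        pthread_mutex_unlock(&mutex_);
        pthread_cond_signal(&not_empty_);
    }
    int receive() {
        pthread_mutex_lock(&mutex_);
        while (size_ == 0) pthread_cond_wait(&not_empty_, &mutex_);
        int v = buf_[front_];
        front_ = (front_ + 1) % capacity_;
        --size_;
        pthread_mutex_unlock(&mutex_);
        pthread_cond_signal(&not_full_);
        return v;
    }

private:
    std::vector<int> buf_;
    int capacity_;
    int front_ = 0, back_ = 0, size_ = 0;
    pthread_mutex_t mutex_;
    pthread_cond_t not_empty_, not_full_;
};

// Per-thread arguments; `received` is null for producers.
struct WorkerArgs {
    IntQueue* queue;
    int origin;                  // first value a producer sends
    int count;                   // number of items to send or receive
    std::vector<int>* received;  // a consumer's private log
};

void* produce(void* p) {
    auto* a = static_cast<WorkerArgs*>(p);
    for (int i = 0; i < a->count; ++i) a->queue->send(a->origin + i);
    return nullptr;
}

void* consume(void* p) {
    auto* a = static_cast<WorkerArgs*>(p);
    for (int i = 0; i < a->count; ++i) a->received->push_back(a->queue->receive());
    return nullptr;
}

// Producer p sends per_producer values starting at 1 + p * per_producer
// (1, 51, 101, ... as in the demo); consumers split the total evenly so that
// every thread terminates. Returns true if each value 1..total arrived once.
bool every_item_delivered_once(int capacity, int producers, int per_producer, int consumers) {
    const int total = producers * per_producer;
    if (consumers <= 0 || total % consumers != 0) return false;  // need an even split
    IntQueue queue(capacity);
    std::vector<std::vector<int>> logs(consumers);
    std::vector<WorkerArgs> args;
    for (int p = 0; p < producers; ++p)
        args.push_back({&queue, 1 + p * per_producer, per_producer, nullptr});
    for (int c = 0; c < consumers; ++c)
        args.push_back({&queue, 0, total / consumers, &logs[c]});
    // args is complete before any thread starts, so &args[i] stays valid
    std::vector<pthread_t> tids(args.size());
    for (std::size_t i = 0; i < args.size(); ++i) {
        void* (*fn)(void*) = args[i].received ? consume : produce;
        pthread_create(&tids[i], nullptr, fn, &args[i]);
    }
    for (pthread_t t : tids) pthread_join(t, nullptr);
    std::vector<int> seen(total + 1, 0);
    for (const auto& log : logs)
        for (int v : log)
            if (v < 1 || v > total || ++seen[v] > 1) return false;  // out of range or duplicate
    // exactly `total` values were received with no repeats, so each arrived exactly once
    return true;
}
```

For instance, `every_item_delivered_once(10, 3, 50, 2)` mirrors the last configuration above without the sleeps: capacity 10, three producers of 50 items starting at 1, 51 and 101, and two consumers. It should return true. Compile with `-pthread`.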