1. Project introduction

This project uses the program provided by Python + the use of selectors in the graph (quite easy to use) to realize crawling the picture of the girl graph (welfare graph). I have learned that the paw (2, 10) of a certain durian is right!

2. Knowledge points used

① Python Programming (I use version 3.7.3)

(2) using css selector in the graph

③ using async process

④ use aiohttp to access url asynchronously

⑤ use aiofiles to save files asynchronously

Don't say much, go straight to the code. Note: running in the python3.x environment, due to the timeliness, the URL here may be modified by the sister map website later. Please check whether the corresponding URL is available at that time.

import gevent #No need to install the module imported into the process

from lxml import etree #Import the related modules of xml xpath, no pre installation

import os,threading #Import os module import multithreaded module

import urllib.request #Import network crawling module

import time

# Configuration of request headers

headers = {

'User-Agent':'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_5_6; en-US) AppleWebKit/530.9 (KHTML, like Gecko) Chrome/ Safari/530.9 '

}

# Basic url to be crawled

baseurl = 'http://www.meizitu.com/a/more'

'''

//Analysis:

//First, obtain the pages that need to be downloaded, and then obtain all the secondary URLs

//And then I'll crawl the pictures in these URLs and download them

'''

# //div[@class="pic"]/a/@href get all URL s of the page

# //div[@id="picture"]/p/img/@alt get picture name

# //div[@id="picture"]/p/img/@src get picture path

# Functions for downloading pictures

def download_img(image_url_list,image_name_list,image_fenye_path):

try:

# Create a table of contents for each page

os.mkdir(image_fenye_path)

except Exception as e:

pass

for i in range(len(image_url_list)):

# Get picture suffix

houzhui = (os.path.splitext(image_url_list[i]))[-1]

# Splicing file name

file_name = image_name_list[i] + houzhui

# Save path of splicing file

save_path = os.path.join(image_fenye_path,file_name)

# Start downloading pictures

print(image_url_list[i])

try:

# I found that I can't read it here. I changed it to file read and write once

# urllib.request.urlretrieve(image_url_list[i],save_path)

newrequest = urllib.request.Request(url=image_url_list[i], headers=headers)

newresponse = urllib.request.urlopen(newrequest)

data = newresponse.read()

with open(save_path,'wb') as f: #with writing

# F = open ('test. JPG ',' WB ') original writing method

f.write(data)

f.close()

print('%s Download completed'%save_path)

except Exception as e:

print('%s xxxxxxxx Picture loss'%save_path)

# Function to get url

def read_get_url(sure_url,image_fenye_path):

request = urllib.request.Request(url=sure_url, headers=headers)

response = urllib.request.urlopen(request)

html = response.read().decode('gbk')

html_tree = etree.HTML(html)

need_url_list = html_tree.xpath('//div[@class="pic"]/a/@href')

# print(need_url_list)

# Start the process crawling and downloading within each thread

xiecheng = []

for down_url in need_url_list:

# Create a process object

xiecheng.append(gevent.spawn(down_load, down_url,image_fenye_path))

# Open Association

gevent.joinall(xiecheng)

# Intermediate processing function

def down_load(read_url,image_fenye_path):

# print(read_url,image_fenye_path)

try:

request = urllib.request.Request(url=read_url, headers=headers)

response = urllib.request.urlopen(request)

html = response.read().decode('gbk')

html_tree = etree.HTML(html)

# Get the name of the picture

image_name_list = html_tree.xpath('//div[@id="picture"]/p/img/@alt')

# Get the url of the picture

image_url_list = html_tree.xpath('//div[@id="picture"]/p/img/@src')

# print(image_url_list,image_name_list)

download_img(image_url_list,image_name_list,image_fenye_path)

except Exception as e:

pass

# Main entry function

def main(baseurl):

start_page = int(input('Please enter the start page:'))

end_page = int(input('Please enter the end page:'))

# Create a saved folder

try:

global father_path

# Get the directory name of the current file

father_path = (os.path.dirname(os.path.abspath(__file__)))

# Create a directory to save pictures

mkdir_name = father_path + '/meizitufiles'

os.mkdir(mkdir_name)

except Exception as e:

print(e)

print('Start downloading...')

t_list = []

# One thread per page

for page_num in range(start_page,end_page + 1):

# Splicing url

sure_url = baseurl + '_' + str(page_num) + '.html'

# Get the directory name of each page

image_fenye_path = father_path + '/meizitufiles' + '/The first%s page'%page_num

t = threading.Thread(target=read_get_url,args=(sure_url,image_fenye_path))

t.start()

t_list.append(t)

for j in t_list:

j.join()

print('Download completed!')

if __name__ == '__main__':

start_time = time.time()

main(baseurl)

print('The final download time is:%s'%(time.time()-start_time))

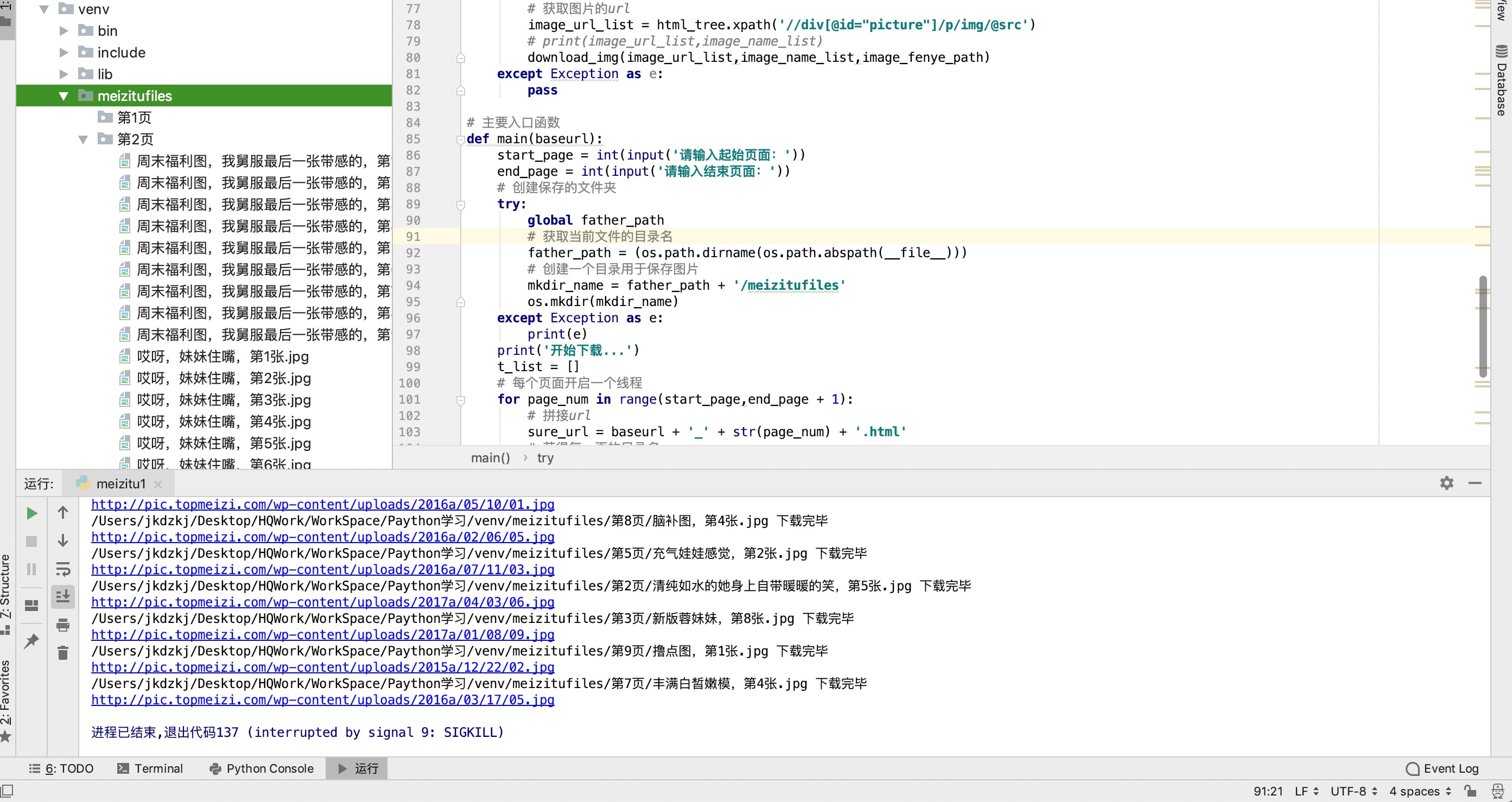

Operation effect: