1, Overall approach

The goal of this project is to increase the number of blog visits. How can we achieve it?

A visit is simply the act of opening an article in a browser or a mobile app. Can we simulate that behavior with Python to increase the traffic? Let's do it!

What do we need to prepare in order to simulate this behavior? First, we need the URLs of the articles. Then, to imitate a browser and convince the server that we are ordinary users, we need to set the request headers. Finally, so that the visits do not all appear to come from the same person, we need proxy IPs.

Let's sort out our needs: 1. Target URL; 2. Disguised header; 3. Proxy IP; 4. Simulated visits. Now that the needs are clear, let's get started.

All four are needed. Let's finish the first three first.

2, Get the disguised header

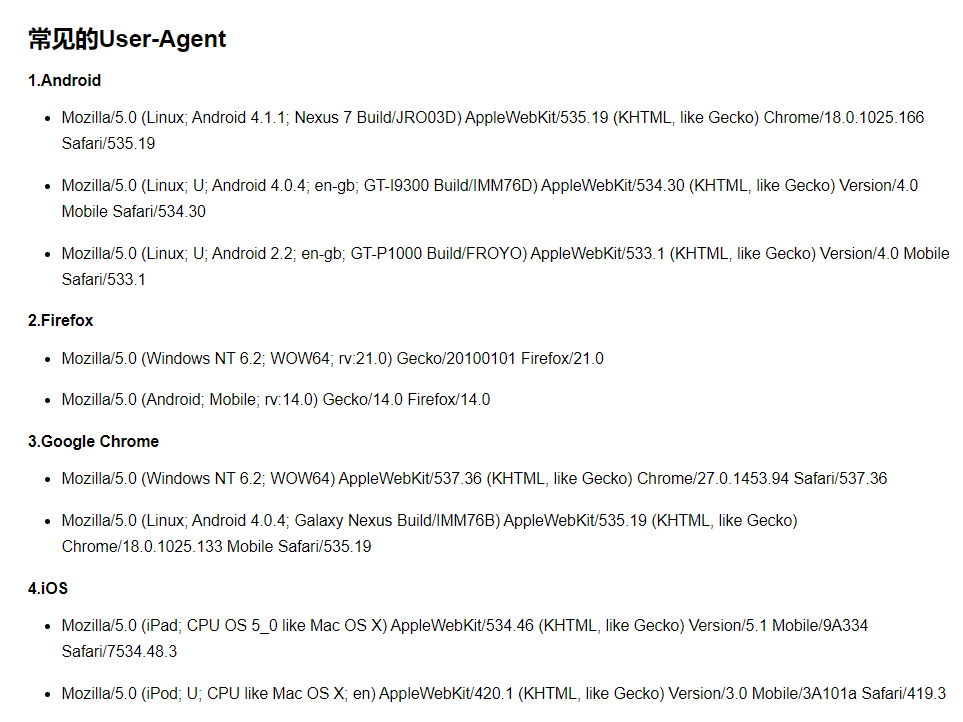

While searching online, we found this page, shown in the screenshot: https://www.cnblogs.com/0bug/p/8952656.html#_label1

There is no need to crawl it. Let's just copy and paste!

Create a "User-Agent.txt" file in the same directory as the code, then paste the following contents into it, one User-Agent per line:

Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19
Mozilla/5.0 (Linux; U; Android 4.0.4; en-gb; GT-I9300 Build/IMM76D) AppleWebKit/534.30 (KHTML, like Gecko) Version/4.0 Mobile Safari/534.30
Mozilla/5.0 (Linux; U; Android 2.2; en-gb; GT-P1000 Build/FROYO) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1
Mozilla/5.0 (Windows NT 6.2; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0
Mozilla/5.0 (Android; Mobile; rv:14.0) Gecko/14.0 Firefox/14.0
Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/27.0.1453.94 Safari/537.36
Mozilla/5.0 (Linux; Android 4.0.4; Galaxy Nexus Build/IMM76B) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.133 Mobile Safari/535.19
Mozilla/5.0 (iPad; CPU OS 5_0 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9A334 Safari/7534.48.3
Mozilla/5.0 (iPod; U; CPU like Mac OS X; en) AppleWebKit/420.1 (KHTML, like Gecko) Version/3.0 Mobile/3A101a Safari/419.3
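The scripts in the following sections read this file line by line and pick one entry at random. As a minimal sketch of that step (the helper names `load_lines` and `random_user_agent` are my own and not part of the original code), reading the file could look like this:

```python
import random
from pathlib import Path


def load_lines(path):
    """Read a text file and return its non-empty lines, without line endings."""
    text = Path(path).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def random_user_agent(path="User-Agent.txt"):
    """Pick one User-Agent at random and wrap it in a headers dict."""
    return {"User-Agent": random.choice(load_lines(path))}
```

Calling `random_user_agent()` in the folder that holds "User-Agent.txt" returns a dict that can be passed as the `headers` argument of `requests.get`. Stripping whitespace instead of cutting a fixed number of characters means the file works with both Windows and Unix line endings.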

3, Crawling proxy IP

While searching online, we found this site: https://www.kuaidaili.com/free/inha/ , as shown below:

Why not just crawl its IPs? Let's do it!

The source code is as follows:

import codecs
import random
import re

import requests
from bs4 import BeautifulSoup
from tqdm import tqdm


def main(url):
    # Get a random header and proxy IP
    header = head()
    ip = ipProxies()
    # Crawl the web page
    html = getURL(url, header, ip)
    # Extract content
    result = getResult(html)
    # Save results
    saveResult(result)


def ipProxies():
    # Pick a random proxy from IP.txt (one entry per line)
    with codecs.open("IP.txt", "r", encoding="utf-8") as f:
        ip = [line.strip() for line in f if line.strip()]
    IP = {'http': random.choice(ip)}
    print("The IP (proxy) used this time is:", IP['http'])
    return IP


def head():
    # Pick a random User-Agent from User-Agent.txt (one entry per line)
    with codecs.open("User-Agent.txt", "r", encoding="utf-8") as f:
        head = [line.strip() for line in f if line.strip()]
    header = {"User-Agent": random.choice(head)}
    print("The header (User-Agent) used this time is:", header["User-Agent"])
    return header


def getURL(url, header, ip):
    html = ""
    try:
        response = requests.get(url=url, headers=header, proxies=ip)
        html = response.text
    except requests.RequestException:
        print("Something went wrong with the URL!")
    return html


def getResult(html):
    # Parse the web content
    bs = BeautifulSoup(html, "html.parser")
    # Regular expression matching an IPv4 address
    pattern = r'(([01]{0,1}\d{0,1}\d|2[0-4]\d|25[0-5])\.){3}([01]{0,1}\d{0,1}\d|2[0-4]\d|25[0-5])'
    # Search the document for text nodes that contain an IP address
    result = bs.find_all(string=re.compile(pattern))
    return result


def saveResult(result):
    # Write one IP per line to IP.txt
    with codecs.open("IP.txt", 'w', encoding="utf-8") as f:
        for ip in tqdm(result, desc="Content crawling", ncols=70):
            f.write(ip.strip())
            f.write("\n")


if __name__ == '__main__':
    url = "https://www.kuaidaili.com/free/inha/"
    print("-------------------Start crawling------------------------")
    # Call main function
    main(url)
    print("-------------------Crawling completed------------------------")
    print("^-^ The result is saved in 'IP.txt' in the same directory as this code ^-^")
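The regular expression in `getResult` can be tried on its own, without any network access. The sketch below applies the same pattern to a handful of made-up table cells (the sample values and the helper name `keep_ip_like` are hypothetical) and keeps only the strings that contain something shaped like an IPv4 address:

```python
import re

# Same IPv4 pattern as in getResult, written as a raw string
IPV4 = re.compile(
    r'(([01]{0,1}\d{0,1}\d|2[0-4]\d|25[0-5])\.){3}'
    r'([01]{0,1}\d{0,1}\d|2[0-4]\d|25[0-5])'
)


def keep_ip_like(cells):
    """Return the strings that contain something shaped like an IPv4 address."""
    return [c for c in cells if IPV4.search(c)]


# Hypothetical cell texts, standing in for the text nodes of a proxy table
sample_cells = ["121.13.252.60", "9999", "High anonymity", "HTTP", "10.0.0.1"]
ip_cells = keep_ip_like(sample_cells)
print(ip_cells)
```

Note that a port stored in a separate cell, such as "9999" here, is not matched, so if the page lists ports that way the saved "IP.txt" contains addresses without ports.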

I won't explain the code in much detail. If it fails the first time you run it, the most likely cause is that there is no "IP.txt" file yet.

Solution: create an "IP.txt" file in the same directory as this code, then write your local IP into it.
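If you prefer not to create the file by hand, the step can be scripted. This is only a sketch under my own assumptions: the function name `ensure_seed_file` is hypothetical, and the default seed "127.0.0.1" is a placeholder that you would replace with your own local IP.

```python
from pathlib import Path


def ensure_seed_file(path="IP.txt", seed="127.0.0.1"):
    """Create the file with one seed line if it is missing; return True if created."""
    p = Path(path)
    if p.exists():
        return False  # leave an existing file untouched
    p.write_text(seed + "\n", encoding="utf-8")  # placeholder seed, replace as needed
    return True
```

Calling `ensure_seed_file()` once before the first run of the crawler gives `ipProxies()` a line to read.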

4, Get destination URL

You can refer to this article: Python crawler crawls all the article links of a user's CSDN blog

Of course, we also give our code here. The source code is as follows. Let's go!

import codecs
import random
import sys

import requests
from bs4 import BeautifulSoup
from tqdm import tqdm


def main(url):
    # Get a random header and proxy IP
    header = head()
    ip = ipProxies()
    # Crawl the web page
    html = getURL(url, header, ip)
    # Extract content
    result = getResult(html)
    # Save results
    saveResult(result)


# Get a random IP
def ipProxies():
    with codecs.open("IP.txt", "r", encoding="utf-8") as f:
        ip = [line.strip() for line in f if line.strip()]
    IP = {'http': random.choice(ip)}
    print("The IP (proxy) used this time is:", IP['http'])
    return IP


# Get a random header
def head():
    with codecs.open("User-Agent.txt", "r", encoding="utf-8") as f:
        head = [line.strip() for line in f if line.strip()]
    header = {"User-Agent": random.choice(head)}
    print("The header (User-Agent) used this time is:", header["User-Agent"])
    return header


# Get the page (HTML)
def getURL(url, header, ip):
    html = ""
    try:
        response = requests.get(url=url, headers=header, proxies=ip)
        html = response.text
    except requests.RequestException:
        print("Something is wrong with the target URL")
    return html


# Extract the article links from the page
def getResult(html):
    result = []
    bs = BeautifulSoup(html, "html.parser")
    for link in bs.find_all('a'):
        getlink = link.get('href')
        try:
            if ("comments" not in getlink) and ("/article/details/" in getlink) and ("blogdevteam" not in getlink):
                if getlink not in result:
                    result.append(getlink)
        except TypeError:
            # link.get('href') is None for <a> tags without an href attribute
            print("Warning: found a link without an href. This is harmless, still crawling!")
            continue
    return result


# Save the crawled URLs
def saveResult(result):
    with codecs.open('url.txt', 'w', encoding='utf-8') as f:
        for link in tqdm(result, desc="Crawling on this page", ncols=70):
            f.write(link)
            f.write("\n")


# Function entry
if __name__ == '__main__':
    # Get the page that the user wants to crawl
    url = input("Please enter the URL to crawl: ")
    # Check whether the user input is valid
    if "blog.csdn.net" not in url:
        print("Please enter a valid CSDN blog home page link")
        sys.exit()
    print("-------------------Start crawling------------------------")
    # Call main function
    main(url)
    print("-------------------Crawling completed------------------------")
    print("^-^ The result is saved in 'url.txt' in the same directory as this code ^-^")
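The filtering rule inside `getResult` can also be checked in isolation. The sketch below restates the same three conditions as a small predicate and runs it on made-up links (the function names and the sample URLs are hypothetical, chosen only for illustration):

```python
def is_article_link(href):
    """Same rule as getResult: keep article pages, skip comment anchors and blogdevteam."""
    if not href:  # link.get('href') returns None for <a> tags without an href
        return False
    return ("/article/details/" in href
            and "comments" not in href
            and "blogdevteam" not in href)


def unique_article_links(hrefs):
    """Filter the hrefs and drop duplicates while keeping first-seen order."""
    seen = []
    for href in hrefs:
        if is_article_link(href) and href not in seen:
            seen.append(href)
    return seen


# Hypothetical hrefs, standing in for the <a> tags of a blog home page
sample = [
    None,
    "https://blog.csdn.net/someuser/article/details/100000001",
    "https://blog.csdn.net/someuser/article/details/100000001#comments",
    "https://blog.csdn.net/blogdevteam/article/details/100000002",
    "https://blog.csdn.net/someuser/article/details/100000001",
    "https://blog.csdn.net/someuser/category_1.html",
]
links = unique_article_links(sample)
print(links)
```

Checking for a missing `href` up front avoids the `TypeError` that the original loop catches.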

Our preparations are now complete! After running this code, your current directory should contain five files, as shown in the following figure:

If not, something went wrong in an earlier step. Don't go on until it is fixed.
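A quick way to confirm that the earlier steps worked is to check for the data files they produce. This sketch only looks at the three text files named in this article ("IP.txt", "User-Agent.txt" and "url.txt"); the function name `missing_files` is my own, and treating an empty file as missing is an assumption.

```python
from pathlib import Path

# The three data files produced or required by the previous steps
REQUIRED = ["IP.txt", "User-Agent.txt", "url.txt"]


def missing_files(folder=".", names=REQUIRED):
    """Return the names that are absent or empty in the given folder."""
    base = Path(folder)
    return [
        name for name in names
        if not (base / name).is_file() or (base / name).stat().st_size == 0
    ]
```

If `missing_files()` returns an empty list, the data files are in place. Otherwise, rerun the step that produces each file it names.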

5, Simulate access and increase traffic!

Everything is prepared. Success or failure depends on this step!

Don't worry: since this article has been published, it must have worked.

Without further ado, the code:

import codecs
import random
import time

import requests
from tqdm import tqdm


def main():
    # Get a random header and proxy IP
    header = head()
    ip = ipProxies()
    # Get the target URL: pick a random blog article
    url = getURL()
    # Access the URL
    return askURL(url, header, ip)


def ipProxies():
    # Pick a random proxy from IP.txt (one entry per line)
    with codecs.open("IP.txt", "r", encoding="utf-8") as f:
        ip = [line.strip() for line in f if line.strip()]
    IP = {'http': random.choice(ip)}
    print("The IP (proxy) used this time is:", IP['http'])
    return IP


def head():
    # Pick a random User-Agent from User-Agent.txt (one entry per line)
    with codecs.open("User-Agent.txt", "r", encoding="utf-8") as f:
        head = [line.strip() for line in f if line.strip()]
    header = {"User-Agent": random.choice(head)}
    print("The header (User-Agent) used this time is:", header["User-Agent"])
    return header


def getURL():
    # Pick a random article link from url.txt (one entry per line)
    with codecs.open("url.txt", "r", encoding="utf-8") as f:
        URL = [line.strip() for line in f if line.strip()]
    url = random.choice(URL)
    print("The link visited this time is:", url)
    return url


def askURL(url, header, ip):
    try:
        response = requests.get(url=url, headers=header, proxies=ip)
        print(response)
        # Pause between visits
        time.sleep(5)
    except requests.RequestException:
        print("This visit failed!")


if __name__ == '__main__':
    # Get the number of simulated visits
    num = int(input("Please enter the number of simulated visits: "))
    for i in tqdm(range(num), desc="Visiting", ncols=70):
        print("This is visit number " + str(i + 1))
        main()

So far, we have achieved the goal of this project! Next comes the tense and exciting verification step.

6, Verification

The final results are as follows:

Thus, the project is a success! The end!

7, Usage and conclusion

Usage is very simple. Just put all the files in one folder.

Feel free to follow and like. Get in touch with me to learn about Python, Django, crawlers, Docker, routing and switching, web security and more.