Many pages now load their data dynamically, so crawling them with Scrapy alone returns only the HTML layout of the page, not the data itself.

Scrapy is also hard for beginners to get started with, so here selenium is used instead: it opens a browser, loads the page, and the data is then read from the rendered result.

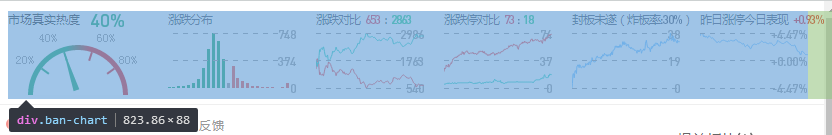

Requirement: collect the summary statistics after the market closes each day.

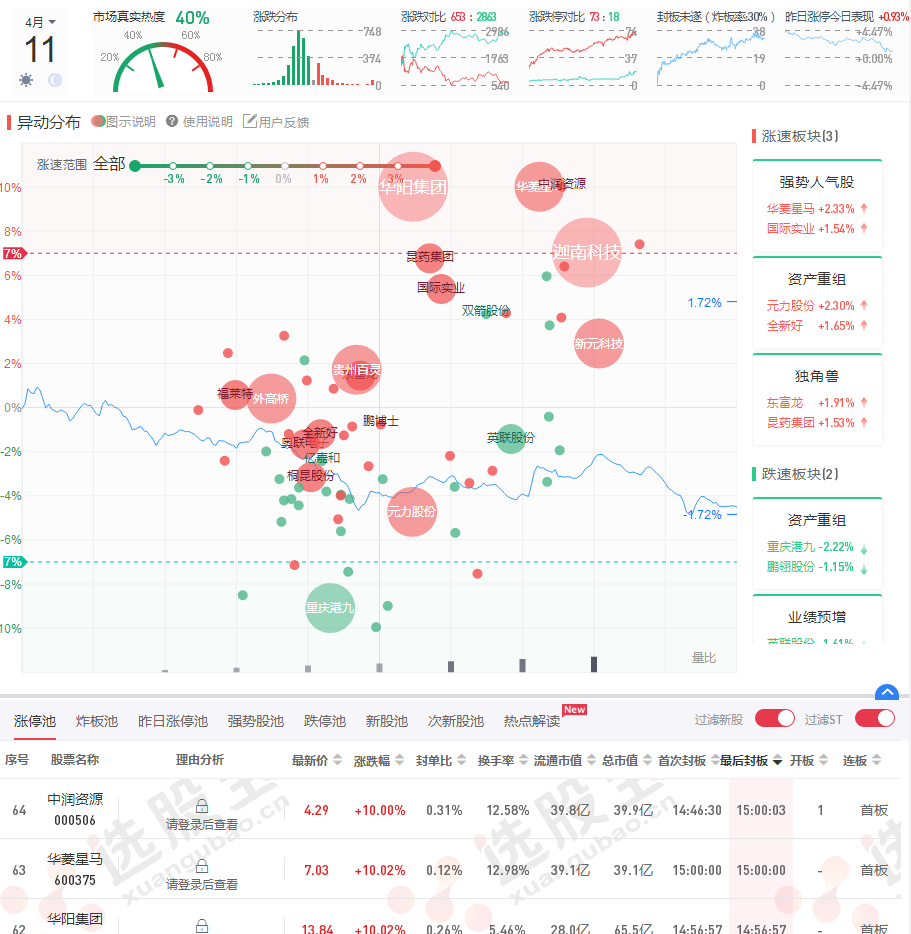

I found a website, https://xuangubao.cn/dingpan , whose page shows the data after each day's close.

Next, use selenium to crawl the summary data on the page.
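Because the data arrives after the initial page load, it helps to wait for the target element before reading the rendered HTML. The sketch below shows one way to do that with selenium's explicit waits. It is an illustration only: the function name `fetch_rendered_html` and the 10-second default timeout are assumptions made for this example, and it presumes selenium and a matching Chrome driver are already installed.

```python
def fetch_rendered_html(url, css_selector, timeout=10):
    """Open `url` in Chrome, wait for `css_selector`, return the rendered HTML."""
    # Imported here so the function can be defined before selenium is installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    browser = webdriver.Chrome()
    try:
        browser.get(url)
        # Block until at least one matching element is present; a
        # TimeoutException is raised if none appears within `timeout` seconds.
        WebDriverWait(browser, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, css_selector))
        )
        return browser.page_source
    finally:
        browser.quit()  # always release the browser, even on errors
```

A call might look like `fetch_rendered_html("https://xuangubao.cn/dingpan", "div.ban-chart span")`, where the selector is an assumption based on the page structure examined in Step 4.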

Step 1: install selenium.

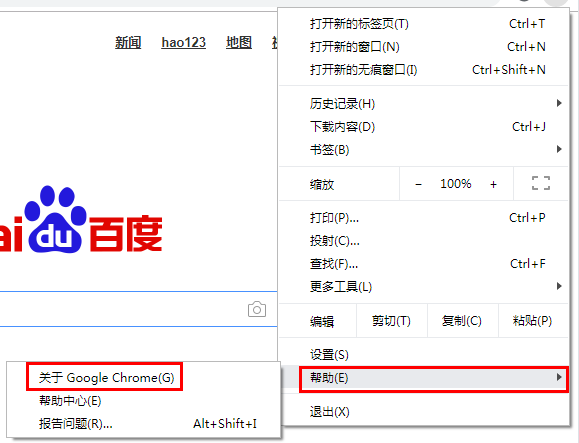

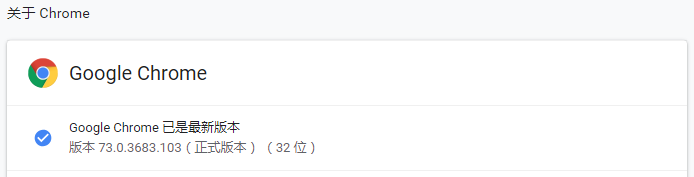

Step 2: install the driver for your browser. I use Google Chrome, so I installed ChromeDriver. Make sure the driver version matches the browser version.
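The version check can be sketched in a few lines. This is only an illustration: it assumes the usual rule that the Chrome driver's major version should match the browser's major version, and the helper names `major_version` and `versions_match` and the sample version strings are made up for this example. Recent selenium releases (4.6 and later) also ship Selenium Manager, which can usually fetch a suitable driver automatically.

```python
def major_version(version: str) -> int:
    """Return the leading (major) component of a dotted version string."""
    return int(version.strip().split(".")[0])


def versions_match(browser_version: str, driver_version: str) -> bool:
    """True when the browser and the driver share the same major version."""
    return major_version(browser_version) == major_version(driver_version)


# Sample version strings, used here for illustration only.
print(versions_match("114.0.5735.199", "114.0.5735.90"))
print(versions_match("114.0.5735.199", "113.0.5672.63"))
```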

Step 3: install beautifulsoup4 and lxml.

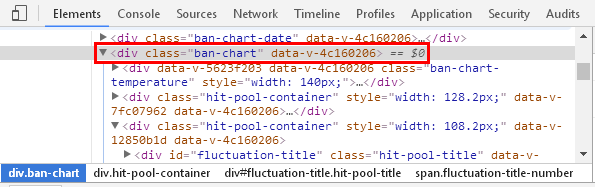
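Before moving on, it can be worth confirming that the packages from Steps 1 and 3 are importable. A small sketch, assuming the import names `selenium`, `bs4` (the import name of beautifulsoup4) and `lxml`; the helper `missing_packages` is a hypothetical name introduced here.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(["selenium", "bs4", "lxml"])
if missing:
    print("Not installed yet:", ", ".join(missing))
else:
    print("All packages are available.")
```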

Step 4: analyze the page structure.

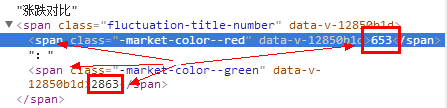

The data is in the span tags inside the div with class "ban-chart".
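To make that structure concrete, here is a minimal sketch that runs BeautifulSoup over a hand-written HTML fragment. The fragment and its values are made up for illustration and are far simpler than the real page; only the class name `ban-chart` comes from the page. The helper name `span_texts` is hypothetical, and the built-in `html.parser` backend is used so the sketch does not depend on lxml.

```python
# Hand-written stand-in for the real page; the values are invented.
SAMPLE_HTML = """
<div class="ban-chart">
  <span>Rise</span><span>2100</span>
  <span>Fall</span><span>1500</span>
</div>
<div class="other"><span>ignored</span></div>
"""


def span_texts(html, div_class="ban-chart"):
    """Return the stripped text of every span inside the first matching div."""
    # Imported here so the function can be defined even before bs4 is installed.
    from bs4 import BeautifulSoup

    soup = BeautifulSoup(html, "html.parser")
    container = soup.find("div", attrs={"class": div_class})
    if container is None:
        return []  # the layout changed or the data has not loaded yet
    return [span.get_text().strip() for span in container.find_all("span")]
```

Calling `span_texts(SAMPLE_HTML)` returns the text of the four spans in the first div and skips the span in the second one. On the real page the same lookup returns more spans, which is why the positions used to pick out individual values need to be checked against the page.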

Step 5: write the code:

# Install selenium and the Chrome driver before running this script
from selenium import webdriver
from bs4 import BeautifulSoup
import time

browser = webdriver.Chrome()
browser.get("https://xuangubao.cn/dingpan")
page = browser.page_source
soup = BeautifulSoup(page, "lxml")

# Get the date (alternative, commented out: read it from the page)
# today = soup.find("div", attrs={"class": "ban-chart-date-container"}).find_all("p")
# print(today[0].get_text().strip(), today[1].get_text().strip(), "day")
date = time.strftime("%Y-%m-%d")
print(date)

# Get the rise/fall summary; the span positions depend on the page layout
spans = soup.find("div", attrs={"class": "ban-chart"}).find_all("span")
up = spans[3].get_text().strip()
down = spans[4].get_text().strip()
limitUp = spans[6].get_text().strip()
limitDown = spans[7].get_text().strip()
bomb = spans[8].get_text().strip()
print("Rise:", up)
print("Fall:", down)
print("Limit up:", limitUp)
print("Limit down:", limitDown)
print("Failed limit-up rate:", bomb)

# Stocks with consecutive limit-ups
listCount = []  # consecutive limit-up count of each stock
guList = soup.find("table", attrs={"class": "table hit-pool__table"}).find_all("tr")
for gu in guList[1:]:  # skip the header row
    tds = gu.find_all("td")
    guName = tds[1].find_all("span")[0].get_text().strip()
    guCode = tds[1].find_all("a")[0].get_text().strip()[-6:]
    # print(guName, "(", guCode, ")", ": ", tds[12].get_text().strip())
    listCount.append(tds[12].get_text().strip())  # save the count to the list

# Show how many stocks have each consecutive limit-up count
for i in set(listCount):
    print("Value {0} appears in the list {1} times".format(i, listCount.count(i)))

browser.close()
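The positional lookups and the counting loop in the script can be made a little more defensive. The sketch below is one possible variant, not the original method: it keeps the span positions 3, 4, 6, 7 and 8 used in the script, raises a clear error when the page returns fewer spans than expected, and swaps the `set` plus `count` loop for `collections.Counter`. The names `FIELD_POSITIONS`, `summarize_spans` and `count_streaks` are hypothetical, and the sample data is invented.

```python
from collections import Counter

# Positions of the summary values among the spans, as used in the script.
FIELD_POSITIONS = {
    "rise": 3,
    "fall": 4,
    "limit_up": 6,
    "limit_down": 7,
    "failed_limit_up_rate": 8,
}


def summarize_spans(texts):
    """Map span texts to named fields, failing clearly if too few spans exist."""
    needed = max(FIELD_POSITIONS.values()) + 1
    if len(texts) < needed:
        raise ValueError(f"expected at least {needed} spans, got {len(texts)}")
    return {name: texts[pos].strip() for name, pos in FIELD_POSITIONS.items()}


def count_streaks(values):
    """Count how often each value occurs, most common first."""
    return Counter(values).most_common()


# Invented sample data, standing in for the text of the scraped spans and cells.
sample_spans = ["a", "b", "c", "2100", "1500", "d", "45", "3", "28%"]
sample_streaks = ["2", "1", "1", "3", "1", "2"]

print(summarize_spans(sample_spans))
print(count_streaks(sample_streaks))
```

With real data, `texts` would be the stripped text of the spans found in the `ban-chart` div, and `values` would be the `listCount` list built in the loop.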

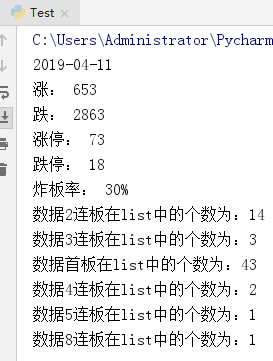

The crawled data looks like this: