0. Why choose OpenCV for reasoning

It is reasonable to use OpenVINO reasoning Suite (engine) for optimization and accelerated reasoning, or other reasoning Suites for reasoning, such as TensorRT, etc. OpenVINO It is a comprehensive tool suite launched by Intel for rapid deployment of applications and solutions. There are more than 150 CNN network structures supporting computer vision.

However, the introduction of reasoning Suite (engine) is bound to bring additional workload. In the case of low requirements for reasoning speed, using the DNN module of OpenCV is enough to meet the requirements, achieve lightweight deployment and reduce the dependence on third-party platforms.

With the change of versions, the version of OpenCV has come to 4.5.4, and its DNN module is more and more perfect.

1. Premise

#Python opencv version: 4.5.3

#The training of classification network has been completed, and the pytorch network model has been converted / saved to onnx file format.

#onnx file onnx_name = "surface_defect_model.onnx"

As for how to save as onnx, I made a record.

pytorch saves, loads the model, and saves the network model. pt as ONNIX

The input requirements of the network are:

dummy = [1, 3, 300, 300] # Arranged in the format of N C W H

The picture shall be in the most common three channel 8UC3 format.

#Introduction to network model:

The model is an image classification neural network, which is a migration training based on ResNet18. The training set is 6 different types of defect images.

# Picture category name defect_labels = ["In","Sc","Cr","PS","RS","Pa"]

Because it is a transfer learning training in pytorch, the training data sets are strictly in accordance with:

transforms.Compose([transforms.ToTensor(), transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]), transforms.Resize((300, 300)) ])

Normalize preprocessing, so when reasoning, the image must be processed in strict accordance with the same preprocessing method before feeding it to the network for reasoning, otherwise it will produce a counterproductive prediction effect.

2. Code practice

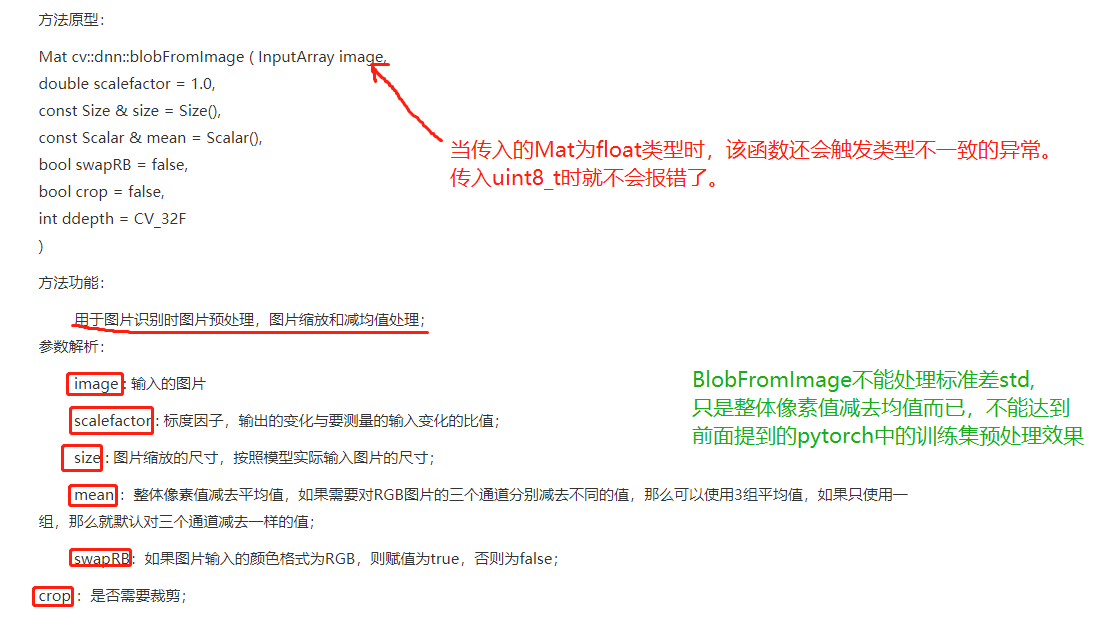

two point one cv.dnn.blobFromImage not applicable

The prototype of cv.dnn.blobFromImage is as follows:

So I realized a three channel CV_8UC3 image is converted to the effects of transforms.ToTensor() and transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]) in pytorch. It also returns N*C*W*H (N=1) shape data required by the network, which can be directly fed into the network for inference. The BlobFromNormalizeRGBImage function does not change the size of the incoming image. Its implementation is as follows:

def BlobFromNormalizeRGBImage(img, meanList, stdList):

"""

BlobFromNormalizeRGBImage(img, meanList, stdList)

. @generate a N*C*W*H blob from a RGB(CV_8UC3) picture

. * @param img : a RGB(CV_8UC3) picture ,whose pixel value arrange is 0 ~ 255.

. * @param meanList: the mean value list of RGB channels,it must contains three elements.

. when it is None,ignore it.

. * @param stdList: the standard deviation(sd) value list of RGB channels,it must contains three elements.

. when it is None,ignore it.

. * @returns a N*C*W*H blob .

"""

img = img / 255.0 # Normalized to 0 ~ 1 interval

R, G, B = cv.split(img)

if meanList is not None:

R = R - meanList[0]

G= G - meanList[1]

B = B - meanList[2]

if stdList is not None:

R = R / stdList[0]

G = G / stdList[1]

B = B / stdList[2]

# Channel merging

merged = cv.merge([R, G, B])

# print("merged.shape:",merged.shape) # merged.shape: (300, 300, 3)

merged = merged.transpose((2, 0, 1))

# print("transpose merged.shape:", merged.shape) # transpose merged.shape: (3, 300, 300)

blob = np.expand_dims(merged, 0)

#Generate the format of (1, 3, 300, 300)

# print("blob.shape:", blob.shape) # blob.shape: (1, 3, 300, 300)

return blob2.2 complete code

# import torch # It is separated from the pytorch framework and does not need to be introduced

import cv2 as cv

import numpy as np

import os

# category

defect_labels = ["In","Sc","Cr","PS","RS","Pa"]

# onnx file

onnx_name = "surface_defect_model.onnx"

print(cv.__version__)

def BlobFromNormalizeRGBImage(img, meanList, stdList):

"""

BlobFromNormalizeRGBImage(img, meanList, stdList)

. @generate a N*C*W*H blob from a RGB(CV_8UC3) picture

. * @param img : a RGB(CV_8UC3) picture ,whose pixel value arrange is 0 ~ 255.

. * @param meanList: the mean value list of RGB channels,it must contains three elements.

. when it is None,ignore it.

. * @param stdList: the standard deviation(sd) value list of RGB channels,it must contains three elements.

. when it is None,ignore it.

. * @returns a N*C*W*H blob .

"""

img = img / 255.0 # Normalized to 0 ~ 1 interval

R, G, B = cv.split(img)

if meanList is not None:

R = R - meanList[0]

G= G - meanList[1]

B = B - meanList[2]

if stdList is not None:

R = R / stdList[0]

G = G / stdList[1]

B = B / stdList[2]

# Channel merging

merged = cv.merge([R, G, B])

# print("merged.shape:",merged.shape) # merged.shape: (300, 300, 3)

merged = merged.transpose((2, 0, 1))

# print("transpose merged.shape:", merged.shape) # transpose merged.shape: (3, 300, 300)

blob = np.expand_dims(merged, 0)

#Generate the format of (1, 3, 300, 300)

# print("blob.shape:", blob.shape) # blob.shape: (1, 3, 300, 300)

return blob

def opencv_onnx_defect_infer():

#Input size of forward reasoning

dummy = [1, 3, 300, 300] # Arranged in the format of N C W H

width = dummy[2]

height = dummy[3]

# opencv dnn loading

net = cv.dnn.readNetFromONNX(onnx_name)

root_dir = "./test"

#total

total_pic_cnt = 0

# Forecast correct quantity

predict_ok_cnt = 0

fileNames = os.listdir(root_dir)

for f in fileNames:

print("=========================================================")

image = cv.imread(os.path.join(root_dir, f))

# print("original size:", image.shape)

image = cv.cvtColor(image, cv.COLOR_BGR2RGB)

image = cv.resize(image, (width, height))

# Print ("size after resize:", image.shape)

# blob = cv.dnn.blobFromImage(image,4.424778,size=None,mean=[0.485, 0.456, 0.406])

blob = BlobFromNormalizeRGBImage(image, meanList=[0.485, 0.456, 0.406],stdList=[0.229, 0.224, 0.225])

# print("BlobFromNormalizeRGBImage:",blob.shape) # (1, 3, 300, 300)

# Set the input of the model

net.setInput(blob)

out = net.forward()

# print("out.shape:",out.shape)# out.shape: (1, 6)

# Get a class with a highest score.

out = out.flatten()

# print("out. flatten shape:", out.shape)# out. flatten shape: (6,)

classId = np.argmax(out)

# print("classId:", classId)

confidence = out[classId]

# print("confidence:", confidence)

# print("class:", defect_labels[classId])

total_pic_cnt += 1

if f.find(defect_labels[classId]) >= 0:

predict_ok_cnt += 1

print("OK !!!!")

print("real class %s, infer class:%s, confidence:%f, classId:%d" % (f, defect_labels[classId], confidence,classId))

print(out)

else:

print("predict error !!!!")

print("real: %s, predict result:%s, confidence:%f, classId:%d, predict error" % (f, defect_labels[classId],confidence, classId) )

print(out)

#Statistical results

print("total_pic_cnt:%d, predict_ok_cnt:%d, rate = %0.3f" % (total_pic_cnt, predict_ok_cnt, predict_ok_cnt / total_pic_cnt))

if __name__ == "__main__":

opencv_onnx_defect_infer()