Python multitasking: processes

I. What is a process

Process: the basic unit of resource allocation. A running program is a process; it includes the program being executed together with the memory and other system resources the program uses. The operating system allocates CPU time to processes in the form of time slices.

A process is made up of one or more threads.

Thread: the smallest unit of program execution and the basic unit that the system schedules independently onto the CPU. A thread is a single flow of execution within a program. Each thread has its own registers (stack pointer, program counter, etc.) and its own stack, but the code area is shared, so different threads can execute the same function. A process carries out its tasks through its threads, and every process has at least one thread.

Program: for example, a file such as xxx.py is a program. A program is static; it only becomes a process when it is run.
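Any running Python script is itself such a process, with at least one thread. The minimal sketch below uses only the standard os and threading modules to report the current process ID and the name of the thread executing the code; the helper name describe_current_process is hypothetical.

```python
import os
import threading


def describe_current_process():
    """Hypothetical helper: return this process's ID and the current thread's name."""
    pid = os.getpid()                              # ID the OS assigned to this process
    thread_name = threading.current_thread().name  # "MainThread" in a plain script
    return pid, thread_name


if __name__ == "__main__":
    pid, thread_name = describe_current_process()
    print("process id:", pid)
    print("running in thread:", thread_name)
    print("threads alive in this process:", threading.active_count())
```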

II. The life cycle of a process

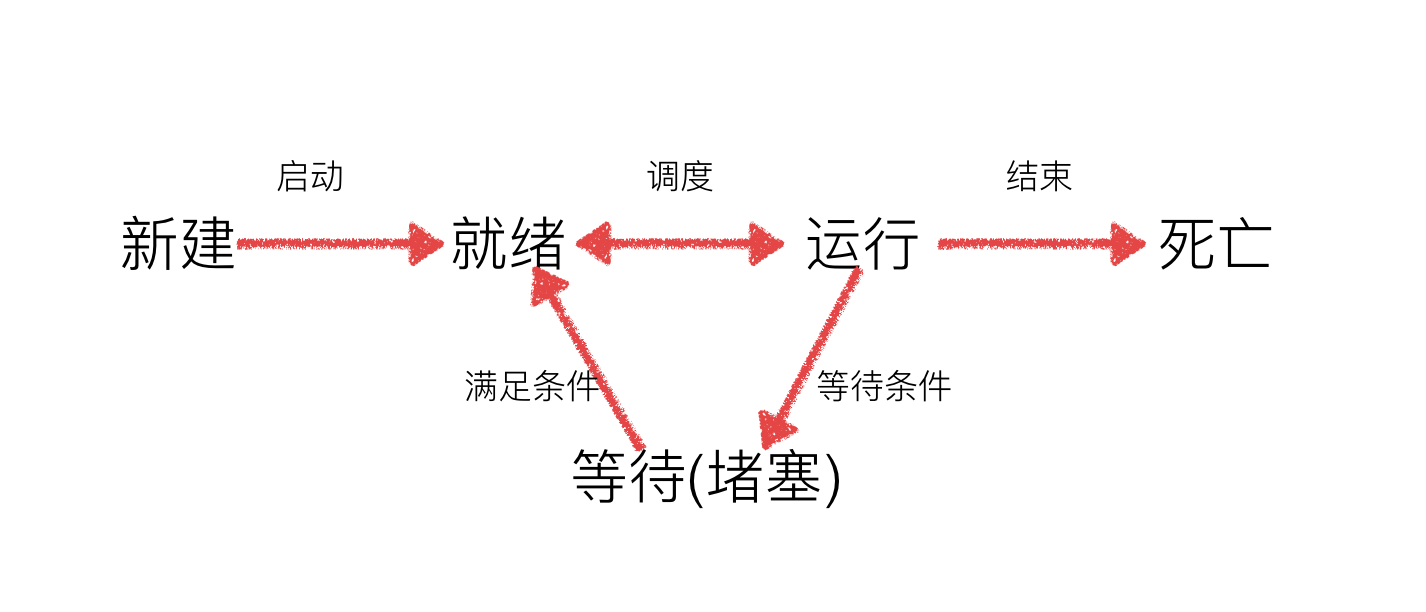

1 Process states

- Ready: the process has everything it needs to run and is waiting for the CPU

- Running: the CPU is executing the process

- Waiting (blocked): the process is waiting for some condition to be met; for example, a program that calls sleep is in the waiting state
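The transitions between these states can be written down as a small table. This is a toy model of the classic three-state diagram, not how a real operating-system scheduler is implemented; the names State, TRANSITIONS and move are made up for illustration.

```python
from enum import Enum


class State(Enum):
    READY = "ready"      # waiting for the CPU
    RUNNING = "running"  # currently executing on the CPU
    WAITING = "waiting"  # blocked until some condition is met (I/O, sleep, ...)


# Allowed transitions in the three-state model, with the usual cause of each
TRANSITIONS = {
    (State.READY, State.RUNNING): "scheduled onto the CPU",
    (State.RUNNING, State.READY): "time slice used up",
    (State.RUNNING, State.WAITING): "waits for a condition, e.g. time.sleep()",
    (State.WAITING, State.READY): "condition met, back in the ready queue",
}


def move(current, target):
    """Return the new state, or raise ValueError if the transition is not allowed."""
    if (current, target) not in TRANSITIONS:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target
```

For example, move(State.WAITING, State.RUNNING) raises ValueError: a waiting process must first become ready and then be scheduled again.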

III. Characteristics, advantages and disadvantages of processes

1 Comparison of processes and threads

Definitions

- A process is the independent unit of resource allocation and scheduling in the system.

- A thread is an entity within a process and the basic unit of CPU scheduling and dispatching; it is a smaller unit than a process that can still run independently. A thread owns almost no system resources of its own, only what it needs while running (such as a program counter, a set of registers and a stack), but it shares all the resources owned by its process with the other threads of the same process.

Differences

- A program has at least one process, and a process has at least one thread.

- Threads are a finer-grained unit than processes (they use fewer resources), so multithreaded programs can achieve a high degree of concurrency.

- Each process has its own separate memory space while it runs, whereas the threads of a process share memory, so they can work on the same data without copying it, which makes the program more efficient.

2 Advantages and disadvantages

Threads and processes each have their own advantages and disadvantages: threads are cheap to create and run, but because they share resources they make resource management and protection harder; processes are the opposite.

IV. Using multiple processes in Python

1 Basic usage of multiprocessing

```python
from multiprocessing import Process
import time


def process1():
    for i in range(10):
        time.sleep(1)
        print("process%s1:" % ("-" * 5), i)
    print("process%s1Done" % ("-" * 5))


if __name__ == '__main__':
    p = Process(target=process1)
    p.start()  # the child runs process1() while the main process continues below

    for i in range(10):
        time.sleep(1)
        print("process%smain:" % ("-" * 5), i)
    print("process%smainDone" % ("-" * 5))
```
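The main process above never waits for the child explicitly (Python still joins non-daemon children when the interpreter exits). A minimal sketch of the more explicit pattern, assuming a short hypothetical task work(): call join() to wait for the child, then read exitcode, which is 0 when the target function returned normally.

```python
import time
from multiprocessing import Process


def work(n):
    """Hypothetical task: sleep briefly n times."""
    for i in range(n):
        time.sleep(0.1)
        print("child step", i)


def run_and_wait(n):
    p = Process(target=work, args=(n,))
    p.start()          # the child starts running concurrently with the parent
    p.join()           # block here until the child has finished
    return p.exitcode  # 0 means the target function returned normally


if __name__ == "__main__":
    print("child exit code:", run_and_wait(3))
```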

2 Getting the process ID

```python
from multiprocessing import Process
import time
import os


def process1():
    for i in range(10):
        time.sleep(1)
        print("process%s1:" % ("-" * 5), i, [os.getpid()])  # obtain the process id with the os module
    print("process%s1Done" % ("-" * 5))


if __name__ == '__main__':
    p = Process(target=process1)
    p.start()

    for i in range(10):
        time.sleep(1)
        print("process%smain:" % ("-" * 5), i, [os.getpid()])  # obtain the process id with the os module
    print("process%smainDone" % ("-" * 5))
```
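Besides its own ID, a process can ask for its parent's ID with os.getppid(). In the sketch below (function names are hypothetical) the child sends both values back through a multiprocessing.Queue, which is covered in section 6. The child's pid should match p.pid in the parent. With the fork and spawn start methods the child's parent is the main process; with the 'forkserver' start method it is the fork server process instead, so the sketch only prints that value.

```python
import os
from multiprocessing import Process, Queue


def report_ids(q):
    # Runs in the child: send back our own pid and our parent's pid
    q.put((os.getpid(), os.getppid()))


def child_and_parent_ids():
    q = Queue()
    p = Process(target=report_ids, args=(q,))
    p.start()
    child_pid, parent_pid = q.get(timeout=10)  # read the data before join()
    p.join()
    return p.pid, child_pid, parent_pid


if __name__ == "__main__":
    pid_attr, child_pid, parent_pid = child_and_parent_ids()
    print("p.pid:", pid_attr, "| pid seen by the child:", child_pid)
    print("child's parent:", parent_pid, "| main process:", os.getpid())
```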

3 Syntax and common methods

Process constructor:

Process(group=None, target=None, name=None, args=(), kwargs={}, *, daemon=None)

- target: the callable (usually a function reference) that the child process runs

- args: a tuple of positional arguments passed to the target function

- kwargs: a dict of keyword arguments passed to the target function

- name: a name for the process; optional

- group: should always be None; it exists only for compatibility with threading.Thread

Common methods of Process instances:

- start(): starts the child process

- is_alive(): returns whether the child process is still running

- join([timeout]): waits until the child process has finished, or for at most timeout seconds

- terminate(): terminates the child process immediately, whether or not its task has finished

Common attributes of Process instances:

- name: the name of the process. The default is Process-N, where N is an integer counting up from 1

- pid: the process ID (None until the process has been started)

- daemon: the daemon flag, False by default. It must be set before start() (or passed to the constructor). When it is True, the child is terminated automatically when the main process exits, no matter how far its task has got

```python
from multiprocessing import Process
import time
import os


def process1():
    for i in range(10):
        time.sleep(1)
        print("process%s1:" % ("-" * 5), i, [os.getpid()])
    print("process%s1Done" % ("-" * 5))


if __name__ == '__main__':
    p = Process(target=process1)
    p.start()
    print("Child process name: %s; process id: %d" % (p.name, p.pid))
    print("Is the child process alive: %s" % p.is_alive())

    # p.join()  # block here until the child finishes (a timeout in seconds can be given)

    # Stop the child immediately, whether or not its task has finished.
    # Termination takes a moment, so is_alive() may still be True right after terminate();
    # join() waits until the child has really exited.
    p.terminate()
    p.join()

    print("Child process name: %s; process id: %d" % (p.name, p.pid))
    print("Is the child process alive: %s" % p.is_alive())

    # for i in range(10):
    #     time.sleep(1)
    #     print("process%smain:" % ("-" * 5), i, [os.getpid()])

    print("process%smainDone" % ("-" * 5))
```
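The attributes and methods listed in this section can be combined in one short run. A sketch, assuming a hypothetical never-ending task sleep_forever(): it shows that pid is None before start(), that join(timeout) simply returns when the timeout expires, and that join() after terminate() waits for the child to disappear more reliably than a fixed sleep. On POSIX, exitcode is the negative signal number when the child was killed by a signal (for example -15 for SIGTERM).

```python
import time
from multiprocessing import Process


def sleep_forever():
    # Hypothetical long-running task
    while True:
        time.sleep(0.1)


def lifecycle_demo():
    p = Process(target=sleep_forever, name="worker-A", daemon=True)
    info = {"name": p.name, "pid_before_start": p.pid}  # pid is None until start()
    p.start()
    p.join(timeout=0.5)                         # waits at most 0.5 s, then returns
    info["alive_after_timeout"] = p.is_alive()  # still running: join() only timed out
    p.terminate()                               # stop the child now
    p.join()                                    # wait until it has actually exited
    info["alive_after_terminate"] = p.is_alive()
    info["exitcode"] = p.exitcode               # non-zero, e.g. -15 on POSIX
    return info


if __name__ == "__main__":
    print(lifecycle_demo())
```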

4 Passing arguments to the child process's target function

```python
from multiprocessing import Process
import time
import os


def process1(name, age, **kwargs):
    for i in range(10):
        time.sleep(1)
        print("process%s1:" % ("-" * 5), i, [os.getpid()])
        print(name, age, kwargs)
    print("process%s1Done" % ("-" * 5))


if __name__ == '__main__':
    # args is a tuple of positional arguments, kwargs a dict of keyword arguments
    p = Process(target=process1, args=("chen", 18), kwargs={"key1": "value1", "key2": "value2"})
    p.start()
    print("Child process name: %s; process id: %d" % (p.name, p.pid))
    print("Is the child process alive: %s" % p.is_alive())

    # p.join()       # block here until the child finishes (a timeout in seconds can be given)
    # p.terminate()  # stop the child immediately, whether or not its task has finished

    time.sleep(1)
    print("Child process name: %s; process id: %d" % (p.name, p.pid))
    print("Is the child process alive: %s" % p.is_alive())

    # for i in range(10):
    #     time.sleep(1)
    #     print("process%smain:" % ("-" * 5), i, [os.getpid()])

    print("process%smainDone" % ("-" * 5))
```

5 Global variables are not shared between processes

```python
from multiprocessing import Process

test_list = [1, 2, 3, 4, 5]


def process1(name, test_list):
    test_list.append(name)  # modifies this child's own copy of the list
    print(id(test_list), name, ":", test_list)
    print("process%s1Done" % ("-" * 5))


if __name__ == '__main__':
    p1 = Process(target=process1, args=("chen", test_list))
    p1.start()
    p2 = Process(target=process1, args=("jun", test_list))
    p2.start()
    p3 = Process(target=process1, args=("ming", test_list))
    p3.start()
    p1.join()
    p2.join()
    p3.join()
    print(id(test_list), "main:", test_list)
    print("process%smainDone" % ("-" * 5))

"""
Sample output (id values differ on every machine; with the 'fork' start method the
children may even print the same id as the main process, but each child still
appends to its own copy of the list):

44719880 chen : [1, 2, 3, 4, 5, 'chen']
process-----1Done
44916488 jun : [1, 2, 3, 4, 5, 'jun']
process-----1Done
44392200 ming : [1, 2, 3, 4, 5, 'ming']
process-----1Done
44107272 main: [1, 2, 3, 4, 5]
process-----mainDone
"""
```

Comparison with threads

```python
from threading import Thread

test_list = [1, 2, 3, 4, 5]


def process1(name, test_list):
    test_list.append(name)  # every thread appends to the same list object
    print(id(test_list), name, ":", test_list)
    print("process%s1Done" % ("-" * 5))


if __name__ == '__main__':
    p1 = Thread(target=process1, args=("chen", test_list))
    p1.start()
    p2 = Thread(target=process1, args=("jun", test_list))
    p2.start()
    p3 = Thread(target=process1, args=("ming", test_list))
    p3.start()
    p1.join()
    p2.join()
    p3.join()
    print(id(test_list), "main:", test_list)
    print("process%smainDone" % ("-" * 5))

"""
Sample output (the id value differs on every machine, but it is the same on every line):

44006600 chen : [1, 2, 3, 4, 5, 'chen']
process-----1Done
44006600 jun : [1, 2, 3, 4, 5, 'chen', 'jun']
process-----1Done
44006600 ming : [1, 2, 3, 4, 5, 'chen', 'jun', 'ming']
process-----1Done
44006600 main: [1, 2, 3, 4, 5, 'chen', 'jun', 'ming']
process-----mainDone
"""
```

Another example

```python
from multiprocessing import Process

g_num = 20


def work1(num):
    global g_num  # refers to this process's own copy of g_num
    for i in range(num):
        g_num += 1
    print("----in work1, g_num is %d---" % g_num)


def work2(num):
    global g_num
    for i in range(num):
        g_num += 1
    print("----in work2, g_num is %d---" % g_num)


if __name__ == '__main__':  # code that starts processes must sit under this guard (required by the spawn start method, e.g. on Windows)
    print("---Before process creation g_num is %d---" % g_num)
    t1 = Process(target=work1, args=(1000000,))
    t1.start()
    t2 = Process(target=work2, args=(1000000,))
    t2.start()
    t1.join()  # wait for both children instead of sleeping for a fixed time
    t2.join()
    print("Final result after two processes operated on the same global variable: %s" % g_num)

"""
---Before process creation g_num is 20---
----in work1, g_num is 1000020---
----in work2, g_num is 1000020---
Final result after two processes operated on the same global variable: 20
"""
```

6 Inter-process communication: Queue

When you create a Queue object (for example, q = Queue()), you may pass the maximum number of messages it can hold. If the argument is omitted, zero or negative, there is no practical upper limit on the number of messages (apart from available memory). The queue.Empty and queue.Full exceptions mentioned below are defined in the standard library's queue module. Common methods:

- Queue.qsize(): returns the number of messages currently in the queue. The number is only approximate while other processes are using the queue, and on some platforms (such as macOS) the method raises NotImplementedError;

- Queue.empty(): returns True if the queue is empty, otherwise False;

- Queue.full(): returns True if the queue is full, otherwise False;

- Queue.get([block[, timeout]]): removes a message from the queue and returns it. block defaults to True;

  - 1) If block keeps its default value and no timeout (in seconds) is set, a call on an empty queue blocks (waits in the reading state) until a message can be read. If timeout is set, it waits at most timeout seconds and raises queue.Empty if no message has arrived by then;

  - 2) If block is False and the queue is empty, queue.Empty is raised immediately;

- Queue.get_nowait(): equivalent to q.get(False);

- Queue.put(item[, block[, timeout]]): writes item to the queue. block defaults to True;

  - 1) If block keeps its default value and no timeout (in seconds) is set, a call on a full queue blocks (waits in the writing state) until space becomes free. If timeout is set, it waits at most timeout seconds and raises queue.Full if there is still no space;

  - 2) If block is False and there is no free space in the queue, queue.Full is raised immediately;

- Queue.put_nowait(item): equivalent to q.put(item, False);
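The blocking rules above can be checked within a single process. A sketch using only the calls just listed (the function name queue_limits_demo is hypothetical): put_nowait() on a full queue raises queue.Full at once, and get() with a timeout raises queue.Empty when nothing arrives. The messages are read with get(timeout=...) rather than get_nowait() because multiprocessing.Queue hands items to a background feeder thread, so a get_nowait() issued right after put() can raise queue.Empty even though an item is on its way.

```python
import queue  # the Full / Empty exceptions live in the standard queue module
from multiprocessing import Queue


def queue_limits_demo():
    q = Queue(2)               # holds at most 2 messages
    q.put_nowait("a")
    q.put_nowait("b")
    try:
        q.put_nowait("c")      # same as q.put("c", False)
        full_raised = False
    except queue.Full:
        full_raised = True     # no space: raised immediately
    items = [q.get(timeout=1), q.get(timeout=1)]
    try:
        q.get(timeout=0.2)     # waits 0.2 s, then gives up
        empty_raised = False
    except queue.Empty:
        empty_raised = True
    return full_raised, items, empty_raised


if __name__ == "__main__":
    print(queue_limits_demo())  # (True, ['a', 'b'], True)
```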

```python
from multiprocessing import Process, Queue

g_num = 20


def work1(q, num):
    g_num = 0  # local variable; the shared value travels through the queue
    for i in range(num):
        g_num = q.get()  # take the single value out (blocks while the other process holds it)
        g_num += 1
        q.put(g_num)     # put the updated value back
    print("----in work1, g_num is %d---" % g_num)


def work2(q, num):
    g_num = 0
    for i in range(num):
        g_num = q.get()
        g_num += 1
        q.put(g_num)
    print("----in work2, g_num is %d---" % g_num)


if __name__ == '__main__':  # code that starts processes must sit under this guard (required by the spawn start method, e.g. on Windows)
    q = Queue(3)  # a Queue that can hold at most 3 messages
    print("---Before process creation g_num is %d---" % g_num)
    q.put(g_num)  # exactly one value circulates, so only one process can update it at a time
    t1 = Process(target=work1, args=(q, 100000))
    t1.start()
    t2 = Process(target=work2, args=(q, 100000))
    t2.start()
    t1.join()  # wait for both workers instead of busy-waiting on is_alive()
    t2.join()
    g_num = q.get()
    print("Final result after two processes operated on the same value: %s" % g_num)

"""
---Before process creation g_num is 20---
----in work2, g_num is 200002---
----in work1, g_num is 200020---
Final result after two processes operated on the same value: 200020
"""
```

7 Process pool: Pool

When only a few child processes are needed, you can create them directly with multiprocessing.Process. If there are hundreds or even thousands of tasks, however, creating a process for each one by hand is a lot of work; in that case you can use the Pool class provided by the multiprocessing module.

When creating a Pool you specify the maximum number of worker processes (if you omit it, os.cpu_count() is used). The pool starts that many workers and hands them the submitted tasks: if a worker is free, a new task runs right away; if all workers are busy, the task waits until one of them finishes its current task, and that same worker process is then reused to run the new task instead of a new process being created.
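Worker reuse is easy to observe. A sketch, using Pool.map (a standard Pool method not covered in this article) and a hypothetical task which_worker() that returns the ID of the process running it: twelve tasks sent to a pool of two workers should report at most two distinct process IDs, none of them the main process.

```python
import os
from multiprocessing import Pool


def which_worker(_):
    # Report which worker process ran this task
    return os.getpid()


def pool_pids(num_tasks, num_workers):
    with Pool(num_workers) as pool:  # leaving the block shuts the pool down
        return pool.map(which_worker, range(num_tasks))


if __name__ == "__main__":
    pids = pool_pids(num_tasks=20, num_workers=3)
    print(len(pids), "tasks ran in", len(set(pids)), "distinct worker processes")
```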

Common methods of multiprocessing.Pool:

- apply_async(func[, args[, kwds]]): submits func to the pool without blocking and immediately returns an AsyncResult object, so several tasks can run in parallel (the blocking variant, apply(), waits for each call to finish before the next one starts). args is a tuple of positional arguments for func, and kwds is a dict of keyword arguments;

- close(): closes the pool so that it accepts no new tasks; the workers exit once all submitted tasks are done;

- terminate(): stops the worker processes immediately, whether or not their tasks are finished. The difference between close() and terminate() is that close() lets the workers finish the outstanding tasks before the pool shuts down, while terminate() shuts it down at once;

- join(): blocks the main process until the worker processes have exited. It must be called after close() or terminate();
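apply_async() returns an AsyncResult, and calling its get() method returns the value produced by the function (or re-raises the exception it raised). A minimal sketch with a hypothetical square() task that submits several tasks, closes and joins the pool as described above, and then collects the results in submission order.

```python
from multiprocessing import Pool


def square(x):
    return x * x


def squares_async(values, workers=3):
    pool = Pool(workers)
    # apply_async returns an AsyncResult immediately; the work happens in the pool
    async_results = [pool.apply_async(square, (v,)) for v in values]
    pool.close()  # no more tasks may be submitted
    pool.join()   # wait for the workers to finish the queued tasks and exit
    # get() returns each function's return value (or re-raises its exception)
    return [r.get() for r in async_results]


if __name__ == "__main__":
    print(squares_async(range(6)))  # [0, 1, 4, 9, 16, 25]
```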

Note: to share a queue with tasks running in a Pool, create it with multiprocessing.Manager().Queue() instead of multiprocessing.Queue(); passing a multiprocessing.Queue() to a pool task fails with the following error:

RuntimeError: Queue objects should only be shared between processes through inheritance.

```python
import time
from multiprocessing import Pool, Manager

g_num = 20


def work(q, num, time_num):
    start_time = time.time()
    g_num = 0  # local variable; the shared value travels through the queue
    for i in range(num):
        g_num = q.get()
        g_num += 1
        q.put(g_num)
    end_time = time.time()
    print("----in work%d, g_num is %d---Time consuming:%0.3f" % (time_num, g_num, end_time - start_time))


if __name__ == '__main__':  # code that starts processes must sit under this guard
    manager = Manager()
    q = manager.Queue(3)  # a Manager queue holding at most 3 messages; it can be passed to Pool tasks
    print("---Before process creation g_num is %d---" % g_num)
    q.put(g_num, False)

    pool = Pool(3)  # 3 worker processes
    for i in range(10):
        result = pool.apply_async(work, (q, 1000, i))  # returns an AsyncResult immediately
        print(result)
    print(pool._cache)  # private attribute (implementation detail): tasks not finished yet

    # pool.terminate()  # stop the workers immediately, abandoning unfinished tasks
    pool.close()  # accept no more tasks; workers exit after finishing the queued ones
    pool.join()   # wait for the workers to exit; must be called after close() or terminate()

    print(result.successful())  # True if the last task finished without raising (ValueError if not finished)
    print("-" * 150)
    print(pool._cache)  # should be empty now that every task has finished

    g_num = q.get()
    print("The final result of all processes operating on the same value is: %s" % g_num)
```