Contents

1. Usage scenario of queue class

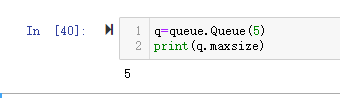

2. queue class

2.1 queue class constructor

2.2 queue class attributes and methods

3. queue.Queue usage scenario

3.1 creating queues

3.2 use empty(), full() and qsize() to check the queue status

3.3 use put() and put_nowait() to insert into the queue

3.4 use get() and get_nowait() to read from the queue

3.5 viewing the three shared variables of the queue

4. Demonstrations of the queue class

4.1 classic producer-consumer model

4.2 showing the order of the queue more clearly

Python multithreaded programming series

Python multithreaded programming - 01 threading module

Python multithreaded programming - 02 threading module - use of locks

Python multithreaded programming - 03 threading module - Condition

Python multithreaded programming - 04 threading module - Event

Python multithreaded programming - 05 threading module - Semaphore and BoundedSemaphore

Python multithreaded programming - 06 threading module - Timer

Python multithreaded programming - 07 threading module - Barrier

Python multithreaded programming - 08 threading review

1. Usage scenario of queue class

The Queue class in the queue module (the module is named Queue in Python 2) is the most classic class of the module. It implements a FIFO (first in, first out) queue: the first task added is the first task retrieved, which mirrors one of the most familiar scenes in daily life, waiting in line.
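A minimal sketch of the FIFO behaviour described above, using only the standard queue module; the helper name fifo_order is hypothetical and exists only for this illustration:

```python
import queue

def fifo_order(items):
    """Hypothetical helper: put every item into a Queue, then read them all back."""
    q = queue.Queue()
    for item in items:
        q.put(item)
    # get() hands items back in the same order they were put in
    return [q.get() for _ in range(len(items))]

print(fifo_order(["first", "second", "third"]))  # ['first', 'second', 'third']
```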

2. queue class

2.1 queue class constructor

queue.Queue(maxsize=0)

Constructor of a FIFO queue. maxsize is an integer that sets the upper bound on the number of items that can be placed in the queue. Once this size is reached, insertion blocks until items are consumed. If maxsize is less than or equal to 0, the queue size is infinite.
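To see the effect of maxsize, the sketch below inserts items without blocking (put_nowait() raises queue.Full instead of waiting) and reports how many fit. The helper fill_until_full is hypothetical; maxsize and full() are the standard attribute and method listed in the table below.

```python
import queue

def fill_until_full(maxsize, attempts):
    """Hypothetical helper: insert up to `attempts` items without blocking."""
    q = queue.Queue(maxsize=maxsize)
    inserted = 0
    for i in range(attempts):
        try:
            q.put_nowait(i)  # raises queue.Full instead of blocking
            inserted += 1
        except queue.Full:
            break
    return q.maxsize, inserted, q.full()

print(fill_until_full(2, 10))   # (2, 2, True): bounded queue stops at 2 items
print(fill_until_full(0, 10))   # (0, 10, False): maxsize <= 0 means unbounded
print(fill_until_full(-1, 10))  # (-1, 10, False)
```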

2.2 queue class attributes and methods

| No. | Attribute / method | Description |
| --- | --- | --- |
| 1 | Attribute all_tasks_done | A shared variable, a threading.Condition object. join() waits on it until all tasks are done. |
| 2 | Method empty() | Returns a Boolean indicating whether the queue is empty. |
| 3 | Method full() | Returns a Boolean indicating whether the queue is full. |
| 4 | Method get(block=True, timeout=None) | Removes and returns an item from the queue. If the queue is empty and block is False, queue.Empty is raised immediately. If block is True, the call waits: timeout must be None or a non-negative number. None means wait indefinitely, 0 means do not wait, and a positive number n means wait at most n seconds and then raise queue.Empty if nothing could be read. |
| 5 | Method get_nowait() | Equivalent to get(False). |
| 6 | Method join() | Blocks until every item put into the queue has been retrieved and task_done() has been called for it. |
| 7 | Attribute maxsize | Maximum length of the queue. |
| 8 | Attribute mutex | A mutex (threading.Lock). Any operation that reads the queue state (empty(), qsize(), etc.) or modifies its contents (get(), put(), etc.) must hold it. acquire() obtains the lock and release() releases it. The mutex is shared by the three Condition variables, so acquiring or releasing any of them actually acquires or releases the mutex. |
| 9 | Attribute not_empty | A shared variable, a threading.Condition object. get() waits on it while the queue is empty. |
| 10 | Attribute not_full | A shared variable, a threading.Condition object. put() waits on it while the queue is full. |
| 11 | Method put(item, block=True, timeout=None) | Puts item into the queue. If block is true (the default) and timeout is None, blocks until a free slot is available. If timeout is a positive number, blocks at most timeout seconds and then raises queue.Full. If block is False, raises queue.Full immediately when the queue is full. |
| 12 | Method put_nowait(item) | Equivalent to put(item, False). |
| 13 | Method qsize() | Returns the approximate size of the queue. |
| 14 | Attribute queue | A collections.deque object holding the items. |
| 15 | Method task_done() | Indicates that a previously retrieved item has been processed. join() relies on this method. |
| 16 | Attribute unfinished_tasks | Each time an item is put into the queue, the count of unfinished tasks (the unfinished_tasks attribute of the Queue object) increases by 1. Each time task_done() is called, the count decreases by 1. When it drops to 0, join() stops blocking. |
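The interplay of put(), task_done(), join() and unfinished_tasks from the table can be sketched as follows. The helper process_all and its worker are hypothetical; all items are queued before the worker starts, so the worker can simply drain the queue with get_nowait().

```python
import queue
import threading

def process_all(items):
    """Hypothetical helper: one worker doubles each item; join() waits for it."""
    q = queue.Queue()
    results = []
    for item in items:
        q.put(item)
    pending = q.unfinished_tasks  # incremented once per put()

    def worker():
        while True:
            try:
                item = q.get_nowait()
            except queue.Empty:
                return
            results.append(item * 2)
            q.task_done()  # decrements unfinished_tasks

    threading.Thread(target=worker, daemon=True).start()
    q.join()  # blocks until unfinished_tasks drops to 0
    return pending, q.unfinished_tasks, results

print(process_all([1, 2, 3]))  # (3, 0, [2, 4, 6])
```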

3. queue.Queue usage scenario

3.1 creating queues

First, create an unbounded or bounded FIFO queue:

import queue

q = queue.Queue(maxsize=0)  # Infinite FIFO queue
q = queue.Queue()  # Infinite FIFO queue
q = queue.Queue(maxsize=5)  # FIFO queue with a maximum length of 5

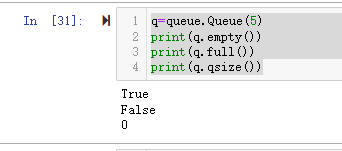

3.2 use empty(), full() and qsize() to check the queue status

Use empty(), full() and qsize() to check whether the queue is empty or full and how many items it currently holds:

import queue

q = queue.Queue(5)
print(q.empty())
print(q.full())
print(q.qsize())

Operation results:

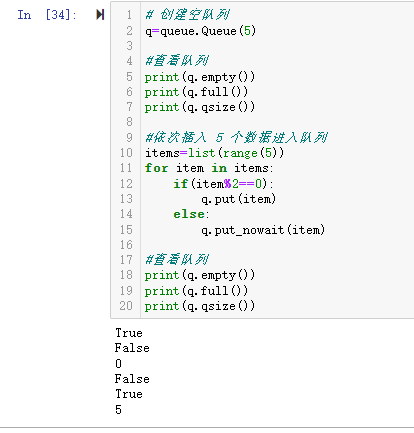
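The Python documentation notes that empty(), full() and qsize() only return a snapshot: in multithreaded code another thread may change the queue between the check and the next call. A common pattern is therefore to attempt the operation and handle the exception. A minimal sketch; the helper name drain is hypothetical:

```python
import queue

def drain(q):
    """Hypothetical helper: remove and return every item currently in q without blocking."""
    items = []
    while True:
        try:
            # Try the operation directly instead of checking q.empty() first
            items.append(q.get_nowait())
        except queue.Empty:
            return items

q = queue.Queue()
for n in range(3):
    q.put(n)
print(drain(q))   # [0, 1, 2]
print(q.empty())  # True
```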

3.3 use put() and put_nowait() to insert into the queue

import queue

# Create an empty queue with a maximum length of 5
q = queue.Queue(5)
# View the queue
print(q.empty())
print(q.full())
print(q.qsize())
# Insert five items into the queue in turn
items = list(range(5))
for item in items:
    if item % 2 == 0:
        q.put(item)
    else:
        q.put_nowait(item)
# View the queue again
print(q.empty())
print(q.full())
print(q.qsize())

The output is as follows:

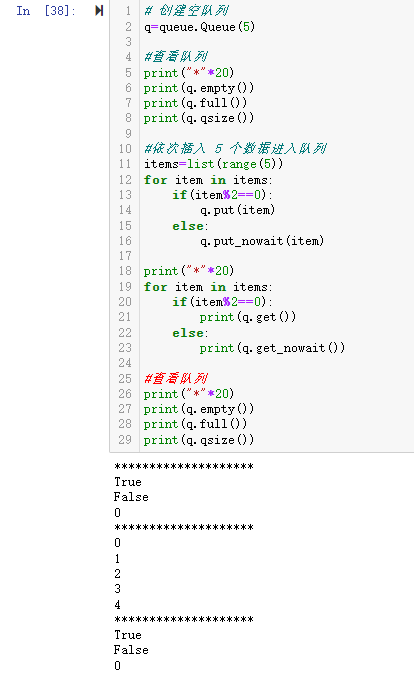
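The example above never overfills the queue. The sketch below shows what the table says happens when it does: put_nowait() fails at once with queue.Full, while put() with a timeout waits first and then raises queue.Full. The 0.2-second timeout is an arbitrary value chosen for the illustration.

```python
import queue
import time

q = queue.Queue(maxsize=2)
q.put(1)
q.put_nowait(2)  # the queue is now full

try:
    q.put_nowait(3)  # same as q.put(3, False): fails immediately
except queue.Full:
    print("put_nowait: queue.Full")

start = time.monotonic()
try:
    q.put(3, timeout=0.2)  # waits up to 0.2 s for a free slot
except queue.Full:
    waited = time.monotonic() - start
    print("put with timeout: queue.Full after about {:.1f} s".format(waited))

print(q.qsize())  # 2
```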

3.4 use get() and get_nowait() to read from the queue

import queue

# Create an empty queue with a maximum length of 5
q = queue.Queue(5)
# View the queue
print("*" * 20)
print(q.empty())
print(q.full())
print(q.qsize())
# Insert five items into the queue in turn
items = list(range(5))
for item in items:
    if item % 2 == 0:
        q.put(item)
    else:
        q.put_nowait(item)
# Read the five items back in FIFO order
print("*" * 20)
for item in items:
    if item % 2 == 0:
        print(q.get())
    else:
        print(q.get_nowait())
# View the queue again
print("*" * 20)
print(q.empty())
print(q.full())
print(q.qsize())

The output is as follows:

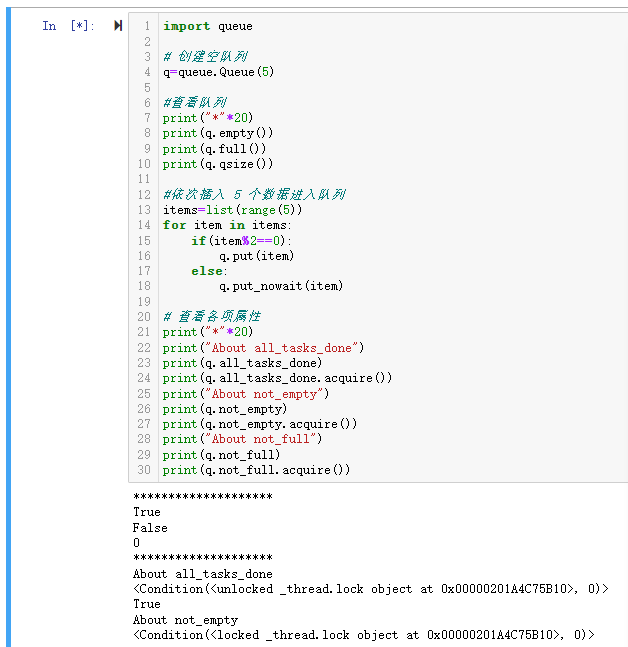
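Reading from an empty queue follows the rules in the table for get(): get_nowait() raises queue.Empty at once, get() with timeout=0 does not wait, and a negative timeout is rejected with ValueError. A small sketch that records each outcome:

```python
import queue

q = queue.Queue()
outcomes = []

try:
    q.get_nowait()  # same as q.get(False)
except queue.Empty:
    outcomes.append("get_nowait -> Empty")

try:
    q.get(timeout=0)  # block=True but timeout=0: do not wait
except queue.Empty:
    outcomes.append("get(timeout=0) -> Empty")

try:
    q.get(timeout=-1)  # negative timeouts are rejected
except ValueError:
    outcomes.append("get(timeout=-1) -> ValueError")

print(outcomes)
```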

3.5 viewing the three shared variables of the queue

The three shared variables are the attributes all_tasks_done, not_empty and not_full; all of them are threading.Condition objects.

As the following code shows, after all_tasks_done has been acquired (acquire() returns True), an attempt to acquire not_empty blocks; the reverse order behaves the same way. This is because all three Condition variables are built on the queue's single mutex, a non-reentrant threading.Lock. While any one of them holds the lock and has not released it (with release(), or temporarily inside wait()), the other two cannot be acquired. Calling notify()/notify_all() does not release the lock, and this behavior does not depend on the queue being full.

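import queue

# Create an empty queue with a maximum length of 5
q = queue.Queue(5)
# View the queue
print("*" * 20)
print(q.empty())
print(q.full())
print(q.qsize())
# Insert five items into the queue in turn
items = list(range(5))
for item in items:
    if item % 2 == 0:
        q.put(item)
    else:
        q.put_nowait(item)
# View each attribute
print("*" * 20)
print("About all_tasks_done")
print(q.all_tasks_done)
print(q.all_tasks_done.acquire())  # True: this acquires the shared mutex
print("About not_empty")
print(q.not_empty)
# A plain acquire() would block forever because the mutex is already held;
# with a timeout it gives up after 1 second and returns False
print(q.not_empty.acquire(timeout=1))
print("About not_full")
print(q.not_full)
print(q.not_full.acquire(timeout=1))  # False for the same reason
# Release the mutex acquired through all_tasks_done
q.all_tasks_done.release()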
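The shared mutex also affects other threads. In the sketch below the main thread holds q.mutex directly, so a second thread calling put() stays blocked until the lock is released. The producer function and the 0.2-second pause are assumptions made for the illustration; q.queue is the deque attribute from the table, read directly because calling qsize() while holding the mutex would deadlock.

```python
import queue
import threading
import time

q = queue.Queue(5)

def producer():
    q.put("item")  # put() must first acquire q.mutex through not_full

q.mutex.acquire()  # hold the lock shared by all three Condition objects
worker = threading.Thread(target=producer)
worker.start()
time.sleep(0.2)
blocked = worker.is_alive()     # still waiting for q.mutex
size_while_held = len(q.queue)  # read the deque directly; qsize() would deadlock here
q.mutex.release()
worker.join()
print(blocked, size_while_held, q.qsize())  # True 0 1
```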

4. Demonstrations of the queue class

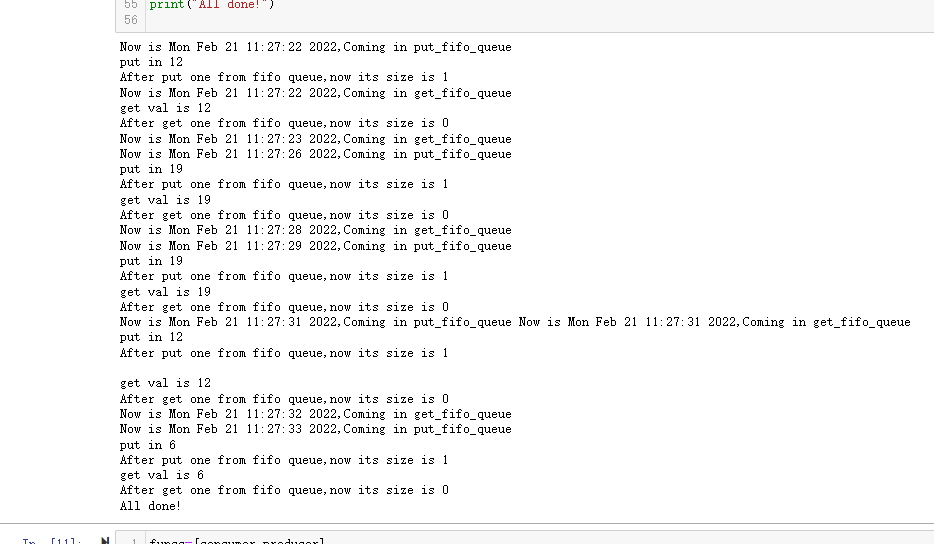

4.1 classic producer-consumer model

The following code implements the classic producer-consumer model. See the comments in the code for an explanation of each part.

import random
import queue
import threading
import time

# Create a MyThread class and override the run method
class MyThread(threading.Thread):
    def __init__(self, func, args, name):
        super().__init__()
        self.func = func
        self.args = args
        self.name = name

    def run(self):
        self.func(*self.args)

# Get an element from the fifo queue
def get_fifo_queue(fifo_queue):
    print("Now is {0},Coming in get_fifo_queue ".format(time.ctime()))
    val = fifo_queue.get()  # blocks until an element is available
    print("get val is {0}".format(val))
    print("After get one from fifo queue,now its size is {0}".format(fifo_queue.qsize()))

# Push an element into the fifo queue
def put_fifo_queue(fifo_queue, val):
    print("Now is {0},Coming in put_fifo_queue ".format(time.ctime()))
    print("put in {0}".format(val))
    fifo_queue.put(val)
    print("After put one from fifo queue,now its size is {0}".format(fifo_queue.qsize()))

# The producer pushes goods_num random elements into the queue, resting 1 to 5 seconds after each
def producer(fifo_queue, goods_num):
    for tmp in range(goods_num):
        put_fifo_queue(fifo_queue, random.randint(1, 20))
        time.sleep(random.randint(1, 5))

# The consumer takes goods_num elements from the queue, resting 1 to 5 seconds after each
def consumer(fifo_queue, goods_num):
    for tmp in range(goods_num):
        get_fifo_queue(fifo_queue)
        time.sleep(random.randint(1, 5))

# List of producer and consumer functions: note that the producer comes first
funcs = [producer, consumer]
test_goods_num = 5
test_fifo_queue = queue.Queue(10)
threads = []
# Create one MyThread per function in the list
for func in funcs:
    t = MyThread(func, (test_fifo_queue, test_goods_num), func.__name__)
    threads.append(t)
# Start the threads
for t in threads:
    t.start()
# Wait for the threads to finish
for t in threads:
    t.join()
print("All done!")

The output below shows that, although no lock is used explicitly anywhere in the code, the producer and the consumer carry out their work in an orderly way following FIFO order; Queue performs the necessary locking internally.

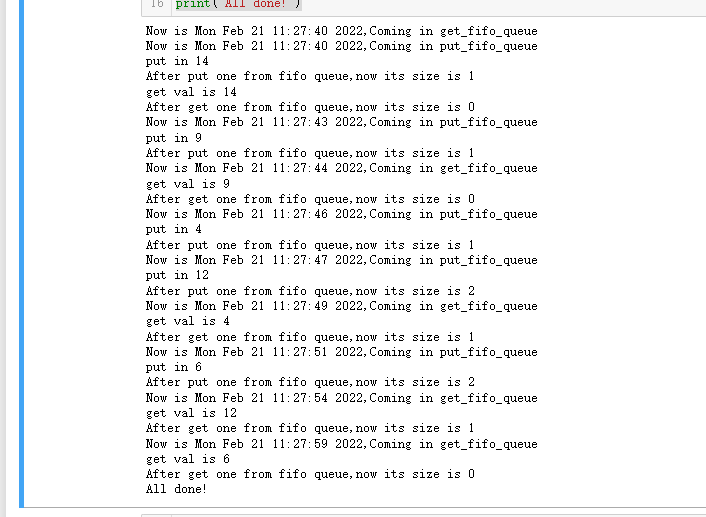
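In the code above the consumer knows in advance how many items to read (goods_num). When that number is not known, a common alternative is for the producer to put a sentinel value that tells the consumer to stop, and to pair every get() with task_done() so that join() can be used. A sketch under those assumptions; the STOP sentinel and the functions below are hypothetical, and here the main thread acts as the producer:

```python
import queue
import threading

STOP = object()  # hypothetical sentinel marking the end of the data

def producer(q, items):
    for item in items:
        q.put(item)  # blocks while the bounded queue is full
    q.put(STOP)  # tell the consumer there is nothing more to come

def consumer(q, results):
    while True:
        item = q.get()  # blocks until an item is available
        try:
            if item is STOP:
                return
            results.append(item)
        finally:
            q.task_done()  # mark every retrieved item (including STOP) as done

def run(items, maxsize=2):
    q = queue.Queue(maxsize)
    results = []
    worker = threading.Thread(target=consumer, args=(q, results))
    worker.start()
    producer(q, items)  # the main thread produces
    q.join()  # every put() now has a matching task_done()
    worker.join()
    return results

print(run([5, 3, 8, 1]))  # [5, 3, 8, 1]
```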

4.2 showing the order of the queue more clearly

The code in the previous section creates and starts the producer thread first, then the consumer thread. What happens if the two are swapped?

# Change the order of producers and consumers
# (reuses MyThread, producer and consumer from the previous example)
funcs = [consumer, producer]
test_goods_num = 5
test_fifo_queue = queue.Queue(10)
threads = []
for func in funcs:
    t = MyThread(func, (test_fifo_queue, test_goods_num), func.__name__)
    threads.append(t)
for t in threads:
    t.start()
for t in threads:
    t.join()
print("All done!")

Even though the consumer thread is started first, it finds no item and blocks in get(), effectively handing control over to the producer thread. As soon as the producer pushes an element into the queue, the consumer resumes automatically. The programmer does not need any checks or explicit locks; this whole sequence happens implicitly inside the queue.
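The same hand-off can be observed deterministically by recording events. In this sketch the consumer starts first and calls get() on an empty queue; a threading.Event (an assumption added only to fix the order of the log entries) makes the producer wait until the consumer is about to read. The function name consumer_first is hypothetical.

```python
import queue
import threading

def consumer_first():
    """Hypothetical demo: the consumer starts first and waits for the producer."""
    q = queue.Queue()
    events = []
    ready = threading.Event()  # handshake so the log order is deterministic

    def consumer():
        events.append("consumer waiting")
        ready.set()
        # get() waits here if the producer has not put anything yet
        events.append("consumer got {}".format(q.get()))

    def producer():
        ready.wait()  # start only after the consumer is about to call get()
        events.append("producer put 42")
        q.put(42)

    threads = [threading.Thread(target=consumer), threading.Thread(target=producer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return events

print(consumer_first())  # ['consumer waiting', 'producer put 42', 'consumer got 42']
```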
