Every language needs a way to do several tasks at once, and Python is no exception. Python is often criticized as slow, and the complaint usually comes down to multithreading: CPU-bound multithreaded code does not get faster in CPython. The reason is the Global Interpreter Lock (GIL) in the CPython interpreter. Python threads are real operating-system threads, not simulated ones, but the GIL lets only one of them execute Python bytecode at a time. So although CPython runs Python code on several OS threads, CPU-bound work in those threads does not run in parallel, and that is the root cause of the slowdown. Some other implementations, such as Jython and IronPython, have no GIL. There have been many attempts to remove the GIL from CPython; earlier ones made single-threaded code slower, and CPython 3.13 ships an optional, experimental free-threaded build (PEP 703).
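A rough way to see the GIL at work, as a sketch: run the same pure-Python CPU-bound function in two threads and then in two processes, and compare wall-clock times. The function count_down, the loop size and the worker count are arbitrary choices for illustration. On a multi-core machine with a standard CPython build, the process version is expected to finish noticeably faster, but the actual numbers depend on the hardware and Python build, and starting processes adds its own overhead.

```python
import time
from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor


def count_down(n):
    # Pure-Python CPU-bound loop; in CPython only one thread at a time can run it
    while n > 0:
        n -= 1
    return n


def timed(executor_cls, n=3_000_000, jobs=2):
    # Run the same job `jobs` times in a pool of `jobs` workers and time the batch
    start = time.perf_counter()
    with executor_cls(max_workers=jobs) as pool:
        results = list(pool.map(count_down, [n] * jobs))
    return results, time.perf_counter() - start


if __name__ == '__main__':
    _, t_threads = timed(ThreadPoolExecutor)
    _, t_procs = timed(ProcessPoolExecutor)
    print(f"2 threads:   {t_threads:.2f} s")
    print(f"2 processes: {t_procs:.2f} s")
```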

Back to the topic. Python offers three ways to multitask: multiple processes, multiple threads, and coroutines. In CPython, only multiple processes run truly in parallel; threads and coroutines run concurrently.

1 Processes

Python's built-in multiprocessing package provides an interface for creating and managing multiple processes.

1.1 Basic usage

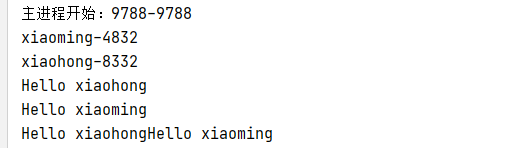

```python
import time
import multiprocessing


def task(name):
    # Each child process prints a greeting once per second, forever
    while True:
        print(f"Hello {name}")
        time.sleep(1)


def main():
    p1 = multiprocessing.Process(target=task, args=("xiaoming",), name="xiaoming")
    p2 = multiprocessing.Process(target=task, args=("xiaohong",), name="xiaohong")

    p1.start()
    if p1.is_alive():
        print(f"{p1.name}-{p1.pid}")

    p2.start()
    if p2.is_alive():
        print(f"{p2.name}-{p2.pid}")


if __name__ == '__main__':
    current = multiprocessing.current_process()
    print(f"Main process start: {current.name}-{current.pid}")
    main()
```

This is the most basic usage: the same task function runs in two processes at the same time.

1.2 Child processes as daemons of the main process

In some scenarios a child process should live only as long as the main process. For example, a game's background music starts when the game starts and must stop when the game stops; the music must not keep playing after the game has been closed.

```python
import time
import multiprocessing


class Game:
    def __init__(self, name):
        self.name = name

    def screen(self):
        while True:
            print(f"Playing {self.name}....")
            time.sleep(1)

    def bg_music(self):
        while True:
            print("Background music playing....")
            time.sleep(2)


def main():
    game = Game("CS1.5")
    # daemon=True: both children are terminated when the main process exits
    p_game = multiprocessing.Process(target=game.screen, daemon=True)
    p_music = multiprocessing.Process(target=game.bg_music, daemon=True)
    p_game.start()
    p_music.start()
    time.sleep(10)
    print("My wife is home, quick, shut the computer down!")


if __name__ == '__main__':
    main()
```

The example above has three processes. The main process simulates 10 seconds of playing and then exits. The game and background-music child processes were created with daemon=True, so they are terminated automatically when the main process exits.

1.3 Common process attributes and methods

Attributes:

```python
p.name  # Process name
p.pid   # Process ID (None before the process is started)
```

Methods:

```python
p.is_alive()   # Return True or False
p.start()      # Start the process
p.join()       # Block the caller until the process finishes
p.terminate()  # Terminate the process (SIGTERM on Unix)
p.kill()       # Kill the process (SIGKILL on Unix; Python 3.7+)
```

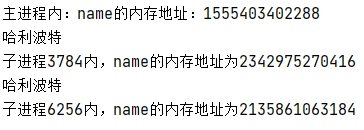
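A short sketch of these methods in sequence, assuming a hypothetical worker spin that never finishes on its own: the process is created, started, checked with is_alive(), stopped with terminate() and reaped with join(). exitcode is a standard Process attribute; a process that was ended by a signal reports a negative number.

```python
import multiprocessing
import time


def spin():
    # Hypothetical worker that loops until it is terminated
    while True:
        time.sleep(0.1)


def demo():
    p = multiprocessing.Process(target=spin, name="spinner")
    print(p.pid)                         # None: not started yet
    p.start()
    print(p.name, p.pid, p.is_alive())   # e.g. spinner 12345 True
    time.sleep(0.5)
    p.terminate()                        # Ask the OS to stop the process
    p.join()                             # Wait until it has really exited
    print(p.is_alive(), p.exitcode)      # False and a non-zero exit code
    return p


if __name__ == '__main__':
    demo()
```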

1.4 Child processes have independent memory spaces

A process is the smallest unit of resource allocation in the operating system, and each process has its own independent address space.

```python
import multiprocessing

name = "Harry Potter"


def show():
    print(name)
    # In CPython, id() is the object's memory address
    print(f"In child process {multiprocessing.current_process().pid}, the address of name is {id(name)}")


def main():
    p1 = multiprocessing.Process(target=show)
    p2 = multiprocessing.Process(target=show)
    p1.start()
    p2.start()
    print(f"In main process: the address of name is {id(name)}")


if __name__ == '__main__':
    main()
```

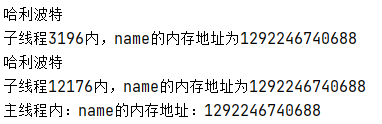
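Comparing id() values across processes can be misleading: with the fork start method (available on Unix), a child begins as a copy of the parent, so the copied object can sit at the same virtual address in both processes even though their memory is separate. A more direct check, sketched below, is to change a global in a child and then look at it from the parent; rename and demo are illustrative names.

```python
import multiprocessing

name = "Harry Potter"


def rename():
    # Rebinds the global only inside this child's own memory
    global name
    name = "Hermione Granger"
    print(f"In child {multiprocessing.current_process().pid}: name = {name}")


def demo():
    p = multiprocessing.Process(target=rename)
    p.start()
    p.join()
    # The parent's copy is untouched by the child's assignment
    print(f"In main process: name = {name}")
    return name


if __name__ == '__main__':
    demo()  # The main process still prints Harry Potter
```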

For contrast, run the same id() experiment with threads. Threads in the same process share one address space, so every thread sees the same object at the same address.

```python
import threading

name = "Harry Potter"


def show():
    print(name)
    print(f"In child thread {threading.current_thread().ident}, the address of name is {id(name)}")


def main():
    t1 = threading.Thread(target=show)
    t2 = threading.Thread(target=show)
    t1.start()
    t2.start()
    print(f"In main thread: the address of name is {id(name)}")


if __name__ == '__main__':
    main()
```

1.5 Inter-process communication

In the previous examples each process mostly does one job on its own, but in real programs processes usually cooperate. In the common producer-consumer model, processes can communicate through a queue.

```python
import time
import multiprocessing
import random


def product(q, delay):
    # Producer: put a random number into the queue, or back off if it is full
    # (full() and empty() are only approximate for multiprocessing.Queue)
    while True:
        if q.full():
            print("Queue is full, slowing down production")
            delay = 10
        else:
            x = random.randint(1, 100)
            print(f"Producer produced {x}")
            q.put(x)
            delay = 1
        time.sleep(delay)


def consume(q, delay):
    # Consumer: take a number from the queue, or back off if it is empty
    while True:
        if q.empty():
            print("Queue is empty, slowing down consumption")
            delay = 10
        else:
            result = q.get()
            print(f"Consumer consumed: {result}")
            delay = 1
        time.sleep(delay)


def main():
    q = multiprocessing.Queue(maxsize=10)
    p_pro = multiprocessing.Process(target=product, args=(q, 1))
    p_con = multiprocessing.Process(target=consume, args=(q, 1))
    p_pro.start()
    p_con.start()


if __name__ == '__main__':
    main()
```

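Note that the queue here is multiprocessing.Queue, not queue.Queue. queue.Queue is meant for threads within a single process and cannot be shared between processes: with the spawn start method (the default on Windows and macOS), passing it to a child process raises an error, and with fork each process silently gets its own separate copy.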
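The producer and consumer above loop forever. A common way to let them finish, shown here as a sketch, is for the producer to put a sentinel value into the queue when it is done (None here, assuming None is never a real item) and for the consumer to stop when it reads it. A second multiprocessing.Queue carries the consumer's result back to the parent; the names producer, consumer and run are illustrative.

```python
import multiprocessing

SENTINEL = None  # Marks "no more items"; assumes None is never a real item


def producer(q, n):
    for x in range(1, n + 1):
        q.put(x)          # Blocks while the queue is full
    q.put(SENTINEL)       # Tell the consumer to stop


def consumer(q, results):
    total = 0
    while True:
        x = q.get()       # Blocks while the queue is empty
        if x is SENTINEL:
            break
        total += x
    results.put(total)    # Send the result back to the parent process


def run(n=10):
    q = multiprocessing.Queue(maxsize=3)
    results = multiprocessing.Queue()
    p_pro = multiprocessing.Process(target=producer, args=(q, n))
    p_con = multiprocessing.Process(target=consumer, args=(q, results))
    p_pro.start()
    p_con.start()
    # Read before join(): a process that has put data on a queue
    # waits for that data to be flushed before it can exit
    total = results.get()
    p_pro.join()
    p_con.join()
    return total


if __name__ == '__main__':
    print(run())  # 55
```

Blocking put() and get() also replace the full()/empty() polling, whose results the multiprocessing documentation describes as not reliable.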

1.6 Process pools

Creating and destroying processes consumes system resources. When you don't know in advance how many tasks will arrive or when, as with web requests, you can use a pool: tasks wait in a task queue, a fixed number of worker processes take tasks from it, and the workers are reused, which avoids the cost of repeatedly creating and destroying processes.

The concurrent.futures module in the standard library provides two classes for process pools and thread pools:

```python
from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor
```
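A minimal sketch of a process pool; the task sum_of_squares and the pool size of 4 are illustrative choices. submit() schedules a single call and returns a Future, map() spreads many calls over the reused workers and returns results in input order, and leaving the with block shuts the pool down and waits for the workers. ThreadPoolExecutor has the same interface and could be swapped in for I/O-bound work.

```python
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(n):
    # Hypothetical CPU-bound task: 0**2 + 1**2 + ... + (n-1)**2
    return sum(i * i for i in range(n))


def main():
    # 4 worker processes, reused for every task
    with ProcessPoolExecutor(max_workers=4) as pool:
        future = pool.submit(sum_of_squares, 10)
        print(future.result())  # 285
        results = list(pool.map(sum_of_squares, [10, 100, 1000]))
    return results


if __name__ == '__main__':
    print(main())  # [285, 328350, 332833500]
```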

2 Threads

A process is the smallest unit of resource allocation by the operating system, while a thread is the smallest unit of CPU scheduling. One process can contain multiple threads, and they all share the same address space.

2.1 Basic usage

The Thread class in the threading module provides Python's high-level threading API.

```python
import threading


def sing():
    while True:
        print("singing")


def dance():
    while True:
        print("dancing")


def main():
    threading.Thread(target=sing).start()
    threading.Thread(target=dance).start()


if __name__ == '__main__':
    main()
```

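Thread objects have essentially the same interface as process objects (start(), join(), is_alive(), name, daemon and so on), so the process operations shown earlier carry over and are not repeated here. One difference: a thread cannot be stopped from outside, since Thread has no terminate() or kill().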
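A sketch mirroring the process example in 1.1, with a hypothetical task that appends to a list instead of looping forever: threads take target, args and name just like processes, and join() waits for them. Because threads share memory, the main thread can read the list directly afterwards, which would not work across processes.

```python
import threading


def task(name, results):
    # Appending to a list from several threads is safe in CPython
    results.append(f"Hello {name}")


def main():
    results = []  # One list object, shared by both threads
    t1 = threading.Thread(target=task, args=("xiaoming", results), name="xiaoming")
    t2 = threading.Thread(target=task, args=("xiaohong", results), name="xiaohong")
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    print(t1.name, t1.is_alive())  # xiaoming False
    return sorted(results)


if __name__ == '__main__':
    print(main())  # ['Hello xiaohong', 'Hello xiaoming']
```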

2.2 Thread-local data

Thread-local data, created with threading.local(), gives each thread its own copy of the attributes stored on it. Threads still share one address space, but they do not see or overwrite each other's values.
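A minimal sketch of threading.local: data is a single module-level object, yet each thread that sets data.value only ever sees its own value. The worker function and the short sleep that lets threads interleave are illustrative.

```python
import threading
import time

data = threading.local()  # Attributes set on this object are per-thread


def worker(n, out):
    data.value = n          # Each thread stores its own value
    time.sleep(0.01)        # Let the other threads run and set theirs
    out[n] = data.value     # Still this thread's value, not another thread's


def main():
    out = {}
    ts = [threading.Thread(target=worker, args=(i, out)) for i in range(5)]
    for t in ts:
        t.start()
    for t in ts:
        t.join()
    # The main thread never set data.value, so it has no such attribute
    print(hasattr(data, "value"))  # False
    return out


if __name__ == '__main__':
    print(main())
```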

2.3 Thread locks

The example below simulates multiple threads competing to update a global variable. In practice you would not use threads for a simple decrement like this; it only demonstrates the race condition.

```python
import time
import threading

count = 10


def decr():
    global count
    temp = count
    # Simulated delay: 0.01 s is very short for a human but long for a CPU.
    # time.sleep() blocks, so the OS switches to another thread before count
    # has been decremented. All 10 threads therefore read count as 10, and each
    # one writes 9 instead of counting down from 10 to 0, which is clearly wrong.
    time.sleep(0.01)
    count = temp - 1
    print(f"==Current thread id{threading.current_thread().ident}==count = {count}\n")


ts = []
for i in range(10):
    t = threading.Thread(target=decr)
    t.start()
    ts.append(t)

for t in ts:
    t.join()  # The main thread blocks until each child thread has finished

"""
==Current thread id2332==count = 9
==Current thread id12000==count = 9
==Current thread id8972==count = 9
==Current thread id13424==count = 9
==Current thread id12016==count = 9
==Current thread id3620==count = 9
==Current thread id12740==count = 9
==Current thread id10176==count = 9
==Current thread id12116==count = 9
==Current thread id1052==count = 9
"""
```

With a lock, once one thread has acquired it, any other thread that calls acquire() blocks until the holder releases it, so the read-and-decrement sequence runs in only one thread at a time.

```python
import time
import threading

count = 10
lock = threading.Lock()


def decr():
    global count
    lock.acquire()  # Acquire the lock; other threads block here until it is released
    try:
        temp = count
        time.sleep(0.01)
        count = temp - 1
        print(f"==Current thread id{threading.current_thread().ident}==count = {count}\n")
    finally:
        lock.release()  # Always release; a Lock can also be used as a context manager ("with lock:")


ts = []
for i in range(10):
    t = threading.Thread(target=decr)
    t.start()
    ts.append(t)

for t in ts:
    t.join()  # The main thread blocks until each child thread has finished

"""
==Current thread id10828==count = 9
==Current thread id1044==count = 8
==Current thread id4116==count = 7
==Current thread id1624==count = 6
==Current thread id660==count = 5
==Current thread id2748==count = 4
==Current thread id6552==count = 3
==Current thread id6540==count = 2
==Current thread id2836==count = 1
==Current thread id7304==count = 0
"""
```

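2.4 Deadlock

A deadlock happens when threads each hold a lock and wait for a lock held by another. In the example below, every thread runs taskA and then taskB, and the two functions take lockA and lockB in opposite orders. A typical interleaving: one thread finishes taskA, enters taskB, takes lockB and sleeps; meanwhile another thread enters taskA, takes lockA and waits for lockB. When the first thread wakes up, it waits for lockA, so each thread waits for the other forever and the program hangs.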

```python
import time
import threading

# Create two locks
lockA = threading.Lock()
lockB = threading.Lock()

a = 0
b = 0


def taskA():
    global a, b
    lockA.acquire()
    temp = a
    print("variable a locked")
    lockB.acquire()
    temp_ = b
    print("variable b locked")
    lockB.release()
    print("variable b unlocked")
    lockA.release()
    print("variable a unlocked")


# taskB takes the locks in the opposite order (lockB, then lockA), so while one
# thread holds lockA in taskA, another thread can already hold lockB in taskB
def taskB():
    global a, b
    lockB.acquire()
    time.sleep(1)
    temp = b
    print("variable b locked")
    lockA.acquire()
    temp_ = a
    print("variable a locked")
    lockB.release()
    print("variable b unlocked")
    lockA.release()
    print("variable a unlocked")


def main():
    taskA()
    taskB()


ts = []
for i in range(10):
    t = threading.Thread(target=main)
    t.start()
    ts.append(t)

for t in ts:
    t.join()  # Never returns once the threads have deadlocked
```

2.5 Recursive locks (resolving the deadlock)

A deadlock leaves the program blocked forever, so it must be avoided. One fix for the example above is to replace the two locks with a single recursive lock, RLock. With only one lock there is no circular wait between two locks, and because the lock is recursive, the nested acquire() in the same thread does not block. An RLock remembers which thread owns it and keeps a counter: each acquire() by the owner increments the counter, each release() decrements it, and other threads cannot acquire the lock until the counter is back to 0.

```python
import time
import threading

# A single re-entrant lock replaces lockA and lockB
lockR = threading.RLock()

a = 0
b = 0


def taskA():
    global a, b
    lockR.acquire()          # counter 0 -> 1
    temp = a
    print("variable a locked")
    lockR.acquire()          # Same thread again: counter 1 -> 2, no blocking
    temp_ = b
    print("variable b locked")
    lockR.release()          # counter 2 -> 1
    print("variable b unlocked")
    lockR.release()          # counter 1 -> 0, other threads may now acquire it
    print("variable a unlocked")


# taskB uses the same single lock, so the circular wait from 2.4 cannot happen
def taskB():
    global a, b
    lockR.acquire()
    time.sleep(1)
    temp = b
    print("variable b locked")
    lockR.acquire()
    temp_ = a
    print("variable a locked")
    lockR.release()
    print("variable a unlocked")
    lockR.release()
    print("variable b unlocked")


def main():
    taskA()
    taskB()


ts = []
for i in range(10):
    t = threading.Thread(target=main)
    t.start()
    ts.append(t)

for t in ts:
    t.join()  # The main thread blocks until each child thread has finished
```
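The counter described above is what separates RLock from Lock. This sketch acquires each kind of lock twice from the same thread; the second acquire uses a timeout so that the plain Lock reports failure instead of blocking forever. try_reacquire is an illustrative helper.

```python
import threading


def try_reacquire(lock):
    lock.acquire()
    # Second acquire from the same thread; give up after 0.1 s instead of hanging
    ok = lock.acquire(timeout=0.1)
    if ok:
        lock.release()   # Undo the second acquire (RLock counter 2 -> 1)
    lock.release()       # Undo the first acquire (lock is free again)
    return ok


print(try_reacquire(threading.Lock()))   # False: a Lock cannot be re-acquired by its holder
print(try_reacquire(threading.RLock()))  # True: the RLock just increments its counter
```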

2.6 Semaphores

A semaphore is also a kind of lock, but it lets up to a fixed number of threads hold it at the same time.

```python
import threading
import time

sem = threading.Semaphore(5)  # At most 5 threads inside "with sem:" at once


def task():
    with sem:
        print("Hello")
        time.sleep(2)


ts = []
for i in range(100):
    t = threading.Thread(target=task)
    t.start()
    ts.append(t)

for t in ts:
    t.join()
```

In the example above, Hello is printed five times every 2 seconds, because at most five threads can be inside the with sem: block at once.

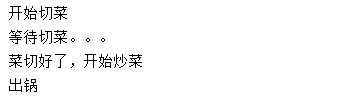
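To check the limit directly rather than by watching the output, this sketch counts how many threads are inside the with sem: block at the same moment and records the maximum. The two counters, the lock that protects them and the 20 threads are additions for the measurement; the maximum is expected to reach 5 but is only guaranteed never to exceed it.

```python
import threading
import time

sem = threading.Semaphore(5)
state_lock = threading.Lock()  # Protects the two counters below
inside = 0
max_inside = 0


def task():
    global inside, max_inside
    with sem:                  # At most 5 threads get past this point at a time
        with state_lock:
            inside += 1
            max_inside = max(max_inside, inside)
        time.sleep(0.05)
        with state_lock:
            inside -= 1


def main():
    global max_inside
    max_inside = 0
    ts = [threading.Thread(target=task) for _ in range(20)]
    for t in ts:
        t.start()
    for t in ts:
        t.join()
    return max_inside


if __name__ == '__main__':
    print(main())  # Never more than 5
```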

2.7 Event objects

An everyday analogy: you have to chop the vegetables before you can cook them. If they are not chopped yet, you have to wait; for a thread, waiting means being blocked.

The Event object provides a simple way for threads to communicate through an internal flag:

```python
event.is_set()  # Return the flag; it starts as False (isSet() is a deprecated alias)
event.wait()    # Block the calling thread while the flag is False
event.set()     # Set the flag to True; all waiting threads wake up and wait to be scheduled by the OS
event.clear()   # Reset the flag to False
```

```python
import threading
import time

event = threading.Event()


def qiecai():
    # qiecai: "chop vegetables"
    print("Start chopping vegetables")
    time.sleep(5)
    event.set()  # Set the flag; calling set() on an already-set event is harmless


def zuofan():
    # zuofan: "cook"
    print("Waiting for the vegetables to be chopped...")
    event.wait()  # Blocks until qiecai() calls event.set()
    print("Vegetables are chopped, start cooking")
    time.sleep(3)
    print("The dish is ready")
    event.clear()  # Reset the flag to False


t1 = threading.Thread(target=qiecai)
t2 = threading.Thread(target=zuofan)
t1.start()
t2.start()
t1.join()
t2.join()
```
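event.wait() also accepts a timeout in seconds and returns the flag's value, which lets a waiting thread give up instead of blocking forever. A small sketch; threading.Timer stands in for the chopping thread, and the 0.1 s and 0.05 s delays are arbitrary.

```python
import threading

event = threading.Event()

# Nobody has called set() yet: wait() times out and returns False
print(event.wait(timeout=0.1))   # False

# A timer thread sets the flag after 0.05 s; wait() returns True once it is set
timer = threading.Timer(0.05, event.set)
timer.start()
print(event.wait(timeout=5))     # True
timer.join()

event.clear()
print(event.is_set())            # False
```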

2.8 Condition objects

A Condition wraps a lock and lets threads wait until another thread notifies them. Its methods are:

acquire(*args)

Acquires the underlying lock. This method calls the corresponding method on the underlying lock; the return value is whatever that method returns.

release()

Releases the underlying lock. This method calls the corresponding method on the underlying lock; there is no return value.

wait(timeout=None)

Waits until notified or until a timeout occurs. If the calling thread has not acquired the lock when this method is called, a RuntimeError is raised.

This method releases the underlying lock, then blocks until it is awakened by a notify() or notify_all() call for the same condition variable in another thread, or until the optional timeout occurs. Once awakened or timed out, it reacquires the lock and returns.

When the timeout argument is present and not None, it should be a floating-point number specifying a timeout for the operation in seconds (or fractions thereof).

When the underlying lock is an RLock, it is not released with its release() method, since that may not actually unlock the lock when it has been acquired multiple times recursively. Instead, an internal interface of the RLock class is used, which really unlocks it even when it has been acquired recursively several times. Another internal interface is then used to restore the recursion level when the lock is reacquired.

The return value is True unless a given timeout expired, in which case it is False.

Changed in version 3.2: Previously, the method always returned None.

wait_for(predicate, timeout=None)

Waits until a condition evaluates to true. predicate should be a callable whose result is interpreted as a boolean value. A timeout may be provided giving the maximum time to wait.

This utility method may call wait() repeatedly until the predicate is satisfied or until a timeout occurs. The return value is the last return value of the predicate, and it evaluates to False if the method timed out.

Ignoring the timeout feature, calling this method is roughly equivalent to writing:

```python
while not predicate():
    cv.wait()
```

Therefore, the same rules apply as with wait(): the lock must be held when it is called and is reacquired on return. The predicate is evaluated with the lock held.

notify(n=1)

By default, wakes up one thread waiting on this condition, if any. If the calling thread has not acquired the lock when this method is called, a RuntimeError is raised.

This method wakes up at most n of the threads waiting on the condition variable; it does nothing if no threads are waiting.

The current implementation wakes up exactly n threads if at least n threads are waiting. However, it is not safe to rely on this behavior; a future, optimized implementation may occasionally wake up more than n threads.

Note: an awakened thread does not actually return from its wait() call until it can reacquire the lock. Since notify() does not release the lock, its caller should.

notify_all()

Wakes up all threads waiting on this condition. This method acts like notify(), but wakes up all waiting threads instead of one. If the calling thread has not acquired the lock when this method is called, a RuntimeError is raised.

notifyAll is a deprecated alias for this method.

```python
import threading
import time

count = 500
con = threading.Condition()


class Producer(threading.Thread):
    # Producer: adds 100 to count while count <= 1000
    def run(self):
        global count
        while True:
            with con:  # Same as con.acquire() ... con.release()
                if count > 1000:
                    # Too much stock: release the lock and wait to be notified
                    con.wait()
                else:
                    count = count + 100
                    print(f"{self.name} produce 100, count={count}")
                    # Wake up one waiting thread; it still has to reacquire
                    # the lock before it returns from wait()
                    con.notify()
            time.sleep(1)


class Consumer(threading.Thread):
    # Consumer: takes 5 from count while count >= 100
    def run(self):
        global count
        while True:
            with con:
                if count < 100:
                    # Not enough stock: release the lock and wait to be notified
                    con.wait()
                else:
                    count = count - 5
                    print(f"{self.name} consume 5, count={count}")
                    # Wake up one waiting thread after consuming
                    con.notify()
            time.sleep(1)


def test():
    for i in range(2):
        Producer().start()
    for i in range(5):
        Consumer().start()


if __name__ == '__main__':
    test()
```
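The example above checks count with an if and calls wait() by hand. wait_for(), described in the method list, runs that check-and-wait loop for you. A minimal sketch, with an illustrative items list: the consumer blocks until at least three items exist, and the producer calls notify_all() after each append so waiters re-check the predicate.

```python
import threading

items = []
cond = threading.Condition()


def consumer(out):
    with cond:
        # Returns once the predicate is true; it is evaluated with the lock held
        cond.wait_for(lambda: len(items) >= 3)
        out.extend(items)


def producer():
    for i in range(3):
        with cond:
            items.append(i)
            cond.notify_all()  # Let waiting threads re-check the predicate


def main():
    items.clear()
    out = []
    c = threading.Thread(target=consumer, args=(out,))
    c.start()
    producer()
    c.join()
    return out


if __name__ == '__main__':
    print(main())  # [0, 1, 2]
```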