unittest

Official documentation: https://docs.python.org/zh-cn/3/library/unittest.html?highlight=assertequal#module-unittest

1. Installation and import

# unittest is Python's built-in module for testing code; it can be imported directly without installation

import unittest

2. Use

Notes:

1. The test file must first import the module: import unittest
2. Test classes must inherit from unittest.TestCase
3. Test method names must start with test
4. Run the tests with unittest.main()
5. setUpClass and tearDownClass must be decorated with @classmethod
6. By default, unittest loads test methods in ASCII (string sort) order of their names: digits 0-9 first, then A-Z, then a-z.

    # coding: utf-8
    import unittest


    class TestDemo(unittest.TestCase):

        @classmethod
        def setUpClass(cls):
            # Runs once, before any test in the class
            print("setUpClass: starting the test methods")

        @classmethod
        def tearDownClass(cls):
            # Runs once, after all tests in the class have finished
            print("tearDownClass: all test methods are completed")

        def setUp(self):
            # Runs before each test: prepare the test environment
            print("setUp: prepare the environment")

        def tearDown(self):
            # Runs after each test: clean up the test environment
            print("tearDown: restore the environment")

        # Test methods
        def test_step_one(self):
            self.assertEqual(6, 6, msg="Fail")

        def test_step_two(self):
            # Check that the two values are equal
            self.assertEqual([1, 2, 3], [1, 2, 3], "Inconsistent assertion comparison")

        def test_step_three(self):
            # Check that the value is true
            self.assertTrue(True, msg="Failure reason: inconsistent comparison")

        # def test_step_four(self):
        #     self.assertEqual([1, 2, 3], [3, 2, 1], msg="Inconsistent comparison")


    if __name__ == "__main__":
        # Command-line entry point: runs the test* methods of every
        # unittest.TestCase subclass in this module
        unittest.main()
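The ordering rule in note 6 can be checked without running any tests. The sketch below is only an illustration: the class `OrderDemo` and its method names are made up for this purpose, and it relies on `unittest.TestLoader.getTestCaseNames`, which returns the test method names in the order the loader would use.

```python
import unittest


class OrderDemo(unittest.TestCase):
    # Hypothetical test methods, declared in a deliberately shuffled order
    def test_b(self):
        pass

    def test_A(self):
        pass

    def test_1(self):
        pass

    def test_a(self):
        pass


# The loader sorts method names as plain strings: digits, then A-Z, then a-z
names = unittest.TestLoader().getTestCaseNames(OrderDemo)
print(names)  # ['test_1', 'test_A', 'test_a', 'test_b']
```

The declaration order in the class has no effect; if tests must run in a particular sequence, the order has to be encoded in the method names.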

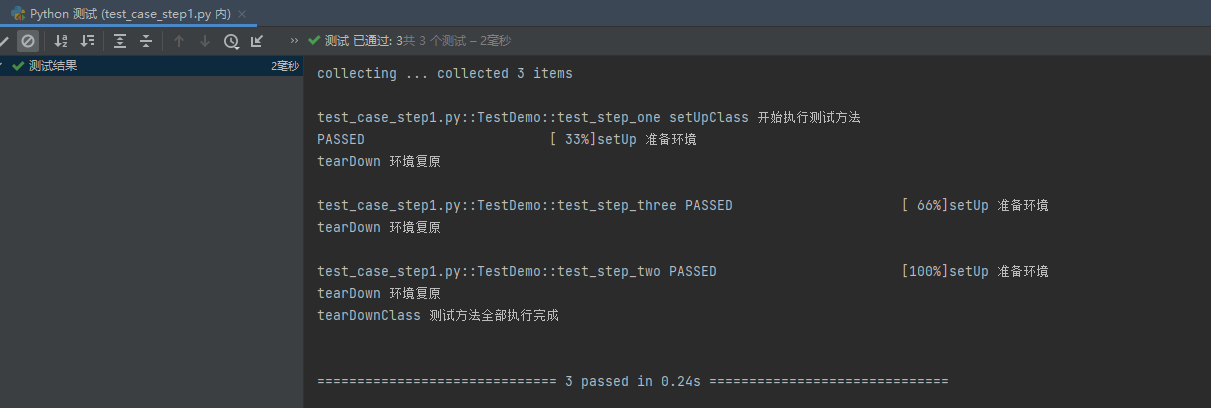

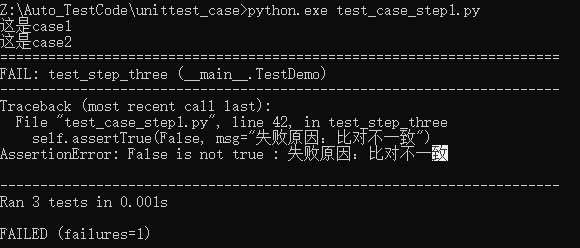

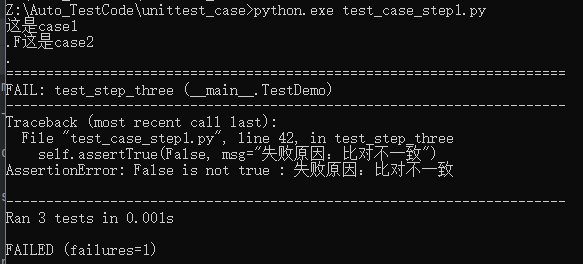

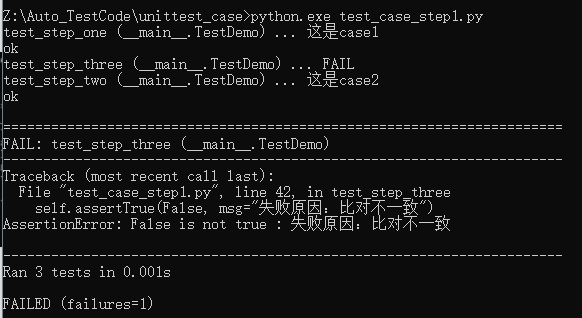

Execution results

unittest.main(verbosity=0): quiet mode; only the total number of tests and the overall result are shown

unittest.main(verbosity=1): the default; each test prints a single character: "." for a pass, "F" for a failure, "E" for an error

unittest.main(verbosity=2): verbose mode; the name and result of every test are shown

3. Decorator

@ marks a decorator

Skipping test cases [a method or class marked as skipped is not executed, and neither are its setUp/tearDown fixtures]

@unittest.skip(reason)

Unconditionally skip the decorated test. reason describes why the test is skipped.

@unittest.skipIf(condition, reason)

Skip the decorated test if condition is true.

@unittest.skipUnless(condition, reason)

Skip the decorated test unless condition is true.

@unittest.expectedFailure

Mark the test as an expected failure or error. If the test fails or raises an error in the test function itself (not in a test fixture method), it is considered a success. If the test passes, it is considered a failure.

exception unittest.SkipTest(reason)

Raise this exception to skip a test.

Example:

    # coding: utf-8
    import unittest


    class TestDemo(unittest.TestCase):

        # Test methods
        @unittest.skip("Unconditionally skip tests decorated with this decorator: test_step_one")
        def test_step_one(self):
            # Would fail (1 != 2), but is never run
            self.assertEqual(1, 2, "Inconsistent assertion comparison")

        @unittest.skipIf(True, "Skip the decorated test when condition is true: test_step_two")
        def test_step_two(self):
            # Assert that 1 and 1 are equal
            self.assertEqual(1, 1, "Inconsistent assertion comparison")

        @unittest.skipUnless(False, "Skip the decorated test unless condition is true: test_step_three")
        def test_step_three(self):
            self.assertEqual(1, 2, "Inconsistent assertion comparison")

        @unittest.expectedFailure  # Expected failure
        def test_step_four(self):
            # Fails as expected (1 != 2), so it is reported as an expected failure
            self.assertEqual(1, 2, "Inconsistent assertion comparison")


    if __name__ == "__main__":
        # Command-line entry point: runs the test* methods of every
        # unittest.TestCase subclass in this module
        unittest.main()

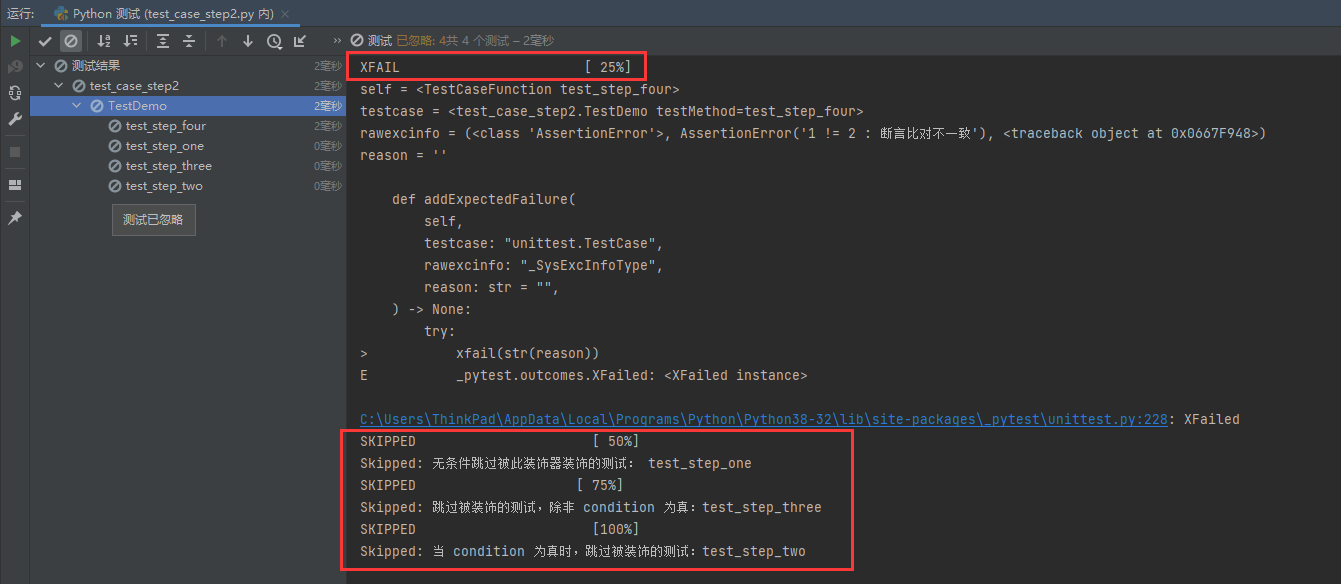

Execution results

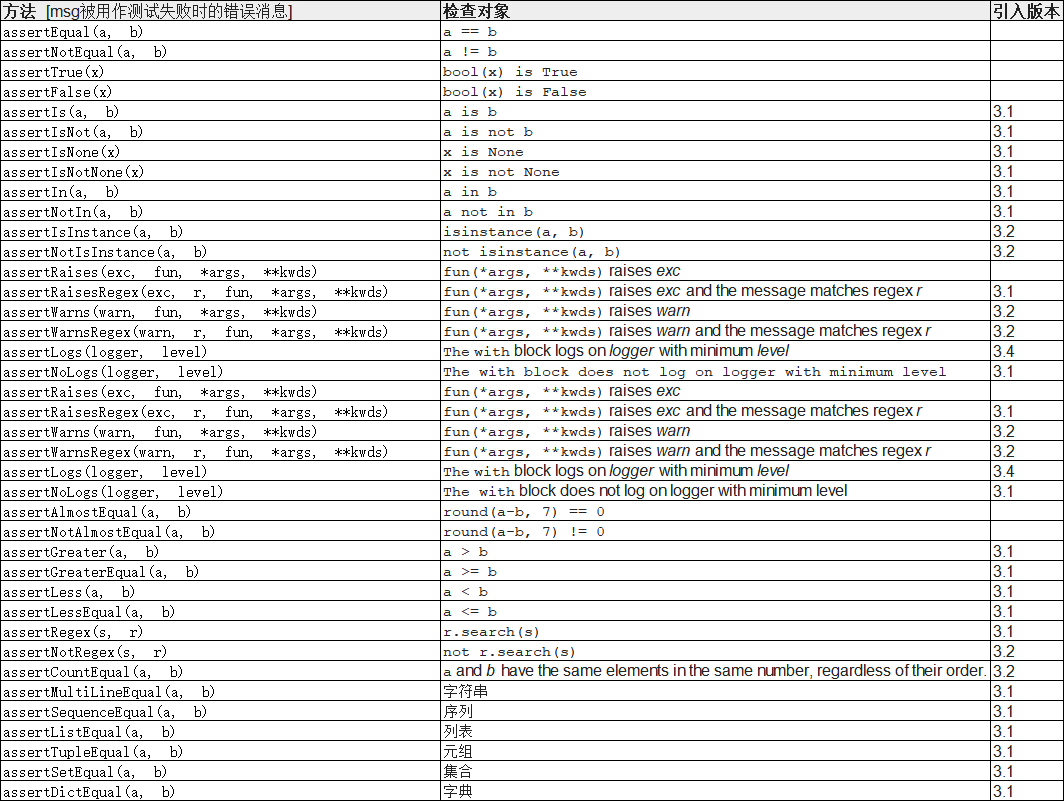

4. Assertion

pytest installation and use

Official reference link: https://docs.pytest.org/en/7.0.x/

1. Installation and import

# pytest is a third-party Python unit testing framework, compatible with unittest

pip install pytest

2. Use

Notes:

1. Test file names must match test_*.py or *_test.py.
2. Test classes must start with Test and must not define an __init__ method; they can contain one or more methods whose names start with test_.
3. Calling pytest.main() directly automatically finds files in the current directory named test_*.py or *_test.py.
4. Assertions use the assert statement.

Arguments to pytest.main():

1. main() accepts command-line options and plugin options as a list (multiple arguments are separated by commas).
2. pytest.main(['Directory name']) # Run all tests in the directory and its subdirectories
3. pytest.main(['test_xx.py']) # Run all tests in the specified module
4. pytest.main(['test_xx.py::TestClass::test_def']) # Run a specific module, class and test (method)
5. Other options:
   -m xxx: run only tests carrying the marker xxx
   --reruns N: rerun failed tests up to N times (requires the pytest-rerunfailures plugin)
   -q: quiet mode, do not output environment information
   -v: verbose mode, output more detailed information about each test
   -s: disable output capturing, so print output from the program is shown
   --resultlog=./log.txt: generate a log file (this option was removed in pytest 6.0)
   --junitxml=./log.xml: generate an XML report

pytest can finish with one of 6 exit codes:

code 0: all tests were collected and passed
code 1: tests were collected and run, but some of them failed
code 2: test execution was interrupted by the user
code 3: an internal error occurred during test execution
code 4: pytest command-line usage error
code 5: no tests were collected
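In a wrapper or CI script, the exit codes listed above can be turned into readable messages. The sketch below uses only the standard library; `PytestExitCode` and `describe_exit_code` are hypothetical names that mirror the list, not part of pytest. (pytest itself exposes the same values as the `pytest.ExitCode` enum.)

```python
from enum import IntEnum


class PytestExitCode(IntEnum):
    # Hypothetical local mirror of the six exit codes listed above
    OK = 0
    TESTS_FAILED = 1
    INTERRUPTED = 2
    INTERNAL_ERROR = 3
    USAGE_ERROR = 4
    NO_TESTS_COLLECTED = 5


DESCRIPTIONS = {
    PytestExitCode.OK: "all tests passed",
    PytestExitCode.TESTS_FAILED: "some tests failed",
    PytestExitCode.INTERRUPTED: "execution was interrupted by the user",
    PytestExitCode.INTERNAL_ERROR: "internal error during execution",
    PytestExitCode.USAGE_ERROR: "command-line usage error",
    PytestExitCode.NO_TESTS_COLLECTED: "no tests were collected",
}


def describe_exit_code(code: int) -> str:
    """Translate a pytest process return code into a short message."""
    try:
        return DESCRIPTIONS[PytestExitCode(code)]
    except ValueError:
        # Not one of the six documented codes
        return f"unknown exit code {code}"
```

A script could, for example, pass the `returncode` of `subprocess.run([sys.executable, "-m", "pytest"])` to `describe_exit_code` and decide whether to send a notification.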

Run pytest

    # Run all tests in the current directory
    > pytest
    #==== 2 failed, 4 passed, 3 skipped, 1 xfailed in 0.32s ====

    # Run the specified module
    > pytest test_run_case_pytest.py
    #2 failed, 3 skipped, 1 xfailed in 0.26s

    # Run a specific test in the specified module
    > pytest test_run_case_pytest.py::TestPy::test_funski
    #==== 1 skipped in 0.02s ====

    # Run only the tests whose names contain func
    > pytest -k func test_run_case_pytest.py
    #==== 2 failed, 4 deselected in 0.25s ====

3. Assert

pytest uses Python's built-in assert keyword for assertions [format: assert expression, "message shown on failure"]. If the expression evaluates to True, the test passes; if it evaluates to False, the test fails.
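A minimal sketch of this assert format follows. The `add` function matches the one used in the full example later in this article; `test_add` and `broken_check` are hypothetical names. `broken_check` deliberately lacks the test_ prefix so that pytest does not collect it; it only shows what a failing assertion raises.

```python
def add(a: int, b: int = 0) -> int:
    return a + b


def test_add():
    # Expression is True: the test passes
    assert add(14, 6) == 20, "Not meeting the expected results"


def broken_check():
    # Expression is False: AssertionError is raised with the message
    assert add(14, 7) == 20, "Not meeting the expected results"


if __name__ == "__main__":
    test_add()
    try:
        broken_check()
    except AssertionError as exc:
        print("assertion failed:", exc)
```

Renaming `broken_check` to a name starting with test_ would make pytest collect it and report it as a failed test together with the message.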

4. Decorator

pytest.skip

    # pytest.skip: called inside a test function to skip the test
    def test_funski(self):
        for i in range(9):
            if i > 3:
                pytest.skip("Skip test in function")
            pass

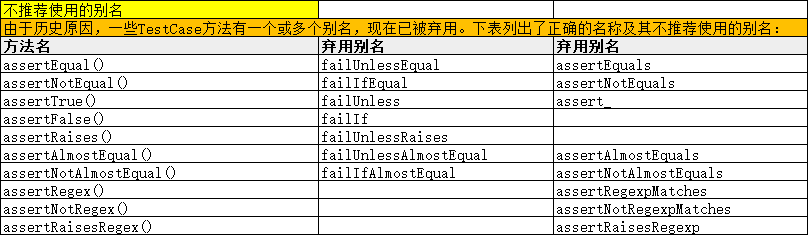

Execution result:

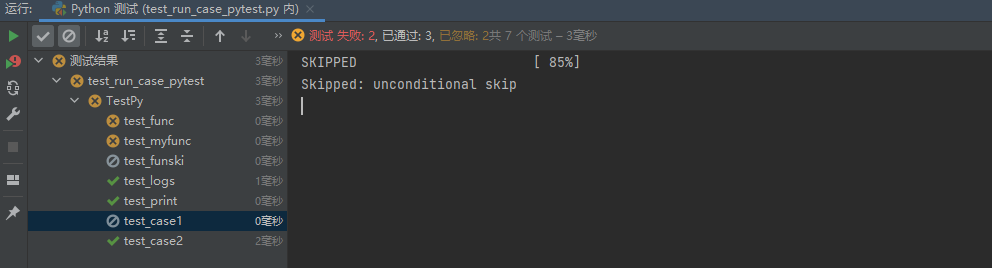

    @pytest.mark.skip  # Unconditionally skip the test (method)
    def test_case1(self):
        print("\n")
        assert 2 + 3 == 6, "The result comparison does not meet the requirements"

Execution result:

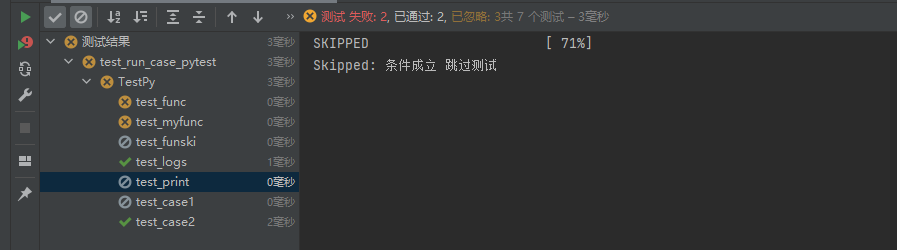

    @pytest.mark.skipif(condition="2>0", reason="If the condition holds, skip the test")
    def test_print(self):
        print("print Print log")

Execution result:

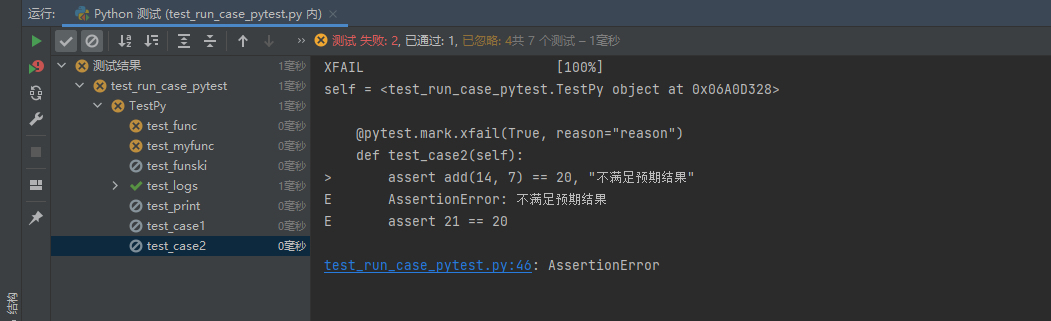

    # xfail marks an expected failure: when the condition is True and the test
    # actually fails, it is reported as xfailed instead of failed
    @pytest.mark.xfail(True, reason="reason")
    def test_case2(self):
        assert add(14, 7) == 20, "Not meeting the expected results"

Execution result:

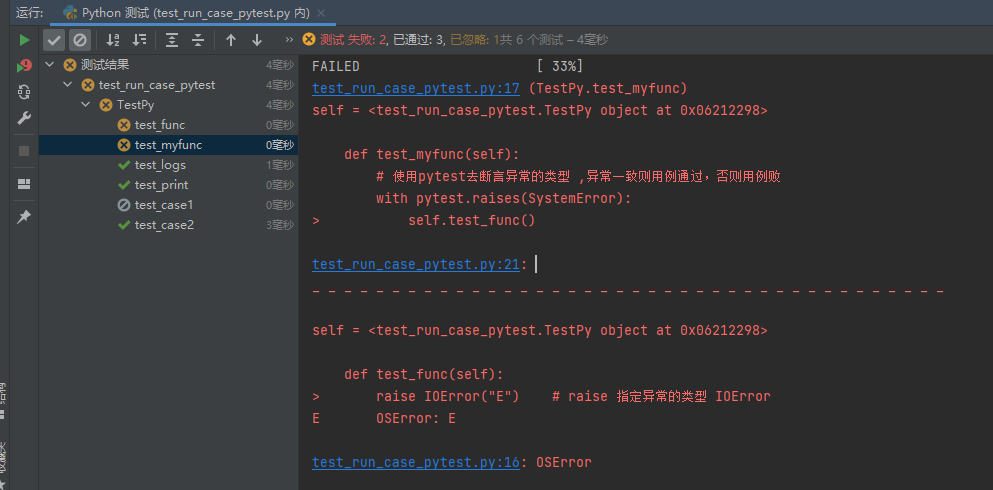

    # coding: utf-8
    import pytest
    # Project-local logger module from the original post; assumed to provide `logs`
    from pawd.base.Logger import *


    def add(a: int, b: int = 0):
        return a + b


    class TestPy():
        '''
        1. File names must match test_*.py or *_test.py.
        2. Test classes must start with Test and must not define __init__;
           they can contain one or more methods starting with test_.
        3. Calling pytest.main() directly automatically finds files in the
           current directory named test_*.py or *_test.py.
        '''

        def test_func(self):
            raise IOError("E")  # raise an exception of type IOError

        def test_myfunc(self):
            # Use pytest to assert the exception type: the test passes if the
            # raised exception matches, otherwise it fails. Here IOError is
            # raised, which is not a SystemError, so this test fails.
            with pytest.raises(SystemError):
                self.test_func()

        def test_logs(self):
            logs.info("logs Print log")

        def test_print(self):
            print("print Print log")

        @pytest.mark.skip  # Unconditionally skip the test
        def test_case1(self):
            print("\n")
            assert 2 + 3 == 6, "The result comparison does not meet the requirements"

        @pytest.mark.flaky(reruns=5, reruns_delay=2)  # requires pytest-rerunfailures
        def test_case2(self):
            for i in range(9):
                # assert add(14, i) == 20, "Not meeting the expected results"
                try:
                    assert add(14, i) == 20, "Not meeting the expected results"
                except:
                    continue
                else:
                    logs.debug(i)


    if __name__ == "__main__":
        # -s shows print output; the other pytest.main() arguments are
        # listed in the notes under "2. Use"
        pytest.main(['-s'])

Execution result:


Unit test

Definition: unit testing checks the implementation and logic of code at the level of modules, functions, classes, and so on.

Core concepts:

TestCase: an instance of TestCase is a single test case. It prepares the environment before the test (setUp), executes the test code (run), and restores the environment after the test (tearDown).

TestSuite: a collection of multiple test cases.

TestLoader: used to load TestCases into a TestSuite.

Test fixture (test environment and data preparation and cleanup, or test scaffolding): builds and destroys the environment of a test case.

TextTestRunner: used to execute test cases; its run(test) method calls the run(result) method of the TestSuite/TestCase.
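The way these pieces fit together can be sketched as follows. `FixtureDemo`, `calls` and `run_demo` are hypothetical names for this illustration: the loader collects the tests into a suite, the runner executes the suite, and the fixture methods record the order in which they are called.

```python
import io
import unittest

calls = []  # records the order in which fixtures and tests run


class FixtureDemo(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        calls.append("setUpClass")

    @classmethod
    def tearDownClass(cls):
        calls.append("tearDownClass")

    def setUp(self):
        calls.append("setUp")

    def tearDown(self):
        calls.append("tearDown")

    def test_one(self):
        calls.append("test_one")

    def test_two(self):
        calls.append("test_two")


def run_demo():
    """Load FixtureDemo into a suite, run it, return (result, call order)."""
    calls.clear()
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(FixtureDemo))
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    result = runner.run(suite)
    return result, list(calls)


if __name__ == "__main__":
    result, order = run_demo()
    print(result.testsRun, order)
```

The recorded order shows setUpClass and tearDownClass wrapping the whole class, while setUp and tearDown wrap each individual test.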

Test directory structure

Log: logging and log management. Different log levels are set for different situations to make problems easier to locate;

Report: test report generation and management, with instant notification so that test results get a rapid response;

Source: management of configuration files and static resources, following the principle of high cohesion and low coupling;

Common: management of common functions, methods and general operations, following the principle of high cohesion and low coupling;

TestCase: test case management. Each function point corresponds to one or more cases, to make coverage as high as possible;

TestData: test data management, separating data from scripts to reduce maintenance cost and improve portability;

TestSuite: test suite management. Different suites are assembled for different scenarios and requirements, keeping the framework flexible and extensible;

Statistics: test result statistics. The results of each run are collected, analyzed, compared and fed back, providing data-driven reference for software optimization and process improvement;

Continuous: the continuous integration (CI) environment, covering test file submission, scanning and compilation, test execution, report generation and timely notification. Continuous integration is the core of automated testing!