Qt + OpenCV DNN + TensorFlow: early warning for on-duty security monitoring

In security work, have you ever had someone enter a sensitive area without being noticed in time? This post uses Qt, the OpenCV DNN module, and a TensorFlow model to raise an early warning when someone enters a sensitive area.

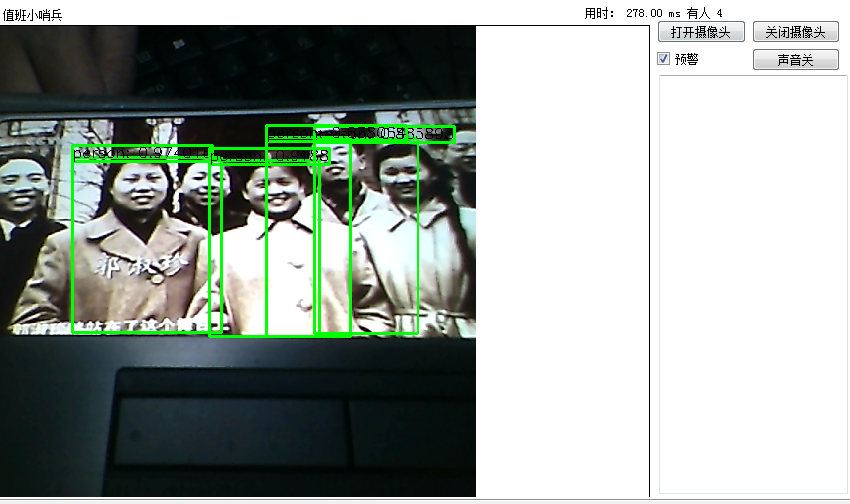

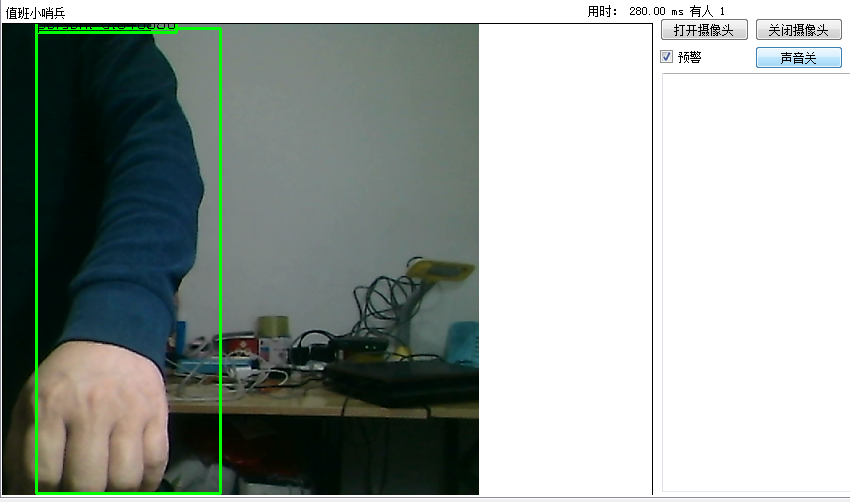

As the screenshots above show, once the alarm is turned on, an audible alarm sounds whenever someone enters the camera's field of view.

The core code is shown below. It runs detection on a single image loaded into a Mat; for live use, replace that image with frames from the camera.

Parts of the following code come from the Internet (thanks to the original authors).

```cpp
#include "stdafx.h"  // MSVC precompiled header; remove if not building with Visual Studio

#include <algorithm>
#include <fstream>
#include <string>
#include <vector>

#include <opencv2/dnn.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>

using namespace cv;
using namespace dnn;

float confThreshold, nmsThreshold;
std::vector<std::string> classes;

void postprocess(Mat& frame, const std::vector<Mat>& outs, Net& net);
void drawPred(int classId, float conf, int left, int top, int right, int bottom, Mat& frame);

int main(int argc, char** argv)
{
    confThreshold = 0.5f;  // minimum confidence to keep a detection
    nmsThreshold = 0.4f;   // overlap threshold for non-maximum suppression
    float scale = 1.0f;
    Scalar mean(0, 0, 0);
    bool swapRB = true;    // OpenCV loads BGR; the model expects RGB
    int inpWidth = 300;
    int inpHeight = 300;
    String modelPath = "frozen_inference_graph.pb";
    String configPath = "ssd_mobilenet_v1_coco_2017_11_17.pbtxt";
    String framework = "";
    int backendId = cv::dnn::DNN_BACKEND_OPENCV;
    int targetId = cv::dnn::DNN_TARGET_CPU;
    String classesFile = "object_detection_classes_coco.txt";

    // Open file with classes names (one label per line).
    if (!classesFile.empty()) {
        const std::string& file = classesFile;
        std::ifstream ifs(file.c_str());
        if (!ifs.is_open())
            CV_Error(Error::StsError, "File " + file + " not found");
        std::string line;
        while (std::getline(ifs, line)) {
            classes.push_back(line);
        }
    }

    // Load a model.
    Net net = readNet(modelPath, configPath, framework);
    net.setPreferableBackend(backendId);
    net.setPreferableTarget(targetId);
    std::vector<String> outNames = net.getUnconnectedOutLayersNames();

    static const std::string kWinName = "opencv dnntest";

    // Process frames (a single test image here).
    Mat frame, blob;
    frame = imread("test.jpg");
    if (frame.empty())
        CV_Error(Error::StsError, "Could not read test.jpg");

    // Create a 4D blob from a frame.
    Size inpSize(inpWidth > 0 ? inpWidth : frame.cols,
                 inpHeight > 0 ? inpHeight : frame.rows);
    blobFromImage(frame, blob, scale, inpSize, mean, swapRB, false);

    // Run a model.
    net.setInput(blob);
    if (net.getLayer(0)->outputNameToIndex("im_info") != -1)  // Faster-RCNN or R-FCN
    {
        resize(frame, frame, inpSize);
        Mat imInfo = (Mat_<float>(1, 3) << inpSize.height, inpSize.width, 1.6f);
        net.setInput(imInfo, "im_info");
    }
    std::vector<Mat> outs;
    net.forward(outs, outNames);

    postprocess(frame, outs, net);

    // Put efficiency information.
    std::vector<double> layersTimes;
    double freq = getTickFrequency() / 1000;
    double t = net.getPerfProfile(layersTimes) / freq;
    std::string label = format("Inference time: %.2f ms", t);
    putText(frame, label, Point(0, 15), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 255, 0));

    imshow(kWinName, frame);
    waitKey(0);
    return 0;
}

// Decode the network output into boxes, apply NMS, and draw what survives.
void postprocess(Mat& frame, const std::vector<Mat>& outs, Net& net)
{
    static std::vector<int> outLayers = net.getUnconnectedOutLayers();
    static std::string outLayerType = net.getLayer(outLayers[0])->type;

    std::vector<int> classIds;
    std::vector<float> confidences;
    std::vector<Rect> boxes;

    if (net.getLayer(0)->outputNameToIndex("im_info") != -1)  // Faster-RCNN or R-FCN
    {
        // Output is [batchId, classId, confidence, left, top, right, bottom]
        // per detection, with coordinates already in pixels.
        CV_Assert(outs.size() == 1);
        float* data = (float*)outs[0].data;
        for (size_t i = 0; i < outs[0].total(); i += 7) {
            float confidence = data[i + 2];
            if (confidence > confThreshold) {
                int left = (int)data[i + 3];
                int top = (int)data[i + 4];
                int right = (int)data[i + 5];
                int bottom = (int)data[i + 6];
                int width = right - left + 1;
                int height = bottom - top + 1;
                classIds.push_back((int)(data[i + 1]) - 1);  // Skip 0th background class id.
                boxes.push_back(Rect(left, top, width, height));
                confidences.push_back(confidence);
            }
        }
    }
    else if (outLayerType == "DetectionOutput") {
        // Same 7-value layout, but coordinates are normalized to [0, 1].
        CV_Assert(outs.size() == 1);
        float* data = (float*)outs[0].data;
        for (size_t i = 0; i < outs[0].total(); i += 7) {
            float confidence = data[i + 2];
            if (confidence > confThreshold) {
                int left = (int)(data[i + 3] * frame.cols);
                int top = (int)(data[i + 4] * frame.rows);
                int right = (int)(data[i + 5] * frame.cols);
                int bottom = (int)(data[i + 6] * frame.rows);
                int width = right - left + 1;
                int height = bottom - top + 1;
                classIds.push_back((int)(data[i + 1]) - 1);  // Skip 0th background class id.
                boxes.push_back(Rect(left, top, width, height));
                confidences.push_back(confidence);
            }
        }
    }
    else if (outLayerType == "Region") {
        // YOLO-style output: each row is [centerX, centerY, width, height, objectness, class scores...].
        for (size_t i = 0; i < outs.size(); ++i) {
            float* data = (float*)outs[i].data;
            for (int j = 0; j < outs[i].rows; ++j, data += outs[i].cols) {
                Mat scores = outs[i].row(j).colRange(5, outs[i].cols);
                Point classIdPoint;
                double confidence;
                minMaxLoc(scores, 0, &confidence, 0, &classIdPoint);
                if (confidence > confThreshold) {
                    int centerX = (int)(data[0] * frame.cols);
                    int centerY = (int)(data[1] * frame.rows);
                    int width = (int)(data[2] * frame.cols);
                    int height = (int)(data[3] * frame.rows);
                    int left = centerX - width / 2;
                    int top = centerY - height / 2;
                    classIds.push_back(classIdPoint.x);
                    confidences.push_back((float)confidence);
                    boxes.push_back(Rect(left, top, width, height));
                }
            }
        }
    }
    else
        CV_Error(Error::StsNotImplemented, "Unknown output layer type: " + outLayerType);

    // Non-maximum suppression removes overlapping boxes for the same object.
    std::vector<int> indices;
    NMSBoxes(boxes, confidences, confThreshold, nmsThreshold, indices);
    for (size_t i = 0; i < indices.size(); ++i) {
        int idx = indices[i];
        Rect box = boxes[idx];
        drawPred(classIds[idx], confidences[idx], box.x, box.y,
                 box.x + box.width, box.y + box.height, frame);
    }
}

// Draw one bounding box with its "label: confidence" caption.
void drawPred(int classId, float conf, int left, int top, int right, int bottom, Mat& frame)
{
    rectangle(frame, Point(left, top), Point(right, bottom), Scalar(0, 255, 0));

    std::string label = format("%.2f", conf);
    if (!classes.empty()) {
        CV_Assert(classId >= 0 && classId < (int)classes.size());
        label = classes[classId] + ": " + label;
    }

    int baseLine;
    Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

    top = std::max(top, labelSize.height);
    rectangle(frame, Point(left, top - labelSize.height),
              Point(left + labelSize.width, top + baseLine), Scalar::all(255), FILLED);
    putText(frame, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 0.5, Scalar());
}
```

Once the principle is clear, Qt provides the user interface; add a sound module and you have a working security alarm system. (A free download will be provided once the system has had its first round of improvements.)
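The post does not show how a detection is turned into an alarm, so the following is only a minimal sketch of one possible approach. It assumes the alarm should fire after a person has been seen in several consecutive frames, and should not re-fire until a cooldown has passed. The class name `AlarmGate` and its parameters are hypothetical and are not part of the original project.

```cpp
#include <chrono>

// Hypothetical helper (not from the original project): decides when to sound
// the alarm from a stream of per-frame "person detected" flags.
class AlarmGate {
public:
    using Clock = std::chrono::steady_clock;

    AlarmGate(int requiredHits, std::chrono::milliseconds cooldown)
        : requiredHits_(requiredHits), cooldown_(cooldown) {}

    // Call once per processed frame. Returns true when the alarm should fire.
    bool update(bool personDetected, Clock::time_point now = Clock::now()) {
        if (!personDetected) {
            hits_ = 0;  // a frame without a person resets the streak
            return false;
        }
        if (hits_ < requiredHits_)
            ++hits_;    // count consecutive positive frames, capped at the limit
        if (hits_ < requiredHits_)
            return false;  // detection is not stable yet
        if (fired_ && now - lastFire_ < cooldown_)
            return false;  // still inside the cooldown window
        fired_ = true;
        lastFire_ = now;
        return true;
    }

private:
    int requiredHits_;
    std::chrono::milliseconds cooldown_;
    int hits_ = 0;
    bool fired_ = false;
    Clock::time_point lastFire_{};
};
```

In a Qt application, a `true` result from `update()` could start the sound, for example through `QSoundEffect` from Qt Multimedia. That choice is an assumption; the post does not say which sound API it uses.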

Extension: the same approach can detect a variety of animals in real time and raise a warning for each of them.
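One way to support several kinds of targets is to filter detections by label before raising the alarm. The sketch below assumes the labels come from the class-names file loaded into `classes` and that the watched labels match those lines exactly. The function name `isWatchedClass` is hypothetical.

```cpp
#include <set>
#include <string>
#include <vector>

// Hypothetical helper: true when classId maps to a label we want alerts for.
bool isWatchedClass(int classId,
                    const std::vector<std::string>& classes,
                    const std::set<std::string>& watched) {
    if (classId < 0 || classId >= static_cast<int>(classes.size()))
        return false;  // out-of-range ids are never watched
    return watched.count(classes[classId]) > 0;
}
```

Inside `postprocess`, a call such as `isWatchedClass(classIds[idx], classes, watched)` could decide whether a box that survived NMS should count toward the alarm.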

OpenCV can also open an IP camera directly, and the system can be extended with multiple alarms, and so on.
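Moving from a single image to a camera mostly means wrapping the detection step in a read loop. The sketch below is written as a template so that it compiles without OpenCV. It assumes only that the source offers `isOpened()` and `read(frame)`, which `cv::VideoCapture` does with `cv::Mat` frames. The name `runMonitor` and the `maxFrames` limit are hypothetical.

```cpp
#include <functional>

// Sketch of a capture loop. Source must provide isOpened() and read(Frame&).
// Returns the number of frames processed, or -1 if the source is not open.
template <typename Frame, typename Source>
int runMonitor(Source& source,
               const std::function<void(Frame&)>& processFrame,
               int maxFrames) {
    if (!source.isOpened())
        return -1;
    Frame frame;
    int processed = 0;
    // Stop at the frame limit, or when the source stops delivering frames.
    while (processed < maxFrames && source.read(frame)) {
        processFrame(frame);  // e.g. blobFromImage, forward, postprocess
        ++processed;
    }
    return processed;
}
```

With OpenCV this could be called as `runMonitor<cv::Mat>(cap, callback, limit)`, where `cap` is a `cv::VideoCapture` constructed from a device index for a local camera or from the stream URL of an IP camera. The URL format depends on the camera and is not given in the post.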

On performance: an older machine needs about 300 ms per frame, which is too slow for real-time use. An i7 machine needs 30-50 ms per frame, which roughly keeps pace with the camera. One reason for choosing TensorFlow is that, in my tests, it ran 10-20 times faster than a comparable YOLOv3 model.
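On a slow machine, one common workaround is to run detection on every N-th frame only. The sketch below computes such a stride from the inference time and the camera frame rate. The function name `detectionStride` is hypothetical, and the 25 fps figure used in the usage note is an assumption, since the post does not state the camera's frame rate.

```cpp
#include <cmath>

// Hypothetical helper: with inference taking inferenceMs per frame and the
// camera delivering cameraFps frames per second, returns N such that running
// detection on every N-th frame keeps up with the stream.
int detectionStride(double inferenceMs, double cameraFps) {
    if (inferenceMs <= 0.0 || cameraFps <= 0.0)
        return 1;  // invalid input: fall back to processing every frame
    const double frameIntervalMs = 1000.0 / cameraFps;
    const int stride = static_cast<int>(std::ceil(inferenceMs / frameIntervalMs));
    return stride < 1 ? 1 : stride;
}
```

Assuming a 25 fps camera, 300 ms per inference gives a stride of 8, while 40 ms per inference gives a stride of 1, so every frame can be processed.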