RabbitMQ is an open-source message broker that implements the Advanced Message Queuing Protocol (AMQP). The RabbitMQ server is written in Erlang. It provides a general-purpose platform for sending and receiving messages between applications, and it keeps messages safe while they are in transit.

Install RabbitMQ

Install the EPEL repository

[root@anshengme ~]# yum -y install epel-release

Install Erlang

[root@anshengme ~]# yum -y install erlang

Install RabbitMQ

[root@anshengme ~]# yum -y install rabbitmq-server

Start RabbitMQ and enable it at boot

The server's hostname must resolve before RabbitMQ starts; otherwise the service fails to start. Check that the hostname appears in /etc/hosts:

[root@anshengme ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 anshengme
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
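The same hostname check can be done from Python. This is a minimal sketch using only the standard library; `hostname_resolves` is a hypothetical helper name, not part of RabbitMQ or pika.

```python
import socket


def hostname_resolves(name):
    """Return True if the name resolves to at least one address."""
    try:
        socket.getaddrinfo(name, None)
        return True
    except socket.gaierror:
        return False


# socket.gethostname() returns this machine's hostname
# (anshengme in the example above).
print(hostname_resolves(socket.gethostname()))
```

If this prints False, add the hostname to /etc/hosts before starting the service.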

[root@anshengme ~]# systemctl start rabbitmq-server
[root@anshengme ~]# systemctl enable rabbitmq-server
Created symlink from /etc/systemd/system/multi-user.target.wants/rabbitmq-server.service to /usr/lib/systemd/system/rabbitmq-server.service.

View startup status

[root@anshengme ~]# netstat -tulnp |grep 5672
tcp 0 0 0.0.0.0:25672 0.0.0.0:* LISTEN 37507/beam.smp
tcp6 0 0 :::5672 :::* LISTEN 37507/beam.smp
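As an alternative to netstat, a client machine can probe the port directly. The sketch below assumes the broker listens on port 5672, as in the output above; `port_is_open` is a hypothetical helper built on the standard socket module.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# 5672 is the AMQP port shown in the netstat output.
print("AMQP port reachable:", port_is_open("127.0.0.1", 5672))
```

A successful TCP connection only shows that something is listening; it does not confirm that the listener is RabbitMQ.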

pika

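pika is the Python client library recommended by the RabbitMQ project. The examples below use the pika 0.x API; pika 1.0 renamed some parameters (for example, no_ack became auto_ack), so they may need adjusting on newer releases.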

Install pika

pip3 install pika

pika: https://pypi.python.org/pypi/pika

test

>>> import pika

Work Queues

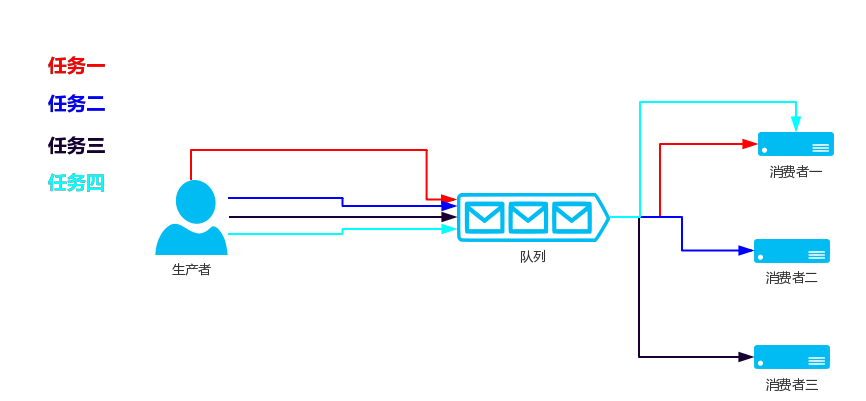

If you start several consumers on the same queue, RabbitMQ hands the tasks published by the producer to the consumers in turn (round-robin). This is the Work Queues model.
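The dispatch order can be pictured without a broker. The following is a toy simulation, not pika code; `round_robin_dispatch` and the task and consumer names are made up for illustration.

```python
from itertools import cycle


def round_robin_dispatch(tasks, consumers):
    """Assign each task to the next consumer in turn."""
    assignment = {name: [] for name in consumers}
    for task, name in zip(tasks, cycle(consumers)):
        assignment[name].append(task)
    return assignment


result = round_robin_dispatch(["t1", "t2", "t3", "t4", "t5"], ["c1", "c2"])
print(result)  # {'c1': ['t1', 't3', 't5'], 'c2': ['t2', 't4']}
```

Each consumer receives every second task, regardless of how long the tasks take.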

Producer code

# -*- coding: utf-8 -*-
import pika

# Open a blocking connection to the RabbitMQ server
connection = pika.BlockingConnection(pika.ConnectionParameters('192.168.56.100'))

# Open a channel on the connection
channel = connection.channel()

# Declare the queue through the channel
channel.queue_declare(queue='hello')

# Publish a message: body is the payload, routing_key names the target queue,
# and exchange='' selects the default exchange, which routes the message to the hello queue
channel.basic_publish(exchange='', routing_key='hello', body='Hello World!')

# Close the connection
connection.close()

Consumer Code

# -*- coding: utf-8 -*-
import pika

# Open a blocking connection to the RabbitMQ server
connection = pika.BlockingConnection(pika.ConnectionParameters('192.168.56.100'))

# Open a channel on the connection
channel = connection.channel()

# Declare the queue. If it does not exist yet, the consumer creates it;
# if it already exists, the call has no effect.
channel.queue_declare(queue='hello')

# Callback invoked for every message received
def callback(ch, method, properties, body):
    print(" [x] Received %r" % body)

# Consume from the hello queue and invoke callback for each message.
# no_ack=True: the broker treats a message as handled as soon as it is delivered.
# no_ack=False: the consumer must acknowledge each message after processing it.
channel.basic_consume(callback, queue='hello', no_ack=True)

# Start consuming; this call blocks
channel.start_consuming()

Persistence

Queue persistence

Imagine that a consumer dies halfway through a task. With no_ack=False, the consumer acknowledges each task only after finishing it, and RabbitMQ removes the task from the queue only then, so an unfinished task is redelivered rather than lost. However, if the RabbitMQ server itself stops, the tasks are still lost.

First, we need to make sure that RabbitMQ never loses our queue. To do this, we declare the queue as durable. RabbitMQ does not allow an existing queue to be redeclared with different parameters, so we use a new name, task_queue:

channel.queue_declare(queue='task_queue', durable=True)

durable=True must be set in the queue declaration of both the producer and the consumer. It only makes the queue itself survive a restart, not the messages in it.

Message persistence

To make a message persistent, set delivery_mode=2 when publishing it:

channel.basic_publish(exchange='',
                      routing_key='task_queue',
                      body='Hello World!',
                      properties=pika.BasicProperties(
                          # 2 = persistent message
                          delivery_mode=2,
                      ))
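The two settings combine as follows. This is a deliberately simplified rule of thumb written as a sketch; `survives_restart` is a hypothetical function, and real brokers do not give an absolute guarantee even for persistent messages.

```python
def survives_restart(queue_durable, delivery_mode):
    """Toy rule: a message outlives a broker restart only if the queue
    is durable AND the message was published with delivery_mode=2."""
    return queue_durable and delivery_mode == 2


# delivery_mode 1 is a transient message, 2 is a persistent one.
for durable in (False, True):
    for mode in (1, 2):
        print("durable=%-5s delivery_mode=%d -> kept: %s"
              % (durable, mode, survives_restart(durable, mode)))
```

Only the last combination, a durable queue with a persistent message, keeps the message.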

With automatic acknowledgement turned off, acknowledge each message at the end of the consumer's callback function:

ch.basic_ack(delivery_tag = method.delivery_tag)
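What the acknowledgement buys can be shown with a toy model of a queue that tracks unacknowledged deliveries. This is an illustration only; `TinyQueue` and its methods are hypothetical and do not mirror pika's API.

```python
from collections import deque


class TinyQueue:
    """Toy queue that remembers deliveries until they are acknowledged."""

    def __init__(self, messages):
        self.ready = deque(messages)
        self.unacked = {}
        self._next_tag = 1

    def deliver(self):
        """Hand the next message to a consumer and return (tag, body)."""
        body = self.ready.popleft()
        tag = self._next_tag
        self._next_tag += 1
        self.unacked[tag] = body
        return tag, body

    def ack(self, tag):
        """The consumer finished the task; forget the message."""
        del self.unacked[tag]

    def consumer_lost(self):
        """Put every unacknowledged message back at the front of the queue."""
        for tag in sorted(self.unacked, reverse=True):
            self.ready.appendleft(self.unacked.pop(tag))


q = TinyQueue(["task-1", "task-2"])
tag, body = q.deliver()   # a consumer receives task-1 ...
q.consumer_lost()         # ... and dies before acknowledging it
print(list(q.ready))      # ['task-1', 'task-2']
```

Because task-1 was never acknowledged, it is available again for another consumer.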

Fair distribution of messages

Each consumer processes one task at a time, but by default RabbitMQ dispatches tasks in turn without checking whether a consumer is still busy. For example, with three consumers, the first three tasks go to the three consumers, one each, and all three work at the same time. The fourth task is then assigned to the first consumer, the fifth to the second, and so on.

What is wrong with this? Consumers can take different amounts of time to finish a task. Suppose the second consumer finishes its task while the others are still busy: when the fourth task arrives, we would like it to go to the second consumer, which is idle. To get this behavior, add one line to the consumer:

channel.basic_qos(prefetch_count=1)
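The difference between the two strategies can be simulated without a broker. The sketch below assumes that task durations are known in advance and that all tasks are already waiting in the queue; `round_robin` and `fair_dispatch` are hypothetical names.

```python
import heapq


def round_robin(durations, n_consumers):
    """Default behaviour: task k goes to consumer k mod n."""
    return [k % n_consumers for k in range(len(durations))]


def fair_dispatch(durations, n_consumers):
    """Simplified prefetch_count=1 behaviour: each task goes to the
    consumer that becomes free first."""
    free_at = [(0, i) for i in range(n_consumers)]  # (time when free, consumer)
    heapq.heapify(free_at)
    assignment = []
    for duration in durations:
        t, consumer = heapq.heappop(free_at)
        assignment.append(consumer)
        heapq.heappush(free_at, (t + duration, consumer))
    return assignment


durations = [10, 1, 10, 1]  # the second consumer finishes first
print(round_robin(durations, 3))    # [0, 1, 2, 0]
print(fair_dispatch(durations, 3))  # [0, 1, 2, 1]
```

With fair dispatch the fourth task goes to the second consumer (index 1), which is idle, instead of waiting behind the first consumer's long task.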

The complete code is as follows

Consumer Code

#!/usr/bin/env python
import pika
import time

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.56.100'))
channel = connection.channel()

channel.queue_declare(queue='task_queue', durable=True)
print(' [*] Waiting for messages. To exit press CTRL+C')

def callback(ch, method, properties, body):
    print(" [x] Received %r" % body)
    time.sleep(10)
    print(" [x] Done")
    ch.basic_ack(delivery_tag=method.delivery_tag)

channel.basic_qos(prefetch_count=1)
channel.basic_consume(callback,
                      queue='task_queue')

channel.start_consuming()

Producer code

#!/usr/bin/env python
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.56.100'))
channel = connection.channel()

channel.queue_declare(queue='task_queue', durable=True)

for n in range(10):
    message = "Hello World! %s" % (n + 1)
    channel.basic_publish(exchange='',
                          routing_key='task_queue',
                          body=message,
                          properties=pika.BasicProperties(
                              delivery_mode=2,  # make message persistent
                          ))
    print(" [x] Sent %r" % message)

connection.close()

Message transmission type

The previous examples are essentially one-to-one: each message is sent to one designated queue. Sometimes you want a message to be delivered to every queue, like a broadcast. This is what exchanges are for.

An exchange is declared with a type, which determines which queues are eligible to receive a message:

| Type | Description |
|---|---|
| fanout | Every queue bound to the exchange receives the message |
| direct | Only the queues whose binding key exactly matches the message's routing key receive the message |
| topic | Every queue whose binding key (which can be a pattern in this case) matches the message's routing key receives the message |
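The fanout and direct rules from the table can be written down in a few lines. This is a toy model with made-up queue names, not broker code; `route` is a hypothetical function, and the topic type is left out because it needs pattern matching.

```python
def route(exchange_type, bindings, routing_key):
    """Return the sorted names of the queues that receive a message.

    bindings is a list of (queue_name, binding_key) pairs.
    """
    if exchange_type == "fanout":
        # The routing key is ignored: every bound queue gets a copy.
        return sorted({queue for queue, _ in bindings})
    if exchange_type == "direct":
        # Only exact matches of binding key and routing key count.
        return sorted({queue for queue, key in bindings if key == routing_key})
    raise ValueError("unsupported exchange type: %r" % exchange_type)


bindings = [("q1", "info"), ("q2", "error"), ("q3", "error")]
print(route("fanout", bindings, "error"))  # ['q1', 'q2', 'q3']
print(route("direct", bindings, "error"))  # ['q2', 'q3']
```

Several queues may share a binding key, in which case a direct exchange delivers the message to all of them.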

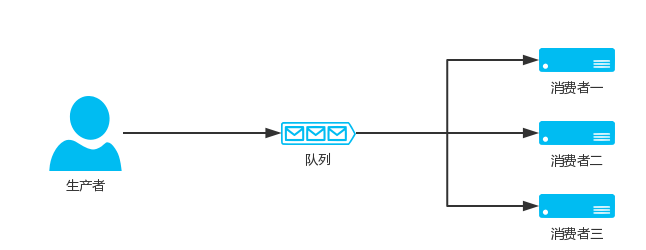

Fanout (Publish and Subscribe)

Every consumer that is connected when the producer publishes a message receives that message.

# Consumer
import pika

connection = pika.BlockingConnection(pika.ConnectionParameters(host='192.168.56.100'))
channel = connection.channel()

channel.exchange_declare(exchange='logs', type='fanout')

# No queue name is given, so RabbitMQ generates a random one.
# exclusive=True deletes the queue automatically once the consumer using it disconnects.
result = channel.queue_declare(exclusive=True)

# Get the generated queue name
queue_name = result.method.queue

# Bind the queue to the exchange
channel.queue_bind(exchange='logs', queue=queue_name)

def callback(ch, method, properties, body):
    print(" [x] %r" % body)

channel.basic_consume(callback, queue=queue_name, no_ack=True)
channel.start_consuming()

# Producer
import pika

connection = pika.BlockingConnection(pika.ConnectionParameters(host='192.168.56.100'))
channel = connection.channel()

# fanout: send to every bound queue
channel.exchange_declare(exchange='logs', type='fanout')
channel.basic_publish(exchange='logs', routing_key='', body="Hello World!")
connection.close()

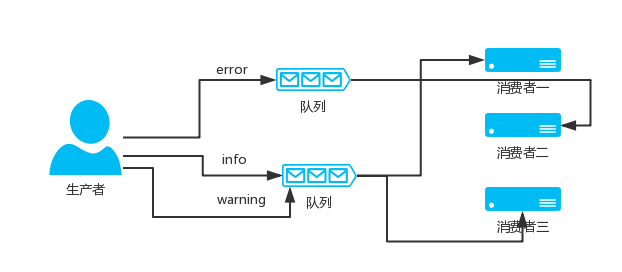

Direct (keyword)

RabbitMQ also supports routing by keyword: each queue is bound to the exchange with one or more keywords, the sender attaches a keyword (the routing key) to each message, and the exchange delivers the message to the queues bound with that keyword.

Producer code

#!/usr/bin/env python
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.56.100'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         type='direct')

severity = sys.argv[1] if len(sys.argv) > 1 else 'info'
message = ' '.join(sys.argv[2:]) or 'Hello World!'
channel.basic_publish(exchange='direct_logs',
                      routing_key=severity,
                      body=message)
print(" [x] Sent %r:%r" % (severity, message))
connection.close()

Consumer Code

#!/usr/bin/env python
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.56.100'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         type='direct')

result = channel.queue_declare(exclusive=True)
queue_name = result.method.queue

severities = sys.argv[1:]
if not severities:
    sys.stderr.write("Usage: %s [info] [warning] [error]\n" % sys.argv[0])
    sys.exit(1)

for severity in severities:
    channel.queue_bind(exchange='direct_logs',
                       queue=queue_name,
                       routing_key=severity)

print(' [*] Waiting for logs. To exit press CTRL+C')

def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))

channel.basic_consume(callback,
                      queue=queue_name,
                      no_ack=True)

channel.start_consuming()

Topic (fuzzy matching)

With the topic type, a queue can be bound with wildcard patterns. The sender publishes a message to the exchange with a routing key, the exchange matches the routing key against each binding pattern, and it delivers the message to every queue whose pattern matches.

The wildcard symbols are:

| Symbol | Description |
|---|---|
| # | Matches zero or more words |
| * | Matches exactly one word |

| Routing key sent | Binding key on the queue | Match? |
|---|---|---|
| ansheng.me | ansheng.* | Match |
| ansheng.me | ansheng.# | Match |

Words are separated by dots, so `*` matches the single word `me` here. A routing key with two or more words after `ansheng` would match only `ansheng.#`.
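The matching rules can be checked with a small pure-Python function. This is a sketch of the rules as described in the symbol table, not the broker's implementation; `topic_matches` is a hypothetical name and `ansheng.me.blog` is a made-up routing key.

```python
def topic_matches(pattern, routing_key):
    """Return True if a topic binding pattern matches a routing key."""
    p = pattern.split(".")
    k = routing_key.split(".")

    def match(i, j):
        if i == len(p):
            # Pattern exhausted: match only if the key is exhausted too.
            return j == len(k)
        if p[i] == "#":
            # '#' may absorb zero or more words.
            return any(match(i + 1, j2) for j2 in range(j, len(k) + 1))
        if j == len(k):
            return False
        if p[i] == "*" or p[i] == k[j]:
            # '*' absorbs exactly one word; a literal must be equal.
            return match(i + 1, j + 1)
        return False

    return match(0, 0)


print(topic_matches("ansheng.*", "ansheng.me"))       # True
print(topic_matches("ansheng.#", "ansheng.me"))       # True
print(topic_matches("ansheng.*", "ansheng.me.blog"))  # False
print(topic_matches("ansheng.#", "ansheng.me.blog"))  # True
```

The third call fails because `*` stands for exactly one word, while `#` in the fourth call absorbs both remaining words.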

Consumer Code

#!/usr/bin/env python
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.56.100'))
channel = connection.channel()

channel.exchange_declare(exchange='topic_logs',
                         type='topic')

result = channel.queue_declare(exclusive=True)
queue_name = result.method.queue

binding_keys = sys.argv[1:]
if not binding_keys:
    sys.stderr.write("Usage: %s [binding_key]...\n" % sys.argv[0])
    sys.exit(1)

for binding_key in binding_keys:
    channel.queue_bind(exchange='topic_logs',
                       queue=queue_name,
                       routing_key=binding_key)

print(' [*] Waiting for logs. To exit press CTRL+C')

def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))

channel.basic_consume(callback,
                      queue=queue_name,
                      no_ack=True)

channel.start_consuming()

Producer code

#!/usr/bin/env python
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.56.100'))
channel = connection.channel()

channel.exchange_declare(exchange='topic_logs',
                         type='topic')

routing_key = sys.argv[1] if len(sys.argv) > 1 else 'anonymous.info'
message = ' '.join(sys.argv[2:]) or 'Hello World!'
channel.basic_publish(exchange='topic_logs',
                      routing_key=routing_key,
                      body=message)
print(" [x] Sent %r:%r" % (routing_key, message))
connection.close()

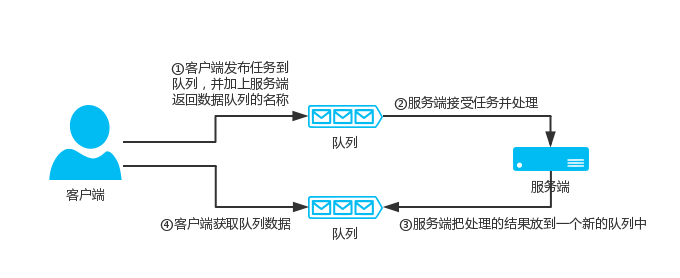

RPC (Remote Procedure Call)

The client sends a task to the server, and the server returns the result of the task to the client.
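Before looking at the pika code, the request and reply flow can be modeled with two in-memory queues. This is a toy illustration under that assumption; the names `queues`, `client_request`, `server_handle_one` and `client_wait` are hypothetical, and the dictionaries stand in for message properties.

```python
import uuid
from collections import deque

# Stand-ins for the broker's request queue and the client's reply queue.
queues = {"rpc_queue": deque(), "reply_queue": deque()}


def fib(n):
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def client_request(n):
    """Publish a request and return its correlation ID."""
    corr_id = str(uuid.uuid4())
    queues["rpc_queue"].append(
        {"reply_to": "reply_queue", "correlation_id": corr_id, "body": str(n)})
    return corr_id


def server_handle_one():
    """Take one request and reply on the queue named in reply_to."""
    request = queues["rpc_queue"].popleft()
    result = fib(int(request["body"]))
    queues[request["reply_to"]].append(
        {"correlation_id": request["correlation_id"], "body": str(result)})


def client_wait(corr_id):
    """Return the reply whose correlation ID matches, skipping others."""
    while queues["reply_queue"]:
        reply = queues["reply_queue"].popleft()
        if reply["correlation_id"] == corr_id:
            return int(reply["body"])
    return None


corr_id = client_request(10)
server_handle_one()
print(client_wait(corr_id))  # 55
```

The correlation ID lets the client ignore replies that belong to other requests, and reply_to tells the server where to send the result.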


RPC Server

# -*- coding: utf-8 -*-
import pika

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='192.168.56.100'))
channel = connection.channel()

# Declare the RPC request queue
channel.queue_declare(queue='rpc_queue')

def fib(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    else:
        return fib(n - 1) + fib(n - 2)

def on_request(ch, method, props, body):
    # Read the value sent by the client
    n = int(body)
    print(" [.] fib(%s)" % n)
    # Compute the Fibonacci number
    response = fib(n)
    # Send the result back; here the consumer acts as a producer
    ch.basic_publish(exchange='',
                     routing_key=props.reply_to,
                     # Return the client's correlation ID with the result
                     properties=pika.BasicProperties(correlation_id=props.correlation_id),
                     body=str(response))
    # Acknowledge the request once the reply has been published
    ch.basic_ack(delivery_tag=method.delivery_tag)

# Handle only one task at a time
channel.basic_qos(prefetch_count=1)
channel.basic_consume(on_request, queue='rpc_queue')

print(" [x] Awaiting RPC requests")
channel.start_consuming()

RPC Client


# -*- coding: utf-8 -*-
import pika
import uuid

class FibonacciRpcClient(object):
    def __init__(self):
        self.connection = pika.BlockingConnection(pika.ConnectionParameters(
            host='192.168.56.100'))
        self.channel = self.connection.channel()
        result = self.channel.queue_declare(exclusive=True)
        # Name of the reply queue on which the server returns the result
        self.callback_queue = result.method.queue
        self.channel.basic_consume(self.on_response, no_ack=True,
                                   queue=self.callback_queue)

    def on_response(self, ch, method, props, body):
        # A matching correlation ID means this reply answers our request
        if self.corr_id == props.correlation_id:
            self.response = body

    def call(self, n):
        self.response = None
        # Generate a unique ID for this request
        self.corr_id = str(uuid.uuid4())
        self.channel.basic_publish(exchange='',
                                   routing_key='rpc_queue',
                                   properties=pika.BasicProperties(
                                       # Queue the server should send the result to
                                       reply_to=self.callback_queue,
                                       # ID of this request
                                       correlation_id=self.corr_id,
                                   ),
                                   body=str(n))
        while self.response is None:
            # Poll for incoming messages without blocking until the reply arrives
            self.connection.process_data_events()
        return int(self.response)

fibonacci_rpc = FibonacciRpcClient()
print(" [x] Requesting fib(30)")
response = fibonacci_rpc.call(30)
print(" [.] Got %r" % response)