Original article: From practice to principle, a walk through gRPC

Hello, I'm fried fish.

gRPC is very popular in the Go community, and more and more people are using it; lately several friends have been recommending it to me as well. I hope this article shows you how cleverly gRPC is designed. It is quite long, so be prepared.

The outline of this article:

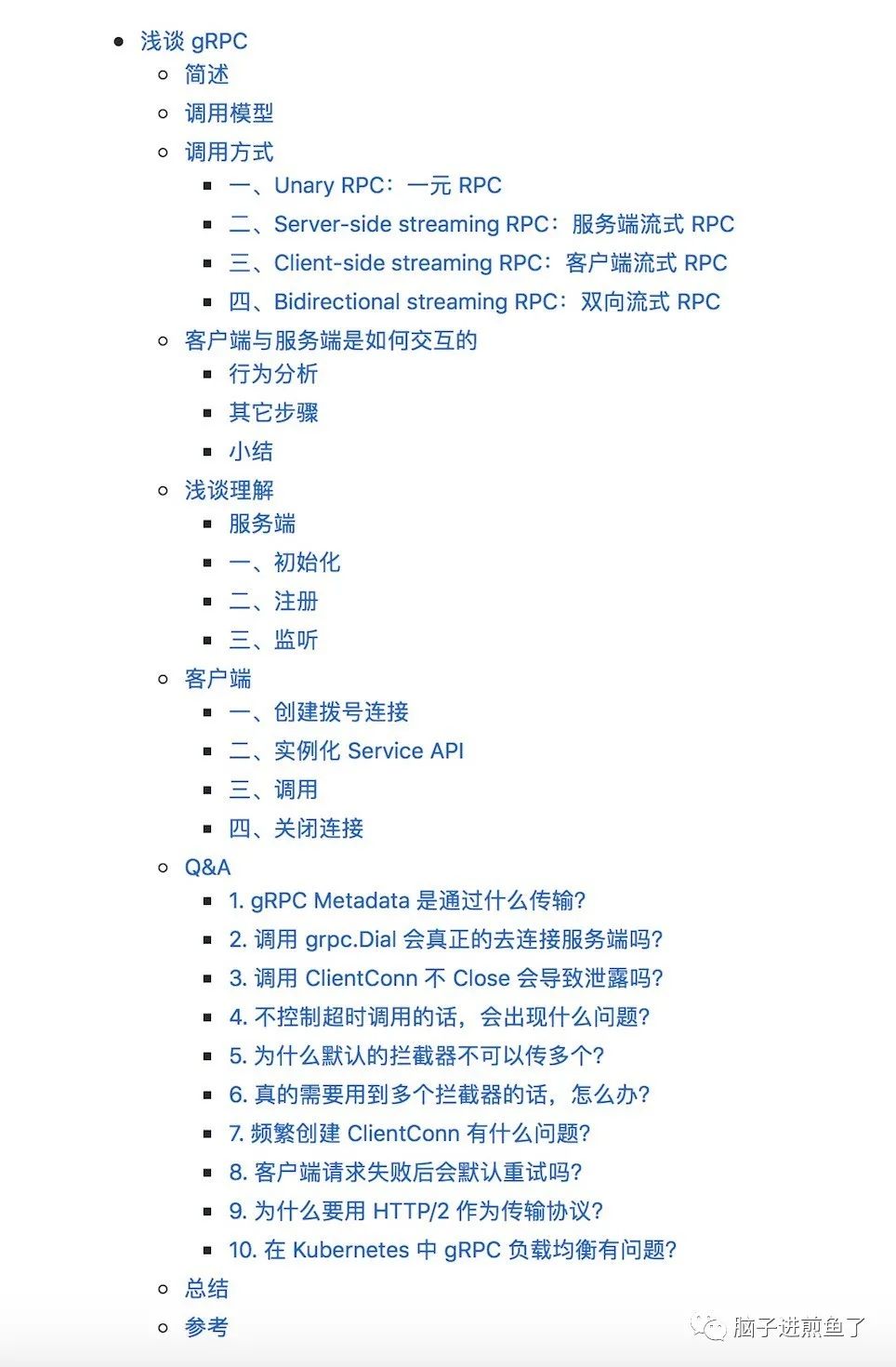

gRPC is a high-performance, open-source, general-purpose RPC framework designed with mobile and HTTP/2 in mind. It currently has implementations in C, Java and Go: grpc, grpc-java and grpc-go. The C implementation is the core that backs C, C++, Node.js, Python, Ruby, Objective-C, PHP and C#.

gRPC is built on the HTTP/2 standard and inherits its features: bidirectional streaming, flow control, header compression, and multiplexing many requests over a single TCP connection. These features make it perform well on mobile devices, saving battery and bandwidth.

Call model

1. The client (the gRPC stub) calls method A, initiating the RPC.

2. The request is serialized and compressed with Protobuf, which also serves as the IDL.

3. The server (gRPC Server) receives the request, decodes the request body, runs the business logic and returns the result.

4. The response is serialized and compressed with Protobuf.

5. The client receives the server's response and decodes the response body. It then wakes up the caller of method A, which has been blocked waiting for the response, and returns the result.

Call modes

1. Unary RPC

Server

```go
type SearchService struct{}

func (s *SearchService) Search(ctx context.Context, r *pb.SearchRequest) (*pb.SearchResponse, error) {
	return &pb.SearchResponse{Response: r.GetRequest() + " Server"}, nil
}

const PORT = "9001"

func main() {
	server := grpc.NewServer()
	pb.RegisterSearchServiceServer(server, &SearchService{})

	lis, err := net.Listen("tcp", ":"+PORT)
	...

	server.Serve(lis)
}
```

- Create the gRPC Server object. You can think of it as the server-side abstraction.
- Register SearchService (which contains the server-side methods to be called) in the gRPC Server's internal registry. When a request arrives, the server can find the service through this internal "service discovery" and hand the request over for processing.
- Create a Listener on the TCP port.
- Start the gRPC Server. It calls lis.Accept in a loop until Stop or GracefulStop is called.

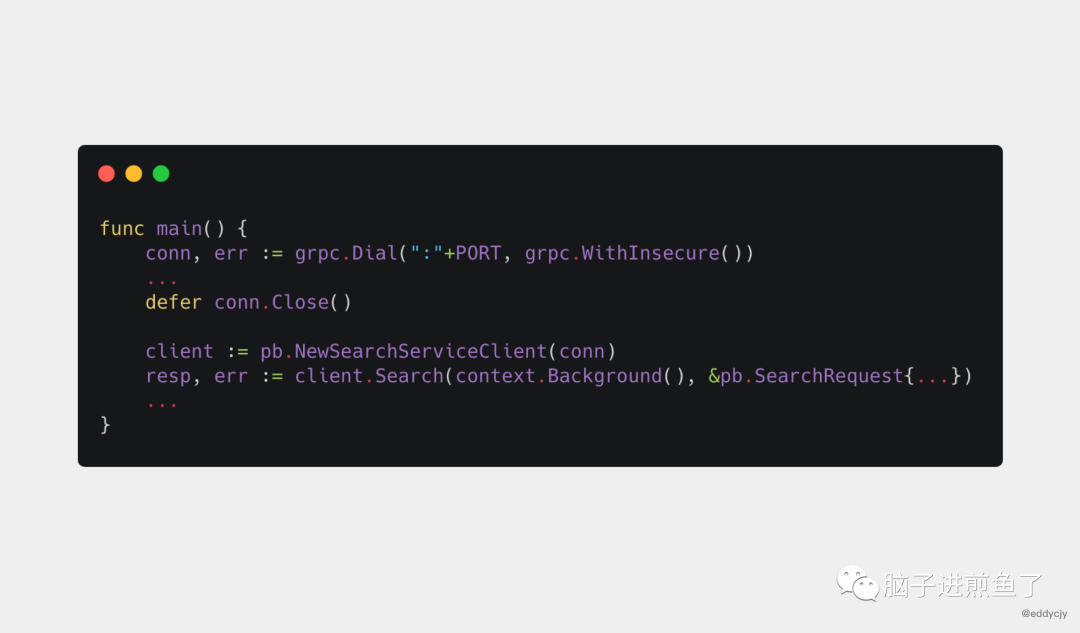

Client

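```go
func main() {
	// grpc.WithInsecure disables transport security. Newer grpc-go releases deprecate it
	// in favour of grpc.WithTransportCredentials(insecure.NewCredentials()).
	conn, err := grpc.Dial(":"+PORT, grpc.WithInsecure())
	...
	defer conn.Close()

	client := pb.NewSearchServiceClient(conn)
	resp, err := client.Search(context.Background(), &pb.SearchRequest{
		Request: "gRPC",
	})
	...
}
```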

- Create a connection handle to the given target (server).
- Create a client object for SearchService.
- Send the RPC request, block until the synchronous response arrives, and return the result.

2. Server-side streaming RPC

Server

```go
func (s *StreamService) List(r *pb.StreamRequest, stream pb.StreamService_ListServer) error {
	for n := 0; n <= 6; n++ {
		err := stream.Send(&pb.StreamResponse{
			Pt: &pb.StreamPoint{
				...
			},
		})
		if err != nil {
			return err // stop if the client has gone away
		}
	}
	return nil
}
```

Client

```go
func printLists(client pb.StreamServiceClient, r *pb.StreamRequest) error {
	stream, err := client.List(context.Background(), r)
	...

	for {
		resp, err := stream.Recv()
		if err == io.EOF {
			break
		}
		...
	}

	return nil
}
```

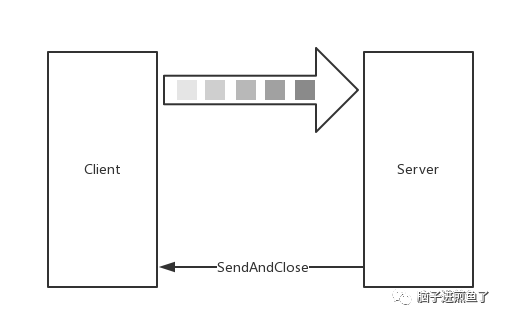
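All four call modes share one convention in their receive loops: Recv returns io.EOF once the other side has finished sending. The sketch below runs that loop against a hypothetical in-memory stream, so it needs only the standard library. The names fakeListStream and drain are illustrative and are not generated gRPC code.

```go
package main

import (
	"errors"
	"fmt"
	"io"
)

// fakeListStream is a hypothetical stand-in for pb.StreamService_ListClient:
// Recv hands out queued values, then io.EOF once the server handler is done.
type fakeListStream struct{ values []int32 }

func (s *fakeListStream) Recv() (int32, error) {
	if len(s.values) == 0 {
		return 0, io.EOF
	}
	v := s.values[0]
	s.values = s.values[1:]
	return v, nil
}

// drain follows the loop in printLists: io.EOF is the normal end of the
// stream, and any other error is a real failure.
func drain(s interface{ Recv() (int32, error) }) ([]int32, error) {
	var got []int32
	for {
		v, err := s.Recv()
		if errors.Is(err, io.EOF) {
			return got, nil
		}
		if err != nil {
			return got, err
		}
		got = append(got, v)
	}
}

func main() {
	// The List handler above sends 7 messages (n = 0..6).
	got, err := drain(&fakeListStream{values: []int32{0, 1, 2, 3, 4, 5, 6}})
	fmt.Println(got, err) // [0 1 2 3 4 5 6] <nil>
}
```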

3. Client-side streaming RPC

Server

```go
func (s *StreamService) Record(stream pb.StreamService_RecordServer) error {
	for {
		r, err := stream.Recv()
		if err == io.EOF {
			// The client has finished sending: reply once and close the stream.
			return stream.SendAndClose(&pb.StreamResponse{Pt: &pb.StreamPoint{...}})
		}
		...
	}
}
```

Client

```go
func printRecord(client pb.StreamServiceClient, r *pb.StreamRequest) error {
	stream, err := client.Record(context.Background())
	...

	for n := 0; n < 6; n++ {
		stream.Send(r)
	}

	resp, err := stream.CloseAndRecv()
	...

	return nil
}
```

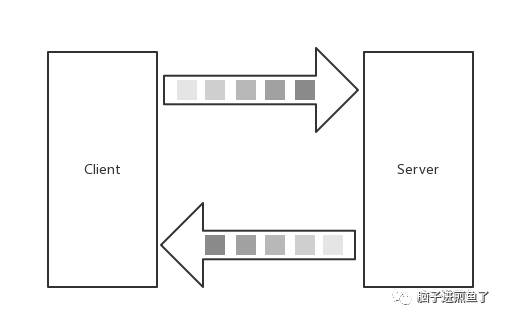

4. Bidirectional streaming RPC

Server

```go
func (s *StreamService) Route(stream pb.StreamService_RouteServer) error {
	for {
		stream.Send(&pb.StreamResponse{...})

		r, err := stream.Recv()
		if err == io.EOF {
			return nil // the client has called CloseSend
		}
		...
	}
}
```

Client

```go
func printRoute(client pb.StreamServiceClient, r *pb.StreamRequest) error {
	stream, err := client.Route(context.Background())
	...

	for n := 0; n <= 6; n++ {
		stream.Send(r)

		resp, err := stream.Recv()
		if err == io.EOF {
			break
		}
		...
	}

	stream.CloseSend()

	return nil
}
```

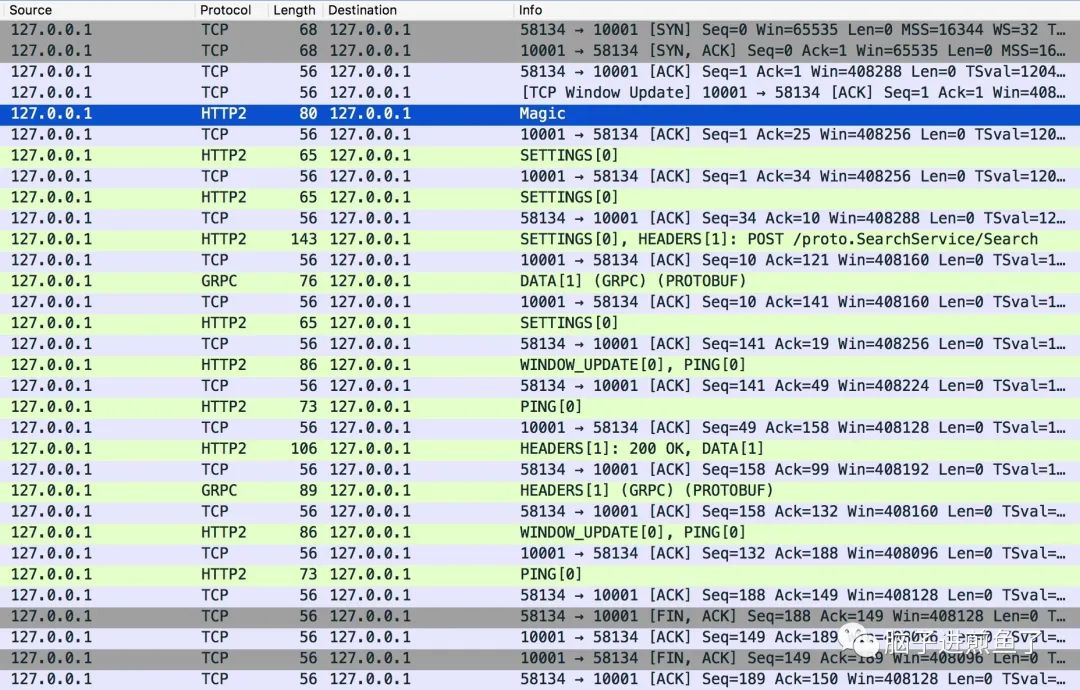

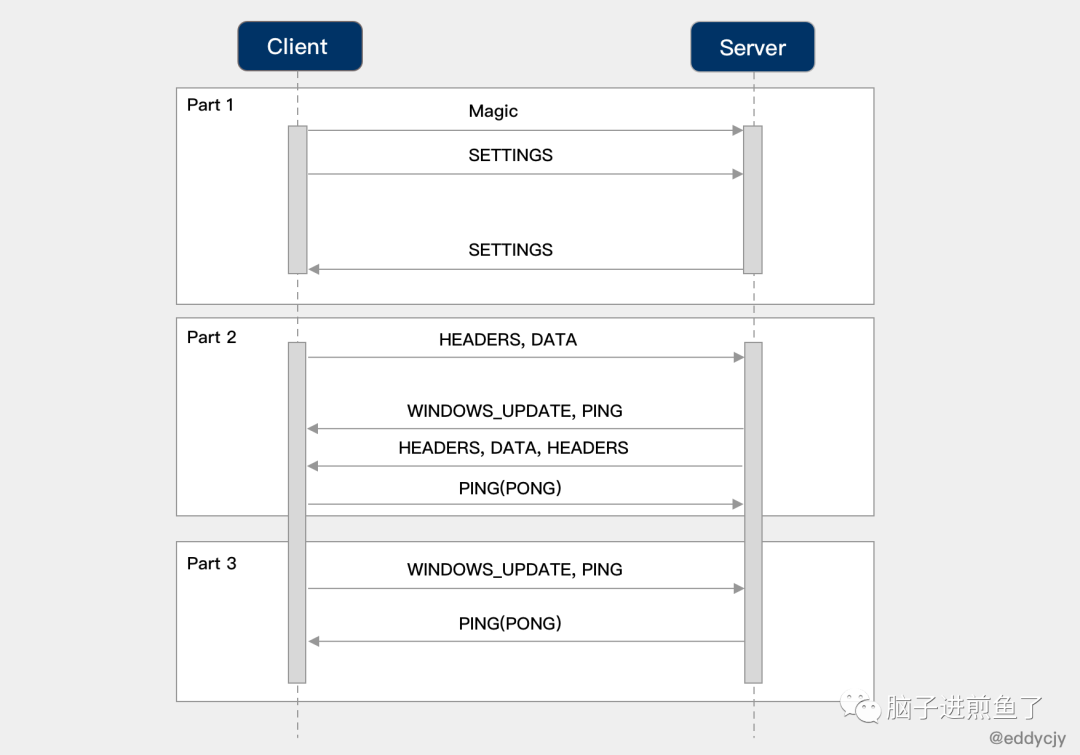

How do the client and server interact?

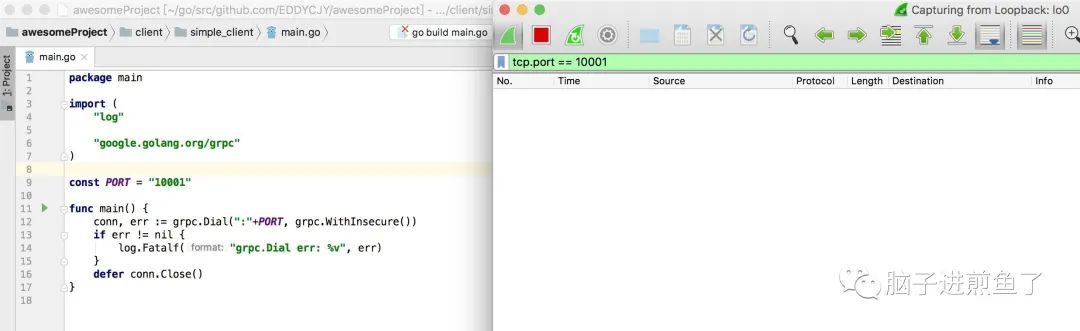

Before the analysis, we need a first impression of a gRPC call. The simplest way to get one is to capture the packets travelling between client and server and see what happens during the whole call. The captured frames are:

- Magic
- SETTINGS
- HEADERS
- DATA
- SETTINGS
- WINDOW_UPDATE
- PING
- HEADERS
- DATA
- HEADERS
- WINDOW_UPDATE
- PING

After a little tidying up, we find twelve frames that matter. Before reading on, try to guess what each one does. Making bold guesses and learning with questions in mind works better.

Frame analysis

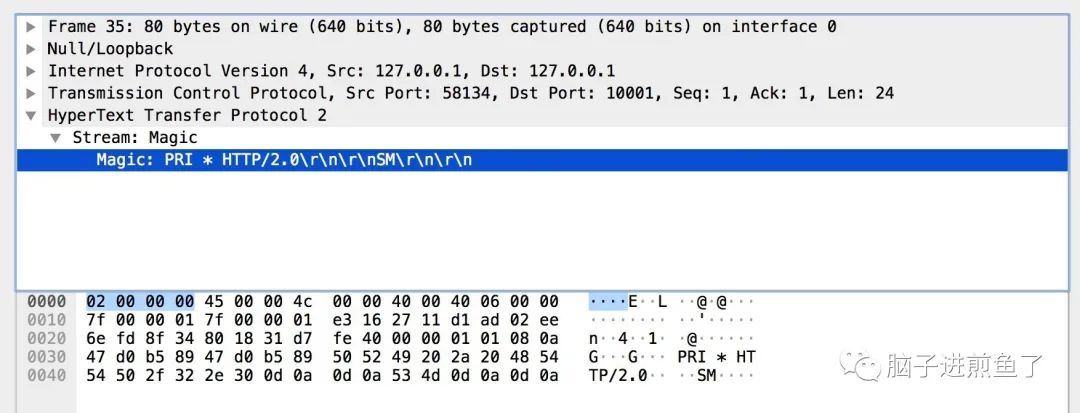

Magic

The Magic frame is part of the HTTP/2 connection preface. In HTTP/2, both endpoints must send a connection preface as final confirmation of the protocol in use and to establish the initial settings of the connection. The client and the server send different prefaces.

The Magic frame in the figure above is part of the client's preface. Its content is PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n, which confirms that HTTP/2 is being used.

SETTINGS

The SETTINGS frame sets the parameters of the connection. Its scope is the whole connection rather than a single stream.

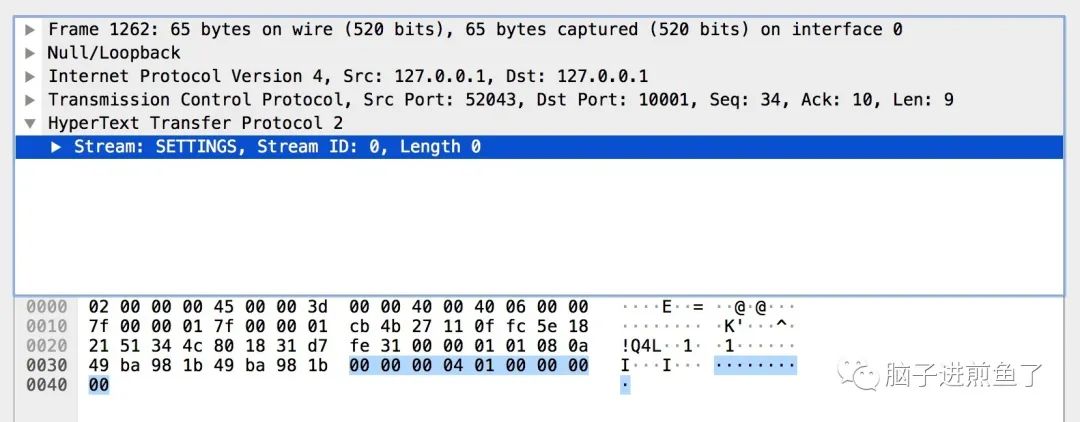

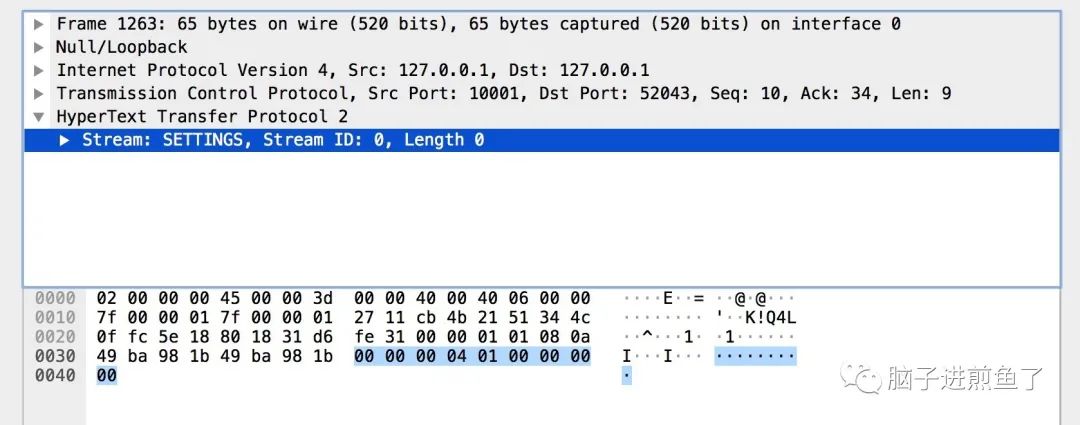

Both SETTINGS frames in the figures above are empty. Figure 1 is the client's connection preface (Magic and SETTINGS together make up the client preface). Figure 2 is the server's preface. The capture also contains several more SETTINGS frames. Why? After the prefaces are sent, each side must acknowledge the other's settings. It does so with a SETTINGS frame carrying the ACK flag.

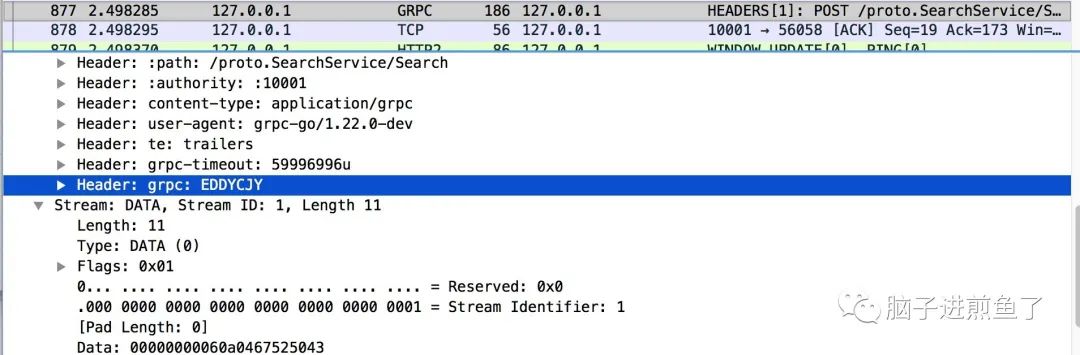

HEADERS

The HEADERS frame stores and carries HTTP header information. Several fields in it look familiar:

- :method: POST
- :scheme: http
- :path: /proto.SearchService/Search
- :authority: :10001
- content-type: application/grpc
- user-agent: grpc-go/1.20.0-dev

These look familiar because they are the basic attributes of a gRPC request. The full set is larger; the capture only shows the ones set for this call. For example, grpc-timeout and grpc-encoding are also carried here.

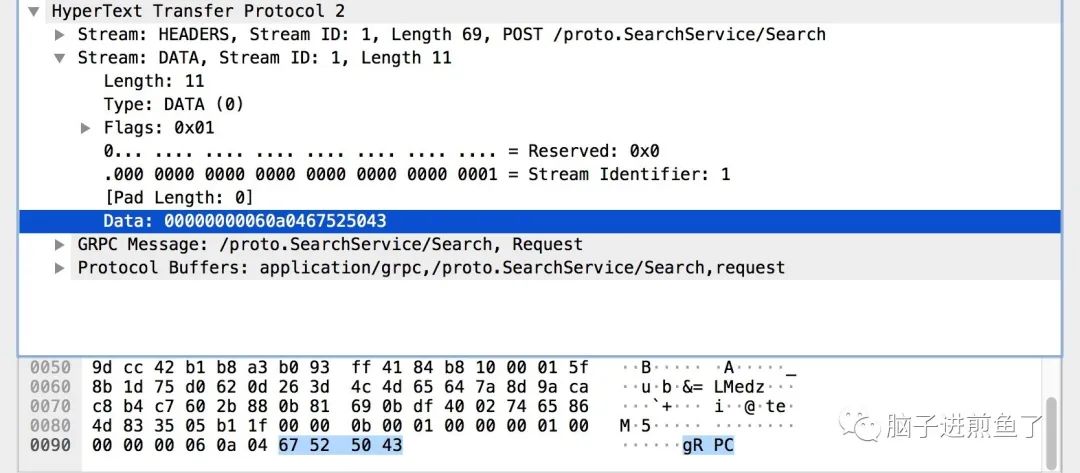

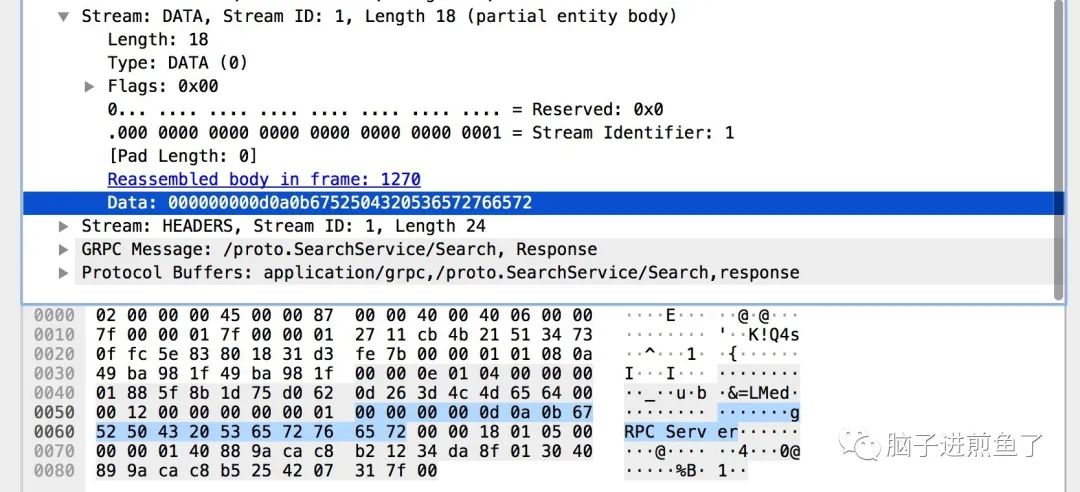

DATA

The DATA frame carries the message body. In the figure above you can clearly see our request parameter, gRPC, inside it. That is all you need to know here.

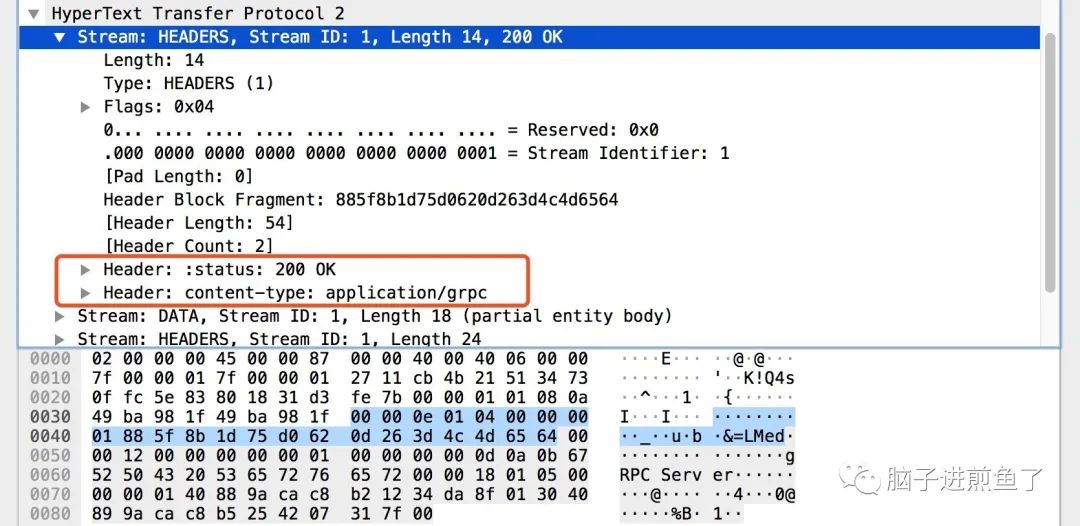

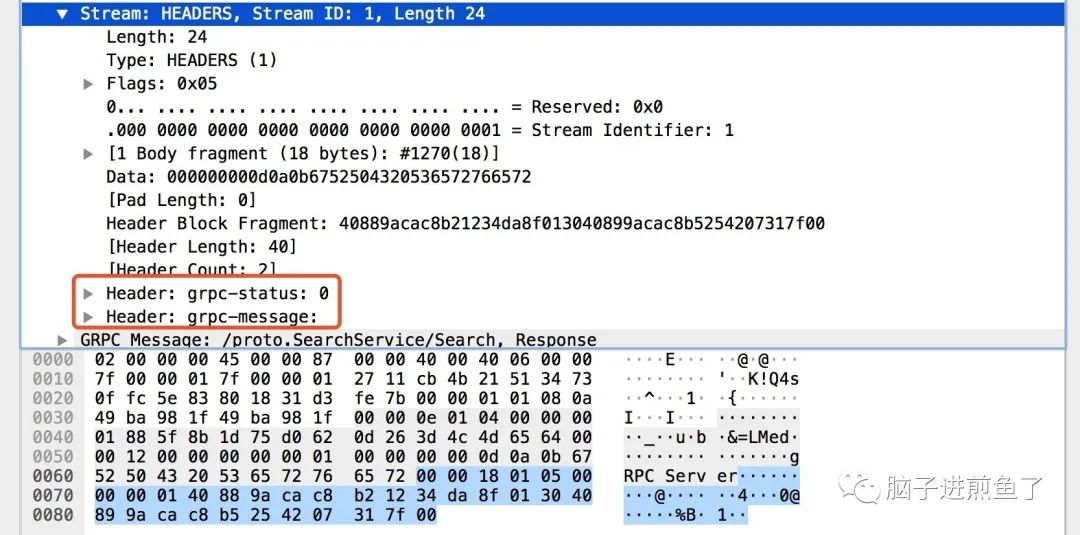

HEADERS, DATA, HEADERS

The first HEADERS frame in the figure above is simple. It tells us the HTTP response status and the content type of the response.

The DATA frame carries the response message; gRPC Server in the figure is the result returned by our RPC method.

The final HEADERS frame (the trailers) carries grpc-status and grpc-message, which report the status of our gRPC call.
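A client needs both kinds of status to decide whether a call succeeded, and the sketch below combines them. callResult is a hypothetical helper working on plain maps. It ignores details such as trailers-only responses and the percent-encoding of grpc-message.

```go
package main

import (
	"fmt"
	"strconv"
)

// callResult checks the HTTP :status from the first HEADERS frame, then the
// grpc-status / grpc-message pair from the trailing HEADERS frame.
func callResult(headers, trailers map[string]string) error {
	if headers[":status"] != "200" {
		return fmt.Errorf("HTTP status %q", headers[":status"])
	}
	code, err := strconv.Atoi(trailers["grpc-status"])
	if err != nil {
		return fmt.Errorf("missing or malformed grpc-status: %v", err)
	}
	if code != 0 { // 0 means OK
		return fmt.Errorf("grpc-status %d: %s", code, trailers["grpc-message"])
	}
	return nil
}

func main() {
	headers := map[string]string{":status": "200", "content-type": "application/grpc"}
	trailers := map[string]string{"grpc-status": "0"}
	fmt.Println(callResult(headers, trailers)) // <nil>
}
```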

Other steps

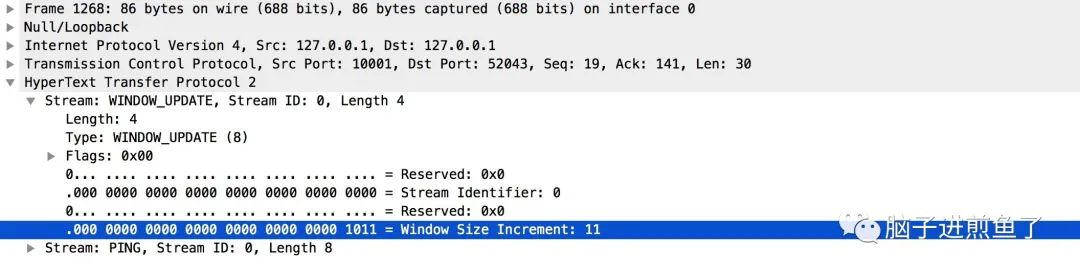

WINDOW_UPDATE

Its main job is managing the flow-control window. Usually, right after a connection opens, the server and client exchange SETTINGS frames that set the initial size of the flow-control window. By default the window is 65,535 bytes (roughly 64 KB). A WINDOW_UPDATE frame can then be sent to grow it.
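The bookkeeping behind WINDOW_UPDATE fits in a few lines. This is a simplified sketch of one sender's window. It assumes the RFC 7540 default of 65,535 bytes and the 2^31-1 upper bound, and it ignores the split between per-stream and connection-level windows as well as later SETTINGS changes.

```go
package main

import (
	"errors"
	"fmt"
)

const (
	defaultInitialWindow = 65535     // default initial window size
	maxWindow            = 1<<31 - 1 // largest legal flow-control window
)

// sendWindow models how many bytes a sender may still send.
type sendWindow struct{ avail int64 }

// consume must succeed before a DATA frame of n bytes is sent.
func (w *sendWindow) consume(n int64) error {
	if n > w.avail {
		return errors.New("window exhausted: wait for WINDOW_UPDATE")
	}
	w.avail -= n
	return nil
}

// update applies the increment carried by a WINDOW_UPDATE frame.
func (w *sendWindow) update(inc int64) error {
	if inc <= 0 {
		return errors.New("WINDOW_UPDATE increment must be positive")
	}
	if w.avail+inc > maxWindow {
		return errors.New("flow-control window overflow")
	}
	w.avail += inc
	return nil
}

func main() {
	w := &sendWindow{avail: defaultInitialWindow}
	fmt.Println(w.consume(65535)) // <nil>
	fmt.Println(w.consume(1))     // window exhausted: wait for WINDOW_UPDATE
	fmt.Println(w.update(1024))   // <nil>
	fmt.Println(w.consume(1))     // <nil>
	fmt.Println(w.avail)          // 1023
}
```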

PING/PONG

Its main purpose is to check whether the connection is still alive, and it is also commonly used to measure round-trip time. It is the familiar PING/PONG; in HTTP/2 the "PONG" is a PING frame with the ACK flag set.

Summary

- Before requests are exchanged, the client and server each send a connection preface (the client's is Magic + SETTINGS) to confirm the protocol and configuration.
- During data transfer, flow-control mechanisms such as WINDOW_UPDATE come into play.
- Additional gRPC information is carried in HEADERS frames; the actual request and response data is in DATA frames.
- The outcome of a call comes in two kinds: the HTTP status and the gRPC status.
- When the client sends a PING, the server answers with a PONG (a PING with ACK), and vice versa.

For the basics of using gRPC, see my gRPC introductory series; I hope it helps.

Under the hood

Server

How can four lines of code bring up a gRPC Server, and what happens inside? Have you ever wondered? Let's analyze it step by step.

1. Initialization

```go
// grpc.NewServer()
func NewServer(opt ...ServerOption) *Server {
	opts := defaultServerOptions
	for _, o := range opt {
		o(&opts)
	}
	s := &Server{
		lis:    make(map[net.Listener]bool),
		opts:   opts,
		conns:  make(map[io.Closer]bool),
		m:      make(map[string]*service),
		quit:   make(chan struct{}),
		done:   make(chan struct{}),
		czData: new(channelzData),
	}
	s.cv = sync.NewCond(&s.mu)
	...
	return s
}
```

This is fairly simple: it creates the grpc.Server and initializes it. The fields involved are:

- lis: the set of listeners (addresses being listened on).
- opts: server options, including credentials, interceptors and some basic configuration.
- conns: the set of client connection handles.
- m: the map from service name to service information.
- quit: the exit signal.
- done: the completion signal.
- czData: channelz data for ClientConn, addrConn and Server.
- cv: a condition variable. During graceful shutdown the server waits on it until all RPCs have finished and connections are closed.
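The first lines of NewServer follow Go's functional-options pattern: copy a default struct by value, then let each option mutate it. The sketch below reproduces that pattern with a hypothetical, trimmed-down serverOptions whose field names are illustrative. The default values are meant to mirror grpc-go's 4 MiB receive limit and 120s connection timeout, but they are not taken from its source.

```go
package main

import (
	"fmt"
	"time"
)

// serverOptions is a hypothetical stand-in for grpc-go's internal options.
type serverOptions struct {
	maxRecvMsgSize    int
	connectionTimeout time.Duration
}

// ServerOption mutates serverOptions. As in the NewServer excerpt, it is a plain func.
type ServerOption func(*serverOptions)

var defaultServerOptions = serverOptions{
	maxRecvMsgSize:    4 << 20, // 4 MiB
	connectionTimeout: 120 * time.Second,
}

// MaxRecvMsgSize is an illustrative option constructor.
func MaxRecvMsgSize(n int) ServerOption {
	return func(o *serverOptions) { o.maxRecvMsgSize = n }
}

// newOptions copies the defaults by value, then applies every option in
// order, so later options win and the defaults are never modified.
func newOptions(opt ...ServerOption) serverOptions {
	opts := defaultServerOptions
	for _, o := range opt {
		o(&opts)
	}
	return opts
}

func main() {
	o := newOptions(MaxRecvMsgSize(1 << 20))
	fmt.Println(o.maxRecvMsgSize, o.connectionTimeout) // 1048576 2m0s
	fmt.Println(defaultServerOptions.maxRecvMsgSize)   // 4194304
}
```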

2. Register

```go
pb.RegisterSearchServiceServer(server, &SearchService{})
```

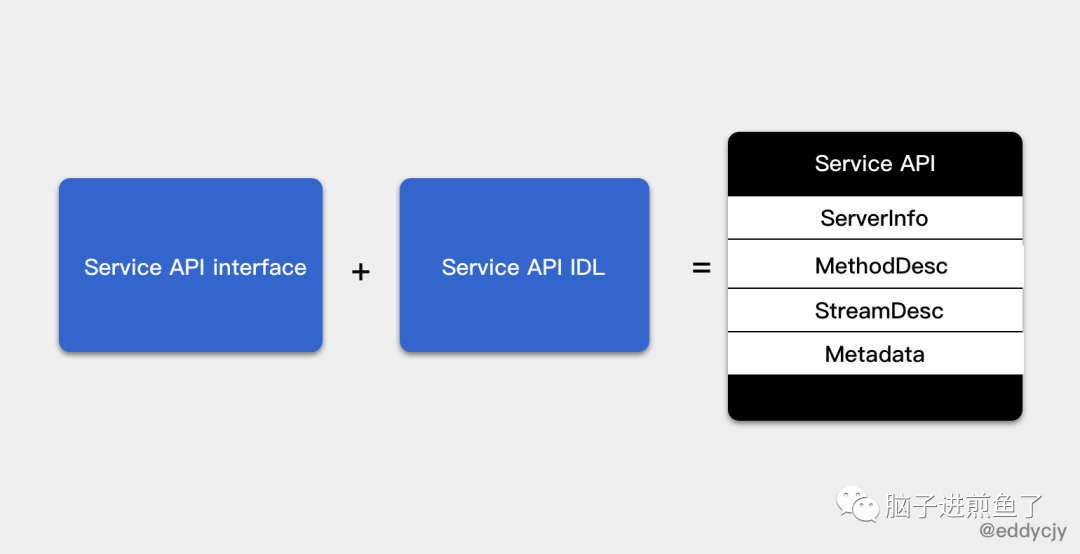

Step 1: Service API interface

```go
// search.pb.go
type SearchServiceServer interface {
	Search(context.Context, *SearchRequest) (*SearchResponse, error)
}

func RegisterSearchServiceServer(s *grpc.Server, srv SearchServiceServer) {
	s.RegisterService(&_SearchService_serviceDesc, srv)
}
```

Remember the Protobuf definitions we usually write? The generated .pb.go file defines the interface that the service implementation must satisfy. When we register with the gRPC Server, we pass in our implementation of that service. Because RegisterSearchServiceServer's srv parameter has the interface type, the compiler ensures the two are consistent.

Step 2: Service API IDL

Want to pass in something else? That won't compile: you must implement the interface methods exactly as the Protobuf defines them. So what does &_SearchService_serviceDesc do? The code is as follows:

```go
// search.pb.go
var _SearchService_serviceDesc = grpc.ServiceDesc{
	ServiceName: "proto.SearchService",
	HandlerType: (*SearchServiceServer)(nil),
	Methods: []grpc.MethodDesc{
		{
			MethodName: "Search",
			Handler:    _SearchService_Search_Handler,
		},
	},
	Streams:  []grpc.StreamDesc{},
	Metadata: "search.proto",
}
```

This is the service description, used internally to express what the service provides. The fields involved are:

- ServiceName: the service name.
- HandlerType: the service interface, used to check whether the user's implementation satisfies it.
- Methods: the set of unary methods. Note that each entry's Handler is the function that ultimately processes the RPC; it is used when the RPC method is executed.
- Streams: the set of streaming methods.
- Metadata: metadata describing the service. Here it is search.proto, the proto file that defines SearchService.

Step 3: Register Service

```go
func (s *Server) register(sd *ServiceDesc, ss interface{}) {
	...
	srv := &service{
		server: ss,
		md:     make(map[string]*MethodDesc),
		sd:     make(map[string]*StreamDesc),
		mdata:  sd.Metadata,
	}
	for i := range sd.Methods {
		d := &sd.Methods[i]
		srv.md[d.MethodName] = d
	}
	for i := range sd.Streams {
		...
	}
	s.m[sd.ServiceName] = srv
}
```

In the last step, the service implementation and its description are registered into the server's internal service map for use in later calls. The fields involved are:

- server: the service implementation.
- md: the set of unary RPC methods.
- sd: the set of streaming RPC methods.
- mdata: the metadata.
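Put together, registration builds a two-level lookup: service name, then method name. When a request arrives, its :path (for example /proto.SearchService/Search, seen in the HEADERS frame earlier) is split on the last slash to find the handler. The sketch below is a simplified, hypothetical model of that routing. Its handler takes a string instead of the real decoder and interceptor arguments.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// handler is a simplified stand-in for grpc.MethodDesc.Handler.
type handler func(req string) (string, error)

// service mirrors the per-service entry kept in the server's m map.
type service struct {
	md map[string]handler // unary methods by name
}

type registry struct {
	m map[string]*service // keyed by full service name
}

func (r *registry) register(serviceName string, methods map[string]handler) {
	r.m[serviceName] = &service{md: methods}
}

// dispatch splits "/package.Service/Method" on the last slash and calls the handler.
func (r *registry) dispatch(path, req string) (string, error) {
	path = strings.TrimPrefix(path, "/")
	i := strings.LastIndex(path, "/")
	if i < 0 {
		return "", fmt.Errorf("malformed method name %q", path)
	}
	srv, ok := r.m[path[:i]]
	if !ok {
		return "", errors.New("unknown service " + path[:i])
	}
	h, ok := srv.md[path[i+1:]]
	if !ok {
		return "", errors.New("unknown method " + path[i+1:])
	}
	return h(req)
}

func main() {
	r := &registry{m: make(map[string]*service)}
	r.register("proto.SearchService", map[string]handler{
		"Search": func(req string) (string, error) { return req + " Server", nil },
	})
	fmt.Println(r.dispatch("/proto.SearchService/Search", "gRPC")) // gRPC Server <nil>
}
```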

Summary

This chapter covered what the gRPC Server sets up and registers before it starts. It looks simple, but all of it is preparation for handling real requests later.

3. Listen

Next comes the most important stage of the whole process, and the one people care about most: listening and handling connections. The core code is as follows:

```go
func (s *Server) Serve(lis net.Listener) error {
	...
	var tempDelay time.Duration
	for {
		rawConn, err := lis.Accept()
		if err != nil {
			if ne, ok := err.(interface {
				Temporary() bool
			}); ok && ne.Temporary() {
				if tempDelay == 0 {
					tempDelay = 5 * time.Millisecond
				} else {
					tempDelay *= 2
				}
				if max := 1 * time.Second; tempDelay > max {
					tempDelay = max
				}
				...
				timer := time.NewTimer(tempDelay)
				select {
				case <-timer.C:
				case <-s.quit:
					timer.Stop()
					return nil
				}
				continue
			}
			...
			return err
		}
		tempDelay = 0
		s.serveWG.Add(1)
		go func() {
			s.handleRawConn(rawConn)
			s.serveWG.Done()
		}()
	}
}
```

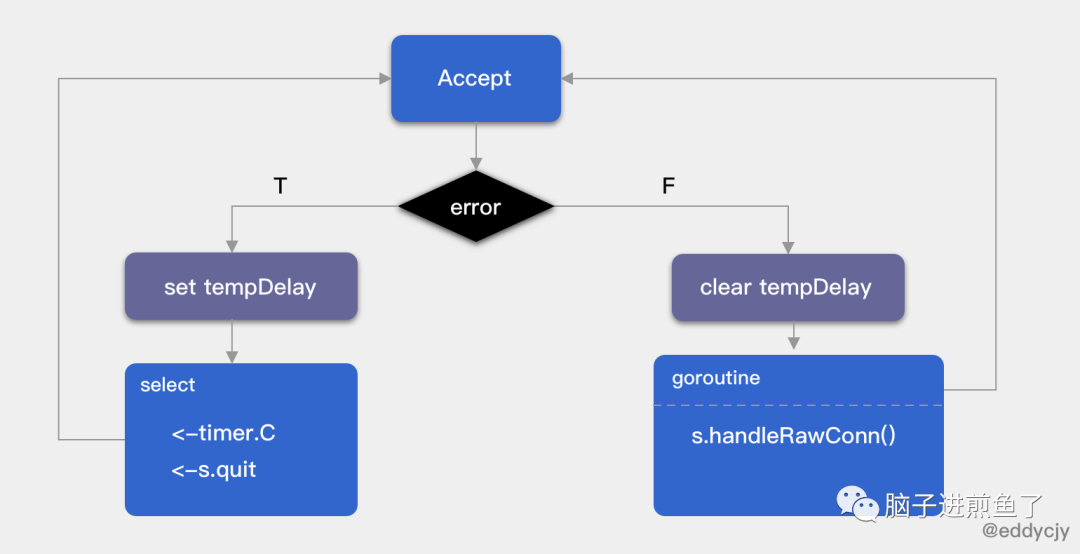

Serve works with whatever net.Listener is passed in, which gives it a lot of flexibility and extensibility. In a gRPC Server the most common choice is a TCP listener, which yields TCPConn connections. The processing logic is as follows:

- It loops, taking connections with lis.Accept. If no connection is waiting in the queue, Accept blocks.
- If lis.Accept fails with a temporary error, it sleeps before retrying. The first failure sleeps 5ms, and each consecutive failure doubles the sleep, up to a cap of 1s. After sleeping, it tries to accept the next connection.
- If lis.Accept succeeds, the sleep duration is reset and a new goroutine runs handleRawConn to process the new connection. This is where the saying "each request is handled by its own goroutine" comes from.
- The loop also covers the server exiting, mainly hard shutdown (Stop) and graceful shutdown (GracefulStop).

Client

1. Create a connection (Dial)

```go
// grpc.Dial(":"+PORT, grpc.WithInsecure())
func DialContext(ctx context.Context, target string, opts ...DialOption) (conn *ClientConn, err error) {
	cc := &ClientConn{
		target:            target,
		csMgr:             &connectivityStateManager{},
		conns:             make(map[*addrConn]struct{}),
		dopts:             defaultDialOptions(),
		blockingpicker:    newPickerWrapper(),
		czData:            new(channelzData),
		firstResolveEvent: grpcsync.NewEvent(),
	}
	...
	chainUnaryClientInterceptors(cc)
	chainStreamClientInterceptors(cc)
	...
}
```

grpc.Dial is a wrapper around grpc.DialContext; the only difference is that ctx is set to context.Background(). Its main job is to create a client connection to the given target. It is responsible for:

- Initializing the ClientConn
- Initializing the load-balancing configuration
- Initializing channelz
- Initializing retry rules and the client unary/streaming interceptors
- Initializing basic protocol-stack (transport) settings
- Timeout control via the given context
- Initializing the resolver and resolving the target address
- Creating the connection to the server

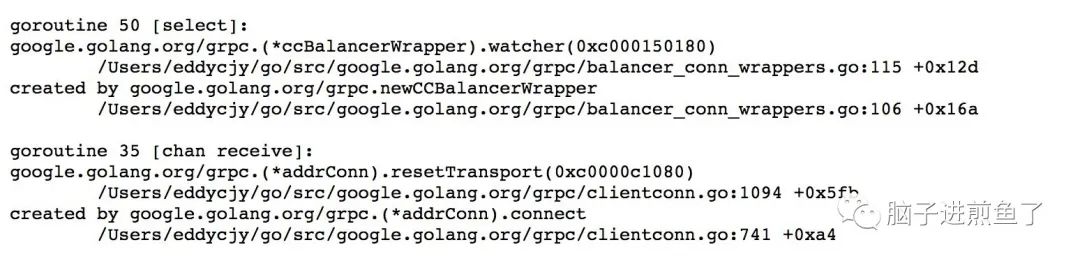

Not connected yet?

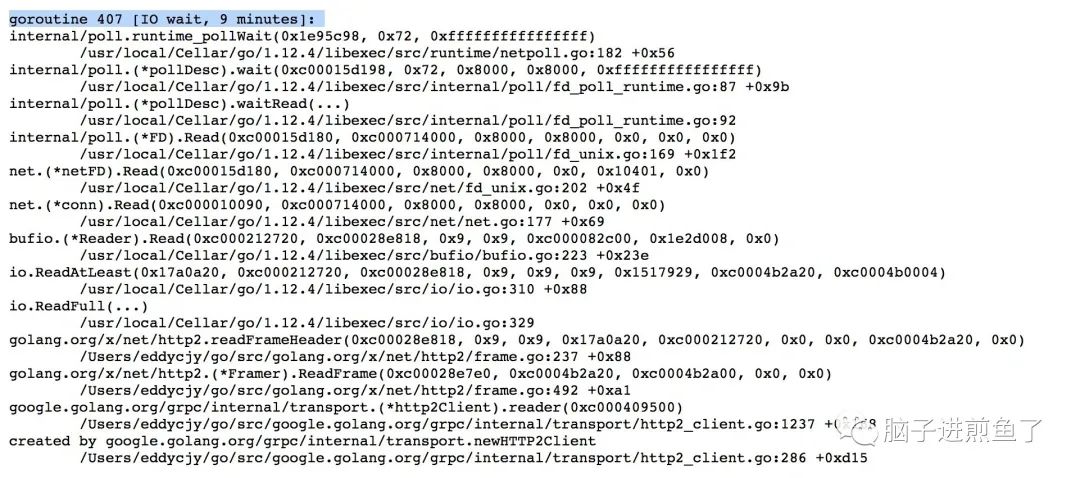

I have heard people say that once grpc.Dial returns, the client has already established a connection with the server. Is that right? Let's take a bird's-eye view of the running goroutines.

The underlying source code shows several core methods that keep waiting for and handling signals:

```go
func (ac *addrConn) connect()
func (ac *addrConn) resetTransport()
func (ac *addrConn) createTransport(addr resolver.Address, copts transport.ConnectOptions, connectDeadline time.Time)
func (ac *addrConn) getReadyTransport()
```

Here we focus on resetTransport, which shows up in the goroutine view, to see what it does. The core code is as follows:

```go
func (ac *addrConn) resetTransport() {
	for i := 0; ; i++ {
		if ac.state == connectivity.Shutdown {
			return
		}
		...
		connectDeadline := time.Now().Add(dialDuration)
		ac.updateConnectivityState(connectivity.Connecting)
		newTr, addr, reconnect, err := ac.tryAllAddrs(addrs, connectDeadline)
		if err != nil {
			if ac.state == connectivity.Shutdown {
				return
			}
			ac.updateConnectivityState(connectivity.TransientFailure)
			timer := time.NewTimer(backoffFor)
			select {
			case <-timer.C:
				...
			}
			continue
		}

		if ac.state == connectivity.Shutdown {
			newTr.Close()
			return
		}
		...
		if !healthcheckManagingState {
			ac.updateConnectivityState(connectivity.Ready)
		}
		...
		if ac.state == connectivity.Shutdown {
			return
		}
		ac.updateConnectivityState(connectivity.TransientFailure)
	}
}
```

This method keeps trying to create a connection. If it succeeds, it stops; otherwise it keeps retrying according to the backoff algorithm until it succeeds. In short, calling DialContext on its own establishes the connection asynchronously. The connection does not take effect immediately: it sits in the Connecting state and becomes usable only once it reaches the Ready state.

Really connected?

The packet capture shows no packets yet, so is it really connected? This is partly a question of wording, and we should be as precise as possible. If you want DialContext to actually connect to the server before it returns, pass the WithBlock option. The call then blocks, and it returns once the connection reaches the Ready state (handshake succeeded).

2. Instantiate the service API

```go
type SearchServiceClient interface {
	Search(ctx context.Context, in *SearchRequest, opts ...grpc.CallOption) (*SearchResponse, error)
}

type searchServiceClient struct {
	cc *grpc.ClientConn
}

func NewSearchServiceClient(cc *grpc.ClientConn) SearchServiceClient {
	return &searchServiceClient{cc}
}
```

This instantiates the generated Service API client, which is straightforward.

3. Call

```go
// search.pb.go
func (c *searchServiceClient) Search(ctx context.Context, in *SearchRequest, opts ...grpc.CallOption) (*SearchResponse, error) {
	out := new(SearchResponse)
	err := c.cc.Invoke(ctx, "/proto.SearchService/Search", in, out, opts...)
	if err != nil {
		return nil, err
	}
	return out, nil
}
```

The RPC method generated by protoc is more like a packing box: it puts in what is needed, but the actual work is done by ClientConn.Invoke, which calls invoke:

```go
func invoke(ctx context.Context, method string, req, reply interface{}, cc *ClientConn, opts ...CallOption) error {
	cs, err := newClientStream(ctx, unaryStreamDesc, cc, method, opts...)
	if err != nil {
		return err
	}
	if err := cs.SendMsg(req); err != nil {
		return err
	}
	return cs.RecvMsg(reply)
}
```

At a high level, focus on three calls:

- newClientStream: obtains a transport and wraps it in a ClientStream. Load balancing, timeout control, encoding and stream handling here work much like their server-side counterparts.
- cs.SendMsg: sends the RPC request, but does not wait for the response.
- cs.RecvMsg: blocks waiting for the RPC method's response.

Connection

```go
// clientconn.go
func (cc *ClientConn) getTransport(ctx context.Context, failfast bool, method string) (transport.ClientTransport, func(balancer.DoneInfo), error) {
	t, done, err := cc.blockingpicker.pick(ctx, failfast, balancer.PickOptions{
		FullMethodName: method,
	})
	if err != nil {
		return nil, nil, toRPCErr(err)
	}
	return t, done, nil
}
```

In newClientStream, getTransport obtains a ClientTransport (the transport layer abstracts connections as ClientTransport and ServerTransport). In effect it picks a connection that will carry the subsequent RPC.

4. Close the connection

```go
// conn.Close()
func (cc *ClientConn) Close() error {
	defer cc.cancel()
	...
	cc.csMgr.updateState(connectivity.Shutdown)
	...
	cc.blockingpicker.close()
	if rWrapper != nil {
		rWrapper.close()
	}
	if bWrapper != nil {
		bWrapper.close()
	}
	for ac := range conns {
		ac.tearDown(ErrClientConnClosing)
	}
	if channelz.IsOn() {
		...
		channelz.AddTraceEvent(cc.channelzID, ted)
		channelz.RemoveEntry(cc.channelzID)
	}
	return nil
}
```

This method cancels the ClientConn's context and closes all underlying transports. Specifically, it:

- Cancels the context
- Clears and closes the client connections
- Closes the resolver wrapper
- Closes the balancer wrapper
- Adds a channelz trace event
- Removes the channel's channelz entry

Q&A

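1. How is gRPC metadata transmitted?

As the packet analysis above showed, metadata travels as header fields in HEADERS frames; trailing metadata goes in the final HEADERS frame (the trailers).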

2. Does calling grpc.Dial really connect to the server?

Yes, but asynchronously: the connection starts in the Connecting state. If you set the grpc.WithBlock option, the call blocks until the handshake succeeds. Also note that grpc.Dial uses context.Background(), so there is no ctx timeout to bound a WithBlock dial. Use DialContext with a deadline if you need one.

3. Will a ClientConn that is never closed leak?

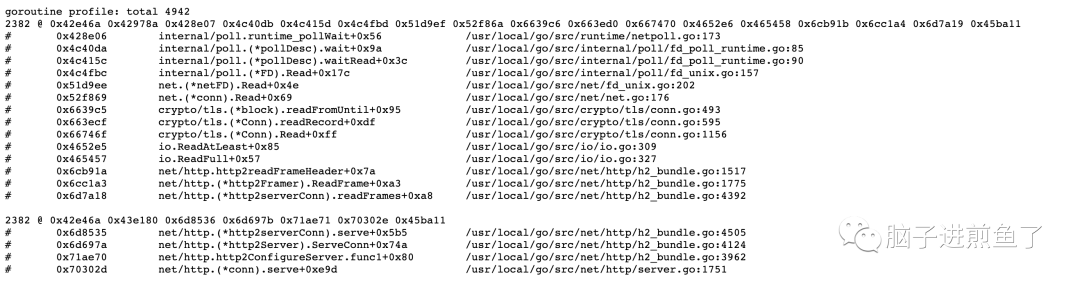

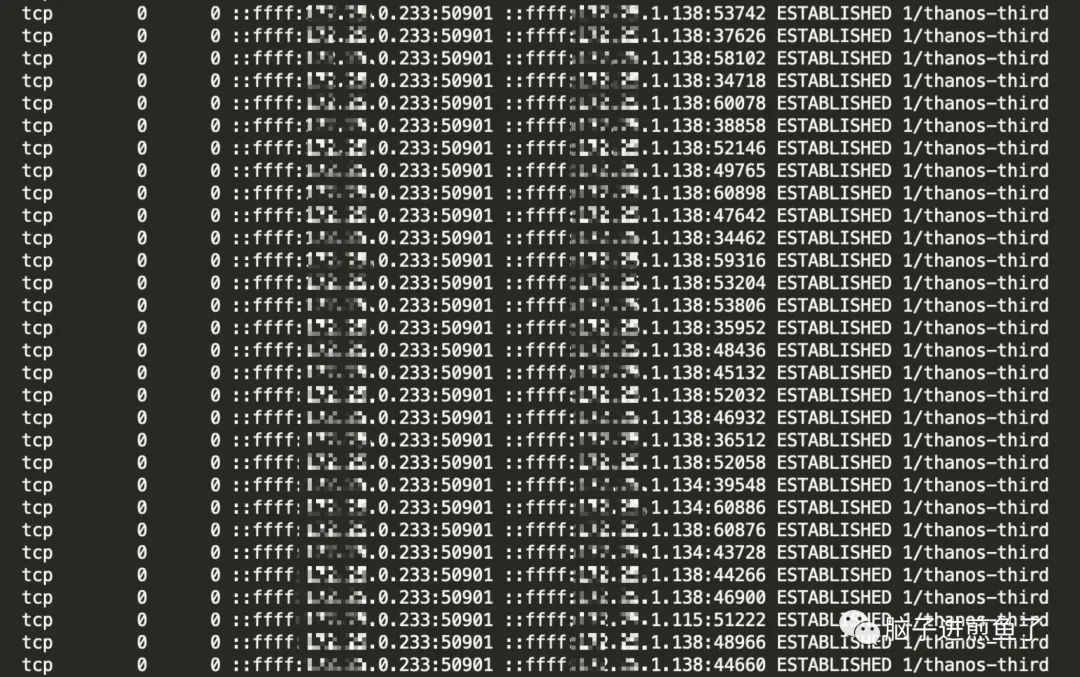

Yes. If your client is a short-lived process, its resources are reclaimed when it exits. In a long-running process, however, forgetting to call Close causes a leak. It shows up in three places:

3.1. Client

3.2. Server

3.3. TCP

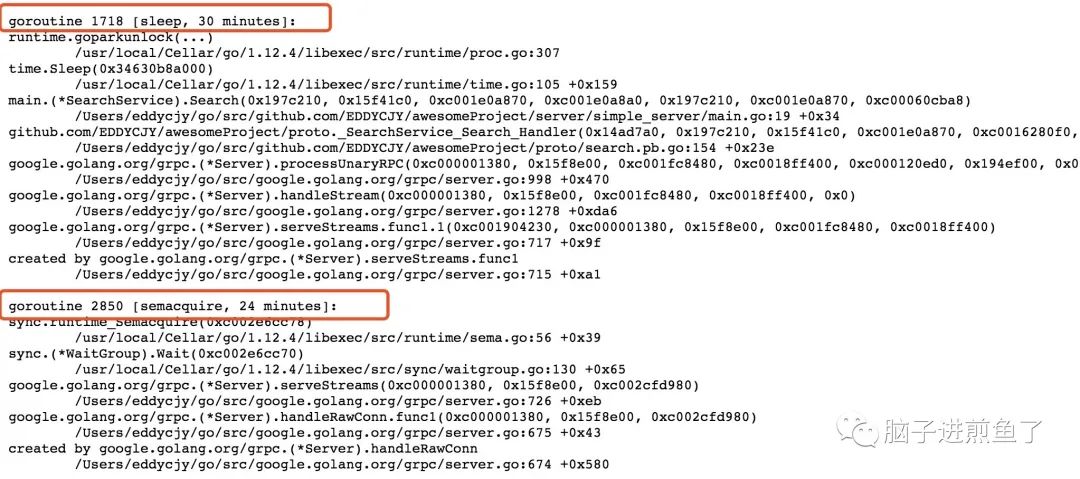

4. What happens if calls have no timeout control?

Nothing goes wrong in the short term, but leaked resources keep accumulating, and eventually the service can no longer respond.

5. Why doesn't passing multiple interceptors work by default?

```go
func chainUnaryClientInterceptors(cc *ClientConn) {
	interceptors := cc.dopts.chainUnaryInts
	if cc.dopts.unaryInt != nil {
		interceptors = append([]UnaryClientInterceptor{cc.dopts.unaryInt}, interceptors...)
	}
	var chainedInt UnaryClientInterceptor
	if len(interceptors) == 0 {
		chainedInt = nil
	} else if len(interceptors) == 1 {
		chainedInt = interceptors[0]
	} else {
		chainedInt = func(ctx context.Context, method string, req, reply interface{}, cc *ClientConn, invoker UnaryInvoker, opts ...CallOption) error {
			return interceptors[0](ctx, method, req, reply, cc, getChainUnaryInvoker(interceptors, 0, invoker), opts...)
		}
	}
	cc.dopts.unaryInt = chainedInt
}
```

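grpc.WithUnaryInterceptor stores a single interceptor in dopts.unaryInt. Passing it several times just overwrites the previous value, so only the last one takes effect. The code above shows that this version of grpc-go can also chain interceptors through dopts.chainUnaryInts (filled by WithChainUnaryInterceptor), with unaryInt placed first. The conclusion: with the plain option, passing several interceptors is allowed but pointless.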

6. What if I need multiple interceptors?

You can use the chaining helpers from go-grpc-middleware. On the client side these are grpc_middleware.ChainUnaryClient and ChainStreamClient; on the server side, ChainUnaryServer and ChainStreamServer. Pass the result to grpc.WithUnaryInterceptor or grpc.UnaryInterceptor. It is convenient and worry-free.

Just using it is not enough, though; let's dig into how it is implemented. The core code is as follows:

```go
func ChainUnaryClient(interceptors ...grpc.UnaryClientInterceptor) grpc.UnaryClientInterceptor {
	n := len(interceptors)
	if n > 1 {
		lastI := n - 1
		return func(ctx context.Context, method string, req, reply interface{}, cc *grpc.ClientConn, invoker grpc.UnaryInvoker, opts ...grpc.CallOption) error {
			var (
				chainHandler grpc.UnaryInvoker
				curI         int
			)
			chainHandler = func(currentCtx context.Context, currentMethod string, currentReq, currentRepl interface{}, currentConn *grpc.ClientConn, currentOpts ...grpc.CallOption) error {
				if curI == lastI {
					return invoker(currentCtx, currentMethod, currentReq, currentRepl, currentConn, currentOpts...)
				}
				curI++
				err := interceptors[curI](currentCtx, currentMethod, currentReq, currentRepl, currentConn, chainHandler, currentOpts...)
				curI--
				return err
			}
			return interceptors[0](ctx, method, req, reply, cc, chainHandler, opts...)
		}
	}
	...
}
```

When there is more than one interceptor, execution starts with interceptors[0]. chainHandler then recurses from interceptors[1] onwards: each interceptors[i] runs in turn, and the last one finally calls the real invoker. People often ask what order interceptors run in. Can you work it out from this code?

7. What goes wrong if ClientConns are created frequently?

We can check this in reverse: what happens if we don't share the ClientConn?

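```go
func BenchmarkSearch(b *testing.B) {
	for i := 0; i < b.N; i++ {
		// A new ClientConn per iteration that is never closed: the anti-pattern under test.
		conn, err := GetClientConn()
		if err != nil {
			b.Fatalf("GetClientConn err: %v", err) // conn is unusable, so stop here
		}

		_, err = Search(context.Background(), conn)
		if err != nil {
			b.Errorf("Search err: %v", err)
		}
	}
}
```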

Output:

```text
... connection error: desc = "transport: Error while dialing dial tcp :10001: socket: too many open files"
... connection error: desc = "transport: Error while dialing dial tcp :10001: socket: too many open files"
... connection error: desc = "transport: Error while dialing dial tcp :10001: socket: too many open files"
... connection error: desc = "transport: Error while dialing dial tcp :10001: socket: too many open files"
FAIL
exit status 1
```

When your application creates and uses ClientConns at high frequency, it may exhaust the system's file handles. In that case, change how the application creates and uses ClientConns (for example, reuse one), or pool them. For pooling, see the grpc-go-pool project.
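One way to avoid dialing per request is to keep one ClientConn per target and share it. A ClientConn is safe for concurrent use and multiplexes RPCs over HTTP/2, so sharing is safe. The sketch below is a minimal cache. It assumes a hypothetical conn type and dial function in place of *grpc.ClientConn and grpc.Dial, and it is not the grpc-go-pool project's API.

```go
package main

import (
	"fmt"
	"sync"
)

// conn is a hypothetical stand-in for *grpc.ClientConn.
type conn struct{ target string }

// connCache keeps one shared connection per target.
type connCache struct {
	mu    sync.Mutex
	conns map[string]*conn
	dial  func(target string) (*conn, error) // stand-in for grpc.Dial
	dials int
}

// get returns the cached connection for target, dialing only on first use.
func (c *connCache) get(target string) (*conn, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if cc, ok := c.conns[target]; ok {
		return cc, nil
	}
	cc, err := c.dial(target)
	if err != nil {
		return nil, err // failures are not cached, so the next call retries
	}
	c.dials++
	c.conns[target] = cc
	return cc, nil
}

func (c *connCache) dialCount() int {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.dials
}

func main() {
	cache := &connCache{
		conns: make(map[string]*conn),
		dial:  func(target string) (*conn, error) { return &conn{target: target}, nil },
	}
	var wg sync.WaitGroup
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			if _, err := cache.get("localhost:9001"); err != nil {
				fmt.Println(err)
			}
		}()
	}
	wg.Wait()
	fmt.Println(cache.dialCount()) // 1
}
```

Holding the mutex while dialing is acceptable here because a non-blocking grpc.Dial returns quickly. A real cache would also close every cached connection on shutdown.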

8. Does the client retry by default after a request fails?

The connection keeps retrying until the context is cancelled. Reconnection timing follows a backoff algorithm, with a default maximum interval of 120s.

9. Why use HTTP/2 as the transport protocol?

Many clients have to reach the network through an HTTP proxy. Because gRPC is implemented entirely on HTTP/2, any proxy that supports HTTP/2 can forward gRPC traffic transparently. Beyond that, reverse proxies that handle load balancing, access control and so on work seamlessly with gRPC. This is far more sensible than Thrift's design around its own wire protocol. (@ctiller, @Teng Yifei)

10. Does gRPC load balancing have problems on Kubernetes?

gRPC's RPC protocol is built on the HTTP/2 standard. A major feature of HTTP/2 is that it does not open a new connection for every request; it reuses the existing one.

As a result, kube-proxy balances load only when the connection is established. Every later RPC reuses that connection, so in practice all subsequent RPCs go to the same backend.

Note: this applies when load balancing through a Kubernetes Service.
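A common remedy is to balance per RPC on the client side instead of per connection: resolve every backend address (for example through a headless Service) and rotate through them. grpc-go ships a round_robin balancer for this. The sketch below only illustrates the picking logic with hypothetical addresses and is not that balancer's code.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// roundRobin hands out backend addresses in turn; safe for concurrent use.
type roundRobin struct {
	next  uint64 // first field keeps 64-bit atomics aligned on 32-bit platforms
	addrs []string
}

func (r *roundRobin) pick() string {
	n := atomic.AddUint64(&r.next, 1) - 1
	return r.addrs[n%uint64(len(r.addrs))]
}

func main() {
	// Hypothetical pod addresses, e.g. as returned by a headless Service.
	rr := &roundRobin{addrs: []string{"10.0.0.1:9001", "10.0.0.2:9001", "10.0.0.3:9001"}}
	for i := 0; i < 4; i++ {
		fmt.Println(rr.pick())
	}
	// 10.0.0.1:9001, 10.0.0.2:9001, 10.0.0.3:9001, then 10.0.0.1:9001 again
}
```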

Summary

- gRPC is based on HTTP/2 + Protobuf.
- gRPC has four call modes: unary, server-side streaming, client-side streaming and bidirectional streaming.
- gRPC's additional information is carried in HEADERS frames, and the data in DATA frames.
- By default, grpc.Dial establishes the connection asynchronously, and the state at that point is Connecting.
- If the client needs to connect synchronously, pass WithBlock(); when it returns, the state is Ready.
- Server listening is a loop waiting for connections. On a temporary Accept error it sleeps, for at most 1s; each new connection is handled in a new goroutine.
- If a grpc.ClientConn is never closed, goroutines and memory leak.
- If internal or external calls have no timeout control, leaks accumulate and the client keeps retrying.
- In some scenarios, creating grpc.ClientConn too frequently affects calls (for example, by exhausting file handles).
- If interceptors are not chained (for example with go-grpc-middleware), they overwrite each other.
- Choose gRPC's load-balancing approach with care.

