Preface

Paper: https://arxiv.org/pdf/1708.05031.pdf

Code: https://github.com/hexiangnan/neural_collaborative_filtering

Motivation

This is a fairly early paper. Recommendation systems often use the dot product of two vectors to model the interaction between them.

Why the dot product: it is fast to compute, so it is often used in the coarse-ranking (pre-ranking) stage. If both vectors are L2-normalized, their dot product equals the cosine similarity of the two vectors. However, many recommendation system implementations do not L2-normalize the two vectors beforehand. The most reasonable explanation I can think of is that the dot product is usually followed by an FC layer for classification or dimensionality reduction. Perhaps the FC layer can partly take over the role of L2 normalization, which would make explicit normalization unnecessary.
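To make the normalization point concrete, here is a small pure-Python check. The toy vectors are made up for illustration: the raw dot product depends on the vectors' lengths, while the dot product of the L2-normalized vectors equals their cosine similarity.

```python
import math


def l2_normalize(v):
    # Divide each component by the vector's L2 norm.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


user = [3.0, 4.0, 0.0]  # toy user vector, L2 norm 5
item = [1.0, 2.0, 2.0]  # toy item vector, L2 norm 3

raw = dot(user, item)                                     # depends on the vector lengths
normalized = dot(l2_normalize(user), l2_normalize(item))  # same value as cosine similarity
print(raw, round(normalized, 4), round(cosine_similarity(user, item), 4))
# prints: 11.0 0.7333 0.7333
```

Scaling `user` by any positive factor changes `raw` but leaves `normalized` unchanged, which is exactly what normalization buys you.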

The authors propose using a multi-layer perceptron (stacked linear layers) to learn the interaction between user and item, arguing that a deeper neural network can model user-item interactions better than a simple dot product.

Structure

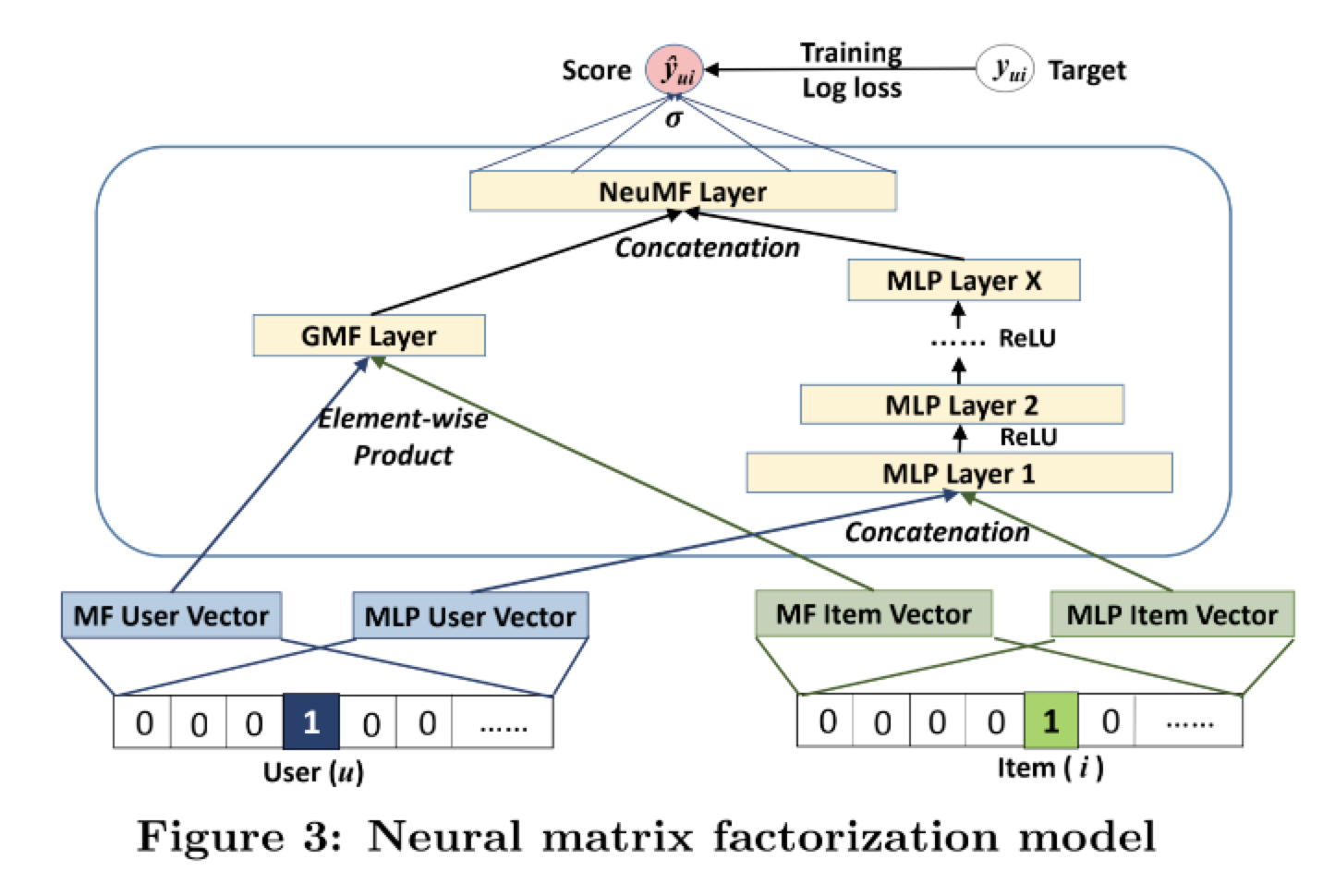

The structure of this model is simple and clear. It is split into a left and a right branch, and the two branches can be fully independent. First, the user and item inputs are one-hot features. torch.nn.Embedding maps each one-hot feature to a dense vector of a fixed dimension; each embedding row corresponds directly to one position of the one-hot vector.
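A quick way to see that an embedding layer is just a one-hot vector multiplied by a weight matrix is to compute it both ways. This is a sketch with arbitrary, hypothetical sizes (`num_users=5`, `emb_dim=4`), using `torch.nn.Embedding` and `torch.nn.functional.one_hot`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
num_users, emb_dim = 5, 4  # hypothetical sizes for illustration
user_embedding = nn.Embedding(num_users, emb_dim)

user_ids = torch.tensor([0, 3, 3])  # a batch of user ids

# Route 1: the usual embedding lookup (selects rows of the weight matrix).
looked_up = user_embedding(user_ids)  # [3, 4]

# Route 2: explicit one-hot vectors times the embedding weight matrix.
one_hot = F.one_hot(user_ids, num_classes=num_users).float()  # [3, 5]
projected = one_hot @ user_embedding.weight                   # [3, 4]

print(torch.allclose(looked_up, projected))  # True
```

The lookup is what you use in practice, since it avoids materializing the sparse one-hot matrix.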

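Next, MF User Vector and MLP User Vector are two features obtained by passing the user features through two different FC layers; MF Item Vector and MLP Item Vector on the item side are obtained the same way. Using different FC layers makes the left and right branches completely independent. In the paper, this lets GMF and MLP learn separate embeddings (possibly of different sizes), which gives the fused model more flexibility and allows each branch to be pre-trained on its own before the two are combined.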
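In the paper itself, each branch gets its own embedding tables rather than separate FC layers on shared features. The following is a minimal sketch of that idea; the class name `SeparateEmbeddings` and the sizes `gmf_dim=8`, `mlp_dim=32` are hypothetical choices for illustration, not values from the paper or from the demo below.

```python
import torch
import torch.nn as nn


class SeparateEmbeddings(nn.Module):
    """Hypothetical module: GMF and MLP each own their user/item embedding tables."""

    def __init__(self, num_users, num_items, gmf_dim=8, mlp_dim=32):
        super().__init__()
        # No parameters are shared, so the two branches can even use different sizes.
        self.gmf_user = nn.Embedding(num_users, gmf_dim)
        self.gmf_item = nn.Embedding(num_items, gmf_dim)
        self.mlp_user = nn.Embedding(num_users, mlp_dim)
        self.mlp_item = nn.Embedding(num_items, mlp_dim)

    def forward(self, user_ids, item_ids):
        # GMF input: element-wise product of the GMF embeddings -> [batch, gmf_dim]
        gmf_vec = self.gmf_user(user_ids) * self.gmf_item(item_ids)
        # MLP input: concatenation of the MLP embeddings -> [batch, 2 * mlp_dim]
        mlp_in = torch.cat([self.mlp_user(user_ids), self.mlp_item(item_ids)], dim=-1)
        return gmf_vec, mlp_in


emb = SeparateEmbeddings(num_users=100, num_items=50)
gmf_vec, mlp_in = emb(torch.tensor([1, 2]), torch.tensor([3, 4]))
print(gmf_vec.shape, mlp_in.shape)  # torch.Size([2, 8]) torch.Size([2, 64])
```

Because the tables are separate, weights from a separately trained GMF or MLP model could be copied into the matching tables before training the fused model.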

Left branch (GMF): the MF user and item vectors are multiplied element-wise in the GMF layer. This is not a full dot product, so the result is still a vector.
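The difference between the element-wise product and a dot product is easy to see on toy numbers. In this pure-Python sketch (the vectors and the weight vectors are made up), summing the GMF vector, i.e. applying an all-ones output weight with no activation, recovers the plain MF dot product, while other weights re-weight the latent dimensions. This matches the paper's observation that MF is a special case of GMF.

```python
def gmf(p_u, q_i):
    # GMF interaction: element-wise product; the result is still a vector.
    return [p * q for p, q in zip(p_u, q_i)]


def linear_output(h, phi):
    # Hypothetical output layer: weighted sum of the GMF vector, identity activation.
    return sum(w * x for w, x in zip(h, phi))


p_u = [0.5, -1.0, 2.0]  # toy user vector
q_i = [4.0, 1.0, 0.5]   # toy item vector

phi = gmf(p_u, q_i)                               # [2.0, -1.0, 1.0]
mf_score = sum(a * b for a, b in zip(p_u, q_i))   # plain MF dot product: 2.0
ones = [1.0] * len(phi)

print(phi, mf_score, linear_output(ones, phi))    # [2.0, -1.0, 1.0] 2.0 2.0
print(linear_output([1.0, 0.0, 1.0], phi))        # 3.0, dimension 2 is ignored
```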

Right branch (MLP): the MLP user and item vectors are concatenated and fed into a multi-layer perceptron.
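The paper's MLP uses a tower pattern in which each hidden layer is half the width of the one below it. Here is a minimal sketch of such a branch, assuming a hypothetical `MLPBranch` class with three halving layers (the demo below uses its own layer sizes instead).

```python
import torch
import torch.nn as nn


class MLPBranch(nn.Module):
    """Hypothetical MLP branch: concat user/item vectors, then a halving tower."""

    def __init__(self, dim, num_layers=3):
        super().__init__()
        layers = []
        in_size = 2 * dim  # width of the concatenated user + item vector
        for _ in range(num_layers):
            out_size = in_size // 2  # tower pattern: each layer halves the width
            layers += [nn.Linear(in_size, out_size), nn.ReLU()]
            in_size = out_size
        self.tower = nn.Sequential(*layers)
        self.out_dim = in_size

    def forward(self, user_vec, item_vec):
        x = torch.cat([user_vec, item_vec], dim=-1)  # [batch, 2 * dim]
        return self.tower(x)


branch = MLPBranch(dim=64)  # widths: 128 -> 64 -> 32 -> 16
out = branch(torch.randn(8, 64), torch.randn(8, 64))
print(out.shape)  # torch.Size([8, 16])
```

Concatenation alone does not model any interaction between user and item; the hidden layers are what learn it.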

Finally, the outputs of the GMF and MLP branches are concatenated along the feature dimension and passed through an FC layer for classification.
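The demo below stops at the concatenated features, so here is a sketch of what the final prediction layer could look like. It assumes a single `nn.Linear` producing one logit followed by a sigmoid, trained with binary cross-entropy (log loss), which is the loss the paper uses for implicit feedback. The `NeuMFHead` name and the random toy labels are hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class NeuMFHead(nn.Module):
    """Hypothetical prediction layer: concat GMF and MLP outputs, then FC + sigmoid."""

    def __init__(self, gmf_dim, mlp_dim):
        super().__init__()
        self.fc = nn.Linear(gmf_dim + mlp_dim, 1)

    def forward(self, gmf_out, mlp_out):
        x = torch.cat([gmf_out, mlp_out], dim=-1)      # [batch, gmf_dim + mlp_dim]
        return torch.sigmoid(self.fc(x)).squeeze(-1)   # [batch], interaction probability


torch.manual_seed(0)
head = NeuMFHead(gmf_dim=128, mlp_dim=128)
scores = head(torch.randn(16, 128), torch.randn(16, 128))
labels = torch.randint(0, 2, (16,)).float()  # toy implicit-feedback labels (1 = interacted)
loss = F.binary_cross_entropy(scores, labels)
print(scores.shape, loss.item())
```

In practice, the negative labels come from sampling items the user has not interacted with.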

Below is a NeuMF demo (called NMF in the code) in which the user side is a sequence of length 10 that is sum-pooled into a single vector:

```python
import torch
import torch.nn as nn


class RecoClsNMF(nn.Module):
    def __init__(self, input_dim, output_dim):
        super().__init__()
        # The MLP input is the concatenation of two input_dim vectors, while the
        # tower expects 2 * output_dim, so this demo assumes input_dim == output_dim.
        self.input_dim = input_dim
        self.output_dim = output_dim

        # Separate FC layers per branch, so GMF and MLP share no parameters.
        self.mf_user = nn.Linear(input_dim, input_dim)
        self.mf_item = nn.Linear(input_dim, input_dim)
        self.mlp_user = nn.Linear(input_dim, input_dim)
        self.mlp_item = nn.Linear(input_dim, input_dim)

        # MLP tower; widths are multiples of output_dim: 2 -> 8 -> 4 -> 2 -> 1.
        layers = [2, 8, 4, 2, 1]
        self.fc_layers = nn.ModuleList([
            nn.Linear(in_size * output_dim, out_size * output_dim)
            for in_size, out_size in zip(layers[:-1], layers[1:])
        ])

    def forward(self, user, item):
        # user: [bs, length, dim], item: [bs, dim]
        user = torch.sum(user, dim=1)  # sum-pool the user sequence -> [bs, dim]

        # user vectors
        mf_user = self.mf_user(user)
        mlp_user = self.mlp_user(user)

        # item vectors
        mf_item = self.mf_item(item)
        mlp_item = self.mlp_item(item)

        # GMF: element-wise product -> [bs, dim]
        gmf_out = torch.mul(mf_user, mf_item)

        # MLP: concatenate, then Linear + ReLU layers -> [bs, output_dim]
        vector = torch.cat([mlp_user, mlp_item], dim=-1)  # [bs, dim * 2]
        for layer in self.fc_layers:
            vector = torch.relu(layer(vector))
        mlp_out = vector

        # NeuMF: concatenate both branches -> [bs, output_dim + dim].
        # The final FC classification layer is not included in this demo.
        x = torch.cat([mlp_out, gmf_out], dim=-1)
        return x


if __name__ == '__main__':
    model = RecoClsNMF(128, 128)
    user = torch.randn((16, 10, 128))
    item = torch.randn((16, 128))
    print("user shape", user.shape)
    print("item shape", item.shape)
    out = model(user, item)
    print("out shape", out.shape)
```

Output:

```
user shape torch.Size([16, 10, 128])
item shape torch.Size([16, 128])
out shape torch.Size([16, 256])
```

The code is very simple, and the results are acceptable.