Reproducing the problem

Use the minio Java SDK (version 8.3.3) directly to merge several existing objects (test1, test2) in the compose bucket of a ceph cluster into a single object (test). The code is as follows:

```java
@Test
void contextLoads() throws Exception {
    MinioClient minioClient = MinioClient.builder()
            .endpoint("http://172.23.27.119:7480")
            .credentials("4S897Y9XN9DBR27LAI1L", "WmZ6JRoMNxmtE9WtXM9Jrz8BhEdZnwzzAYcE6b1z")
            .build();
    // Merge test1 and test2 from the compose bucket into the object "test"
    composeObject(minioClient, "compose", "compose", List.of("test1", "test2"), "test");
}

public boolean composeObject(MinioClient minioClient, String chunkBucKetName, String composeBucketName,
                             List<String> chunkNames, String objectName)
        throws ServerException, InsufficientDataException, ErrorResponseException, IOException,
               NoSuchAlgorithmException, InvalidKeyException, InvalidResponseException,
               XmlParserException, InternalException {
    // Build one ComposeSource per existing chunk object
    List<ComposeSource> sourceObjectList = new ArrayList<>(chunkNames.size());
    for (String chunk : chunkNames) {
        sourceObjectList.add(
                ComposeSource.builder()
                        .bucket(chunkBucKetName)
                        .object(chunk)
                        .build()
        );
    }
    // Ask the server to compose the sources into the target object
    minioClient.composeObject(
            ComposeObjectArgs.builder()
                    .bucket(composeBucketName)
                    .object(objectName)
                    .sources(sourceObjectList)
                    .build()
    );
    return true;
}
```

Operation error

The call fails with HTTP 400, BadDigest.

![BadDigest error](https://raw.githubusercontent.com/CooperXJ/ImageBed/master/img/20211104143125.png)

I was completely confused. So much for S3 compatibility?

Troubleshooting

Logs

The first thing I thought of was to check the rgw log to find the cause of the error.

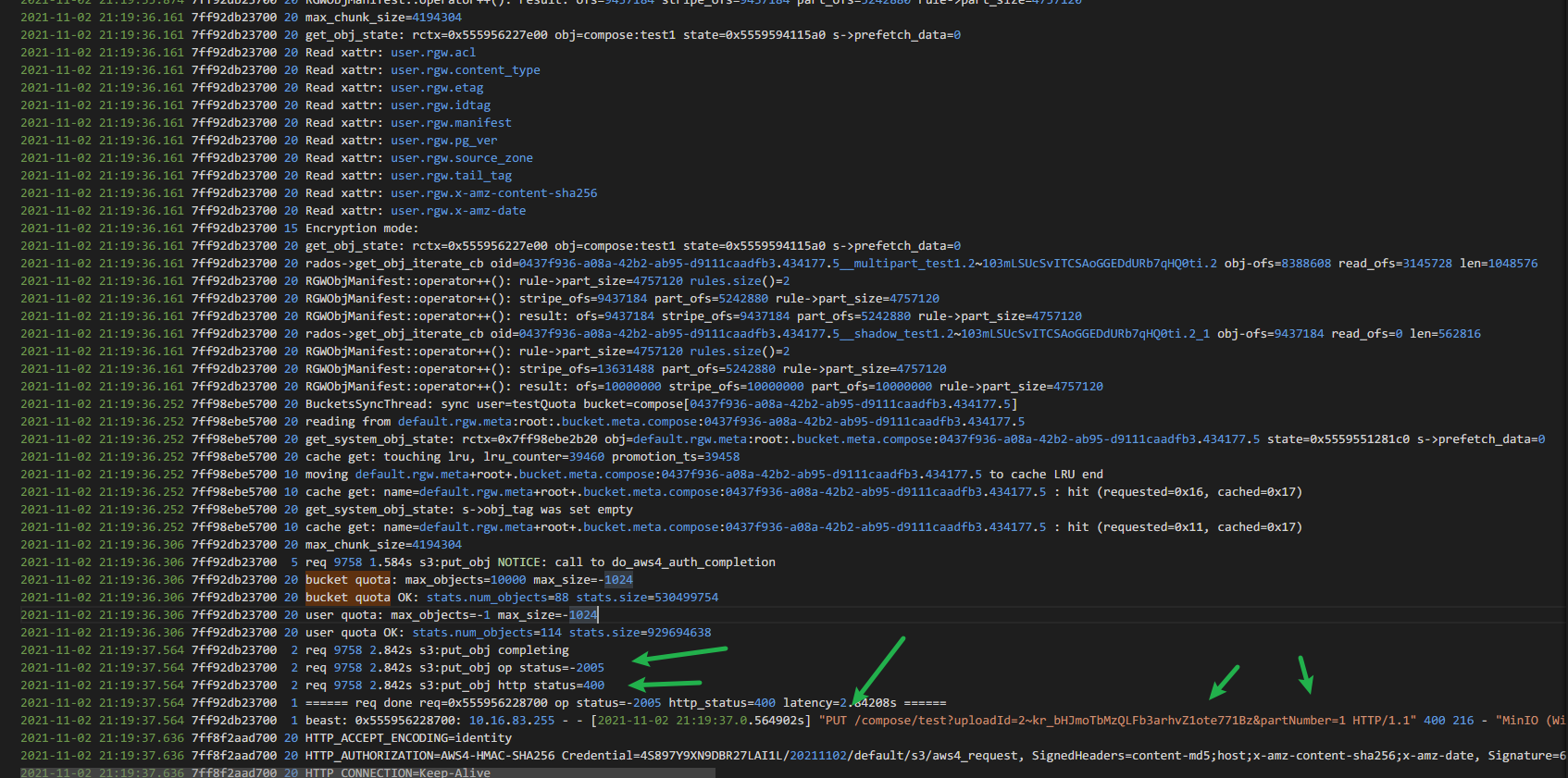

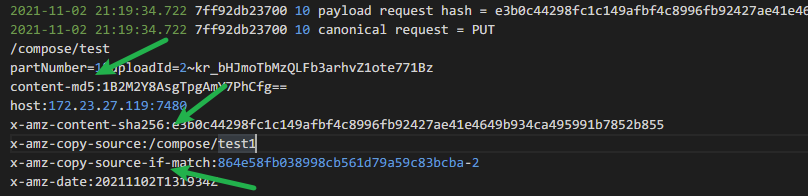

The logs are as follows (only an excerpt is shown here):

According to the log, the first two HEAD requests (fetching the metadata of test1 and test2) and the multipart upload initiation succeeded, but uploading the first part (test1) failed with HTTP 400. The op status is -2005, and the log gives no explanation of the error. Isn't that funny? How am I supposed to know what -2005 means?

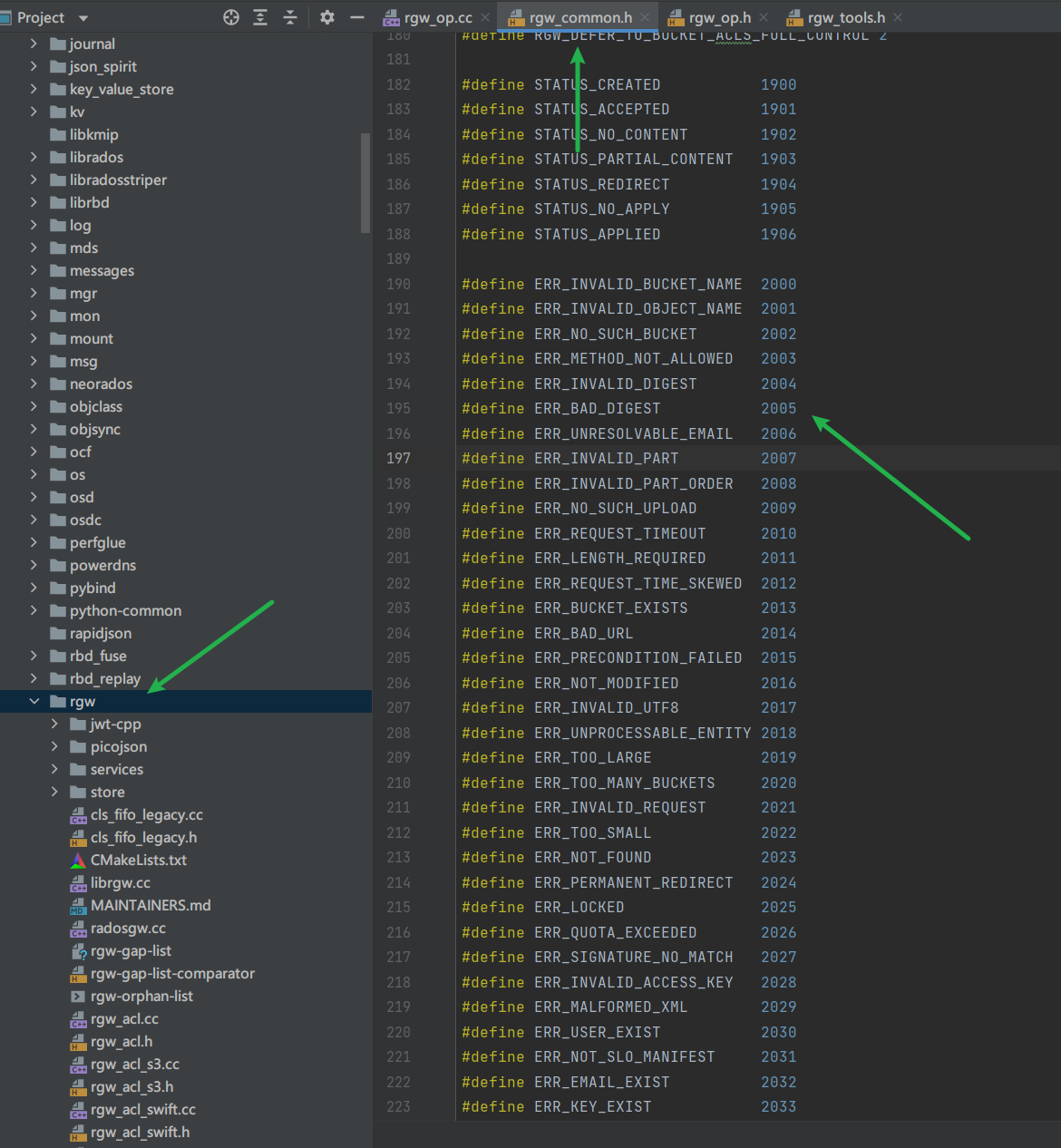

Fine, let's go and see what -2005 is in the rgw source code (alas, I only know a bit of C++ syntax and I'm not good at it; what follows is my amateur analysis, which felt like solving a detective case).

Eventually, under rgw, I finally found error code 2005.

Halfway there, ha ha (looking back now, I was still too naive...)

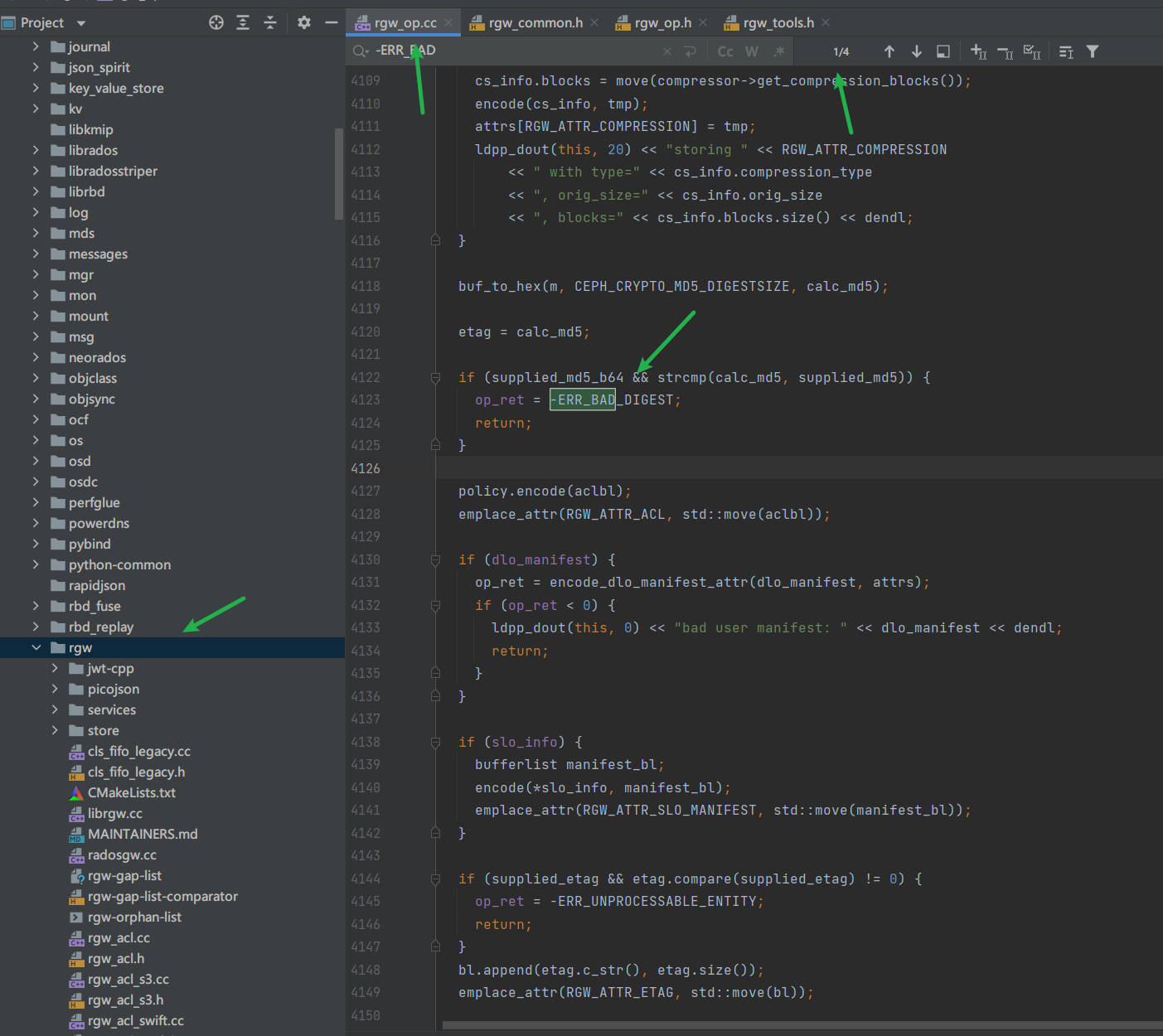

Next, track down where ERR_BAD_DIGEST is used.

I finally found its hiding place in rgw_op.cc. Judging by the name, this should be the code that handles uploads. It appears four times, so there aren't many places to check, and I was secretly pleased.

Start with the third and fourth places. If either of them raised the error, there would be a corresponding log line, but the rgw log above clearly has none, so both are ruled out.

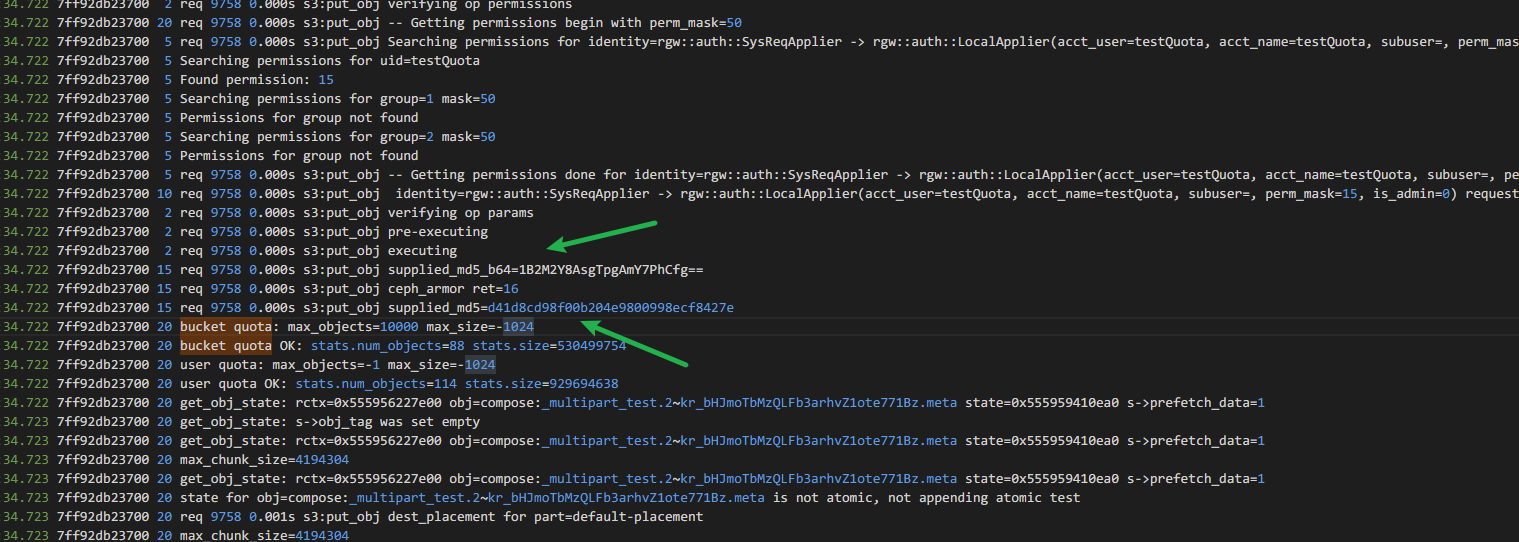

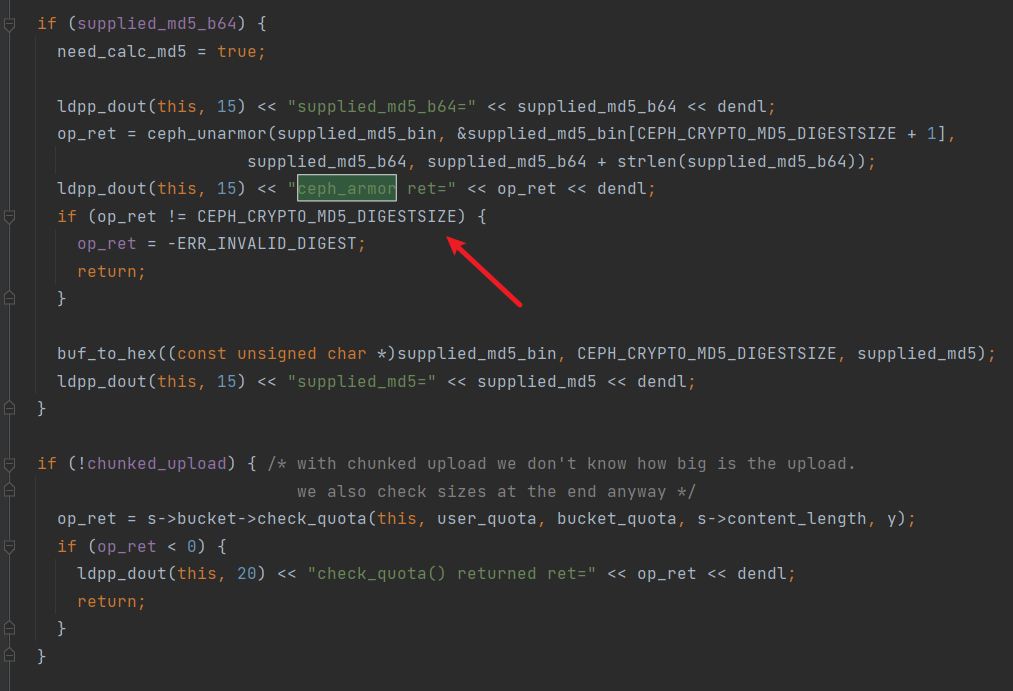

That leaves only the first and second places. The code at both is almost identical:

```cpp
if (supplied_md5_b64 && strcmp(calc_md5, supplied_md5)) {
    op_ret = -ERR_BAD_DIGEST;
    return;
}
```

It seems to be verifying the supplied MD5, but I wasn't sure yet.

Then I took a closer look at the methods containing these two places.

The first is clearly the Put path and the second is the Post path. My rgw log shows a Put request, so it must be the first place (the truth is about to be revealed).

Continuing with the code at the first place, I found this:

These all appear together. The first one is supplied_md5_b64; I still wasn't sure that it corresponds to Content-MD5, but the ones that follow look very much like request headers.

So I suspected it is the MD5 from the request header.

Comparing logs

To get a reference sample to compare with the failing rgw log, I decided to run an experiment with the AWS S3 SDK. The principle is the same as with minio: merge the existing test1 and test2 in the compose bucket. Note that partNumber starts from 1, not 0; starting from 0 produces an error. Don't ask me how I know, I fell into that pit myself...

```java
@Test
public void test1() {
    String bucketName = "compose";
    String keyName = "test";
    AWSCredentials awsCredentials = new BasicAWSCredentials(
            "4S897Y9XN9DBR27LAI1L", "WmZ6JRoMNxmtE9WtXM9Jrz8BhEdZnwzzAYcE6b1z");
    AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
            .withCredentials(new AWSStaticCredentialsProvider(awsCredentials))
            .withEndpointConfiguration(
                    new AwsClientBuilder.EndpointConfiguration("http://172.23.27.119:7480", ""))
            .withPathStyleAccessEnabled(true)
            .build();

    // Start a multipart upload for the target object
    InitiateMultipartUploadRequest initRequest = new InitiateMultipartUploadRequest(bucketName, keyName);
    InitiateMultipartUploadResult initResponse = s3Client.initiateMultipartUpload(initRequest);

    // Copy each existing object in as one part; part numbers start at 1
    List<PartETag> partETags = new ArrayList<>();
    List<String> list = List.of("test1", "test2");
    for (int i = 0; i < list.size(); i++) {
        CopyPartRequest request = new CopyPartRequest()
                .withDestinationBucketName(bucketName)
                .withPartNumber(i + 1)
                .withUploadId(initResponse.getUploadId())
                .withDestinationKey(keyName)
                .withSourceBucketName(bucketName)
                .withSourceKey(list.get(i));
        partETags.add(s3Client.copyPart(request).getPartETag());
    }

    // Complete the upload so the parts become the merged object
    CompleteMultipartUploadRequest compRequest = new CompleteMultipartUploadRequest(
            bucketName, keyName, initResponse.getUploadId(), partETags);
    s3Client.completeMultipartUpload(compRequest);
}
```

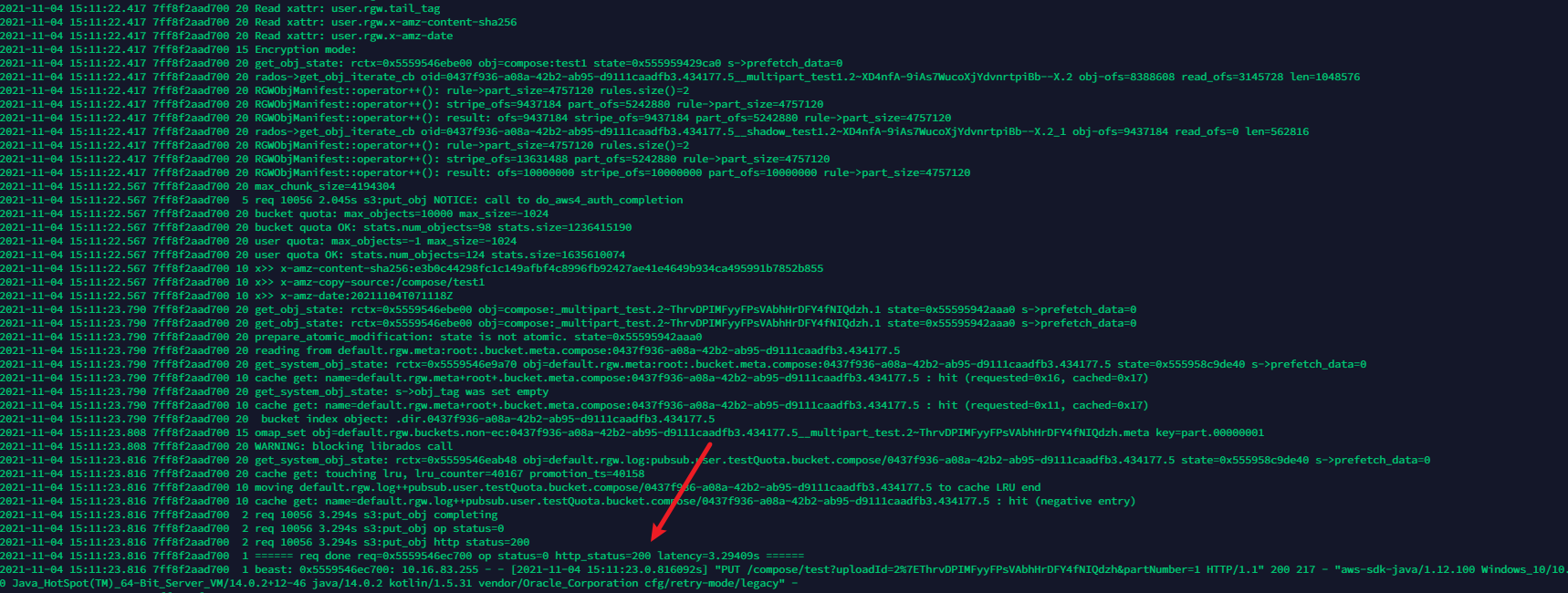

The log is as follows

It succeeded, but my sharp eyes spotted a difference.

Finding the differences

minio

aws

The log line marked with the green arrow in the minio log does not appear in the aws log.

Review the rgw code again

Right before the first place there is this line of code:

At this point we can be fairly sure that minio carries an extra request header on the Put operation, probably an MD5.

Packet capture analysis

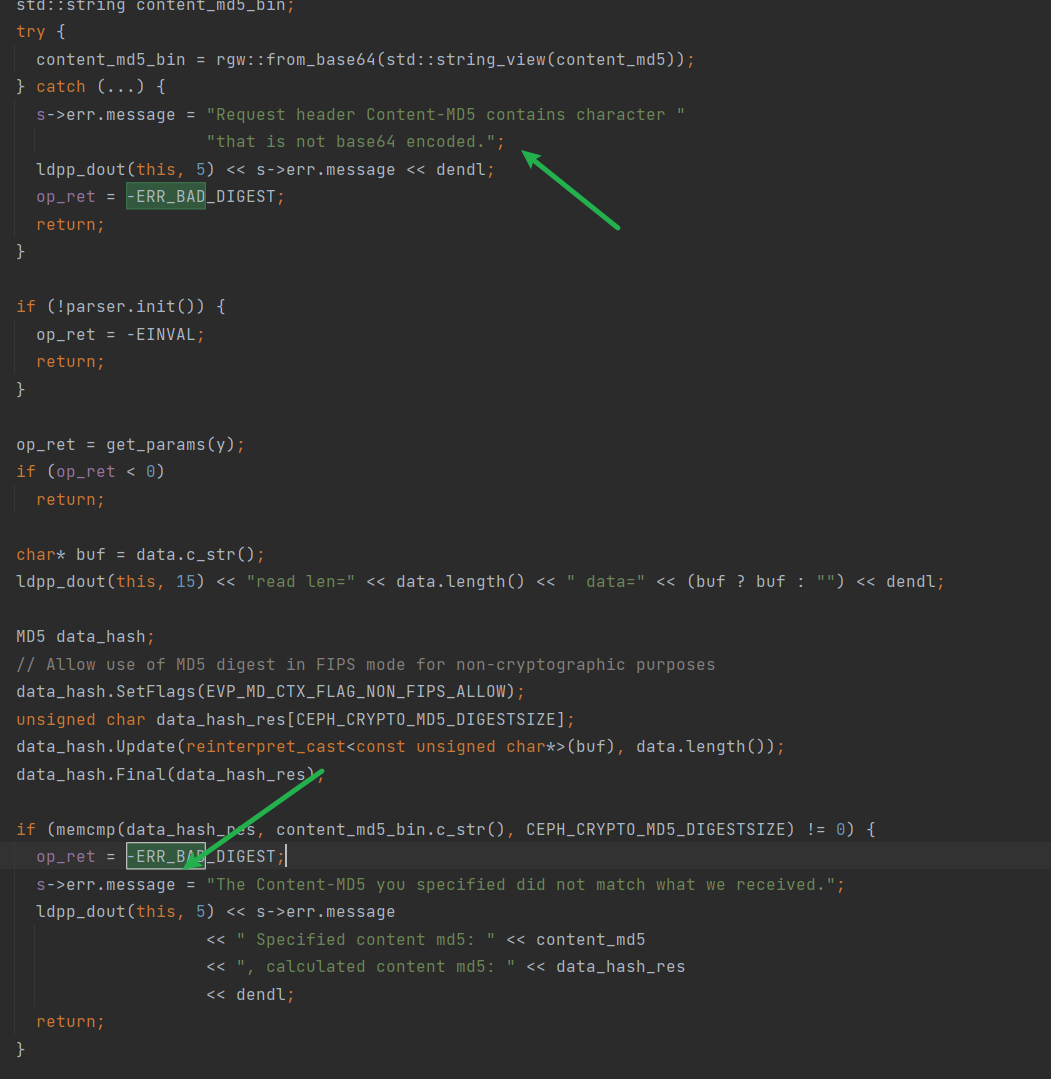

Analyze minio's request

There is indeed a Content-MD5 request header. Then I noticed that this MD5 looked familiar: across several different test requests, the value never changed!

It turned out that this value is the Base64 encoding of the MD5 of the empty string ''. No wonder it is the same for every request: the body being sent is empty, so this is what the MD5 calculation produces.

The x-amz-content-sha256: e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855 header that follows is the SHA-256 of an empty body as well, which is why it is also identical every time.
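As a quick check of the two values above, here is a minimal sketch that computes both digests for an empty body using only the JDK. The class and method names (`EmptyBodyDigests`, `contentMd5`, `contentSha256`) are made up for illustration and are not part of the minio or AWS SDKs.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

public class EmptyBodyDigests {
    // Base64 of the raw MD5 digest, which is the encoding used by Content-MD5.
    static String contentMd5(byte[] body) {
        return Base64.getEncoder().encodeToString(digest("MD5", body));
    }

    // Lowercase hex of the SHA-256 digest, as seen in x-amz-content-sha256.
    static String contentSha256(byte[] body) {
        StringBuilder hex = new StringBuilder();
        for (byte b : digest("SHA-256", body)) {
            hex.append(String.format("%02x", b));
        }
        return hex.toString();
    }

    private static byte[] digest(String algorithm, byte[] body) {
        try {
            return MessageDigest.getInstance(algorithm).digest(body);
        } catch (NoSuchAlgorithmException e) {
            // MD5 and SHA-256 are required on every Java platform.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        byte[] empty = new byte[0];
        System.out.println(contentMd5(empty));    // 1B2M2Y8AsgTpgAmY7PhCfg==
        System.out.println(contentSha256(empty)); // e3b0c44298fc1c14...7852b855
    }
}
```

If the Content-MD5 in your own packet capture equals the first printed value, the request body was empty.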

Cause analysis

Every time minio sends a Put request, it adds the Content-MD5 request header for data integrity. However, the request body of the composeObject action is empty (it is essentially a copyPart operation), so the MD5 that minio sends is the MD5 of an empty body. That value does not match the MD5 that ceph calculates on its side, and the merge fails.
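The mismatch can be illustrated with a small Java sketch. This is not the rgw code: `checkDigest` is a hypothetical stand-in for the check seen in the rgw source, it compares Base64 strings for simplicity, and it assumes the server computes its MD5 over the copied part data. The constant 2005 is the ERR_BAD_DIGEST code found earlier.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

public class BadDigestSketch {
    // Error code found in the rgw source for ERR_BAD_DIGEST.
    static final int ERR_BAD_DIGEST = 2005;

    static String md5Base64(byte[] data) {
        try {
            return Base64.getEncoder().encodeToString(MessageDigest.getInstance("MD5").digest(data));
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e); // MD5 is always available in the JDK
        }
    }

    // Hypothetical server-side check: it only runs when the client supplied an MD5.
    static int checkDigest(String suppliedMd5Base64, byte[] dataSeenByServer) {
        if (suppliedMd5Base64 != null && !suppliedMd5Base64.equals(md5Base64(dataSeenByServer))) {
            return -ERR_BAD_DIGEST;
        }
        return 0;
    }

    public static void main(String[] args) {
        byte[] emptyRequestBody = new byte[0];
        // Assumed sample data standing in for the contents of test1.
        byte[] copiedPart = "contents of test1".getBytes(StandardCharsets.UTF_8);

        // minio-style request: Content-MD5 of the empty body is sent along.
        System.out.println(checkDigest(md5Base64(emptyRequestBody), copiedPart)); // -2005

        // aws-style request: no Content-MD5 header, so the check is skipped.
        System.out.println(checkDigest(null, copiedPart)); // 0
    }
}
```

Under these assumptions, the first call reproduces the -2005 status from the rgw log, while the second shows why the AWS SDK request went through.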

Verification

minio code validation

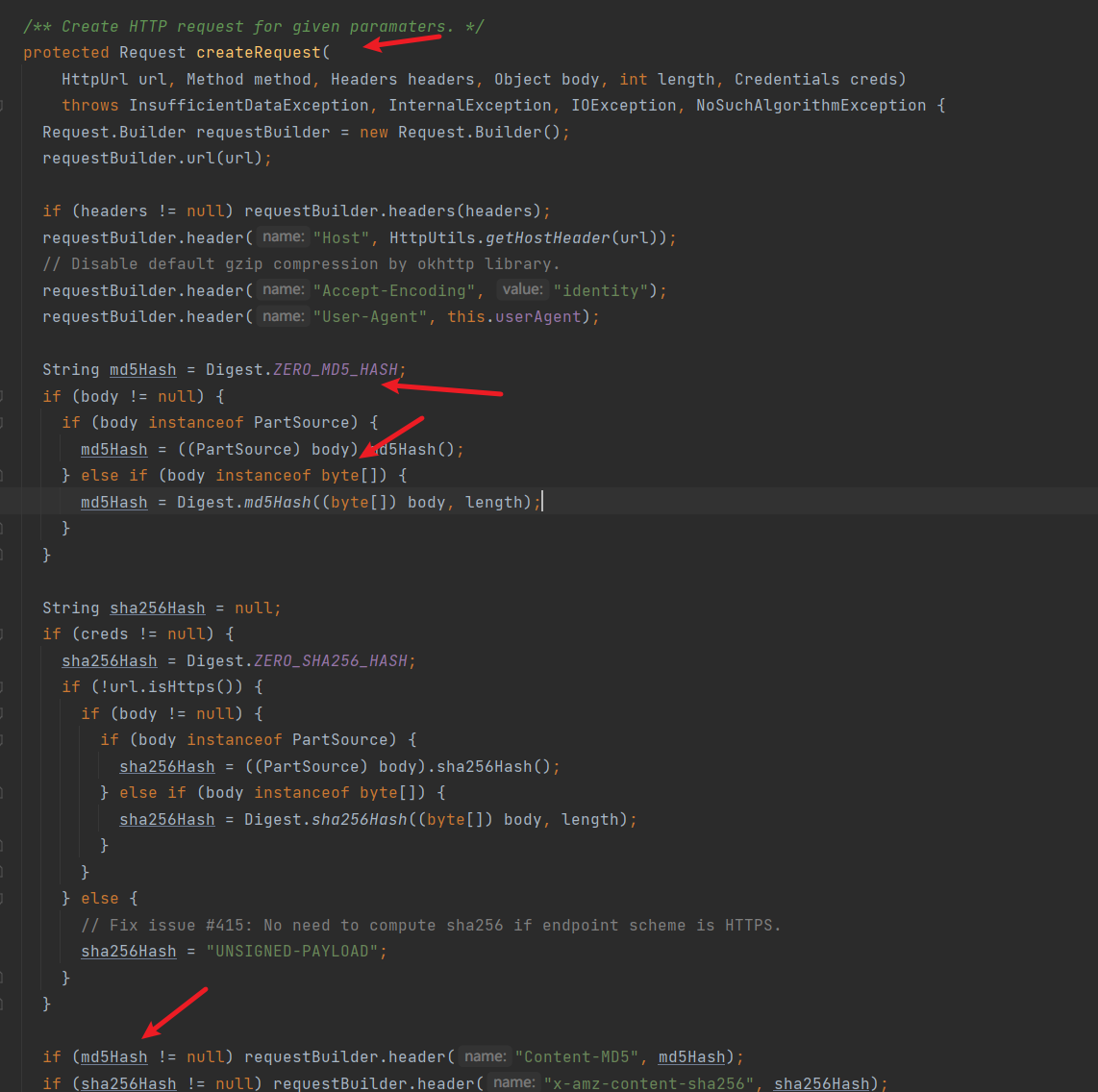

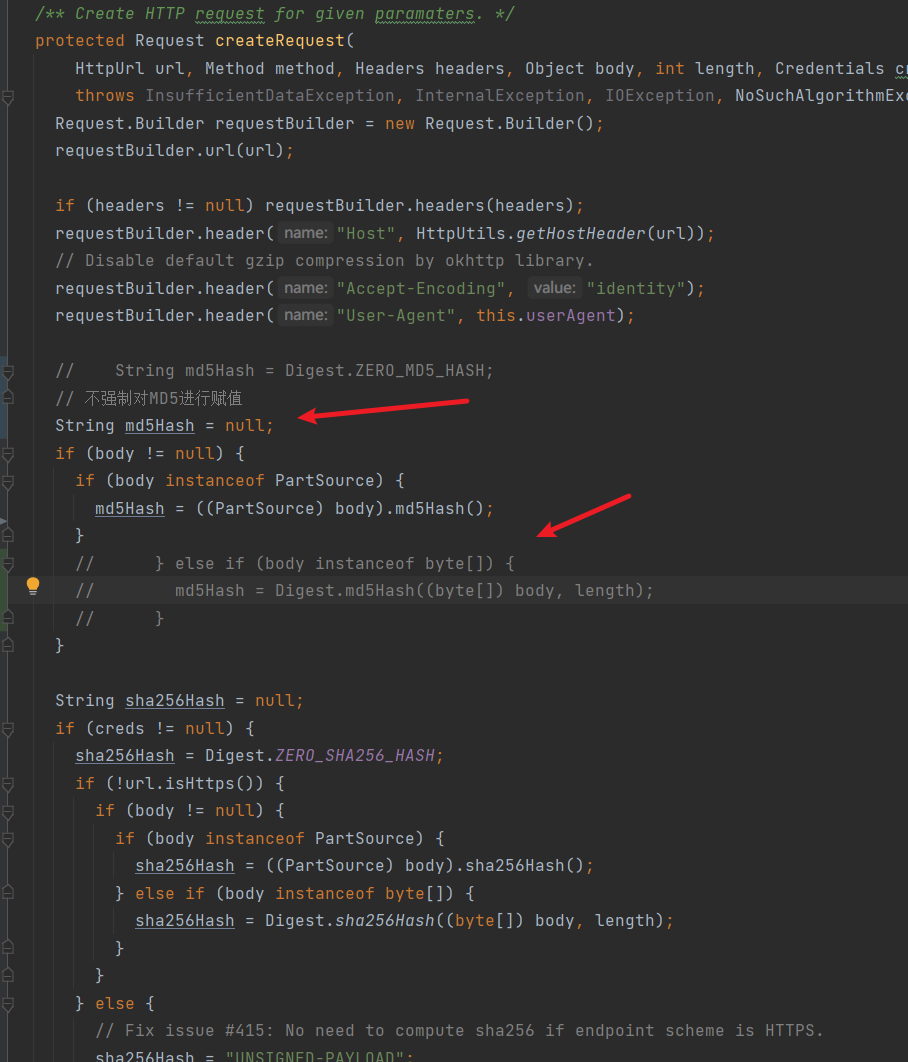

You can see that minio always adds the MD5 when constructing requests.

Modify the source code (S3Base.java)

To stop the MD5 from being sent unconditionally, I commented out these two places and ran composeObject again. The test finally succeeded!

Summary

To be honest, this was my first real troubleshooting process, and I feel I have grown a lot. It was the first time I read source code so carefully. It really is true that no bug can hide from the source code.

In short, I am very happy to have found the cause.

On reflection, though, this makes sense. minio and ceph are competitors, so using minio's SDK against ceph is bound to run into bugs. Why should I adapt to you? I have my rules, and you have yours.
