Cause

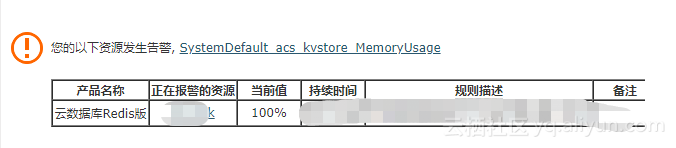

We have always used Aliyun's hosted Redis. Ours is not a high-concurrency application: we mainly use Redis for distributed locks and a small amount of caching, and it has needed almost no maintenance. Yesterday afternoon we suddenly received an alert email.

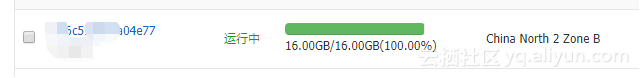

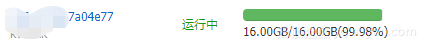

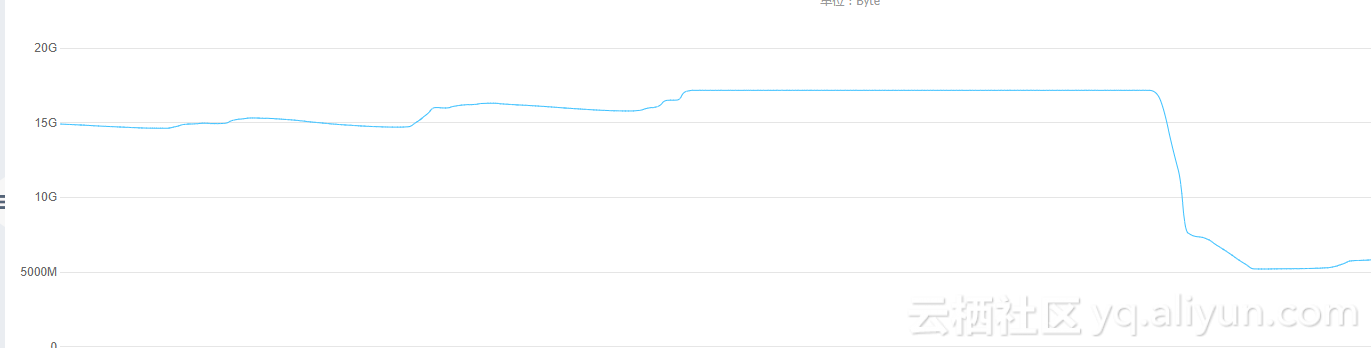

Memory usage on the production Redis instance had hit 100%. Instantly tense, I confirmed it in the console.

This is a 16 GB production instance whose usage normally stays below 50%.

Investigation and repair

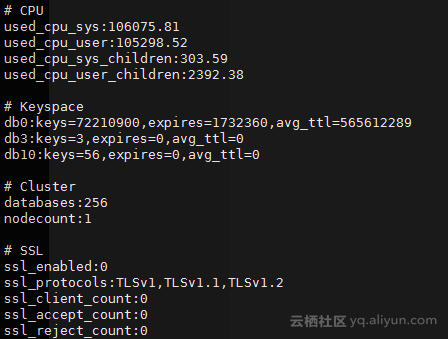

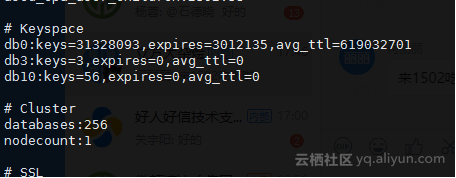

INFO output

We basically use only the default db 0, which holds more than 70 million keys; only about 1.73 million of them have an expiration time set.
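The key counts come from the `# Keyspace` section of `INFO`, which prints one line per database in the form `db0:keys=N,expires=M,avg_ttl=T`. A small stdlib-only sketch of reading such a line and computing the share of keys that carry a TTL; the sample line simply plugs in the rounded figures from the text, and its `avg_ttl=0` is a placeholder, not a measured value.

```python
def parse_keyspace_line(line):
    """Split an INFO keyspace line like 'db0:keys=N,expires=M,avg_ttl=T' into (db, stats)."""
    db, _, fields = line.partition(":")
    stats = {}
    for field in fields.split(","):
        name, _, value = field.partition("=")
        stats[name] = int(value)
    return db, stats


# Illustrative input built from the rounded numbers above; avg_ttl is a placeholder.
db, stats = parse_keyspace_line("db0:keys=70000000,expires=1730000,avg_ttl=0")
share_with_ttl = stats["expires"] / stats["keys"]
print(db, f"{share_with_ttl:.1%} of keys have a TTL")  # prints: db0 2.5% of keys have a TTL
```

In other words, roughly 97.5% of the keyspace can never expire on its own.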

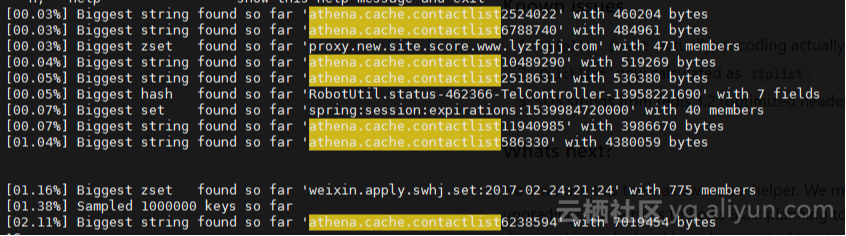

Finding big keys and freeing some memory first

First, run redis-cli with `--bigkeys`:

```shell
$ redis-cli -h xxx.redis.rds.aliyuncs.com -a xxx --bigkeys
```
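`--bigkeys` walks the keyspace with SCAN and, for each data type, remembers the largest key it has seen; strings are measured in bytes and collections by element count (via commands such as STRLEN, LLEN, SCARD, HLEN and ZCARD). The same bookkeeping in plain Python, over a hypothetical in-memory `keyspace` dict that maps each key to a `(type, size)` pair instead of querying a server:

```python
def biggest_per_type(keyspace):
    """Return {type: (key, size)} holding the largest key seen for each type.

    keyspace: hypothetical dict mapping key -> (type_name, size), where size is
    bytes for strings and element count for lists/sets/hashes/zsets.
    """
    biggest = {}
    for key, (type_name, size) in keyspace.items():
        current = biggest.get(type_name)
        if current is None or size > current[1]:
            biggest[type_name] = (key, size)
    return biggest


# Made-up sizes, only to exercise the function.
sample = {
    "athena.cache.contactlist1001": ("string", 52_000),
    "athena.cache.contactlist1002": ("string", 61_000),
    "lock:order:7": ("string", 36),
    "queue:jobs": ("list", 30),
}
for type_name, (key, size) in biggest_per_type(sample).items():
    print(type_name, key, size)
```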

The scan turned up several large string keys that appeared in great numbers. The developers confirmed they came from a newly added Redis cache holding 10 days of data. We had the feature switch turned off first, so that tasks already running would not be affected, and then I went into Redis and deleted a few of the larger keys by hand, which freed a small amount of memory. The keys were created by code like this:

```java
BoundValueOperations<String, String> contactListCache = kvLockTemplate.boundValueOps("athena.cache.contactlist" + user.getId());
```
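The id is appended directly to the prefix with no separator, so the cleanup pattern `athena.cache.contactlist*` matches any key that merely starts with that string. A quick check with Python's `fnmatch.fnmatchcase`, which approximates (but is not identical to) Redis's glob-style MATCH; `contact_list_key` and the `...Settings` sample key are hypothetical:

```python
from fnmatch import fnmatchcase

PREFIX = "athena.cache.contactlist"


def contact_list_key(user_id):
    """Mirror the Java key construction: prefix + user id, no separator."""
    return PREFIX + str(user_id)


pattern = PREFIX + "*"
candidates = [
    contact_list_key(42),                # a real-looking cache entry
    "athena.cache.contactlistSettings",  # hypothetical unrelated key
    "athena.cache.profile42",
]
for key in candidates:
    print(key, fnmatchcase(key, pattern))
```

A pattern such as `athena.cache.contactlist[0-9]*` would narrow the match to keys whose suffix starts with a digit.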

With this many keys, running `KEYS` directly would be very inefficient, since it walks the whole keyspace in a single blocking call. Fortunately Redis natively provides `SCAN`, which iterates incrementally, so I wrote a simple Python script that uses `SCAN` with a count of 1,000,000 per call and deletes the matching keys.

```python
import redis


def clean_excess(host='xxx.redis.rds.aliyuncs.com', port=6379, db=0,
                 password='xxx', pattern=None):
    """Delete every key matching `pattern`, walking the keyspace with SCAN."""
    _redis = redis.StrictRedis(host=host, port=port, db=db, password=password)
    cursor = 0
    while True:
        print('>> scan index', cursor)
        # COUNT is a hint for how much work each call does, not an exact batch size
        cursor, keys = _redis.scan(cursor, match=pattern, count=1000000)
        for key in keys:
            print("-- delete key {}".format(key))
            _redis.delete(key)
        # SCAN returns cursor 0 once the full iteration is complete
        if cursor == 0:
            break


if __name__ == '__main__':
    clean_excess(pattern="athena.cache.contactlist*")
```
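The script relies on the SCAN cursor contract: start at 0, feed back the cursor each call returns, and stop when the server hands back 0. To show that loop without a live server, here is a sketch against a hypothetical in-memory `FakeScanStore` (not part of redis-py). Its cursor is a plain slot index, a simplification of Redis's real hash-table cursor, chosen so that deleting keys mid-iteration does not shift positions; `fnmatchcase` approximates Redis's MATCH, and `clean_matching` is a hypothetical name.

```python
from fnmatch import fnmatchcase


class FakeScanStore:
    """Hypothetical in-memory stand-in for a Redis keyspace (not a real client)."""

    def __init__(self, keys):
        self._slots = list(keys)  # slot index -> key, or None once deleted
        self._index = {key: i for i, key in enumerate(self._slots)}

    def scan(self, cursor, match=None, count=10):
        """Return (next_cursor, matching keys) for the next `count` slots."""
        end = min(cursor + count, len(self._slots))
        found = [key for key in self._slots[cursor:end]
                 if key is not None and (match is None or fnmatchcase(key, match))]
        return (0 if end >= len(self._slots) else end), found

    def delete(self, *keys):
        removed = 0
        for key in keys:
            slot = self._index.pop(key, None)
            if slot is not None:
                self._slots[slot] = None  # tombstone keeps later cursors valid
                removed += 1
        return removed

    def dbsize(self):
        return len(self._index)


def clean_matching(store, pattern, count=1000):
    """Same cursor loop as the script: scan, delete matches, stop at cursor 0."""
    cursor, deleted = 0, 0
    while True:
        cursor, keys = store.scan(cursor, match=pattern, count=count)
        if keys:
            deleted += store.delete(*keys)
        if cursor == 0:
            return deleted


keys = ["athena.cache.contactlist%d" % i for i in range(5000)]
keys += ["user.session.%d" % i for i in range(300)]
store = FakeScanStore(keys)
deleted = clean_matching(store, "athena.cache.contactlist*", count=700)
print(deleted, store.dbsize())  # prints: 5000 300
```

Against a real server, SCAN may return the same key more than once; deleting an already-deleted key is harmless, so the loop tolerates that.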

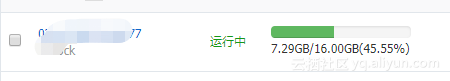

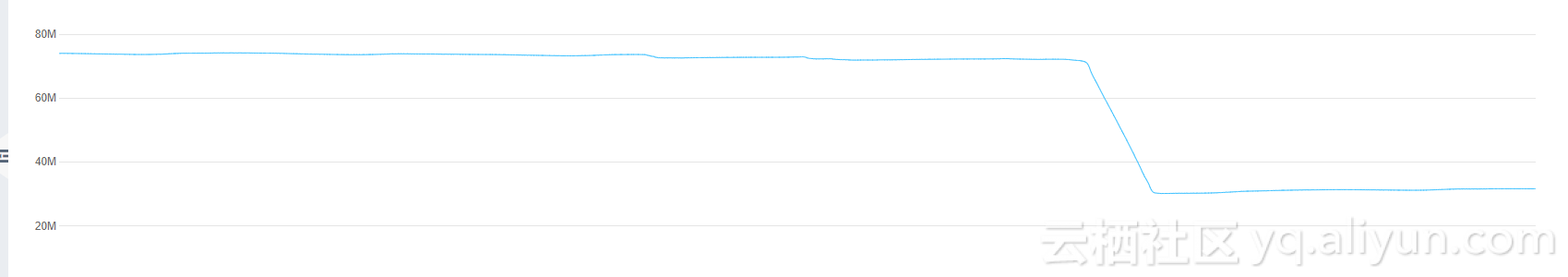

After the script ran, memory usage dropped to 45%, which resolved the immediate memory problem.

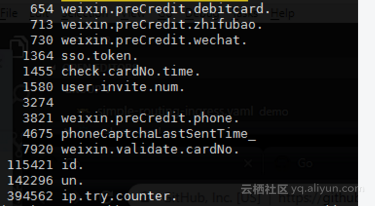

Quick sampling of key prefixes with awk

Fortunately, all our keys follow the format keyPrefix + numeric id, so I sampled 1,000,000 keys with SCAN and counted them by prefix to see the proportions:

```shell
redis-cli -h xxx.redis.rds.aliyuncs.com -a xxx scan 0 count 1000000 | awk -F '[0-9]' '{s=NF>0?$1:$0;print s}' | sort | uniq -c | sort -n
```
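The awk field separator `[0-9]` makes `$1` everything before the first digit, so each key collapses to its prefix, and `sort | uniq -c | sort -n` then counts prefixes in ascending order. A rough Python equivalent of that pipeline, over a hypothetical list of sampled keys (tie ordering may differ slightly from GNU sort):

```python
import re
from collections import Counter


def prefix_of(key):
    """Text before the first digit, like awk -F '[0-9]' '{print $1}'."""
    return re.split(r"[0-9]", key, maxsplit=1)[0]


def prefix_histogram(keys):
    """(prefix, count) pairs sorted ascending, like `sort | uniq -c | sort -n`."""
    counts = Counter(prefix_of(key) for key in keys)
    return sorted(counts.items(), key=lambda item: (item[1], item[0]))


sample_keys = [  # hypothetical keys in the keyPrefix + numeric id format
    "ip.try.counter.10.0.0.1",
    "ip.try.counter.10.0.0.2",
    "athena.cache.contactlist42",
    "user.token.7",
]
for prefix, count in prefix_histogram(sample_keys):
    print(count, prefix)
```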

A developer confirmed that `ip.try.counter` came from an old version of the feature that locks out an IP after repeated login attempts, and it never set an expiration time. The remaining keys are all tied, one way or another, to some quirky business logic and basically cannot be touched. Heartbroken, I started by clearing out the `ip.try.counter` keys with the same script:

```python
import redis


def clean_excess(host='xxx.redis.rds.aliyuncs.com', port=6379, db=0,
                 password='xxx', pattern=None):
    """Delete every key matching `pattern`, walking the keyspace with SCAN."""
    _redis = redis.StrictRedis(host=host, port=port, db=db, password=password)
    cursor = 0
    while True:
        print('>> scan index', cursor)
        # COUNT is a hint for how much work each call does, not an exact batch size
        cursor, keys = _redis.scan(cursor, match=pattern, count=1000000)
        for key in keys:
            print("-- delete key {}".format(key))
            _redis.delete(key)
        # SCAN returns cursor 0 once the full iteration is complete
        if cursor == 0:
            break


if __name__ == '__main__':
    clean_excess(pattern="ip.try.counter.*")
```

The run took about nine hours. After the cleanup more than 30 million keys remain, but no further memory can be freed: the developers say the rest can hardly be touched. For now, utilization stays below 50%.
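The script sends one `DEL` per key, so every deletion pays a full network round trip, which is one plausible reason the run took hours. Grouping keys into batches cuts the number of round trips; with redis-py that could be done through `pipeline(transaction=False)` or by passing several keys to one `delete(*keys)` call. The stdlib-only sketch below just counts requests; the 40 million figure is a rough upper bound taken from the gap between the 70-million and 30-million key counts in the text, not a measured number.

```python
from itertools import islice


def batched(iterable, size):
    """Yield lists of at most `size` items (itertools.batched only exists in Python 3.12+)."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def round_trips(n_keys, batch_size=1):
    """Requests needed to delete n_keys when each request carries up to batch_size keys."""
    return -(-n_keys // batch_size)  # ceiling division


deleted_keys = 40_000_000  # rough: ~70M keys before, ~30M after
print(round_trips(deleted_keys))        # one DEL per key
print(round_trips(deleted_keys, 1000))  # batches of 1,000 keys
print(list(batched(range(5), 2)))
```

Very large batches have their own cost, because a single DEL of many keys blocks the server while it runs; UNLINK (Redis 4.0+) instead reclaims the memory in a background thread.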

Epilogue

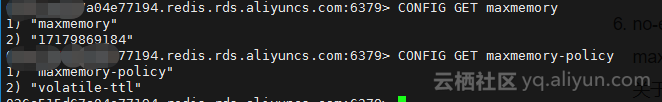

- On Redis's eviction policy: the default `maxmemory` on Aliyun equals the instance's configured memory size, so eviction only kicks in at 100% utilization. The default policy is `volatile-ttl`, which only evicts keys that have an expiration time. In our case most keys have no expiration time, so evicting the few that do is a drop in the bucket. Switching to an `allkeys-*` policy could evict effectively, but it risks evicting keys the business still depends on and disrupting normal operation.
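Why `volatile-ttl` barely helps here: it only considers keys that have an expiration time, so when almost none do, there is little it can evict. The toy model below makes that concrete. It is a deliberately simplified sketch, not Redis's algorithm (real eviction samples candidates approximately), `allkeys` stands in for the whole `allkeys-*` family, and the keys and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Entry:
    key: str
    size: int                  # bytes (illustrative)
    ttl: Optional[int] = None  # seconds left; None = no expiration set


def evict(entries: List[Entry], policy: str, bytes_needed: int) -> Tuple[List[str], int]:
    """Toy eviction: return (evicted keys, bytes freed) under a simplified policy."""
    if policy == "volatile-ttl":
        # only keys with a TTL are candidates, shortest remaining TTL first
        candidates = sorted((e for e in entries if e.ttl is not None), key=lambda e: e.ttl)
    elif policy == "allkeys":
        # any key is a candidate; list order stands in for Redis's sampling
        candidates = list(entries)
    else:
        raise ValueError("unknown policy: " + policy)
    evicted, freed = [], 0
    for entry in candidates:
        if freed >= bytes_needed:
            break
        evicted.append(entry.key)
        freed += entry.size
    return evicted, freed


entries = [
    Entry("lock:order:1", 100),
    Entry("ip.try.counter.10.0.0.1", 100),
    Entry("ip.try.counter.10.0.0.2", 100),
    Entry("session:9", 100, ttl=60),
]
print(evict(entries, "volatile-ttl", 300))  # frees only 100 of the 300 bytes needed
print(evict(entries, "allkeys", 300))       # frees enough, but evicts the lock key too
```

When the policy cannot free enough memory, Redis rejects further writes with an OOM error, whereas an `allkeys-*` policy may silently drop keys the application still relies on.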

Ultimately, the real fix is to standardize how developers use Redis: always set an expiration time instead of skipping it out of laziness. Workloads with large memory requirements really do need their own separately allocated resources.
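For counters like `ip.try.counter`, a common pattern (the rate-limiter pattern in the Redis documentation for INCR) is to set the TTL when the counter is first created, so a forgotten key cleans itself up. With redis-py that would be `r.incr(key)` followed by `r.expire(key, window)` when the result is 1. The sketch below models that behaviour in memory so it runs without a server; `TtlCounterStore`, `record_failed_login`, the 5-attempt limit and the 900-second window are all hypothetical.

```python
import time


class TtlCounterStore:
    """Hypothetical in-memory stand-in for INCR + EXPIRE (not a Redis client)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (count, expires_at)

    def _purge(self):
        now = self._clock()
        for key in [k for k, (_, exp) in self._data.items() if exp <= now]:
            del self._data[key]

    def incr_with_ttl(self, key, ttl_seconds):
        """INCR the key; set its TTL only when this call creates it."""
        self._purge()
        count, expires_at = self._data.get(key, (0, None))
        if expires_at is None:
            expires_at = self._clock() + ttl_seconds
        self._data[key] = (count + 1, expires_at)
        return count + 1

    def live_keys(self):
        self._purge()
        return sorted(self._data)


def record_failed_login(store, ip, max_attempts=5, window_seconds=900):
    """Count a failed login for `ip`; return True once the IP should be locked out."""
    attempts = store.incr_with_ttl("ip.try.counter." + ip, window_seconds)
    return attempts > max_attempts


now = [0.0]  # fake clock so the example is deterministic
store = TtlCounterStore(clock=lambda: now[0])
for _ in range(5):
    record_failed_login(store, "10.0.0.1")
print(record_failed_login(store, "10.0.0.1"))  # sixth attempt: True (locked out)
now[0] = 901.0                                  # the 900-second window has passed
print(store.live_keys())                        # the counter expired on its own: []
```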

- There are actually many good tools for diagnosing Redis memory usage: https://scalegrid.io/blog/the-top-6-free-redis-memory-analysis-tools/

However, `rdb` can only analyze a dump.rdb file, and the other tools are inefficient at our key count of tens of millions. Fortunately our key names follow a regular pattern, so I wrote a simple script to collect sample statistics. The tool I like better is redis-memory-for-key, which simply reports the memory used by a specific key. Maybe I am using these tools the wrong way; discussion is welcome.
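The sampling idea extends from counting keys to estimating memory: if each sampled key also carries a size (for example from the `MEMORY USAGE` command, available since Redis 4.0), per-prefix totals can be scaled up by the keyspace size from `DBSIZE`. A minimal sketch under two assumptions: the SCAN sample is treated as roughly uniform (SCAN makes no such promise), and the sample data below is made up.

```python
import re
from collections import defaultdict


def estimate_by_prefix(samples, total_keys):
    """Scale a sample of (key, size_in_bytes) pairs up to the whole keyspace.

    Returns {prefix: (estimated_key_count, estimated_bytes)}, where the prefix is
    the text before the first digit, as in the awk one-liner.
    """
    counts = defaultdict(int)
    sizes = defaultdict(int)
    for key, size in samples:
        prefix = re.split(r"[0-9]", key, maxsplit=1)[0]
        counts[prefix] += 1
        sizes[prefix] += size
    n = len(samples)
    return {
        prefix: (round(counts[prefix] / n * total_keys),
                 round(sizes[prefix] / n * total_keys))
        for prefix in counts
    }


samples = [  # made-up sizes
    ("ip.try.counter.10.0.0.1", 60),
    ("ip.try.counter.10.0.0.2", 60),
    ("ip.try.counter.10.0.0.3", 60),
    ("athena.cache.contactlist42", 4000),
]
for prefix, (keys, size) in estimate_by_prefix(samples, total_keys=1_000_000).items():
    print(prefix, keys, size)
```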