Background

Over Christmas, production took about 10,000 concurrent requests per second. Combining WAF data with log analysis, we found that several interfaces of our mini program were being flooded.

Our front end caches these interfaces with Varnish, so in theory they should all return within milliseconds. Loading the home page should not put much pressure on production, should it?

So we took the actual requests of nearly 100 users and replayed them. The response times of these requests were much higher than what Varnish normally achieves when it serves the front end from cache.

Further analysis showed that the problem lay in the requests themselves. All of them were GET requests, and the parameter values after the question mark (?) were not goods, channels, or modules that exist on our site. They were randomly generated and different on every request. Some values were pure nonsense such as "hee hee" and "ha ha". Have you ever seen a sku_id passed as "ha ha" or "hello world"? It really is unprecedented.

Further analysis with the WAF showed that each IP sending these randomly generated parameters, with no valid module id or product id, accessed us only once. But there were a great many such IPs, up to a five-digit count, and they all sent their single request at the same moment. This is clearly a typical black-market operation or crawler trying to bypass our front-end Varnish, then bypass our Redis, and hit the DB directly, which puts great pressure on the DB whenever the home page is loaded.

Proposed improvement

Hackers, some data companies, and crawlers control large numbers of IP addresses. They do not need a high request rate per IP to crawl or attack a site. Sending requests from a six-digit or even seven-digit number of IPs every few seconds is enough to bring your website down.

From the business-logic point of view, product data is keyed by a product_id, and a site has a finite number of SKUs, on the order of 100,000. If a request arrives with a sku_id that does not exist in the system, what is the point of serving it?

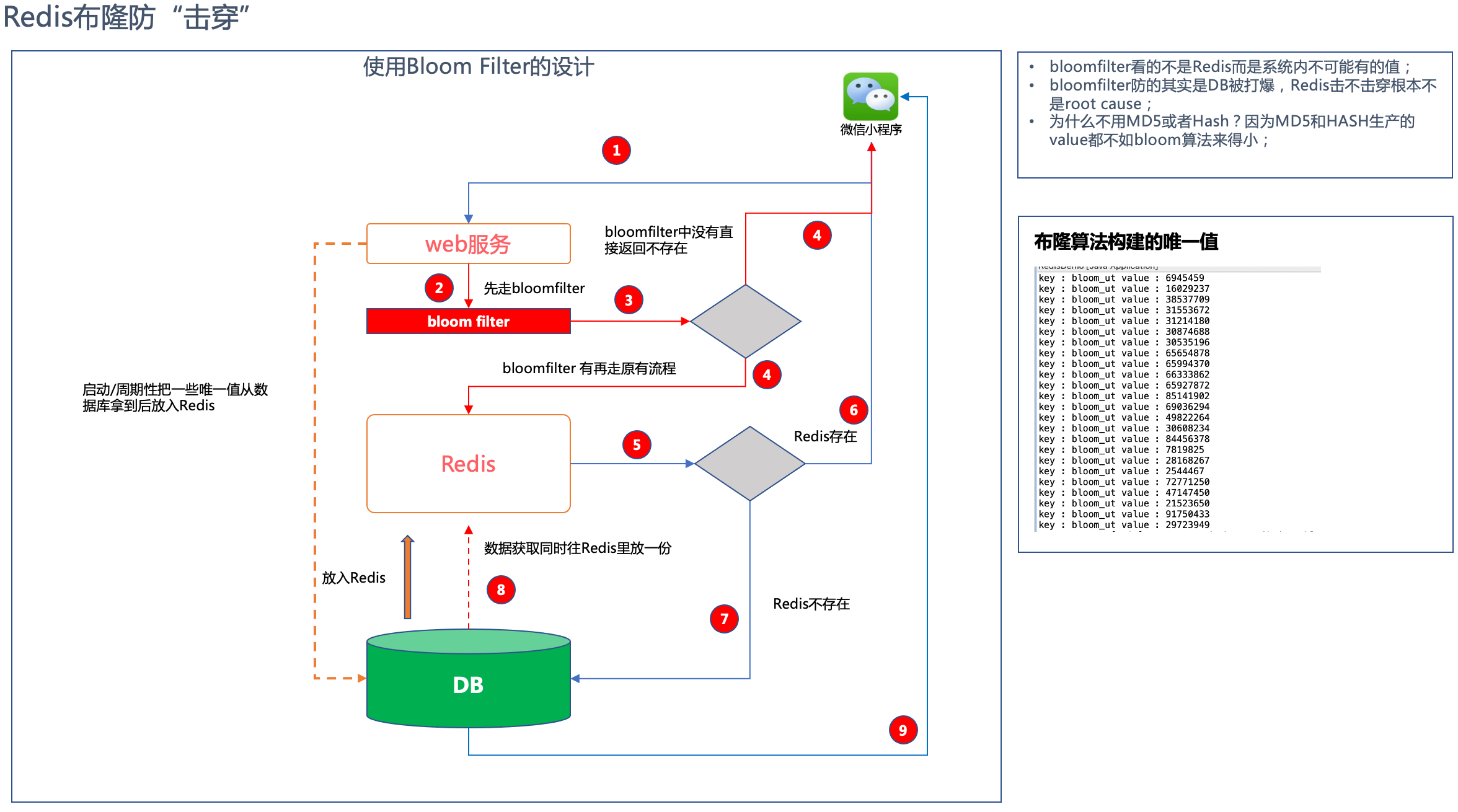
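The idea can be sketched as a lookup path that asks a membership filter first and only then touches the cache and the database. This is a minimal illustration, not the production code: `GuardedSkuLookup`, its fields, and the two `Map` stand-ins for Redis and the DB are all hypothetical names introduced here.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.Predicate;

/** Hypothetical lookup path: membership filter first, then cache, then database. */
class GuardedSkuLookup {
    private final Predicate<String> mightExist;   // stand-in for the bloom filter check
    private final Map<String, String> cache;      // stand-in for Redis
    private final Map<String, String> database;   // stand-in for the DB
    int databaseHits = 0;                         // counts how often the DB is touched

    GuardedSkuLookup(Predicate<String> mightExist, Map<String, String> database) {
        this.mightExist = mightExist;
        this.cache = new HashMap<>();
        this.database = database;
    }

    Optional<String> find(String skuId) {
        // 1. Reject ids the filter has never seen; neither cache nor DB is accessed.
        if (!mightExist.test(skuId)) {
            return Optional.empty();
        }
        // 2. Serve from the cache when possible.
        String cached = cache.get(skuId);
        if (cached != null) {
            return Optional.of(cached);
        }
        // 3. Fall through to the database and populate the cache.
        databaseHits++;
        String row = database.get(skuId);
        if (row != null) {
            cache.put(skuId, row);
        }
        return Optional.ofNullable(row);
    }
}
```

A request carrying a sku_id such as "hello world" is turned away in step 1, so random parameters no longer reach the database at all.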

This led us to the following solution against cache breakdown.

In the previous article, "Correct posture of SpringBoot+Redis bloom filter against malicious traffic breakdown cache", the code depended on Redis itself loading the bloom filter module.

This time we stay cloud native: we use the bloom filter algorithm from Google's Guava library directly and store the resulting bits in Redis with setBit. If we used Guava alone, the bloom filter would live only in application memory and be lost whenever the application restarts. So we combine Guava's algorithm with Redis as the storage medium, instead of installing a bloom filter plug-in for Redis as I did in the previous article.

After all, installing plug-ins on a production Redis is a big step. We also had an emergency that left us only 20 minutes to respond, so we needed code that could be deployed immediately to intercept these malicious requests; otherwise the home page of the mini program would not hold. That is why we implemented it again with this "smart" method.
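To make the combination concrete, here is a minimal in-memory sketch of the same idea. It makes two substitutions so that it runs on the JDK alone: a `java.util.BitSet` stands in for the Redis string that setBit and getBit address, and MD5 stands in for Guava's murmur3_128. The class name `InMemoryBloomSketch` and its methods are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.BitSet;

class InMemoryBloomSketch {
    private final int bitSize;
    private final int numHashFunctions;
    private final BitSet bits;   // stand-in for the Redis string addressed by SETBIT/GETBIT

    InMemoryBloomSketch(int bitSize, int numHashFunctions) {
        this.bitSize = bitSize;
        this.numHashFunctions = numHashFunctions;
        this.bits = new BitSet(bitSize);
    }

    /** Derives k bit offsets from one 64-bit hash by combining its two 32-bit halves. */
    int[] offsets(String value) {
        long hash64 = hash64(value);
        int hash1 = (int) hash64;
        int hash2 = (int) (hash64 >>> 32);
        int[] result = new int[numHashFunctions];
        for (int i = 1; i <= numHashFunctions; i++) {
            int next = hash1 + i * hash2;
            if (next < 0) {
                next = ~next;          // flip to a non-negative value
            }
            result[i - 1] = next % bitSize;
        }
        return result;
    }

    void add(String value) {
        for (int offset : offsets(value)) {
            bits.set(offset);          // in Redis: SETBIT key offset 1
        }
    }

    boolean mightContain(String value) {
        for (int offset : offsets(value)) {
            if (!bits.get(offset)) {   // in Redis: GETBIT key offset
                return false;          // definitely never added
            }
        }
        return true;                   // possibly added; false positives can occur
    }

    // Assumption: MD5 is used only because it ships with the JDK.
    private static long hash64(String value) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5")
                    .digest(value.getBytes(StandardCharsets.UTF_8));
            return ByteBuffer.wrap(digest).getLong();   // first 8 bytes of the digest
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Because the bits live outside the application in the real setup, a restart does not lose them; only the hashing runs inside the JVM.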

The engineering code is given below.

Full code for production (my open source version is newer and stronger than the production code)^_^

application_local.yml

server:
  port: 9080
  tomcat:
    max-http-post-size: -1
  max-http-header-size: 10240000
spring:
  application:
    name: redis-demo
  servlet:
    multipart:
      max-file-size: 10MB
      max-request-size: 10MB
  redis:
    password: 111111
    sentinel:
      nodes: localhost:27001,localhost:27002,localhost:27003
      master: master1
    database: 0
    switchFlag: 1
    lettuce:
      pool:
        max-active: 50
        max-wait: 10000
        max-idle: 10
        min-idle: 5
        shutdown-timeout: 2000
        timeBetweenEvictionRunsMillis: 5000
    timeout: 5000

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <parent>
    <groupId>org.mk.demo</groupId>
    <artifactId>springboot-demo</artifactId>
    <version>0.0.1</version>
  </parent>
  <artifactId>redis-demo</artifactId>
  <name>rabbitmq-demo</name>
  <packaging>jar</packaging>
  <dependencies>
    <dependency>
      <groupId>com.auth0</groupId>
      <artifactId>java-jwt</artifactId>
    </dependency>
    <dependency>
      <groupId>cn.hutool</groupId>
      <artifactId>hutool-crypto</artifactId>
    </dependency>
    <!-- redis must -->
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-data-redis</artifactId>
      <exclusions>
        <exclusion>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-log4j12</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <!-- jedis must -->
    <dependency>
      <groupId>redis.clients</groupId>
      <artifactId>jedis</artifactId>
    </dependency>
    <!--redisson must start -->
    <dependency>
      <groupId>org.redisson</groupId>
      <artifactId>redisson-spring-boot-starter</artifactId>
      <version>3.13.6</version>
      <exclusions>
        <exclusion>
          <groupId>org.redisson</groupId>
          <artifactId>redisson-spring-data-23</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>org.apache.commons</groupId>
      <artifactId>commons-lang3</artifactId>
    </dependency>
    <!--redisson must end -->
    <dependency>
      <groupId>org.redisson</groupId>
      <artifactId>redisson-spring-data-21</artifactId>
      <version>3.13.1</version>
    </dependency>
    <dependency>
      <groupId>mysql</groupId>
      <artifactId>mysql-connector-java</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-thymeleaf</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-test</artifactId>
      <scope>test</scope>
      <exclusions>
        <exclusion>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-log4j12</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-jdbc</artifactId>
      <exclusions>
        <exclusion>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>druid</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-test</artifactId>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-configuration-processor</artifactId>
      <optional>true</optional>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-log4j2</artifactId>
    </dependency>
    <dependency>
      <groupId>org.springframework.boot</groupId>
      <artifactId>spring-boot-starter-web</artifactId>
      <exclusions>
        <exclusion>
          <groupId>org.springframework.boot</groupId>
          <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-log4j12</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>org.aspectj</groupId>
      <artifactId>aspectjweaver</artifactId>
    </dependency>
    <dependency>
      <groupId>com.lmax</groupId>
      <artifactId>disruptor</artifactId>
    </dependency>
    <dependency>
      <groupId>com.google.guava</groupId>
      <artifactId>guava</artifactId>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>fastjson</artifactId>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.core</groupId>
      <artifactId>jackson-databind</artifactId>
    </dependency>
  </dependencies>
</project>

The key properties used in the parent pom.xml are given below.

Do not copy versions from random sources online; most of them are wrong. Remember that the Spring Boot, Guava, Redis, Redisson, and Jackson versions here must match strictly. Anything related to log4j should be replaced with 2.7.1 as described in my previous blog (Apache log4j 2.7.1 is a relatively safe version at present).

<properties>
  <java.version>1.8</java.version>
  <jacoco.version>0.8.3</jacoco.version>
  <aldi-sharding.version>0.0.1</aldi-sharding.version>
  <!-- <spring-boot.version>2.4.2</spring-boot.version> -->
  <spring-boot.version>2.3.1.RELEASE</spring-boot.version>
  <!-- spring-boot.version>2.0.6.RELEASE</spring-boot.version> <spring-cloud-zk-discovery.version>2.1.3.RELEASE</spring-cloud-zk-discovery.version -->
  <zookeeper.version>3.4.13</zookeeper.version>
  <spring-cloud.version>Greenwich.SR5</spring-cloud.version>
  <dubbo.version>2.7.3</dubbo.version>
  <curator-framework.version>4.0.1</curator-framework.version>
  <curator-recipes.version>2.8.0</curator-recipes.version>
  <!-- druid.version>1.1.20</druid.version -->
  <druid.version>1.2.6</druid.version>
  <guava.version>27.0.1-jre</guava.version>
  <fastjson.version>1.2.59</fastjson.version>
  <dubbo-registry-nacos.version>2.7.3</dubbo-registry-nacos.version>
  <nacos-client.version>1.1.4</nacos-client.version>
  <!-- mysql-connector-java.version>8.0.13</mysql-connector-java.version -->
  <mysql-connector-java.version>5.1.46</mysql-connector-java.version>
  <disruptor.version>3.4.2</disruptor.version>
  <aspectj.version>1.8.13</aspectj.version>
  <spring.data.redis>1.8.14-RELEASE</spring.data.redis>
  <seata.version>1.0.0</seata.version>
  <netty.version>4.1.42.Final</netty.version>
  <nacos.spring.version>0.1.4</nacos.spring.version>
  <lombok.version>1.16.22</lombok.version>
  <javax.servlet.version>3.1.0</javax.servlet.version>
  <mybatis.version>2.1.0</mybatis.version>
  <pagehelper-mybatis.version>1.2.3</pagehelper-mybatis.version>
  <spring.kafka.version>1.3.10.RELEASE</spring.kafka.version>
  <kafka.client.version>1.0.2</kafka.client.version>
  <shardingsphere.jdbc.version>4.0.0</shardingsphere.jdbc.version>
  <xmemcached.version>2.4.6</xmemcached.version>
  <swagger.version>2.9.2</swagger.version>
  <swagger.bootstrap.ui.version>1.9.6</swagger.bootstrap.ui.version>
  <swagger.model.version>1.5.23</swagger.model.version>
  <swagger-annotations.version>1.5.22</swagger-annotations.version>
  <swagger-models.version>1.5.22</swagger-models.version>
  <swagger-bootstrap-ui.version>1.9.5</swagger-bootstrap-ui.version>
  <sky-sharding-jdbc.version>0.0.1</sky-sharding-jdbc.version>
  <cxf.version>3.1.6</cxf.version>
  <jackson-databind.version>2.11.1</jackson-databind.version>
  <gson.version>2.8.6</gson.version>
  <groovy.version>2.5.8</groovy.version>
  <logback-ext-spring.version>0.1.4</logback-ext-spring.version>
  <jcl-over-slf4j.version>1.7.25</jcl-over-slf4j.version>
  <spock-spring.version>2.0-M2-groovy-2.5</spock-spring.version>
  <xxljob.version>2.2.0</xxljob.version>
  <java-jwt.version>3.10.0</java-jwt.version>
  <commons-lang.version>2.6</commons-lang.version>
  <hutool-crypto.version>5.0.0</hutool-crypto.version>
  <maven.compiler.source>${java.version}</maven.compiler.source>
  <maven.compiler.target>${java.version}</maven.compiler.target>
  <compiler.plugin.version>3.8.1</compiler.plugin.version>
  <war.plugin.version>3.2.3</war.plugin.version>
  <jar.plugin.version>3.1.1</jar.plugin.version>
  <quartz.version>2.2.3</quartz.version>
  <h2.version>1.4.197</h2.version>
  <zkclient.version>3.4.14</zkclient.version>
  <httpcore.version>4.4.10</httpcore.version>
  <httpclient.version>4.5.6</httpclient.version>
  <mockito-core.version>3.0.0</mockito-core.version>
  <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
  <oseq-aldi.version>2.0.22-RELEASE</oseq-aldi.version>
  <poi.version>4.1.0</poi.version>
  <poi-ooxml.version>4.1.0</poi-ooxml.version>
  <poi-ooxml-schemas.version>4.1.0</poi-ooxml-schemas.version>
  <dom4j.version>1.6.1</dom4j.version>
  <xmlbeans.version>3.1.0</xmlbeans.version>
  <nacos-discovery.version>2.2.5.RELEASE</nacos-discovery.version>
  <spring-cloud-alibaba.version>2.2.1.RELEASE</spring-cloud-alibaba.version>
  <redission.version>3.16.1</redission.version>
</properties>

Then come the classes that Spring wires automatically.

BloomFilterHelper

package org.mk.demo.redisdemo.bloomfilter;

import com.google.common.base.Preconditions;
import com.google.common.hash.Funnel;
import com.google.common.hash.Hashing;

public class BloomFilterHelper<T> {

    private int numHashFunctions;
    private int bitSize;
    private Funnel<T> funnel;

    public BloomFilterHelper(Funnel<T> funnel, int expectedInsertions, double fpp) {
        Preconditions.checkArgument(funnel != null, "funnel cannot be null");
        this.funnel = funnel;
        // Calculate the bit array length
        bitSize = optimalNumOfBits(expectedInsertions, fpp);
        // Calculate the number of hash functions
        numHashFunctions = optimalNumOfHashFunctions(expectedInsertions, bitSize);
    }

    /**
     * Returns one bit offset per hash function for the given value.
     */
    public int[] murmurHashOffset(T value) {
        int[] offset = new int[numHashFunctions];
        long hash64 = Hashing.murmur3_128().hashObject(value, funnel).asLong();
        int hash1 = (int) hash64;
        int hash2 = (int) (hash64 >>> 32);
        for (int i = 1; i <= numHashFunctions; i++) {
            int nextHash = hash1 + i * hash2;
            if (nextHash < 0) {
                // Flip to a non-negative value
                nextHash = ~nextHash;
            }
            offset[i - 1] = nextHash % bitSize;
        }
        return offset;
    }

    /**
     * Calculate the bit array length
     */
    private int optimalNumOfBits(long n, double p) {
        if (p == 0) {
            // Fall back to the smallest positive double
            p = Double.MIN_VALUE;
        }
        int sizeOfBitArray = (int) (-n * Math.log(p) / (Math.log(2) * Math.log(2)));
        return sizeOfBitArray;
    }

    /**
     * Calculate the number of hash functions
     */
    private int optimalNumOfHashFunctions(long n, long m) {
        int countOfHash = Math.max(1, (int) Math.round((double) m / n * Math.log(2)));
        return countOfHash;
    }
}
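For reference, the two private methods of BloomFilterHelper correspond to the standard bloom filter sizing formulas. In the notation below, n is expectedInsertions, p is fpp, m is bitSize, and k is numHashFunctions; the floor reflects the int cast in the code, which truncates instead of rounding up.

```latex
m = \left\lfloor -\frac{n \ln p}{(\ln 2)^2} \right\rfloor,
\qquad
k = \max\!\left(1,\ \operatorname{round}\!\left(\frac{m}{n}\,\ln 2\right)\right)
```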

RedisBloomFilter, the auto-wired service class

package org.mk.demo.redisdemo.bloomfilter;

import com.google.common.base.Preconditions;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Service;

@Service
public class RedisBloomFilter {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    @Autowired
    private RedisTemplate<String, Object> redisTemplate;

    /**
     * Adds a value to the bloom filter stored under the given key
     */
    public <T> void addByBloomFilter(BloomFilterHelper<T> bloomFilterHelper, String key, T value) {
        Preconditions.checkArgument(bloomFilterHelper != null, "bloomFilterHelper cannot be null");
        int[] offset = bloomFilterHelper.murmurHashOffset(value);
        for (int i : offset) {
            logger.info(">>>>>>add into bloom filter->key : {} value : {}", key, i);
            // Set the bit at each computed offset
            redisTemplate.opsForValue().setBit(key, i, true);
        }
    }

    /**
     * Checks whether the value may exist in the bloom filter stored under the given key
     */
    public <T> boolean includeByBloomFilter(BloomFilterHelper<T> bloomFilterHelper, String key, T value) {
        Preconditions.checkArgument(bloomFilterHelper != null, "bloomFilterHelper cannot be null");
        int[] offset = bloomFilterHelper.murmurHashOffset(value);
        for (int i : offset) {
            logger.info(">>>>>>check key from bloomfilter : {} value : {}", key, i);
            // A single unset bit proves the value was never added; the null-safe
            // comparison avoids unboxing a null Boolean
            if (!Boolean.TRUE.equals(redisTemplate.opsForValue().getBit(key, i))) {
                return false;
            }
        }
        return true;
    }
}

RedisSentinelConfig, the core Redis configuration class

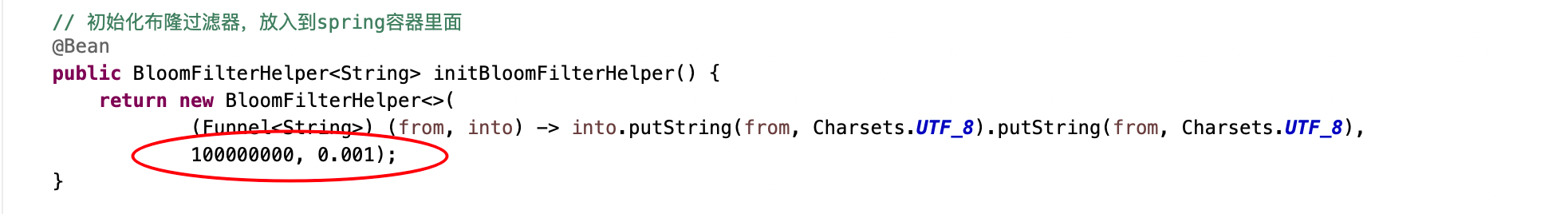

In it, I declare an initBloomFilterHelper method whose return type is BloomFilterHelper<String>.

package org.mk.demo.redisdemo.config;

import com.fasterxml.jackson.annotation.JsonAutoDetect;
import com.fasterxml.jackson.annotation.PropertyAccessor;
import com.fasterxml.jackson.databind.ObjectMapper;
import redis.clients.jedis.HostAndPort;
import org.apache.commons.lang3.StringUtils;
import org.apache.commons.pool2.impl.GenericObjectPoolConfig;
import org.mk.demo.redisdemo.bloomfilter.BloomFilterHelper;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.autoconfigure.condition.ConditionalOnMissingBean;
import org.springframework.cache.annotation.EnableCaching;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.*;
import org.springframework.data.redis.connection.lettuce.LettuceConnectionFactory;
import org.springframework.data.redis.connection.lettuce.LettucePoolingClientConfiguration;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;
import org.springframework.stereotype.Component;
import java.time.Duration;
import java.util.LinkedHashSet;
import java.util.Set;
import com.google.common.base.Charsets;
import com.google.common.hash.Funnel;

@Configuration
@EnableCaching
@Component
public class RedisSentinelConfig {

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    @Value("${spring.redis.nodes:localhost:7001}")
    private String nodes;

    @Value("${spring.redis.max-redirects:3}")
    private Integer maxRedirects;

    @Value("${spring.redis.password}")
    private String password;

    @Value("${spring.redis.database:0}")
    private Integer database;

    @Value("${spring.redis.timeout}")
    private int timeout;

    @Value("${spring.redis.sentinel.nodes}")
    private String sentinel;

    @Value("${spring.redis.lettuce.pool.max-active:8}")
    private Integer maxActive;

    @Value("${spring.redis.lettuce.pool.max-idle:8}")
    private Integer maxIdle;

    @Value("${spring.redis.lettuce.pool.max-wait:-1}")
    private Long maxWait;

    @Value("${spring.redis.lettuce.pool.min-idle:0}")
    private Integer minIdle;

    @Value("${spring.redis.sentinel.master}")
    private String master;

    @Value("${spring.redis.switchFlag}")
    private String switchFlag;

    @Value("${spring.redis.lettuce.pool.shutdown-timeout}")
    private Integer shutdown;

    @Value("${spring.redis.lettuce.pool.timeBetweenEvictionRunsMillis}")
    private long timeBetweenEvictionRunsMillis;

    public String getSwitchFlag() {
        return switchFlag;
    }

    /**
     * Connection pool configuration information
     *
     * @return the pooled Lettuce client configuration
     */
    @Bean
    public LettucePoolingClientConfiguration getPoolConfig() {
        GenericObjectPoolConfig config = new GenericObjectPoolConfig();
        config.setMaxTotal(maxActive);
        config.setMaxWaitMillis(maxWait);
        config.setMaxIdle(maxIdle);
        config.setMinIdle(minIdle);
        config.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);
        LettucePoolingClientConfiguration pool = LettucePoolingClientConfiguration.builder().poolConfig(config)
                .commandTimeout(Duration.ofMillis(timeout)).shutdownTimeout(Duration.ofMillis(shutdown)).build();
        return pool;
    }

    /**
     * Configure the Redis connection factory (sentinel, standalone, or cluster)
     */
    @Bean
    @ConditionalOnMissingBean
    public LettuceConnectionFactory lettuceConnectionFactory() {
        LettuceConnectionFactory factory = null;
        String[] split = nodes.split(",");
        // Parsed host:port pairs; named differently from the "nodes" field to avoid shadowing it
        Set<HostAndPort> hostAndPorts = new LinkedHashSet<>();
        for (int i = 0; i < split.length; i++) {
            try {
                String[] split1 = split[i].split(":");
                hostAndPorts.add(new HostAndPort(split1[0], Integer.parseInt(split1[1])));
            } catch (Exception e) {
                logger.error(">>>>>>A configuration error occurred!Please confirm: " + e.getMessage(), e);
                throw new RuntimeException(String.format("A configuration error occurred!Please confirm node=[%s]Is it correct", split[i]));
            }
        }
        // If it's sentinel mode
        if (!StringUtils.isEmpty(sentinel)) {
            logger.info(">>>>>>Redis use SentinelConfiguration");
            RedisSentinelConfiguration redisSentinelConfiguration = new RedisSentinelConfiguration();
            String[] sentinelArray = sentinel.split(",");
            for (String s : sentinelArray) {
                try {
                    String[] split1 = s.split(":");
                    redisSentinelConfiguration.addSentinel(new RedisNode(split1[0], Integer.parseInt(split1[1])));
                } catch (Exception e) {
                    logger.error(">>>>>>A configuration error occurred!Please confirm: " + e.getMessage(), e);
                    // Report the offending entry; passing the array itself would print only its first element
                    throw new RuntimeException(String.format("A configuration error occurred!Please confirm node=[%s]Is it correct", s));
                }
            }
            redisSentinelConfiguration.setMaster(master);
            redisSentinelConfiguration.setPassword(password);
            factory = new LettuceConnectionFactory(redisSentinelConfiguration, getPoolConfig());
        }
        // If it is a single node, use Standalone mode
        else {
            if (hostAndPorts.size() < 2) {
                logger.info(">>>>>>Redis use RedisStandaloneConfiguration");
                for (HostAndPort n : hostAndPorts) {
                    RedisStandaloneConfiguration redisStandaloneConfiguration = new RedisStandaloneConfiguration();
                    if (!StringUtils.isEmpty(password)) {
                        redisStandaloneConfiguration.setPassword(RedisPassword.of(password));
                    }
                    redisStandaloneConfiguration.setPort(n.getPort());
                    redisStandaloneConfiguration.setHostName(n.getHost());
                    factory = new LettuceConnectionFactory(redisStandaloneConfiguration, getPoolConfig());
                }
            } else {
                logger.info(">>>>>>Redis use RedisClusterConfiguration");
                RedisClusterConfiguration redisClusterConfiguration = new RedisClusterConfiguration();
                hostAndPorts.forEach(n -> {
                    redisClusterConfiguration.addClusterNode(new RedisNode(n.getHost(), n.getPort()));
                });
                if (!StringUtils.isEmpty(password)) {
                    redisClusterConfiguration.setPassword(RedisPassword.of(password));
                }
                redisClusterConfiguration.setMaxRedirects(maxRedirects);
                factory = new LettuceConnectionFactory(redisClusterConfiguration, getPoolConfig());
            }
        }
        return factory;
    }

    @Bean
    public RedisTemplate<String, Object> redisTemplate(LettuceConnectionFactory lettuceConnectionFactory) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(lettuceConnectionFactory);
        Jackson2JsonRedisSerializer<Object> jacksonSerial = new Jackson2JsonRedisSerializer<>(Object.class);
        ObjectMapper om = new ObjectMapper();
        // Specify what gets serialized: fields, getters, setters, and any visibility. ANY includes private and public
        om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
        jacksonSerial.setObjectMapper(om);
        StringRedisSerializer stringSerial = new StringRedisSerializer();
        template.setKeySerializer(stringSerial);
        // template.setValueSerializer(stringSerial);
        template.setValueSerializer(jacksonSerial);
        template.setHashKeySerializer(stringSerial);
        template.setHashValueSerializer(jacksonSerial);
        template.afterPropertiesSet();
        return template;
    }

    // Initialize the bloom filter helper and put it into the spring container
    @Bean
    public BloomFilterHelper<String> initBloomFilterHelper() {
        return new BloomFilterHelper<>(
                (Funnel<String>) (from, into) -> into.putString(from, Charsets.UTF_8).putString(from, Charsets.UTF_8),
                100000000, 0.001);
    }
}

Use of code

Remember that a bloom filter only supports adding data. To remove entries, you have to delete the whole key and feed the data again.

This is workable in production. Two examples show how to operate it.

Example 1: protect all Diamond members before the event date

The activity starts on New Year's Day, January 1, 2022. Before that, all Diamond members need to be brought under protection.

So we wrote a job that loads tens of millions of members into the bloom filter in one go. Then, once the activity takes effect at 9:00 a.m. on January 1, any request that arrives with a member token (ut) that does not exist in the system is blocked at the bloom filter layer.

Example 2: protect the sku_id values of all categories

The mini program or front-end app has 16 product categories, each with 1,000 SKUs, almost 120,000 SKUs. These SKUs are put on and taken off the shelf 2-3 times a day. So we write a job that runs every 5 minutes: it pulls all SKUs in "on shelf" status from the database and feeds them to the bloom filter, deleting the bloom filter key before feeding. For more accuracy we also use MQ asynchronously. After the listing and delisting changes are completed, the operator clicks the [Effective] button, an MQ message notifies a Service to delete the key of the original bloom filter, and the full set of sku_id values is fed again. The whole process completes in seconds.
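One thing to watch in a delete-then-feed cycle is the short window in which the key is empty and every lookup answers "not existed". A possible refinement, sketched below under stated assumptions, is to build the new filter off to the side and publish it in one step. The sketch is in-memory only: `SwappableFilter` is a hypothetical name, it uses a single `hashCode`-based offset instead of the Guava hashing to stay short, and it is not the job described above. With Redis, a comparable approach would be to fill a temporary key and then replace the live key with the RENAME command.

```java
import java.util.BitSet;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

class SwappableFilter {
    private final int bitSize;
    // The filter that readers currently see; replaced as a whole on every rebuild.
    private final AtomicReference<BitSet> live = new AtomicReference<>(new BitSet());

    SwappableFilter(int bitSize) {
        this.bitSize = bitSize;
    }

    // Assumption: one hash function keeps the sketch short; a real filter uses several.
    private int offset(String id) {
        return Math.floorMod(id.hashCode(), bitSize);
    }

    /** Builds a fresh bit set from the full on-shelf list, then publishes it in one step. */
    void rebuild(List<String> onShelfSkuIds) {
        BitSet fresh = new BitSet(bitSize);
        for (String id : onShelfSkuIds) {
            fresh.set(offset(id));
        }
        live.set(fresh);   // readers see either the old or the new filter, never an empty one
    }

    boolean mightContain(String id) {
        return live.get().get(offset(id));
    }
}
```

Delisted SKUs disappear from the filter simply because they are absent from the list passed to the next rebuild.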

Let's take example 1 to look at the business code. Here we write a Service that loads the tokens of all users when the application starts.

UTBloomFilterInit

package org.mk.demo.redisdemo.bloomfilter;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.ApplicationArguments;
import org.springframework.boot.ApplicationRunner;
import org.springframework.core.annotation.Order;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Component;

@Component
@Order(1) // If more than one class implements the ApplicationRunner interface, you can use this annotation to specify the execution order
public class UTBloomFilterInit implements ApplicationRunner {

    private final static String BLOOM_UT = "bloom_ut";

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    @Autowired
    private BloomFilterHelper<String> bloomFilterHelper;

    @Autowired
    private RedisBloomFilter redisBloomFilter;

    @Autowired
    private RedisTemplate<String, Object> redisTemplate;

    @Override
    public void run(ApplicationArguments args) throws Exception {
        try {
            // Null-safe check: hasKey returns a Boolean wrapper
            if (Boolean.TRUE.equals(redisTemplate.hasKey(BLOOM_UT))) {
                logger.info(">>>>>>bloom filter key->" + BLOOM_UT + " existed, delete it first then init");
                redisTemplate.delete(BLOOM_UT);
            }
            // Simulate loading all user tokens: ut_0 ... ut_9999
            for (int i = 0; i < 10000; i++) {
                String ut = "ut_" + i;
                redisBloomFilter.addByBloomFilter(bloomFilterHelper, BLOOM_UT, ut);
            }
            logger.info(">>>>>>init ut into redis bloom successfully");
        } catch (Exception e) {
            // Log failures at error level so they are not lost among info messages
            logger.error(">>>>>>init ut into redis bloom failed:" + e.getMessage(), e);
        }
    }
}

This Service simulates loading the full set of users into the bloom filter when Spring Boot starts.

RedisBloomController

package org.mk.demo.redisdemo.controller;

import org.mk.demo.redisdemo.bean.UserBean;
import org.mk.demo.redisdemo.bloomfilter.BloomFilterHelper;
import org.mk.demo.redisdemo.bloomfilter.RedisBloomFilter;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.ResponseBody;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("demo")
public class RedisBloomController {

    private final static String BLOOM_UT = "bloom_ut";

    private Logger logger = LoggerFactory.getLogger(this.getClass());

    @Autowired
    private BloomFilterHelper<String> bloomFilterHelper;

    @Autowired
    private RedisBloomFilter redisBloomFilter;

    @PostMapping(value = "/redis/checkBloom", produces = "application/json")
    @ResponseBody
    public String check(@RequestBody UserBean user) {
        try {
            // true means the ut may exist; false means it was definitely never added
            boolean b = redisBloomFilter.includeByBloomFilter(bloomFilterHelper, BLOOM_UT, user.getUt());
            if (b) {
                return "existed";
            } else {
                return "not existed";
            }
        } catch (Exception e) {
            logger.error(">>>>>>check bloom error: " + e.getMessage(), e);
            return "check error";
        }
    }
}

Test service interception

When we call the interface with a ut that exists in the bloom filter, the controller returns "existed".

When we call it with a ut that does not exist in the bloom filter, the controller returns "not existed".

This check responds at the millisecond level under 3,000 concurrent requests.

Summary

In fact, we can load hundreds of millions of entries into a bloom filter. A bloom filter takes far less space than storing hashes or MD5 digests, and collisions are rare.

The size of the bloom filter data depends on two values: the expected number of entries and the false-positive probability (the two numeric arguments passed to BloomFilterHelper).

Here the two values mean: within 100 million entries, the false-positive rate is about 1.5 per 10,000. When the bloom filter returns false, the value can be treated as nonexistent with 100% certainty. The higher the accuracy, the more storage is needed in Redis. The space is sized once for the configured capacity; it is not the case that 10,000 entries need 200 KB now and 100,000 entries need 12 MB later. Let's look at how much Redis memory 100 million entries take at an accuracy of one in ten thousand.

100 million entries take only about 200 MB, which is a small footprint for a production Redis.
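The order of magnitude can be checked with the same formulas that BloomFilterHelper uses. The sketch below is a standalone calculation, not part of the project; `BloomSizing` and its method names are hypothetical, and it returns the bit count as a long where the helper uses an int.

```java
class BloomSizing {
    /** Number of bits for n expected entries at false-positive probability p. */
    static long optimalBits(long n, double p) {
        return (long) (-n * Math.log(p) / (Math.log(2) * Math.log(2)));
    }

    /** Number of hash functions for n entries and m bits, at least 1. */
    static int optimalHashes(long n, long m) {
        return Math.max(1, (int) Math.round((double) m / n * Math.log(2)));
    }

    /** Converts a bit count to decimal megabytes. */
    static double megabytes(long bits) {
        return bits / 8.0 / 1_000_000.0;
    }

    public static void main(String[] args) {
        long n = 100_000_000L;
        for (double p : new double[] {0.001, 0.0001}) {
            long m = optimalBits(n, p);
            System.out.printf("p=%s bits=%d hashes=%d size=%.1f MB%n",
                    p, m, optimalHashes(n, m), megabytes(m));
        }
    }
}
```

For 100 million entries this works out to roughly 180 MB at a false-positive probability of 0.001 and roughly 240 MB at 0.0001, which is in line with the figure of about 200 MB above.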

By the way, the common use cases of bloom filters are also summarized here.