redis detailed notes

xxd

2021 / 12 / 03 Beijing

1, Getting started with redis

redis installation

1. Download redis-5.0 7.tar. GZ and put it in our Linux directory / opt

2. In the / opt directory, unzip the command: tar -zxvf redis-5.0 7.tar. gz

3. After decompression, a folder appears: redis-5.0 seven

4. Enter the directory: CD redis-5.0 seven

5. On redis-5.0 Execute the make command in the 7 directory

function make Deliberate error parsing during command: 1. install gcc (gcc yes linux The next compiler is c Program compiler) Internet access: yum install gcc-c++ Version test: gcc-v 2. secondary make 3. Jemalloc/jemalloc.h: There is no such file or directory function make distclean Later make 4. Redis Test((no need to execute)

6. If make is completed, continue to execute make install

7. View the default installation directory: usr/local/bin

1 /usr This is a very important directory, similar to windows Lower Program Files,Store user's program

8. Copy profile (standby)

cd /usr/local/bin ls -l # Back up redis.com in the redis decompression directory conf mkdir myredis cp redis.conf myredis # Copy a backup, form a good habit, and we will modify this file # Modify the configuration to ensure that it can be applied in the background vim redis.conf

Set redis to start the background daemon

A,redis.conf In the configuration file daemonize Daemon thread, default is NO.

B,daemonize Is used to specify redis Whether to start as a daemon thread.

daemonize set up yes perhaps no difference

daemonize:yes

redis Single process multithreading mode is adopted. When redis.conf Medium options daemonize Set as yes When, it means on

Daemon mode. In this mode, redis Will run in the background and pid Number written to redis.conf option

pidfile In the set file, at this time redis Will always run unless manually kill The process.

daemonize:no

When daemonize Option set to no The current interface will enter redis Command line interface, exit Force exit or close

Connection tool(putty,xshell etc.)Will lead to redis The process exited.

Modify the binding host address of the connection in redis

network

After the installation of redis, if you need to connect to the content externally, you need to modify some configurations accordingly so that it can be accessed outside the virtual machine

bind 127.0.0.1 # Bound port protected-mode yes #Protection mode port 6379 # port

Check whether redis is started

# View process ps -ef | grep redis # Short command view process lsof -i :6379 see redis Start

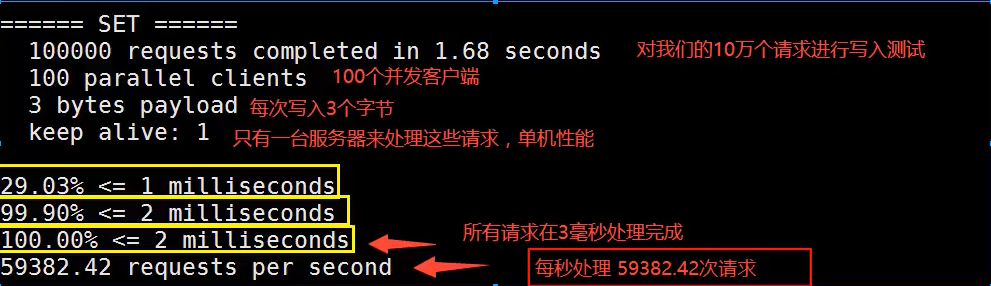

redis performance test

Redis benchmark is the official redis performance test tool

redis-benchmark [option] [option value]

The optional parameters are:

| Serial number | option | describe | Default value |

| 1 | -h | Specify the server host name | 127.0.0.1 |

| 2 | -p | Specify server port | 6379 |

| 3 | -s | Specify server socket | |

| 4 | -c | Specifies the number of concurrent connections | 50 |

| 5 | -n | Specify the number of requests | 10000 |

| 6 | -d | Specifies the data size of the SET/GET value in bytes | 2 |

| 7 | -k | 1=keep alive 0=reconnect | 1 |

| 8 | -r | SET/GET/INCR uses random keys and Sadd uses random values | |

| 9 | -P | Pipeline requests | 1 |

| 10 | -q | Force to exit redis. Show only query/sec values | |

| 11 | –csv | Export in CSV format | |

| 12 | *-L * (lowercase letter of L) | Generate a loop and permanently execute the test | |

| 13 | -t | Run only a comma separated list of test commands. | |

| 14 | *-i * (capital letter of i) | Idle mode. Open only N idle connections and wait. |

2, redis database operation

Detailed explanation of redis official website command

DBSIZE #View the number of key s in the database #By default, there are 16 databases in redis (which can be modified in the configuration file). By default, database No. 0 is used, and the database names are accumulated by default and cannot be defined by themselves. If the database needs to set a password, set a database password, and all of them need password verification. flushdb #Empty current library flushall #Empty all libraries # Exists is the name of the key to determine whether a key exists exists key xiao #Remove a key from the current library move aa 1 # expire key seconds: set the lifetime for a given key. When the key expires (the lifetime is 0), it will be deleted automatically. Normal time is not set. The default is - 1 (never expires) # ttl key to check how many seconds are left to expire, - 1 means it will never expire, - 2 means it has expired # type key to see what type of key you have

3, Data types in redis

As a nosql database, the core of redis is single thread operation. It can be used as database, cache and message agent. It supports data structures such as strings, hashes, lists, collections, sorted collections with range queries, bitmaps, hyperlogs, geospatial indexes with radius queries and streams. Redis has built-in replication, lua script, LRU eviction, transaction and different levels of disk persistence, and provides high availability through redis sentinel and redis cluster automatic partition.

1. String string type

Single value

String type is binary safe, which means that redis's string can contain any data, such as jpg pictures or serialized objects. String type is the most basic data type of redis. The string value in a redis can be 512M at most.

# set setting value. When setting, you can use expire key seconds to specify the expiration time # Get get value # del delete value # append add value 127.0.0.1:6379> append key1 "hello" # APPEND the nonexistent key, which is equivalent to SET key1 "hello" 127.0.0.1:6379> APPEND key1 "-2333" # Append (integer) the existing string. The length is increased from 5 characters to 10 characters # strlen gets the length of the string # incr and decr must be numbers to add or subtract, + 1 and - 1. # The incrby and decrby commands add the specified increment value to the number stored in the key. 127.0.0.1:6379> set views 0 OK 127.0.0.1:6379> incr views (integer) 1 127.0.0.1:6379> incr views (integer) 2 127.0.0.1:6379> incr views (integer) 3 127.0.0.1:6379> decr views (integer) 2 127.0.0.1:6379> decr views (integer) 1 127.0.0.1:6379> incrby views 10 (integer) 14 127.0.0.1:6379> decrby views 5 (integer) 9 127.0.0.1:6379> # Range [range] # getrange gets the value within the specified range, similar to between And, from zero to negative one indicates all (0 - 1) and other ranges indicate that the subscript is calculated from 0 # Setrange sets the value within the specified range. The format is the specific value of setrange key value (replace the following value from the current subscript) 127.0.0.1:6379> set key2 abcdef123456 OK 127.0.0.1:6379> strlen key2 (integer) 12 127.0.0.1:6379> getrange key2 0 -1 "abcdef123456" 127.0.0.1:6379> getrange key2 0 4 "abcde" 127.0.0.1:6379> 127.0.0.1:6379> get key2 "abcdef123456" 127.0.0.1:6379> setrange key2 3 123456789 (integer) 12 127.0.0.1:6379> get key2 "abc123456789" 127.0.0.1:6379> 127.0.0.1:6379> get key2 "abc123456789" 127.0.0.1:6379> setrange key2 4 xx (integer) 12 127.0.0.1:6379> get jey2 (nil) 127.0.0.1:6379> get key2 "abc1xx456789" 127.0.0.1:6379> # The setex (set with expire) key is used to set the second value together with the expiration time # setnx (set if not exist) sets the value. If it does not exist, it sets the corresponding key value. If it does exist, it does not set the value, and returns 0. # The mset Mset command is used to set one or more key value pairs at the same time. # The mget command returns the values of all (one or more) given keys. If a given key does not exist, the key returns the special value nil. # msetnx when all keys are set successfully, 1 is returned. If all the given keys fail to be set (at least one key already exists), 0 is returned. Atomic operation 127.0.0.1:6379> mset key3 xiaoxiao key4 xiaoxiao1 OK 127.0.0.1:6379> keys * 1) "name2" 2) "key2" 3) "views" 4) "name" 5) "key3" 6) "key1" 7) "key4" 127.0.0.1:6379> keys * 1) "name2" 2) "key2" 3) "views" 4) "name" 5) "key1" 127.0.0.1:6379> mget name2 key1 key2 key3 1) "linux" 2) "helloworld" 3) "redis" 4) (nil) 127.0.0.1:6379> keys * 1) "name2" 2) "key2" 3) "views" 4) "name" 5) "key3" 6) "key1" 7) "key4" 127.0.0.1:6379> msetnx key1 xiaoxiao key5 xiaoxioa3 (integer) 0 127.0.0.1:6379> keys * 1) "name2" 2) "key2" 3) "views" 4) "name" 5) "key3" 6) "key1" 7) "key4" 127.0.0.1:6379> get key5 (nil) # Traditional object cache set user:1 value(json data) # Can be used to cache objects mset user:1:name zhangsan user:1:age 2 mget user:1:name user:1:age # =================================================== # getset (get before set) # =================================================== 127.0.0.1:6379> getset db mongodb # No old value, return nil (nil) 127.0.0.1:6379> get db "mongodb" 127.0.0.1:6379> getset db redis # Return old value mongodb "mongodb" 127.0.0.1:6379> get db "redis"

2. List list

Single value multi value

# Lpush: inserts one or more values into the list header. (left) # rpush: inserts one or more values at the end of the list. (right) # lrange: returns the elements within the specified interval in the list. The interval is specified by offset START and END. Where 0 represents the first element of the list, 1 represents the second element of the list, and so on. Negative subscripts can also be used, with - 1 representing the last element of the list, - 2 representing the penultimate element of the list, and so on. 127.0.0.1:6379> lpush list aa bb cc dd (integer) 4 127.0.0.1:6379> lrange list 0 -1 1) "dd" 2) "cc" 3) "bb" 4) "aa" 127.0.0.1:6379> lrange list 0 1 1) "dd" 2) "cc" # The lpop command removes and returns the first element of the list. When the list key does not exist, nil is returned. # rpop removes the last element of the list, and the return value is the removed element. 127.0.0.1:6379> lpop list "dd" 127.0.0.1:6379> rpop list "aa" 127.0.0.1:6379> lrange list 0 -1 1) "cc" 2) "bb" # lindex gets the value of the subscript corresponding to the element 127.0.0.1:6379> lrange list 0 -1 1) "cc" 2) "bb" 127.0.0.1:6379> lindex list 0 "cc" 127.0.0.1:6379> lrange list 0 -1 1) "cc" 2) "bb" 127.0.0.1:6379> lindex list 1 "bb" # llen returns the length of the list 127.0.0.1:6379> llen list (integer) 2 # lrem key removes the elements in the list equal to the parameter value according to the value of the parameter count 127.0.0.1:6379> lrange list2 0 -1 1) "4jkl" 2) "3ghi" 3) "2def" 4) "1abc" 127.0.0.1:6379> lrem list2 0 "1abc" (integer) 1 127.0.0.1:6379> lrem list2 1 "1abc" (integer) 0 127.0.0.1:6379> lrem list2 1 "2def" (integer) 1 127.0.0.1:6379> lrange list2 0 -1 1) "4jkl" 2) "3ghi" # Ltrim key trims a list, that is, only the elements within the specified interval are retained in the list, and the elements not within the specified interval will be deleted. 127.0.0.1:6379> rpush list3 value1 value2 value3 (integer) 3 127.0.0.1:6379> lrange list3 0 -1 1) "value1" 2) "value2" 3) "value3" 127.0.0.1:6379> ltrim list3 1 2 OK 127.0.0.1:6379> lrange list3 0 -1 1) "value2" 2) "value3" # Rpop lpush removes the last element of the list, adds it to another list, and returns. 127.0.0.1:6379> lrange list2 0 -1 1) "4jkl" 2) "3ghi" 127.0.0.1:6379> lrange list3 0 -1 1) "value2" 2) "value3" 127.0.0.1:6379> rpoplpush list2 list3 "3ghi" 127.0.0.1:6379> lrange list2 0 -1 1) "4jkl" 127.0.0.1:6379> lrange list3 0 -1 1) "3ghi" 2) "value2" 3) "value3" # lset key index value sets the value of the element whose index is the index of the list key to value. 127.0.0.1:6379> exists list # LSET the empty list (key does not exist) (integer) 0 127.0.0.1:6379> lset list 0 item # report errors (error) ERR no such key 127.0.0.1:6379> lpush list "value1" # LSET non empty list (integer) 1 127.0.0.1:6379> lrange list 0 0 1) "value1" 127.0.0.1:6379> lset list 0 "new" # Update value OK 127.0.0.1:6379> lrange list 0 0 1) "new" 127.0.0.1:6379> lset list 1 "new" # Error when index exceeds the range (error) ERR index out of range #linsert key before/after pivot value is used to insert elements before or after elements in the list. Insert the value value into the list key, before or after the value pivot. 127.0.0.1:6379> lrange list3 0 -1 1) "value0" 2) "value2" 3) "value3" 127.0.0.1:6379> linsert list3 before value2 value1 (integer) 4 127.0.0.1:6379> lrange list3 0 -1 1) "value0" 2) "value1" 3) "value2" 4) "value3"

Performance summary:

List is actually a linked list. Node before after can be inserted on its left or right.

Inserting on both sides or changing values is the most efficient. Intermediate elements are relatively inefficient.

Message queuing, message queuing: Lpush Rpop stack: Lpush Lpop

List is a linked list. Anyone with a little knowledge of data structure should be able to understand its structure. Using the Lists structure, we can easily implement the latest message ranking and other functions. Another application of list is message queue. You can use the push operation of list to store tasks in the list, and then the working thread takes out the tasks for execution with pop operation. Redis also provides an api to operate a section in the list. You can query directly and delete the elements of a section in the list. Redis's list is a two-way linked list with each sub element of String type. You can add or delete elements from the head or tail of the list through push and pop operations. In this way, the list can be used as a stack or a queue.

3. Set set

Unordered non repeating set

# =================================================== # sadd adds one or more member elements to the collection and cannot be repeated # smembers returns all members in the collection. # The sismember command determines whether a member element is a member of a collection. # =================================================== 127.0.0.1:6379> sadd myset "hello" (integer) 1 127.0.0.1:6379> sadd myset "kuangshen" (integer) 1 127.0.0.1:6379> sadd myset "kuangshen" (integer) 0 127.0.0.1:6379> SMEMBERS myset 1) "kuangshen" 2) "hello" 127.0.0.1:6379> SISMEMBER myset "hello" (integer) 1 127.0.0.1:6379> SISMEMBER myset "world" (integer) 0 # =================================================== # scard, get the number of elements in the collection # =================================================== 127.0.0.1:6379> scard myset (integer) 2 # =================================================== # srem key value is used to remove one or more member elements from the collection # =================================================== 127.0.0.1:6379> srem myset "kuangshen" (integer) 1 127.0.0.1:6379> SMEMBERS myset 1) "hello" # =================================================== # The srandmember key command returns a random element in a collection. # =================================================== 127.0.0.1:6379> SMEMBERS myset 1) "kuangshen" 2) "world" 3) "hello" 127.0.0.1:6379> SRANDMEMBER myset "hello" 127.0.0.1:6379> SRANDMEMBER myset 2 1) "world" 2) "kuangshen" 127.0.0.1:6379> SRANDMEMBER myset 2 1) "kuangshen" 2) "hello" # =================================================== # spop key is used to remove one or more random elements of the specified key in the collection # =================================================== 127.0.0.1:6379> SMEMBERS myset 1) "kuangshen" 2) "world" 3) "hello" 127.0.0.1:6379> spop myset "world" 127.0.0.1:6379> spop myset "kuangshen" 127.0.0.1:6379> spop myset "hello" # =================================================== # smove SOURCE DESTINATION MEMBER # Moves the specified member element from the source collection to the destination collection. # =================================================== 127.0.0.1:6379> sadd myset "hello" (integer) 1 127.0.0.1:6379> sadd myset "world" (integer) 1 127.0.0.1:6379> sadd myset "kuangshen" (integer) 1 127.0.0.1:6379> sadd myset2 "set2" (integer) 1 127.0.0.1:6379> smove myset myset2 "kuangshen" (integer) 1 127.0.0.1:6379> SMEMBERS myset 1) "world" 2) "hello" 127.0.0.1:6379> SMEMBERS myset2 1) "kuangshen" 2) "set2" # =================================================== - Digital collection class - Difference set: sdiff - Intersection: sinter - Union: sunion # =================================================== 127.0.0.1:6379> sadd key1 a (integer) 1 127.0.0.1:6379> sadd key1 b (integer) 1 127.0.0.1:6379> sadd key1 c (integer) 1 127.0.0.1:6379> sadd key2 c (integer) 1 127.0.0.1:6379> sadd key2 d (integer) 1 127.0.0.1:6379> sadd key2 e (integer) 1 127.0.0.1:6379> SMEMBERS key1 1) "a" 2) "c" 3) "b" 127.0.0.1:6379> SMEMBERS key2 1) "d" 2) "c" 3) "e" 127.0.0.1:6379> sdiff key1 key2 # Difference set 1) "a" 2) "b" 127.0.0.1:6379> SINTER key1 key2 # intersection 1) "c" 127.0.0.1:6379> SUNION key1 key2 # Union 1) "b" 2) "c" 3) "a" 4) "d" 5) "e"

In the microblog application, all the followers of a user can be stored in a collection, and all their fans can be stored in a collection. Redis also provides intersection, union, difference and other operations for collections, which can easily realize functions such as common concern, common preference, second degree friend and so on. For all the above collection operations, you can also use different commands to choose whether to return the results to the client or save them to a new collection.

4. Hash hash

The K V mode remains unchanged, but V is a map set in the form of key value pairs, key value

value is a set of map s

# =================================================== # The hset and hget commands are used to assign values to fields in the hash table. # hmset and hmget set multiple field value pairs into the hash table at the same time. The existing fields in the hash table will be overwritten. # hgetall is used to return all fields and values in the hash table. # hdel is used to delete one or more specified fields in the hash table key # =================================================== 127.0.0.1:6379> hset myhash field1 "kuangshen" (integer) 1 127.0.0.1:6379> hget myhash field1 "kuangshen" 127.0.0.1:6379> HMSET myhash field1 "Hello" field2 "World" OK 127.0.0.1:6379> HGET myhash field1 "Hello" 127.0.0.1:6379> HGET myhash field2 "World" 127.0.0.1:6379> hgetall myhash 1) "field1" 2) "Hello" 3) "field2" 4) "World" 127.0.0.1:6379> HDEL myhash field1 (integer) 1 127.0.0.1:6379> hgetall myhash 1) "field2" 2) "World" # =================================================== # hlen gets the number of fields in the hash table. # =================================================== 127.0.0.1:6379> hlen myhash (integer) 1 127.0.0.1:6379> HMSET myhash field1 "Hello" field2 "World" OK 127.0.0.1:6379> hlen myhash (integer) 2 # =================================================== # hexists checks whether the specified field of the hash table exists. # =================================================== 127.0.0.1:6379> hexists myhash field1 (integer) 1 127.0.0.1:6379> hexists myhash field3 (integer) 0 # =================================================== # hkeys gets all field s in the hash table. # hvals returns the values of all fields in the hash table. # =================================================== 127.0.0.1:6379> HKEYS myhash 1) "field2" 2) "field1" 127.0.0.1:6379> HVALS myhash 1) "World" 2) "Hello" # =================================================== # hincrby adds the specified increment value to the field value in the hash table. # =================================================== 127.0.0.1:6379> hset myhash field 5 (integer) 1 127.0.0.1:6379> HINCRBY myhash field 1 (integer) 6 127.0.0.1:6379> HINCRBY myhash field -1 (integer) 5 127.0.0.1:6379> HINCRBY myhash field -10 (integer) -5 # =================================================== # hsetnx assigns a value to a field that does not exist in the hash table. # =================================================== 127.0.0.1:6379> HSETNX myhash field1 "hello" (integer) 1 # Set successfully, return 1. 127.0.0.1:6379> HSETNX myhash field1 "world" (integer) 0 # Returns 0 if the given field already exists. 127.0.0.1:6379> HGET myhash field1 "hello"

Application of hash:

Save some changed data, especially some user information, and some frequently changed information.

Redis hash is a mapping table of field and value of string type. Hash is especially suitable for storing objects. Store partially changed data, such as user information, etc.

5. Ordered set zset

A value is added on the basis of set;

set k1 v1

zset k1 score v1

127.0.0.1:6379> zadd myset 1 one #Add a value to zset (integer) 1 127.0.0.1:6379> zadd myset 2 two (integer) 1 127.0.0.1:6379> zadd myset 2 two 3 three #Add multiple values (integer) 1 127.0.0.1:6379> zrange myset 0 -1 # Get the value inside 1) "one" 2) "two" 3) "three" ##sort 127.0.0.1:6379> zadd saraly 2500 xiaohong (integer) 1 127.0.0.1:6379> zadd saraly 5000 zhangsan (integer) 1 127.0.0.1:6379> zadd saraly 500 xiaowang (integer) 1 127.0.0.1:6379> ZRANGEBYSCORE salary -inf +inf #Sorted from small to large, inf represents infinity (empty array) 127.0.0.1:6379> ZRANGEBYSCORE saraly -inf +inf 1) "xiaowang" 2) "xiaohong" 3) "zhangsan" 127.0.0.1:6379> ZRANGEBYSCORE saraly 0 -1 (empty array) 127.0.0.1:6379> ZRANGEBYSCORE saraly -inf +inf withscores #Sort from small to large, and then output the corresponding scores 1) "xiaowang" 2) "500" 3) "xiaohong" 4) "2500" 5) "zhangsan" 6) "5000" # Remove elements from rem 127.0.0.1:6379> zrange saraly 0 -1 1) "xiaowang" 2) "xiaohong" 3) "zhangsan" 127.0.0.1:6379> zrem saraly zhangsan (integer) 1 127.0.0.1:6379> zrange saraly 0 -1 1) "xiaowang" 2) "xiaohong" #Number of views 127.0.0.1:6379> zcard saraly (integer) 2 # =================================================== # zrank returns the ranking of specified members in an ordered set. The members of the ordered set are arranged in the order of increasing fractional value (from small to large). # =================================================== 127.0.0.1:6379> zadd salary 2500 xiaoming (integer) 1 127.0.0.1:6379> zadd salary 5000 xiaohong (integer) 1 127.0.0.1:6379> zadd salary 500 kuangshen (integer) 1 127.0.0.1:6379> ZRANGE salary 0 -1 WITHSCORES # Displays all members and their score values 1) "kuangshen" 2) "500" 3) "xiaoming" 4) "2500" 5) "xiaohong" 6) "5000" 127.0.0.1:6379> zrank salary kuangshen # Display the salary ranking of kuangshen, the lowest (integer) 0 127.0.0.1:6379> zrank salary xiaohong # Show xiaohong's salary ranking, third (integer) 2 # =================================================== # zrevrank returns the ranking of members in an ordered set. The ordered set members are sorted in descending order (from large to small) according to the score value. # =================================================== 127.0.0.1:6379> ZREVRANK salary kuangshen # Crazy God III (integer) 2 127.0.0.1:6379> ZREVRANK salary xiaohong # Little red first (integer) 0

Compared with set, sorted set adds a weight parameter score, so that the elements in the set can be arranged orderly according to score. For example, for a sorted set that stores the scores of the whole class, the set value can be the student number of the students, and the score can be the test score. In this way, natural sorting has been carried out when the data is inserted into the set. Sorted set can be used as a weighted queue. For example, the score of ordinary messages is 1 and the score of important messages is 2. Then the worker thread can choose to obtain work tasks in the reverse order of score. Give priority to important tasks. Leaderboard application, take TOP N operation!

4, Three special data types

1. geospatial geographic location

The geo feature of Redis is launched in Redis version 3.2. This function can store and operate the geographic location information given by the user. To realize functions that depend on geographic location information, such as nearby location and shaking. The data type of geo is zset. The data structure of geo has six common commands: geoadd, geopos, geodist, georadius, georadius by member, and gethash

geoadd

# grammar geoadd key longitude latitude member ... # Add the given spatial element (latitude, longitude, name) to the specified key. # These data will be stored in the key in the form of ordered set he, so that commands such as georadius and georadius by member can obtain these elements through location query later. # The geoadd command accepts parameters in the standard x,y format, so the user must enter longitude first and then latitude. # The coordinates geoadd can record are limited: areas very close to the poles cannot be indexed. # The effective longitude is between - 180 and 180 degrees, and the effective latitude is between -85.05112878 and 85.05112878 degrees., When the user tries to enter an out of range longitude or latitude, the geoadd command returns an error.

127.0.0.1:6379> geoadd china:city 116.23 40.22 Beijing (integer) 1 127.0.0.1:6379> geoadd china:city 121.48 31.40 Shanghai 113.88 22.55 Shenzhen 120.21 30.20 Hangzhou (integer) 3 127.0.0.1:6379> geoadd china:city 106.54 29.40 Chongqing 108.93 34.23 Xi'an 114.02 30.58 Wuhan (integer) 3

geopos

# grammar geopos key member [member...] #Return the positions (longitude and latitude) of all the given positioning elements from the key

127.0.0.1:6379> geopos china:city Beijing 1) 1) "116.23000055551528931" 2) "40.2200010338739844" 127.0.0.1:6379> geopos china:city Shanghai Chongqing 1) 1) "121.48000091314315796" 2) "31.40000025319353938" 2) 1) "106.54000014066696167" 2) "29.39999880018641676" 127.0.0.1:6379> geopos china:city Xinjiang 1) (nil)

geodist

# grammar geodist key member1 member2 [unit] # Returns the distance between two given positions. If one of the two positions does not exist, the command returns null. # The parameter unit of the specified unit must be one of the following units: # m is in meters # km is expressed in kilometers # mi is in miles # ft is in feet # If you do not explicitly specify the unit parameter, geodist defaults to meters. #The geodist command assumes that the earth is a perfect sphere when calculating the distance. In extreme cases, this assumption will cause a maximum error of 0.5% Bad.

127.0.0.1:6379> geodist china:city Beijing Shanghai "1088785.4302" 127.0.0.1:6379> geodist china:city Beijing Shanghai km "1088.7854" 127.0.0.1:6379> geodist china:city Chongqing Beijing km "1491.6716"

georadius

# grammar georadius key longitude latitude radius m|km|ft|mi [withcoord][withdist] [withhash][asc|desc][count count] # Take the given latitude and longitude as the center to find out the elements within a certain radius

Test: reconnect redis CLI and add the parameter - raw to force the output of Chinese, otherwise it will be garbled

[root@kuangshen bin]# redis-cli --raw -p 6379 # Search china:city for cities with coordinates of 100 30 and a radius of 1000km 127.0.0.1:6379> georadius china:city 100 30 1000 km Chongqing Xi'an # Withlist returns the location name and center distance 127.0.0.1:6379> georadius china:city 100 30 1000 km withdist Chongqing 635.2850 Xi'an 963.3171 # withcoord returns the location name and latitude and longitude 127.0.0.1:6379> georadius china:city 100 30 1000 km withcoord Chongqing 106.54000014066696167 29.39999880018641676 Xi'an 108.92999857664108276 34.23000121926852302 # Withlist withword returns the location name, distance, longitude and latitude count, and limits the number of searches 127.0.0.1:6379> georadius china:city 100 30 1000 km withcoord withdist count 1 Chongqing 635.2850 106.54000014066696167 29.39999880018641676 127.0.0.1:6379> georadius china:city 100 30 1000 km withcoord withdist count 2 Chongqing 635.2850 106.54000014066696167 29.39999880018641676 Xi'an 963.3171 108.92999857664108276 34.23000121926852302

georadiusbymember

# grammar georadiusbymember key member radius m|km|ft|mi [withcoord][withdist] [withhash][asc|desc][count count] # Find the element within the specified range. The center point is determined by the given location element

127.0.0.1:6379> GEORADIUSBYMEMBER china:city Beijing 1000 km Beijing Xi'an 127.0.0.1:6379> GEORADIUSBYMEMBER china:city Shanghai 400 km Hangzhou Shanghai

geohash

# grammar geohash key member [member...] # Redis uses geohash to convert two-dimensional latitude and longitude into one-dimensional string. The longer the string, the more accurate the position, and the more similar the two strings Indicates the closer the distance.

127.0.0.1:6379> geohash china:city Beijing Chongqing wx4sucu47r0 wm5z22h53v0 127.0.0.1:6379> geohash china:city Beijing Shanghai wx4sucu47r0 wtw6sk5n300

rem

GEO does not provide a command to delete members, but because the underlying implementation of GEO is zset, you can use the zrem command to delete geographic location information

127.0.0.1:6379> geoadd china:city 116.23 40.22 beijin 1 127.0.0.1:6379> zrange china:city 0 -1 # View all elements Chongqing Xi'an Shenzhen Wuhan Hangzhou Shanghai beijin Beijing 127.0.0.1:6379> zrem china:city beijin # Removing Elements 1 127.0.0.1:6379> zrem china:city Beijing # Removing Elements 1 127.0.0.1:6379> zrange china:city 0 -1 Chongqing Xi'an Shenzhen Wuhan Hangzhou Shanghai

2. Hyperloglog statistics

Redis on 2.8 Version 9 adds the hyperlog structure.

Redis HyperLogLog is an algorithm for cardinality statistics. The advantage of HyperLogLog is that when the number or volume of input elements is very, very large, the space required to calculate the cardinality is always fixed and very small.

In Redis, each hyperlog key only needs 12 KB of memory to calculate the cardinality of nearly 2 ^ 64 different elements. This is in sharp contrast to a collection where the more elements consume more memory when calculating the cardinality.

Hyperlog is an algorithm that provides an imprecise de duplication scheme.

Take a chestnut: if I want to count the UV of a web page (browse the number of users. The same user can only visit once a day). The traditional solution is to use the Set to save the user id, and then count the number of elements in the Set to obtain the page UV. However, this scheme can only carry a small number of users. Once the number of users becomes large, it needs to consume a lot of space to store the user id. my purpose is to count the number of users instead of It's saving users. It's a thankless scheme! The hyperlog of Redis can count a large number of users in 12k at most. Although it has an error rate of about 0.81%, it can be ignored for UV statistics, which do not need very accurate data.

Base:

For example, if the dataset {1, 3, 5, 7, 5, 7, 8}, the cardinality set of the dataset is {1, 3, 5, 7, 8}, and the cardinality (non repeating elements) is 5. Cardinality estimation is to quickly calculate the cardinality within the acceptable error range.

| command | describe |

|---|---|

| [PFADD key element [element ...] | Adds the specified element to the hyperlog |

| [PFCOUNT key [key ...] | Returns the cardinality estimate for a given hyperlog |

| [PFMERGE destkey sourcekey [sourcekey ...] | Merge multiple hyperloglogs into one HyperLogLog and calculate them together |

127.0.0.1:6379> PFADD mykey a b c d e f g h i j 1 127.0.0.1:6379> PFCOUNT mykey 10 127.0.0.1:6379> PFADD mykey2 i j z x c v b n m 1 127.0.0.1:6379> PFMERGE mykey3 mykey mykey2 OK 127.0.0.1:6379> PFCOUNT mykey3 15

3. Bitmap bitmap

During development, you may encounter this situation: you need to count some user information, such as active or inactive, login or not; In addition, if you need to record the user's clocking in a year, it is 1 if you punch in and 0 if you don't punch in. If you use ordinary key/value storage, 365 records will be recorded. If the number of users is large, the required space will be large. Therefore, Redis provides the data structure of bitmap. Bitmap is recorded by operating binary bits, i.e. 0 and 1; If you want to record the clocking in of 365 days, the form of bitmap is roughly as follows: 0101000111000111... What are the benefits of this? Of course, it saves memory. 365 days is equivalent to 365 bit, and 1 byte = 8 bit, so it is equivalent to using 46 bytes.

bitmap represents the corresponding value or state of an element through a bit bit, in which the key is the corresponding element itself. In fact, the bottom layer is also realized through the operation of string. Redis has added setbit, getbit, bitcount and other bitmap related commands since version 2.2.

setbit setting operation

SETBIT key offset value: set the offset bit of the key to value (1 or 0)

# Use bitmap to record the clock out records of one week in the above case, as shown below: # Monday: 1, Tuesday: 0, Wednesday: 0, Thursday: 1, Friday: 1, Saturday: 0, Sunday: 0 (1 means clock in, 0 means no clock out) 127.0.0.1:6379> setbit sign 0 1 0 127.0.0.1:6379> setbit sign 1 0 0 127.0.0.1:6379> setbit sign 2 0 0 127.0.0.1:6379> setbit sign 3 1 0 127.0.0.1:6379> setbit sign 4 1 0 127.0.0.1:6379> setbit sign 5 0 0 127.0.0.1:6379> setbit sign 6 0 0

getbit get operation

GETBIT key offset gets the value set by offset. If it is not set, it returns 0 by default

127.0.0.1:6379> getbit sign 3 # Check whether you clock in on Thursday 1 127.0.0.1:6379> getbit sign 6 # Check whether you clock in on the seventh day of the week 0

bitcount statistics operation

bitcount key [start, end] counts the number of keys whose upper level is 1

# According to the clocking records of this week, it can be seen that only 3 days are clocking in: 127.0.0.1:6379> bitcount sign 3

5, Redis Conf configuration file

1. Redis does not run as a daemon by default. You can modify this configuration item and use yes to enable the daemon

daemonize no

2. When Redis runs as a daemon, Redis writes the pid to / var / run / Redis.com by default pid file, which can be specified through pidfile

pidfile /var/run/redis.pid

3. Specify the Redis listening port. The default port is 6379. In a blog post, the author explained why 6379 is selected as the default port, because 6379 is the number corresponding to MERZ on the mobile phone button, and MERZ is taken from the name of Italian singer Alessia Merz

port 6379

4. Bound host address

bind 127.0.0.1

5. When the client is idle for how long, close the connection. If 0 is specified, it means that the function is closed

timeout 300

6. Specify the logging level. Redis supports four levels in total: debug, verbose, notice (used in production environment) and warning. The default is verbose

loglevel verbose

7. The logging mode is standard output by default. If Redis is configured to run in daemon mode, and the logging mode is configured here as standard output, the log will be sent to / dev/null

logfile stdout

8. Set the number of databases. The default database is 0. You can use the SELECT command to specify the database id on the connection

databases 16

9. Specify how many update operations will be performed within a certain period of time to synchronize the data to the data file. Multiple conditions can be combined with three conditions provided in the default configuration file of save Redis:

save 900 1 save 300 10 save 60 10000 means one change in 900 seconds (15 minutes), 10 changes in 300 seconds (5 minutes) and 10000 changes in 60 seconds, respectively.

10. Specify whether to compress the data when stored in the local database. The default is yes. Redis adopts LZF compression. If you want to save CPU time, you can turn this option off, but the database file will become huge rdbcompression yes

11. Specify the local database file name. The default value is dump rdb

dbfilename dump.rdb

12. Specify local database storage directory

dir ./

13. Set the IP address and port of the master service when the local machine is a slav e service. When Redis is started, it will automatically synchronize data from the master

slaveof

14. When password protection is set for the master service, the slav e service uses the password to connect to the master

masterauth

15. Set the Redis connection password. If the connection password is configured, the client needs to provide the password through the AUTH command when connecting to Redis. It is closed by default

requirepass foobared

config get requirepass #Get password is blank by default config set requirepass "123456" This is password 123456 # Log in to redis auth 123456 #The following operations are the same as others

16. Set the maximum number of client connections at the same time. By default, there is no limit. The number of client connections that Redis can open at the same time is the maximum number of file descriptors that Redis process can open. If maxclients 0 is set, it means there is no limit. When the number of client connections reaches the limit, Redis will close the new connection and return the max number of clients reached error message to the client

maxclients 128

17. Specify the maximum memory limit of redis. When redis starts, it will load data into memory. When the maximum memory is reached, redis will first try to clear the expired or about to expire keys. After this method is processed, it still reaches the maximum memory setting, and can no longer write, but can still read. Redis's new vm mechanism will store the Key in memory and the Value in the swap area

maxmemory

18. Specify whether to log after each update operation. Redis writes data to disk asynchronously by default. If it is not enabled, it may cause data loss for a period of time during power failure. Because redis synchronizes data files according to the above save conditions, some data will only exist in memory for a period of time. The default is no

appendonly no

19. Specifies the name of the update log file. The default is

appendonly.aof appendfilename appendonly.aof

20. Specify the update log condition. There are three optional values:

no: indicates that the operating system performs data cache synchronization to the disk (fast)

always: indicates that after each update operation, fsync() is called manually to write data to disk (slow and safe)

everysec: indicates one synchronization per second (compromise, default)

appendfsync everysec

21. Specify whether to enable the virtual memory mechanism. The default value is no. for a brief introduction, the VM mechanism stores the data in pages. Redis will swap the cold data of the pages with less access to the disk, and the pages with more access will be automatically swapped out of memory by the disk (I will carefully analyze redis's VM mechanism in the following article)

vm-enabled no

22. Virtual memory file path. The default value is / TMP / Redis Swap, which cannot be shared by multiple Redis instances

vm-swap-file /tmp/redis.swap

23. Store all data larger than VM Max memory in virtual memory. No matter how small VM Max memory is set, all index data is stored in memory (redis index data is keys), that is, when VM Max memory is set to 0, all values are actually stored in disk. The default value is 0 VM Max memory 0 24. Redis swap files are divided into many pages. An object can be saved on multiple pages, but one page cannot be shared by multiple objects. VM page size should be set according to the stored data size. The author suggests that if many small objects are stored, the page size should be set to 32 or 64 bytes; If you store very large objects, you can use a larger page. If you are not sure, you can use the default value

vm-page-size 32

25. Set the number of pages in the swap file. Since the page table (a bitmap indicating that the page is idle or used) is placed in memory, every 8 pages on the disk will consume 1 byte of memory.

vm-pages 134217728

26. Set the number of threads accessing the swap file. It is best not to exceed the number of cores of the machine. If it is set to 0, all operations on the swap file are serial, which may cause a long delay. The default value is 4

vm-max-threads 4

27. Set whether to combine smaller packets into one packet to send when answering to the client. It is on by default

glueoutputbuf yes

28. Specify a special hash algorithm when the number exceeds a certain number or the maximum element exceeds a critical value

hash-max-zipmap-entries 64

hash-max-zipmap-value 512

29. Specify whether to activate reset hash. It is on by default (described in detail later when Redis hash algorithm is introduced)

activerehashing yes

30. Specify other configuration files. You can use the same configuration file among multiple Redis instances on the same host, and each instance has its own specific configuration file

include /path/to/local.conf

6, Spring boot combined with redis

Check the Redis source code configuration in Springboot

@Configuration //This is a configuration class

@ConditionalOnClass(RedisOperations.class)

@EnableConfigurationProperties(RedisProperties.class) //Automatically import the configuration here

@Import({ LettuceConnectionConfiguration.class, JedisConnectionConfiguration.class }) //Import these two components, which are configured here, but in spring boot 2 After 0, the lettuce method is adopted, which can reduce the thread overhead. The netty method is used at the bottom, similar to the BIO method

public class RedisAutoConfiguration {

@Bean

@ConditionalOnMissingBean(name = "redisTemplate") //This annotation is added here. If we define our own redistemplet, it will invalidate this redistemplet.

public RedisTemplate<Object, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory)

throws UnknownHostException {

RedisTemplate<Object, Object> template = new RedisTemplate<>();

template.setConnectionFactory(redisConnectionFactory);

return template;

}

@Bean

@ConditionalOnMissingBean

public StringRedisTemplate stringRedisTemplate(RedisConnectionFactory redisConnectionFactory)

throws UnknownHostException {

StringRedisTemplate template = new StringRedisTemplate();

template.setConnectionFactory(redisConnectionFactory);

return template;

}

}

Redistemplet configured by default in Springboot uses JDK serialization, which will escape Chinese strings.

Write your own redistemplet

@Configuration

public class RedisConfig {

@Bean

@SuppressWarnings("all")

public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory factory) {

//Modify the String Object you often use

RedisTemplate<String, Object> template = new RedisTemplate<>();

template.setConnectionFactory(factory);

//Serialization configuration json serialization Object escape

Jackson2JsonRedisSerializer jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);

ObjectMapper om = new ObjectMapper();

om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);

om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);

jackson2JsonRedisSerializer.setObjectMapper(om);

//String serialization

StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();

// The key is serialized by String

template.setKeySerializer(stringRedisSerializer);

// The key of hash is also serialized by String

template.setHashKeySerializer(stringRedisSerializer);

// value is serialized by jackson

template.setValueSerializer(jackson2JsonRedisSerializer);

// The value serialization method of hash is jackson

template.setHashValueSerializer(jackson2JsonRedisSerializer);

template.afterPropertiesSet();

return template;

}

}

Write your own redisUtils

Can be on the network, many packaged, can be used directly.

Select redis version

The version problem here:

If your spring boot The version number of is 1.4.0 To 1.5.0 Between, add redis of jar Add as package spring-boot-starter-data-redis and spring-boot-starter-redis Yes, you can... But if your spring boot The version number of is 1.4.0 It used to be 1.3.8 Before version, add redis of jar The package must be spring-boot-starter-redis of jar Package..

7, Other

1. redis transaction

Redis transaction concept:

Redis transaction is essentially a collection of commands. Transactions support the execution of multiple commands at a time, and all commands in a transaction will be serialized. During transaction execution, the commands in the execution queue will be serialized in order, and the command requests submitted by other clients will not be inserted into the transaction execution command sequence.

In conclusion, redis transaction is a one-time, sequential and exclusive execution of a series of commands in a queue.

Redis transactions do not have the concept of isolation level:

Batch operations are put into the queue cache before sending EXEC commands and will not be actually executed!

Redis does not guarantee atomicity:

In Redis, a single command is executed atomically, but the transaction does not guarantee atomicity and is not rolled back. If the execution of any command in the transaction fails, the remaining commands will still be executed. Three stages of Redis transaction

redis start transaction (Multi)

redis queue command

redis execute transaction (exec)

Redis transaction related commands:

watch key1 key2 ... #Monitor one or more keys. If the monitored key is changed by other commands before the transaction is executed, the Transaction interrupted (like optimistic lock) multi # Mark the beginning of a transaction block (queued) exec # Execute commands for all transaction blocks (once exec is executed, the previously added monitoring lock will be cancelled) discard # Cancels the transaction and discards all commands in the transaction block unwatch # Cancel the monitoring of all key s by watch

Pessimistic lock:

Pessimistic lock, as the name suggests, is very pessimistic. Every time you get the data, you think others will modify it, so you lock it every time you get the data, so others will block it until they get the lock. Many such locking mechanisms are used in traditional relational databases, such as row lock, table lock, read lock and write lock, which are locked before operation.

Optimistic lock

Pessimistic lock: as the name suggests, pessimistic lock is very pessimistic. Every time you get the data, you think others will modify it, so you lock it every time you get the data, so others will block it until it gets the lock. Many such locking mechanisms are used in traditional relational databases, such as row lock, table lock, read lock and write lock, which are locked before operation.

Use watch to detect the balance. The balance data changes during the transaction, and the transaction execution fails!

# Window one 127.0.0.1:6379> watch balance OK 127.0.0.1:6379> MULTI # After execution, execute window 2 code test OK 127.0.0.1:6379> decrby balance 20 QUEUED 127.0.0.1:6379> incrby debt 20 QUEUED 127.0.0.1:6379> exec # Modification failed! (nil) # Window II 127.0.0.1:6379> get balance "80" 127.0.0.1:6379> set balance 200 OK # Window 1: give up monitoring after a problem occurs, and then start again! 127.0.0.1:6379> UNWATCH # Abandon monitoring OK 127.0.0.1:6379> watch balance OK 127.0.0.1:6379> MULTI OK 127.0.0.1:6379> decrby balance 20 QUEUED 127.0.0.1:6379> incrby debt 20 QUEUED 127.0.0.1:6379> exec # success! 1) (integer) 180 2) (integer) 40

summary

The watch instruction is similar to an optimistic lock. When a transaction is committed, if the value of any of the multiple keys monitored by the watch has been changed by other clients, the transaction queue will not be executed when the transaction is executed using EXEC, and a null multi bulk response is returned to notify the caller of the transaction execution failure.

2. Persistence of redis

RDB (Redis database)

Writes a dataset snapshot in memory to disk within a specified time interval.

Initialization defaults to 10000 changes in 1 minute, 10 changes in 5 minutes, or 1 change in 15 minutes.

If you want to disable the RDB storage policy, you can either not set the save instruction or pass an empty string in the save instruction.

If the command is required to take effect immediately, you can directly save it in the background only with the Save command. Generate dump RDB file.

Trigger saving the generated rdb file

1. If the save rule is satisfied, the rdb rule will be triggered automatically

implement save command save: Just save it, regardless of others. It will block at this time. bgsave: redis Snapshot operations are performed asynchronously in the background.

2. RDB rules are also triggered when the flush command is executed

3. Exiting redis will also generate rdb files

The backup will automatically generate a dump RDB file

Recover rdb files

Put the rdb file into the redis startup directory, and then dump will be loaded automatically when redis starts rdb file.

advantage

1. Suitable for large-scale data recovery

2. The requirements for data integrity are not high

shortcoming

1. The process operation needs a certain time interval. If redis is unexpectedly down, the last modified data will be lost;

2. The fork process takes up a certain amount of memory.

AOF (append only file)

Record all our commands and execute all the files when recovering.

Log every write operation

# In the redis configuration file, this is off by default and needs to be modified to yes to enable logging appendonly no

After modifying the configuration file, restarting redis will regenerate an appendonly Aof documents; Inside is the log recording all write operations;

If this file is damaged and there is an error in it, redis cannot be started at this time. Redis check AOF can be used for repair;

After the file is normal, the data will be recovered automatically after restart;

advantage

It can ensure the integrity of data to the greatest extent.

In the log configuration level, you can choose whether to synchronize the aof log every time or every second.

shortcoming

The size of aof file is much larger than that of rdb file. aof files are appended infinitely by default, and the file will become larger and larger.

aof runs more slowly than rdb

summary

1. RDB persistence can snapshot and store your data within a specified time interval

2. Aof persistence records every write operation to the server. When the server restarts, these commands will be re executed to recover the original data. The AOF command additionally saves each write operation to the end of the file with redis protocol. Redis can also rewrite the AOF file in the background, so that the volume of the AOF file will not be too large.

3. Only cache. If you only want your data to exist when the server is running, you can also not use any persistence

4. Two persistence methods are enabled at the same time. In this case, when redis restarts, AOF files will be loaded first to recover the original data, because normally, the data set saved in AOF files is more complete than that saved in RDB files. RDB data is not real-time. When using both, the server will only find AOF files when restarting. Do you want to use AOF only? The author suggests no, because RDB is more suitable for backing up databases (AOF is changing and hard to back up), fast restart, and there will be no potential bugs in AOF, which will be kept as a means in case.

5. Performance recommendations because RDB files are only used for backup purposes, it is recommended to only persist RDB files on Slave, and only backup once every 15 minutes is enough. Only save 900 1 is retained. If you Enable AOF, the advantage is that in the worst case, only less than two seconds of data will be lost. The startup script is relatively simple. You can only load your own AOF file. The cost is that it brings continuous IO and AOF rewrite. Finally, the new data generated in the rewrite process will be written to the new file, resulting in almost inevitable blocking. As long as the hard disk is allowed, the frequency of AOF rewriting should be minimized. The default value of 64M for the basic size of AOF rewriting is too small, which can be set to more than 5G. By default, it exceeds 100% of the original size, and the size rewriting can be changed to an appropriate value. If AOF is not enabled, high availability can be achieved only by master Slave replay, which can save a lot of IO and reduce the system fluctuation caused by rewriting. The price is that if the Master/Slave is dropped at the same time, more than ten minutes of data will be lost. The startup script also needs to compare the RDB files in the two Master/Slave and load the newer one. This is the architecture of microblog.

3. redis subscription publication

Basic commands

| command | describe |

|---|---|

| Redis Unsubscribe command | Unsubscribe from a given channel. |

| Redis Subscribe command | Subscribe to information for a given channel or channels. |

| Redis Pubsub command | View subscription and publishing system status. |

| Redis Punsubscribe command | Unsubscribe from all channels in a given mode. |

| Redis Publish command | Sends information to the specified channel. |

| Redis Psubscribe command | Subscribe to one or more channels that match the given pattern. |

test

Subscriber

redis 127.0.0.1:6379> SUBSCRIBE redisChat Reading messages... (press Ctrl-C to quit) 1) "subscribe" 2) "redisChat" 3) (integer) 1

Sender

redis 127.0.0.1:6379> PUBLISH redisChat "Hello,Redis" (integer) 1 redis 127.0.0.1:6379> PUBLISH redisChat "Hello,Kuangshen" (integer) 1 # The subscriber's client displays the following message 1) "message" 2) "redisChat" 3) "Hello,Redis" 1) "message" 2) "redisChat" 3) "Hello,Kuangshen"

principle

Redis is implemented in C by analyzing pubsub C file to understand the underlying implementation of publish and subscribe mechanism, so as to deepen the understanding of redis.

Redis implements PUBLISH and SUBSCRIBE functions through PUBLISH, SUBSCRIBE, PSUBSCRIBE and other commands.

After subscribing to a channel through the SUBSCRIBE command, a dictionary is maintained in the redis server. The keys of the dictionary are channels, and the values of the dictionary are a linked list, which stores all clients subscribing to the channel. The key of the SUBSCRIBE command is to add the client to the subscription linked list of a given channel.

Send a message to subscribers through the Publish command. Redis server will use the given channel as the key, find the linked list of all clients subscribing to the channel in the channel dictionary maintained by redis server, traverse the linked list, and Publish the message to all subscribers. Pub/Sub literally means Publish and Subscribe (Subscribe). In redis, you can set message publishing and message subscription for a key value. When a message is published on a key value, all clients subscribing to it will receive corresponding messages. The most obvious use of this function is as a real-time messaging system, such as ordinary instant chat, group chat and other functions.

Usage scenario

Pub/Sub builds a real-time messaging system. Redis's Pub/Sub system can build a real-time messaging system, such as many examples of real-time chat systems built with Pub/Sub.

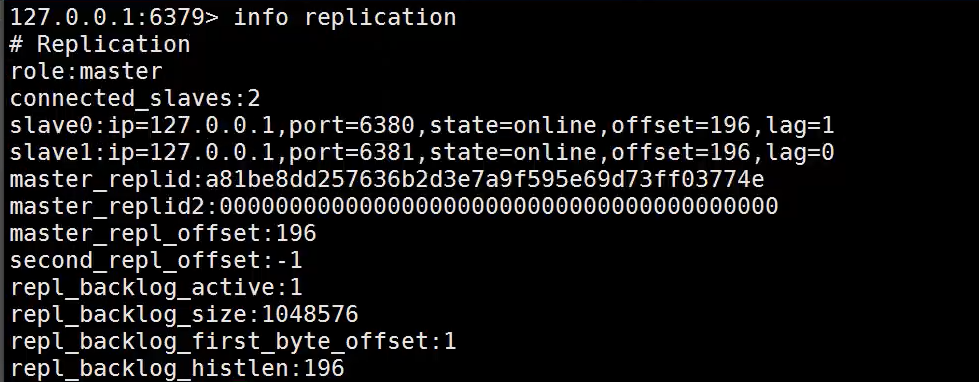

4. redis master-slave replication

Configure three configuration files, and then start three redis servers

redis is the primary node by default. Therefore, when building a cluster, it is mainly to modify the configuration of the slave node. Used to complete master-slave replication.

# View the information of the current node info replication

1. Build one master and two slave nodes

Modify profile content

1,Copy multiple redis.conf file 2,Specify port 6379, and so on 3,open daemonize yes 4,Pid File name pidfile /var/run/redis_6379.pid , And so on 5,Log File name logfile "6379.log" , And so on 6,Dump.rdb name dbfilename dump6379.rdb , And so on

2. A client with three ports connected by a sub table

redis-cli -p 6379 redis-cli -p 6380 redis-cli -p 6381

3. Configure the slave (both are the master by default, and the other two recognize the boss)

Execute the command for temporary configuration

# 6379 is not recognized on the 6380 machine (master node) SLAVEOF 127.0.0.1 6379 # 6379 is not recognized on the 6381 machine (master node) SLAVEOF 127.0.0.1 6379

After configuration, you can view the node information above 6379 (Master)

Modify the configuration file for master-slave replication

After configuration:

The host is responsible for writing and the slave is responsible for reading

The host is disconnected, but the slave is still connected to the host. At this time, write operations cannot be performed.

In the case of one master and two slaves, if the master is disconnected, the slave can use the command SLAVEOF NO ONE Change yourself to a host! This time Wait for other slaves to link to this node. Execute commands on a secondary server SLAVEOF NO ONE Will make this slave server Turn off the replication function and switch from the secondary server to the primary server. The data set obtained from the original synchronization will not be discarded.

If the slave is configured using the command line, it will change back to the host after restart! At this time, if you configure the original slave again, you can get the data written by the original host again.

Assignment principle

After the slave is successfully started and connected to the master, the master will send a sync command. After receiving the command, the master will start the background save process and collect all received commands for modifying the dataset. After the background process is executed, the master will transfer the entire data file to the slave and complete a complete synchronization.

Full copy: after receiving the database file data, the slave service saves it and loads it into memory.

Incremental replication: the master continues to send all new collected modification commands to the slave in turn to complete the synchronization. However, as long as the master is reconnected, a full synchronization (full replication) will be executed automatically

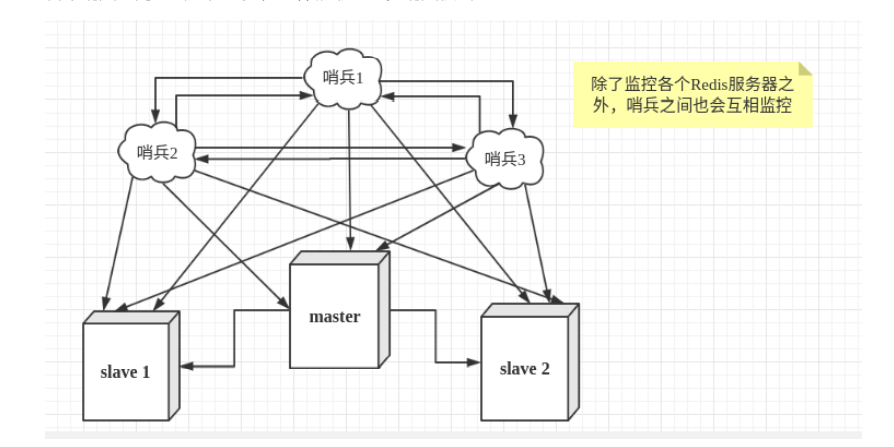

5. redis sentinel mode

Support master-slave replication Master slave switchover and failover Sentinel mode, master-slave manual to automatic, more robust Capacity expansion is troublesome and online capacity expansion is complex The implementation of sentinel mode is complex, and there are many choices

The role of Sentinels

The sentry here has two functions: sending commands to let the Redis server return to monitor its running status, including the master server and the slave server. When the sentinel detects that the master is down, it will automatically switch the slave to the master, and then notify other slave servers through publish subscribe mode to modify the configuration file and let them switch hosts. However, there may be problems when a sentinel process monitors the Redis server. Therefore, we can use multiple sentinels for monitoring. Each sentinel will also be monitored, which forms a multi sentinel mode.

Assuming that the main server goes down, sentry 1 detects this result first, and the system will not fail immediately. Sentry 1 subjectively thinks that the main server is unavailable, which becomes a subjective offline phenomenon. When the following sentinels also detect that the primary server is unavailable and the number reaches a certain value, a vote will be held between sentinels. The voting result is initiated by one sentinel to perform the "failover" operation. After the switch is successful, each sentinel will switch its monitored slave server to the host through the publish subscribe mode. This process is called objective offline

Sentry configuration description

# Example sentinel.conf # The port on which the sentinel sentinel instance runs is 26379 by default port 26379 # Sentry sentinel's working directory dir /tmp # ip port of the redis master node monitored by sentinel # The master name can be named by itself. The name of the master node can only be composed of letters A-z, numbers 0-9 and the three characters ". -" form. # quorum how many sentinel sentinels are configured to uniformly think that the master master node is lost, so it is objectively considered that the master node is lost # sentinel monitor <master-name> <ip> <redis-port> <quorum> sentinel monitor mymaster 127.0.0.1 6379 2 # When the requirepass foobared authorization password is enabled in the Redis instance, all clients connecting to the Redis instance will be able to use it To provide a password # Set the password of sentinel sentinel connecting master and slave. Note that the same authentication password must be set for master and slave # sentinel auth-pass <master-name> <password> sentinel auth-pass mymaster MySUPER--secret-0123passw0rd # After the specified number of milliseconds, the primary node does not respond to the sentinel sentinel. At this time, the sentinel subjectively thinks that the primary node goes offline for 30 seconds by default # sentinel down-after-milliseconds <master-name> <milliseconds> sentinel down-after-milliseconds mymaster 30000 # This configuration item specifies the maximum number of slave s that can be used to synchronize the new master at the same time when a failover active / standby switch occurs Step, The smaller the number, the better failover The longer it takes, But if this number is larger, it means more slave because replication Not available. You can ensure that there is only one at a time by setting this value to 1 slave Is in a state where the command request cannot be processed. # sentinel parallel-syncs <master-name> <numslaves> sentinel parallel-syncs mymaster 1 # Failover timeout can be used in the following aspects: #1. The interval between two failover of the same sentinel to the same master. #2. When a slave synchronizes data from an incorrect master, the time is calculated. Until the slave is corrected to the correct direction master When synchronizing data there. #3. The time required to cancel an ongoing failover. #4. During failover, configure the maximum time required for all slaves to point to the new master. But even after this super When, slaves Will still be correctly configured to point to master,But you don't parallel-syncs Here comes the configured rule # The default is three minutes # sentinel failover-timeout <master-name> <milliseconds> sentinel failover-timeout mymaster 180000 # SCRIPTS EXECUTION #Configure the script to be executed when an event occurs. You can notify the administrator through the script, such as sending mail when the system is not running normally Notify relevant personnel. #There are the following rules for the running results of scripts: #If the script returns 1 after execution, the script will be executed again later. The number of repetitions is currently 10 by default #If the script returns 2 after execution, or a return value higher than 2, the script will not be executed repeatedly. #If the script is terminated due to receiving a system interrupt signal during execution, the behavior is the same as when the return value is 1. #The maximum execution time of a script is 60s. If this time is exceeded, the script will be terminated by a SIGKILL signal and executed again that 's ok. #Notification script: when any warning level event occurs in sentinel (for example, subjective failure and objective failure of redis instance) Wait), will call this script. At this time, this script should be sent by e-mail, SMS And other ways to inform the system administrator about the abnormal system Information about the operation. When calling the script, two parameters will be passed to the script, one is the type of event and the other is the description of event. If sentinel.conf If the script path is configured in the configuration file, you must ensure that the script exists in the path and is executable OK, otherwise sentinel Unable to start normally, successfully. #Notification script # sentinel notification-script <master-name> <script-path> sentinel notification-script mymaster /var/redis/notify.sh # Client reconfiguration master node parameter script # When a master changes due to failover, this script will be called to notify the relevant clients about the master Information that the address has changed. # The following parameters will be passed to the script when calling the script: # <master-name> <role> <state> <from-ip> <from-port> <to-ip> <to-port> # At present, < state > is always "failover", # < role > is one of "leader" or "observer". # The parameters from IP, from port, to IP and to port are used to communicate with the old master and the new master (i.e. the old slave) # This script should be generic and can be called multiple times, not targeted. # sentinel client-reconfig-script <master-name> <script-path> sentinel client-reconfig-script mymaster /var/redis/reconfig.sh

6. redis cache penetration and avalanche

The use of Redis cache greatly improves the performance and efficiency of applications, especially in data query. But at the same time, it also brings some problems. Among them, the most crucial problem is the consistency of data. Strictly speaking, this problem has no solution. If data consistency is required, caching cannot be used. Other typical problems are cache penetration, cache avalanche and cache breakdown. At present, the industry also has more popular solutions.

Buffer penetration

The concept of cache penetration is very simple. When users want to query a data, they find that the redis memory database does not hit, that is, the cache does not hit, so they query the persistence layer database. No, so this query failed. When there are many users, the cache misses, so they all request the persistence layer database. This will put a lot of pressure on the persistence layer database, which is equivalent to cache penetration.

Buffer breakdown

Here, we need to pay attention to the difference between cache breakdown and cache breakdown. Cache breakdown means that a key is very hot. It is constantly carrying large concurrency. Large concurrency focuses on accessing this point. When the key fails, the continuous large concurrency will break through the cache and directly request the database, just like cutting a hole in a barrier. When a key expires, a large number of requests are accessed concurrently. This kind of data is generally hot data. Because the cache expires, the database will be accessed at the same time to query the latest data and write back to the cache, which will lead to excessive pressure on the database.

Cache avalanche

Cache avalanche means that the cache set expires in a certain period of time. One of the reasons for the avalanche. For example, when writing this article, it is about to arrive at double twelve o'clock, and there will be a wave of rush buying soon. This wave of goods will be put into the cache for a concentrated time. Suppose the cache is one hour. Then at one o'clock in the morning, the cache of these goods will expire. The access and query of these commodities fall on the database, which will produce periodic pressure peaks. Therefore, all requests will reach the storage layer, and the call volume of the storage layer will increase sharply, resulting in the storage layer hanging up.

In fact, centralized expiration is not very fatal. The more fatal cache avalanche is the downtime or disconnection of a node of the cache server. Because of the naturally formed cache avalanche, the cache must be created centrally in a certain period of time. At this time, the database can withstand the pressure. It is nothing more than periodic pressure on the database. The downtime of the cache service node will cause unpredictable pressure on the database server, which is likely to crush the database in an instant.

solve

The idea of high availability of redis means that since redis may hang up, I will add several more redis. After one is hung up, others can continue to work. In fact, it is a cluster. The idea of this solution is to control the number of threads reading and writing to the database cache by locking or queuing after the cache expires. For example, for a key, only one thread is allowed to query data and write cache, while other threads wait. Data preheating means that before the formal deployment, I first access the possible data in advance, so that part of the data that may be accessed in large quantities will be loaded into the cache. Before a large concurrent access is about to occur, manually trigger the loading of different cache keys and set different expiration times to make the time point of cache invalidation as uniform as possible.

7. Server redis self start script

You can put the startup script into the corresponding file that will be loaded automatically after linux starts. ubuntu is in / etc / RC Local file

# redis auto start script

[root@ser02 redis]# vim redserver.sh

#!/bin/bash

stop(){

/data/redis/bin/redis-cli -a redis shutdown

}

start(){

/data/redis/bin/redis-server /data/redis/conf/redis.conf &

}

case $1 in

start)

start

;;

stop)

stop

;;

restart)

stop

start

;;

*)

echo "Usage:$0 (start|stop|restart)"

esac

[root@ser02 redis]# chmod +x redserver.sh

[root@ser02 redis]# vim /etc/profile

export PATH=/data/redis/:$PATH

[root@ser02 redis]# source /etc/profile

8, redis interview

Redis (or other caching system)

Redis working model, redis persistence, redis expiration elimination mechanism, common forms of redis distributed clusters, distributed locks, cache breakdown, cache avalanche and cache consistency

common problem

Why is redis high performance?

Pure memory operation, single thread, efficient data structure, reasonable data coding and other optimization

How does single threaded redis utilize multi-core cpu machines?

The premise of considering performance problems is to understand where the performance bottleneck is. Redis aims at high-performance memory kv storage. Asynchronous io, Pure memory operation (persistent logic fork), all non blocking (non time consuming) operations and no complex calculations are involved. Turning on Multithreading will waste the overhead on locking, miss cache, and many data structures can not be unlocked. Moreover, the state and design of asynchronous services are more complex. Compared with multiple processes, the advantages of single thread model in performance and code design are more obvious, which are generally limited to the network or memory If you want to use it, you can use the port numbers of several redis core s in China to distinguish them

redis cache elimination strategy?

Volatile LRU: select the least recently used data from the data set with expiration time (server.db[i].expires); eliminate volatile TTL: select the data to expire from the data set with expiration time (server.db[i].expires); eliminate volatile random: select the data set with expiration time (server. DB [i]) . Expires): select the least recently used data from the data set (server.db[i].dict) to eliminate allkeys random: select the data from the data set (server.db[i].dict) to eliminate no exclusion: prohibit the expulsion of data

Detailed article

How does redis persist data?

RDB: it can snapshot and store your data at a specified time interval. AOF: records every write operation to the server. When the server restarts, these commands will be re executed to recover the original data.

Detailed article

What kinds of data structures does redis have?

String: cache, counter, distributed lock, etc. List: linked list, queue, Weibo followers timeline list, etc. Hash: user information, hash table, etc. Set: de weight, like, step on, common friends, etc. Zset: traffic ranking, hits ranking, etc.

What are the forms of redis clusters?

redis has three cluster modes: master-slave replication, sentinel mode and cluster.

Detailed article recommendation

-

There are a large amount of data with small key and value. How to save memory in redis?

-

How to ensure data consistency between redis and DB?

1. The first scheme adopts the delayed double deletion strategy

2. The second scheme: asynchronous update cache (synchronization mechanism based on subscription binlog)

Detailed article

How to solve cache penetration and cache avalanche?

Cache penetration: Generally speaking, the cache system will cache queries through the key. If there is no corresponding value, You should go to the back-end system (such as dB). At this time, if some malicious requests arrive, they will deliberately query the nonexistent key. When the number of requests at a certain time is large, it will cause great pressure on the back-end system. This is called cache penetration. How to avoid cache penetration: cache the query result when it is empty, set the cache time to be shorter, or insert the data corresponding to the key Clean up the cache after. Filter keys that must not exist. All possible keys can be put into a large bitmap and filtered through the bitmap during query. Cache avalanche: when the cache server restarts or a large number of caches fail in a certain period of time, when they fail, It will put a lot of pressure on the back-end system (DB) and lead to system crash. How to avoid cache avalanche: after the cache fails, control the number of threads reading and writing to the database cache by locking or queuing. For example, for a key, only one thread is allowed to query data and write to the cache, and other threads wait. https://zhuanlan.zhihu.com/p/75588064

- How to implement distributed locks with redis?

1. Use the set key value [EX seconds][PX milliseconds][NX|XX] Command (correct approach)

2. Redlock algorithm and Redisson implementation Detailed article

reference resources:

1. Crazy God said that teacher Qin Jiang's course is welcome to learn. The teacher spoke very well and recommended planting grass

Bili Bili - Crazy God says redis video

2. Shang Silicon Valley teacher Zhou Yang's redis course, brother Yang's video, not much to say, yyds

Bili Bili - teacher Zhou Yang, redis

recommend:

redis deep adventure - core principles and application practice by Qian Wenping

The teacher's speech is really good and very detailed. He has the ability to suggest that Jingdong buy it and read it carefully.

JD - link

redis deep adventure - electronic version: (alicloud disk) for learning only

"redis deep adventure. pdf" https://www.aliyundrive.com/s/yUckU6c6tff

Click the link to save, or copy the content of this paragraph, and open the "Alibaba cloud disk" APP. There is no need to download the high-speed online view, and the original video picture can be played at double speed.

This article details the typera file, which can be downloaded if necessary

"Redis typera export. pdf" https://www.aliyundrive.com/s/gKspq6gELhT

Click the link to save, or copy the content of this paragraph, and open the "Alibaba cloud disk" APP. There is no need to download the high-speed online view, and the original video picture can be played at double speed.

All the contents are for learning only. If there are any defects or problems in the article, you are welcome to point out, hug