Simple Redis distributed lock

Locking:

```
set key my_random_value NX PX 30000
```

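NX sets the key only if it does not already exist, PX 30000 gives it a 30,000 ms (30 s) expiration, and my_random_value should be unique to the client so that only the lock owner can release it. This is better than SETNX because the expiration is set in the same atomic command; with SETNX it takes a separate EXPIRE call. Without an expiration, a client that crashes or hangs while holding the lock never releases it, and the resource stays locked forever.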
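To make the NX and PX semantics concrete, here is a minimal in-memory model in Java. It is a sketch, not a Redis client: the class SetNxPxModel and its injectable millisecond clock are hypothetical, and real Redis expires keys on its own.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical in-memory model of "SET key value NX PX ttl" (not a Redis client).
class SetNxPxModel {
    private static final class Entry {
        final String value;
        final long expiresAtMillis;

        Entry(String value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> store = new HashMap<>();
    private final LongSupplier clock; // injectable clock in milliseconds

    SetNxPxModel(LongSupplier clock) {
        this.clock = clock;
    }

    // NX: write only if the key is absent or expired. PX: store the TTL in the same step.
    // Returns true (Redis replies OK) if the lock was acquired, false (nil) otherwise.
    synchronized boolean setNxPx(String key, String value, long ttlMillis) {
        long now = clock.getAsLong();
        Entry current = store.get(key);
        if (current != null && current.expiresAtMillis > now) {
            return false; // someone else holds an unexpired lock
        }
        store.put(key, new Entry(value, now + ttlMillis));
        return true;
    }

    // GET: the current value, or null if the key is missing or expired
    synchronized String get(String key) {
        Entry current = store.get(key);
        if (current == null || current.expiresAtMillis <= clock.getAsLong()) {
            return null;
        }
        return current.value;
    }
}
```

Because the value and the TTL are written in one step, there is no moment at which the lock exists without an expiration, which is exactly the gap that SETNX followed by a separate EXPIRE leaves open.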

Unlock:

```lua
if redis.call("get", KEYS[1]) == ARGV[1] then
    return redis.call("del", KEYS[1])
else
    return 0
end
```

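The script first compares the stored value with the caller's value and deletes the key only if they are equal. This prevents a client from releasing a lock held by someone else, for example after its own lock has expired and another client has acquired it. Because Redis runs the script atomically, no other command can run between the GET and the DEL.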
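The scenario this check guards against can be reproduced with a small in-memory model. This is a hypothetical sketch: CompareAndDeleteModel is not a Redis API, and synchronized methods stand in for the atomic execution Redis gives a Lua script. In practice the token would be something unique per client, such as a random UUID.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the unlock script: delete the key only if its value
// still equals the caller's token. 'synchronized' stands in for Lua atomicity.
class CompareAndDeleteModel {
    private final Map<String, String> store = new HashMap<>();

    // Acquire: like SET key token NX (TTL handled by expire() below)
    synchronized boolean setIfAbsent(String key, String token) {
        return store.putIfAbsent(key, token) == null;
    }

    // Simulates the key expiring on its own after PX elapses
    synchronized void expire(String key) {
        store.remove(key);
    }

    // Mirrors: if get(KEYS[1]) == ARGV[1] then return del(KEYS[1]) else return 0 end
    synchronized long unlock(String key, String token) {
        if (token.equals(store.get(key))) {
            store.remove(key);
            return 1;
        }
        return 0;
    }

    synchronized String get(String key) {
        return store.get(key);
    }
}
```

Without the comparison, client 1's late unlock in this scenario would delete client 2's lock.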

The scheme above is sufficient in most scenarios, but it still has a few problems:

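- What if the expiration time is set to 3 seconds but the business logic takes 4 seconds? The lock expires while client 1 is still working, so another client can acquire it and both run the critical section at the same time.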

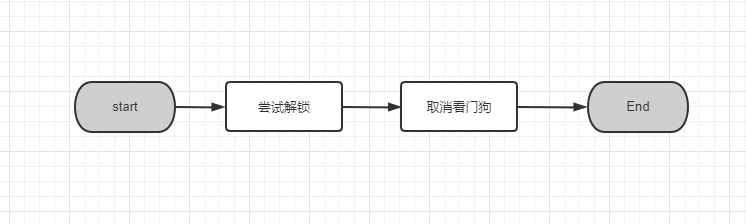

Solution: borrow Redisson's watchdog idea. Start a background thread that periodically checks whether the business thread is still running and, if it is, extends the lock's expiration time.

- A single Redis instance is a single point of failure: if it goes down, locking stops working entirely. With a master-replica setup, the master may crash before the lock key has been replicated to the replica. The replica is then promoted to master, and client 2 can acquire a lock on the same resource from the new master. Now both client 1 and client 2 hold the lock, which is unsafe.

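Solution: the Redlock algorithm. In short, the client tries to acquire the lock (SET NX PX) on N (usually 5) independent Redis nodes. If it succeeds on a majority of them (at least N/2 + 1) in less time than the lock's validity period, it holds the lock. This tolerates a minority of nodes being unavailable.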
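A minimal sketch of the majority rule, assuming a hypothetical RedlockNode interface per Redis instance and an in-memory node for experiments. It follows the outline above (try every node, require N/2 + 1 successes, subtract the elapsed time from the validity) but omits parts of the full Redlock description, such as per-node request timeouts and the clock-drift allowance.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical interface: one independent Redis instance.
interface RedlockNode {
    boolean trySetNxPx(String key, String token, long ttlMillis); // SET key token NX PX ttl
    void releaseIfOwner(String key, String token);                 // compare-and-delete script
}

// Hypothetical in-memory node for experiments (TTL is ignored).
class InMemoryRedlockNode implements RedlockNode {
    private final Map<String, String> store = new HashMap<>();
    volatile boolean up = true; // false simulates a crashed node

    @Override
    public synchronized boolean trySetNxPx(String key, String token, long ttlMillis) {
        if (!up) throw new IllegalStateException("node down");
        return store.putIfAbsent(key, token) == null;
    }

    @Override
    public synchronized void releaseIfOwner(String key, String token) {
        if (!up) throw new IllegalStateException("node down");
        if (token.equals(store.get(key))) store.remove(key);
    }
}

class RedlockSketch {
    private final List<RedlockNode> nodes;
    private final LongSupplier clock; // milliseconds

    RedlockSketch(List<RedlockNode> nodes, LongSupplier clock) {
        this.nodes = nodes;
        this.clock = clock;
    }

    // Returns the remaining validity time in ms if the lock was acquired, or -1 if not.
    long tryLock(String key, String token, long ttlMillis) {
        long start = clock.getAsLong();
        int acquired = 0;
        for (RedlockNode node : nodes) {
            try {
                if (node.trySetNxPx(key, token, ttlMillis)) acquired++;
            } catch (RuntimeException nodeDown) {
                // an unreachable node simply does not count toward the majority
            }
        }
        long validity = ttlMillis - (clock.getAsLong() - start);
        int quorum = nodes.size() / 2 + 1;
        if (acquired >= quorum && validity > 0) {
            return validity;
        }
        // Failed: release everywhere, including nodes where SET succeeded
        for (RedlockNode node : nodes) {
            try {
                node.releaseIfOwner(key, token);
            } catch (RuntimeException nodeDown) {
                // ignore unreachable nodes
            }
        }
        return -1;
    }
}
```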

So how do production-grade libraries implement Redis distributed locks? Let's look at Redisson.

Redis distributed lock implemented by Redisson

[Figure: Redisson locking process]

[Figure: Redisson unlocking process]

Redisson locks use a Redis hash:

- key: the name of the resource to be locked
- field: UUID + ":" + thread ID (identifies the client and thread that hold the lock)
- value: a counter of how many times that thread holds the lock, which makes the lock reentrant

The source code uses several methods of Netty's Future/Promise API. Here they are, to make the code easier to follow:

```java
// True if and only if the asynchronous operation completed successfully
boolean isSuccess();

// True if the operation can be cancelled with cancel(...)
boolean isCancellable();

// The cause of failure, or null if the operation succeeded or is not done yet
Throwable cause();

// Register a listener that is called back when the operation completes,
// similar to a callback function in JavaScript
Future<V> addListener(GenericFutureListener<? extends Future<? super V>> listener);

// Remove a previously registered listener
Future<V> removeListener(GenericFutureListener<? extends Future<? super V>> listener);

// Block until the asynchronous operation completes
Future<V> await() throws InterruptedException;

// Same as await(), but rethrows the cause if the operation failed
Future<V> sync() throws InterruptedException;

// Non-blocking: return the result, or null if the operation has not completed
V getNow();
```
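If Netty is unfamiliar, the JDK's CompletableFuture offers roughly equivalent operations. The sketch below is an analogy only: NettyFutureAnalogy is a hypothetical helper that maps several Netty method names onto JDK calls (whenComplete for addListener, getNow(null) for getNow, isCompletedExceptionally for failure checks); it is not how Redisson is written.

```java
import java.util.concurrent.CancellationException;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;
import java.util.function.Consumer;

// Analogy only: JDK CompletableFuture standing in for Netty's Future/Promise.
// Each method is named after the Netty method it imitates.
final class NettyFutureAnalogy {
    private NettyFutureAnalogy() {}

    // isSuccess(): completed normally (not failed, not cancelled)
    static boolean isSuccess(CompletableFuture<?> f) {
        return f.isDone() && !f.isCompletedExceptionally();
    }

    // cause(): the failure reason, or null if not failed (or not done yet)
    static Throwable cause(CompletableFuture<?> f) {
        if (!f.isCompletedExceptionally()) {
            return null;
        }
        try {
            f.join();
            return null; // unreachable: join() throws for exceptional completion
        } catch (CompletionException e) {
            return e.getCause();
        } catch (CancellationException e) {
            return e;
        }
    }

    // getNow(): result without blocking, or null if not done or failed.
    // As in Netty, null is ambiguous when the result itself may be null.
    static <V> V getNow(CompletableFuture<V> f) {
        return isSuccess(f) ? f.getNow(null) : null;
    }

    // addListener(...): callback once the operation completes, success or failure
    static <V> void addListener(CompletableFuture<V> f, Consumer<CompletableFuture<V>> listener) {
        f.whenComplete((value, error) -> listener.accept(f));
    }
}
```

The null ambiguity matters below: Redisson's lock script returns nil on success, so the code checks isSuccess() before treating a null from getNow() as "lock acquired".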

Source code analysis:

Locking:

```java
public RLock getLock(String name) {
    return new RedissonLock(connectionManager.getCommandExecutor(), name);
}

public RedissonLock(CommandAsyncExecutor commandExecutor, String name) {
    super(commandExecutor, name);
    // Command executor used to send commands to Redis
    this.commandExecutor = commandExecutor;
    // UUID identifying this Redisson client instance
    this.id = commandExecutor.getConnectionManager().getId();
    // Lock lease time used by the watchdog (lockWatchdogTimeout), 30 s by default
    this.internalLockLeaseTime = commandExecutor.getConnectionManager().getCfg().getLockWatchdogTimeout();
    this.entryName = id + ":" + name;
}
```

```java
public void lockInterruptibly(long leaseTime, TimeUnit unit) throws InterruptedException {
    // Current thread id (part of the hash field that identifies the owner)
    long threadId = Thread.currentThread().getId();
    // Try to acquire the lock: null means acquired, otherwise the lock's remaining TTL
    Long ttl = tryAcquire(leaseTime, unit, threadId);
    // lock acquired
    if (ttl == null) {
        return;
    }

    // Acquisition failed: subscribe to the lock's channel so this thread is
    // notified when the holder releases the lock
    RFuture<RedissonLockEntry> future = subscribe(threadId);
    commandExecutor.syncSubscription(future);

    try {
        while (true) {
            // Try to acquire the lock again
            ttl = tryAcquire(leaseTime, unit, threadId);
            // lock acquired
            if (ttl == null) {
                break;
            }

            // waiting for message
            if (ttl >= 0) {
                // Wait for an unlock message, but at most ttl ms (the lock may expire instead)
                getEntry(threadId).getLatch().tryAcquire(ttl, TimeUnit.MILLISECONDS);
            } else {
                // No TTL on the key: wait until an unlock message arrives
                getEntry(threadId).getLatch().acquire();
            }
        }
    } finally {
        // Unsubscribe from the channel
        unsubscribe(future, threadId);
    }
//    get(lockAsync(leaseTime, unit));
}
```
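The retry loop can be modeled in a single JVM to see why the wait is bounded by ttl. In this hypothetical sketch (WaitLoopLockModel is not Redisson code), two fields stand in for the Redis key, and a java.util.concurrent.Semaphore stands in for the latch that the unlock message releases; re-entry and real pub/sub are not modeled.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

// Hypothetical single-JVM model of the lockInterruptibly() loop (not reentrant).
class WaitLoopLockModel {
    private Long owner;             // thread id holding the lock, or null
    private long expiresAtMillis;   // stands in for the key's TTL
    private final long leaseMillis;
    // Stands in for the RedissonLockEntry latch released by the unlock message
    private final Semaphore unlockLatch = new Semaphore(0);

    WaitLoopLockModel(long leaseMillis) {
        this.leaseMillis = leaseMillis;
    }

    // Like the lock script: null on success, otherwise the remaining TTL in ms
    private synchronized Long tryAcquire(long threadId) {
        long now = System.currentTimeMillis();
        if (owner == null || expiresAtMillis <= now) {
            owner = threadId;
            expiresAtMillis = now + leaseMillis;
            return null;
        }
        return expiresAtMillis - now;
    }

    void lockInterruptibly() throws InterruptedException {
        long threadId = Thread.currentThread().getId();
        Long ttl = tryAcquire(threadId);
        while (ttl != null) {
            // Wait for an "unlock message", but no longer than the TTL, because a
            // crashed holder never sends one and the key simply expires.
            // A stale permit only causes one extra retry.
            unlockLatch.tryAcquire(ttl, TimeUnit.MILLISECONDS);
            ttl = tryAcquire(threadId);
        }
    }

    void unlock() {
        synchronized (this) {
            if (owner == null || owner != Thread.currentThread().getId()) {
                throw new IllegalMonitorStateException("not locked by current thread");
            }
            owner = null;
        }
        unlockLatch.release(); // "publish" the unlock message to one waiter
    }
}
```

Bounding the wait by ttl is what keeps a waiter from sleeping forever when the holder dies without publishing an unlock message.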

Next, the code that tries to acquire the lock:

```java
private Long tryAcquire(long leaseTime, TimeUnit unit, long threadId) {
    // Block until the asynchronous attempt completes
    return get(tryAcquireAsync(leaseTime, unit, threadId));
}

private <T> RFuture<Long> tryAcquireAsync(long leaseTime, TimeUnit unit, final long threadId) {
    if (leaseTime != -1) {
        // Explicit lease time: acquire with it; no watchdog renewal is scheduled
        return tryLockInnerAsync(leaseTime, unit, threadId, RedisCommands.EVAL_LONG);
    }
    // No lease time: acquire with the watchdog timeout (30 s by default) as the lease
    RFuture<Long> ttlRemainingFuture = tryLockInnerAsync(commandExecutor.getConnectionManager().getCfg().getLockWatchdogTimeout(), TimeUnit.MILLISECONDS, threadId, RedisCommands.EVAL_LONG);
    // Callback invoked when the asynchronous attempt completes
    ttlRemainingFuture.addListener(new FutureListener<Long>() {
        // If the lock was acquired, start the scheduled task that keeps refreshing its expiration
        @Override
        public void operationComplete(Future<Long> future) throws Exception {
            // The attempt did not complete successfully (e.g. a connection error)
            if (!future.isSuccess()) {
                return;
            }
            // Non-blocking read of the result (the remaining TTL)
            Long ttlRemaining = future.getNow();
            // lock acquired
            if (ttlRemaining == null) {
                scheduleExpirationRenewal(threadId);
            }
        }
    });
    return ttlRemainingFuture;
}
```
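The branching in tryAcquireAsync can be sketched with JDK futures. In this hypothetical TryAcquireSketch, tryLockInner is a function supplied by the caller (standing in for tryLockInnerAsync), and scheduleExpirationRenewal only counts calls instead of starting a real watchdog.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.LongFunction;

// Hypothetical sketch of tryAcquireAsync()'s branching with JDK futures.
class TryAcquireSketch {
    static final long WATCHDOG_TIMEOUT_MS = 30_000; // lockWatchdogTimeout default

    // Stand-in for tryLockInnerAsync: lease in ms -> future of ttl (null = acquired)
    private final LongFunction<CompletableFuture<Long>> tryLockInner;
    final AtomicInteger renewalsScheduled = new AtomicInteger();

    TryAcquireSketch(LongFunction<CompletableFuture<Long>> tryLockInner) {
        this.tryLockInner = tryLockInner;
    }

    CompletableFuture<Long> tryAcquireAsync(long leaseTimeMs) {
        if (leaseTimeMs != -1) {
            // Caller chose a lease: the lock just expires after it; no watchdog
            return tryLockInner.apply(leaseTimeMs);
        }
        // No lease given: lock for the watchdog timeout, then keep renewing
        CompletableFuture<Long> ttlRemainingFuture = tryLockInner.apply(WATCHDOG_TIMEOUT_MS);
        ttlRemainingFuture.whenComplete((ttlRemaining, error) -> {
            // Only a successful attempt that returned null (lock acquired) starts renewal
            if (error == null && ttlRemaining == null) {
                scheduleExpirationRenewal();
            }
        });
        return ttlRemainingFuture;
    }

    private void scheduleExpirationRenewal() {
        // Placeholder: a real implementation would start the watchdog timer here
        renewalsScheduled.incrementAndGet();
    }
}
```

The design consequence is worth noting: passing an explicit leaseTime opts out of the watchdog, so such a lock can still expire in the middle of long-running business logic.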

Watchdog logic:

The watchdog is built on Netty's delayed tasks (Timeout).

- For example, with the default lease of 30 seconds, the watchdog runs every third of the lease, that is, every 10 seconds. If the lock is still held, its expiration is reset to the full 30 seconds.
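A minimal watchdog sketch using ScheduledExecutorService in place of Netty's timer. WatchdogSketch is hypothetical: an AtomicLong stands in for the key's expiration and an AtomicBoolean for "still held". For brevity it uses a fixed-rate schedule, whereas Redisson re-arms a one-shot timeout after each successful renewal.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical watchdog sketch: a ScheduledExecutorService stands in for Netty's
// timer, and an AtomicLong stands in for the lock key's expiration time.
class WatchdogSketch implements AutoCloseable {
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
    private final long leaseMillis;
    final AtomicLong expiresAtMillis = new AtomicLong();
    final AtomicBoolean held = new AtomicBoolean();
    private ScheduledFuture<?> renewal;

    WatchdogSketch(long leaseMillis) {
        this.leaseMillis = leaseMillis;
    }

    synchronized void lock() {
        held.set(true);
        expiresAtMillis.set(System.currentTimeMillis() + leaseMillis);
        long period = Math.max(1, leaseMillis / 3); // 30 s lease -> every 10 s
        renewal = timer.scheduleAtFixedRate(() -> {
            // Like the renewal script: extend the lease only while the lock is held
            if (held.get()) {
                expiresAtMillis.set(System.currentTimeMillis() + leaseMillis);
            }
        }, period, period, TimeUnit.MILLISECONDS);
    }

    synchronized void unlock() {
        held.set(false);
        if (renewal != null) {
            renewal.cancel(false); // analogous to cancelExpirationRenewal
            renewal = null;
        }
    }

    @Override
    public void close() {
        timer.shutdownNow();
    }
}
```

Checking at a third of the lease leaves two more chances to renew before the key would expire, so a single delayed or failed renewal does not immediately lose the lock.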

Lock script:

```java
<T> RFuture<T> tryLockInnerAsync(long leaseTime, TimeUnit unit, long threadId, RedisStrictCommand<T> command) {
    internalLockLeaseTime = unit.toMillis(leaseTime);

    // KEYS[1] = lock name, ARGV[1] = lease time in ms, ARGV[2] = "<uuid>:<threadId>"
    return commandExecutor.evalWriteAsync(getName(), LongCodec.INSTANCE, command,
            // The lock does not exist: create the hash with this owner's field set to 1
            // and set the expiration time
            "if (redis.call('exists', KEYS[1]) == 0) then " +
                "redis.call('hset', KEYS[1], ARGV[2], 1); " +
                "redis.call('pexpire', KEYS[1], ARGV[1]); " +
                "return nil; " +
            "end; " +
            // The lock exists and is held by this thread: increment the hold count
            // with hincrby (re-entry) and reset the expiration time
            "if (redis.call('hexists', KEYS[1], ARGV[2]) == 1) then " +
                "redis.call('hincrby', KEYS[1], ARGV[2], 1); " +
                "redis.call('pexpire', KEYS[1], ARGV[1]); " +
                "return nil; " +
            "end; " +
            // The lock is held by another thread: return its remaining TTL in ms
            "return redis.call('pttl', KEYS[1]);",
            Collections.<Object>singletonList(getName()), internalLockLeaseTime, getLockName(threadId));
}
```
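The three branches of the script can be checked against an in-memory model of the Redis hash. LockScriptModel is a hypothetical sketch with an injectable millisecond clock; it mirrors exists / hset / hexists / hincrby / pexpire / pttl but is not Redisson code.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical in-memory model of the Lua lock script. One hash per lock:
// key = lock name, field = "<uuid>:<threadId>", value = hold count.
class LockScriptModel {
    private final Map<String, Map<String, Long>> hashes = new HashMap<>();
    private final Map<String, Long> expiresAt = new HashMap<>();
    private final LongSupplier clock; // milliseconds

    LockScriptModel(LongSupplier clock) {
        this.clock = clock;
    }

    // Redis removes expired keys; the model does it lazily on access
    private void evictIfExpired(String key) {
        Long exp = expiresAt.get(key);
        if (exp != null && exp <= clock.getAsLong()) {
            hashes.remove(key);
            expiresAt.remove(key);
        }
    }

    // Returns null when acquired (first time or re-entry), otherwise the pttl.
    synchronized Long tryLock(String key, long leaseMillis, String lockName) {
        evictIfExpired(key);
        long now = clock.getAsLong();
        Map<String, Long> hash = hashes.get(key);
        if (hash == null) {                          // exists == 0
            hash = new HashMap<>();
            hash.put(lockName, 1L);                  // hset key lockName 1
            hashes.put(key, hash);
            expiresAt.put(key, now + leaseMillis);   // pexpire
            return null;
        }
        if (hash.containsKey(lockName)) {            // hexists == 1
            hash.merge(lockName, 1L, Long::sum);     // hincrby +1
            expiresAt.put(key, now + leaseMillis);   // pexpire
            return null;
        }
        return expiresAt.get(key) - now;             // pttl
    }

    synchronized Long holdCount(String key, String lockName) {
        evictIfExpired(key);
        Map<String, Long> hash = hashes.get(key);
        return hash == null ? null : hash.get(lockName);
    }
}
```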

Unlock:

```java
public RFuture<Void> unlockAsync(final long threadId) {
    final RPromise<Void> result = new RedissonPromise<Void>();
    // Run the underlying unlock script
    RFuture<Boolean> future = unlockInnerAsync(threadId);

    future.addListener(new FutureListener<Boolean>() {
        @Override
        public void operationComplete(Future<Boolean> future) throws Exception {
            // The script failed: stop the watchdog and propagate the error
            if (!future.isSuccess()) {
                cancelExpirationRenewal(threadId);
                result.tryFailure(future.cause());
                return;
            }

            Boolean opStatus = future.getNow();
            // null: the calling thread does not hold the lock, so fail with an exception
            if (opStatus == null) {
                IllegalMonitorStateException cause = new IllegalMonitorStateException("attempt to unlock lock, not locked by current thread by node id: "
                        + id + " thread-id: " + threadId);
                result.tryFailure(cause);
                return;
            }
            // true: the lock was fully released, so stop the watchdog
            if (opStatus) {
                cancelExpirationRenewal(null);
            }
            result.trySuccess(null);
        }
    });

    return result;
}
```
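The mapping from the script's Boolean result to the caller's future can be sketched with CompletableFuture in place of RPromise. UnlockResultSketch is hypothetical; renewalCancelled only records that cancelExpirationRenewal would have been called.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical sketch of unlockAsync()'s result handling with JDK futures.
class UnlockResultSketch {
    final AtomicBoolean renewalCancelled = new AtomicBoolean();

    CompletableFuture<Void> unlockAsync(CompletableFuture<Boolean> scriptResult, String id, long threadId) {
        CompletableFuture<Void> result = new CompletableFuture<>();
        scriptResult.whenComplete((opStatus, error) -> {
            if (error != null) {                     // script failed
                renewalCancelled.set(true);          // cancelExpirationRenewal
                result.completeExceptionally(error);
                return;
            }
            if (opStatus == null) {                  // caller does not hold the lock
                result.completeExceptionally(new IllegalMonitorStateException(
                        "attempt to unlock lock, not locked by current thread by node id: "
                                + id + " thread-id: " + threadId));
                return;
            }
            if (opStatus) {                          // hold count reached 0: released
                renewalCancelled.set(true);
            }
            result.complete(null);                   // false: still held (re-entrant)
        });
        return result;
    }
}
```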

Unlock script:

```java
protected RFuture<Boolean> unlockInnerAsync(long threadId) {
    // KEYS[1] = lock name, KEYS[2] = channel name,
    // ARGV[1] = unlock message, ARGV[2] = lease time in ms, ARGV[3] = "<uuid>:<threadId>"
    return commandExecutor.evalWriteAsync(getName(), LongCodec.INSTANCE, RedisCommands.EVAL_BOOLEAN,
            // The lock no longer exists: publish the unlock message so waiters retry
            "if (redis.call('exists', KEYS[1]) == 0) then " +
                "redis.call('publish', KEYS[2], ARGV[1]); " +
                "return 1; " +
            "end;" +
            // The lock is held by a different thread: return null
            "if (redis.call('hexists', KEYS[1], ARGV[3]) == 0) then " +
                "return nil;" +
            "end; " +
            // Release one hold by decrementing the count with hincrby
            "local counter = redis.call('hincrby', KEYS[1], ARGV[3], -1); " +
            // Holds remain (re-entrant lock): refresh the expiration time and return 0
            "if (counter > 0) then " +
                "redis.call('pexpire', KEYS[1], ARGV[2]); " +
                "return 0; " +
            "else " +
                // Count reached 0: delete the key and publish the unlock message
                "redis.call('del', KEYS[1]); " +
                "redis.call('publish', KEYS[2], ARGV[1]); " +
                "return 1; " +
            "end; " +
            "return nil;",
            Arrays.<Object>asList(getName(), getChannelName()), LockPubSub.unlockMessage, internalLockLeaseTime, getLockName(threadId));
}
```
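Finally, an in-memory model of the unlock script's three return values: null (the caller is not the holder), false (hold count still above 0) and true (lock released, unlock message published). UnlockScriptModel is a hypothetical sketch that leaves out TTL handling; its lock method is a minimal re-entrant acquire added only so the model can be exercised.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of the Lua unlock script (TTL handling omitted).
class UnlockScriptModel {
    private final Map<String, Map<String, Long>> hashes = new HashMap<>();
    final List<String> published = new ArrayList<>(); // channels that got an unlock message

    // Minimal re-entrant acquire so the model can be exercised
    synchronized boolean lock(String key, String lockName) {
        Map<String, Long> hash = hashes.computeIfAbsent(key, k -> new HashMap<>());
        if (!hash.isEmpty() && !hash.containsKey(lockName)) {
            return false; // held by another owner
        }
        hash.merge(lockName, 1L, Long::sum);
        return true;
    }

    // Returns null (not the holder), false (still held) or true (released).
    synchronized Boolean unlock(String key, String channel, String lockName) {
        Map<String, Long> hash = hashes.get(key);
        if (hash == null) {                  // exists == 0
            published.add(channel);          // wake waiters anyway
            return true;
        }
        if (!hash.containsKey(lockName)) {   // hexists == 0
            return null;
        }
        long counter = hash.merge(lockName, -1L, Long::sum); // hincrby -1
        if (counter > 0) {
            return false;                    // Redis would also pexpire here
        }
        hashes.remove(key);                  // del
        published.add(channel);              // publish unlock message
        return true;
    }
}
```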

The mountain of books has a path, and diligence is the way; the sea of learning has no shore, and hard work is the boat.