Implementing a Distributed Lock with Redis in Go

Preface

My previous articles were all theory, perhaps a bit too much of it, so I wanted to write something practical. As it happens, my company is migrating its asynchronous tasks, and that work relies on distributed locks and task sharding. I plan to write two hands-on articles, one on implementing each. Both come up often in real projects. This article covers distributed locks.

Usage scenarios

There are many scenarios for distributed locks. These are the two I mainly ran into at Xiaomi:

- Running a scheduled task in a service cluster where only one machine should execute it. A distributed lock guarantees that only the machine holding the lock runs the task.
- Handling external requests that hit the cluster, for example requests that operate on orders. To prevent request reentry (the same order being processed twice concurrently), we add a distributed lock keyed by order at the entry point.

Redis distributed lock

Redis distributed locks are a common interview topic. Many people know to use SetNx() to acquire the lock, but could you answer these two follow-up questions?

- What if the machine holding the lock crashes?
- When the lock expires, machines A and B may both try to take it over at the same time. How do you make sure only one of them gets it?

In fact, SetNx() alone is definitely not enough for a Redis distributed lock. What, you don't know what SETNX is? Brother Lou is a warm-hearted guy, so here's the answer right away:

The Redis SETNX (SET if Not eXists) command sets a key to the given value only if the key does not already exist. It returns 1 if the key was set and 0 if it was not.
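The following is a minimal in-memory model of SETNX semantics in Go that makes the two return values concrete. The `store` type and its `SetNX` method are hypothetical stand-ins for a Redis instance, not a real client API. A mutex makes each call atomic, the way Redis executes one command at a time.

```go
package main

import (
	"fmt"
	"sync"
)

// store is a hypothetical in-memory stand-in for a single Redis instance.
// The mutex makes each method atomic, like a single Redis command.
type store struct {
	mu   sync.Mutex
	data map[string]int64
}

func newStore() *store { return &store{data: make(map[string]int64)} }

// SetNX mimics SETNX: it sets key only if it does not exist yet and
// returns 1 on success, 0 if the key was already present.
func (s *store) SetNX(key string, value int64) int {
	s.mu.Lock()
	defer s.mu.Unlock()
	if _, exists := s.data[key]; exists {
		return 0
	}
	s.data[key] = value
	return 1
}

func main() {
	s := newStore()
	fmt.Println(s.SetNX("redis_lock_key", 100)) // 1: lock acquired
	fmt.Println(s.SetNX("redis_lock_key", 200)) // 0: key already held, value unchanged
}
```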

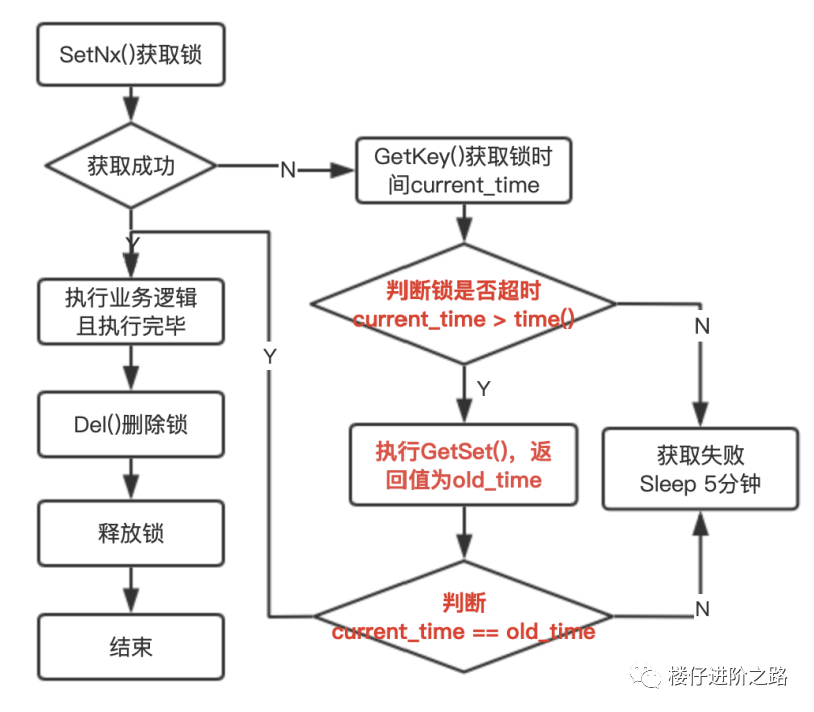

If SetNx() returns 1, the lock has been acquired; if it returns 0, it has not. If the machine holding the lock crashes or restarts, the lock would never be released. To guard against this, we also record when the lock expires. The overall flow is:

- First try to acquire the lock with SetNx(), storing the lock's expiry time as the value. If that succeeds, return immediately.
- If the lock was not acquired, the holder may have crashed or restarted. Read the lock's expiry time with GetKey(). If the lock has not expired, it is still validly held, and acquisition fails.
- If the lock has expired, we can take it over by writing a new expiry time. Several machines might try to do this at once, so use GetSet(), which returns the previous value. Suppose machines A and B both read the same expired value, current_time, via GetKey(). Both then call GetSet(), A first and B second. The old value A gets back from GetSet() equals current_time. The old value B gets back is the new expiry that A just wrote, which cannot equal current_time. So whichever machine gets back the value it originally read is the one that acquired the lock. (This is the hardest part of the distributed lock to understand; every time I revisit this logic, I get stuck here...)

The Redis GETSET command sets a key to a new value and returns the key's old value. If the key did not exist, it returns nil. If the key exists but does not hold a string, it returns an error.
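The A/B race described above can be replayed step by step. This sketch replaces Redis with a hypothetical in-memory `kv` type whose `Get`/`GetSet` methods only mimic the commands' semantics. A helper `tryTakeOver`, also hypothetical, performs the GETSET comparison for one machine.

```go
package main

import (
	"fmt"
	"strconv"
	"sync"
)

// kv is a hypothetical in-memory stand-in for Redis string keys; the mutex
// makes each method atomic, like a single Redis command.
type kv struct {
	mu   sync.Mutex
	data map[string]string
}

// Get returns the value and whether the key exists (like GET).
func (k *kv) Get(key string) (string, bool) {
	k.mu.Lock()
	defer k.mu.Unlock()
	v, ok := k.data[key]
	return v, ok
}

// GetSet stores value and returns the previous value (like GETSET).
func (k *kv) GetSet(key, value string) (string, bool) {
	k.mu.Lock()
	defer k.mu.Unlock()
	old, ok := k.data[key]
	k.data[key] = value
	return old, ok
}

// tryTakeOver is one machine's takeover step: it already read `seen` via GET
// and found it expired, so it writes its own expiry with GETSET and wins only
// if the old value returned is still the one it saw.
func tryTakeOver(k *kv, key, seen string, newExpiry int64) bool {
	old, ok := k.GetSet(key, strconv.FormatInt(newExpiry, 10))
	return ok && old == seen
}

func main() {
	const key = "redis_lock_key"
	now := int64(1000)
	// The previous holder crashed; its expiry (990) is already in the past.
	k := &kv{data: map[string]string{key: "990"}}

	// A and B both read the expired value before either of them writes.
	seenA, _ := k.Get(key)
	seenB, _ := k.Get(key)

	// A runs GETSET first, then B.
	aWins := tryTakeOver(k, key, seenA, now+10)
	bWins := tryTakeOver(k, key, seenB, now+10)

	fmt.Println("A wins:", aWins) // true: GETSET returned 990, which A had read
	fmt.Println("B wins:", bWins) // false: GETSET returned A's new expiry, 1010
}
```

B's GETSET still overwrites the stored expiry with its own value, so a losing machine can shift the winner's expiry slightly. This is a known quirk of the SETNX/GETSET scheme. It is usually harmless when all machines use the same timeout and reasonably synchronized clocks.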

If all this text makes your head spin, don't worry: Brother Lou drew a diagram for you, which should make it much clearer.

Implementation

With the principle clear, let's write the code, starting with the logic for acquiring the lock. The comments are detailed enough that even readers who don't program should be able to follow it:

// GetDistributeLock tries to acquire a distributed lock. Two situations must be handled:
// 1. Machine A acquires the lock but crashes or restarts before releasing it, so no other machine could ever acquire it. We therefore store the lock's expiry time and use it to decide whether the lock may be reset;
// 2. When the lock has expired, several machines may try to take it over at the same time. With GETSET, only the machine that runs it first gets the lock; the others keep waiting.
func GetDistributeLock(key string, expireTime int64) bool {
	currentTime := time.Now().Unix()
	expires := currentTime + expireTime
	redisAlias := "jointly"

	// 1. Try to take the lock, storing its expiry time as the value
	redisRet, err := redis.SetNx(redisAlias, key, expires)
	if err == nil && utils.MustInt64(1) == redisRet {
		// Lock acquired
		return true
	}

	// 2. If the holder crashed or restarted, check the lock's expiry time; once it has expired, another machine may take the lock over
	// 2.1 Read the lock's expiry time
	currentLockTime, err := redis.GetKey(redisAlias, key)
	if err != nil {
		return false
	}

	// 2.2 If the expiry time is >= the current time, the lock has not expired yet; give up
	if utils.MustInt64(currentLockTime) >= currentTime {
		return false
	}

	// 2.3 Write the new expiry time and get back the lock's previous expiry time
	oldLockTime, err := redis.GetSet(redisAlias, key, expires)
	if err != nil {
		return false
	}

	// 2.4 If the old value returned by GETSET equals the value read in 2.1, no other machine ran GETSET in between, so we now own the lock
	// Note: this is where concurrency matters. If A and B race, A runs GETSET first; when B runs GETSET afterwards, its oldLockTime is the expiry A just wrote, so B fails
	if utils.MustString(oldLockTime) == currentLockTime {
		return true
	}
	return false
}

Functions such as utils.MustString() and utils.MustInt64() are just type-conversion helpers from our underlying library, and they shouldn't get in the way of understanding the logic. If you want to use this code directly, you'll need to make some simple modifications here.
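The internal `redis` and `utils` packages aren't available outside the company. One way to experiment with the acquisition logic anyway is to port it onto an in-memory map. In this sketch, `memRedis` and `getLock` are hypothetical. `getLock` takes the current Unix time as a parameter (an assumption made for testing), so the "holder crashed and the lock expired" case can be simulated without waiting.

```go
package main

import (
	"fmt"
	"sync"
)

// memRedis is a hypothetical in-memory stand-in for the internal redis
// wrapper; each method holds the mutex, so it behaves like one atomic command.
type memRedis struct {
	mu   sync.Mutex
	data map[string]int64
}

func (m *memRedis) SetNx(key string, v int64) bool {
	m.mu.Lock()
	defer m.mu.Unlock()
	if _, ok := m.data[key]; ok {
		return false
	}
	m.data[key] = v
	return true
}

func (m *memRedis) Get(key string) (int64, bool) {
	m.mu.Lock()
	defer m.mu.Unlock()
	v, ok := m.data[key]
	return v, ok
}

func (m *memRedis) GetSet(key string, v int64) (int64, bool) {
	m.mu.Lock()
	defer m.mu.Unlock()
	old, ok := m.data[key]
	m.data[key] = v
	return old, ok
}

// getLock follows the same steps as GetDistributeLock, but receives the
// current Unix time so that expiry can be simulated instantly.
func getLock(r *memRedis, key string, now, expireTime int64) bool {
	expires := now + expireTime
	// 1. Try to create the lock with its expiry time as the value.
	if r.SetNx(key, expires) {
		return true
	}
	// 2.1 Read the current holder's expiry time.
	current, ok := r.Get(key)
	if !ok {
		return false
	}
	// 2.2 Not expired yet: the lock is still validly held.
	if current >= now {
		return false
	}
	// 2.3/2.4 Expired: take over only if nobody else did it first.
	old, ok := r.GetSet(key, expires)
	return ok && old == current
}

func main() {
	r := &memRedis{data: map[string]int64{}}
	fmt.Println(getLock(r, "job", 100, 10)) // true: machine A gets the lock, expiring at 110
	// A "crashes" and never deletes the key.
	fmt.Println(getLock(r, "job", 105, 10)) // false: the lock is still valid
	fmt.Println(getLock(r, "job", 111, 10)) // true: the lock expired, machine B takes over
}
```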

Next, the logic for deleting the lock:

// DelDistributeLock deletes the distributed lock
// @return bool true - deleted successfully; false - deletion failed
func DelDistributeLock(key string) bool {
	redisAlias := "jointly"
	redisRet := redis.Del(redisAlias, key)
	if redisRet != nil {
		return false
	}
	return true
}
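`DelDistributeLock` deletes the key unconditionally. Suppose a holder runs past its expiry and another machine takes the lock over. When the original holder finishes, its delete removes the new holder's lock. A common refinement, not part of the original code, is to delete only if the stored value is still the one this caller wrote. In real Redis that check-and-delete must run atomically on the server, typically as a small Lua script sent with EVAL. The sketch below uses a hypothetical in-memory `lockStore` with a mutex to show the difference. Because the value here is an expiry timestamp, two holders could in principle write the same value, so a unique per-holder token would be a stronger choice.

```go
package main

import (
	"fmt"
	"sync"
)

// lockStore is a hypothetical in-memory stand-in for Redis. The mutex plays
// the role that server-side atomicity (e.g. a Lua script) would play in Redis.
type lockStore struct {
	mu   sync.Mutex
	data map[string]int64
}

// Del mirrors DelDistributeLock: it removes the key no matter who owns it.
func (s *lockStore) Del(key string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	delete(s.data, key)
}

// DelIfOwner removes the key only if it still holds the value this caller
// wrote when it acquired the lock, and reports whether it deleted anything.
func (s *lockStore) DelIfOwner(key string, mine int64) bool {
	s.mu.Lock()
	defer s.mu.Unlock()
	if v, ok := s.data[key]; ok && v == mine {
		delete(s.data, key)
		return true
	}
	return false
}

func main() {
	// A acquired the lock with expiry 110 but ran too long, and B took it
	// over with expiry 121. Now A finishes and tries to release.
	s := &lockStore{data: map[string]int64{"job": 121}}

	fmt.Println(s.DelIfOwner("job", 110)) // false: B's lock is left alone
	_, held := s.data["job"]
	fmt.Println(held) // true

	s.Del("job") // the unconditional delete removes B's lock
	_, held = s.data["job"]
	fmt.Println(held) // false
}
```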

Then the business logic:

func DoProcess(processId int) {
	fmt.Printf("Start thread %d\n", processId)
	redisKey := "redis_lock_key"
	for {
		// Try to acquire the distributed lock (expires after 10s)
		isGetLock := GetDistributeLock(redisKey, 10)
		if isGetLock {
			fmt.Printf("Get Redis Key Success, id:%d\n", processId)
			// Simulate 3s of business logic while holding the lock
			time.Sleep(time.Second * 3)
			// Release the distributed lock
			DelDistributeLock(redisKey)
		} else {
			// Lock not acquired: sleep for a while to avoid putting too much load on Redis
			time.Sleep(time.Second * 1)
		}
	}
}
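In `DoProcess` the lock is released on the line after the business logic. If that logic grows early returns or can panic, the release is easy to skip. One idiomatic option in Go is to release with `defer`. The hypothetical `withLock` helper below takes the acquire and release operations as functions, so it could wrap calls such as `GetDistributeLock(redisKey, 10)` and `DelDistributeLock(redisKey)`. Here it is exercised with simple in-memory fakes.

```go
package main

import (
	"errors"
	"fmt"
)

// withLock is a hypothetical helper: it calls tryLock once and, if the lock
// was acquired, runs fn and releases the lock with defer, so the release also
// happens when fn returns early or panics.
func withLock(tryLock func() bool, unlock func(), fn func() error) (bool, error) {
	if !tryLock() {
		return false, nil
	}
	defer unlock()
	return true, fn()
}

func main() {
	held := false
	tryLock := func() bool {
		if held {
			return false
		}
		held = true
		return true
	}
	unlock := func() { held = false }

	ok, err := withLock(tryLock, unlock, func() error {
		return errors.New("business logic failed")
	})
	fmt.Println(ok, err, held) // true business logic failed false
}
```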

Finally, start 10 goroutines to run DoProcess():

func main() {
	// Initialize resources
	group := "i18n"
	name := "jointly_shop"
	host := "http://ip:port"
	_, err := xrpc.NewXRpcDefault(group, name, host)
	if err != nil {
		panic(fmt.Sprintf("initRpc when init rpc failed, err:%v", err))
	}
	redis.SetRedis("jointly", "redis_jointly")

	// Start 10 goroutines that compete for the Redis distributed lock
	for i := 0; i < 10; i++ {
		go DoProcess(i)
	}

	// Keep main alive for 100s; once main returns, the program exits and all goroutines stop
	time.Sleep(time.Second * 100)
}

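The program runs for 100s. Only one goroutine holds the lock at any moment; over the run, goroutines 2, 1, 5, 9 and 3 took turns. The same goroutine often wins several rounds in a row. This is largely because the holder retries immediately after releasing the lock, while the others are still in their 1-second back-off sleep. The output is: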

Start thread 0
Start thread 6
Start thread 9
Start thread 4
Start thread 5
Start thread 2
Start thread 1
Start thread 8
Start thread 7
Start thread 3
Get Redis Key Success, id:2
Get Redis Key Success, id:2
Get Redis Key Success, id:1
Get Redis Key Success, id:5
Get Redis Key Success, id:5
Get Redis Key Success, id:5
Get Redis Key Success, id:5
Get Redis Key Success, id:5
Get Redis Key Success, id:5
Get Redis Key Success, id:5
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:9
Get Redis Key Success, id:3
Get Redis Key Success, id:3
Get Redis Key Success, id:3
Get Redis Key Success, id:3
Get Redis Key Success, id:3

Pitfalls

I ran into a few pitfalls along the way. Briefly:

- We once migrated a service from physical machines to the Neo cloud. When moving traffic from the physical machines to the Neo cloud, don't forget to stop the scheduled tasks on the physical machines, or they will keep competing for the distributed lock. This is especially dangerous after a code change: if a physical machine grabs the lock, it keeps running the old code, which is a big pitfall.
- Don't change the lock's timeout casually. I once changed it to troubleshoot a problem more quickly, and a very strange issue followed that took a day to track down. I no longer remember the details clearly; if you're interested, you can try to reproduce it yourself.

Postscript

I actually wrote this distributed lock in 2019, and it has been running in production for two years. With a few simple modifications you can run it in production too. Don't worry about pitfalls, because I've already hit them for you.

Last week I wrote an article on rate limiting, and the distributed lock in this article is used in our recent projects, so I took the chance to write it up. What I most want to write about, though, is how to implement task sharding, a skill I picked up recently while working on asynchronous tasks at the company. It lets multiple machines execute one task concurrently. Pretty amazing, right? I'll share it later.

Look at the time, it's already early morning. Off to bed~~