Introduction to NoSQL database

Technology development

Classification of technologies

- Solving functional problems: Java, JSP, RDBMS, Tomcat, HTML, Linux, JDBC, SVN

- Solving extensibility problems: Struts, Spring, Spring MVC, Hibernate, MyBatis

- Solving performance problems: NoSQL, Java threads, Hadoop, Nginx, MQ, Elasticsearch

NoSQL database

NoSQL database overview

NoSQL (Not Only SQL) is a general term for non-relational databases. Instead of modeling business logic in relational tables, a NoSQL store typically keeps data in a simple key-value form, which makes the database much easier to scale out.

- It does not follow the SQL standard.

- It generally does not provide full ACID transactions.

- For simple key-based reads and writes it can be much faster than a relational database.

NoSQL applicable scenarios

- Highly concurrent reading and writing of data

- Massive data reading and writing

- Data that needs to scale out easily

Common NoSQL databases

- Memcached

- An early NoSQL database

- Data is kept in memory and is generally not persisted

- Supports only a simple key-value model with a single value type

- Typically used as a cache in front of a persistent database

- Redis

- Covers almost all of the functionality of Memcached

- Data is kept in memory but can be persisted to disk, mainly for backup and recovery

- Besides the simple key-value model, it supports several data structures, such as list, set, hash and zset

- Typically used as a cache in front of a persistent database

- MongoDB

- A high-performance, open-source, schema-free document database

- Data is kept in memory; when memory is insufficient, infrequently used data is saved to disk

- Although it is a key-value model, it offers rich query capabilities over the value (especially JSON)

- Supports binary data and large objects

- Depending on the characteristics of the data, it can replace an RDBMS as a standalone database, or work alongside an RDBMS to store specific data

Redis overview and installation

Overview

- Redis is an open-source key-value storage system.

- It is similar to Memcached but supports more value types: string, list (linked list), set, zset (sorted set) and hash.

- These types support push/pop, add/remove, intersection, union, difference and other richer operations, all of which are atomic.

- On top of this, Redis supports sorting in several ways.

- As with Memcached, data is cached in memory for efficiency.

- The difference is that Redis periodically writes updated data to disk, or appends each modifying operation to an append-only log file.

- On top of this, master-slave replication is implemented.

Redis installation

Compilation and installation - omitted

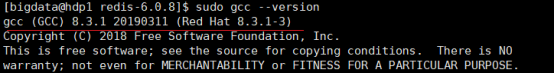

Preparation: download and install a recent version of the gcc compiler

- Install the C compilation environment

yum install centos-release-scl scl-utils-build
yum install -y devtoolset-8-toolchain
scl enable devtoolset-8 bash

- Check the gcc version

gcc --version

Install

- Download redis-6.2.1.tar.gz and put it in the /usr/local/bin directory

- tar -zxvf redis-6.2.1.tar.gz

- cd redis-6.2.1

- In the redis-6.2.1 directory, run make (this only compiles)

- make install

Startup

Foreground start (not recommended)

- When started in the foreground, the terminal window cannot be closed, otherwise the server stops

src/redis-server

Background start

- Modify the configuration so that the service starts in the background: change daemonize no to daemonize yes

- Make a copy of redis.conf in another directory and start the server with that copy

cp /redis.conf /myredis/redis_6379.conf
redis-server /myredis/redis_6379.conf

Docker installation

docker pull redis

# There is a small bug: create the /mydata/redis/conf directory and the config file first
mkdir -p /mydata/redis/conf
touch /mydata/redis/conf/redis.conf

docker run -p 6379:6379 --name redis \
  -v /mydata/redis/data:/data \
  -v /mydata/redis/conf/redis.conf:/etc/redis/redis.conf \
  -d redis redis-server /etc/redis/redis.conf

# Start automatically
sudo docker update redis --restart=always

# Connect with the client
docker exec -it redis redis-cli

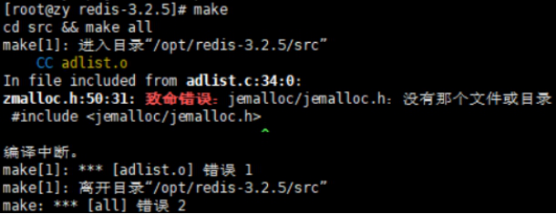

Problems encountered

make reports an error

- If the C compilation environment was not ready when make was first run, make fails with "jemalloc/jemalloc.h: No such file or directory"

- Solution: run make distclean, then run make again

Redis fails to start

- The directory mounted into the Docker container requires permissions

Redis cannot be accessed externally

- Disable SELinux

vi /etc/selinux/config
# Change SELINUX=enforcing to SELINUX=disabled (takes effect after a reboot)

- Open the port in the firewall

# Add the port (--permanent makes the rule persistent; without it the rule is lost after a restart)
firewall-cmd --zone=public --add-port=6379/tcp --permanent
# Reload
firewall-cmd --reload
# Check
firewall-cmd --zone=public --query-port=6379/tcp

- Configuration file

# Listen on all interfaces
bind 0.0.0.0
# Turn off protected mode
protected-mode no
# Allow connections (not tested)
client-output-buffer-limit slave 1024mb 256mb 0
# Leave the password disabled; if a password is set, clients must supply it
# requirepass

Redis introduces relevant knowledge

-

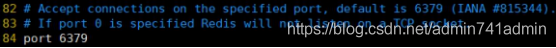

Where does port 6379 come from, Alessia Merz

-

database

- There are 16 databases by default. Similar to the array, the subscript starts from 0. The initial default is library 0

- Use the command select < dbid > to switch databases. For example, select 8 unified password management, and all libraries have the same password.

- dbsize view the number of key s in the current database

- Flush DB empties the current library

- Flush kill all libraries

-

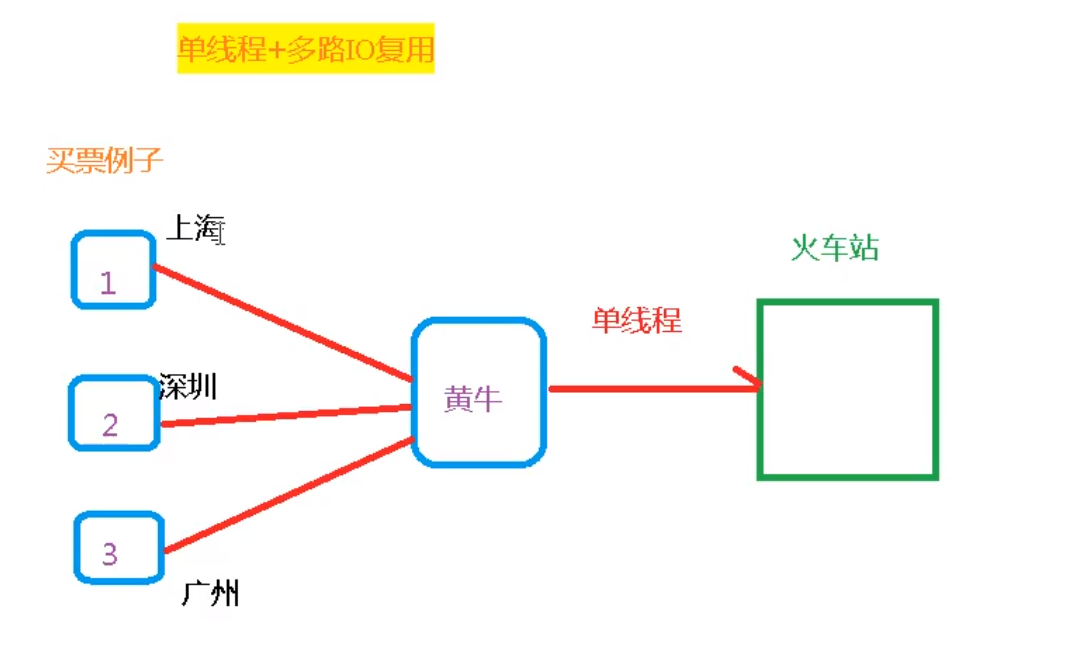

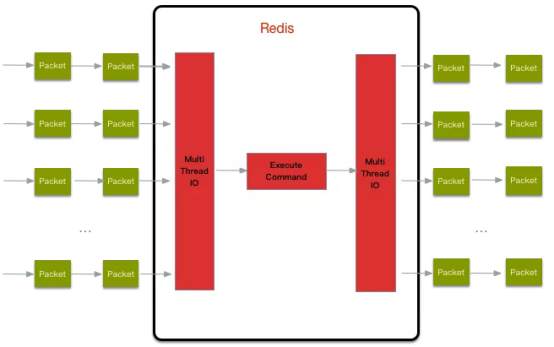

Redis uses a single thread plus I/O multiplexing

- Multiplexing means using one thread to check the readiness of multiple file descriptors (sockets). For example, the select and poll functions take multiple file descriptors; the call returns as soon as any of them is ready, and otherwise blocks until the timeout. Once readiness is known, the actual operation can be performed in the same thread or handed to another thread (for example, a thread pool)
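A minimal sketch of the multiplexing idea, written in Python with the standard selectors module rather than taken from the Redis source. The helper names read_ready and demo are hypothetical, and in-process socket pairs stand in for client connections: one thread registers several sockets and is told which of them are ready to read.

```python
import selectors
import socket


def read_ready(servers, timeout=1.0):
    """Wait on many sockets from one thread and read from those that are ready."""
    sel = selectors.DefaultSelector()
    for name, sock in servers.items():
        sel.register(sock, selectors.EVENT_READ, data=name)
    received = {}
    # select() blocks until at least one socket is readable or the timeout expires.
    for key, _events in sel.select(timeout=timeout):
        received[key.data] = key.fileobj.recv(1024)
    sel.close()
    return received


def demo():
    # Three in-process socket pairs stand in for three client connections.
    clients, servers = {}, {}
    for name in ("a", "b", "c"):
        clients[name], servers[name] = socket.socketpair()
    clients["a"].sendall(b"PING")
    clients["c"].sendall(b"GET k")
    result = read_ready(servers)
    for sock in list(clients.values()) + list(servers.values()):
        sock.close()
    return result
```

Only the sockets that actually have data are reported, so the single thread never blocks on the idle connection "b".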

Five common data types

Redis keys and databases

- keys * lists all keys in the current database (a pattern can be used, e.g. keys *1)

- exists <key> checks whether a key exists

- type <key> shows the type of the value stored at the key

- del <key> deletes the specified key

- unlink <key> deletes the key without blocking: the key is only removed from the keyspace metadata, and the real deletion happens later in an asynchronous operation

- expire <key> 10 sets an expiration time of 10 seconds on the given key

- ttl <key> shows how many seconds remain before the key expires; -1 means it never expires, -2 means it has expired or does not exist

- select <dbid> switches the database

- dbsize shows the number of keys in the current database

- flushdb empties the current database

- flushall empties all databases
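The expire and ttl return values described above can be illustrated with a small in-memory stand-in. This is only a sketch under assumptions: TinyKeyspace and its methods are hypothetical names, the clock is injectable so the behaviour can be checked without sleeping, and the rounding of the remaining time is simplified compared with a real server.

```python
import math
import time


class TinyKeyspace:
    """In-memory stand-in for a Redis database, only to illustrate expire/ttl."""

    def __init__(self, clock=time.monotonic):
        self._values = {}
        self._deadlines = {}
        self._clock = clock  # injectable clock (assumption, for testing)

    def _drop_if_expired(self, key):
        deadline = self._deadlines.get(key)
        if deadline is not None and self._clock() >= deadline:
            self._values.pop(key, None)
            self._deadlines.pop(key, None)

    def set(self, key, value):
        self._values[key] = value
        self._deadlines.pop(key, None)  # a plain set clears any previous expiration

    def exists(self, key):
        self._drop_if_expired(key)
        return 1 if key in self._values else 0

    def expire(self, key, seconds):
        if not self.exists(key):
            return 0
        self._deadlines[key] = self._clock() + seconds
        return 1

    def ttl(self, key):
        if not self.exists(key):
            return -2  # expired or never existed
        if key not in self._deadlines:
            return -1  # exists but has no expiration
        return math.ceil(self._deadlines[key] - self._clock())
```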

String (String)

concept

- As like as two peas, String is the most basic type of Redis. You can understand it as a type that is exactly the same as Memcached, and a key corresponds to a value.

- The string type is binary safe. This means that the Redis string can contain any data. For example, jpg images or serialized objects.

- String type is the most basic data type of Redis. The string value in a Redis can be 512M at most

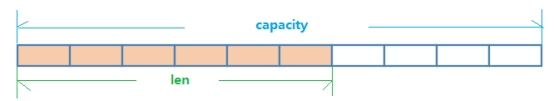

data structure

- The data structure of String is Simple Dynamic String (abbreviated as SDS). It is a String that can be modified. Its internal structure is similar to the ArrayList of Java. It uses the way of pre allocating redundant space to reduce the frequent allocation of memory.

- As shown in the figure, the space capacity actually allocated internally for the current string is generally higher than the actual string length len. When the string length is less than 1M, the expansion is to double the existing space. If it exceeds 1M, only 1M more space will be expanded at a time. Note that the maximum length of the string is 512M.
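The growth rule described above can be written down as a small function. This is a sketch of the rule as stated in the text, not the actual SDS code; grow_capacity and the constants MB and MAX_LEN are hypothetical names.

```python
MB = 1024 * 1024
MAX_LEN = 512 * MB  # maximum string length stated in the text


def grow_capacity(required_len):
    """Return the capacity to allocate for a string that must hold required_len bytes."""
    if required_len > MAX_LEN:
        raise ValueError("string exceeds the 512 MB limit")
    if required_len < MB:
        return required_len * 2  # small strings: double the space
    return required_len + MB     # large strings: add only 1 MB of spare space
```

For example, a string that needs 10 bytes gets 20 bytes of capacity, while one that needs 2 MB gets 3 MB, so large strings do not waste half of their allocation.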

Commands

- set <key> <value> adds a key-value pair

- NX: set the key only when it does not already exist in the database

- XX: set the key only when it already exists in the database; mutually exclusive with NX

- EX: expiration time of the key in seconds

- PX: expiration time of the key in milliseconds; mutually exclusive with EX

- get <key> returns the value of the key

- append <key> <value> appends the given value to the end of the original value

- strlen <key> returns the length of the value

- incr <key> increases the numeric value stored at the key by 1. It only works on numeric values; if the key does not exist, the new value is 1

- decr <key> decreases the numeric value stored at the key by 1. It only works on numeric values; if the key does not exist, the new value is -1

- incrby / decrby <key> <step> increases or decreases the numeric value stored at the key by a custom step

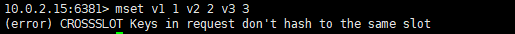

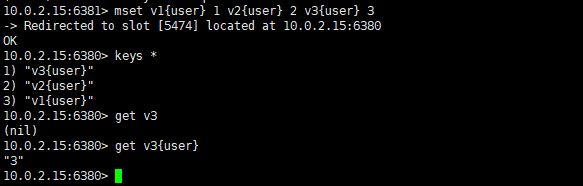

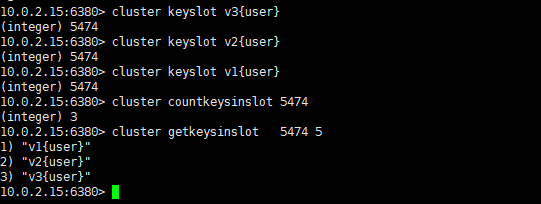

- mset <key1> <value1> <key2> <value2> ... sets one or more key-value pairs at once

- mget <key1> <key2> <key3> ... gets one or more values at once

- msetnx <key1> <value1> <key2> <value2> ... sets one or more key-value pairs at once, if and only if none of the given keys exist. It is atomic: if one fails, all fail

- getrange <key> <start> <end> returns a range of the value, similar to substring in Java, except that both the start and the end positions are inclusive

- setrange <key> <start> <value> overwrites the string stored at <key> with <value>, starting at <start> (the index starts from 0)

- setex <key> <seconds> <value> sets the value together with an expiration time in seconds

- getset <key> <value> swaps the old value for the new one: it sets the new value and returns the old value
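The all-or-nothing behaviour of msetnx can be sketched against a plain dictionary. This is an illustration only: the function name mirrors the command, and the dict store is a hypothetical stand-in for the database.

```python
def msetnx(store, pairs):
    """Set every pair only if none of the keys exist; return 1 on success, 0 otherwise."""
    if any(key in store for key in pairs):
        return 0  # at least one key already exists, so nothing is written
    store.update(pairs)
    return 1
```

With k4 already present, a call that tries to set k4 and k5 returns 0 and leaves k5 unset.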

###################################################string#####################

127.0.0.1:6379> set zgc hello

OK

127.0.0.1:6379> append zgc word

(integer) 9

127.0.0.1:6379> get zgc

"helloword"

127.0.0.1:6379> strlen zgc # String length

(integer) 9

127.0.0.1:6379> set count 0

OK

127.0.0.1:6379> type count # Type: a number is also stored as a string

string

127.0.0.1:6379> incr count # Increment by 1

(integer) 1

127.0.0.1:6379> incr count

(integer) 2

127.0.0.1:6379> get count

"2"

127.0.0.1:6379> incrby zgc 10 # A non-numeric value causes an error

(error) ERR value is not an integer or out of range

127.0.0.1:6379> incrby count 10 # Increment with a step of 10

(integer) 12

127.0.0.1:6379> get count

"12"

127.0.0.1:6379> GETRANGE zgc 0 -1 # Get all (same as get key)

"helloword"

127.0.0.1:6379> getrange zgc 1 2 # Inclusive index range [1,2]

"el"

127.0.0.1:6379> setrange zgc -4 ,im # Negative offsets are not allowed

(error) ERR offset is out of range

127.0.0.1:6379> setrange zgc 5 ,im # Overwrite starting at offset 5; the number of characters replaced is the length of the new value

(integer) 9

127.0.0.1:6379> get zgc

"hello,imd"

127.0.0.1:6379> setex guan 10 hhhhh # Set key and have expiration time

OK

127.0.0.1:6379> ttl guan # View expiration time

(integer) 7

127.0.0.1:6379> setnx my 123 # Set if it does not exist, success 1, failure 0

(integer) 1

127.0.0.1:6379> setnx my 3333

(integer) 0

127.0.0.1:6379> mset k1 v1 k2 v2 k3 v3 # Batch setting key value

OK

127.0.0.1:6379> mget k1 k2 k3 # Batch acquisition

1) "v1"

2) "v2"

3) "v3"

127.0.0.1:6379> mset k1 v11 k4 v4

OK

127.0.0.1:6379> mget k1 k4

1) "v11"

2) "v4"

127.0.0.1:6379> msetnx k4 v44 k5 v5 # Set only if none of the keys exist; atomic (all succeed or all fail)

(integer) 0

127.0.0.1:6379> mget k4 k5

1) "v4"

2) (nil)

127.0.0.1:6379> getset you 123 # Return the old value, then set the new one

(nil)

127.0.0.1:6379> getset you 22

"123"

127.0.0.1:6379> get you

"22"

Summary: typical uses of String

- Counters

- Counting quantities across multiple units, such as the number of followers

- Object cache storage

List

Concept

One key, multiple values. A Redis list is a simple list of strings, sorted by insertion order. You can add an element to the head (left) or the tail (right) of the list. Underneath it is a doubly linked list, so operations at both ends are fast, while accessing nodes in the middle by index is slower.

Data structure

- The data structure of List is the quicklist

- When the list has few elements, a single contiguous block of memory is used. This structure is the ziplist, that is, a compressed list: it stores all elements next to each other in one contiguous piece of memory

- When there is a large amount of data, it is converted to a quicklist. An ordinary linked list needs too much extra pointer space: for example, even if the list only stores int values, each node still needs two extra pointers, prev and next.

- Redis combines the linked list and the ziplist into the quicklist, that is, multiple ziplists chained together with bidirectional pointers. This keeps insertion and deletion fast without too much space overhead.

Commands

- lpush / rpush <key> <value1> <value2> <value3> ... inserts one or more values from the left / right.

- lpop / rpop <key> pops a value from the left / right. The key exists as long as it holds values; when the last value is removed, the key is gone.

- rpoplpush <key1> <key2> pops a value from the right of the <key1> list and inserts it at the left of the <key2> list.

- lrange <key> <start> <stop> gets elements by index range (from left to right)

- lrange mylist 0 -1: 0 is the first element from the left and -1 is the first from the right, so 0 -1 returns all elements

- lindex <key> <index> gets the element at the given index (from left to right)

- llen <key> gets the list length

- linsert <key> before <value> <newvalue> inserts <newvalue> before <value>

- lrem <key> <n> <value> deletes n occurrences of <value>, scanning from left to right

- lset <key> <index> <value> replaces the element at <index> in the list with <value>

##########################################list########################################

127.0.0.1:6379> lpush fan 1 2 3 4 5 # Left entry

(integer) 5

127.0.0.1:6379> lrange fan 0 2 # Show [0,2]

1) "5"

2) "4"

3) "3"

127.0.0.1:6379> lrange fan 0 -1 # Show all

1) "5"

2) "4"

3) "3"

4) "2"

5) "1"

127.0.0.1:6379> rpush fan 88 99 # Right entry

(integer) 7

127.0.0.1:6379> lrange fan 0 -1

1) "5"

2) "4"

3) "3"

4) "2"

5) "1"

6) "88"

7) "99"

127.0.0.1:6379> lpop fan # The leftmost pop-up

"5"

127.0.0.1:6379> rpop fan #The rightmost pop-up

"99"

127.0.0.1:6379> lrange fan 0 -1

1) "4"

2) "3"

3) "2"

4) "1"

5) "88"

127.0.0.1:6379> llen fan # List length

(integer) 5

127.0.0.1:6379> lindex fan 4 # Displays the value of the specified subscript

"88"

127.0.0.1:6379> lrange fan 0 -1

1) "4"

2) "3"

3) "2"

4) "1"

5) "88"

127.0.0.1:6379> lrem fan 1 4 # Remove 1 occurrence of the value 4; returns 0 if nothing was removed

(integer) 1

127.0.0.1:6379> lrange fan 0 -1

1) "3"

2) "2"

3) "1"

4) "88"

127.0.0.1:6379> ltrim fan 1 2 # Trim the list in place to the index range [1,2]

OK

127.0.0.1:6379> lrange fan 0 -1

1) "2"

2) "1"

127.0.0.1:6379> rpoplpush fan fan2 # Pop the last element of fan (the original list changes) and push it onto the head of fan2

"1"

127.0.0.1:6379> lrange fan 0 -1

1) "2"

127.0.0.1:6379> lrange fan2 0 -1

1) "1"

127.0.0.1:6379> lrange fan 0 -1

1) "four"

2) "three"

3) "two"

4) "one"

127.0.0.1:6379> lset fan 2 two2 # Update a value by subscript

OK

127.0.0.1:6379> lrange fan 0 -1

1) "four"

2) "three"

3) "two2"

4) "one"

127.0.0.1:6379> lset fan 8 88 # lset reports an error when the index does not exist

(error) ERR index out of range

127.0.0.1:6379> linsert fan before two2 aaaaaaa # Insert before (or, with after, after) the specified value

(integer) 5

127.0.0.1:6379> lrange fan 0 -1

1) "four"

2) "three"

3) "aaaaaaa"

4) "two2"

5) "one"

Summary:

- It is actually a linked list: values can be inserted before or after a node, and on the left or the right

- If the key does not exist, a new list is created; if it exists, values are added to it. If all values are removed, the empty list also means the key no longer exists

- Inserting or changing values at either end is the most efficient; operations on elements in the middle are relatively inefficient

- Message queue (lpush + rpop) and stack (lpush + lpop)
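The queue and stack patterns can be sketched with Python's collections.deque standing in for a Redis list. The helper names mirror the Redis commands but are local functions written for this illustration, not a client API.

```python
from collections import deque


def lpush(lst, *values):
    """Push values onto the left end one by one, like lpush."""
    for value in values:
        lst.appendleft(value)
    return len(lst)


def lpop(lst):
    return lst.popleft() if lst else None


def rpop(lst):
    return lst.pop() if lst else None


def drain(values, pop):
    """Push all values with lpush, then remove them with the given pop function."""
    lst = deque()
    lpush(lst, *values)
    out = []
    while lst:
        out.append(pop(lst))
    return out
```

Draining with rpop returns the values in the order they were pushed (first in, first out, a queue), while draining with lpop returns them in reverse (last in, first out, a stack).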

Set

Concept

- A Redis set offers functionality similar to a list, except that a set automatically removes duplicates. When you need to store a list of data and do not want duplicates, a set is a good choice. A set also provides an important operation that a list does not: checking whether a member is in the set.

- A Redis set is an unordered collection of strings. Underneath it is a hash table whose values are null, so adding, deleting and looking up are all O(1).

- Complexity describes how the execution time of an algorithm grows as the data grows. With O(1), the time to find an item stays the same as the data increases

Data structure

- The Set data structure is a dict (dictionary), which is implemented with a hash table.

- In Java, HashSet is implemented internally with a HashMap in which all values point to the same object. The Redis set structure works the same way: it uses a hash structure internally, and all values point to the same internal value.

Commands

- sadd <key> <value1> <value2> ... adds one or more members to the set; members that already exist are ignored

- smembers <key> returns all values of the set.

- sismember <key> <value> checks whether the set <key> contains <value>: 1 if yes, 0 if no

- scard <key> returns the number of elements in the set.

- srem <key> <value1> <value2> ... deletes one or more elements from the set.

- spop <key> pops a random value from the set.

- srandmember <key> <n> returns n random values from the set without removing them.

- smove <source> <destination> <value> moves a value from one set to another

- sinter <key1> <key2> returns the intersection of the two sets.

- sunion <key1> <key2> returns the union of the two sets.

- sdiff <key1> <key2> returns the difference of the two sets (elements in key1 that are not in key2)

##########################################set########################################

127.0.0.1:6379> sadd myset aa bb cc dd # add value

(integer) 4

127.0.0.1:6379> smembers myset # View all

1) "dd"

2) "aa"

3) "cc"

4) "bb"

127.0.0.1:6379> sismember myset cc # Determine whether a value exists

(integer) 1

127.0.0.1:6379> sismember myset ff

(integer) 0

127.0.0.1:6379> scard myset # Number of sets

(integer) 4

127.0.0.1:6379> srem myset dd ff # Delete the specified values; ff is not a member, so only dd is counted

(integer) 1

127.0.0.1:6379> smembers myset

1) "bb"

2) "cc"

3) "aa"

127.0.0.1:6379> srandmember myset 1 # Return N random values without removing them

1) "cc"

127.0.0.1:6379> srandmember myset 1

1) "aa"

127.0.0.1:6379> spop myset 1 # Randomly remove and return N values

1) "bb"

127.0.0.1:6379> spop myset

"cc"

127.0.0.1:6379> smembers myset

1) "aa"

127.0.0.1:6379> smove myset myset2 v2 # Move a value from one set to another

(integer) 1

127.0.0.1:6379> smembers myset

1) "v3"

2) "v1"

127.0.0.1:6379> smembers myset2

1) "v1"

2) "v2"

127.0.0.1:6379> sdiff myset myset2 # Difference (elements of the first set that are not in the second)

1) "v3"

127.0.0.1:6379> sinter myset myset2 #intersection

1) "v1"

127.0.0.1:6379> sunion myset myset2 #Union

1) "v1"

2) "v3"

3) "v2"

Summary:

- An unordered collection without duplicates

- Microblog example: put all the accounts that user A follows into one set, and A's followers into another set. This supports mutual follows, shared interests, second-degree friends and friend recommendations (six degrees of separation)
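The microblog use case can be sketched with Python's built-in sets, whose intersection and difference operators play the role of sinter and sdiff. The user names and the helpers mutual_follows and recommendations are hypothetical, made up for this illustration.

```python
def mutual_follows(following_a, following_b):
    """Accounts that both users follow (the role of sinter)."""
    return following_a & following_b


def recommendations(user, following):
    """Second-degree candidates: followed by people the user follows, not yet by the user."""
    mine = following[user]
    candidates = set()
    for friend in mine:
        candidates |= following.get(friend, set())
    # Remove accounts already followed and the user itself (the role of sdiff).
    return candidates - mine - {user}


following = {
    "A": {"B", "C"},
    "B": {"C", "D"},
    "C": {"A", "E"},
}
```

Here A and B both follow C, and A would be recommended D and E, the accounts followed by the people A already follows.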

Hash

concept

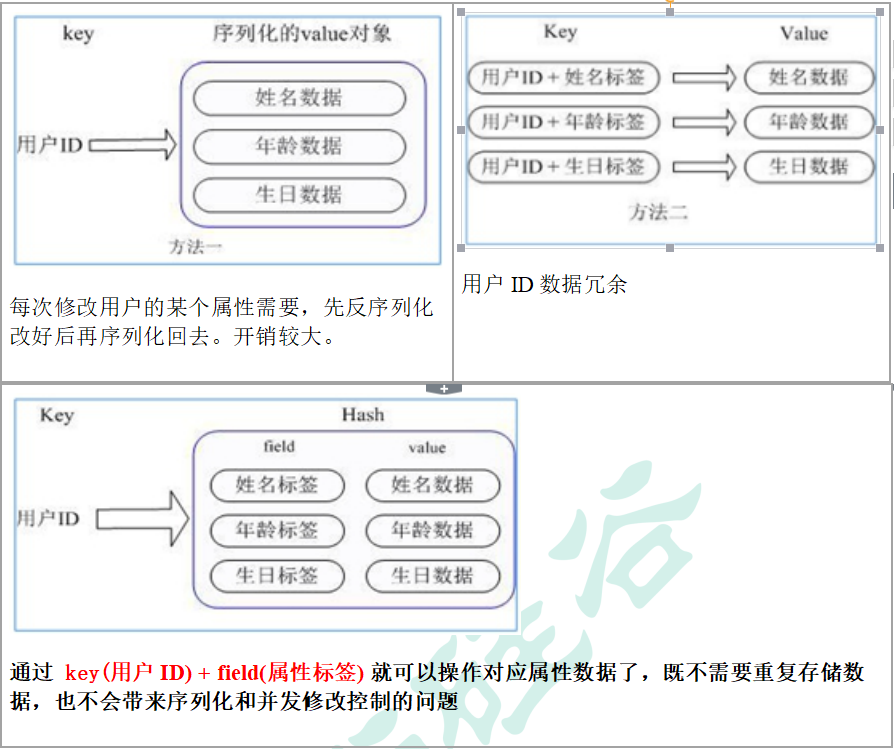

- Redis hash is a collection of key value pairs.

- Redis hash is a mapping table of field and value of string type. Hash is especially suitable for storing objects. Similar to map < string, Object > in Java

- There are two main storage methods:

Data structure

- The Hash type has two underlying data structures: ziplist (compressed list) and hashtable (hash table). When the fields and values are short and there are few of them, ziplist is used; otherwise hashtable is used.

Commands

- hset <key> <field> <value> assigns <value> to <field> in the hash <key>

- hget <key> <field> returns the value of <field> in the hash <key>

- hmset <key> <field1> <value1> <field2> <value2> ... sets multiple hash fields at once

- hexists <key> <field> checks whether the given field exists in the hash <key>

- hkeys <key> lists all fields of the hash

- hvals <key> lists all values of the hash

- hincrby <key> <field> <increment> adds the increment (for example 1 or -1) to the value of the field in the hash <key>

- hsetnx <key> <field> <value> sets the field to value if and only if the field does not exist

##########################################hash########################################

127.0.0.1:6379> hset myhash v1 k1 # Set a single hash field

(integer) 1

127.0.0.1:6379> hget myhash v1 # Get a single hash value

"k1"

127.0.0.1:6379> hmset myhash v2 k2 v3 k3 # Set multiple fields

OK

127.0.0.1:6379> hmget myhash v1 v2 v3 # Get multiple values

1) "k1"

2) "k2"

3) "k3"

127.0.0.1:6379> hgetall myhash # Get all key values

1) "v1"

2) "k1"

3) "v2"

4) "k2"

5) "v3"

6) "k3"

127.0.0.1:6379> hdel myhash v1 # Delete the specified key

(integer) 1

127.0.0.1:6379> hgetall myhash

1) "v2"

2) "k2"

3) "v3"

4) "k3"

127.0.0.1:6379> hlen myhash # hash length

(integer) 2

127.0.0.1:6379> hexists myhash v1 # Determine whether a key exists

(integer) 0

127.0.0.1:6379> hexists myhash v2

(integer) 1

127.0.0.1:6379> hkeys myhash # All fields of the hash

1) "v2"

2) "v3"

127.0.0.1:6379> hvals myhash # All values of the hash

1) "k2"

2) "k3"

127.0.0.1:6379> hset myhash v4 5

(integer) 1

127.0.0.1:6379> hincrby myhash v4 1 # Increment the specified field

(integer) 6

127.0.0.1:6379> hsetnx myhash v5 k5 # Set the field only if it does not exist; returns 1 on success and 0 on failure

(integer) 1

127.0.0.1:6379> hsetnx myhash v5 k55

(integer) 0

Summary:

- A hash is a map: key -> map, where the value is itself a map. In essence it is not much different from the String type, still simple key-value pairs.

Sorted set (zset)

Concept

- A Redis sorted set (zset) is very similar to an ordinary set: it is a collection of strings without duplicate elements.

- The difference is that each member of the sorted set is associated with a score, which is used to order the members from the lowest score to the highest. Members are unique, but scores can repeat.

- Because the elements are ordered, you can also quickly get a range of elements by score or by position.

- Accessing elements in the middle of a sorted set is also very fast, so you can use a sorted set as a smart list without duplicate members.

Data structure

- The sorted set (zset) is a rather special data structure provided by Redis. On the one hand it is equivalent to the Java data structure Map<String, Double>, giving each element value a weight, the score. On the other hand it is similar to TreeSet: the elements are sorted by score, so you can get the rank of each element and also get a list of elements by score range.

- Internally, zset uses two data structures

- A hash, which associates each element value with its score and guarantees that element values are unique. The score can be looked up by element value.

- A skip list, which keeps the element values sorted and returns lists of elements by score range.

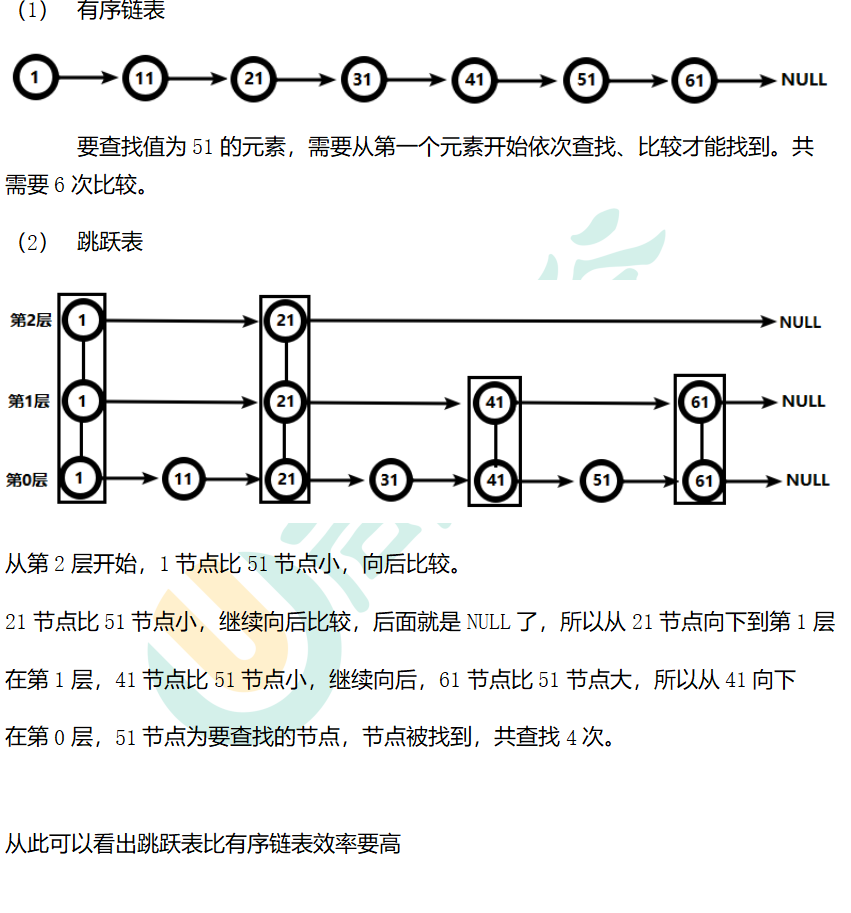

Skip list

Introduction

- Ordered collections are common in everyday life, such as ranking students by grades or players by scores. An ordered set could be implemented with an array, a balanced tree or a linked list. With an array, inserting and deleting elements is expensive; a balanced tree or red-black tree is efficient but structurally complex; a linked list has to be traversed from the start to find an element, which is inefficient.

- Redis uses a skip list. Its efficiency is comparable to that of a red-black tree, and it is much simpler to implement.

Example

- Compare an ordered linked list with a skip list when looking up the value 51
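A minimal skip list sketch in Python, to make the lookup idea concrete. It is not the Redis implementation: the class names, MAX_LEVEL, the promotion probability P and the seeded random generator are all assumptions chosen for this illustration, and duplicate members are not handled.

```python
import random


class Node:
    def __init__(self, score, member, level):
        self.score = score
        self.member = member
        self.forward = [None] * level  # forward[i] is the next node on level i


class SkipList:
    MAX_LEVEL = 8  # assumption for the sketch
    P = 0.5        # assumption: chance of promoting a node one level up

    def __init__(self, seed=0):
        self.head = Node(float("-inf"), None, self.MAX_LEVEL)
        self.level = 1
        self.rng = random.Random(seed)

    def _random_level(self):
        level = 1
        while level < self.MAX_LEVEL and self.rng.random() < self.P:
            level += 1
        return level

    def _last_before(self, score, update=None):
        """Walk from the top level down to the last node with a smaller score."""
        node = self.head
        for i in range(self.level - 1, -1, -1):
            while node.forward[i] is not None and node.forward[i].score < score:
                node = node.forward[i]
            if update is not None:
                update[i] = node
        return node

    def insert(self, score, member):
        update = [self.head] * self.MAX_LEVEL
        self._last_before(score, update)
        level = self._random_level()
        self.level = max(self.level, level)
        new = Node(score, member, level)
        for i in range(level):
            new.forward[i] = update[i].forward[i]
            update[i].forward[i] = new

    def search(self, score):
        node = self._last_before(score).forward[0]
        return node.member if node is not None and node.score == score else None

    def range_by_score(self, lo, hi):
        node = self._last_before(lo).forward[0]
        members = []
        while node is not None and node.score <= hi:
            members.append(node.member)
            node = node.forward[0]
        return members
```

A lookup starts on the sparsest level and drops down a level whenever the next node would overshoot, so most nodes are skipped. An ordered linked list would have to visit every node before 51.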

Commands

- zadd <key> <score1> <value1> <score2> <value2> ... adds one or more members and their scores to the sorted set key.

- zrange <key> <start> <stop> [WITHSCORES] returns the elements of the sorted set whose indexes are between <start> and <stop>. With WITHSCORES, the scores are returned together with the values.

- zrangebyscore <key> <min> <max> [WITHSCORES] [LIMIT offset count] returns all members of the sorted set whose scores are between min and max (inclusive), in increasing score order.

- zrevrangebyscore <key> <max> <min> [WITHSCORES] [LIMIT offset count] is the same as above, but ordered from high to low.

- zincrby <key> <increment> <value> adds the increment to the score of the element

- zrem <key> <value> deletes the element with the given value from the set

- zcount <key> <min> <max> counts the elements of the set within the score range

- zrank <key> <value> returns the rank of the value in the set, starting from 0.

##########################################Zset########################################

127.0.0.1:6379> zadd salary 2500 aa 5000 bb 800 cc # Add members with scores

(integer) 3

127.0.0.1:6379> zrange salary 0 -1 # Show all

1) "cc"

2) "aa"

3) "bb"

127.0.0.1:6379> zrangebyscore salary -inf +inf # Ascending order by score; -inf and +inf mean negative and positive infinity

1) "cc"

2) "aa"

3) "bb"

127.0.0.1:6379> zrevrange salary 0 -1 # zrevrange, descending display

1) "bb"

2) "aa"

3) "cc"

127.0.0.1:6379> zrevrange salary 0 -1 withscores # Descending order, displayed with scores

1) "bb"

2) "5000"

3) "aa"

4) "2500"

5) "cc"

6) "800"

127.0.0.1:6379> zrangebyscore salary -inf 3000 withscores # Ascending order over the range (-inf, 3000], displayed with scores

1) "cc"

2) "800"

3) "aa"

4) "2500"

127.0.0.1:6379> zcard salary # Number of members

(integer) 3

127.0.0.1:6379> zrem salary cc # Delete, specify value

(integer) 1

127.0.0.1:6379> zrange salary 0 -1

1) "aa"

2) "bb"

127.0.0.1:6379> zcount salary 5000 6000 # Count the members with scores in the interval [5000, 6000]

Summary:

- A sorted set

- Weighted decisions, for example ordinary messages with weight 1 and important messages with weight 2

Introduction to the Redis configuration file

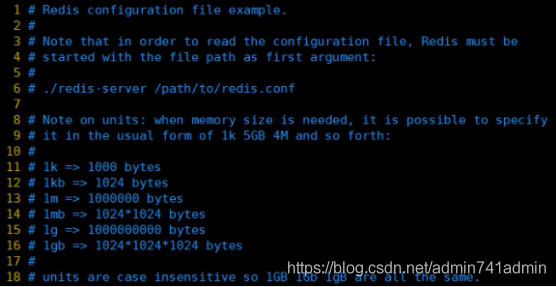

Units

- Size units. Some basic units of measurement are defined at the beginning of the file. Only bytes are supported, not bits, and the units are case-insensitive

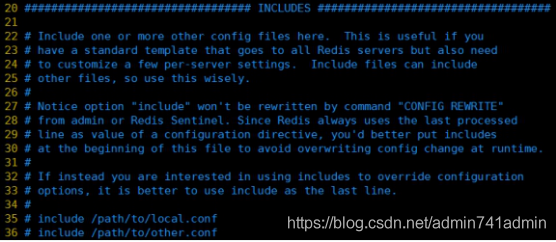

INCLUDES

- Shared configuration can be extracted into separate files and included here

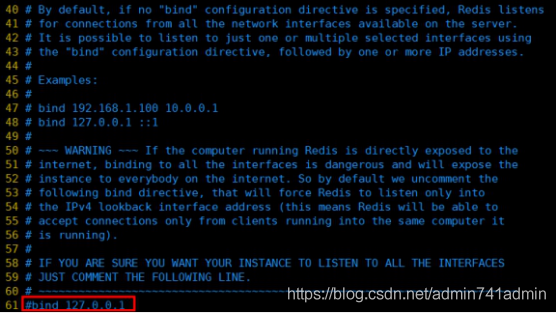

Network related configuration

bind

- By default, bind 127.0.0.1 only accepts requests from the local machine. If the line is omitted, connections from any IP address are accepted without restriction

- In production, write the address of your application server here; if the server needs remote access, the default line has to be commented out

- If protected mode is enabled and neither a bind address nor a password is set, Redis only accepts connections from the local machine

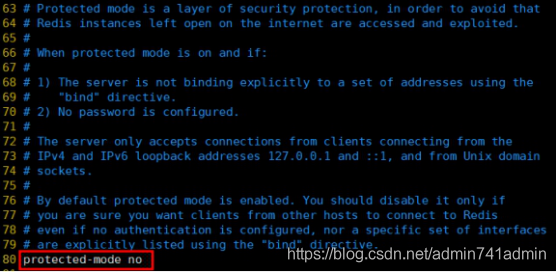

protected-mode

- Protected mode for access; in these notes it is generally set to no

Port

- Port number, 6379 by default

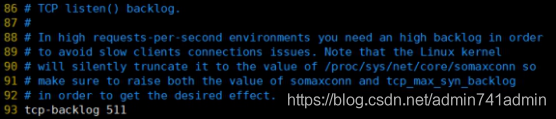

tcp-backlog

- Sets the TCP backlog. The backlog is a connection queue: total backlog = the queue of connections that have not completed the three-way handshake + the queue of connections that have completed it

- Note that the Linux kernel reduces this value to the value of /proc/sys/net/core/somaxconn (128), so make sure to raise both /proc/sys/net/core/somaxconn and /proc/sys/net/ipv4/tcp_max_syn_backlog (128) to get the desired effect

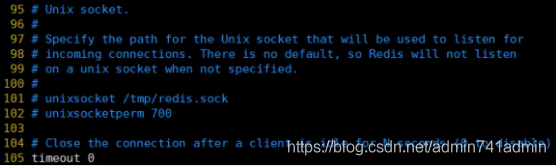

timeout

- Closes the connection after a client has been idle for this many seconds. 0 disables the feature, that is, idle connections are never closed.

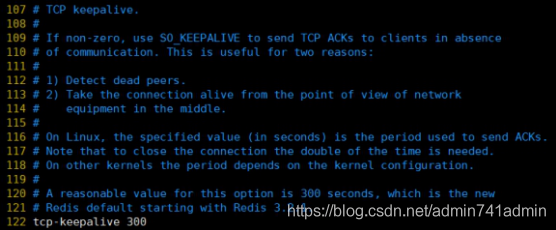

tcp-keepalive

- A heartbeat check on connected clients, performed every n seconds.

- The unit is seconds. If it is set to 0, no keepalive check is performed. The recommended value is 60

GENERAL

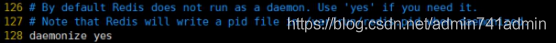

daemonize

- Whether Redis runs as a background process (daemon); set it to yes to start in the background

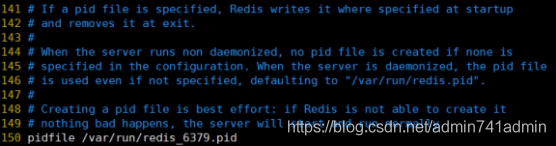

pidfile

- The location where the pid file is stored. Each instance will produce a different pid file

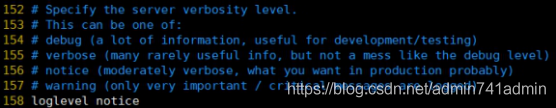

loglevel

- Specifies the logging level. Redis supports four levels: debug, verbose, notice and warning. The default is notice

- Choose the level according to the stage of use; in production, choose notice or warning

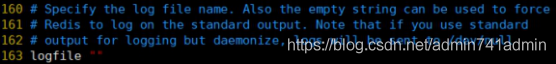

logfile

- Log file name

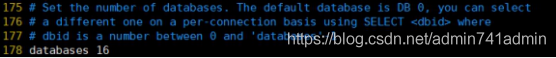

databases 16

- Sets the number of databases, 16 by default; the default database is 0. You can use the SELECT command to choose the database id on a connection

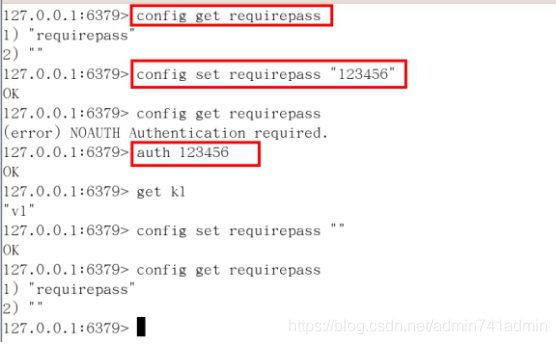

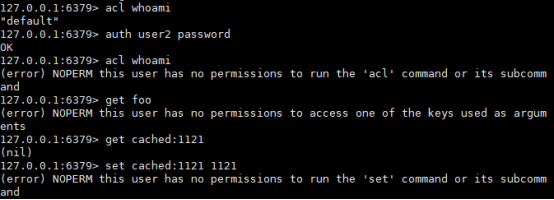

SECURITY

Temporarily set password

- A password set with a command is only temporary. After the Redis server restarts, the password is restored to the value in the configuration file.

Permanently set password

- To set the password permanently, set it in the configuration file.

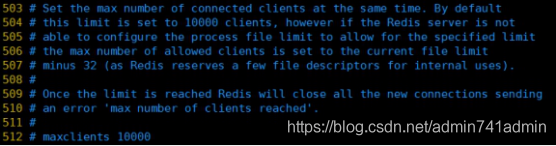

LIMITS

maxclients

- Set how many clients redis can connect to at the same time.

- 10000 clients by default.

- If this limit is reached, redis will reject new connection requests and send "max number of clients reached" to these connection requestors in response.

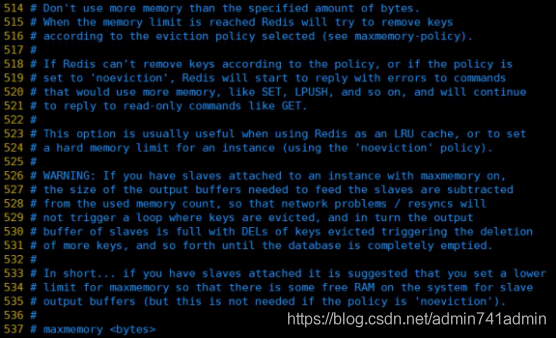

maxmemory

- Setting it is recommended. Otherwise memory can fill up and bring the server down

- Sets the amount of memory Redis can use. Once the limit is reached, Redis tries to remove data; the removal rule is specified through maxmemory-policy.

- If Redis cannot remove data according to the removal rule, or if the rule is set to "no removal allowed", Redis returns an error for commands that need more memory, such as SET and LPUSH.

- Commands that do not need more memory, such as GET, still respond normally. If your Redis is a master (that is, it has slaves), leave some memory free for the replication buffers when setting the limit. This only does not matter when the rule is "no removal".

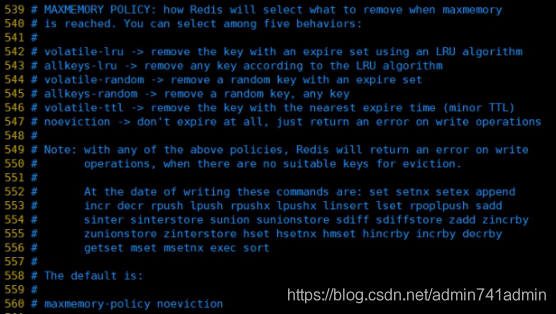

maxmemory-policy

- The removal rule applied once Redis reaches the memory limit

- volatile-lru: remove keys using the LRU (least recently used) algorithm, only among keys that have an expiration time set

- allkeys-lru: remove keys using the LRU algorithm among all keys

- volatile-random: remove random keys, only among keys that have an expiration time set

- allkeys-random: remove random keys among all keys

- volatile-ttl: remove the keys with the smallest TTL, that is, the keys that will expire soonest

- noeviction: remove nothing; write operations just return an error

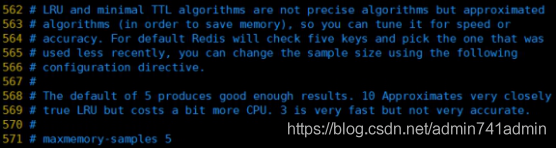

maxmemory-samples

- Sets the sample size. The LRU and minimum-TTL algorithms are not exact but approximate, so the sample size is configurable: Redis checks this many keys and removes the least recently used one among them.

- A value from 3 to 7 is typical. The smaller the value, the less accurate the sampling, but the lower the performance cost.
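The sampling idea can be illustrated with a small sketch in plain Java. It is not Redis's implementation: the class name `SampledLru`, the logical clock, and sampling with replacement are assumptions made only to show why a larger sample gets closer to true LRU at a higher cost per eviction.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical illustration of approximate LRU by sampling (not Redis source code).
public class SampledLru {
    // key -> logical time of last access (larger means more recent)
    private final Map<String, Long> lastAccess = new HashMap<>();
    private final Random random;
    private long clock = 0;

    public SampledLru(long seed) {
        this.random = new Random(seed);
    }

    // Record an access to the key.
    public void touch(String key) {
        lastAccess.put(key, ++clock);
    }

    public int size() {
        return lastAccess.size();
    }

    public boolean contains(String key) {
        return lastAccess.containsKey(key);
    }

    // Draw `samples` random keys (with replacement) and evict the least
    // recently used key among those drawn. Returns the evicted key,
    // or null when there is nothing to evict.
    public String evictOne(int samples) {
        if (lastAccess.isEmpty()) {
            return null;
        }
        List<String> keys = new ArrayList<>(lastAccess.keySet());
        String victim = null;
        for (int i = 0; i < samples; i++) {
            String candidate = keys.get(random.nextInt(keys.size()));
            if (victim == null || lastAccess.get(candidate) < lastAccess.get(victim)) {
                victim = candidate;
            }
        }
        lastAccess.remove(victim);
        return victim;
    }
}
```

With a sample size equal to the number of keys the result approaches exact LRU, while a sample size of 1 degenerates to random eviction.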

Redis publish and subscribe

What are publish and subscribe

- Redis publish/subscribe (pub/sub) is a messaging pattern: the publisher (pub) sends messages and the subscribers (sub) receive them.

- A Redis client can subscribe to any number of channels.

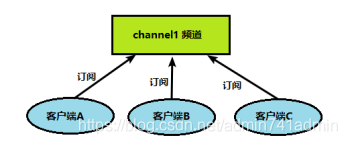

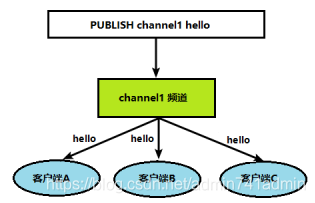

Publish and subscribe to Redis

- The client can subscribe to channels, as shown in the figure below

- When a message is published to this channel, the message will be sent to the subscribed client

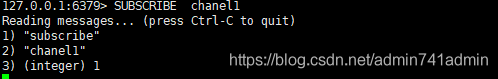

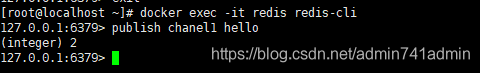

Publish subscribe command line implementation

- A client subscribes to a channel: SUBSCRIBE <channel name>

- Another client publishes a message to the channel: PUBLISH <channel name> <message>

- The subscribed client sees the message

Note: published messages are not persisted. A client that subscribes after the nth message has been published cannot see the nth message; it only receives messages published after it subscribed, starting with the (n+1)th.
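The "not persistent" behaviour in the note can be mimicked with an in-memory sketch. `TinyPubSub` is a hypothetical class written for illustration only; it does not talk to Redis, but like PUBLISH it reports how many subscribers received the message and keeps nothing for late subscribers.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical in-memory model of fire-and-forget publish/subscribe.
public class TinyPubSub {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    // Register a handler for a channel.
    public void subscribe(String channel, Consumer<String> handler) {
        subscribers.computeIfAbsent(channel, c -> new ArrayList<>()).add(handler);
    }

    // Deliver the message to the handlers subscribed right now and return
    // how many received it. The message is not stored anywhere.
    public int publish(String channel, String message) {
        List<Consumer<String>> handlers =
                subscribers.getOrDefault(channel, new ArrayList<>());
        for (Consumer<String> handler : handlers) {
            handler.accept(message);
        }
        return handlers.size();
    }
}
```

A message published before anyone subscribes is simply dropped, which is why a queue or stream is a better fit when messages must not be lost.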

Redis new data type

Bitmaps

concept

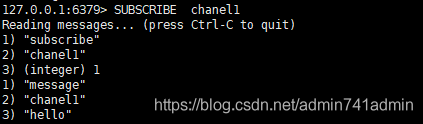

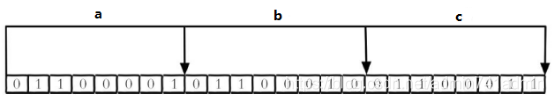

- Modern computers use the binary digit (bit) as the basic unit of information, and one byte equals 8 bits. For example, the string "abc" consists of 3 bytes, but it is stored in binary. The ASCII codes of "a", "b" and "c" are 97, 98 and 99, and the corresponding binary values are 01100001, 01100010 and 01100011, as shown in the figure

- Making good use of bit operations can noticeably improve memory utilization and development efficiency.

- Redis provides Bitmaps, a "data type" that enables bit-level operations:

- Bitmaps is not a data type of its own. It is actually a string (key-value), but the bits of the string can be operated on.

- Bitmaps has its own set of commands, so using Bitmaps in Redis differs from using strings. Bitmaps can be thought of as an array of bits. Each cell of the array can only store 0 or 1, and the array index is called the offset in Bitmaps.

notes

- The user ids of many applications start at a fixed number (e.g. 10000). Mapping the user id directly to the Bitmaps offset wastes space. The common practice is to subtract that fixed number from the user id on every setbit operation.

- When a Bitmaps key is initialized for the first time with a very large offset, the initialization is slow and may block Redis.
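The offset adjustment from the first note can be sketched with `java.util.BitSet`, which behaves like a local array of bits. The class name `SignInBitmap` and the base id 10000 are assumptions for illustration; with Redis the same subtraction would be applied before calling setbit or getbit.

```java
import java.util.BitSet;

// Hypothetical local model of a bitmap keyed by user id.
public class SignInBitmap {
    // Assumed first user id of the application.
    private static final int BASE_USER_ID = 10000;
    private final BitSet bits = new BitSet();

    // Equivalent in spirit to: setbit <key> (userId - BASE_USER_ID) <value>
    public void setBit(int userId, boolean value) {
        bits.set(userId - BASE_USER_ID, value);
    }

    // Equivalent in spirit to: getbit <key> (userId - BASE_USER_ID)
    public boolean getBit(int userId) {
        return bits.get(userId - BASE_USER_ID);
    }

    // Number of bits set to 1, like bitcount over the whole key.
    public int bitCount() {
        return bits.cardinality();
    }

    // Highest offset currently set to 1, or -1 when no bit is set.
    public int highestOffset() {
        return bits.length() - 1;
    }
}
```

Without the subtraction, the first 10000 offsets would never be used, yet the string would still have to be long enough to reach them.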

command

- setbit <key> <offset> <value> sets the value (0 or 1) of an offset in Bitmaps

- getbit <key> <offset> gets the value of an offset in Bitmaps

- bitcount <key> [start end] counts the bits set to 1 in the string, from byte start to byte end

- bitop and|or|not|xor <destkey> [key ...] is a composite operation. It performs and (intersection), or (union), not (negation) or xor (exclusive or) over multiple Bitmaps and saves the result in destkey.

##########################################Bitmap########################################

127.0.0.1:6379> setbit sign 0 1 # Check in on Monday

(integer) 0

127.0.0.1:6379> setbit sign 1 0 # I didn't sign in on Tuesday

(integer) 0

127.0.0.1:6379> setbit sign 2 0 # I didn't sign in on Wednesday

(integer) 0

127.0.0.1:6379> setbit sign 3 1 # Sign in on Thursday

(integer) 0

127.0.0.1:6379> getbit sign 2 # Get Wednesday status

(integer) 0

127.0.0.1:6379> bitcount sign 0 -1 # Total of all 1

(integer) 2

Summary:

Similar to an ordered set (zset), but it is not a zset.

Bitmaps is a data structure that records information by operating on binary bits. Each bit has only two states: 0 and 1.

HyperLogLog

concept

- At work we often meet requirements related to statistics. For example, counting website PV (page views) is easy with Redis incr and incrby. But how do we handle counts that must be de-duplicated, such as UV (unique visitors), the number of distinct IPs, or the number of distinct search terms? The problem of counting the non-repeating elements in a collection is called the cardinality problem

- What is cardinality?

- For example, for the dataset {1, 3, 5, 7, 5, 7, 8}, the set of distinct elements is {1, 3, 5, 7, 8}, and the cardinality (number of non-repeating elements) is 5. Cardinality estimation computes the cardinality quickly within an acceptable error range.

- There are many solutions to the cardinality problem:

- Store the data in a MySQL table and use COUNT(DISTINCT ...) to count the non-duplicate values

- Use the hash, set, bitmaps and other data structures provided by Redis

- The results of the schemes above are exact, but as the data keeps growing they occupy more and more space, which is impractical for very large data sets.

- In Redis, each HyperLogLog key needs only 12 KB of memory to estimate the cardinality of close to 2^64 different elements. This is in sharp contrast to a set, which consumes more memory the more elements it holds.

- However, because HyperLogLog only computes the cardinality from the input elements and does not store the elements themselves, it cannot return the input elements the way a set can.

command

- pfadd <key> <element> [element ...] adds the specified elements to the HyperLogLog. If the estimated cardinality changes after the command executes, it returns 1, otherwise it returns 0.

- pfcount <key> [key ...] computes the approximate cardinality of the HLL. Several HLLs can be counted together. For example, if one HLL stores each day's UV, the weekly UV can be computed by combining the 7 daily HLLs

- pfmerge <destkey> <sourcekey> [sourcekey ...] stores the merged result of one or more HLLs in another HLL. For example, monthly active users can be computed by merging the daily active users
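To make clear what these three commands approximate, here is an exact counterpart built on `HashSet`. `ExactCounter` is a hypothetical helper, not a HyperLogLog: it stores every element, so its memory grows with the data, which is precisely the cost that HyperLogLog avoids by accepting a small error.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical exact counter mirroring pfadd / pfcount / pfmerge semantics.
public class ExactCounter {
    private final Set<String> seen = new HashSet<>();

    // Like pfadd: returns true when the cardinality changed.
    public boolean add(String... elements) {
        return seen.addAll(Arrays.asList(elements));
    }

    // Like pfcount on a single key, but exact.
    public int count() {
        return seen.size();
    }

    // Like pfmerge: the result counts each distinct element once.
    public static ExactCounter merge(ExactCounter... sources) {
        ExactCounter dest = new ExactCounter();
        for (ExactCounter source : sources) {
            dest.seen.addAll(source.seen);
        }
        return dest;
    }
}
```

Feeding it the elements a through j and then i, j, z, x, c, v, b, n, m gives counts of 10 and 9, and a merged count of 15, because i, j, c and b occur in both groups.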

##########################################Hyperloglog########################################

127.0.0.1:6379> pfadd mykey a b c d e f g h i j # Add a set of elements

(integer) 1

127.0.0.1:6379> pfcount mykey # Count a set of elements

(integer) 10

127.0.0.1:6379> PFadd mykey2 i j z x c v b n m

(integer) 1

127.0.0.1:6379> pfcount mykey2

(integer) 9

127.0.0.1:6379> pfmerge mykey3 mykey mykey2 # Merge: mykey3 = mykey + mykey2, de-duplicated

OK

127.0.0.1:6379> pfcount mykey3

(integer) 15

Summary:

Similar to an unordered set, but it is not a set.

Web page UV (a person who visits a website many times still counts as one person).

Advantages: the occupied memory is fixed. Counting up to 2^64 different elements takes only 12 KB of memory. Compared on memory alone, HyperLogLog is the first choice.

The error rate is 0.81%. If some error is acceptable, use HyperLogLog. If it is not, use a set or your own data type.

Geospatial

concept

- Redis 3.2 added support for the GEO type. GEO is short for geographic information. An element of this type is a 2-dimensional coordinate, that is, a longitude and latitude on the map. Based on this type, Redis provides common operations such as setting and querying coordinates, range queries, distance queries and geohash of coordinates.

command

- geoadd <key> <longitude> <latitude> <member> [longitude latitude member ...] adds geographic locations (longitude, latitude, name)

- Valid longitudes range from -180 to 180 degrees. Valid latitudes range from -85.05112878 to 85.05112878 degrees. When a coordinate is outside these ranges, the command returns an error.

- A member that has already been added is not added a second time.

- The two poles cannot be added directly. In practice, city data is usually downloaded and imported in one go by a Java program.

- geopos <key> <member> [member ...] gets the coordinates of the specified members

- Geodist < key > < member1 > < member2 > [m|km|ft|mi] obtain the linear distance between two positions

- m is in meters [default].

- km is expressed in kilometers.

- mi is in miles.

- ft is in feet.

- If you do not explicitly specify the unit parameter, GEODIST defaults to meters

- georadius <key> <longitude> <latitude> <radius> m|km|ft|mi finds the elements within the given radius, with the given longitude and latitude as the center
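As a rough cross-check of what a distance query computes, the straight-line (great-circle) distance between two coordinates can be sketched with the haversine formula. The class `GeoDistance` and the Earth radius constant are assumptions for this sketch, so results may differ slightly from what geodist prints.

```java
// Hypothetical helper illustrating a haversine distance calculation.
public class GeoDistance {
    // Assumed Earth radius in meters for this sketch.
    private static final double EARTH_RADIUS_M = 6372797.560856;

    // Great-circle distance in meters between two (longitude, latitude) points.
    public static double distanceMeters(double lon1, double lat1,
                                        double lon2, double lat2) {
        double phi1 = Math.toRadians(lat1);
        double phi2 = Math.toRadians(lat2);
        double dPhi = Math.toRadians(lat2 - lat1);
        double dLambda = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dPhi / 2) * Math.sin(dPhi / 2)
                + Math.cos(phi1) * Math.cos(phi2)
                * Math.sin(dLambda / 2) * Math.sin(dLambda / 2);
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }

    // Range check matching the valid longitude and latitude limits listed above.
    public static boolean validCoordinate(double lon, double lat) {
        return lon >= -180 && lon <= 180
                && lat >= -85.05112878 && lat <= 85.05112878;
    }

    public static void main(String[] args) {
        // Two points given as (longitude, latitude).
        double meters = distanceMeters(121.47, 31.23, 120.15, 30.28);
        System.out.printf("%.4f km%n", meters / 1000.0);
    }
}
```

The coordinate order is longitude first, then latitude, the same order geoadd expects.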

##########################################Geospatial########################################

127.0.0.1:6379> geoadd china:city 121.47 31.23 shanghai 120.15 30.28 hangzhou 113.27 23.13 gaungzhou # Add single / multiple

(integer) 3

127.0.0.1:6379> zrange china:city 0 -1 # The underlying implementation principle of geo is actually Zset! We can use the Zset command to operate geo!

1) "gaungzhou"

2) "hangzhou"

3) "shanghai"

4) "beijing"

127.0.0.1:6379> geopos china:city beijing hangzhou # Gets the longitude and latitude of the specified city

1) 1) "116.39999896287918091"

2) "39.90000009167092543"

2) 1) "120.15000075101852417"

2) "30.2800007575645509"

127.0.0.1:6379> geodist china:city shanghai hangzhou km # Distance between two member s, in km

"164.5694"

127.0.0.1:6379> georadius china:city 110 30 1000 km withdist withcoord count 1 # With longitude 110, latitude 30 as the center, find the elements within a radius of 1000 km, returning at most 1 result

1) 1) "gaungzhou"

2) "830.3533"

3) 1) "113.27000051736831665"

2) "23.13000101271457254"

127.0.0.1:6379> georadiusbymember china:city hangzhou 1000 km withdist withcoord # Take hangzhou as the center and find the members within 1000 km. withdist shows the straight-line distance between the two points and withcoord shows the longitude and latitude of each member

1) 1) "hangzhou"

2) "0.0000"

3) 1) "120.15000075101852417"

2) "30.2800007575645509"

2) 1) "shanghai"

2) "164.5694"

3) 1) "121.47000163793563843"

2) "31.22999903975783553"

Summary:

The underlying implementation is a zset (ordered set).

Typical uses: distance between two points, people nearby.

Geographic locations: valid longitudes range from -180 to 180 degrees and valid latitudes from -85.05112878 to 85.05112878 degrees. When a coordinate is outside these ranges, the command returns an error.

Redis_Jedis test

concept

- Jedis is the Java client officially recommended by Redis for connecting to and developing against Redis. To operate Redis from Java, you should be very familiar with Jedis.

jar package required by Jedis

<dependency>
    <groupId>redis.clients</groupId>
    <artifactId>jedis</artifactId>
    <version>3.2.0</version>
</dependency>

Precautions for connecting to Redis

- In redis.conf, comment out bind 127.0.0.1 and set protected-mode no

- Disable the Linux firewall: for example, on Linux (CentOS 7) run systemctl stop firewalld.service and systemctl disable firewalld.service

Test related data types

public void f1(){

Jedis jedis = new Jedis("192.168.56.10", 6379);

System.out.println(jedis.ping());

}

public void f2(){

Jedis jedis = new Jedis("192.168.56.10", 6379);

jedis.set("k1", "v1");

jedis.set("k2", "v2");

jedis.set("k3", "v3");

Set<String> keys = jedis.keys("*");

System.out.println(keys.size());

for (String key : keys) {

System.out.println(key);

}

// 3

//k3

//k1

//k2

}

public void f3(){

Jedis jedis = new Jedis("192.168.56.10", 6379);

jedis.lpush("mylist","aa");

jedis.lpush("mylist","bb");

List<String> list = jedis.lrange("mylist",0,-1);

for (String element : list) {

System.out.println(element);

}

// bb

//aa

}

Redis_Jedis instance

import java.util.Random;

import redis.clients.jedis.Jedis;

public class phone {

public static void main(String[] args) {

//Analog verification code transmission

verifyCode("111666");

}

public static void getRedisCode(String phone,String code){

//Connect to redis

Jedis jedis=new Jedis("192.168.56.10",6379);

//Verification code key

String codeKey="VerifyCode"+phone+":code";

String redisCode=jedis.get(codeKey);

//Compare; redisCode is null when the code has expired or was never sent

if(redisCode != null && redisCode.equals(code)){

System.out.println("success");

}else{

System.out.println("lose");

}

jedis.close();

}

//Each phone number can request a code only three times a day. The verification code is stored in redis with an expiration time of 120 seconds

public static void verifyCode(String phone){

//Connect to redis

Jedis jedis=new Jedis("192.168.56.10",6379);

//Splice key

//Number of times sent by mobile phone key

String countKey="VerifyCode"+phone+":count";

//Verification code key

String codeKey="VerifyCode"+phone+":code";

String count=jedis.get(countKey);

if(count==null){

jedis.setex(countKey,24*60*60,"1");

}else if(Integer.parseInt(count)<=2){

jedis.incr(countKey);

}else if(Integer.parseInt(count)>2){

System.out.println("More than three times");

jedis.close();

//Stop here so that no new code is generated on the closed connection

return;

}

String vscode = getcode();

jedis.setex(codeKey,120,vscode);

jedis.close();

}

public static String getcode(){

Random random=new Random();

String code="";

for(int i=0;i<6;i++){

int rand = random.nextInt(10);

code+=rand;

}

return code;

}

}

Redis and Spring Boot integration

- Add the integration dependencies

<!-- redis -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>
<!-- Spring Boot 2.x needs commons-pool2 to integrate redis -->
<dependency>
    <groupId>org.apache.commons</groupId>
    <artifactId>commons-pool2</artifactId>
    <version>2.6.0</version>
</dependency>

- redis configuration (properties file)

# Redis server address
spring.redis.host=192.168.140.136
# Redis server connection port
spring.redis.port=6379
# Redis database index (0 by default)
spring.redis.database=0
# Connection timeout (ms)
spring.redis.timeout=1800000
# Maximum number of connections in the connection pool (a negative value means no limit)
spring.redis.lettuce.pool.max-active=20
# Maximum blocking wait time (a negative value means no limit)
spring.redis.lettuce.pool.max-wait=-1
# Maximum idle connections in the connection pool
spring.redis.lettuce.pool.max-idle=5
# Minimum idle connections in the connection pool
spring.redis.lettuce.pool.min-idle=0

- redis configuration class

@EnableCaching

@Configuration

public class RedisConfig extends CachingConfigurerSupport {

@Bean

public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory factory) {

RedisTemplate<String, Object> template = new RedisTemplate<>();

RedisSerializer<String> redisSerializer = new StringRedisSerializer();

Jackson2JsonRedisSerializer jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);

ObjectMapper om = new ObjectMapper();

om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);

om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);

jackson2JsonRedisSerializer.setObjectMapper(om);

template.setConnectionFactory(factory);

//key serialization method

template.setKeySerializer(redisSerializer);

//value serialization

template.setValueSerializer(jackson2JsonRedisSerializer);

//value hashmap serialization

template.setHashValueSerializer(jackson2JsonRedisSerializer);

return template;

}

@Bean

public CacheManager cacheManager(RedisConnectionFactory factory) {

RedisSerializer<String> redisSerializer = new StringRedisSerializer();

Jackson2JsonRedisSerializer jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer(Object.class);

//Solve the problem of query cache conversion exception

ObjectMapper om = new ObjectMapper();

om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);

om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);

jackson2JsonRedisSerializer.setObjectMapper(om);

// Configure serialization (solve the problem of garbled code), and the expiration time is 600 seconds

RedisCacheConfiguration config = RedisCacheConfiguration.defaultCacheConfig()

.entryTtl(Duration.ofSeconds(600))

.serializeKeysWith(RedisSerializationContext.SerializationPair.fromSerializer(redisSerializer))

.serializeValuesWith(RedisSerializationContext.SerializationPair.fromSerializer(jackson2JsonRedisSerializer))

.disableCachingNullValues();

RedisCacheManager cacheManager = RedisCacheManager.builder(factory)

.cacheDefaults(config)

.build();

return cacheManager;

}

}

- Test code

@RestController

@RequestMapping("/redisTest")

public class RedisTestController {

@Autowired

private RedisTemplate redisTemplate;

@GetMapping

public String testRedis() {

//Set value to redis

redisTemplate.opsForValue().set("name","lucy");

//Get value from redis

String name = (String)redisTemplate.opsForValue().get("name");

return name;

}

}

Redis_Transaction lock mechanism

Redis transaction definition

- A Redis transaction is a single isolated operation: all commands in the transaction are serialized and executed in order. While the transaction executes, it is not interrupted by command requests from other clients.

- The main purpose of a Redis transaction is to chain multiple commands together so that other commands cannot cut in.

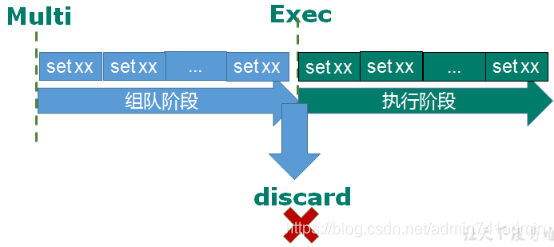

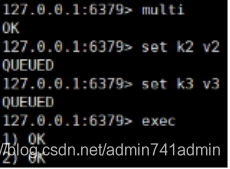

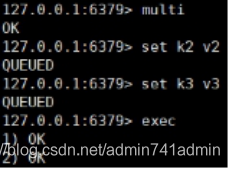

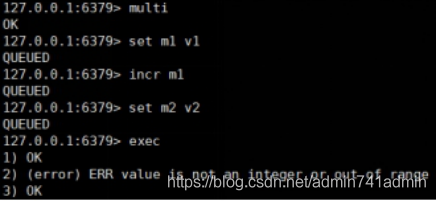

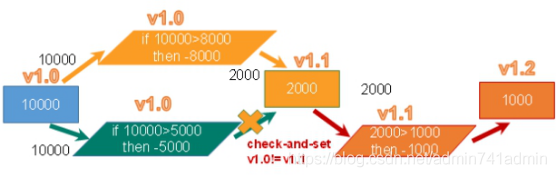

Multi,Exec,discard

- After the Multi command is entered, the commands that follow are placed in the command queue in order but are not executed. When Exec is entered, Redis executes the queued commands in order.

- While commands are being queued, the queue can be abandoned with discard

case

- Queuing and execution both succeed

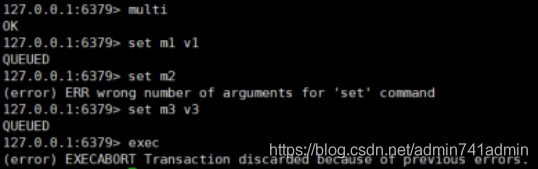

- An error is reported in the queuing stage, so execution fails

- Queuing succeeds, and execution has both successes and failures

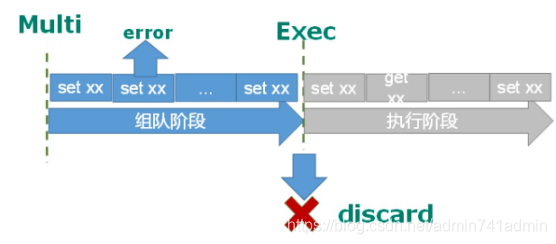

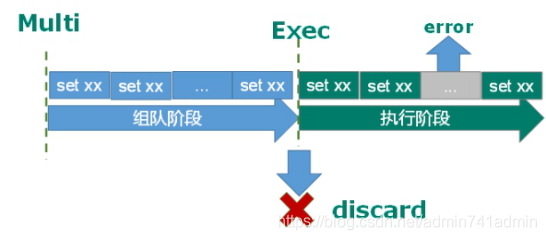

Failure handling of transactions

- If a command is reported as an error while it is being queued, the whole queue is cancelled at execution time.

- If a command reports an error at the execution stage, only that command fails. The other commands are still executed and nothing is rolled back.
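The two failure modes can be modelled with a toy command queue. `TinyTransaction` is a hypothetical class that supports only "set" and "incr" and returns null where Redis would report an aborted transaction. It is a sketch of the behaviour described above, not of how Redis is implemented.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical model of MULTI/EXEC error handling over an in-memory map.
public class TinyTransaction {
    private final Map<String, String> store;
    private final List<String[]> queue = new ArrayList<>();
    private boolean queueError = false;

    public TinyTransaction(Map<String, String> store) {
        this.store = store;
    }

    // Queuing stage: a malformed command marks the whole transaction as failed.
    public String enqueue(String... cmd) {
        boolean wellFormed = (cmd[0].equals("set") && cmd.length == 3)
                || (cmd[0].equals("incr") && cmd.length == 2);
        if (!wellFormed) {
            queueError = true;
            return "ERR";
        }
        queue.add(cmd);
        return "QUEUED";
    }

    // Execution stage: after a queuing error nothing runs (null is returned).
    // Otherwise every command runs; a runtime failure is recorded in the
    // results and the remaining commands still execute, with no rollback.
    public List<String> exec() {
        if (queueError) {
            return null;
        }
        List<String> results = new ArrayList<>();
        for (String[] cmd : queue) {
            if (cmd[0].equals("set")) {
                store.put(cmd[1], cmd[2]);
                results.add("OK");
            } else {
                try {
                    long next = Long.parseLong(store.getOrDefault(cmd[1], "0")) + 1;
                    store.put(cmd[1], Long.toString(next));
                    results.add(Long.toString(next));
                } catch (NumberFormatException e) {
                    results.add("ERR value is not an integer");
                }
            }
        }
        return results;
    }
}
```

Queuing set k1 v1, incr k1, set k2 v2 and then executing leaves k2 set even though the incr fails, whereas one malformed command during queuing prevents every queued command from running.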

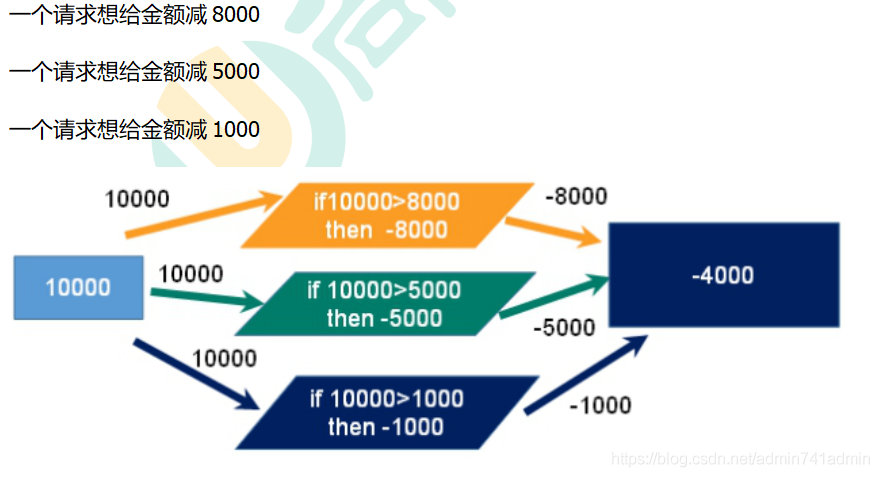

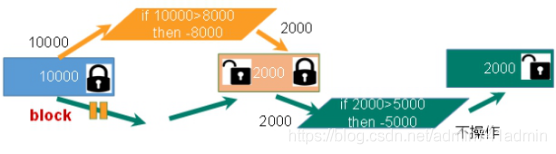

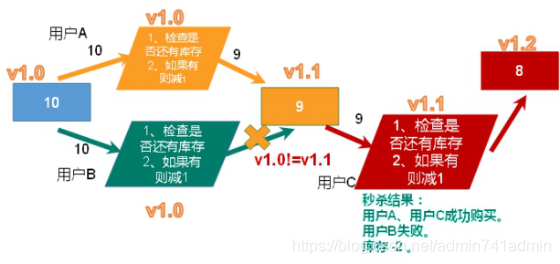

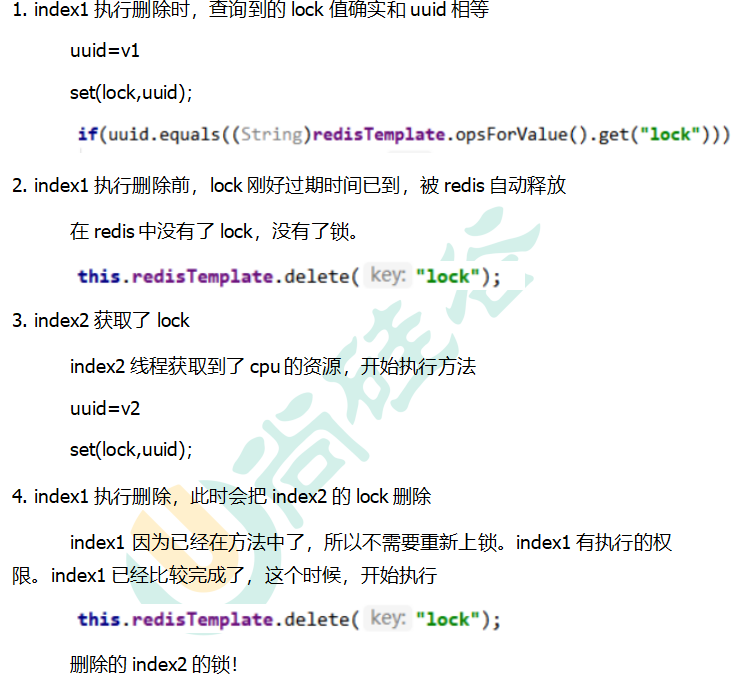

Problem of transaction conflict

example

Pessimistic lock

- A pessimistic lock, as the name suggests, is pessimistic: every time it reads data it assumes that someone else will modify it, so it locks the data on every read, and anyone else who wants the data blocks until they obtain the lock. Traditional relational databases use many such mechanisms, for example row locks, table locks, read locks and write locks, all of which lock before operating.

Optimistic lock

- An optimistic lock, as the name suggests, is optimistic: every time it reads data it assumes that nobody else will modify it, so it does not lock. When updating, however, it checks whether anyone else has updated the data in the meantime, for example by means of a version number. Optimistic locking suits read-heavy applications and improves throughput. Redis uses this check-and-set mechanism to implement transactions.
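The version-number check can be sketched in a few lines of Java. `VersionedValue` is a hypothetical class used only to show the check-and-set idea: a writer remembers the version it read, and its update is rejected if the version has moved on since then.

```java
// Hypothetical illustration of optimistic locking with a version number.
public class VersionedValue {
    private int value;
    private long version = 0;

    public VersionedValue(int initial) {
        this.value = initial;
    }

    public synchronized long version() {
        return version;
    }

    public synchronized int value() {
        return value;
    }

    // Apply the update only if nobody changed the value since the caller
    // read `expectedVersion`. Returns false when the update is rejected.
    public synchronized boolean updateIfUnchanged(long expectedVersion, int newValue) {
        if (version != expectedVersion) {
            return false;
        }
        value = newValue;
        version++;
        return true;
    }
}
```

If two clients read the same version and both try to write, the first write succeeds and the second is rejected, so the second client has to re-read and retry or give up.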

WATCH key [key ...]

unwatch

- Cancels the monitoring of all keys set by the WATCH command.

- If the EXEC command or DISCARD command is executed first after the WATCH command is executed, there is no need to execute UNWATCH.

- http://doc.redisfans.com/transaction/exec.html

Three features of Redis transactions

- Isolated operation

- All commands in a transaction are serialized and executed in order. While the transaction executes, it is not interrupted by command requests from other clients.

- No concept of isolation level

- The commands in the queue are not actually executed until the transaction is submitted, so nothing runs before the submission

- Atomicity is not guaranteed

- If a command in a transaction fails at execution time, the subsequent commands are still executed and nothing is rolled back

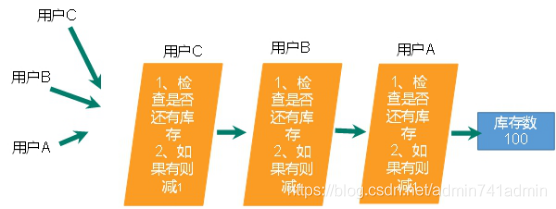

Redis transaction flash sale case (seckill, translated literally below as "second kill")

Second kill concurrency simulation

//Second kill process

public static boolean doSecKill(String uid,String prodid) throws IOException {

//1. Judgment of uid and prodid non null

if(uid == null || prodid == null) {

return false;

}

//Get jedis object through connection pool

JedisPool jedisPoolInstance = JedisPoolUtil.getJedisPoolInstance();

Jedis jedis = jedisPoolInstance.getResource();

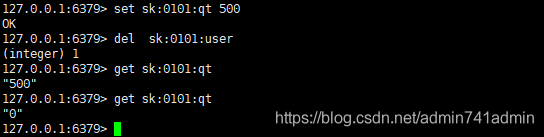

//3 splicing key

// 3.1 inventory key

String kcKey = "sk:"+prodid+":qt";

// 3.2 second kill successful user key

String userKey = "sk:"+prodid+":user";

//4. Get the inventory. If the inventory is null, the second kill has not started yet

String kc = jedis.get(kcKey);

if(kc == null) {

System.out.println("The second kill hasn't started yet, please wait");

jedis.close();

return false;

}

// 5 judge whether the user repeats the second kill operation

if(jedis.sismember(userKey, uid)) {

System.out.println("The second kill has been successful. You can't repeat the second kill");

jedis.close();

return false;

}

//6. Judge if the commodity quantity and inventory quantity are less than 1, the second kill is over

if(Integer.parseInt(kc)<=0) {

System.out.println("The second kill is over");

jedis.close();

return false;

}

//7.1 inventory-1

jedis.decr(kcKey);

//7.2 add successful users to the list

jedis.sadd(userKey,uid);

System.out.println("The second kill succeeded..");

jedis.close();

return true;

}

Connection timeout, solved by connection pool

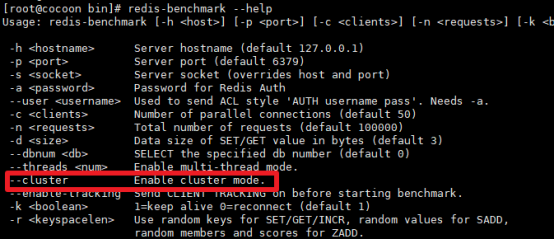

Installing ab (concurrency test tool)

With network access

- yum install httpd-tools

Without network access: omitted

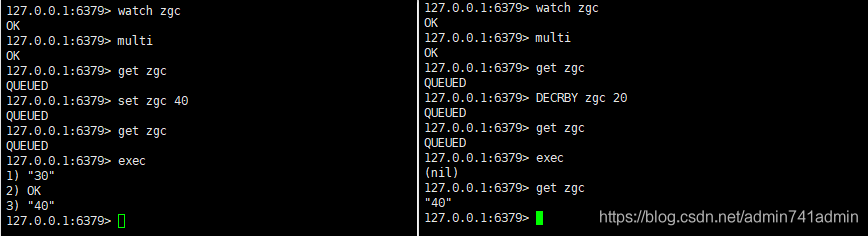

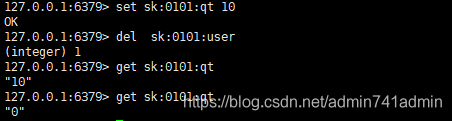

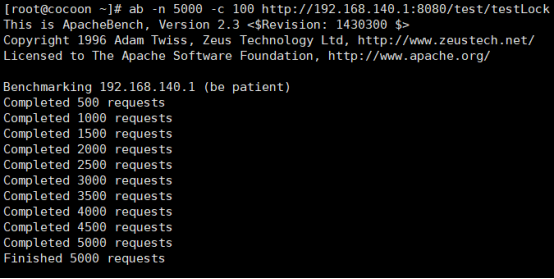

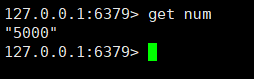

Oversold problem - simulation

ab test

- vim postfile: create a file that simulates the form submission parameters, ending with the & symbol, and store it in the current directory.

- Content: prodid=0101&

- ab -n 2000 -c 200 -k -p ./postfile -T application/x-www-form-urlencoded http://192.168.3.157:8080/Seckill/doseckill

Oversold display

Oversold problem - solve 1 - optimistic lock

- Use an optimistic lock to reject conflicting user requests and solve the oversold problem.

//Second kill process

public static boolean doSecKill(String uid,String prodid) throws IOException {

//1. Judgment of uid and prodid non null

if(uid == null || prodid == null) {

return false;

}

//2. Connect to redis

//Jedis jedis = new Jedis("192.168.44.168",6379);

//Get jedis object through connection pool

JedisPool jedisPoolInstance = JedisPoolUtil.getJedisPoolInstance();

Jedis jedis = jedisPoolInstance.getResource();

//3 splicing key

// 3.1 inventory key

String kcKey = "sk:"+prodid+":qt";

// 3.2 second kill successful user key

String userKey = "sk:"+prodid+":user";

//Monitor inventory

jedis.watch(kcKey);

//4. Get the inventory. If the inventory is null, the second kill has not started yet

String kc = jedis.get(kcKey);

if(kc == null) {

System.out.println("The second kill hasn't started yet, please wait");

jedis.close();

return false;

}

// 5 judge whether the user repeats the second kill operation

if(jedis.sismember(userKey, uid)) {

System.out.println("The second kill has been successful. You can't repeat the second kill");

jedis.close();

return false;

}

//6. Judge if the commodity quantity and inventory quantity are less than 1, the second kill is over

if(Integer.parseInt(kc)<=0) {

System.out.println("The second kill is over");

jedis.close();

return false;

}

//7 second kill process

// //Use transaction

Transaction multi = jedis.multi();

//Team operation

multi.decr(kcKey);

multi.sadd(userKey,uid);

//implement

List<Object> results = multi.exec();

if(results == null || results.size()==0) {

System.out.println("The second kill failed....");

jedis.close();

return false;

}

// //7.1 inventory-1

// jedis.decr(kcKey);

// //7.2 add successful users to the list

// jedis.sadd(userKey,uid);

System.out.println("The second kill succeeded..");

jedis.close();

return true;

}

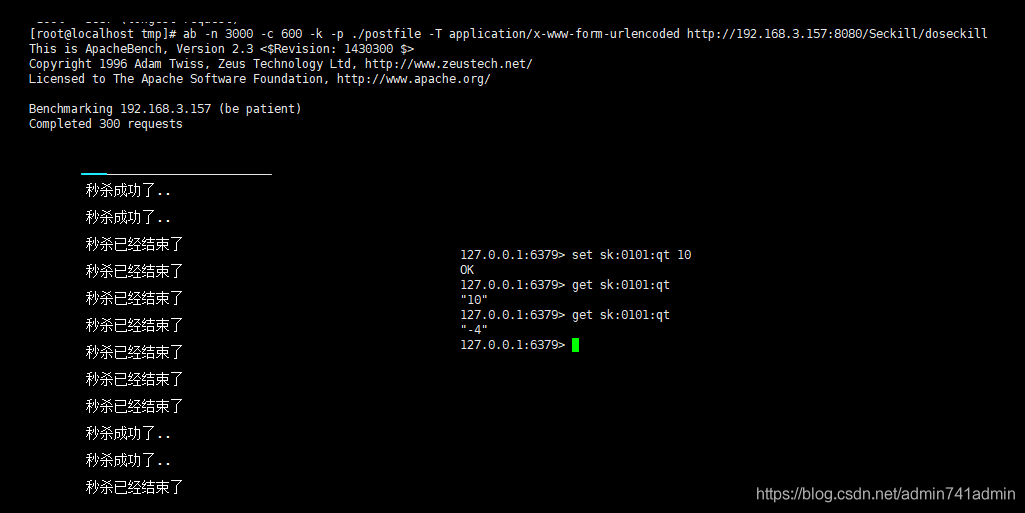

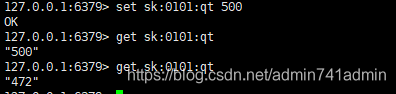

Leftover inventory problem

- The second kill has finished, but there is still inventory. The reason is that the optimistic lock makes many requests fail: an earlier request may fail while a later one still succeeds.

LUA script

- Lua is a small scripting language. Lua scripts can easily be called from C/C++ code and can in turn call C/C++ functions. Lua does not ship with a large library, and a complete Lua interpreter is only about 200 KB, so Lua is better suited as an embedded scripting language than for developing standalone applications.

- Complex or multi-step redis operations can be written as one script and submitted to redis for execution in a single call, which reduces the number of round trips to redis and improves performance.

- Lua scripts are similar to redis transactions: they are atomic to a degree, other commands cannot cut in while a script runs, and they can carry out transaction-like redis operations.

- Note that Lua scripting is only available in redis 2.6 and later. From version 2.6 on, contention problems can be solved with Lua scripts. In effect, redis relies on its single-threaded execution and a task queue to handle concurrent requests.
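Why an uninterrupted check-and-decrement prevents overselling can be shown without Redis. In the sketch below, `synchronized` stands in for the guarantee that a script is not interleaved with other commands. The class `AtomicSeckill`, its return codes (2 = already bought, 0 = sold out, 1 = success) and the `simulate` helper are assumptions for illustration.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical local model of an atomic flash-sale purchase step.
public class AtomicSeckill {
    private int stock;
    private final Set<String> winners = new HashSet<>();

    public AtomicSeckill(int stock) {
        this.stock = stock;
    }

    // The check and the update happen as one uninterruptible step.
    public synchronized int tryBuy(String userId) {
        if (winners.contains(userId)) {
            return 2; // this user already bought
        }
        if (stock <= 0) {
            return 0; // sold out
        }
        stock--;
        winners.add(userId);
        return 1; // success
    }

    public synchronized int stock() {
        return stock;
    }

    // Fire `requests` purchases from distinct users on `threads` threads
    // and return how many of them succeeded.
    public static int simulate(int initialStock, int requests, int threads) {
        AtomicSeckill sale = new AtomicSeckill(initialStock);
        AtomicInteger successes = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < requests; i++) {
            final String uid = "user" + i;
            pool.execute(() -> {
                if (sale.tryBuy(uid) == 1) {
                    successes.incrementAndGet();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return successes.get();
    }
}
```

Because no request can observe the stock between another request's check and decrement, the number of successes never exceeds the initial stock, and no stock is left over while buyers are still arriving.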

Leftover inventory - solution 2

- Solves the oversold problem

- Solves the leftover inventory problem

- lua script

local userid=KEYS[1];

local prodid=KEYS[2];

local qtkey="sk:"..prodid..":qt";

local usersKey="sk:"..prodid..":usr";

local userExists=redis.call("sismember",usersKey,userid);

if tonumber(userExists)==1 then

return 2;

end

local num= redis.call("get" ,qtkey);

if tonumber(num)<=0 then

return 0;

else

redis.call("decr",qtkey);

redis.call("sadd",usersKey,userid);

end

return 1;

- code

static String secKillScript ="local userid=KEYS[1];\r\n" +

"local prodid=KEYS[2];\r\n" +

"local qtkey='sk:'..prodid..\":qt\";\r\n" +

"local usersKey='sk:'..prodid..\":usr\";\r\n" +

"local userExists=redis.call(\"sismember\",usersKey,userid);\r\n" +

"if tonumber(userExists)==1 then \r\n" +

" return 2;\r\n" +

"end\r\n" +

"local num= redis.call(\"get\" ,qtkey);\r\n" +

"if tonumber(num)<=0 then \r\n" +

" return 0;\r\n" +

"else \r\n" +

" redis.call(\"decr\",qtkey);\r\n" +

" redis.call(\"sadd\",usersKey,userid);\r\n" +

"end\r\n" +

"return 1" ;

static String secKillScript2 =

"local userExists=redis.call(\"sismember\",\"{sk}:0101:usr\",userid);\r\n" +

" return 1";

public static boolean doSecKill(String uid,String prodid) throws IOException {

JedisPool jedispool = JedisPoolUtil.getJedisPoolInstance();

Jedis jedis=jedispool.getResource();

//String sha1= .secKillScript;

String sha1= jedis.scriptLoad(secKillScript);

Object result= jedis.evalsha(sha1, 2, uid,prodid);

String reString=String.valueOf(result);

if ("0".equals( reString ) ) {

System.err.println("Empty!!");

}else if("1".equals( reString ) ) {

System.out.println("Rush purchase succeeded!!!!");

}else if("2".equals( reString ) ) {

System.err.println("The user has robbed!!");

}else{

System.err.println("Panic buying exception!!");

}

jedis.close();

return true;

}

- result

summary

- Version 1: clicking 10 times by hand, the second kill works normally

- Simulating concurrency with the ab tool, overselling occurs: the inventory shows a negative number.

- Version 2: add a transaction with an optimistic lock (solves overselling), but leftover inventory and connection timeouts remain

- Version 3: a connection pool solves the timeout problem

- Version 4: a Lua script solves the leftover inventory problem

RDB of Redis persistence

concept

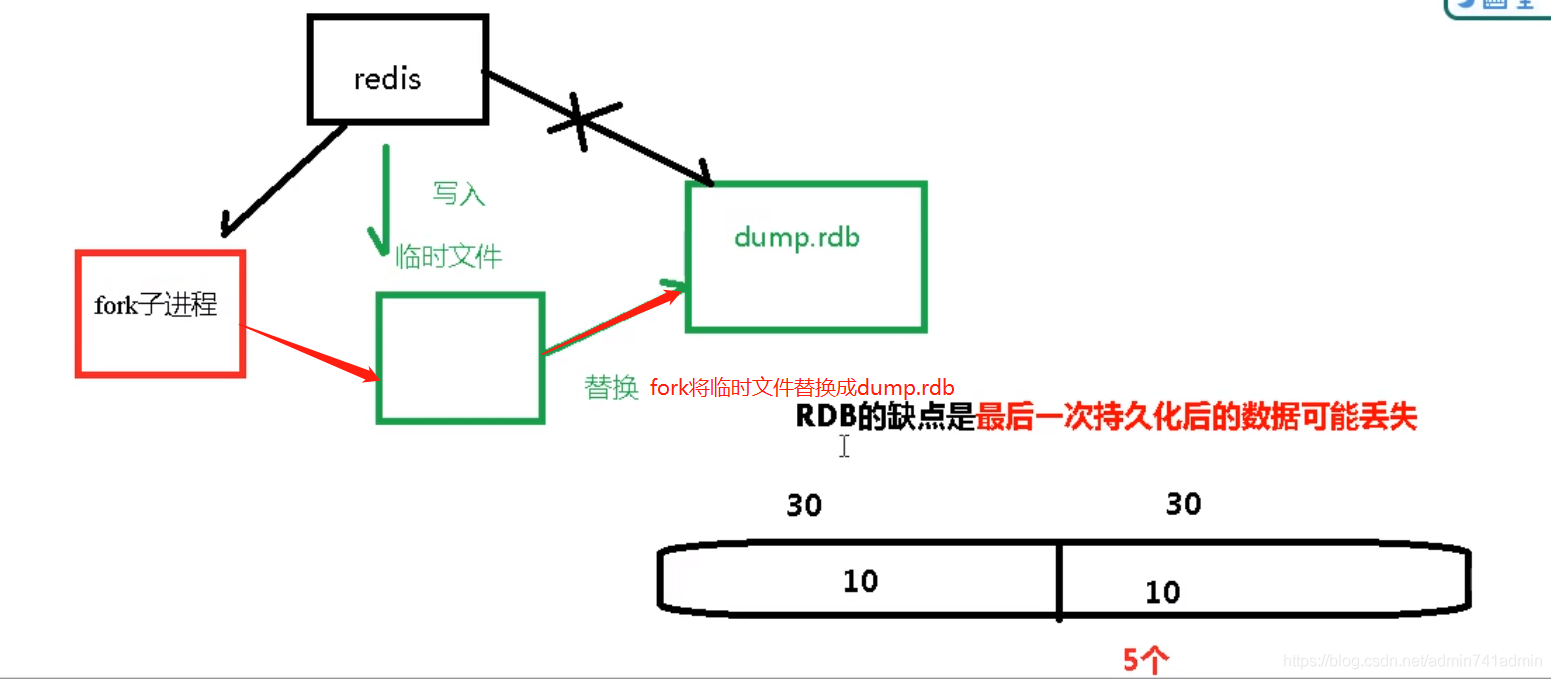

- Writes a snapshot of the in-memory data set to disk at specified time intervals (a Snapshot, in the jargon). On recovery, the snapshot file is read directly into memory.

- advantage

- Suitable for large-scale data recovery

- A good fit when the requirements for data integrity and consistency are not high

- Saves disk space

- Fast recovery

How is backup performed

- Redis creates (forks) a separate child process for persistence. The child first writes the data to a temporary file. When the persistence run completes, the temporary file replaces the previous persisted file. During the whole process the main process performs no IO operations, which ensures high performance. If large-scale data recovery is needed and the integrity of the recovered data is not critical, RDB is more efficient than AOF. The disadvantage of RDB is that data written after the last persistence run may be lost.

Fork

- Fork creates a copy of the current process. All data of the new process (variables, environment variables, program counter, etc.) have the same values as in the original process, but it is a brand-new process and runs as a child of the original process

- In Linux programs, fork() produces a child process identical to the parent, but the child often makes an exec system call right afterwards. For efficiency, Linux introduced the "copy-on-write" technique

- In general, the parent and child processes share the same physical memory. Only when a section of the process space is modified is the parent's content for that section copied for the child.

configuration file

    ################################ SNAPSHOTTING ################################
    #
    # Save the DB on disk:
    #
    #   save <seconds> <changes>
    #
    # The database is saved if both the given number of seconds and the given
    # number of write operations against the database have occurred.
    #
    # With the example below, the database is saved:
    #   after 900 seconds (15 minutes) if at least 1 key changed
    #   after 300 seconds (5 minutes) if at least 10 keys changed
    #   after 60 seconds (1 minute) if at least 10000 keys changed
    #
    # Note: you can disable saving entirely by commenting out all "save" lines.
    #
    # You can also remove all previously configured save points by adding a
    # save directive with a single empty string argument, as in this example:
    #
    #   save ""

    save 900 1
    save 300 10
    save 60 10000

    # By default, if RDB snapshotting is enabled (at least one save point is
    # configured) and the latest background save failed, Redis stops accepting
    # writes.
    # This makes the user aware that data is not being persisted to disk
    # correctly; otherwise nobody might notice and a disaster could happen.
    #
    # If the background saving process starts working again, Redis
    # automatically allows writes again.
    #
    # However, if you have set up proper monitoring and backups of the Redis
    # server, you may want to disable this feature so that Redis continues to
    # work as usual even if the background save fails.
    stop-writes-on-bgsave-error yes

    # Compress string objects using LZF when dumping .rdb databases?
    # By default it is set to 'yes'. It is a trade-off:
    # set it to 'no' if you want to save some CPU in the saving child process,
    # but the dataset will likely be larger if you have compressible keys or values.
    rdbcompression yes

    # Since version 5 of the RDB format, a CRC64 checksum is placed at the end of the file.
    # This makes the format more resistant to corruption, but costs about 10%
    # in performance when saving or loading RDB files,
    # so you can disable it for maximum performance.
    #
    # RDB files created with the checksum disabled have a checksum of zero,
    # which tells the loading code to skip the check.
    rdbchecksum yes

    # The file name of the dumped database
    dbfilename dump.rdb

    # The working directory.
    #
    # The database is written inside this directory, with the file name
    # specified above by the 'dbfilename' directive.
    #
    # The append-only file is also created in this directory.
    #
    # Note that you must specify a directory here, not a file name.
    dir ./
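As a rough illustration of how the `save <seconds> <changes>` rules combine, the following Python sketch checks whether any rule is satisfied. The function name `should_snapshot` and its parameters are hypothetical and not part of Redis, and the exact boundary handling inside Redis may differ; this only mirrors the rules as described in the comments above.

```python
# Hypothetical helper, not a Redis API: mirrors the three default save rules.
SAVE_RULES = [(900, 1), (300, 10), (60, 10000)]  # (seconds, changes)


def should_snapshot(seconds_since_last_save, changes_since_last_save, rules=SAVE_RULES):
    """Return True if at least one rule is met, i.e. enough time has passed
    AND enough keys have changed since the last successful save."""
    return any(
        seconds_since_last_save >= seconds and changes_since_last_save >= changes
        for seconds, changes in rules
    )


print(should_snapshot(61, 10000))  # True: the "60 10000" rule is met
print(should_snapshot(120, 50))    # False: too few changes for 60s, too early for 300s
print(should_snapshot(301, 50))    # True: the "300 10" rule is met
print(should_snapshot(1000, 0))    # False: nothing changed, so nothing to save
```

Any single rule is enough to trigger a snapshot, which is why a busy server is saved often while an idle one is not saved at all.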

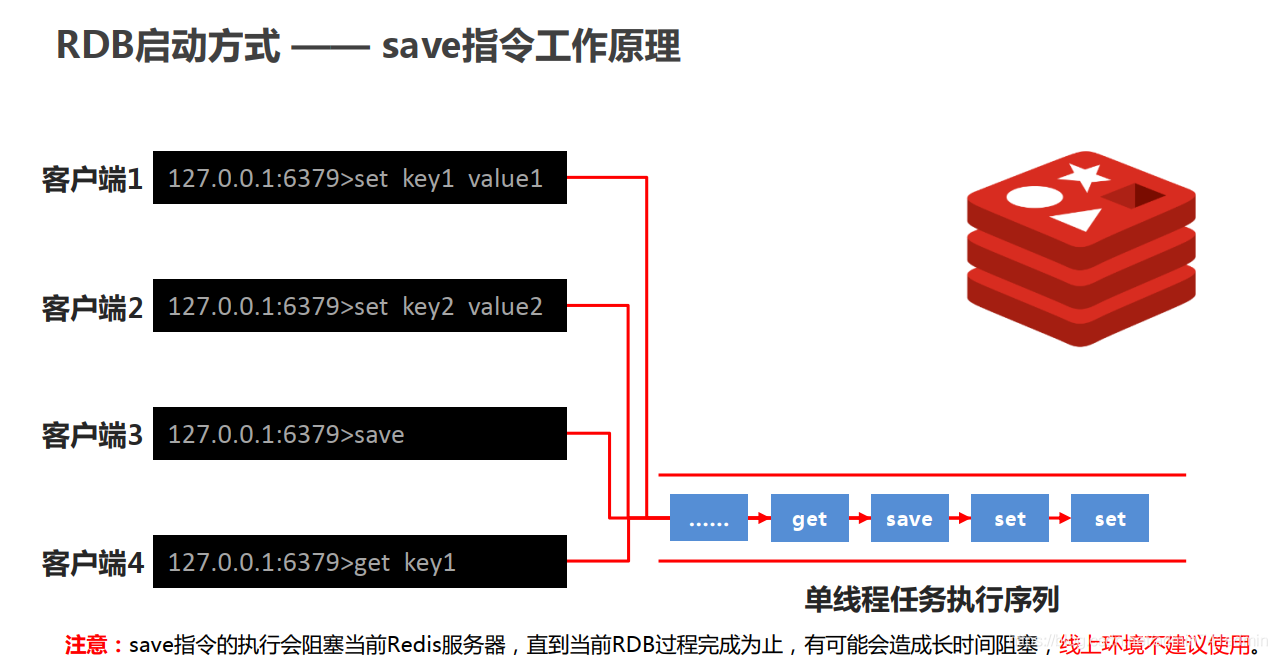

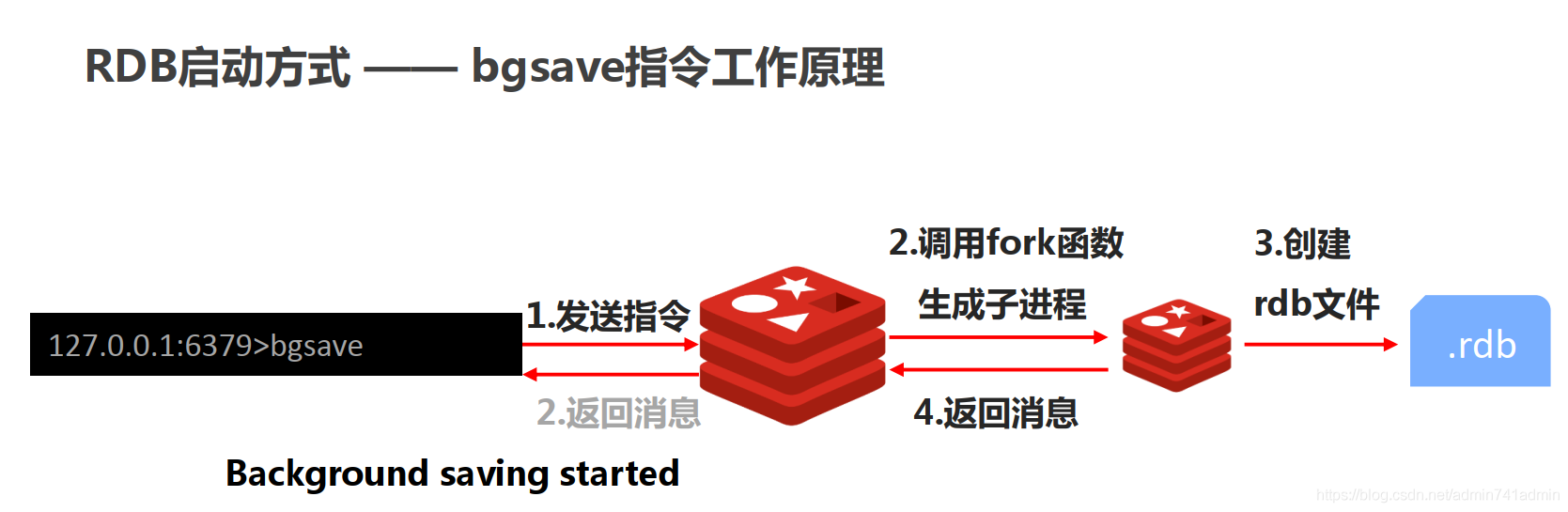

Difference between save and bgsave

concept

- save: saves synchronously and blocks everything else; no other command is processed until the save finishes. It is triggered manually and is not recommended.

- bgsave: Redis performs the snapshot asynchronously in the background and can still respond to client requests while the snapshot is being taken.

- You can obtain the time of the last successful snapshot with the lastsave command.

- Note: bgsave was introduced to avoid the blocking caused by save. It is recommended that all RDB-related operations in Redis use bgsave.

save

    127.0.0.1:6379> set user n1
    OK
    127.0.0.1:6379> save
    OK

bgsave

    [root@iZbp143t3oxhfc3ar7jey0Z redis-4.0.12]# redis-cli
    127.0.0.1:6379> save
    OK
    127.0.0.1:6379> set addr bj
    OK
    127.0.0.1:6379> BGSAVE
    Background saving started

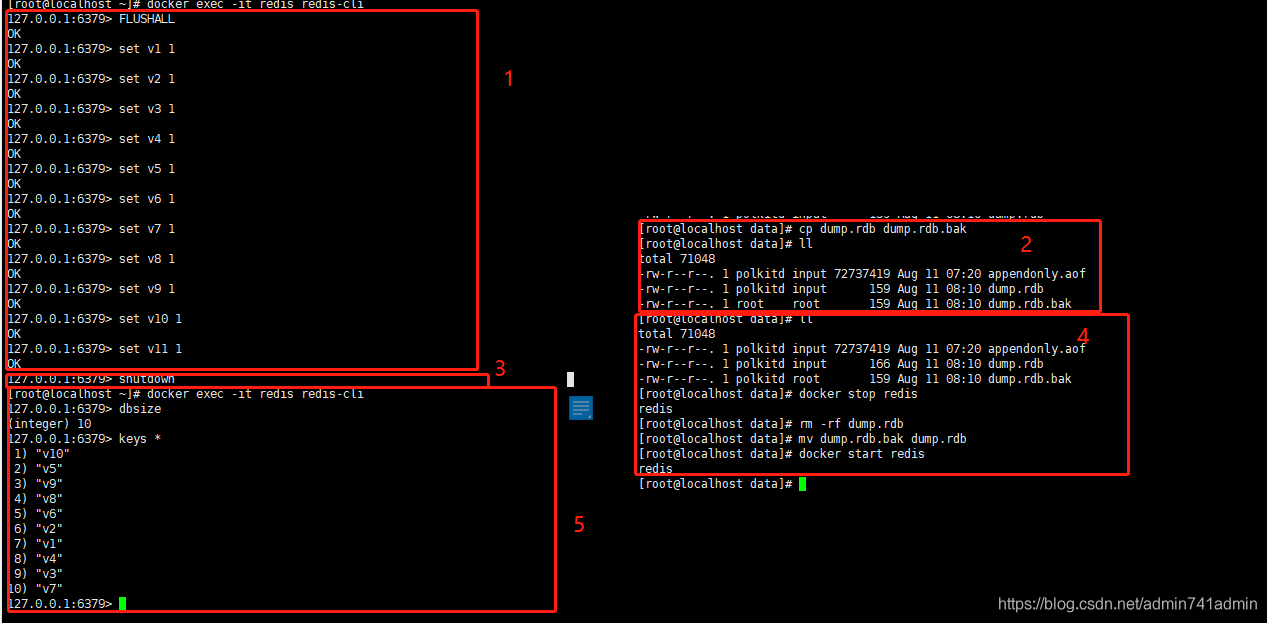

rdb backup

AOF of Redis persistence

concept

- Each write operation is recorded in the form of a log (incremental saving): every write command executed by Redis is recorded, while read operations are not. The file can only be appended to, never rewritten in place. When Redis starts, it reads this file and rebuilds the data; in other words, on restart Redis executes the write commands in the log file from front to back to complete the data recovery.
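The idea of "append every write, replay on restart" can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how Redis is implemented: the list `aof_log` stands in for the AOF file, and the helper names `apply`, `write` and `read` are made up for illustration.

```python
def apply(store, command):
    """Apply one write command (a tuple) to an in-memory dict."""
    op, key, *args = command
    if op == "SET":
        store[key] = args[0]
    elif op == "DEL":
        store.pop(key, None)
    elif op == "INCR":
        store[key] = int(store.get(key, 0)) + 1


aof_log = []  # stands in for the append-only file
store = {}    # the live in-memory data


def write(command):
    apply(store, command)
    aof_log.append(command)  # appended to the end, never modified in place


def read(key):
    return store.get(key)    # reads are not logged


write(("SET", "user", "n1"))
write(("INCR", "visits"))
write(("INCR", "visits"))
write(("DEL", "user"))
read("visits")

# Simulated restart: rebuild the data by replaying the log from front to back.
recovered = {}
for command in aof_log:
    apply(recovered, command)

print(recovered)           # {'visits': 2}
print(recovered == store)  # True
```

The log holds four entries for a final state of one key, which is exactly the growth problem that AOF rewriting addresses.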

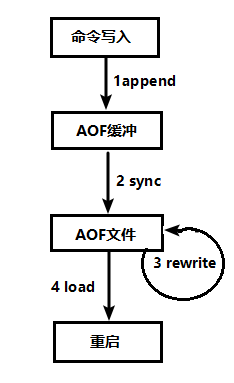

AOF persistence process

- Write commands from the client are appended to the AOF buffer;

- The AOF buffer is synced to the AOF file on disk according to the AOF persistence policy [always, everysec, no];

- When the AOF file size exceeds the rewrite threshold, or a rewrite is requested manually, the AOF file is rewritten to reduce its size;

- When the Redis service restarts, the write operations in the AOF file are loaded and replayed to recover the data;

configuration file

-

AOF is disabled by default. To enable it, find appendonly in the Redis configuration file redis.conf and change it as follows:

- appendonly yes

- It is generally turned on in the production environment;

-

appendfsync configures how often the AOF is flushed to disk. There are three options:

- always: every write is flushed to disk immediately, which has a large impact on performance;

- everysec: flush to disk once per second, which has little impact on performance;

- no: Redis does not flush explicitly and lets the operating system decide when to write the data to disk;

-

The rewrite process of aof:

- Redis creates a child process;

- The child process writes a new AOF file based on the current Redis data;

- Meanwhile, the main Redis process keeps accepting writes and buffers the new commands in memory, while also appending them to the old AOF file;

- After the child process has finished writing the new AOF file, Redis appends the buffered commands to the new AOF file;

- Finally, the old AOF file is replaced with the new one;

-

Configuration of rewrite operation

- auto-aof-rewrite-percentage 100

- auto-aof-rewrite-min-size 64mb

- The first means that the AOF file is rewritten once it has grown by 100% relative to its size after the previous rewrite, i.e. once it is twice that size;

- The second is the minimum size the AOF file must reach before an automatic rewrite is performed;

AOF startup / repair

-

Although the backup mechanism and performance of AOF differ from RDB, backup and recovery work the same way as with RDB: copy the backup file, and when recovery is needed, copy it into the Redis working directory. It is loaded when Redis starts.

-

Normal start

- Modify the default appendonly no to yes

- Copy an AOF file that contains data into the corresponding directory (view the directory with: config get dir)

-

Abnormal recovery

- Modify the default appendonly no to yes

- If the AOF file is damaged, repair it with /usr/local/bin/redis-check-aof --fix appendonly.aof

- Back up the bad AOF file

- Restore: restart redis and reload

AOF synchronization frequency setting

- appendfsync always

- Always synchronize: every Redis write is logged to disk immediately. Poor performance but good data integrity.

- appendfsync everysec

- Synchronize once per second. If the server goes down, the data written during the last second may be lost.

- appendfsync no

- Redis does not synchronize on its own and leaves the timing of synchronization to the operating system.
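The three settings differ only in when the data is forced to disk. The sketch below models that decision in Python with a hypothetical `AofWriter` class. It is a simplification under stated assumptions: it performs the once-per-second check inline on each append, which may not match how Redis schedules the sync internally, and `sync_count` exists only to make the behaviour observable.

```python
import os
import tempfile
import time


class AofWriter:
    """Toy append-only writer; `policy` mirrors the appendfsync values."""

    def __init__(self, path, policy="everysec"):
        if policy not in ("always", "everysec", "no"):
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.file = open(path, "ab")
        self.last_sync = time.monotonic()
        self.sync_count = 0  # number of explicit fsync calls so far

    def _fsync(self):
        self.file.flush()
        os.fsync(self.file.fileno())  # force the data onto the disk
        self.last_sync = time.monotonic()
        self.sync_count += 1

    def append(self, line):
        self.file.write(line.encode() + b"\n")
        if self.policy == "always":
            self._fsync()                      # every write reaches the disk
        elif self.policy == "everysec":
            if time.monotonic() - self.last_sync >= 1.0:
                self._fsync()                  # at most about once per second
        else:
            self.file.flush()                  # "no": hand over to the OS, no fsync

    def close(self):
        self.file.close()


with tempfile.TemporaryDirectory() as workdir:
    writer = AofWriter(os.path.join(workdir, "appendonly.aof"), policy="always")
    for i in range(3):
        writer.append(f"SET key{i} {i}")
    print(writer.sync_count)  # 3: one fsync per write
    writer.close()
```

With `policy="no"` the same three writes would trigger no explicit fsync at all, which is why that setting is the fastest and the least safe.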

Rewrite compression

concept

- AOF works by appending to a file, so the file keeps growing. To avoid this, a rewrite mechanism was added: when the size of the AOF file exceeds the configured threshold, Redis compacts the AOF file and keeps only the minimum set of commands needed to recover the data. A rewrite can also be triggered with the bgrewriteaof command.

Rewriting principle

- When the AOF file keeps growing and becomes too large, Redis forks a new process to rewrite the file (it writes a temporary file first and then renames it). Since Redis 4.0, rewriting means that an RDB snapshot is placed at the head of the new AOF in binary form, serving as the existing historical data and replacing the original journal of individual operations.

- no-appendfsync-on-rewrite:

- If no-appendfsync-on-rewrite = yes, writes are not synced to the AOF file on disk during a rewrite and stay only in the cache. User requests are not blocked, but if the server goes down during this period, the cached data from that period is lost. (Lower data safety, better performance)

- If no-appendfsync-on-rewrite = no, data is still flushed to disk, but writes may block while a rewrite is in progress. (Better data safety, lower performance)

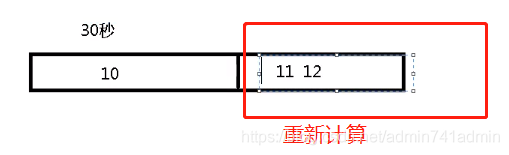

How to implement rewriting

- Rewriting saves a lot of disk space and reduces recovery time, but each rewrite still carries a cost, so Redis only rewrites when certain conditions are met.

- auto-aof-rewrite-percentage: sets the growth threshold for rewriting. With the default of 100%, rewriting starts when the file is twice the size it had after the previous rewrite.

- auto-aof-rewrite-min-size: sets the minimum file size for rewriting, 64MB by default. Rewriting only starts once the file has reached this size.

- If the current AOF size >= base_size + base_size * 100% (default) and the current size >= 64MB (default), Redis rewrites the AOF.
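The trigger condition above is simple arithmetic and can be written down directly. The following Python sketch uses a hypothetical function `should_rewrite`; it follows the formula as stated in these notes, so the exact comparison operators used inside Redis are an assumption here.

```python
MB = 1024 * 1024


def should_rewrite(current_size, base_size, percentage=100, min_size=64 * MB):
    """Return True if an automatic AOF rewrite would be triggered.

    current_size: current AOF size in bytes
    base_size:    AOF size after the previous rewrite, in bytes
    percentage:   auto-aof-rewrite-percentage
    min_size:     auto-aof-rewrite-min-size in bytes
    """
    if percentage == 0:
        return False  # a percentage of 0 disables automatic rewriting
    grown_enough = current_size >= base_size + base_size * percentage / 100
    large_enough = current_size >= min_size
    return grown_enough and large_enough


print(should_rewrite(128 * MB, 64 * MB))  # True: doubled and above 64MB
print(should_rewrite(100 * MB, 64 * MB))  # False: has not doubled yet
print(should_rewrite(40 * MB, 10 * MB))   # False: quadrupled, but below 64MB
```

The minimum size guard is what prevents a small file from being rewritten over and over just because it doubles quickly.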

Rewrite process

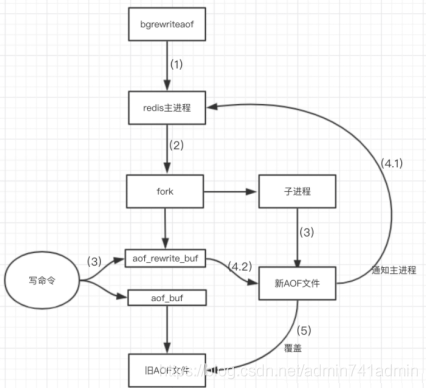

- bgrewriteaof triggers a rewrite. Redis first checks whether a bgsave or bgrewriteaof is already running; if so, it waits for that command to finish before continuing.

- The main process forks a child process to perform the rewrite, so that the main process is not blocked.

- The child process walks through the data in Redis memory and writes it to a temporary file. Meanwhile, client write requests are written to both the aof_buf buffer and the aof_rewrite_buf rewrite buffer. This keeps the original AOF file complete and ensures that modifications made while the new AOF file is being generated are not lost.

- After the child process has written the new AOF file, it sends a signal to the main process, and the main process updates its statistics.

- The main process writes the data in aof_rewrite_buf to the new AOF file.

- The new AOF file replaces the old one, which completes the AOF rewrite.
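The steps above can be sketched as a small Python model. It is a conceptual illustration only: lists stand in for the AOF files and for the rewrite buffer, there is no real fork, and the names `apply` and `rewrite` are hypothetical.

```python
def apply(store, command):
    """Apply one write command (a tuple) to an in-memory dict."""
    op, key, *args = command
    if op == "SET":
        store[key] = args[0]
    elif op == "DEL":
        store.pop(key, None)


def rewrite(old_log, rewrite_buffer):
    """Return a compacted log equivalent to old_log followed by rewrite_buffer."""
    # Child process: take the data as it was at fork time and
    # emit a single SET for every key that is still alive.
    snapshot = {}
    for command in old_log:
        apply(snapshot, command)
    new_log = [("SET", key, value) for key, value in snapshot.items()]
    # Main process: append the writes that arrived while the child was working.
    new_log.extend(rewrite_buffer)
    return new_log


old_log = [
    ("SET", "k", "1"), ("SET", "k", "2"), ("SET", "k", "3"),
    ("SET", "tmp", "x"), ("DEL", "tmp"),
]
rewrite_buffer = [("SET", "addr", "bj")]  # written during the rewrite

new_log = rewrite(old_log, rewrite_buffer)
print(new_log)  # [('SET', 'k', '3'), ('SET', 'addr', 'bj')]
```

Replaying `new_log` yields the same data as replaying `old_log` plus the buffered writes, with two commands instead of six.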

advantages

- The backup mechanism is more robust and the probability of data loss is lower.

- The log is readable text, so the AOF file can be inspected and edited, which makes it possible to undo accidental operations.

disadvantages

- It takes more disk space than RDB.

- Restoring from a backup is slower.

- If every write is synchronized, there is some performance pressure.

- Occasional bugs may cause recovery to fail.

summary

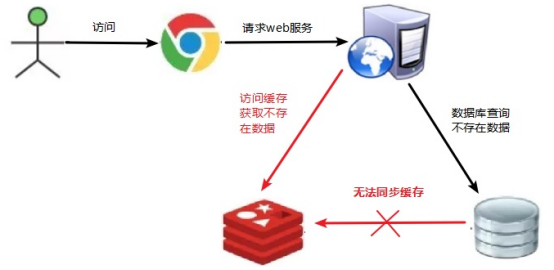

- When AOF and RDB are both enabled, which one does Redis use?

- When AOF and RDB are both enabled, Redis loads the AOF data by default (less data is lost)

- Which is better

- The official recommendation is to enable both.

- If you are not sensitive to data loss, you can use RDB alone.

- AOF alone is not recommended because bugs may occur.

- If you only use Redis as a pure in-memory cache, you can use neither.

Suggestions on the official website

- RDB persistence takes a snapshot of your data at specified time intervals

- AOF persistence records every write operation sent to the server. When the server restarts, these commands are executed again to recover the original data. AOF appends each write operation to the end of the file using the Redis protocol

- Redis can also rewrite the AOF file in the background so that it does not grow too large

- Cache only: if you only need your data to exist while the server is running, you can use no persistence at all

- Enable both persistence methods at the same time

- In this case, when Redis restarts, the AOF file is loaded first to recover the original data, because the data set saved in the AOF file is normally more complete than the one saved in the RDB file

- RDB data is not real-time, and when both are used the server only looks for the AOF file on restart. So should you use AOF only?

- This is not recommended, because RDB is better suited to backing up the database (the AOF keeps changing and is hard to back up) and to fast restarts, and it is not affected by potential AOF bugs. Keep it as a safeguard.

- Performance recommendations

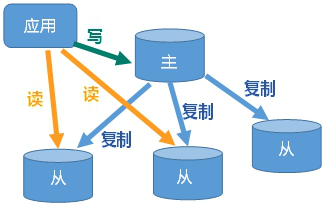

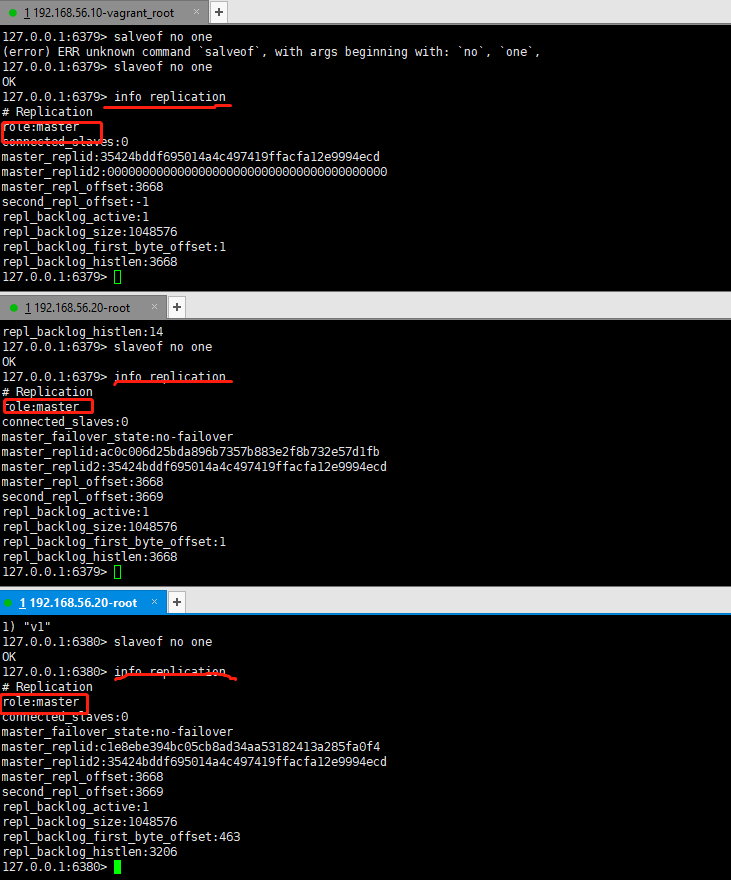

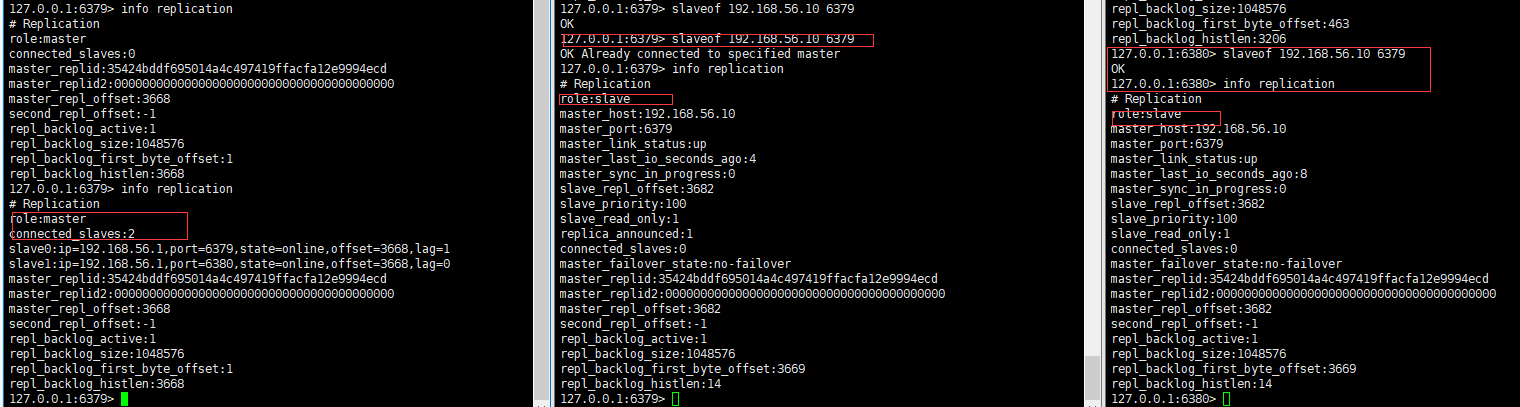

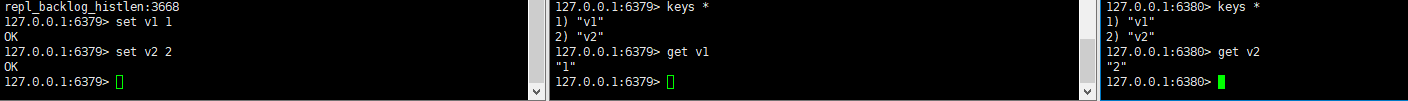

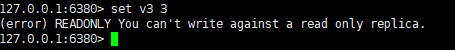

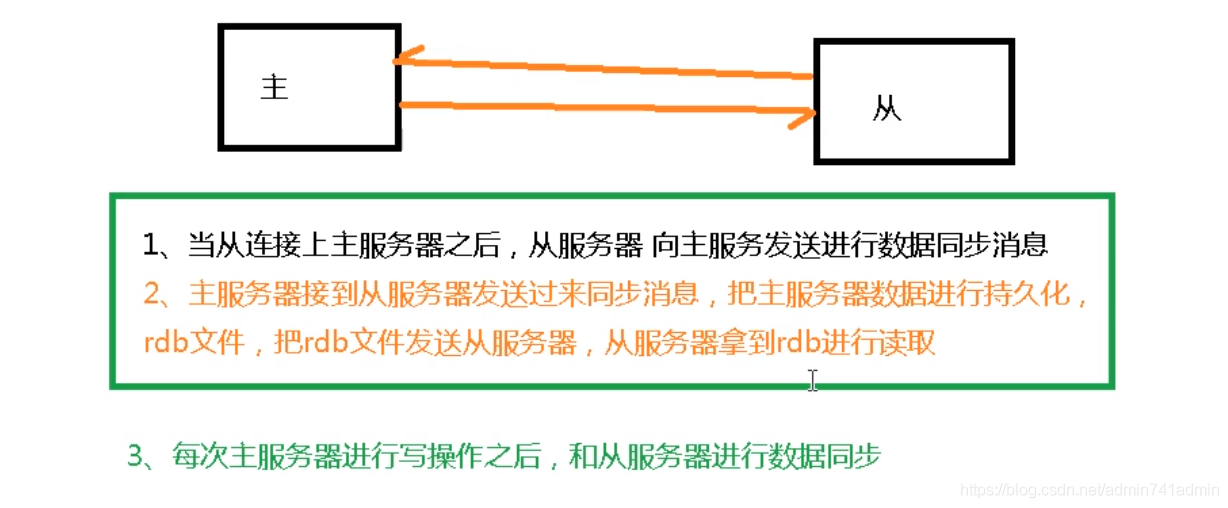

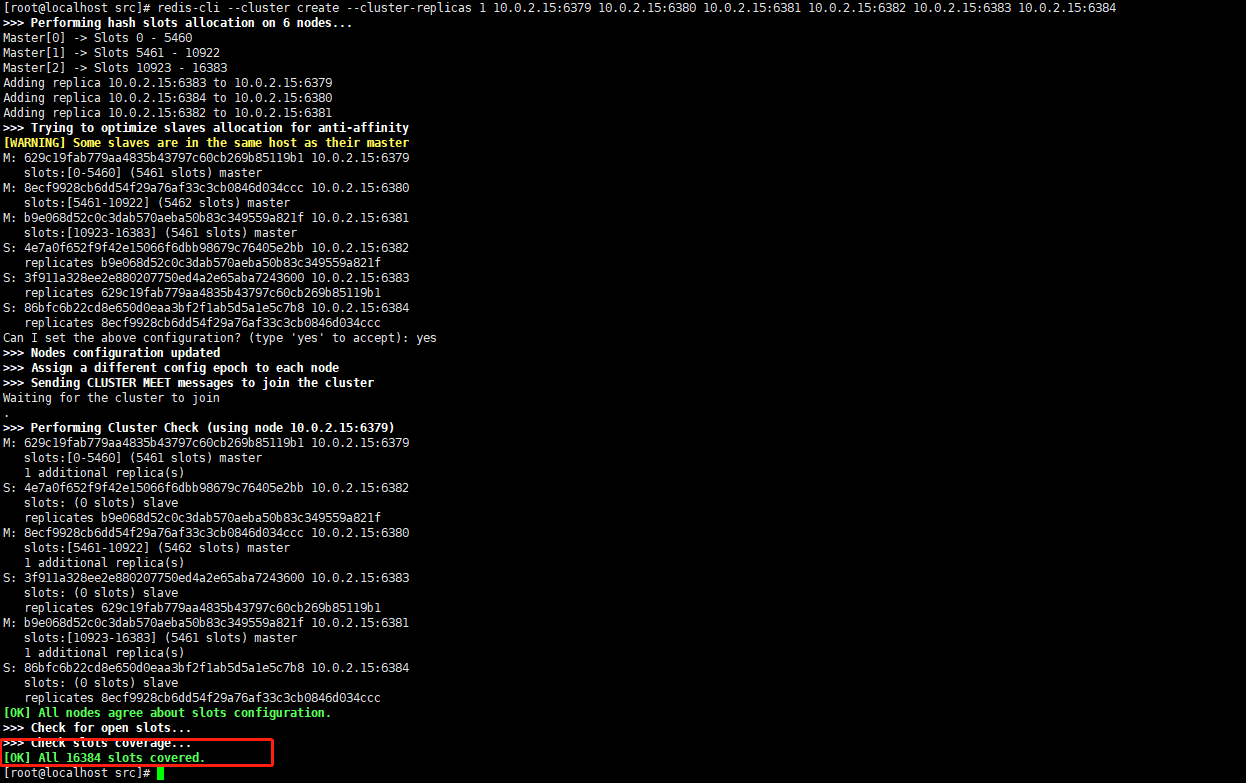

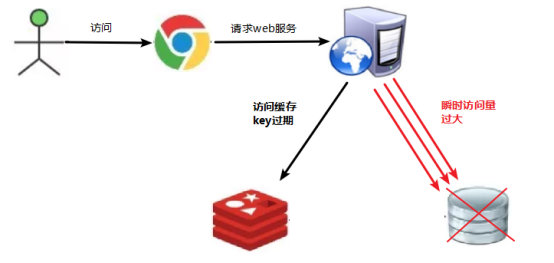

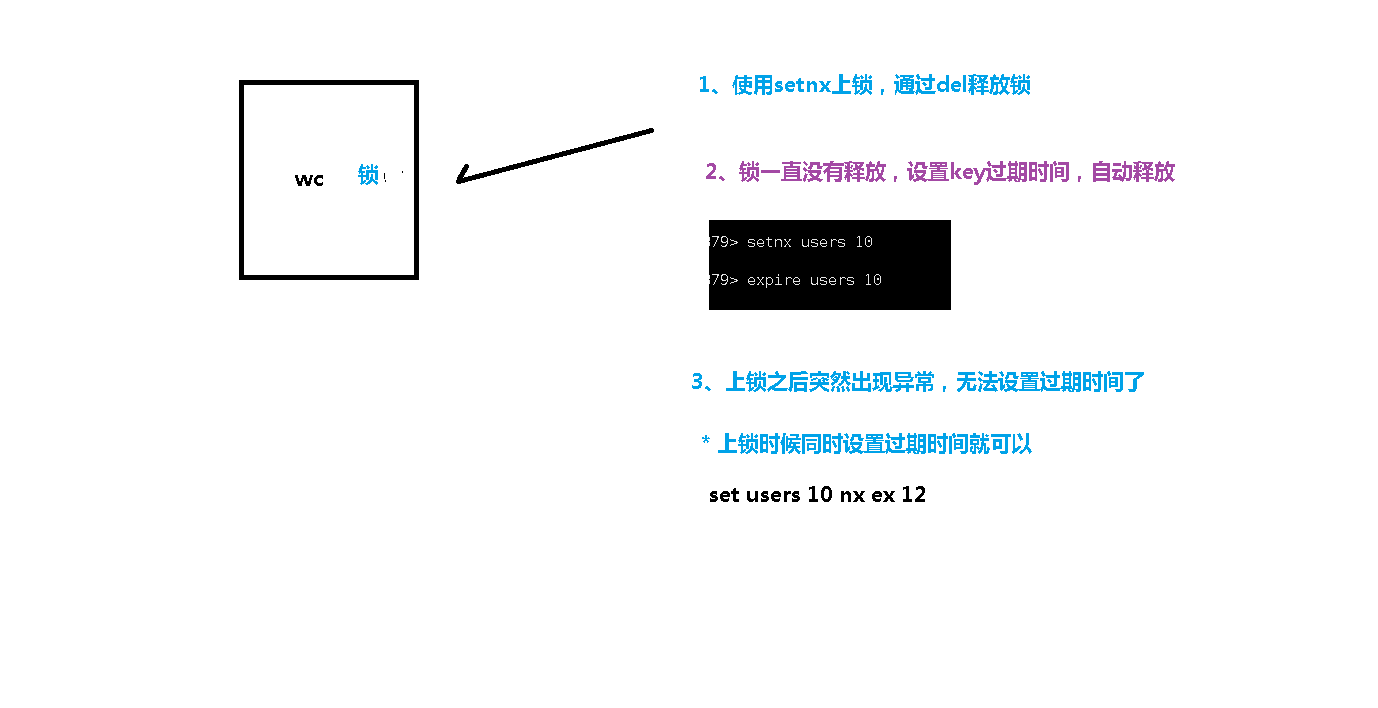

- Because RDB files are only used for backup, it is recommended to persist RDB files only on the slave, and one backup every 15 minutes is enough: keep only the save 900 1 rule.