This article is shared from the Huawei Cloud community: "Reflector of client-go source code analysis", by kaliarch.

I. Background

Reflector is the core component that makes Informer reliable. It has to handle many edge cases: missed events, abnormal events, event-handling failures and other exceptions. A bare ListWatcher has no reconnection or resynchronization mechanism, which can leave local data inconsistent with the server. It also responds to events synchronously, so a complex handler blocks event delivery; a queue is needed to decouple the two.

II. Reflector

As its name suggests, Reflector reflects the data in etcd into local storage (DeltaFIFO). It first fetches all resource objects with a List operation and saves them locally, then uses Watch to monitor subsequent changes and trigger the corresponding event handling, such as Add, Update and Delete.
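To make the list-then-watch idea concrete, here is a minimal sketch in plain Go. It is not client-go code: mapStore, event and mirror are hypothetical names invented for this illustration, and the "server" is just a snapshot map plus a channel of changes.

```go
package main

import (
	"fmt"
	"sort"
)

// eventType mirrors the three change kinds mentioned in the text.
type eventType string

const (
	added    eventType = "ADDED"
	modified eventType = "MODIFIED"
	deleted  eventType = "DELETED"
)

// event is a hypothetical stand-in for a watch event on one named object.
type event struct {
	typ   eventType
	name  string
	value string
}

// mapStore is a toy local store keyed by object name.
type mapStore map[string]string

// replace swaps the whole content for a fresh snapshot (the List phase).
func (s mapStore) replace(snapshot map[string]string) {
	for k := range s {
		delete(s, k)
	}
	for k, v := range snapshot {
		s[k] = v
	}
}

// apply folds one incremental change into the store (the Watch phase).
func (s mapStore) apply(e event) {
	switch e.typ {
	case added, modified:
		s[e.name] = e.value
	case deleted:
		delete(s, e.name)
	}
}

// mirror performs one full list, then applies events until the channel closes.
func mirror(snapshot map[string]string, events <-chan event, s mapStore) {
	s.replace(snapshot)
	for e := range events {
		s.apply(e)
	}
}

// keys returns the store's keys in sorted order for stable output.
func keys(s mapStore) []string {
	out := make([]string, 0, len(s))
	for k := range s {
		out = append(out, k)
	}
	sort.Strings(out)
	return out
}

func main() {
	events := make(chan event, 3)
	events <- event{added, "pod-c", "v1"}
	events <- event{modified, "pod-a", "v2"}
	events <- event{deleted, "pod-b", ""}
	close(events)

	s := mapStore{}
	mirror(map[string]string{"pod-a": "v1", "pod-b": "v1"}, events, s)
	fmt.Println(keys(s), s["pod-a"]) // prints: [pod-a pod-c] v2
}
```

The real Reflector differs in important ways (it tracks resource versions, retries, and writes into a DeltaFIFO rather than a map), but the two phases are the same.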

The Reflector struct is defined in staging/src/k8s.io/client-go/tools/cache/reflector.go, as shown below:

// k8s.io/client-go/tools/cache/reflector.go

type Reflector struct {

// name identifies this reflector. By default it is file:line (for example, reflector.go:125).

// The default name is generated by the GetNameFromCallsite function in k8s.io/apimachinery/pkg/util/naming/from_stack.go.

name string

// The name of the type we expect to place in the Store. If provided, it is the string form of expectedGVK;

// otherwise it is the string form of expectedType. It is for display only and should not be used for parsing or comparison.

expectedTypeName string

// An example object of the type we expect to place in the store.

// Only the type needs to be right, except that when that is

// `unstructured.Unstructured` the object's `"apiVersion"` and

// `"kind"` must also be right.

// The type of object expected to be placed in the Store.

expectedType reflect.Type

// The GVK of the object expected to be placed in the Store, if the type is unstructured.

expectedGVK *schema.GroupVersionKind

// Target Store synchronized with the watch source

store Store

// listerWatcher interface used to perform lists and watches operations (the most important)

listerWatcher ListerWatcher

// backoff manages backoff of ListWatch

backoffManager wait.BackoffManager

resyncPeriod time.Duration

// ShouldResync will be called periodically. When it returns true, the Resync operation of the Store will be called

ShouldResync func() bool

// clock allows tests to manipulate time

clock clock.Clock

// paginatedResult defines whether pagination should be forced for list calls.

// It is set based on the result of the initial list call.

paginatedResult bool

// Every Kubernetes resource has a version on the API server, and any change to an object (create, update or delete) updates it. lastSyncResourceVersion is the resource version last observed when syncing with the underlying store.

lastSyncResourceVersion string

// isLastSyncResourceVersionGone is true if the previous list or watch request with lastSyncResourceVersion failed with an HTTP 410 (Gone) status code.

isLastSyncResourceVersionGone bool

// lastSyncResourceVersionMutex is used to ensure read / write access to lastSyncResourceVersion.

lastSyncResourceVersionMutex sync.RWMutex

// WatchListPageSize is the requested chunk size of initial and resync watch lists.

// If unset, for consistent reads (RV="") or reads that opt-into arbitrarily old data

// (RV="0") it will default to pager.PageSize, for the rest (RV != "" && RV != "0")

// it will turn off pagination to allow serving them from watch cache.

// NOTE: It should be used carefully as paginated lists are always served directly from

// etcd, which is significantly less efficient and may lead to serious performance and

// scalability problems.

WatchListPageSize int64

}

// NewReflector creates a new Reflector that keeps the given Store up to date with the server's content for the specified resource.

// The Reflector only puts objects of type expectedType into the Store, unless expectedType is nil.

// If resyncPeriod is non-zero, the Reflector periodically consults its ShouldResync function to decide whether to invoke the Store's Resync operation;

// `ShouldResync==nil` means always perform Resync.

// This lets you use reflectors to periodically process everything as well as incrementally process the things that change.

func NewReflector(lw ListerWatcher, expectedType interface{}, store Store, resyncPeriod time.Duration) *Reflector {

// The default reflector name is file:line

return NewNamedReflector(naming.GetNameFromCallsite(internalPackages...), lw, expectedType, store, resyncPeriod)

}

// NewNamedReflector is the same as NewReflector, but with a specified name for logging.

func NewNamedReflector(name string, lw ListerWatcher, expectedType interface{}, store Store, resyncPeriod time.Duration) *Reflector {

realClock := &clock.RealClock{}

r := &Reflector{

name: name,

listerWatcher: lw,

store: store,

backoffManager: wait.NewExponentialBackoffManager(800*time.Millisecond, 30*time.Second, 2*time.Minute, 2.0, 1.0, realClock),

resyncPeriod: resyncPeriod,

clock: realClock,

}

r.setExpectedType(expectedType)

return r

}

III. Process

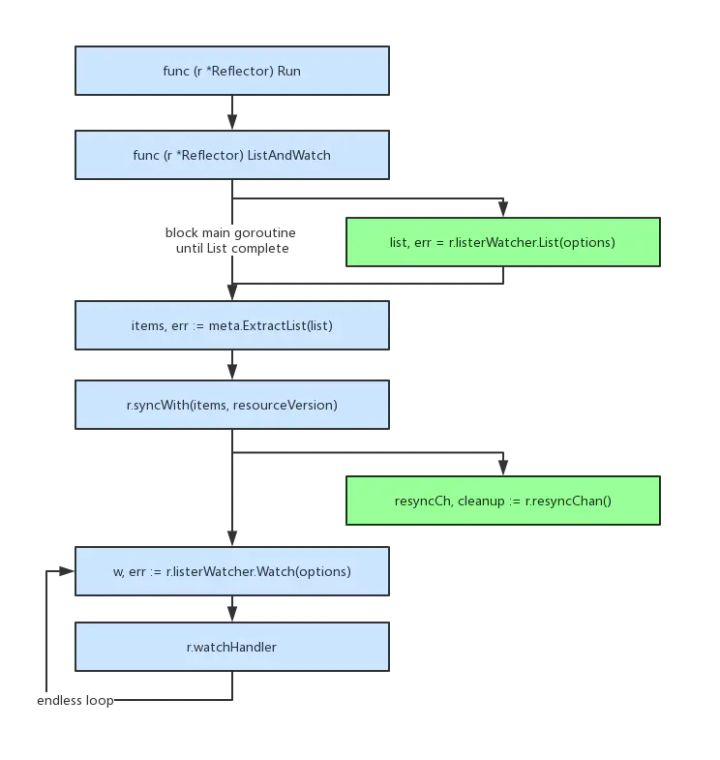

- Reflector.Run() calls ListAndWatch(), which starts a child goroutine to execute the List while the calling goroutine blocks until the List completes.

- meta.ExtractList(list) converts the List result into a []runtime.Object slice.

- r.syncWith(items, resourceVersion) writes the items into DeltaFIFO, from which the full data set is synchronized to the Indexer.

- r.resyncChan() is also used in a separate goroutine, which drives the periodic resync.

- A loop repeatedly calls r.listerWatcher.Watch(options).

- r.watchHandler incrementally synchronizes the watched runtime.Object changes to the Indexer.

- Within one ListAndWatch call, List performs a full synchronization only once; Watch then keeps performing incremental synchronization.
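The first step above (List in a child goroutine while the caller waits) can be sketched with the standard library alone. This is a simplified model, not the client-go implementation: listWithStop and errStopped are hypothetical names, and unlike the real code the sketch returns a sentinel error when stopped.

```go
package main

import (
	"errors"
	"fmt"
)

// errStopped is a hypothetical sentinel reported when stopCh closes first.
var errStopped = errors.New("stopped before list finished")

// listWithStop runs list in a child goroutine and waits for it in the caller.
// It returns early if stopCh closes, and re-raises a child panic in the caller.
func listWithStop(list func() ([]string, error), stopCh <-chan struct{}) ([]string, error) {
	var (
		items []string
		err   error
	)
	listCh := make(chan struct{})
	panicCh := make(chan interface{}, 1)

	go func() {
		defer func() {
			// Forward a panic to the waiting goroutine instead of crashing here.
			if p := recover(); p != nil {
				panicCh <- p
			}
		}()
		items, err = list()
		close(listCh) // closing the channel publishes items and err to the waiter
	}()

	select {
	case <-stopCh:
		return nil, errStopped
	case p := <-panicCh:
		panic(p)
	case <-listCh:
		return items, err
	}
}

func main() {
	stopCh := make(chan struct{})
	items, err := listWithStop(func() ([]string, error) {
		return []string{"pod-a", "pod-b"}, nil
	}, stopCh)
	fmt.Println(items, err) // prints: [pod-a pod-b] <nil>
}
```

Running the List in its own goroutine is what lets the caller react to a stop signal even while a slow List request is still in flight.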

IV. Reflector key methods

4.1 Construction methods

// NewNamespaceKeyedIndexerAndReflector creates an Indexer and a Reflector that populates it.

func NewNamespaceKeyedIndexerAndReflector(lw ListerWatcher, expectedType interface{}, resyncPeriod time.Duration) (indexer Indexer, reflector *Reflector) {

indexer = NewIndexer(MetaNamespaceKeyFunc, Indexers{NamespaceIndex: MetaNamespaceIndexFunc})

reflector = NewReflector(lw, expectedType, indexer, resyncPeriod)

return indexer, reflector

}

4.2 Run method

// Run repeatedly uses the reflector's ListAndWatch to fetch all objects and the subsequent deltas. Run exits when stopCh is closed.

func (r *Reflector) Run(stopCh <-chan struct{}) {

klog.V(2).Infof("Starting reflector %s (%s) from %s", r.expectedTypeName, r.resyncPeriod, r.name)

wait.BackoffUntil(func() {

if err := r.ListAndWatch(stopCh); err != nil {

r.watchErrorHandler(r, err)

}

}, r.backoffManager, true, stopCh)

klog.V(2).Infof("Stopping reflector %s (%s) from %s", r.expectedTypeName, r.resyncPeriod, r.name)

}

4.3 ListAndWatch

- List logic: set the paging parameters, execute the list method, and synchronize the list result into the DeltaFIFO queue; the synchronization actually calls the store's Replace method.

- Periodic resync: runs in its own goroutine and uses a timer to periodically invoke the store's Resync operation.

- Watch logic: inside a for loop, execute the watch function to obtain a ResultChan, then monitor and process the data arriving on it.
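The "set the paging parameters" step is a three-way decision in the listing that follows. As a reading aid, here it is extracted into a pure function. This is a sketch, not the library's API: choosePageSize is a hypothetical name, and defaultPageSize = 500 is an assumption standing in for the pager's default.

```go
package main

import "fmt"

// defaultPageSize is an assumed placeholder for the pager's default chunk size.
const defaultPageSize int64 = 500

// choosePageSize mirrors the order of the switch in ListAndWatch:
// an explicit WatchListPageSize wins, a previously paginated result keeps
// the default, and a specific resource version turns pagination off so the
// request can be served from the watch cache.
func choosePageSize(watchListPageSize int64, paginatedResult bool, resourceVersion string) int64 {
	switch {
	case watchListPageSize != 0:
		return watchListPageSize
	case paginatedResult:
		return defaultPageSize
	case resourceVersion != "" && resourceVersion != "0":
		return 0 // 0 means "no pagination" in this sketch
	}
	return defaultPageSize
}

func main() {
	fmt.Println(choosePageSize(100, false, "12345")) // explicit size requested
	fmt.Println(choosePageSize(0, true, "12345"))    // earlier list was paginated
	fmt.Println(choosePageSize(0, false, "12345"))   // specific RV: pagination off
	fmt.Println(choosePageSize(0, false, "0"))       // initial list keeps the default
}
```

The third case is the one worth remembering: paginated lists go straight to etcd, so the reflector switches pagination off whenever it can list from the watch cache instead.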

// ListAndWatch first lists all objects and records the resource version at the moment of the call, then uses that resource version to watch.

// It returns an error if ListAndWatch didn't even try to initialize the watch.

func (r *Reflector) ListAndWatch(stopCh <-chan struct{}) error {

klog.V(3).Infof("Listing and watching %v from %s", r.expectedTypeName, r.name)

var resourceVersion string

options := metav1.ListOptions{ResourceVersion: r.relistResourceVersion()}

// 1. Logic of list part: set paging parameters; Execute the list method; Synchronize the list results into the DeltaFIFO queue;

if err := func() error {

initTrace := trace.New("Reflector ListAndWatch", trace.Field{"name", r.name})

defer initTrace.LogIfLong(10 * time.Second)

var list runtime.Object

var paginatedResult bool

var err error

listCh := make(chan struct{}, 1)

panicCh := make(chan interface{}, 1)

go func() {

defer func() {

if r := recover(); r != nil {

panicCh <- r

}

}()

// Attempt to gather list in chunks, if supported by listerWatcher, if not, the first

// list request will return the full response.

pager := pager.New(pager.SimplePageFunc(func(opts metav1.ListOptions) (runtime.Object, error) {

return r.listerWatcher.List(opts)

}))

switch {

case r.WatchListPageSize != 0:

pager.PageSize = r.WatchListPageSize

case r.paginatedResult:

// We got a paginated result initially. Assume this resource and server honor

// paging requests (i.e. watch cache is probably disabled) and leave the default

// pager size set.

case options.ResourceVersion != "" && options.ResourceVersion != "0":

// User didn't explicitly request pagination.

//

// With ResourceVersion != "", we have a possibility to list from watch cache,

// but we do that (for ResourceVersion != "0") only if Limit is unset.

// To avoid thundering herd on etcd (e.g. on master upgrades), we explicitly

// switch off pagination to force listing from watch cache (if enabled).

// With the existing semantic of RV (result is at least as fresh as provided RV),

// this is correct and doesn't lead to going back in time.

//

// We also don't turn off pagination for ResourceVersion="0", since watch cache

// is ignoring Limit in that case anyway, and if watch cache is not enabled

// we don't introduce regression.

pager.PageSize = 0

}

list, paginatedResult, err = pager.List(context.Background(), options)

if isExpiredError(err) || isTooLargeResourceVersionError(err) {

r.setIsLastSyncResourceVersionUnavailable(true)

// Retry immediately if the resource version used to list is unavailable.

// The pager already falls back to full list if paginated list calls fail due to an "Expired" error on

// continuation pages, but the pager might not be enabled, the full list might fail because the

// resource version it is listing at is expired or the cache may not yet be synced to the provided

// resource version. So we need to fallback to resourceVersion="" in all to recover and ensure

// the reflector makes forward progress.

list, paginatedResult, err = pager.List(context.Background(), metav1.ListOptions{ResourceVersion: r.relistResourceVersion()})

}

close(listCh)

}()

select {

case <-stopCh:

return nil

case r := <-panicCh:

panic(r)

case <-listCh:

}

if err != nil {

return fmt.Errorf("failed to list %v: %v", r.expectedTypeName, err)

}

// We check if the list was paginated and if so set the paginatedResult based on that.

// However, we want to do that only for the initial list (which is the only case

// when we set ResourceVersion="0"). The reasoning behind it is that later, in some

// situations we may force listing directly from etcd (by setting ResourceVersion="")

// which will return paginated result, even if watch cache is enabled. However, in

// that case, we still want to prefer sending requests to watch cache if possible.

//

// Paginated result returned for request with ResourceVersion="0" mean that watch

// cache is disabled and there are a lot of objects of a given type. In such case,

// there is no need to prefer listing from watch cache.

if options.ResourceVersion == "0" && paginatedResult {

r.paginatedResult = true

}

r.setIsLastSyncResourceVersionUnavailable(false) // list was successful

initTrace.Step("Objects listed")

//

listMetaInterface, err := meta.ListAccessor(list)

if err != nil {

return fmt.Errorf("unable to understand list result %#v: %v", list, err)

}

// Get resource version number

resourceVersion = listMetaInterface.GetResourceVersion()

initTrace.Step("Resource version extracted")

// Convert the list object into a slice of resource objects, that is, convert a runtime.Object into a []runtime.Object

items, err := meta.ExtractList(list)

if err != nil {

return fmt.Errorf("unable to understand list result %#v (%v)", list, err)

}

initTrace.Step("Objects extracted")

// Store the resource objects and the resource version in the store

if err := r.syncWith(items, resourceVersion); err != nil {

return fmt.Errorf("unable to sync list result: %v", err)

}

initTrace.Step("SyncWith done")

r.setLastSyncResourceVersion(resourceVersion)

initTrace.Step("Resource version updated")

return nil

}(); err != nil {

return err

}

// 2. Periodic resync: runs in its own goroutine and uses a timer to trigger resynchronization periodically

resyncerrc := make(chan error, 1)

cancelCh := make(chan struct{})

defer close(cancelCh)

go func() {

resyncCh, cleanup := r.resyncChan()

defer func() {

cleanup() // wrapped in a closure so that the most recently assigned cleanup is the one called

}()

for {

select {

case <-resyncCh:

case <-stopCh:

return

case <-cancelCh:

return

}

// If ShouldResync is nil or the call returns true, perform the Resync operation in the Store

if r.ShouldResync == nil || r.ShouldResync() {

klog.V(4).Infof("%s: forcing resync", r.name)

// Resync re-enqueues the objects known to the Indexer into DeltaFIFO

if err := r.store.Resync(); err != nil {

resyncerrc <- err

return

}

}

cleanup()

resyncCh, cleanup = r.resyncChan()

}

}()

// 3. Watch logic: inside the for loop, execute the watch function to obtain a ResultChan, then monitor and process the data arriving on it

for {

// give the stopCh a chance to stop the loop, even in case of continue statements further down on errors

select {

case <-stopCh:

return nil

default:

}

timeoutSeconds := int64(minWatchTimeout.Seconds() * (rand.Float64() + 1.0))

options = metav1.ListOptions{

ResourceVersion: resourceVersion,

// We want to avoid situations of hanging watchers. Stop any watchers that do not

// receive any events within the timeout window.

TimeoutSeconds: &timeoutSeconds,

// To reduce load on kube-apiserver on watch restarts, you may enable watch bookmarks.

// Reflector doesn't assume bookmarks are returned at all (if the server does not support

// watch bookmarks, it will ignore this field).

AllowWatchBookmarks: true,

}

// start the clock before sending the request, since some proxies won't flush headers until after the first watch event is sent

start := r.clock.Now()

w, err := r.listerWatcher.Watch(options)

if err != nil {

// If this is "connection refused" error, it means that most likely apiserver is not responsive.

// It doesn't make sense to re-list all objects because most likely we will be able to restart

// watch where we ended.

// If that's the case begin exponentially backing off and resend watch request.

if utilnet.IsConnectionRefused(err) {

<-r.initConnBackoffManager.Backoff().C()

continue

}

return err

}

if err := r.watchHandler(start, w, &resourceVersion, resyncerrc, stopCh); err != nil {

if err != errorStopRequested {

switch {

case isExpiredError(err):

// Don't set LastSyncResourceVersionUnavailable - LIST call with ResourceVersion=RV already

// has a semantic that it returns data at least as fresh as provided RV.

// So first try to LIST with setting RV to resource version of last observed object.

klog.V(4).Infof("%s: watch of %v closed with: %v", r.name, r.expectedTypeName, err)

default:

klog.Warningf("%s: watch of %v ended with: %v", r.name, r.expectedTypeName, err)

}

}

return nil

}

}

}

4.4 LastSyncResourceVersion

LastSyncResourceVersion returns the resource version observed during the last synchronization with the underlying store.

func (r *Reflector) LastSyncResourceVersion() string {

r.lastSyncResourceVersionMutex.RLock()

defer r.lastSyncResourceVersionMutex.RUnlock()

return r.lastSyncResourceVersion

}

4.5 resyncChan

resyncChan returns a timer channel and a cleanup function; the cleanup function stops the timer. Periodic resynchronization is implemented with this timer.

func (r *Reflector) resyncChan() (<-chan time.Time, func() bool) {

if r.resyncPeriod == 0 {

return neverExitWatch, func() bool { return false }

}

// The cleanup function is required: imagine the scenario where watches

// always fail so we end up listing frequently. Then, if we don't

// manually stop the timer, we could end up with many timers active

// concurrently.

t := r.clock.NewTimer(r.resyncPeriod)

return t.C(), t.Stop

}

4.6 syncWith

syncWith synchronizes the resource objects returned by the API server's list call into the DeltaFIFO queue by invoking the queue's Replace method.
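The copy loop in syncWith exists because Go does not implicitly convert a slice of one interface type into []interface{}. A small self-contained sketch of the same shape, where object, pod, replacer and recordingStore are all hypothetical stand-ins for the client-go types:

```go
package main

import "fmt"

// object is a hypothetical stand-in for runtime.Object.
type object interface{ Name() string }

type pod struct{ name string }

func (p pod) Name() string { return p.name }

// replacer is the single store method this sketch needs.
type replacer interface {
	Replace(items []interface{}, resourceVersion string) error
}

// recordingStore remembers the last snapshot it was given.
type recordingStore struct {
	items []interface{}
	rv    string
}

func (s *recordingStore) Replace(items []interface{}, rv string) error {
	s.items, s.rv = items, rv
	return nil
}

// syncWith copies the typed slice element by element into []interface{},
// then replaces the store content in a single call.
func syncWith(store replacer, items []object, rv string) error {
	found := make([]interface{}, 0, len(items))
	for _, item := range items {
		found = append(found, item)
	}
	return store.Replace(found, rv)
}

func main() {
	s := &recordingStore{}
	if err := syncWith(s, []object{pod{"pod-a"}, pod{"pod-b"}}, "42"); err != nil {
		panic(err)
	}
	fmt.Println(len(s.items), s.rv) // prints: 2 42
}
```

Passing the whole snapshot to Replace in one call, together with its resource version, is what lets the store treat the list result as an atomic replacement rather than a stream of individual adds.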

func (r *Reflector) syncWith(items []runtime.Object, resourceVersion string) error {

found := make([]interface{}, 0, len(items))

for _, item := range items {

found = append(found, item)

}

return r.store.Replace(found, resourceVersion)

}

4.7 watchHandler

watchHandler processes the watch. It receives a watch.Interface as a parameter, whose exported methods are Stop and ResultChan: the former closes the result channel and the latter returns it.
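Before reading the full listing, it may help to see the core of the loop in miniature: consume events until the channel closes, apply each one to the store, and keep advancing the resource version. This is a sketch with hypothetical types (event, handle); the real handler also validates object types, reports errors, and detects suspiciously short watches.

```go
package main

import (
	"errors"
	"fmt"
)

type eventType string

const (
	added    eventType = "ADDED"
	modified eventType = "MODIFIED"
	deleted  eventType = "DELETED"
	bookmark eventType = "BOOKMARK"
)

// event is a hypothetical watch event carrying the object's name and resource version.
type event struct {
	typ  eventType
	name string
	rv   string
}

var errStopRequested = errors.New("stop requested")

// handle consumes events until the channel closes or stopCh closes.
// It returns the last resource version seen and the number of events processed.
func handle(events <-chan event, store map[string]string, rv string, stopCh <-chan struct{}) (string, int, error) {
	count := 0
	for {
		select {
		case <-stopCh:
			return rv, count, errStopRequested
		case e, ok := <-events:
			if !ok {
				return rv, count, nil // the server closed the watch
			}
			switch e.typ {
			case added, modified:
				store[e.name] = e.rv
			case deleted:
				delete(store, e.name)
			case bookmark:
				// Nothing to store: a bookmark only advances the resource version.
			}
			rv = e.rv
			count++
		}
	}
}

func main() {
	events := make(chan event, 3)
	events <- event{added, "pod-a", "101"}
	events <- event{bookmark, "", "105"}
	events <- event{deleted, "pod-a", "106"}
	close(events)

	store := map[string]string{}
	rv, n, err := handle(events, store, "100", make(chan struct{}))
	fmt.Println(rv, n, len(store), err) // prints: 106 3 0 <nil>
}
```

The returned resource version is what the next Watch request starts from, so no event is replayed or skipped when the watch is re-established.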

func (r *Reflector) watchHandler(start time.Time, w watch.Interface, resourceVersion *string, errc chan error, stopCh <-chan struct{}) error {

eventCount := 0

// Stopping the watcher should be idempotent and if we return from this function there's no way

// we're coming back in with the same watch interface.

defer w.Stop()

loop:

for {

select {

case <-stopCh:

return errorStopRequested

case err := <-errc:

return err

case event, ok := <-w.ResultChan():

if !ok {

break loop

}

if event.Type == watch.Error {

return apierrors.FromObject(event.Object)

}

if r.expectedType != nil {

if e, a := r.expectedType, reflect.TypeOf(event.Object); e != a {

utilruntime.HandleError(fmt.Errorf("%s: expected type %v, but watch event object had type %v", r.name, e, a))

continue

}

}

// Check that the GVK of the event object matches the expected GVK

if r.expectedGVK != nil {

if e, a := *r.expectedGVK, event.Object.GetObjectKind().GroupVersionKind(); e != a {

utilruntime.HandleError(fmt.Errorf("%s: expected gvk %v, but watch event object had gvk %v", r.name, e, a))

continue

}

}

// Get the metadata accessor of the event object

meta, err := meta.Accessor(event.Object)

if err != nil {

utilruntime.HandleError(fmt.Errorf("%s: unable to understand watch event %#v", r.name, event))

continue

}

newResourceVersion := meta.GetResourceVersion()

// Judge the event type and carry out corresponding operations

switch event.Type {

case watch.Added:

err := r.store.Add(event.Object)

if err != nil {

utilruntime.HandleError(fmt.Errorf("%s: unable to add watch event object (%#v) to store: %v", r.name, event.Object, err))

}

case watch.Modified:

err := r.store.Update(event.Object)

if err != nil {

utilruntime.HandleError(fmt.Errorf("%s: unable to update watch event object (%#v) to store: %v", r.name, event.Object, err))

}

case watch.Deleted:

// TODO: Will any consumers need access to the "last known

// state", which is passed in event.Object? If so, may need

// to change this.

err := r.store.Delete(event.Object)

if err != nil {

utilruntime.HandleError(fmt.Errorf("%s: unable to delete watch event object (%#v) from store: %v", r.name, event.Object, err))

}

case watch.Bookmark:

// A `Bookmark` means watch has synced here, just update the resourceVersion

default:

utilruntime.HandleError(fmt.Errorf("%s: unable to understand watch event %#v", r.name, event))

}

*resourceVersion = newResourceVersion

r.setLastSyncResourceVersion(newResourceVersion)

if rvu, ok := r.store.(ResourceVersionUpdater); ok {

rvu.UpdateResourceVersion(newResourceVersion)

}

eventCount++

}

}

watchDuration := r.clock.Since(start)

if watchDuration < 1*time.Second && eventCount == 0 {

return fmt.Errorf("very short watch: %s: Unexpected watch close - watch lasted less than a second and no items received", r.name)

}

klog.V(4).Infof("%s: Watch close - %v total %v items received", r.name, r.expectedTypeName, eventCount)

return nil

}

4.8 relistResourceVersion

relistResourceVersion returns the resource version the reflector should use when it relists. A resource version other than "0" means the reflector resumes from that version: when transmission is interrupted by a network failure or for other reasons, the next request continues from the recorded resource version, so the data in the local cache stays consistent with the data in the etcd cluster. The function is implemented as follows:
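Stripped of locking, the decision has only three outcomes. The sketch below restates it as a pure function so each case can be checked directly; the free-function signature is an illustration, not the method's real shape.

```go
package main

import "fmt"

// relistResourceVersion picks the resource version for the next List call.
func relistResourceVersion(lastSync string, lastSyncUnavailable bool) string {
	if lastSyncUnavailable {
		return "" // the previous version is unusable: ask for a consistent read
	}
	if lastSync == "" {
		return "0" // first list: no version has been observed yet
	}
	return lastSync // resume from the last version observed
}

func main() {
	fmt.Printf("%q\n", relistResourceVersion("", false))    // prints: "0"
	fmt.Printf("%q\n", relistResourceVersion("1234", false)) // prints: "1234"
	fmt.Printf("%q\n", relistResourceVersion("1234", true))  // prints: ""
}
```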

// relistResourceVersion returns "" if the last relist ended with an HTTP 410 (Gone) status code, so that the relist reads the latest resource version available in etcd through a quorum read.

// Otherwise it returns lastSyncResourceVersion, so that the reflector never relists with a resource version older than one it has already observed in a relist result or watch event.

func (r *Reflector) relistResourceVersion() string {

r.lastSyncResourceVersionMutex.RLock()

defer r.lastSyncResourceVersionMutex.RUnlock()

if r.isLastSyncResourceVersionUnavailable {

// The reflector makes paginated List requests, and paginated List requests skip the watch cache.

// So when lastSyncResourceVersion is unavailable, set ResourceVersion="" and list again to re-establish the reflector at the latest available resource version, using a consistent read from etcd.

return ""

}

if r.lastSyncResourceVersion == "" {

// The initial List operation performed by the reflector uses 0 as the resource version.

return "0"

}

return r.lastSyncResourceVersion

}

V. Overall process

// k8s.io/client-go/informers/apps/v1/deployment.go

// NewFilteredDeploymentInformer constructs a new informer for the Deployment type.

// Always prefer using an informer factory to get a shared informer instead of an independent one; this reduces memory footprint and the number of connections to the server.

func NewFilteredDeploymentInformer(client kubernetes.Interface, namespace string, resyncPeriod time.Duration, indexers cache.Indexers, tweakListOptions internalinterfaces.TweakListOptionsFunc) cache.SharedIndexInformer {

return cache.NewSharedIndexInformer(

&cache.ListWatch{

ListFunc: func(options metav1.ListOptions) (runtime.Object, error) {

if tweakListOptions != nil {

tweakListOptions(&options)

}

return client.AppsV1().Deployments(namespace).List(context.TODO(), options)

},

WatchFunc: func(options metav1.ListOptions) (watch.Interface, error) {

if tweakListOptions != nil {

tweakListOptions(&options)

}

return client.AppsV1().Deployments(namespace).Watch(context.TODO(), options)

},

},

&appsv1.Deployment{},

resyncPeriod,

indexers,

)

}

From the code above we can see that calling Informer() on a resource object invokes the NewFilteredDeploymentInformer function for initialization, and that a cache.ListWatch object carrying the implementations of the List and Watch operations is passed in at that point. In other words, the List operation that the Reflector invokes through its ListerWatcher in ListAndWatch is implemented in the Informer of the specific resource. For Deployment, for example, the resource list is fetched from the API server through the ClientSet, by calling client.AppsV1().Deployments(namespace).List(context.TODO(), options) in ListFunc.

- After the full List data is obtained, listMetaInterface.GetResourceVersion() returns the resource version. ResourceVersion is very important: every resource in Kubernetes has this field, which identifies the version of the current resource object. Each time the object is modified (created, updated or deleted), the Kubernetes API server changes its ResourceVersion, so when client-go performs a Watch it can tell from the ResourceVersion whether the object has changed.

- Then meta.ExtractList converts the resource data into a list of resource objects, that is, a runtime.Object into a []runtime.Object, because what a full List returns is a resource list.

- Next, the reflector's syncWith function stores the resource objects and the resource version in the Store, which will be described in detail in later chapters.

- Finally, r.setLastSyncResourceVersion(resourceVersion) records the latest resource version. In addition, a goroutine is started to periodically check whether a resync is needed and, if so, to call r.store.Resync().

- Then comes the Watch operation. It establishes a long-lived connection to the API server over HTTP and receives the resource change events that the Kubernetes API server sends. As with List, the actual Watch implementation is passed in when the informer is initialized, such as the WatchFunc passed in for the Deployment informer above; underneath, it watches Deployments through the ClientSet by calling client.AppsV1().Deployments(namespace).Watch(context.TODO(), options).

- Once watch data arrives, r.watchHandler processes the resource change events. When an Added, Modified or Deleted event is received, the corresponding resource object is updated in the local cache (DeltaFIFO) and the ResourceVersion is updated.

This is the core of ListAndWatch in the Reflector. As the implementation shows, the data obtained eventually flows into the local Store, that is, DeltaFIFO. So the next step is to analyze the implementation of DeltaFIFO.

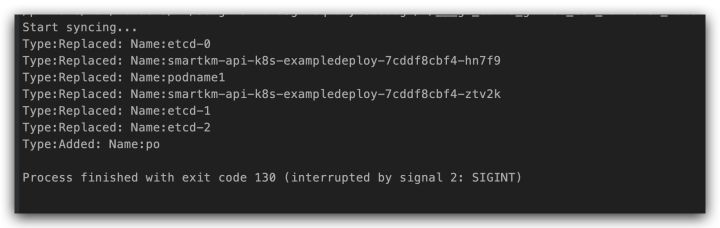

VI. A quick hands-on example

package main

import (

"fmt"

corev1 "k8s.io/api/core/v1"

"k8s.io/apimachinery/pkg/fields"

"k8s.io/apimachinery/pkg/util/wait"

"k8s.io/client-go/kubernetes"

"k8s.io/client-go/tools/cache"

"k8s.io/client-go/tools/clientcmd"

"k8s.io/client-go/util/homedir"

"path/filepath"

"time"

)

func Must(e interface{}) {

if e != nil {

panic(e)

}

}

func InitClientSet() (*kubernetes.Clientset, error) {

kubeconfig := filepath.Join(homedir.HomeDir(), ".kube", "config")

restConfig, err := clientcmd.BuildConfigFromFlags("", kubeconfig)

if err != nil {

return nil, err

}

return kubernetes.NewForConfig(restConfig)

}

// Generate listwatcher

func InitListerWatcher(clientSet *kubernetes.Clientset, resource, namespace string, fieldSelector fields.Selector) cache.ListerWatcher {

restClient := clientSet.CoreV1().RESTClient()

return cache.NewListWatchFromClient(restClient, resource, namespace, fieldSelector)

}

// Generate pods reflector

func InitPodsReflector(clientSet *kubernetes.Clientset, store cache.Store) *cache.Reflector {

resource := "pods"

namespace := "default"

resyncPeriod := 0 * time.Second

expectedType := &corev1.Pod{}

lw := InitListerWatcher(clientSet, resource, namespace, fields.Everything())

return cache.NewReflector(lw, expectedType, store, resyncPeriod)

}

// Generate DeltaFIFO

func InitDeltaQueue(store cache.Store) cache.Queue {

return cache.NewDeltaFIFOWithOptions(cache.DeltaFIFOOptions{

// store implements KeyListerGetter

KnownObjects: store,

// EmitDeltaTypeReplaced indicates that the queue consumer understands the Replaced DeltaType.

// Before adding the 'Replaced' event type, the call to Replace() is handled in the same way as Sync().

// false by default for backward compatibility purposes.

// When true, a replace event is sent for the item passed to the Replace() call. When false, the 'Sync' event will be sent.

EmitDeltaTypeReplaced: true,

})

}

func InitStore() cache.Store {

return cache.NewStore(cache.MetaNamespaceKeyFunc)

}

func main() {

clientSet, err := InitClientSet()

Must(err)

// The store is read and updated inside ProcessFunc

store := InitStore()

// queue

DeleteFIFOQueue := InitDeltaQueue(store)

// Generate podReflector

podReflector := InitPodsReflector(clientSet, DeleteFIFOQueue)

stopCh := make(chan struct{})

defer close(stopCh)

go podReflector.Run(stopCh)

// Handler for the items popped from the queue

ProcessFunc := func(obj interface{}) error {

// Deltas are ordered oldest first, so events are processed in the order they were received

for _, d := range obj.(cache.Deltas) {

switch d.Type {

case cache.Sync, cache.Replaced, cache.Added, cache.Updated:

if _, exists, err := store.Get(d.Object); err == nil && exists {

if err := store.Update(d.Object); err != nil {

return err

}

} else {

if err := store.Add(d.Object); err != nil {

return err

}

}

case cache.Deleted:

if err := store.Delete(d.Object); err != nil {

return err

}

}

pod, ok := d.Object.(*corev1.Pod)

if !ok {

return fmt.Errorf("not a pod: %T", d.Object)

}

fmt.Printf("Type:%s: Name:%s\n", d.Type, pod.Name)

}

return nil

}

fmt.Println("Start syncing...")

wait.Until(func() {

for {

_, err := DeleteFIFOQueue.Pop(ProcessFunc)

Must(err)

}

}, time.Second, stopCh)

}

VII. Summary

This article walked through how Reflector obtains objects from the Kubernetes API through a ListerWatcher and stores them in the Store. Next, we will study the source code of DeltaFIFO and, together with Informer, deepen our understanding of the whole informer mechanism.

