| Reading guide | This article details how to recover from a deleted physical volume or failed disk scenario. |

System environment

Existing file system

The following example uses three disks, /dev/sd[a-c], which are combined into one volume group from which two logical volumes are created:

# Create PV

[root@localhost ~]# pvcreate /dev/sda

[root@localhost ~]# pvcreate /dev/sdb

[root@localhost ~]# pvcreate /dev/sdc

# Create VG

[root@localhost ~]# vgcreate vg_data /dev/sd[a-c]

# Create LV

[root@localhost ~]# lvcreate -L 25G -n lv_data vg_data

[root@localhost ~]# lvcreate -L 25G -n lv_log vg_data

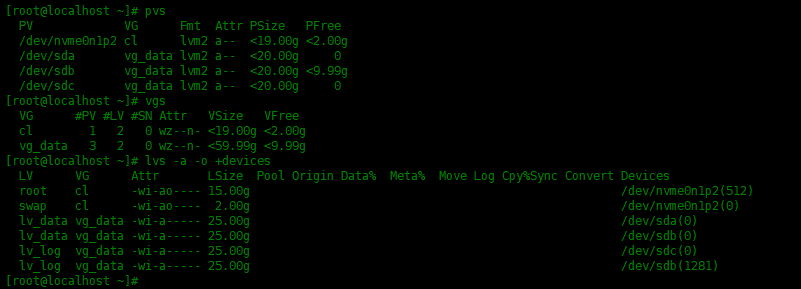

The allocation and usage information of logical volumes is listed below:

[root@localhost ~]# pvs

PV             VG      Fmt  Attr PSize   PFree

/dev/nvme0n1p2 cl      lvm2 a--  <19.00g <2.00g

/dev/sda       vg_data lvm2 a--  <20.00g     0

/dev/sdb       vg_data lvm2 a--  <20.00g <9.99g

/dev/sdc       vg_data lvm2 a--  <20.00g     0

[root@localhost ~]# vgs

VG      #PV #LV #SN Attr   VSize   VFree

cl        1   2   0 wz--n- <19.00g <2.00g

vg_data   3   2   0 wz--n- <59.99g <9.99g

[root@localhost ~]# lvs -a -o +devices

LV      VG      Attr       LSize  Pool Origin Data% Meta% Move Log Cpy%Sync Convert Devices

root    cl      -wi-ao---- 15.00g                                                  /dev/nvme0n1p2(512)

swap    cl      -wi-ao----  2.00g                                                  /dev/nvme0n1p2(0)

lv_data vg_data -wi-a----- 25.00g                                                  /dev/sda(0)

lv_data vg_data -wi-a----- 25.00g                                                  /dev/sdb(0)

lv_log  vg_data -wi-a----- 25.00g                                                  /dev/sdc(0)

lv_log  vg_data -wi-a----- 25.00g                                                  /dev/sdb(1281)
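The Devices column shows that both logical volumes have a segment on /dev/sdb, so losing that one disk affects both of them. The following is a minimal sketch for filtering that column by device. The helper name `lvs_on_device` is hypothetical, and the sketch assumes its input has the two-column form printed by `lvs --noheadings -o lv_name,devices`.

```shell
# lvs_on_device DEVICE
# Reads "LV DEVICES" pairs on stdin and prints each logical volume that has
# at least one segment on DEVICE. Hypothetical helper; the expected input is
#   lvs --noheadings -o lv_name,devices
lvs_on_device() {
    awk -v dev="$1" '
        {
            # A devices field looks like /dev/sdb(1281), possibly comma-separated.
            n = split($2, segs, ",")
            for (i = 1; i <= n; i++) {
                sub(/\([0-9]+\)$/, "", segs[i])   # drop the starting extent
                if (segs[i] == dev && !seen[$1]++) print $1
            }
        }'
}

# Rows copied from the lvs listing above, reduced to the two relevant columns.
sample='lv_data /dev/sda(0)
lv_data /dev/sdb(0)
lv_log /dev/sdc(0)
lv_log /dev/sdb(1281)'

printf '%s\n' "$sample" | lvs_on_device /dev/sdb
```

With the sample rows this prints lv_data and lv_log, one per line. On a live system the function would be fed from `lvs` directly instead of from the sample variable.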

Create two directories, /data and /logs, in the root directory, format the logical volumes, mount them, and store some data:

[root@localhost ~]# mkdir /data /logs

[root@localhost ~]# mkfs.xfs /dev/vg_data/lv_data

[root@localhost ~]# mkfs.xfs /dev/vg_data/lv_log

[root@localhost ~]# mount /dev/vg_data/lv_data /data

[root@localhost ~]# mount /dev/vg_data/lv_log /logs

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 880M 0 880M 0% /dev

tmpfs 897M 0 897M 0% /dev/shm

tmpfs 897M 8.7M 888M 1% /run

tmpfs 897M 0 897M 0% /sys/fs/cgroup

/dev/mapper/cl-root 15G 1.9G 14G 13% /

/dev/nvme0n1p1 976M 183M 726M 21% /boot

tmpfs 180M 0 180M 0% /run/user/0

/dev/mapper/vg_data-lv_log 25G 211M 25G 1% /data

[root@localhost ~]# touch /data/file{1..10}.txt

[root@localhost ~]# touch /logs/text{1..10}.log

Set disk as failed or delete disk

There are two scenarios: a physical volume is accidentally deleted by running the pvremove command, or a physical disk is removed from the virtual machine, which simulates a failed disk.

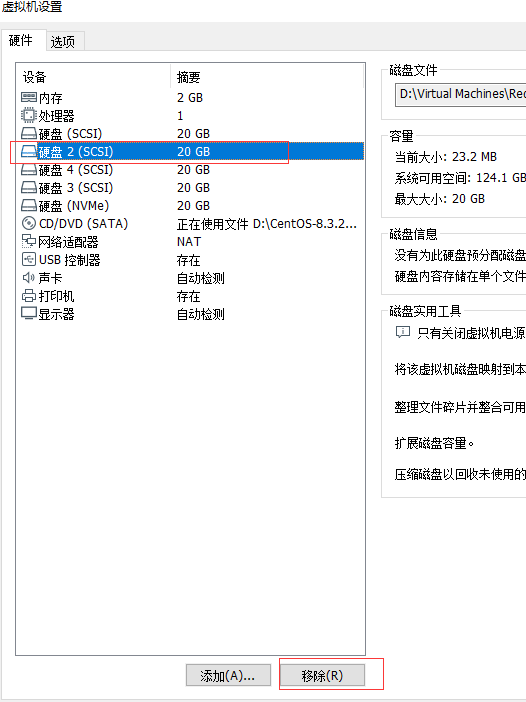

Next, remove a physical disk from the virtual machine:

After restarting the system, the lv_data and lv_log logical volumes cannot be mounted, and the vg_data volume group is not found in the /dev directory.

If the logical volumes are set to mount automatically at boot, then after the disk fails their file systems cannot be mounted and the system does not boot normally. In that case you have to enter single-user mode and comment out the entries for these logical volumes in /etc/fstab.
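Commenting out the fstab entries can be done by hand in an editor. As a sketch only, the edit could also be scripted as below. The helper name `comment_out_vg` is hypothetical, and the script assumes the entries refer to the volumes as /dev/VG/LV or /dev/mapper/VG-LV (the article does not show the actual fstab lines).

```shell
# comment_out_vg FSTAB VG
# Prefixes with "#" every active line of FSTAB whose device field refers to
# volume group VG, written either as /dev/VG/LV or as /dev/mapper/VG-LV.
# The original file is first copied to FSTAB.bak.
# Hypothetical helper. Caveat: device-mapper doubles any hyphen inside a VG
# name, so a VG name containing "-" would need extra handling.
comment_out_vg() {
    tmp="$(mktemp)" || return 1
    cp -- "$1" "$1.bak" || return 1
    awk -v vg="$2" '
        index($1, "/dev/" vg "/") == 1 || index($1, "/dev/mapper/" vg "-") == 1 {
            print "#" $0
            next
        }
        { print }
    ' "$1" > "$tmp" && cat "$tmp" > "$1"
    rm -f -- "$tmp"
}

# Intended use from single-user mode (not executed here):
#   comment_out_vg /etc/fstab vg_data
```

Writing the result back with `cat` keeps the ownership and permissions of the existing file, and the `.bak` copy makes it easy to restore the entries once the volume group is back.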

Add a new physical hard disk

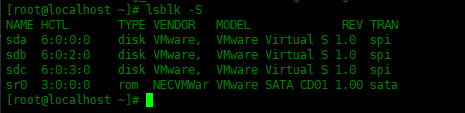

Next, add a new physical disk to the virtual machine. The newly added disk becomes /dev/sdc:

[root@localhost ~]# lsblk -S

NAME HCTL    TYPE VENDOR   MODEL            REV  TRAN

sda  6:0:0:0 disk VMware,  VMware Virtual S 1.0  spi

sdb  6:0:2:0 disk VMware,  VMware Virtual S 1.0  spi

sdc  6:0:3:0 disk VMware,  VMware Virtual S 1.0  spi

sr0  3:0:0:0 rom  NECVMWar VMware SATA CD01 1.00 sata

Recover metadata for deleted physical volumes

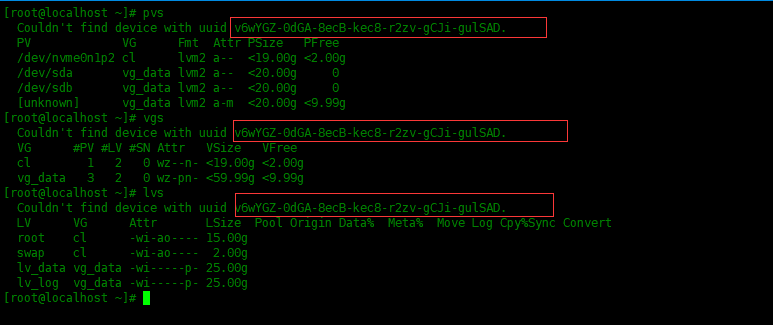

Now let's start restoring the metadata of the deleted physical volume. When you run the pvs, vgs, or lvs command, it warns that the device with a particular UUID is missing.
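The UUID can be copied from the warning by hand. As a small sketch, it can also be pulled out of the command output with a filter. The helper name `missing_pv_uuids` is hypothetical, and the sketch assumes the message wording "Couldn't find device with uuid ..." that appears in the pvcreate output later in this article.

```shell
# missing_pv_uuids
# Reads LVM command output on stdin and prints each UUID named in a
# "Couldn't find device with uuid ..." message, once, in order of appearance.
# Hypothetical helper; the message wording is taken from this article.
missing_pv_uuids() {
    sed -n "s/.*Couldn't find device with uuid \([A-Za-z0-9-]*\)\..*/\1/p" |
        awk '!seen[$0]++'
}

# Sample lines copied from the pvcreate output shown later in this article.
sample="Couldn't find device with uuid l5rSrt-SRfc-KQNw-Tm29-gx4D-ihBw-Xzum3L.
Couldn't find device with uuid v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD.
Physical volume \"/dev/sdc\" successfully created."

printf '%s\n' "$sample" | missing_pv_uuids

# On a live system the warnings arrive on stderr, so something like
#   pvs 2>&1 | missing_pv_uuids
# would be needed (not executed here).
```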

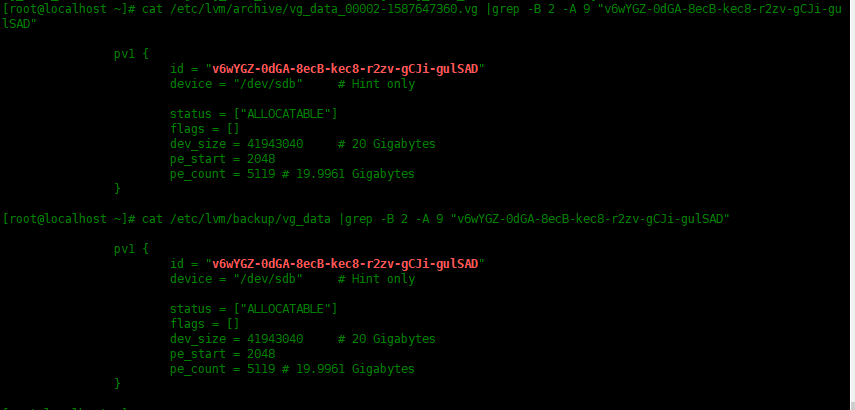

Copy the UUID and use grep to look it up in the archive and backup files. The output shows that this UUID belonged to the device that was /dev/sdb before the reboot.

[root@localhost ~]# cat /etc/lvm/archive/vg_data_00002-1587647360.vg |grep -B 2 -A 9 "v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD"

pv1 {

id = "v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD"

device = "/dev/sdb" # Hint only

status = ["ALLOCATABLE"]

flags = []

dev_size = 41943040 # 20 Gigabytes

pe_start = 2048

pe_count = 5119 # 19.9961 Gigabytes

}
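The size comments in this stanza can be checked with a little arithmetic. The sketch below assumes that dev_size is counted in 512-byte sectors and that the volume group uses a 4 MiB extent size. The extent size is an assumption, since the excerpt does not show it.

```shell
dev_size=41943040   # sectors, from the stanza above
pe_count=5119       # physical extents, from the stanza above
sector_bytes=512    # assumption: sizes are counted in 512-byte sectors
extent_mib=4        # assumption: 4 MiB extents, not shown in the excerpt

# Whole device: sectors * bytes per sector, converted to GiB.
echo "device: $(( dev_size * sector_bytes / 1024 / 1024 / 1024 )) GiB"

# Usable space: extents * extent size, converted from MiB to GiB.
awk -v n="$pe_count" -v e="$extent_mib" \
    'BEGIN { printf "usable: %.4f GiB\n", n * e / 1024 }'
```

Under these assumptions the two lines print 20 GiB and 19.9961 GiB, which agrees with the comments in the metadata. The small difference is space at the start of the device (pe_start) plus a remainder too small to form another extent.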

[root@localhost ~]# cat /etc/lvm/backup/vg_data |grep -B 2 -A 9 "v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD"

pv1 {

id = "v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD"

device = "/dev/sdb" # Hint only

status = ["ALLOCATABLE"]

flags = []

dev_size = 41943040 # 20 Gigabytes

pe_start = 2048

pe_count = 5119 # 19.9961 Gigabytes

}
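Both lookups return the same stanza. If only the device hint is of interest, the same information can be extracted with a short awk filter. This is a sketch: the helper name `pv_device_hint` is hypothetical, and it assumes the stanza layout shown above, with the `id` line followed by a `device` line inside the same block.

```shell
# pv_device_hint FILE UUID
# Prints the device hint recorded in the LVM metadata file FILE for the
# physical volume whose id is UUID. Prints nothing if the UUID is absent.
# Hypothetical helper, based on the stanza layout shown above.
pv_device_hint() {
    awk -v uuid="$2" '
        $1 == "id" && $3 == "\"" uuid "\"" { found = 1; next }
        found && $1 == "device" { gsub(/"/, "", $3); print $3; exit }
        found && $1 == "}" { exit }
    ' "$1"
}

# Intended use (not executed here):
#   pv_device_hint /etc/lvm/backup/vg_data "v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD"
```

The value is only a hint, as the comment in the metadata says: it records the device name at the time the file was written, which may differ after a reboot.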

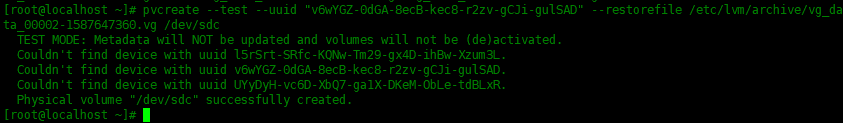

Let's start the trial run with pvcreate --test:

[root@localhost ~]# pvcreate --test --uuid "v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD" --restorefile /etc/lvm/archive/vg_data_00002-1587647360.vg /dev/sdc

TEST MODE: Metadata will NOT be updated and volumes will not be (de)activated.

Couldn't find device with uuid l5rSrt-SRfc-KQNw-Tm29-gx4D-ihBw-Xzum3L.

Couldn't find device with uuid v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD.

Couldn't find device with uuid UYyDyH-vc6D-XbQ7-ga1X-DKeM-ObLe-tdBLxR.

Physical volume "/dev/sdc" successfully created.

- --test performs a dry run: the command goes through its steps, but no metadata is updated.

- --uuid specifies the UUID of the newly created physical volume. If this option is omitted, a random UUID is generated. The UUID specified in this experiment is that of the previously deleted physical volume.

- --restorefile reads the metadata file produced by vgcfgbackup (here, an archive file) so that the new physical volume is laid out consistently with it.
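Because a mistyped UUID would create a physical volume that the volume group does not recognise, it can be worth checking the string before passing it to --uuid. The following is a sketch only. The helper name `is_lvm_uuid` is hypothetical, and the pattern assumes the 6-4-4-4-4-4-6 grouping of letters and digits seen in the UUIDs in this article.

```shell
# is_lvm_uuid STRING
# Succeeds if STRING has the 6-4-4-4-4-4-6 grouping of letters and digits
# seen in the UUIDs in this article. Hypothetical helper; it checks the
# format only, not whether the UUID exists in any metadata file.
is_lvm_uuid() {
    printf '%s\n' "$1" |
        grep -Eq '^[A-Za-z0-9]{6}(-[A-Za-z0-9]{4}){5}-[A-Za-z0-9]{6}$'
}

uuid="v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD"

if is_lvm_uuid "$uuid"; then
    echo "UUID format looks valid"
    # The dry run from this article would follow here (not executed):
    #   pvcreate --test --uuid "$uuid" \
    #       --restorefile /etc/lvm/archive/vg_data_00002-1587647360.vg /dev/sdc
else
    echo "UUID format looks wrong: $uuid" >&2
fi
```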

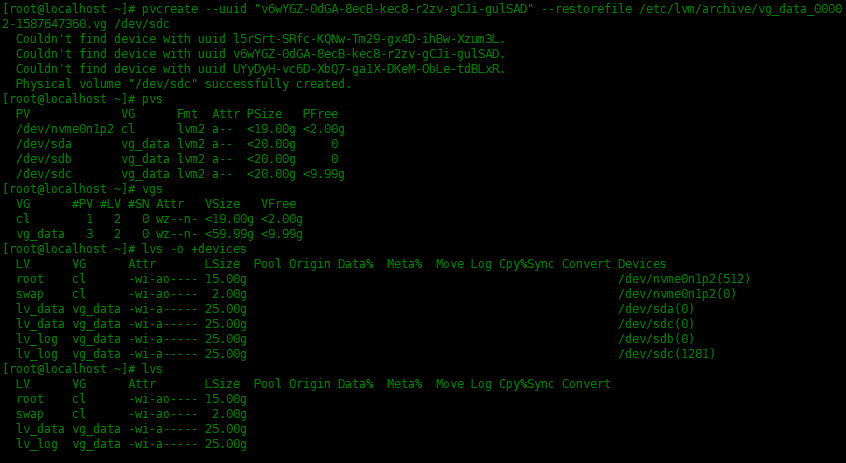

Remove the --test option and create the physical volume for real:

[root@localhost ~]# pvcreate --uuid "v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD" --restorefile /etc/lvm/archive/vg_data_00002-1587647360.vg /dev/sdc

Couldn't find device with uuid l5rSrt-SRfc-KQNw-Tm29-gx4D-ihBw-Xzum3L.

Couldn't find device with uuid v6wYGZ-0dGA-8ecB-kec8-r2zv-gCJi-gulSAD.

Couldn't find device with uuid UYyDyH-vc6D-XbQ7-ga1X-DKeM-ObLe-tdBLxR.

Physical volume "/dev/sdc" successfully created.

[root@localhost ~]# pvs

PV             VG      Fmt  Attr PSize   PFree

/dev/nvme0n1p2 cl      lvm2 a--  <19.00g <2.00g

/dev/sda       vg_data lvm2 a--  <20.00g     0

/dev/sdb       vg_data lvm2 a--  <20.00g     0

/dev/sdc       vg_data lvm2 a--  <20.00g <9.99g

[root@localhost ~]# vgs

VG      #PV #LV #SN Attr   VSize   VFree

cl        1   2   0 wz--n- <19.00g <2.00g

vg_data   3   2   0 wz--n- <59.99g <9.99g

[root@localhost ~]# lvs -o +devices

LV      VG      Attr       LSize  Pool Origin Data% Meta% Move Log Cpy%Sync Convert Devices

root    cl      -wi-ao---- 15.00g                                                  /dev/nvme0n1p2(512)

swap    cl      -wi-ao----  2.00g                                                  /dev/nvme0n1p2(0)

lv_data vg_data -wi-a----- 25.00g                                                  /dev/sda(0)

lv_data vg_data -wi-a----- 25.00g                                                  /dev/sdc(0)

lv_log  vg_data -wi-a----- 25.00g                                                  /dev/sdb(0)

lv_log  vg_data -wi-a----- 25.00g                                                  /dev/sdc(1281)

Recover the volume group

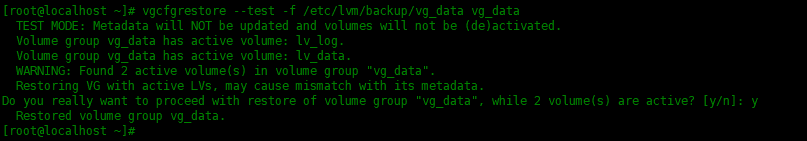

Then use the LVM backup to restore the volume group. First, add --test for a trial run:

[root@localhost ~]# vgcfgrestore --test -f /etc/lvm/backup/vg_data vg_data

TEST MODE: Metadata will NOT be updated and volumes will not be (de)activated.

Volume group vg_data has active volume: lv_log.

Volume group vg_data has active volume: lv_data.

WARNING: Found 2 active volume(s) in volume group "vg_data".

Restoring VG with active LVs, may cause mismatch with its metadata.

Do you really want to proceed with restore of volume group "vg_data", while 2 volume(s) are active? [y/n]: y

Restored volume group vg_data.

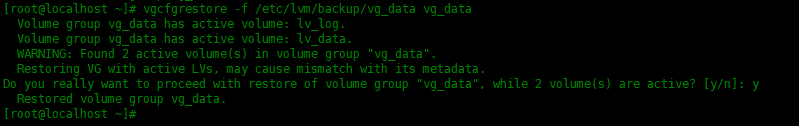

Now remove the --test option and run the restore for real:

[root@localhost ~]# vgcfgrestore -f /etc/lvm/backup/vg_data vg_data

Volume group vg_data has active volume: lv_log.

Volume group vg_data has active volume: lv_data.

WARNING: Found 2 active volume(s) in volume group "vg_data".

Restoring VG with active LVs, may cause mismatch with its metadata.

Do you really want to proceed with restore of volume group "vg_data", while 2 volume(s) are active? [y/n]: y

Restored volume group vg_data.

If the volume group and its logical volumes are not active, activate them with the following commands:

# Scan volume group

[root@localhost ~]# vgscan

Reading all physical volumes. This may take a while...

Found volume group "cl" using metadata type lvm2

Found volume group "vg_data" using metadata type lvm2

# Activate volume group vg_data

[root@localhost ~]# vgchange -ay vg_data

2 logical volume(s) in volume group "vg_data" now active

# Scan logical volumes

[root@localhost ~]# lvscan

ACTIVE '/dev/cl/swap' [2.00 GiB] inherit

ACTIVE '/dev/cl/root' [15.00 GiB] inherit

ACTIVE '/dev/vg_data/lv_data' [25.00 GiB] inherit

ACTIVE '/dev/vg_data/lv_log' [25.00 GiB] inherit
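To confirm that nothing was left inactive, the lvscan output can be filtered for volumes whose state is not ACTIVE. The following is a minimal sketch. The helper name `inactive_lvs` is hypothetical, and it assumes lvscan marks such volumes with the word "inactive" in the first column. The sample line with an inactive volume is illustrative, not taken from this system.

```shell
# inactive_lvs
# Reads lvscan output on stdin and prints the path of every logical volume
# whose first column is "inactive". Hypothetical helper.
inactive_lvs() {
    awk -v q="'" '$1 == "inactive" { gsub(q, "", $2); print $2 }'
}

# Illustrative input: the first line is from the listing above, the second
# is a made-up example of an inactive volume.
sample="ACTIVE '/dev/vg_data/lv_data' [25.00 GiB] inherit
inactive '/dev/vg_data/lv_log' [25.00 GiB] inherit"

printf '%s\n' "$sample" | inactive_lvs

# On a live system (not executed here):
#   lvscan | inactive_lvs
```

An empty result from the live command would indicate that every logical volume reported by lvscan is active.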

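Summary

In LVM, a deleted physical volume can be recovered in a few steps: add a new disk, create a physical volume on it with the UUID of the lost one, and restore the volume group metadata from the backup. These steps restore the LVM metadata only. If the disk itself was replaced, the data that was stored on it is not brought back.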