Ribbon is Netflix's open-source, client-side load-balancing component. It is a very important module in the Spring Cloud family and also one of its more complex modules, and it directly affects the quality and performance of service scheduling. A thorough grasp of Ribbon helps us understand every link that must be considered in service scheduling when microservices run as a distributed cluster.

This article analyzes Ribbon's design principles in detail to give you a better understanding of Spring Cloud.

I. Ribbon's working structure under Spring integration

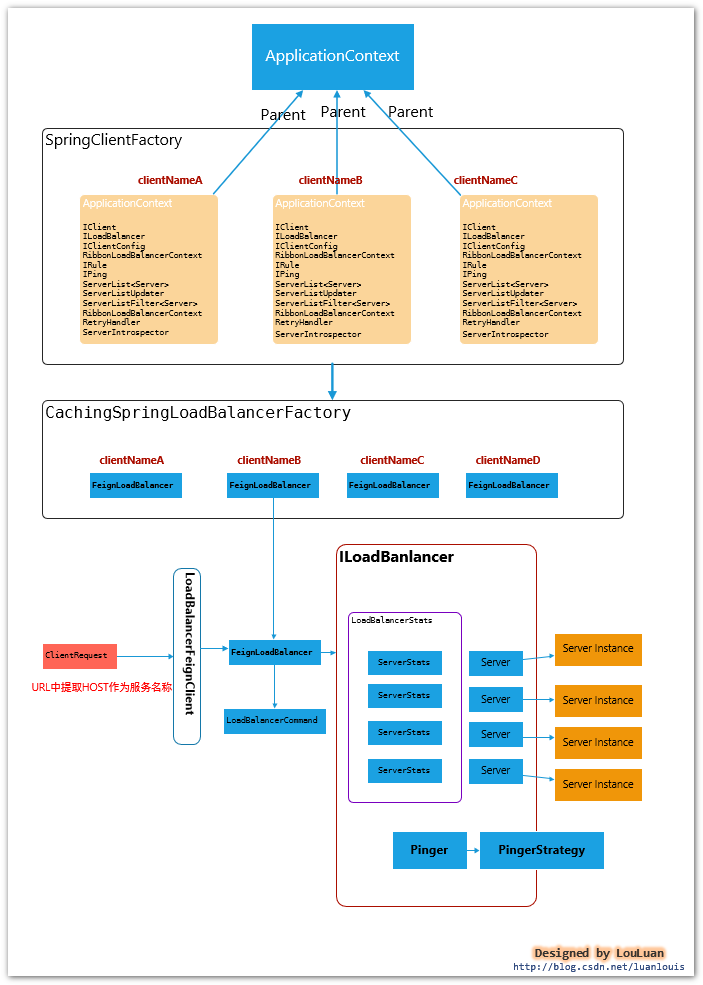

The overview diagram above shows how Spring integrates Ribbon. The key points are as follows.

1. Ribbon service configuration

Each service (client) has its own Spring ApplicationContext, which loads the instances for that service.

For example, there are several services in the current Spring Cloud system:

| Service name | Role | Dependent services |
|---|---|---|
| order | Order module | user |
| user | User module | none |
| mobile-bff | Mobile BFF | order, user |

When the mobile-bff service runs, it calls the order and user modules. Within mobile-bff's Spring context, one child ApplicationContext is created for order and another for user, and each loads the configuration of its own service module. In other words, each client's configuration is independent of the others.
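Conceptually, this behaves like a registry that lazily creates one isolated context per service name. In Spring Cloud Netflix, a named-context factory (`SpringClientFactory`) fills that role. The sketch below does not use Spring at all. `ClientContext` and `ClientContextRegistry` are hypothetical names, and a `Properties` object stands in for a real child ApplicationContext.

```java
import java.util.Map;
import java.util.Properties;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for a per-client child context: it holds only that client's settings.
final class ClientContext {
    private final String clientName;
    private final Properties config = new Properties();

    ClientContext(String clientName) {
        this.clientName = clientName;
    }

    String clientName() {
        return clientName;
    }

    Properties config() {
        return config;
    }
}

// Hypothetical registry: one isolated context per service name, created lazily on first use.
final class ClientContextRegistry {
    private final Map<String, ClientContext> contexts = new ConcurrentHashMap<>();

    ClientContext contextFor(String serviceName) {
        // computeIfAbsent guarantees a single context per name, even under concurrent access
        return contexts.computeIfAbsent(serviceName, ClientContext::new);
    }

    int size() {
        return contexts.size();
    }
}
```

For mobile-bff, `contextFor("order")` and `contextFor("user")` return two separate contexts. A setting written into one is never visible through the other.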

2. Integration mode with Feign

When Feign is used as the client, the request is ultimately turned into a URL of the form `http://<service name>/<relative path>`. LoadBalancerFeignClient extracts the service ID (`<service name>`) from it and then uses that name to look up the service's load balancer, FeignLoadBalancer, in the context. The load balancer keeps statistics on the existing service instances and selects the most appropriate one.
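Below is a minimal sketch of the URL handling just described. It assumes the service name is simply the host part of the URL. `ServiceBalancer` and `FeignUrlRouter` are hypothetical names, not Spring Cloud or Ribbon APIs, and the balancer returns a plain `host:port` string instead of a Server.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for a per-service load balancer.
interface ServiceBalancer {
    String choose(); // returns "host:port" of the selected instance
}

final class FeignUrlRouter {
    private final Map<String, ServiceBalancer> balancers = new HashMap<>();

    void register(String serviceName, ServiceBalancer balancer) {
        balancers.put(serviceName, balancer);
    }

    // For http://<service name>/<path>, the URI host is the service ID.
    static String serviceIdOf(String url) {
        String host = URI.create(url).getHost();
        if (host == null) {
            throw new IllegalArgumentException("No service name in " + url);
        }
        return host;
    }

    // Rewrites the logical URL to a concrete instance chosen by that service's balancer.
    String route(String url) {
        URI uri = URI.create(url);
        String serviceId = serviceIdOf(url);
        ServiceBalancer lb = balancers.get(serviceId);
        if (lb == null) {
            throw new IllegalStateException("No load balancer for " + serviceId);
        }
        String path = uri.getRawPath() == null ? "" : uri.getRawPath();
        String query = uri.getRawQuery() == null ? "" : "?" + uri.getRawQuery();
        return uri.getScheme() + "://" + lb.choose() + path + query;
    }
}
```

`java.net.URI` returns a null host for names that are not valid hostnames, for example ones containing an underscore. This sketch would therefore reject a service ID like `user_service`.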

II. Implementation of the Feign integration in Spring Cloud

When combined with Feign, each Feign call is wrapped in a LoadBalancerCommand, and the request is then sent through RxJava in a reactive, event-driven style. The Spring Cloud framework extracts the service name from the URL of the Feign request and finds that service's load balancer (the FeignLoadBalancer implementation) in the context. It then uses the load balancer to select an appropriate Server instance and forwards the request to that instance to complete the call. Along the way, it records call statistics for the chosen Server instance. The core of this logic is LoadBalancerCommand.submit:

```java
/**
 * Create an {@link Observable} that once subscribed execute network call asynchronously with a server chosen by load balancer.
 * If there are any errors that are indicated as retriable by the {@link RetryHandler}, they will be consumed internally by the
 * function and will not be observed by the {@link Observer} subscribed to the returned {@link Observable}. If number of retries
 * exceeds the maximal allowed, a final error will be emitted by the returned {@link Observable}. Otherwise, the first successful
 * result during execution and retries will be emitted.
 */
public Observable<T> submit(final ServerOperation<T> operation) {
    final ExecutionInfoContext context = new ExecutionInfoContext();

    if (listenerInvoker != null) {
        try {
            listenerInvoker.onExecutionStart();
        } catch (AbortExecutionException e) {
            return Observable.error(e);
        }
    }

    // Maximum number of retries on the same Server
    final int maxRetrysSame = retryHandler.getMaxRetriesOnSameServer();
    // Maximum number of retries on the next Server
    final int maxRetrysNext = retryHandler.getMaxRetriesOnNextServer();

    // Use the load balancer to select an appropriate Server, execute the request against it,
    // and fold the request data and behavior into that Server's ServerStats
    Observable<T> o =
        (server == null ? selectServer() : Observable.just(server))
        .concatMap(new Func1<Server, Observable<T>>() {
            @Override
            // Called for each server being selected
            public Observable<T> call(Server server) {
                context.setServer(server);
                // Get the statistics for this Server
                final ServerStats stats = loadBalancerContext.getServerStats(server);

                // Called for each attempt and retry
                Observable<T> o = Observable
                    .just(server)
                    .concatMap(new Func1<Server, Observable<T>>() {
                        @Override
                        public Observable<T> call(final Server server) {
                            context.incAttemptCount();                     // attempt count
                            loadBalancerContext.noteOpenConnection(stats); // active-connection statistics

                            if (listenerInvoker != null) {
                                try {
                                    listenerInvoker.onStartWithServer(context.toExecutionInfo());
                                } catch (AbortExecutionException e) {
                                    return Observable.error(e);
                                }
                            }

                            // The execution tracer records the execution time
                            final Stopwatch tracer = loadBalancerContext.getExecuteTracer().start();

                            // With a server chosen, execute the request;
                            // once the underlying call produces a result, process the notifications
                            return operation.call(server).doOnEach(new Observer<T>() {
                                private T entity;

                                @Override
                                public void onCompleted() {
                                    recordStats(tracer, stats, entity, null); // record statistics
                                }

                                @Override
                                public void onError(Throwable e) {
                                    recordStats(tracer, stats, null, e);      // record the failure
                                    logger.debug("Got error {} when executed on server {}", e, server);
                                    if (listenerInvoker != null) {
                                        listenerInvoker.onExceptionWithServer(e, context.toExecutionInfo());
                                    }
                                }

                                @Override
                                public void onNext(T entity) {
                                    this.entity = entity;                     // keep the result value
                                    if (listenerInvoker != null) {
                                        listenerInvoker.onExecutionSuccess(entity, context.toExecutionInfo());
                                    }
                                }

                                private void recordStats(Stopwatch tracer, ServerStats stats, Object entity, Throwable exception) {
                                    tracer.stop(); // stop timing
                                    // Mark the request as finished and update the statistics
                                    loadBalancerContext.noteRequestCompletion(stats, entity, exception, tracer.getDuration(TimeUnit.MILLISECONDS), retryHandler);
                                }
                            });
                        }
                    });

                // On failure, retry on the same server according to the retry policy;
                // retryPolicy(...) supplies the predicate that decides whether to retry
                if (maxRetrysSame > 0)
                    o = o.retry(retryPolicy(maxRetrysSame, true));
                return o;
            }
        });

    // Retry on the next server, also driven by the retry policy
    if (maxRetrysNext > 0 && server == null)
        o = o.retry(retryPolicy(maxRetrysNext, false));

    return o.onErrorResumeNext(new Func1<Throwable, Observable<T>>() {
        @Override
        public Observable<T> call(Throwable e) {
            if (context.getAttemptCount() > 0) {
                if (maxRetrysNext > 0 && context.getServerAttemptCount() == (maxRetrysNext + 1)) {
                    e = new ClientException(ClientException.ErrorType.NUMBEROF_RETRIES_NEXTSERVER_EXCEEDED,
                            "Number of retries on next server exceeded max " + maxRetrysNext
                            + " retries, while making a call for: " + context.getServer(), e);
                }
                else if (maxRetrysSame > 0 && context.getAttemptCount() == (maxRetrysSame + 1)) {
                    e = new ClientException(ClientException.ErrorType.NUMBEROF_RETRIES_EXEEDED,
                            "Number of retries exceeded max " + maxRetrysSame
                            + " retries, while making a call for: " + context.getServer(), e);
                }
            }
            if (listenerInvoker != null) {
                listenerInvoker.onExecutionFailed(e, context.toFinalExecutionInfo());
            }
            return Observable.error(e);
        }
    });
}
```
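The two `retry` calls above form two nested retry loops in the Observable chain:

- An inner loop on the same server, with up to `maxRetrysSame` extra attempts.
- An outer loop that moves to the next server, with up to `maxRetrysNext` extra servers.

The synchronous sketch below reproduces only that counting. It makes two simplifying assumptions: every exception is treated as retriable (the real code asks the RetryHandler), and it walks a fixed list of servers instead of asking the load balancer for each one. `RetrySketch` is a hypothetical name.

```java
import java.util.List;
import java.util.function.Function;

// Hypothetical, synchronous model of the retry counting in submit().
final class RetrySketch {
    static <T> T execute(List<String> servers, int maxRetriesSame, int maxRetriesNext,
                         Function<String, T> call) {
        RuntimeException last = null;
        // Outer loop: the first server plus up to maxRetriesNext further servers.
        int serversToTry = Math.min(servers.size(), maxRetriesNext + 1);
        for (int s = 0; s < serversToTry; s++) {
            String server = servers.get(s);
            // Inner loop: the first attempt plus up to maxRetriesSame retries on this server.
            for (int attempt = 0; attempt <= maxRetriesSame; attempt++) {
                try {
                    return call.apply(server);
                } catch (RuntimeException e) {
                    last = e; // the real code would ask the RetryHandler whether e is retriable
                }
            }
        }
        throw new IllegalStateException("All attempts failed", last);
    }
}
```

Take three servers where only the third succeeds, with `maxRetriesSame = 1` and `maxRetriesNext = 2`. Each failing server is called twice and the last one once, five calls in total.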

Selecting an appropriate Server from the server list (LoadBalancerContext.getServerFromLoadBalancer):

```java
/**
 * Compute the final URI from a partial URI in the request. The following steps are performed:
 * <ul>
 * <li> If the host is missing and there is a load balancer, get the host/port from the server chosen by the load balancer
 * <li> If the host is missing and there is no load balancer, try to derive the host/port from the virtual address set for the client
 * <li> If the host is present, the authority part of the URI is a virtual address set for the client, and there is a load balancer,
 *      get the host/port from the server chosen by the load balancer (the specified host is ignored)
 * <li> If the host is present but none of the above applies, interpret the host as the actual physical address
 * <li> If the host is missing and none of the above applies, throw ClientException
 * </ul>
 *
 * @param original Original URI passed from caller
 */
public Server getServerFromLoadBalancer(@Nullable URI original, @Nullable Object loadBalancerKey) throws ClientException {
    String host = null;
    int port = -1;
    if (original != null) {
        host = original.getHost();
    }
    if (original != null) {
        Pair<String, Integer> schemeAndPort = deriveSchemeAndPortFromPartialUri(original);
        port = schemeAndPort.second();
    }

    // Various Supported Cases
    // The loadbalancer to use and the instances it has is based on how it was registered
    // In each of these cases, the client might come in using Full Url or Partial URL
    ILoadBalancer lb = getLoadBalancer();
    if (host == null) {
        // Partial URI (or no URI at all): there is no host
        // well we have to just get the right instances from lb - or we fall back
        if (lb != null){
            Server svc = lb.chooseServer(loadBalancerKey); // Select a Server using the load balancer
            if (svc == null){
                throw new ClientException(ClientException.ErrorType.GENERAL,
                        "Load balancer does not have available server for client: "
                                + clientName);
            }
            // Take the host from the Server chosen by the load balancer
            host = svc.getHost();
            if (host == null){
                throw new ClientException(ClientException.ErrorType.GENERAL,
                        "Invalid Server for :" + svc);
            }
            logger.debug("{} using LB returned Server: {} for request {}", new Object[]{clientName, svc, original});
            return svc;
        } else {
            // No Full URL - and we dont have a LoadBalancer registered to
            // obtain a server
            // if we have a vipAddress that came with the registration, we
            // can use that else we
            // bail out
            // Resolve the host from the virtual address configuration
            if (vipAddresses != null && vipAddresses.contains(",")) {
                throw new ClientException(
                        ClientException.ErrorType.GENERAL,
                        "Method is invoked for client " + clientName + " with partial URI of ("
                        + original
                        + ") with no load balancer configured."
                        + " Also, there are multiple vipAddresses and hence no vip address can be chosen"
                        + " to complete this partial uri");
            } else if (vipAddresses != null) {
                try {
                    Pair<String,Integer> hostAndPort = deriveHostAndPortFromVipAddress(vipAddresses);
                    host = hostAndPort.first();
                    port = hostAndPort.second();
                } catch (URISyntaxException e) {
                    throw new ClientException(
                            ClientException.ErrorType.GENERAL,
                            "Method is invoked for client " + clientName + " with partial URI of ("
                            + original
                            + ") with no load balancer configured. "
                            + " Also, the configured/registered vipAddress is unparseable (to determine host and port)");
                }
            } else {
                throw new ClientException(
                        ClientException.ErrorType.GENERAL,
                        this.clientName
                        + " has no LoadBalancer registered and passed in a partial URL request (with no host:port)."
                        + " Also has no vipAddress registered");
            }
        }
    } else {
        // Full URL case: the URL specifies a full address, which may be a virtual address or hostAndPort
        // This could either be a vipAddress or a hostAndPort or a real DNS
        // if vipAddress or hostAndPort, we just have to consult the loadbalancer
        // but if it does not return a server, we should just proceed anyways
        // and assume its a DNS
        // For restClients registered using a vipAddress AND executing a request
        // by passing in the full URL (including host and port), we should only
        // consult lb IFF the URL passed is registered as vipAddress in Discovery
        boolean shouldInterpretAsVip = false;

        if (lb != null) {
            shouldInterpretAsVip = isVipRecognized(original.getAuthority());
        }
        if (shouldInterpretAsVip) {
            Server svc = lb.chooseServer(loadBalancerKey);
            if (svc != null){
                host = svc.getHost();
                if (host == null){
                    throw new ClientException(ClientException.ErrorType.GENERAL,
                            "Invalid Server for :" + svc);
                }
                logger.debug("using LB returned Server: {} for request: {}", svc, original);
                return svc;
            } else {
                // just fall back as real DNS
                logger.debug("{}:{} assumed to be a valid VIP address or exists in the DNS", host, port);
            }
        } else {
            // consult LB to obtain vipAddress backed instance given full URL
            // Full URL execute request - where url!=vipAddress
            logger.debug("Using full URL passed in by caller (not using load balancer): {}", original);
        }
    }
    // end of creating final URL
    if (host == null){
        throw new ClientException(ClientException.ErrorType.GENERAL,"Request contains no HOST to talk to");
    }
    // just verify that at this point we have a full URL

    return new Server(host, port);
}
```
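The decision logic above boils down to a few rules. The sketch below is a simplified model under three assumptions:

- `host:port` strings stand in for Server objects.
- A `Supplier` stands in for ILoadBalancer.
- The branch that derives the host from a single configured vipAddress when no load balancer exists is deliberately left out.

`HostResolver` is a hypothetical name.

```java
import java.net.URI;
import java.util.Set;
import java.util.function.Supplier;

// Simplified model of the host-resolution rules; "host:port" strings stand in for Server objects.
final class HostResolver {
    private final Supplier<String> loadBalancer; // null means "no load balancer configured"
    private final Set<String> vipAddresses;      // authorities registered as virtual (service) addresses

    HostResolver(Supplier<String> loadBalancer, Set<String> vipAddresses) {
        this.loadBalancer = loadBalancer;
        this.vipAddresses = vipAddresses;
    }

    String resolve(URI original) {
        String host = original.getHost();
        if (host == null) {
            // Partial URI: only the load balancer can supply a host.
            if (loadBalancer == null) {
                throw new IllegalStateException("Partial URI and no load balancer: " + original);
            }
            String chosen = loadBalancer.get();
            if (chosen == null) {
                throw new IllegalStateException("Load balancer has no available server");
            }
            return chosen;
        }
        if (loadBalancer != null && vipAddresses.contains(original.getAuthority())) {
            // Full URI whose authority is a registered VIP: ask the load balancer.
            String chosen = loadBalancer.get();
            if (chosen != null) {
                return chosen;
            }
            // No server returned: fall through and treat the host as a real DNS name.
        }
        return original.getPort() == -1 ? host : host + ":" + original.getPort();
    }
}
```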

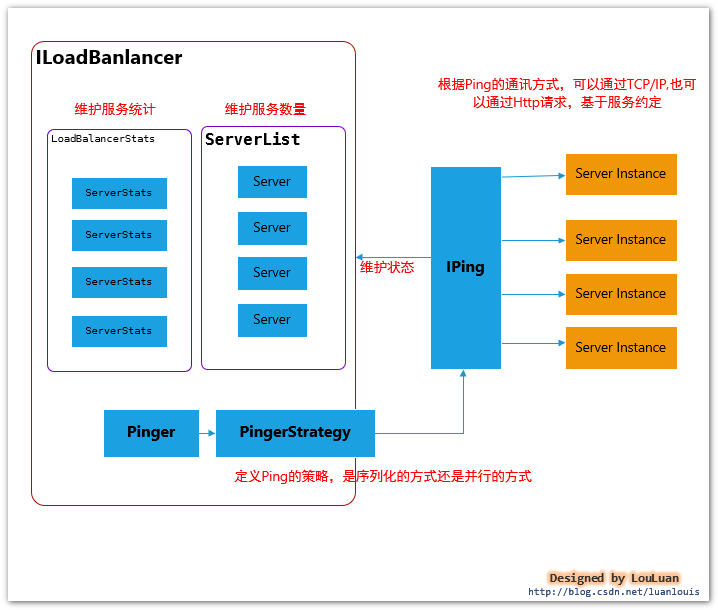

III. LoadBalancer: the core of load balancing

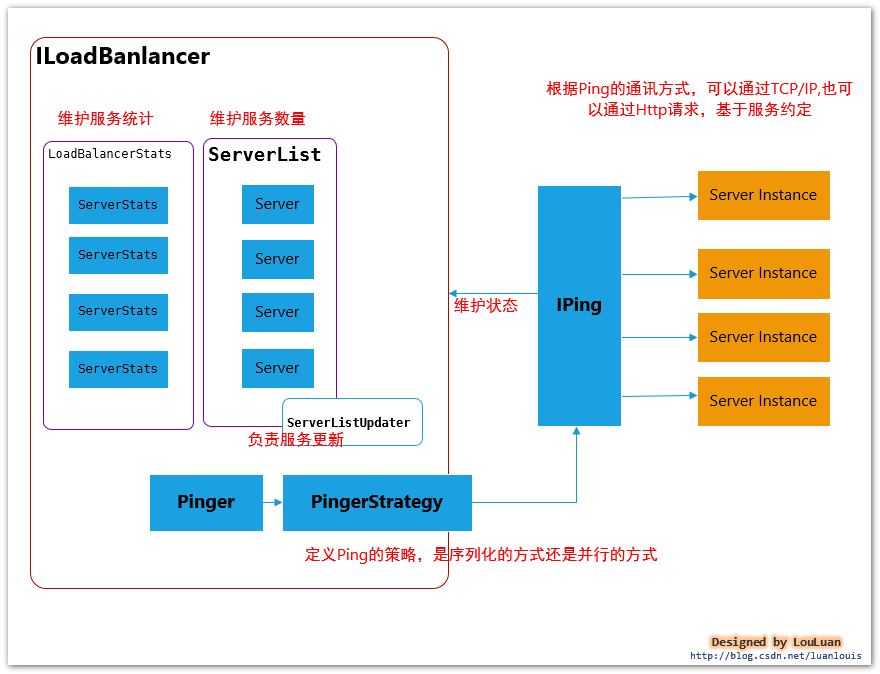

LoadBalancer has three main functions:

- Maintain the Server list (add, update, delete, etc.)

- Maintain the status of the Servers in the list (status updates)

- Return the most appropriate Server instance when one is requested

This chapter will elaborate on these three aspects.

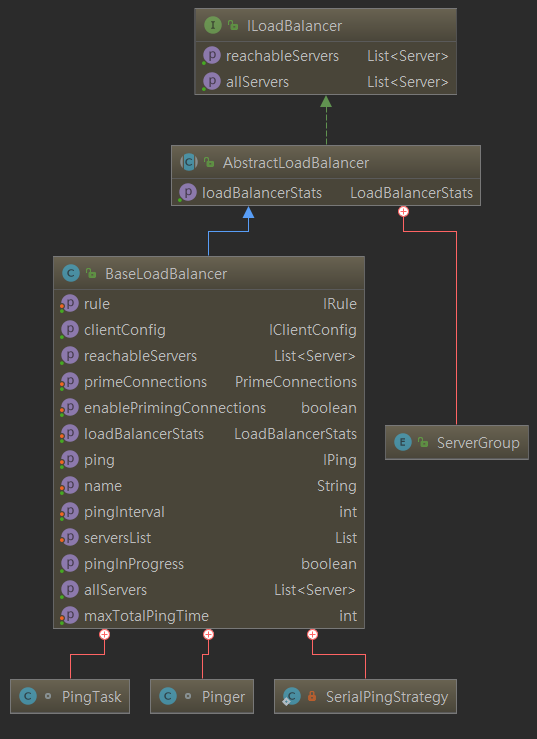

3.1 How the load balancer works internally

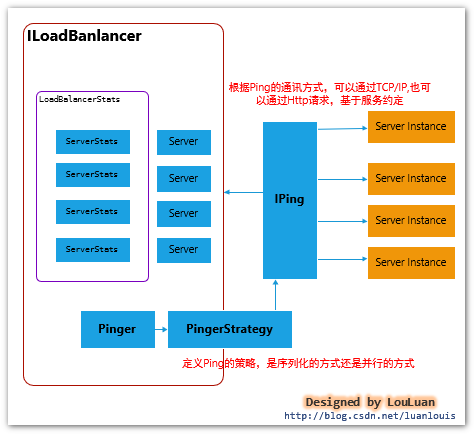

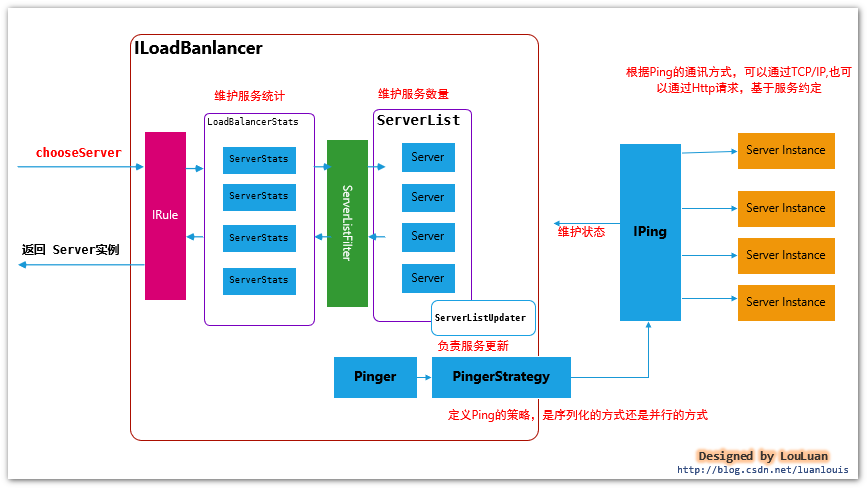

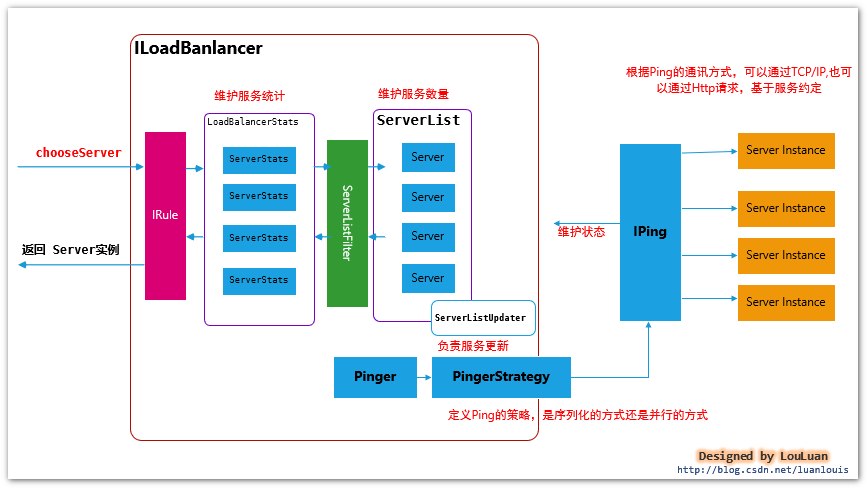

First, get familiar with the components shown in the load balancer's implementation diagram:

| Component | Function |
|---|---|
| Server | Represents a service instance and records its information, such as the service address, zone, service name and instance ID |
| ServerStats | Runtime statistics for the corresponding Server collected during service invocation, such as average response time, cumulative failure count and circuit-breaker timing. Each ServerStats instance corresponds to exactly one Server instance |
| LoadBalancerStats | Container that maintains all ServerStats instances in one place |
| ServerListUpdater | Used by the load balancer to update the ServerList, for example through a scheduled task that fetches the latest Server instance list at regular intervals |
| Pinger | Service status checker that maintains the status of the Servers in the ServerList. Note: Pinger is only responsible for Server status; it cannot decide to delete a Server |
| IPingStrategy | Defines how the servers are checked, for example sequentially or in parallel |
| IPing | Defines how to check whether a service is available; a common approach is to send an HTTP or TCP/IP request |
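To make the ServerStats/LoadBalancerStats relationship concrete, here is a simplified sketch. The method names echo `noteOpenConnection` and `noteRequestCompletion` from the LoadBalancerContext calls in section II, but `SimpleServerStats` and `SimpleLoadBalancerStats` are hypothetical classes. Circuit-breaker timing is left out. The average is only approximately consistent under concurrent updates, because it reads two counters separately.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical, simplified counterpart of ServerStats: one instance per server.
final class SimpleServerStats {
    private final AtomicInteger activeRequests = new AtomicInteger();
    private final AtomicLong completedRequests = new AtomicLong();
    private final AtomicLong failedRequests = new AtomicLong();
    private final AtomicLong totalResponseMillis = new AtomicLong();

    // Called when a request to this server starts.
    void noteOpenConnection() {
        activeRequests.incrementAndGet();
    }

    // Called when the request finishes, successfully or not.
    void noteRequestCompletion(long durationMillis, boolean failed) {
        activeRequests.decrementAndGet();
        completedRequests.incrementAndGet();
        totalResponseMillis.addAndGet(durationMillis);
        if (failed) {
            failedRequests.incrementAndGet();
        }
    }

    int activeRequests() {
        return activeRequests.get();
    }

    long failedRequests() {
        return failedRequests.get();
    }

    double averageResponseMillis() {
        long n = completedRequests.get();
        return n == 0 ? 0.0 : (double) totalResponseMillis.get() / n;
    }
}

// Hypothetical counterpart of LoadBalancerStats: a container keyed by server address.
final class SimpleLoadBalancerStats {
    private final Map<String, SimpleServerStats> byServer = new ConcurrentHashMap<>();

    SimpleServerStats statsFor(String server) {
        return byServer.computeIfAbsent(server, s -> new SimpleServerStats());
    }
}
```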

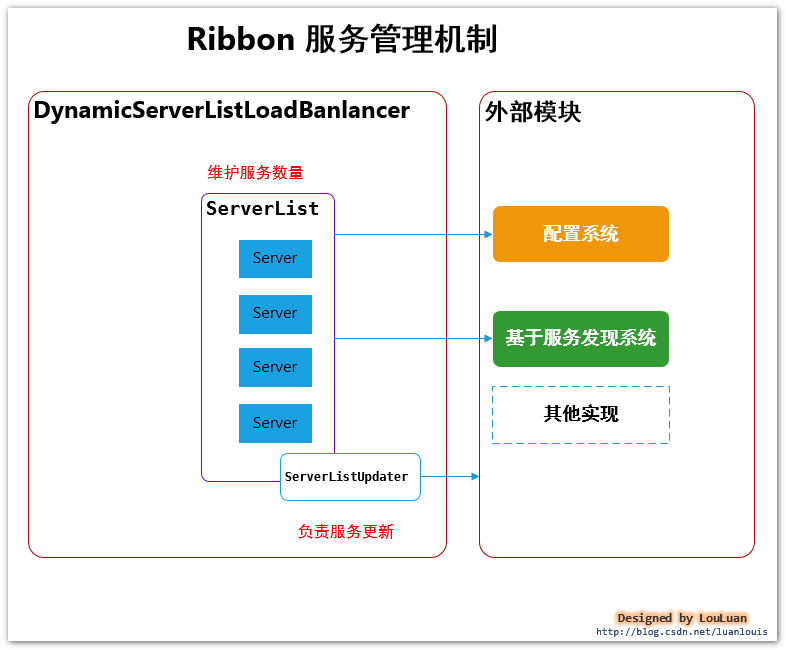

3.2 How is the Server list maintained (add, update, delete)?

From the perspective of service list maintenance, Ribbon is structured as follows:

In terms of implementation, the Server list can be maintained in two ways:

1. Service list based on configuration

This approach generally configures the server list statically in a configuration file. It is relatively simple, but the list does not have to stay fixed while the application runs. Netflix built its Spring Cloud components on Netflix Archaius, a distributed configuration framework that dynamically monitors configuration changes and pushes them to applications. So when the configured service list is modified while the service is running, the new list takes effect without a restart.

2. Dynamically maintaining the service list from the registration information of a service discovery component (such as Eureka)

In the Spring Cloud framework, service registration and discovery is an essential component of a distributed service cluster: it maintains the service instances (registration, renewal and deregistration). This article describes how, when integrated with Eureka, Ribbon refreshes its service list dynamically from Eureka's registration information.

The configuration item `<service-name>.ribbon.NIWSServerListClassName` determines which implementation is used:

| Strategy | ServerList implementation |
|---|---|
| Configuration based | `com.netflix.loadbalancer.ConfigurationBasedServerList` |
| Service discovery based | `com.netflix.niws.loadbalancer.DiscoveryEnabledNIWSServerList` |
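With the configuration-based ServerList, each `<service-name>.ribbon.listOfServers` entry is a `hostname:port` string. The sketch below shows only the parsing step:

- `ConfiguredServer` and `ListOfServersParser` are hypothetical.
- The port for entries without one is passed in as an assumption.
- IPv6 literals are not handled.

In a design like this, where the value is re-read on every refresh, a change pushed by Archaius would show up on the next update cycle.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical value type standing in for a configured Server.
final class ConfiguredServer {
    final String host;
    final int port;

    ConfiguredServer(String host, int port) {
        this.host = host;
        this.port = port;
    }

    @Override
    public String toString() {
        return host + ":" + port;
    }
}

final class ListOfServersParser {
    // Parses "host:port,host:port" (whitespace tolerated); entries without a port get defaultPort.
    // IPv6 literals are not handled in this sketch.
    static List<ConfiguredServer> parse(String listOfServers, int defaultPort) {
        List<ConfiguredServer> result = new ArrayList<>();
        if (listOfServers == null) {
            return result;
        }
        for (String entry : listOfServers.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue; // tolerate stray commas
            }
            int colon = trimmed.lastIndexOf(':');
            if (colon < 0) {
                result.add(new ConfiguredServer(trimmed, defaultPort));
            } else {
                String host = trimmed.substring(0, colon);
                int port = Integer.parseInt(trimmed.substring(colon + 1).trim());
                result.add(new ConfiguredServer(host, port));
            }
        }
        return result;
    }
}
```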

The Server list may be updated dynamically at runtime. The update mechanism is determined by `<service-name>.ribbon.ServerListUpdaterClassName`, which has two implementations:

| Update strategy | ServerListUpdater implementation |
|---|---|
| Pull the service list with a scheduled task | `com.netflix.loadbalancer.PollingServerListUpdater` |
| Update on Eureka service event notifications | `com.netflix.niws.loadbalancer.EurekaNotificationServerListUpdater` |

- Pulling the service list with a scheduled task. This is implemented by `com.netflix.loadbalancer.PollingServerListUpdater`, which maintains a periodic task that pulls the latest service list and updates the ServerList with it. Its core logic is as follows:

```java
public class PollingServerListUpdater implements ServerListUpdater {

    private static final Logger logger = LoggerFactory.getLogger(PollingServerListUpdater.class);

    private static long LISTOFSERVERS_CACHE_UPDATE_DELAY = 1000; // msecs;
    private static int LISTOFSERVERS_CACHE_REPEAT_INTERVAL = 30 * 1000; // msecs;

    // Updater thread pool definition and shutdown hook
    private static class LazyHolder {
        private final static String CORE_THREAD = "DynamicServerListLoadBalancer.ThreadPoolSize";
        private final static DynamicIntProperty poolSizeProp = new DynamicIntProperty(CORE_THREAD, 2);
        private static Thread _shutdownThread;

        static ScheduledThreadPoolExecutor _serverListRefreshExecutor = null;

        static {
            int coreSize = poolSizeProp.get();
            ThreadFactory factory = (new ThreadFactoryBuilder())
                    .setNameFormat("PollingServerListUpdater-%d")
                    .setDaemon(true)
                    .build();
            _serverListRefreshExecutor = new ScheduledThreadPoolExecutor(coreSize, factory);
            poolSizeProp.addCallback(new Runnable() {
                @Override
                public void run() {
                    _serverListRefreshExecutor.setCorePoolSize(poolSizeProp.get());
                }
            });
            _shutdownThread = new Thread(new Runnable() {
                public void run() {
                    logger.info("Shutting down the Executor Pool for PollingServerListUpdater");
                    shutdownExecutorPool();
                }
            });
            Runtime.getRuntime().addShutdownHook(_shutdownThread);
        }

        private static void shutdownExecutorPool() {
            if (_serverListRefreshExecutor != null) {
                _serverListRefreshExecutor.shutdown();

                if (_shutdownThread != null) {
                    try {
                        Runtime.getRuntime().removeShutdownHook(_shutdownThread);
                    } catch (IllegalStateException ise) { // NOPMD
                        // this can happen if we're in the middle of a real
                        // shutdown,
                        // and that's 'ok'
                    }
                }
            }
        }
    }

    // Some code omitted

    @Override
    public synchronized void start(final UpdateAction updateAction) {
        if (isActive.compareAndSet(false, true)) {
            // Create the task that performs the update on each scheduled cycle
            final Runnable wrapperRunnable = new Runnable() {
                @Override
                public void run() {
                    if (!isActive.get()) {
                        if (scheduledFuture != null) {
                            scheduledFuture.cancel(true);
                        }
                        return;
                    }
                    try {
                        // Execute the update operation, which is defined in the LoadBalancer
                        updateAction.doUpdate();
                        lastUpdated = System.currentTimeMillis();
                    } catch (Exception e) {
                        logger.warn("Failed one update cycle", e);
                    }
                }
            };

            // Schedule the task
            scheduledFuture = getRefreshExecutor().scheduleWithFixedDelay(
                    wrapperRunnable,
                    initialDelayMs,    // initial delay
                    refreshIntervalMs, // refresh interval
                    TimeUnit.MILLISECONDS
            );
        } else {
            logger.info("Already active, no-op");
        }
    }

    // Some code omitted
}
```

As the code shows, ServerListUpdater only defines when updates are triggered; the actual update logic is encapsulated in UpdateAction:

```java
/**
 * an interface for the updateAction that actually executes a server list update
 */
public interface UpdateAction {
    void doUpdate();
}
```

The concrete update operation is implemented in DynamicServerListLoadBalancer:

```java
public DynamicServerListLoadBalancer() {
    this.isSecure = false;
    this.useTunnel = false;
    this.serverListUpdateInProgress = new AtomicBoolean(false);
    this.updateAction = new UpdateAction() {
        public void doUpdate() {
            // Update the service list
            DynamicServerListLoadBalancer.this.updateListOfServers();
        }
    };
}

@VisibleForTesting
public void updateListOfServers() {
    List<T> servers = new ArrayList<T>();
    // Get the latest service list through the ServerList
    if (this.serverListImpl != null) {
        servers = this.serverListImpl.getUpdatedListOfServers();
        LOGGER.debug("List of Servers for {} obtained from Discovery client: {}", this.getIdentifier(), servers);
        // Filter the returned list through the ServerListFilter
        if (this.filter != null) {
            servers = this.filter.getFilteredListOfServers(servers);
            LOGGER.debug("Filtered List of Servers for {} obtained from Discovery client: {}", this.getIdentifier(), servers);
        }
    }
    // Update the list
    this.updateAllServerList(servers);
}

protected void updateAllServerList(List<T> ls) {
    // If another thread is already updating, skip this round
    if (this.serverListUpdateInProgress.compareAndSet(false, true)) {
        try {
            for (T s : ls) {
                s.setAlive(true); // usable right away, without waiting for the next ping cycle
            }
            this.setServersList(ls);
            super.forceQuickPing();
        } finally {
            this.serverListUpdateInProgress.set(false);
        }
    }
}
```

- Updating on Eureka service event notifications works differently from the scheduled pull. EurekaNotificationServerListUpdater registers a listener with the Eureka client. When the Server registration information changes and the client's cache is refreshed (a CacheRefreshedEvent), the listener queues an update that refreshes the ServerList:

```java
public class EurekaNotificationServerListUpdater implements ServerListUpdater {

    // Some code omitted

    @Override
    public synchronized void start(final UpdateAction updateAction) {
        if (isActive.compareAndSet(false, true)) {
            // Create a Eureka event listener; when Eureka changes, the logic below is triggered
            this.updateListener = new EurekaEventListener() {
                @Override
                public void onEvent(EurekaEvent event) {
                    if (event instanceof CacheRefreshedEvent) {
                        // Internal queue flag
                        if (!updateQueued.compareAndSet(false, true)) {  // if an update is already queued
                            logger.info("an update action is already queued, returning as no-op");
                            return;
                        }
                        if (!refreshExecutor.isShutdown()) {
                            try {
                                // Submit the update request to the executor's queue
                                refreshExecutor.submit(new Runnable() {
                                    @Override
                                    public void run() {
                                        try {
                                            updateAction.doUpdate(); // Perform the real update operation
                                            lastUpdated.set(System.currentTimeMillis());
                                        } catch (Exception e) {
                                            logger.warn("Failed to update serverList", e);
                                        } finally {
                                            updateQueued.set(false);
                                        }
                                    }
                                });  // fire and forget
                            } catch (Exception e) {
                                logger.warn("Error submitting update task to executor, skipping one round of updates", e);
                                updateQueued.set(false);  // if submit fails, need to reset updateQueued to false
                            }
                        } else {
                            logger.debug("stopping EurekaNotificationServerListUpdater, as refreshExecutor has been shut down");
                            stop();
                        }
                    }
                }
            };
            // EurekaClient instance
            if (eurekaClient == null) {
                eurekaClient = eurekaClientProvider.get();
            }
            // Register the event listener with the EurekaClient
            if (eurekaClient != null) {
                eurekaClient.registerEventListener(updateListener);
            } else {
                logger.error("Failed to register an updateListener to eureka client, eureka client is null");
                throw new IllegalStateException("Failed to start the updater, unable to register the update listener due to eureka client being null.");
            }
        } else {
            logger.info("Update listener already registered, no-op");
        }
    }
}
```

3.2.1 Related configuration items

| Configuration item | Description | Applies when | Default value |
|---|---|---|---|
| `<service-name>.ribbon.NIWSServerListClassName` | ServerList implementation (see above) | | ConfigurationBasedServerList |
| `<service-name>.ribbon.listOfServers` | Service list as `hostname:port` entries separated by commas | The ServerList is configuration based | |
| `<service-name>.ribbon.ServerListUpdaterClassName` | Service list update strategy (see above) | | PollingServerListUpdater |
| `<service-name>.ribbon.ServerListRefreshInterval` | Service list refresh interval, in milliseconds | The list is pulled by a scheduled task | 30000 (30 s) |

3.2.2 Ribbon's default implementation

By default, Ribbon uses the following configuration: the service list is maintained from configuration and pulled by a scheduled task every 30 s.

```properties
<service-name>.ribbon.NIWSServerListClassName=com.netflix.loadbalancer.ConfigurationBasedServerList
<service-name>.ribbon.listOfServers=<ip:port>,<ip:port>
<service-name>.ribbon.ServerListUpdaterClassName=com.netflix.loadbalancer.PollingServerListUpdater
### Refresh interval in milliseconds (30 s)
<service-name>.ribbon.ServerListRefreshInterval=30000
### Update thread pool size
DynamicServerListLoadBalancer.ThreadPoolSize=2
```
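The scheduled pull comes down to `scheduleWithFixedDelay` plus two guards:

- An `AtomicBoolean` so that `start` is idempotent.
- A try/catch so that one failed update does not stop later ones. A `ScheduledExecutorService` suppresses all subsequent runs once a task throws.

Here is a minimal sketch with a plain `Runnable` as the update action. `SimplePollingUpdater` is a hypothetical name, and the daemon thread mirrors `setDaemon(true)` in PollingServerListUpdater.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical, single-use sketch of a polling server-list updater.
final class SimplePollingUpdater {
    private final AtomicBoolean active = new AtomicBoolean(false);
    private final ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor(r -> {
        Thread t = new Thread(r, "SimplePollingUpdater");
        t.setDaemon(true); // do not keep the JVM alive just for refreshes
        return t;
    });
    private volatile ScheduledFuture<?> future;
    private volatile long lastUpdated;

    // Returns false (no-op) if the updater is already running.
    boolean start(Runnable updateAction, long initialDelayMs, long refreshIntervalMs) {
        if (!active.compareAndSet(false, true)) {
            return false;
        }
        future = executor.scheduleWithFixedDelay(() -> {
            try {
                updateAction.run();
                lastUpdated = System.currentTimeMillis();
            } catch (RuntimeException e) {
                // Swallow and log: an escaping exception would cancel all future runs.
                System.err.println("Failed one update cycle: " + e);
            }
        }, initialDelayMs, refreshIntervalMs, TimeUnit.MILLISECONDS);
        return true;
    }

    void stop() {
        if (active.compareAndSet(true, false) && future != null) {
            future.cancel(true);
        }
        executor.shutdownNow(); // single-use: the updater cannot be restarted after stop()
    }

    long lastUpdated() {
        return lastUpdated;
    }
}
```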

3.2.3 Configuration under Spring Cloud integration

When Ribbon is integrated with Eureka under Spring Cloud, the service list can instead be built from Eureka's registration information and refreshed on Eureka event notifications, using configuration like the following:

```properties
<service-name>.ribbon.NIWSServerListClassName=com.netflix.niws.loadbalancer.DiscoveryEnabledNIWSServerList
<service-name>.ribbon.ServerListUpdaterClassName=com.netflix.niws.loadbalancer.EurekaNotificationServerListUpdater
### Update thread pool size
EurekaNotificationServerListUpdater.ThreadPoolSize=2
### Notification queue size
EurekaNotificationServerListUpdater.queueSize=1000
```
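The event-driven updater shown in section 3.2 coalesces bursts of notifications: while one refresh is queued, further events are dropped. The sketch below isolates that `updateQueued` pattern. `CoalescingUpdater` and `onCacheRefreshed` are hypothetical names. The executor is injected so the queueing behavior can be observed deterministically.

```java
import java.util.concurrent.Executor;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical sketch of the "at most one queued update" pattern.
final class CoalescingUpdater {
    private final AtomicBoolean updateQueued = new AtomicBoolean(false);
    private final Executor executor;
    private final Runnable updateAction;

    CoalescingUpdater(Executor executor, Runnable updateAction) {
        this.executor = executor;
        this.updateAction = updateAction;
    }

    // Called for every cache-refresh notification; returns true if a new update was queued.
    boolean onCacheRefreshed() {
        if (!updateQueued.compareAndSet(false, true)) {
            return false; // an update is already pending and will pick up the latest state
        }
        try {
            executor.execute(() -> {
                try {
                    updateAction.run();
                } catch (RuntimeException e) {
                    System.err.println("Failed to update server list: " + e);
                } finally {
                    updateQueued.set(false); // allow the next event to queue a new update
                }
            });
            return true;
        } catch (RejectedExecutionException e) {
            updateQueued.set(false); // submission failed: reset so a later event can retry
            return false;
        }
    }
}
```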

3.3 How does the load balancer maintain the status of service instances?

The Ribbon load balancer delegates maintaining the status of service instances to Pinger, IPingStrategy and IPing. They interact as follows:

```java
/**
 * Strategy that defines how servers are pinged to check their status: serially (one after another) or in parallel.
 * Pings of different servers should not affect one another.
 */
public interface IPingStrategy {

    boolean[] pingServers(IPing ping, Server[] servers);
}

/**
 * Defines how to "ping" a server to check whether it is alive
 * @author stonse
 *
 */
public interface IPing {

    /**
     * Checks whether the given <code>Server</code> is "alive" i.e. should be
     * considered a candidate while loadbalancing
     */
    public boolean isAlive(Server server);
}
```

3.3.1 Creating the Ping scheduled task

By default, the load balancer creates an internal periodic task for pinging, controlled by the following parameters:

| Control parameter | Description | Default value |
|---|---|---|
| `<service-name>.ribbon.NFLoadBalancerPingInterval` | Ping task interval | 30 s |
| `<service-name>.ribbon.NFLoadBalancerMaxTotalPingTime` | Ping timeout | 2 s |
| `<service-name>.ribbon.NFLoadBalancerPingClassName` | IPing implementation class | DummyPing (always returns true) |

The default ping strategy works serially, checking the service instances one after another:

```java
/**
 * Default implementation for <c>IPingStrategy</c>, performs ping
 * serially, which may not be desirable, if your <c>IPing</c>
 * implementation is slow, or you have large number of servers.
 */
private static class SerialPingStrategy implements IPingStrategy {

    @Override
    public boolean[] pingServers(IPing ping, Server[] servers) {
        int numCandidates = servers.length;
        boolean[] results = new boolean[numCandidates];

        logger.debug("LoadBalancer: PingTask executing [{}] servers configured", numCandidates);

        for (int i = 0; i < numCandidates; i++) {
            results[i] = false; /* Default answer is DEAD. */
            try {
                // Ping each server in turn and record the result in the array
                if (ping != null) {
                    results[i] = ping.isAlive(servers[i]);
                }
            } catch (Exception e) {
                logger.error("Exception while pinging Server: '{}'", servers[i], e);
            }
        }
        return results;
    }
}
```
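The SerialPingStrategy javadoc warns that serial pings are slow when the IPing is slow or there are many servers. A parallel alternative could look like the sketch below. It is not Ribbon code and does not implement `IPingStrategy` directly:

- A `Predicate<String>` stands in for IPing, and address strings stand in for Server.
- A single overall timeout bounds the whole round. This is an assumption, loosely matching NFLoadBalancerMaxTotalPingTime.

As in the serial version, any failure or timeout counts as DEAD.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.function.Predicate;

// Hypothetical parallel counterpart of SerialPingStrategy.
final class ParallelPingStrategy {
    private final ExecutorService pool;
    private final long totalTimeoutMillis;

    ParallelPingStrategy(int threads, long totalTimeoutMillis) {
        this.pool = Executors.newFixedThreadPool(threads);
        this.totalTimeoutMillis = totalTimeoutMillis;
    }

    // results[i] is the status of servers[i]; the default answer is DEAD (false).
    boolean[] pingServers(Predicate<String> isAlive, String[] servers) {
        List<Future<Boolean>> futures = new ArrayList<>();
        for (String server : servers) {
            futures.add(pool.submit(() -> isAlive.test(server))); // all pings start concurrently
        }
        boolean[] results = new boolean[servers.length];
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(totalTimeoutMillis);
        for (int i = 0; i < servers.length; i++) {
            try {
                long remaining = Math.max(0L, deadline - System.nanoTime());
                results[i] = futures.get(i).get(remaining, TimeUnit.NANOSECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // keep the interrupt visible to callers
                futures.get(i).cancel(true);
            } catch (Exception e) {
                // ExecutionException (the ping threw) or TimeoutException: leave as DEAD
                futures.get(i).cancel(true);
            }
        }
        return results;
    }

    void shutdown() {
        pool.shutdownNow();
    }
}
```

Call `shutdown()` when the strategy is no longer needed, because the fixed pool uses non-daemon threads.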

3.3.2 Ribbon's default IPing implementation: DummyPing

The default IPing implementation simply returns true:

```java
public class DummyPing extends AbstractLoadBalancerPing {

    public DummyPing() {
    }

    public boolean isAlive(Server server) {
        return true;
    }

    @Override
    public void initWithNiwsConfig(IClientConfig clientConfig) {
    }
}
```

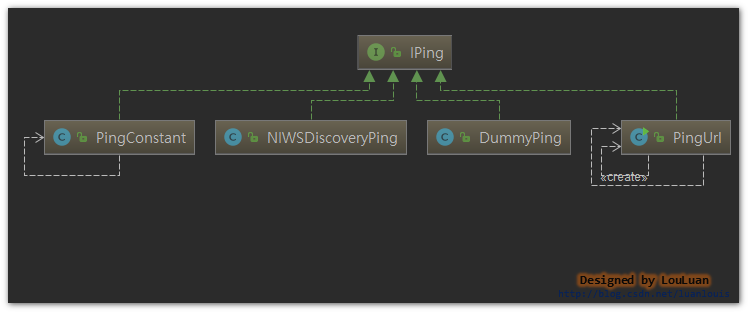

IPing also has the other implementations shown in the diagram above.

3.3.3 IPing implementation under Spring Cloud integration: NIWSDiscoveryPing

After integration with Spring Cloud, with Eureka used for service registration and discovery, the default IPing implementation is NIWSDiscoveryPing. It does not send a real ping. Instead, it treats a server as alive when the instance status Eureka reports for it is UP, so the Ribbon Server status follows Eureka's service information. The implementation:

```java
/**
 * "Ping" Discovery Client
 * i.e. we dont do a real "ping". We just assume that the server is up if Discovery Client says so
 * @author stonse
 *
 */
public class NIWSDiscoveryPing extends AbstractLoadBalancerPing {

    BaseLoadBalancer lb = null;

    public NIWSDiscoveryPing() {
    }

    public BaseLoadBalancer getLb() {
        return lb;
    }

    /**
     * Non IPing interface method - only set this if you care about the "newServers Feature"
     * @param lb
     */
    public void setLb(BaseLoadBalancer lb) {
        this.lb = lb;
    }

    public boolean isAlive(Server server) {
        boolean isAlive = true;
        // Use the Eureka instance status as the status of the Ribbon Server
        if (server!=null && server instanceof DiscoveryEnabledServer){
            DiscoveryEnabledServer dServer = (DiscoveryEnabledServer)server;
            InstanceInfo instanceInfo = dServer.getInstanceInfo();
            if (instanceInfo!=null){
                InstanceStatus status = instanceInfo.getStatus();
                if (status!=null){
                    isAlive = status.equals(InstanceStatus.UP);
                }
            }
        }
        return isAlive;
    }

    @Override
    public void initWithNiwsConfig(
            IClientConfig clientConfig) {
    }
}
```

Under Spring Cloud, the default IPing bean is declared as follows:

```java
@Bean
@ConditionalOnMissingBean
public IPing ribbonPing(IClientConfig config) {
    if (this.propertiesFactory.isSet(IPing.class, serviceId)) {
        return this.propertiesFactory.get(IPing.class, config, serviceId);
    }
    NIWSDiscoveryPing ping = new NIWSDiscoveryPing();
    ping.initWithNiwsConfig(config);
    return ping;
}
```

3.4 How is an appropriate service instance selected from the service list?

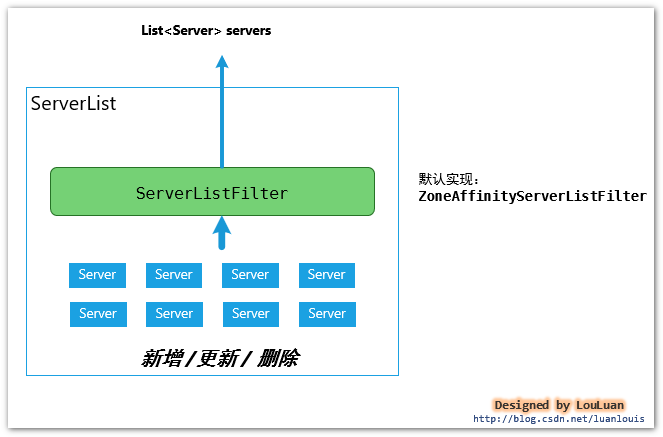

3.4.1 Service instance container: maintaining the ServerList

The load balancer maintains service instances uniformly through ServerList, as shown above. The basic interface definition is very simple:

```java
/**
 * Interface that defines the methods used to obtain the List of Servers
 * @author stonse
 *
 * @param <T>
 */
public interface ServerList<T extends Server> {

    // Get the initial list of servers
    public List<T> getInitialListOfServers();

    /**
     * Return updated list of servers. This is called say every 30 secs
     * (configurable) by the Loadbalancer's Ping cycle
     */
    public List<T> getUpdatedListOfServers();
}
```

In Ribbon, Server instances are maintained in the ServerList, which returns the latest `List<Server>` for the LoadBalancer to use.

Responsibilities of ServerList:

Maintain the service instances; a ServerListFilter then narrows them down to the qualifying `List<Server>`.

3.4.2 Service instance list filter: ServerListFilter

ServerListFilter has a very simple job: given a list of service instances, it returns the instances that meet the filter conditions.

```java
public interface ServerListFilter<T extends Server> {
    public List<T> getFilteredListOfServers(List<T> servers);
}
```
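To make the split between the two interfaces concrete, here is a minimal sketch assuming simplified, hypothetical copies of them (`SimpleServerList`, `SimpleServerListFilter`) and a hypothetical `Srv` type: a fixed list plays the role of a configuration-based ServerList, and a filter keeps only the servers in one zone.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical minimal server type: host:port plus zone.
final class Srv {
    final String id;
    final String zone;
    Srv(String id, String zone) { this.id = id; this.zone = zone; }
}

// Simplified copy of the ServerList<T> contract.
interface SimpleServerList<T> {
    List<T> getInitialListOfServers();
    List<T> getUpdatedListOfServers();
}

// Simplified copy of the ServerListFilter<T> contract.
interface SimpleServerListFilter<T> {
    List<T> getFilteredListOfServers(List<T> servers);
}

// A static list: the "updated" list is simply the configured one.
final class StaticServerList implements SimpleServerList<Srv> {
    private final List<Srv> servers;

    StaticServerList(List<Srv> servers) {
        this.servers = Collections.unmodifiableList(new ArrayList<>(servers));
    }

    public List<Srv> getInitialListOfServers() { return servers; }
    public List<Srv> getUpdatedListOfServers() { return servers; }
}

// Keeps only the servers whose zone matches (case-insensitive).
final class SameZoneFilter implements SimpleServerListFilter<Srv> {
    private final String zone;

    SameZoneFilter(String zone) { this.zone = zone; }

    public List<Srv> getFilteredListOfServers(List<Srv> servers) {
        List<Srv> out = new ArrayList<>();
        for (Srv s : servers) {
            if (zone.equalsIgnoreCase(s.zone)) {
                out.add(s);
            }
        }
        return out;
    }
}
```

A load balancer would call `getUpdatedListOfServers()` on each refresh and pass the result through the filter before handing it to the selection rule.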

3.4.2.1 Ribbon's default ServerListFilter implementation: ZoneAffinityServerListFilter

Ribbon's default filter implements a zone-first strategy: when enabled, it keeps only the servers in the list that are in the same zone as the current instance.

This behaviour is controlled by the following configuration parameters:

| Parameter | Description | Default |
|---|---|---|
| `<service-name>.ribbon.EnableZoneAffinity` | Whether zone affinity (preferring servers in the current zone) is enabled | false |
| `<service-name>.ribbon.EnableZoneExclusivity` | Whether zone exclusivity is enabled, i.e. only service instances in the current zone are returned | false |
| `<service-name>.ribbon.zoneAffinity.maxLoadPerServer` | Threshold for the average number of active requests per server | 0.6 |
| `<service-name>.ribbon.zoneAffinity.maxBlackOutServesrPercentage` | Threshold for the fraction of circuit-tripped servers | 0.8 |
| `<service-name>.ribbon.zoneAffinity.minAvailableServers` | Minimum number of available (non-tripped) service instances | 2 |

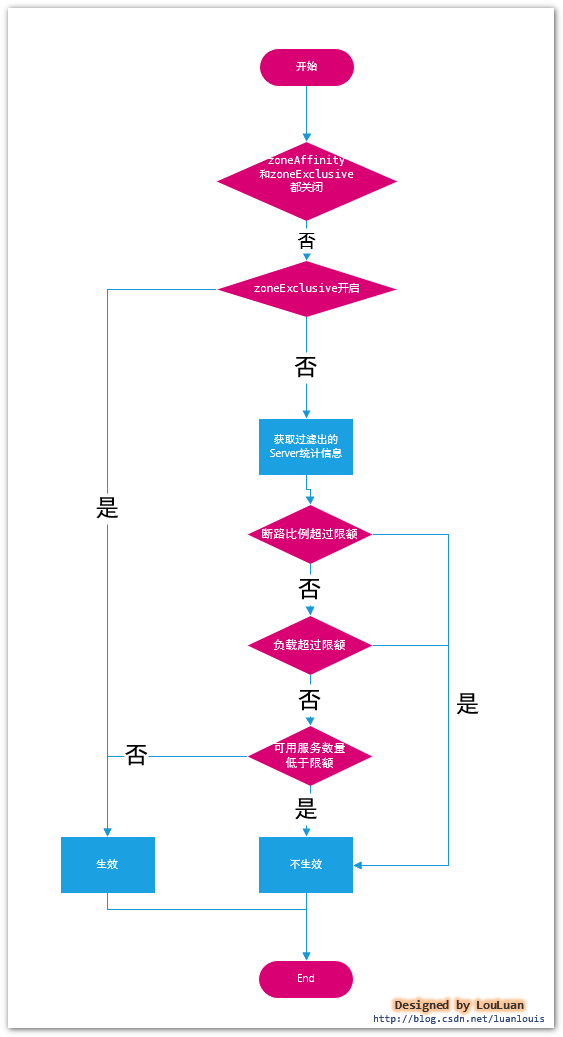

Its core implementation is as follows:

```java
public class ZoneAffinityServerListFilter<T extends Server> extends
        AbstractServerListFilter<T> implements IClientConfigAware {

    @Override
    public List<T> getFilteredListOfServers(List<T> servers) {
        // Filter only when the zone is known, zone affinity or exclusivity is enabled,
        // and the server list is non-empty
        if (zone != null && (zoneAffinity || zoneExclusive) && servers != null && servers.size() > 0) {
            // Keep the servers that satisfy the zone predicate
            List<T> filteredServers = Lists.newArrayList(Iterables.filter(
                    servers, this.zoneAffinityPredicate.getServerOnlyPredicate()));
            // If zone affinity should apply, return the filtered (same-zone) list
            if (shouldEnableZoneAffinity(filteredServers)) {
                return filteredServers;
            } else if (zoneAffinity) {
                // Affinity was requested but overridden: count the override
                overrideCounter.increment();
            }
        }
        return servers;
    }

    // Decide whether the zone-affinity filter result should be used
    private boolean shouldEnableZoneAffinity(List<T> filtered) {
        if (!zoneAffinity && !zoneExclusive) {
            return false;
        }
        if (zoneExclusive) {
            return true;
        }
        // Get the load balancer statistics
        LoadBalancerStats stats = getLoadBalancerStats();
        if (stats == null) {
            return zoneAffinity;
        } else {
            logger.debug("Determining if zone affinity should be enabled with given server list: {}", filtered);
            // Snapshot of the same-zone servers, including their statistics
            ZoneSnapshot snapshot = stats.getZoneSnapshot(filtered);
            // Average load: mean number of active requests per server in the snapshot
            double loadPerServer = snapshot.getLoadPerServer();
            int instanceCount = snapshot.getInstanceCount();
            int circuitBreakerTrippedCount = snapshot.getCircuitTrippedCount();
            // Zone affinity is overridden (the full list is used) if any of these holds:
            // 1. the fraction of circuit-tripped servers reaches the limit (default 0.8);
            // 2. the average load per server reaches the limit (default 0.6);
            // 3. fewer servers are available than the minimum (default 2).
            if (((double) circuitBreakerTrippedCount) / instanceCount >= blackOutServerPercentageThreshold.get()
                    || loadPerServer >= activeReqeustsPerServerThreshold.get()
                    || (instanceCount - circuitBreakerTrippedCount) < availableServersThreshold.get()) {
                logger.debug("zoneAffinity is overriden. blackOutServerPercentage: {}, activeReqeustsPerServer: {}, availableServers: {}",
                        new Object[] {(double) circuitBreakerTrippedCount / instanceCount, loadPerServer, instanceCount - circuitBreakerTrippedCount});
                return false;
            } else {
                return true;
            }
        }
    }
}
```

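The decision works as follows: if neither zone affinity nor zone exclusivity is enabled, the full list is returned; if zone exclusivity is enabled, the same-zone list is always returned; with zone affinity alone, the same-zone list is used unless too large a fraction of its servers are circuit-tripped, the average load per server is too high, or too few servers remain available, in which case the full list is returned.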
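The three override conditions in `shouldEnableZoneAffinity` can be checked in isolation. The following is a hypothetical pure-function version (`ZoneAffinityDecision` is not a Ribbon class): the zone snapshot is reduced to an instance count, a tripped count and an average load, the thresholds are passed in explicitly (the defaults from the configuration table are 0.8, 0.6 and 2), and the `stats == null` branch is left out.

```java
// Hypothetical helper reproducing the decision in shouldEnableZoneAffinity.
final class ZoneAffinityDecision {
    private ZoneAffinityDecision() {}

    static boolean useSameZoneList(boolean zoneAffinity, boolean zoneExclusive,
                                   int instanceCount, int trippedCount, double loadPerServer,
                                   double maxBlackOutPercentage, double maxLoadPerServer,
                                   int minAvailableServers) {
        if (!zoneAffinity && !zoneExclusive) {
            return false;            // feature off: keep the full list
        }
        if (zoneExclusive) {
            return true;             // exclusivity: same-zone list unconditionally
        }
        double trippedRatio = (double) trippedCount / instanceCount;
        boolean tooManyTripped = trippedRatio >= maxBlackOutPercentage;
        boolean overloaded = loadPerServer >= maxLoadPerServer;
        boolean tooFewAvailable = (instanceCount - trippedCount) < minAvailableServers;
        // Any one unhealthy signal overrides zone affinity.
        return !(tooManyTripped || overloaded || tooFewAvailable);
    }
}
```

For example, with the defaults a zone of three instances where two are tripped has only one available server, so the same-zone list is discarded even though its load is low.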

3.4.2.2 Ribbon's ServerListFilter implementation 2: ZonePreferenceServerListFilter

ZonePreferenceServerListFilter extends ZoneAffinityServerListFilter and builds on it: starting from the result returned by ZoneAffinityServerListFilter, it further narrows the list to the servers in the same zone as the local service.

Core logic: when does it take effect?

When the zone of the current service is known and ZoneAffinityServerListFilter has filtered nothing out, ZonePreferenceServerListFilter returns the servers of the current zone, provided there are any.

```java
public class ZonePreferenceServerListFilter extends ZoneAffinityServerListFilter<Server> {

    private String zone;

    @Override
    public void initWithNiwsConfig(IClientConfig niwsClientConfig) {
        super.initWithNiwsConfig(niwsClientConfig);
        if (ConfigurationManager.getDeploymentContext() != null) {
            this.zone = ConfigurationManager.getDeploymentContext().getValue(
                    ContextKey.zone);
        }
    }

    @Override
    public List<Server> getFilteredListOfServers(List<Server> servers) {
        // Start from the result of the parent (zone-affinity) filter
        List<Server> output = super.getFilteredListOfServers(servers);
        // If the parent filter removed nothing, narrow the list to the local zone
        if (this.zone != null && output.size() == servers.size()) {
            List<Server> local = new ArrayList<>();
            for (Server server : output) {
                if (this.zone.equalsIgnoreCase(server.getZone())) {
                    local.add(server);
                }
            }
            // Only use the local list if it is non-empty
            if (!local.isEmpty()) {
                return local;
            }
        }
        return output;
    }

    public String getZone() {
        return zone;
    }

    public void setZone(String zone) {
        this.zone = zone;
    }
}
```
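The narrowing step of `ZonePreferenceServerListFilter` can be isolated from Ribbon's types. This sketch assumes a hypothetical `ZonedServer` class and takes the parent filter's output as an argument instead of calling `super`, so both cases (the parent removed nothing, or the parent already filtered) are easy to exercise.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical server type for the sketch: an id and the zone it lives in.
final class ZonedServer {
    final String id;
    final String zone;
    ZonedServer(String id, String zone) { this.id = id; this.zone = zone; }
}

final class ZonePreferenceSketch {
    private ZonePreferenceSketch() {}

    /**
     * If the parent filter removed nothing (same size as the input) and the local
     * zone is known, narrow to same-zone servers; fall back to the parent output
     * when no server shares the local zone.
     */
    static List<ZonedServer> filter(String localZone, List<ZonedServer> servers,
                                    List<ZonedServer> parentOutput) {
        if (localZone != null && parentOutput.size() == servers.size()) {
            List<ZonedServer> local = new ArrayList<>();
            for (ZonedServer s : parentOutput) {
                if (localZone.equalsIgnoreCase(s.zone)) {
                    local.add(s);
                }
            }
            if (!local.isEmpty()) {
                return local;
            }
        }
        return parentOutput;
    }
}
```

Falling back to the parent output when the local zone is empty means a misconfigured or drained zone never leaves the caller with no servers at all.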

3.4.2.3 Ribbon's ServerListFilter implementation 3: ServerListSubsetFilter

This filter is meant for very large server lists (for example, hundreds of Server instances). In that situation it is not appropriate to keep long-lived HTTP connections to every instance; the filter keeps a subset of the servers and drops the rest, so that unnecessary connections can be released.

This filter also extends ZoneAffinityServerListFilter and builds on it. It is not very common in practice, so it is not covered in detail here.

3.4.3 The LoadBalancer selects a service instance

The core job of the LoadBalancer is to select the most appropriate service instance from the service list according to load. The components used by the LoadBalancer are shown in the following figure:

The LoadBalancer selects a service instance as follows:

1. Obtain the list of currently available service instances through the ServerList;

2. Filter the list obtained in step 1 through the ServerListFilter, returning the service instances that meet the filter conditions;

3. Apply the IRule, which uses the statistics of each service instance to return one instance that satisfies the rule.

As this process shows, the instance list is filtered twice during selection: first by the ServerListFilter, and then by the IRule selection rule.
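The three selection steps can be sketched as a small pipeline. This is a hypothetical `ChoosePipeline` class, not Ribbon's LoadBalancer: the supplier stands in for the ServerList, the first function for the ServerListFilter and the second for `IRule.choose`. In Ribbon, retrieving and filtering the list normally happen when the server list is refreshed rather than on every call; the sketch collapses them into one `choose()` for readability.

```java
import java.util.List;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical three-stage pipeline: ServerList -> ServerListFilter -> IRule.
final class ChoosePipeline<T> {
    private final Supplier<List<T>> serverList;
    private final Function<List<T>, List<T>> filter;
    private final Function<List<T>, T> rule;

    ChoosePipeline(Supplier<List<T>> serverList,
                   Function<List<T>, List<T>> filter,
                   Function<List<T>, T> rule) {
        this.serverList = serverList;
        this.filter = filter;
        this.rule = rule;
    }

    // Returns null when nothing survives filtering, matching IRule's
    // convention of returning null when no server is available.
    T choose() {
        List<T> candidates = filter.apply(serverList.get());
        if (candidates == null || candidates.isEmpty()) {
            return null;
        }
        return rule.apply(candidates);
    }
}
```

Keeping the stages as separate, swappable functions mirrors why Ribbon exposes ServerList, ServerListFilter and IRule as independent extension points.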

The strategy of filtering service instances through ServerListFilter has been described in detail above. Next, we will introduce how Rule selects services from a list of services.

Before introducing IRule, one more concept is needed: server statistics.

After the LoadBalancer hands an appropriate Server to the application, the application sends its request to that Server. While the request is in flight, statistics are recorded, such as the response time and any network connection failures. The next time the application asks the LoadBalancer for a Server, the LoadBalancer combines these per-server statistics with its rules to decide which server is the most appropriate.

3.4.3.1 What statistics does the LoadBalancer keep for each Server?

| ServerStats field | Description | Type | Default |
|---|---|---|---|
| zone | Zone the server belongs to | configured | Assigned via `eureka.instance.metadata-map.zone` |
| totalRequests | Total number of requests; incremented on every client call | runtime | 0 |
| activeRequestsCountTimeout | Time window for the active request count, set by `niws.loadbalancer.serverStats.activeRequestsCount.effectiveWindowSeconds`: if activeRequestsCount does not change within the window, it is reset to 0 | configured | 60*10 (seconds) |
| successiveConnectionFailureCount | Count of successive connection failures | runtime | |
| connectionFailureThreshold | Connection failure threshold, set by `niws.loadbalancer.default.connectionFailureCountThreshold` | configured | 3 |
| circuitTrippedTimeoutFactor | Circuit-breaker timeout factor, `niws.loadbalancer.default.circuitTripTimeoutFactorSeconds` | configured | 10 (seconds) |
| maxCircuitTrippedTimeout | Maximum circuit-breaker timeout, `niws.loadbalancer.default.circuitTripMaxTimeoutSeconds` | configured | 30 (seconds) |
| totalCircuitBreakerBlackOutPeriod | Cumulative circuit-breaker blackout time, in milliseconds | runtime | |
| lastAccessedTimestamp | Time of the last connection | runtime | |
| lastConnectionFailedTimestamp | Time of the last connection failure | runtime | |
| firstConnectionTimestamp | Time of the first connection | runtime | |
| activeRequestsCount | Number of currently active requests | runtime | |
| failureCountSlidingWindowInterval | Time window for failure counting | configured | 1000 (ms) |
| serverFailureCounts | Number of connection failures in the current time window | runtime | |
| responseTimeDist.mean | Mean request response time | runtime | |
| responseTimeDist.max | Maximum request response time | runtime | |
| responseTimeDist.minimum | Minimum request response time | runtime | |
| responseTimeDist.stddev | Standard deviation of request response time | runtime | |
| dataDist.sampleSize | Number of samples collected for QoS (quality of service) | runtime | |
| dataDist.timestamp | Time of the last QoS calculation | runtime | |
| dataDist.timestampMillis | Time of the last QoS calculation, in milliseconds since 1 January 1970 | runtime | |
| dataDist.mean | QoS: mean response time of requests in the latest time window | runtime | |
| dataDist.10thPercentile | QoS: 10th percentile of request processing time | runtime | |
| dataDist.25thPercentile | QoS: 25th percentile of request processing time | runtime | |
| dataDist.50thPercentile | QoS: 50th percentile of request processing time | runtime | |
| dataDist.75thPercentile | QoS: 75th percentile of request processing time | runtime | |
| dataDist.95thPercentile | QoS: 95th percentile of request processing time | runtime | |
| dataDist.99thPercentile | QoS: 99th percentile of request processing time | runtime | |
| dataDist.99.5thPercentile | QoS: 99.5th percentile of request processing time | runtime | |
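As an illustration of how a few of these counters evolve, here is a much-reduced, hypothetical `MiniServerStats` class covering only totalRequests, activeRequestsCount and a running mean of the response time. It assumes the caller reports the start and end of each request, and it omits the effective time window of activeRequestsCount, the failure counters and the percentile distributions.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical, much-reduced per-server statistics.
final class MiniServerStats {
    private final AtomicLong totalRequests = new AtomicLong();
    private final AtomicInteger activeRequests = new AtomicInteger();
    private long responseCount;
    private double responseTimeSumMs;

    // Called when a request is dispatched to this server.
    void onRequestStart() {
        totalRequests.incrementAndGet();
        activeRequests.incrementAndGet();
    }

    // Called when the request finishes, successfully or not.
    synchronized void onRequestEnd(double responseTimeMs) {
        activeRequests.decrementAndGet();
        responseCount++;
        responseTimeSumMs += responseTimeMs;
    }

    long getTotalRequests() { return totalRequests.get(); }

    int getActiveRequestsCount() { return activeRequests.get(); }

    // Running mean over all completed requests (no time window).
    synchronized double getMeanResponseTimeMs() {
        return responseCount == 0 ? 0.0 : responseTimeSumMs / responseCount;
    }
}
```

A rule such as BestAvailableRule only needs the active request count, while response-time-based rules read the timing statistics; keeping them in one per-server object lets every rule share the same bookkeeping.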

3.4.3.2 How the service circuit breaker works

When a service has multiple instances, the load balancer records statistics for every request (response time, whether a network error occurred, and so on). When an instance accumulates successive connection failures up to the configured threshold, the load balancer marks it as tripped and computes a blackout (open-circuit) period for it. During that period, incoming requests skip this instance: the load balancer temporarily treats it as unavailable and prefers the other instances.

Relevant statistics are as follows:

| ServerStats field | Description | Type | Default |
|---|---|---|---|
| successiveConnectionFailureCount | Count of successive connection failures | runtime | |
| connectionFailureThreshold | Connection failure threshold, set by `niws.loadbalancer.default.connectionFailureCountThreshold`; once successiveConnectionFailureCount reaches this value, a blackout period is computed | configured | 3 |
| circuitTrippedTimeoutFactor | Circuit-breaker timeout factor, `niws.loadbalancer.default.circuitTripTimeoutFactorSeconds` | configured | 10 (seconds) |
| maxCircuitTrippedTimeout | Maximum circuit-breaker timeout, `niws.loadbalancer.default.circuitTripMaxTimeoutSeconds` | configured | 30 (seconds) |
| totalCircuitBreakerBlackOutPeriod | Cumulative circuit-breaker blackout time, in milliseconds | runtime | |
| lastAccessedTimestamp | Time of the last connection | runtime | |
| lastConnectionFailedTimestamp | Time of the last connection failure | runtime | |
| firstConnectionTimestamp | Time of the first connection | runtime | |

```java
@Monitor(name="CircuitBreakerTripped", type = DataSourceType.INFORMATIONAL)
public boolean isCircuitBreakerTripped() {
    return isCircuitBreakerTripped(System.currentTimeMillis());
}

public boolean isCircuitBreakerTripped(long currentTime) {
    // Time at which the blackout period ends
    long circuitBreakerTimeout = getCircuitBreakerTimeout();
    if (circuitBreakerTimeout <= 0) {
        return false;
    }
    // Still inside the blackout period: the breaker is tripped
    return circuitBreakerTimeout > currentTime;
}

// End of the blackout period: time of the last connection failure + blackout length
private long getCircuitBreakerTimeout() {
    long blackOutPeriod = getCircuitBreakerBlackoutPeriod();
    if (blackOutPeriod <= 0) {
        return 0;
    }
    return lastConnectionFailedTimestamp + blackOutPeriod;
}

// Length of the blackout period in milliseconds
private long getCircuitBreakerBlackoutPeriod() {
    int failureCount = successiveConnectionFailureCount.get();
    int threshold = connectionFailureThreshold.get();
    // Successive failures below the threshold: no blackout, the server is available
    if (failureCount < threshold) {
        return 0;
    }
    // Threshold reached: blackout = 2^diff * factor seconds (diff capped at 16),
    // then capped at maxCircuitTrippedTimeout
    int diff = (failureCount - threshold) > 16 ? 16 : (failureCount - threshold);
    int blackOutSeconds = (1 << diff) * circuitTrippedTimeoutFactor.get();
    if (blackOutSeconds > maxCircuitTrippedTimeout.get()) {
        blackOutSeconds = maxCircuitTrippedTimeout.get();
    }
    return blackOutSeconds * 1000L;
}
```

Calculation of the blackout (fusing) time:

1. Check whether the successive connection failure count successiveConnectionFailureCount has reached the failure threshold connectionFailureThreshold. If successiveConnectionFailureCount < connectionFailureThreshold, the blackout time is 0; otherwise, continue with step 2;

2. Compute the exponent, capped at 16: `diff = (failureCount - threshold) > 16 ? 16 : (failureCount - threshold)`;

3. Compute the blackout length from the timeout factor circuitTrippedTimeoutFactor: `blackOutSeconds = (1 << diff) * circuitTrippedTimeoutFactor.get()`;

4. Cap the result at the maximum timeout maxCircuitTrippedTimeout.

The blackout starts at lastConnectionFailedTimestamp, and requests skip the server until it ends. With the default settings (threshold 3, factor 10 s, maximum 30 s):

Minimum blackout time: `(1 << 0) * 10 = 10` s, when the failure count first reaches the threshold

Maximum blackout time: 30 s, since the next failure gives `(1 << 1) * 10 = 20` s and the one after that gives `(1 << 2) * 10 = 40` s, which is already capped by maxCircuitTrippedTimeout. Without the cap, the exponent limit alone would allow `(1 << 16) * 10 = 655360` s.
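The blackout arithmetic of `getCircuitBreakerBlackoutPeriod` can be checked with a pure-function copy. `BlackoutCalc` is a hypothetical name; the logic mirrors the method shown earlier, with the three configuration values passed in as parameters.

```java
// Hypothetical pure-function copy of the blackout-period arithmetic (milliseconds).
final class BlackoutCalc {
    private BlackoutCalc() {}

    static long blackoutMillis(int successiveFailures, int threshold,
                               int timeoutFactorSeconds, int maxTimeoutSeconds) {
        if (successiveFailures < threshold) {
            return 0L;                                           // below threshold: not tripped
        }
        int diff = Math.min(successiveFailures - threshold, 16); // exponent capped at 16
        int seconds = (1 << diff) * timeoutFactorSeconds;        // exponential back-off
        if (seconds > maxTimeoutSeconds) {
            seconds = maxTimeoutSeconds;                         // hard ceiling
        }
        return seconds * 1000L;
    }
}
```

With the defaults (threshold 3, factor 10, maximum 30), this gives 0 ms for 2 successive failures, 10,000 ms for 3, 20,000 ms for 4, and 30,000 ms from 5 onward, because 40 s and anything larger is capped.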

When are the statistics cleared?

The circuit-breaker statistics (the successive connection failure count) have their own reset policy; they are cleared to zero in the following cases:

- The request fails with an exception that is not classified as a circuit-tripping exception (which exceptions count as circuit-tripping can be customized);

- The request completes and returns a result without any exception.
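The two reset rules can be expressed as a small state machine. This is a hypothetical sketch (`SuccessiveFailureTracker` is not a Ribbon class): which exceptions count as circuit-tripping is represented here by a set of exception types, whereas in Ribbon that classification can be customized.

```java
import java.util.Set;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical tracker: circuit-tripping failures increment the count;
// a success, or any other exception, resets it to zero.
final class SuccessiveFailureTracker {
    private final AtomicInteger successiveFailures = new AtomicInteger();
    private final Set<Class<? extends Throwable>> trippingExceptions;

    SuccessiveFailureTracker(Set<Class<? extends Throwable>> trippingExceptions) {
        this.trippingExceptions = trippingExceptions;
    }

    void onSuccess() {
        successiveFailures.set(0);
    }

    void onFailure(Throwable error) {
        if (isTripping(error)) {
            successiveFailures.incrementAndGet();
        } else {
            successiveFailures.set(0);
        }
    }

    // True if the error is an instance of any configured tripping type.
    private boolean isTripping(Throwable error) {
        for (Class<? extends Throwable> type : trippingExceptions) {
            if (type.isInstance(error)) {
                return true;
            }
        }
        return false;
    }

    int get() {
        return successiveFailures.get();
    }
}
```

Because a single non-tripping outcome resets the count, only an unbroken run of connection-level failures can push a server into a blackout period.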

3.4.3.3 IRule: selecting the most appropriate Server instance from the service list

As can be seen from the figure above, IRule returns the most appropriate Server instance from the service list according to its own rules. Its interface is defined as follows:

```java
public interface IRule {
    /*
     * choose one alive server from lb.allServers or
     * lb.upServers according to key
     *
     * @return chosen Server object. NULL is returned if none
     * server is available
     */
    public Server choose(Object key);

    public void setLoadBalancer(ILoadBalancer lb);

    public ILoadBalancer getLoadBalancer();
}
```

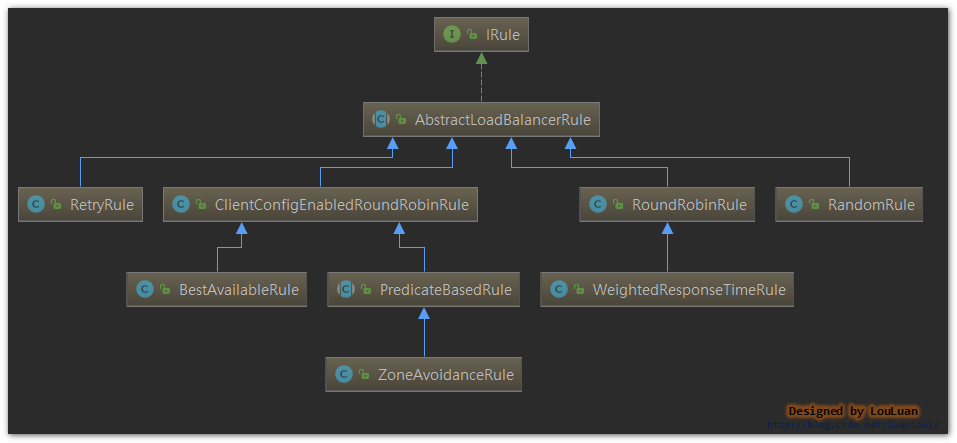

Ribbon ships several common rules:

| Implementation | Description | Remarks |
|---|---|---|
| RoundRobinRule | Round-robin selection; a single selection retries up to 10 times | |
| RandomRule | Picks a server from the list at random | |
| ZoneAvoidanceRule | Combines ZoneAvoidancePredicate and AvailabilityPredicate. ZoneAvoidancePredicate determines which zone is performing worst and removes that zone's servers from the list; AvailabilityPredicate filters out servers whose circuit breaker is tripped. From the servers that pass both predicates, one is chosen by round-robin | |
| BestAvailableRule | Best-available rule: selects the server with the fewest concurrent requests | |
| WeightedResponseTimeRule | Weights servers by their request response times; when the weighting is not in effect, it falls back to RoundRobinRule | |
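The idea behind BestAvailableRule can be sketched in a few lines. `ServerView` and `LeastConcurrentChooser` are hypothetical names; the sketch skips servers whose circuit breaker is tripped and returns `null` when every server is tripped, where a production rule would fall back to another strategy. Both of these choices are assumptions of the sketch.

```java
import java.util.List;

// Hypothetical server view carrying the two statistics the rule needs.
final class ServerView {
    final String id;
    final int activeRequests;
    final boolean circuitTripped;

    ServerView(String id, int activeRequests, boolean circuitTripped) {
        this.id = id;
        this.activeRequests = activeRequests;
        this.circuitTripped = circuitTripped;
    }
}

final class LeastConcurrentChooser {
    private LeastConcurrentChooser() {}

    // Picks the non-tripped server with the fewest active requests;
    // returns null if every server is tripped.
    static ServerView choose(List<ServerView> servers) {
        ServerView best = null;
        for (ServerView s : servers) {
            if (s.circuitTripped) {
                continue;
            }
            if (best == null || s.activeRequests < best.activeRequests) {
                best = s;
            }
        }
        return best;
    }
}
```

Ties go to the first server in list order, because only a strictly smaller count replaces the current best.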

3.4.3.3.1 Implementation of RoundRobinRule

```java
public class RoundRobinRule extends AbstractLoadBalancerRule {

    private AtomicInteger nextServerCyclicCounter;
    private static final boolean AVAILABLE_ONLY_SERVERS = true;
    private static final boolean ALL_SERVERS = false;

    private static Logger log = LoggerFactory.getLogger(RoundRobinRule.class);

    public RoundRobinRule() {
        nextServerCyclicCounter = new AtomicInteger(0);
    }

    public RoundRobinRule(ILoadBalancer lb) {
        this();
        setLoadBalancer(lb);
    }

    public Server choose(ILoadBalancer lb, Object key) {
        if (lb == null) {
            log.warn("no load balancer");
            return null;
        }

        Server server = null;
        int count = 0;
        // Try at most 10 times to find a live server
        while (server == null && count++ < 10) {
            List<Server> reachableServers = lb.getReachableServers();
            List<Server> allServers = lb.getAllServers();
            int upCount = reachableServers.size();
            int serverCount = allServers.size();

            if ((upCount == 0) || (serverCount == 0)) {
                log.warn("No up servers available from load balancer: " + lb);
                return null;
            }

            // Next index in round-robin order over all servers
            int nextServerIndex = incrementAndGetModulo(serverCount);
            server = allServers.get(nextServerIndex);

            if (server == null) {
                /* Transient. */
                Thread.yield();
                continue;
            }

            if (server.isAlive() && (server.isReadyToServe())) {
                return (server);
            }

            // Next.
            server = null;
        }

        if (count >= 10) {
            log.warn("No available alive servers after 10 tries from load balancer: "
                    + lb);
        }
        return server;
    }

    /**
     * Inspired by the implementation of {@link AtomicInteger#incrementAndGet()}.
     *
     * @param modulo The modulo to bound the value of the counter.
     * @return The next value.
     */
    private int incrementAndGetModulo(int modulo) {
        for (;;) {
            int current = nextServerCyclicCounter.get();
            int next = (current + 1) % modulo;
            if (nextServerCyclicCounter.compareAndSet(current, next))
                return next;
        }
    }

    @Override
    public Server choose(Object key) {
        return choose(getLoadBalancer(), key);
    }
}
```
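The lock-free counter in `incrementAndGetModulo` is worth running on its own. The standalone copy below (the class name `CyclicCounter` is hypothetical) shows the compare-and-set loop: if another thread advanced the counter between `get()` and `compareAndSet()`, the CAS fails and the loop retries with the fresh value, so no lock is needed.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical standalone copy of the CAS-based round-robin counter.
final class CyclicCounter {
    private final AtomicInteger counter = new AtomicInteger(0);

    // Atomically advances the counter and returns the new value modulo `modulo`.
    int incrementAndGetModulo(int modulo) {
        for (;;) {
            int current = counter.get();
            int next = (current + 1) % modulo;
            if (counter.compareAndSet(current, next)) {
                return next;
            }
            // Another thread won the race: retry with the updated value.
        }
    }
}
```

Because the counter starts at 0 and is incremented before it is used, the first pick from a list of three servers is index 1, followed by 2, 0, 1, and so on.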

3.4.3.3.2 Implementation of ZoneAvoidanceRule

How ZoneAvoidanceRule works:

- ZoneAvoidancePredicate determines which zone is performing worst and removes that zone's servers from the service list;

- AvailabilityPredicate removes the servers whose circuit breaker is tripped;

- From the servers that pass both steps, one instance is chosen by round-robin, as in RoundRobinRule.

How ZoneAvoidancePredicate eliminates the worst zone:

```java
public static Set<String> getAvailableZones(
        Map<String, ZoneSnapshot> snapshot, double triggeringLoad,
        double triggeringBlackoutPercentage) {
    if (snapshot.isEmpty()) {
        return null;
    }
    Set<String> availableZones = new HashSet<String>(snapshot.keySet());
    if (availableZones.size() == 1) {
        return availableZones;
    }
    Set<String> worstZones = new HashSet<String>();
    double maxLoadPerServer = 0;
    boolean limitedZoneAvailability = false;

    for (Map.Entry<String, ZoneSnapshot> zoneEntry : snapshot.entrySet()) {
        String zone = zoneEntry.getKey();
        ZoneSnapshot zoneSnapshot = zoneEntry.getValue();
        int instanceCount = zoneSnapshot.getInstanceCount();
        if (instanceCount == 0) {
            availableZones.remove(zone);
            limitedZoneAvailability = true;
        } else {
            double loadPerServer = zoneSnapshot.getLoadPerServer();
            // Remove the zone if its fraction of circuit-tripped servers reaches
            // the threshold, or if its load is negative
            if (((double) zoneSnapshot.getCircuitTrippedCount())
                    / instanceCount >= triggeringBlackoutPercentage
                    || loadPerServer < 0) {
                availableZones.remove(zone);
                limitedZoneAvailability = true;
            } else {
                // Track the zone(s) with the highest load per server
                if (Math.abs(loadPerServer - maxLoadPerServer) < 0.000001d) {
                    // they are the same considering double calculation
                    // round error
                    worstZones.add(zone);
                } else if (loadPerServer > maxLoadPerServer) {
                    maxLoadPerServer = loadPerServer;
                    worstZones.clear();
                    worstZones.add(zone);
                }
            }
        }
    }

    // If even the worst load is below the trigger and no zone was dropped, keep all zones
    if (maxLoadPerServer < triggeringLoad && !limitedZoneAvailability) {
        // zone override is not needed here
        return availableZones;
    }
    // Otherwise randomly pick one of the worst zones and avoid it
    String zoneToAvoid = randomChooseZone(snapshot, worstZones);
    if (zoneToAvoid != null) {
        availableZones.remove(zoneToAvoid);
    }
    return availableZones;
}
```
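The zone-elimination logic can be exercised without Ribbon's `ZoneSnapshot`. The sketch below is a simplified copy of `getAvailableZones` built on a hypothetical `ZoneStats` class; where Ribbon calls its own `randomChooseZone` helper to choose among tied worst zones, this version simply picks one uniformly with the supplied `Random`.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.Set;

// Hypothetical reduced snapshot: instance count, tripped count, load per server.
final class ZoneStats {
    final int instanceCount;
    final int trippedCount;
    final double loadPerServer;

    ZoneStats(int instanceCount, int trippedCount, double loadPerServer) {
        this.instanceCount = instanceCount;
        this.trippedCount = trippedCount;
        this.loadPerServer = loadPerServer;
    }
}

final class AvailableZones {
    private AvailableZones() {}

    static Set<String> compute(Map<String, ZoneStats> snapshot, double triggeringLoad,
                               double triggeringBlackoutPercentage, Random random) {
        if (snapshot.isEmpty()) {
            return null;
        }
        Set<String> available = new HashSet<>(snapshot.keySet());
        if (available.size() == 1) {
            return available;
        }
        Set<String> worst = new HashSet<>();
        double maxLoad = 0;
        boolean limited = false;
        for (Map.Entry<String, ZoneStats> e : snapshot.entrySet()) {
            String zone = e.getKey();
            ZoneStats s = e.getValue();
            if (s.instanceCount == 0
                    || (double) s.trippedCount / s.instanceCount >= triggeringBlackoutPercentage
                    || s.loadPerServer < 0) {
                available.remove(zone);              // empty or mostly tripped zone
                limited = true;
            } else if (Math.abs(s.loadPerServer - maxLoad) < 0.000001d) {
                worst.add(zone);                     // ties with the current worst
            } else if (s.loadPerServer > maxLoad) {
                maxLoad = s.loadPerServer;           // new worst zone
                worst.clear();
                worst.add(zone);
            }
        }
        if (maxLoad < triggeringLoad && !limited) {
            return available;                        // nothing to avoid
        }
        if (!worst.isEmpty()) {
            List<String> candidates = new ArrayList<>(worst);
            available.remove(candidates.get(random.nextInt(candidates.size())));
        }
        return available;
    }
}
```

For example, with three zones whose average loads are 0.2, 0.9 and 0.3 and a triggering load of 0.2, the 0.9 zone is the unique worst zone and is removed, leaving the other two.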

IV Ribbon configuration parameters

| Parameter | Description | Default |
|---|---|---|
| `<service-name>.ribbon.NFLoadBalancerPingInterval` | Interval of the scheduled ping task | 30 s |
| `<service-name>.ribbon.NFLoadBalancerMaxTotalPingTime` | Ping timeout | 2 s |
| `<service-name>.ribbon.NFLoadBalancerRuleClassName` | IRule implementation class | RoundRobinRule, which selects service instances in round-robin order |
| `<service-name>.ribbon.NFLoadBalancerPingClassName` | IPing implementation class | DummyPing, which always returns true |
| `<service-name>.ribbon.NFLoadBalancerClassName` | Load balancer implementation class (can be replaced with a custom implementation) | ZoneAwareLoadBalancer |
| `<service-name>.ribbon.NIWSServerListClassName` | ServerList implementation class | ConfigurationBasedServerList, a server list read from configuration |
| `<service-name>.ribbon.ServerListUpdaterClassName` | Server list updater class | PollingServerListUpdater |
| `<service-name>.ribbon.NIWSServerListFilterClassName` | Service instance filter | ZoneAffinityServerListFilter |
| `<service-name>.ribbon.ServerListRefreshInterval` | Server list refresh interval | 30 s (30000 ms) |

V Conclusion

Ribbon is a core module of the Spring Cloud framework, responsible for load balancing service calls. It can also be used on its own, without Spring Cloud.

Ribbon is a client-side load balancer: each client independently maintains its own call statistics, isolated from every other client. As a result, load-balancing decisions are not necessarily the same on every machine.

Ribbon is also one of the most complex parts of the framework. To keep services highly available, it exposes many fine-grained control parameters, so it is worth understanding clearly how each stage works; that makes troubleshooting much more efficient.

--------

Copyright notice: This article is the original article of CSDN blogger "Yishan", which follows the CC 4.0 BY-SA copyright agreement. For reprint, please attach the source link of the original text and this notice.

Original link: https://blog.csdn.net/luanlouis/article/details/83060310